Harbin Institute of Technology

Enhancing Large Language Models Against Inductive Instructions with Dual-critique Prompting

Numerous works have been proposed to align large language models (LLMs) with human intents so that they better fulfill instructions while remaining truthful and helpful. Nevertheless, some human instructions are malicious or misleading, and following them leads to untruthful and unsafe responses. Previous work has rarely examined how LLMs handle instructions based on counterfactual premises, referred to here as inductive instructions, which may stem from users' false beliefs or malicious intents. In this paper, we aim to reveal the behaviors of LLMs towards inductive instructions and to enhance their truthfulness and helpfulness accordingly. Specifically, we first introduce a benchmark of Inductive Instructions (INDust), in which false knowledge is incorporated into instructions in multiple different styles. After extensive human and automatic evaluations, we uncover a universal vulnerability among LLMs in processing inductive instructions. We also find that different inductive styles affect the models' ability to identify the same underlying errors, and that the complexity of the underlying assumptions influences model performance. Motivated by these results, we propose Dual-critique prompting to improve LLM robustness against inductive instructions. Our experiments demonstrate that Dual-critique prompting significantly bolsters the robustness of a diverse array of LLMs, even when they are confronted with varying degrees of inductive instruction complexity and differing inductive styles.

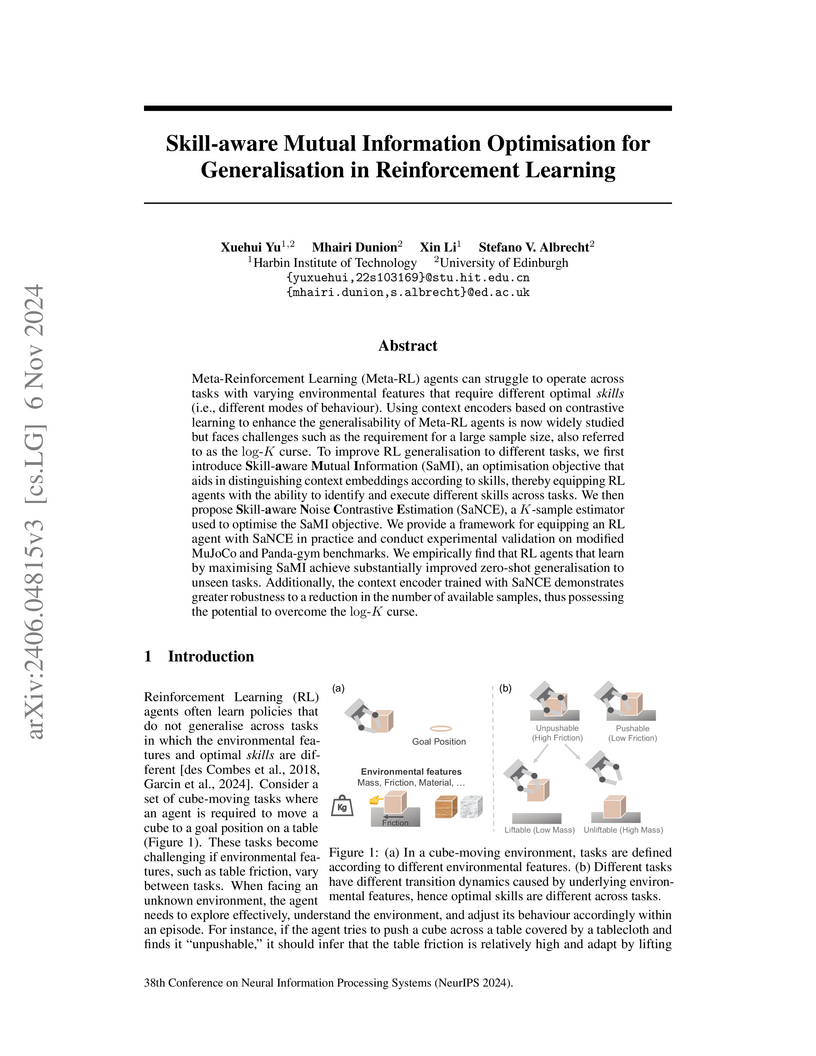

Skill-aware Mutual Information Optimisation for Generalisation in Reinforcement Learning

Meta-Reinforcement Learning (Meta-RL) agents can struggle to operate across tasks with varying environmental features that require different optimal skills (i.e., different modes of behaviour). Using context encoders based on contrastive learning to enhance the generalisability of Meta-RL agents is now widely studied but faces challenges such as the requirement for a large sample size, also referred to as the log-K curse. To improve RL generalisation to different tasks, we first introduce Skill-aware Mutual Information (SaMI), an optimisation objective that aids in distinguishing context embeddings according to skills, thereby equipping RL agents with the ability to identify and execute different skills across tasks. We then propose Skill-aware Noise Contrastive Estimation (SaNCE), a K-sample estimator used to optimise the SaMI objective. We provide a framework for equipping an RL agent with SaNCE in practice and conduct experimental validation on modified MuJoCo and Panda-gym benchmarks. We empirically find that RL agents that learn by maximising SaMI achieve substantially improved zero-shot generalisation to unseen tasks. Additionally, the context encoder trained with SaNCE demonstrates greater robustness to a reduction in the number of available samples, thus possessing the potential to overcome the log-K curse.
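To make the log-K curse mentioned above concrete, here is a minimal sketch of the standard K-sample InfoNCE estimator of mutual information. It is not SaNCE itself, whose details the abstract does not give. The function name `info_nce_estimate` and the `scores` matrix layout are assumptions made for illustration. Each per-row term is at most zero, so the estimate can never exceed log K, which is why a small K limits how much mutual information the estimator can report.

```python
import math


def info_nce_estimate(scores):
    """Standard K-sample InfoNCE estimate of mutual information, in nats.

    scores[i][j] is a critic score between context i and candidate j
    (hypothetical layout); the diagonal entries are the positive pairs.
    """
    k = len(scores)
    total = 0.0
    for i, row in enumerate(scores):
        # Numerically stable log-sum-exp over the K candidates in this row.
        m = max(row)
        log_sum = m + math.log(sum(math.exp(s - m) for s in row))
        # Log-probability of picking the positive among K candidates (<= 0).
        total += row[i] - log_sum
    return math.log(k) + total / k


if __name__ == "__main__":
    k = 4
    # A critic that separates positives perfectly still saturates at log K.
    perfect = [[50.0 if i == j else 0.0 for j in range(k)] for i in range(k)]
    print(info_nce_estimate(perfect), math.log(k))
```

Under these assumptions, a critic that cannot tell positives from negatives (all scores equal) gives an estimate of zero, and a perfect critic saturates at log K regardless of the true mutual information.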

Exploring the Use of Large Language Models for Reference-Free Text Quality Evaluation: An Empirical Study

18 Sep 2023

Evaluating the quality of generated text is a challenging task in NLP because of the inherent complexity and diversity of text. Recently, large language models (LLMs) have garnered significant attention for their impressive performance on various tasks. We therefore investigate the effectiveness of LLMs, especially ChatGPT, and explore ways to optimize their use in assessing text quality. We compare three kinds of reference-free evaluation methods. The experimental results show that ChatGPT can evaluate text quality effectively from various perspectives without references and outperforms most existing automatic metrics. In particular, the Explicit Score, which uses ChatGPT to generate a numeric score measuring text quality, is the most effective and reliable of the three approaches explored. However, directly comparing the quality of two texts may lead to suboptimal results. We believe this paper will provide valuable insights for evaluating text quality with LLMs, and we have released the data used.
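As a rough illustration of the Explicit Score idea (asking the model for a numeric quality score), the sketch below builds a scoring prompt and parses a number out of a model reply. The prompt wording, the 1 to 10 scale, and the helper names `build_explicit_score_prompt` and `parse_score` are assumptions for illustration, not the prompts used in the paper. The model call itself is omitted.

```python
import re


def build_explicit_score_prompt(text, aspect="fluency", low=1, high=10):
    """Build a hypothetical reference-free scoring prompt for one aspect."""
    return (
        f"Score the following text for {aspect} on a scale from {low} to {high}. "
        "Reply with the number only.\n\n"
        f"Text: {text}\nScore:"
    )


def parse_score(reply, low=1, high=10):
    """Return the first number in the reply, or None if absent or out of range."""
    match = re.search(r"-?\d+(?:\.\d+)?", reply)
    if match is None:
        return None
    value = float(match.group())
    return value if low <= value <= high else None


if __name__ == "__main__":
    print(build_explicit_score_prompt("The cat sat on the mat."))
    print(parse_score("Score: 7"))
```

Returning `None` for missing or out-of-range numbers keeps malformed replies from silently entering an average; a caller could re-query the model or drop the sample instead.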

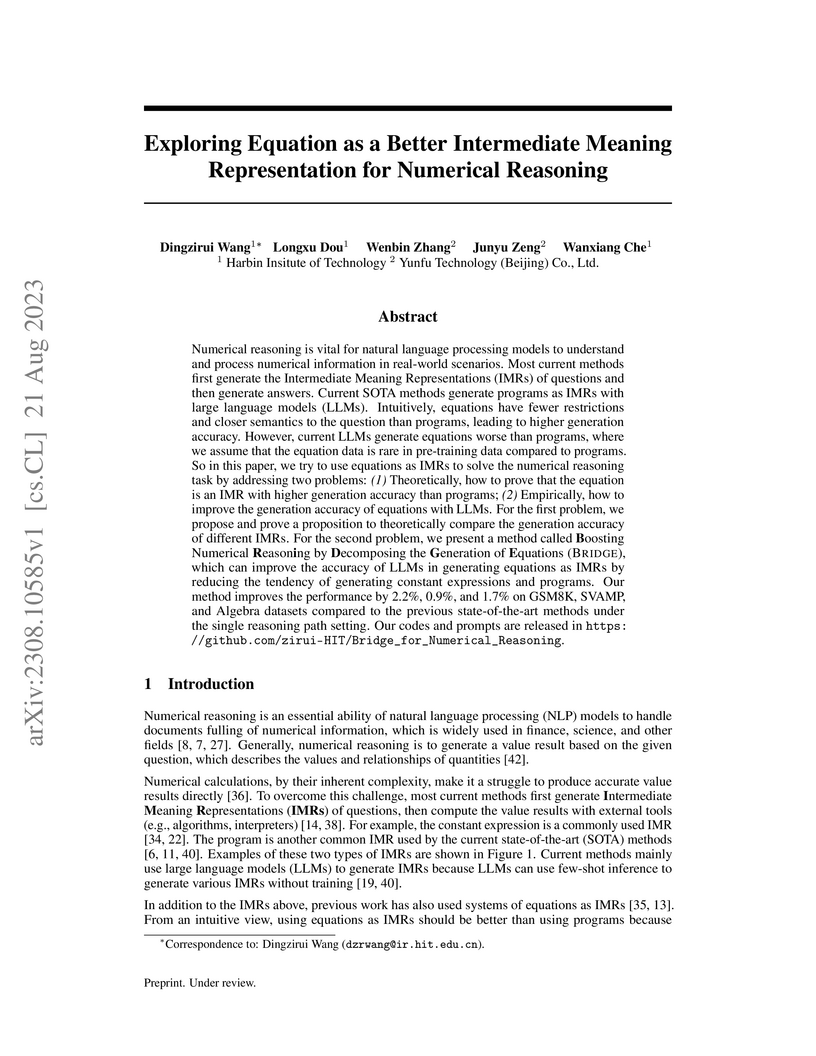

Exploring Equation as a Better Intermediate Meaning Representation for Numerical Reasoning

Numerical reasoning is vital for natural language processing models to understand and process numerical information in real-world scenarios. Most current methods first generate Intermediate Meaning Representations (IMRs) of questions and then generate answers. Current SOTA methods generate programs as IMRs with large language models (LLMs). Intuitively, equations have fewer restrictions and are semantically closer to the question than programs, which should lead to higher generation accuracy. However, current LLMs generate equations less accurately than programs, which we assume is because equation data is rarer than program data in pre-training corpora. In this paper, we therefore use equations as IMRs to solve the numerical reasoning task by addressing two problems: (1) theoretically, how to prove that the equation is an IMR with higher generation accuracy than programs; (2) empirically, how to improve the generation accuracy of equations with LLMs. For the first problem, we propose and prove a proposition that theoretically compares the generation accuracy of different IMRs. For the second problem, we present a method called Boosting Numerical Reasoning by Decomposing the Generation of Equations (Bridge), which improves the accuracy of LLMs in generating equations as IMRs by reducing the tendency to generate constant expressions and programs. Our method improves performance by 2.2%, 0.9%, and 1.7% on the GSM8K, SVAMP, and Algebra datasets compared with the previous state-of-the-art methods under the single reasoning path setting. Our code and prompts are released at this https URL.
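The contrast between the two kinds of IMR can be shown on a toy word problem. This is a hand-written sketch, not the Bridge method: the problem, the equation string, and the helper `solve_linear_equation` are all assumptions for illustration. The equation states the relationship from the question directly, while the program must already have rearranged it into computation steps.

```python
from fractions import Fraction


def solve_linear_equation(equation, unknown="x"):
    """Solve a single-unknown linear equation given as 'lhs = rhs'.

    Uses eval on a trusted, hand-written string only; not safe for
    untrusted input. Assumes the equation is linear in the unknown.
    """
    lhs, rhs = (side.strip() for side in equation.split("="))

    def residual(value):
        env = {unknown: Fraction(value)}
        return eval(lhs, {"__builtins__": {}}, env) - eval(rhs, {"__builtins__": {}}, env)

    # A linear residual r(x) = a*x + b is fixed by two evaluations.
    r0, r1 = residual(0), residual(1)
    slope = r1 - r0
    if slope == 0:
        raise ValueError("equation does not determine the unknown")
    return -r0 / slope


if __name__ == "__main__":
    # Toy problem: Tom has 3 more apples than Ann; together they have 11.
    equation_imr = "x + (x + 3) = 11"   # equation IMR: mirrors the question
    ann_program = (11 - 3) / 2          # program IMR: already rearranged
    print(solve_linear_equation(equation_imr), ann_program)
```

In this sketch the solver, not the generator, does the algebra, which is one intuition for why an equation can stay closer to the wording of the question.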

RCMNet: A deep learning model assists CAR-T therapy for leukemia

Acute leukemia is a type of blood cancer with a high mortality rate. Current therapeutic methods include bone marrow transplantation, supportive therapy, and chemotherapy. Although satisfactory remission can be achieved, the risk of recurrence remains high, so novel treatments are in demand. Chimeric antigen receptor-T (CAR-T) therapy has emerged as a promising approach to treating and curing acute leukemia. To harness the therapeutic potential of CAR-T cell therapy for blood diseases, reliable morphological identification of cells is crucial. Nevertheless, identifying CAR-T cells is a major challenge because of their phenotypic similarity to other blood cells. To address this clinical challenge, we first construct a CAR-T dataset of 500 original microscopy images taken after staining. We then create a novel integrated model called RCMNet (ResNet18 with CBAM and MHSA) that combines a convolutional neural network (CNN) with a Transformer. The model reaches 99.63% top-1 accuracy on the public dataset, a satisfactory image-classification result compared with previous reports. When tested on the CAR-T cell dataset, only decent performance is observed, which is attributed to the limited size of the dataset. Transfer learning is adopted for RCMNet, and a maximum accuracy of 83.36% is achieved, which is higher than other SOTA models. The study evaluates the effectiveness of RCMNet on a large public dataset and transfers it to a clinical dataset for diagnostic applications.

Role Prompting Guided Domain Adaptation with General Capability Preserve for Large Language Models

The growing interest in Large Language Models (LLMs) for specialized applications has revealed a significant challenge: when tailored to specific domains, LLMs tend to experience catastrophic forgetting, compromising their general capabilities and leading to a suboptimal user experience. Additionally, crafting a versatile model for multiple domains simultaneously often results in a decline in overall performance due to confusion between domains. In response to these issues, we present the RolE Prompting Guided Multi-Domain Adaptation (REGA) strategy. This novel approach effectively manages multi-domain LLM adaptation through three key components: 1) Self-Distillation constructs and replays general-domain exemplars to alleviate catastrophic forgetting. 2) Role Prompting assigns a central prompt to the general domain and a unique role prompt to each specific domain to minimize inter-domain confusion during training. 3) Role Integration reuses and integrates a small portion of domain-specific data to the general-domain data, which are trained under the guidance of the central prompt. The central prompt is used for a streamlined inference process, removing the necessity to switch prompts for different domains. Empirical results demonstrate that REGA effectively alleviates catastrophic forgetting and inter-domain confusion. This leads to improved domain-specific performance compared to standard fine-tuned models, while still preserving robust general capabilities.

Shandong University

The Chinese University of Hong Kong