Interdisciplinary Transformation University Austria

Researchers from NXAI GmbH and JKU Linz introduce TiRex, a zero-shot time series forecasting model built on the xLSTM architecture, which achieves state-of-the-art performance across short and long horizons. TiRex sets new benchmarks on the GiftEval-ZS and Chronos-ZS, while being significantly more efficient with only 35M parameters and over 11x faster inference than leading competitors.

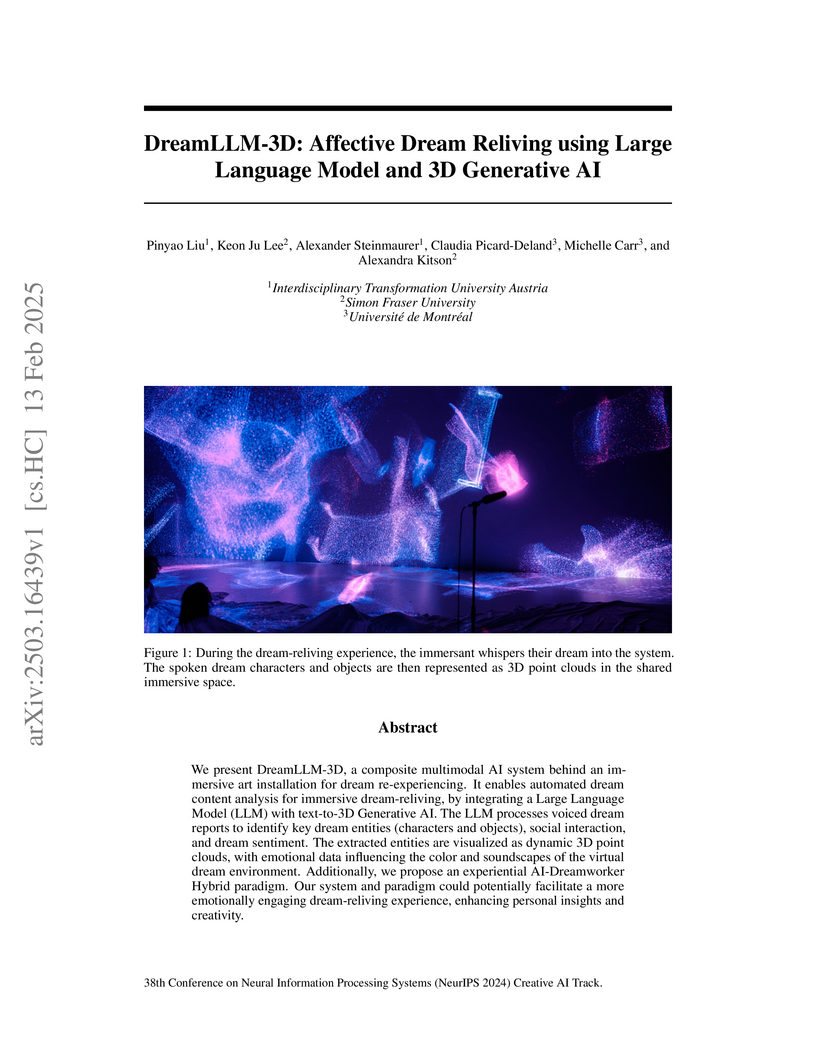

We present DreamLLM-3D, a composite multimodal AI system behind an immersive

art installation for dream re-experiencing. It enables automated dream content

analysis for immersive dream-reliving, by integrating a Large Language Model

(LLM) with text-to-3D Generative AI. The LLM processes voiced dream reports to

identify key dream entities (characters and objects), social interaction, and

dream sentiment. The extracted entities are visualized as dynamic 3D point

clouds, with emotional data influencing the color and soundscapes of the

virtual dream environment. Additionally, we propose an experiential

AI-Dreamworker Hybrid paradigm. Our system and paradigm could potentially

facilitate a more emotionally engaging dream-reliving experience, enhancing

personal insights and creativity.

22 Oct 2025

The CoRECT framework provides a systematic, large-scale platform for evaluating embedding compression techniques, demonstrating that retrieval performance significantly decreases with increasing corpus size and granularity, even for uncompressed embeddings. Its extensive benchmarking reveals that scalar quantization frequently retains performance at 2-bit precision, but the optimal compression method is highly dependent on the embedding model and retrieval task.

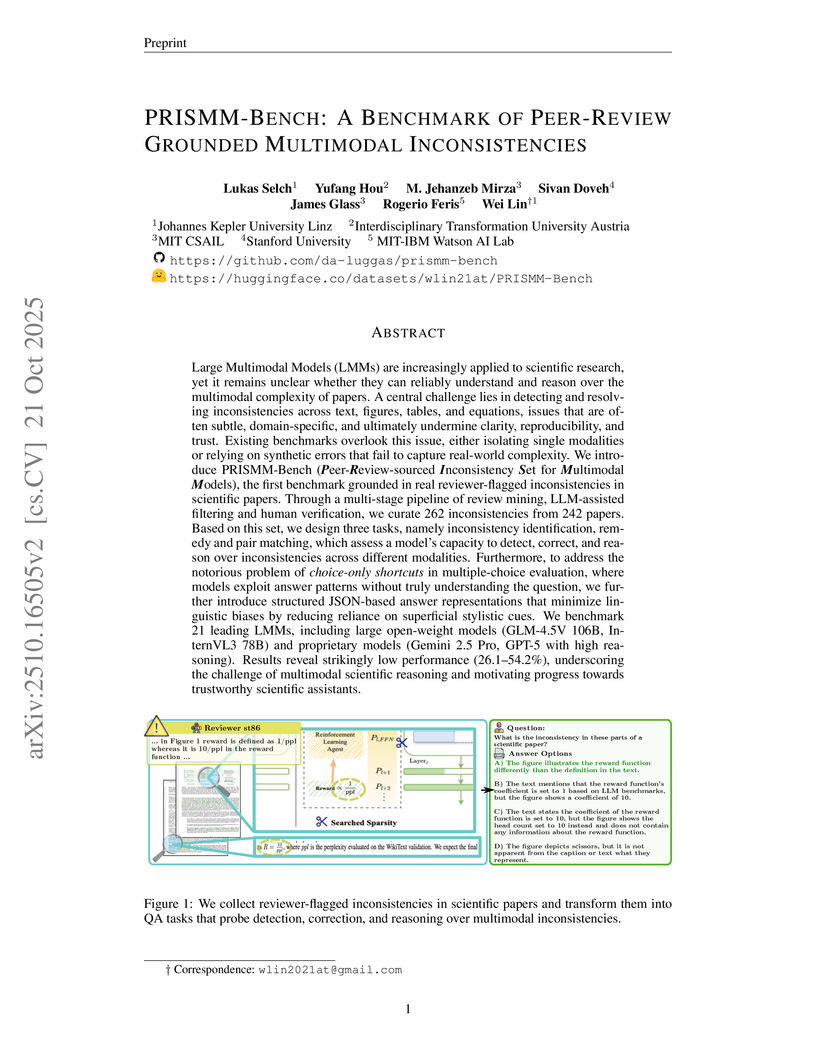

Large Multimodal Models (LMMs) are increasingly applied to scientific research, yet it remains unclear whether they can reliably understand and reason over the multimodal complexity of papers. A central challenge lies in detecting and resolving inconsistencies across text, figures, tables, and equations, issues that are often subtle, domain-specific, and ultimately undermine clarity, reproducibility, and trust. Existing benchmarks overlook this issue, either isolating single modalities or relying on synthetic errors that fail to capture real-world complexity. We introduce PRISMM-Bench (Peer-Review-sourced Inconsistency Set for Multimodal Models), the first benchmark grounded in real reviewer-flagged inconsistencies in scientific papers. Through a multi-stage pipeline of review mining, LLM-assisted filtering and human verification, we curate 262 inconsistencies from 242 papers. Based on this set, we design three tasks, namely inconsistency identification, remedy and pair matching, which assess a model's capacity to detect, correct, and reason over inconsistencies across different modalities. Furthermore, to address the notorious problem of choice-only shortcuts in multiple-choice evaluation, where models exploit answer patterns without truly understanding the question, we further introduce structured JSON-based answer representations that minimize linguistic biases by reducing reliance on superficial stylistic cues. We benchmark 21 leading LMMs, including large open-weight models (GLM-4.5V 106B, InternVL3 78B) and proprietary models (Gemini 2.5 Pro, GPT-5 with high reasoning). Results reveal strikingly low performance (26.1-54.2%), underscoring the challenge of multimodal scientific reasoning and motivating progress towards trustworthy scientific assistants.

22 Oct 2025

Dense retrieval systems have proven to be effective across various benchmarks, but require substantial memory to store large search indices. Recent advances in embedding compression show that index sizes can be greatly reduced with minimal loss in ranking quality. However, existing studies often overlook the role of corpus complexity -- a critical factor, as recent work shows that both corpus size and document length strongly affect dense retrieval performance. In this paper, we introduce CoRECT (Controlled Retrieval Evaluation of Compression Techniques), a framework for large-scale evaluation of embedding compression methods, supported by a newly curated dataset collection. To demonstrate its utility, we benchmark eight representative types of compression methods. Notably, we show that non-learned compression achieves substantial index size reduction, even on up to 100M passages, with statistically insignificant performance loss. However, selecting the optimal compression method remains challenging, as performance varies across models. Such variability highlights the necessity of CoRECT to enable consistent comparison and informed selection of compression methods. All code, data, and results are available on GitHub and HuggingFace.

We present KoWit-24, a dataset with fine-grained annotation of wordplay in 2,700 Russian news headlines. KoWit-24 annotations include the presence of wordplay, its type, wordplay anchors, and words/phrases the wordplay refers to. Unlike the majority of existing humor collections of canned jokes, KoWit-24 provides wordplay contexts -- each headline is accompanied by the news lead and summary. The most common type of wordplay in the dataset is the transformation of collocations, idioms, and named entities -- the mechanism that has been underrepresented in previous humor datasets. Our experiments with five LLMs show that there is ample room for improvement in wordplay detection and interpretation tasks. The dataset and evaluation scripts are available at this https URL

The systematic analysis of user-generated social media content, especially when enriched with geospatial context, plays a vital role in domains such as disaster management and public opinion monitoring. Although multimodal approaches have made significant progress, most existing models remain fragmented, processing each modality separately rather than integrating them into a unified end-to-end model. To address this, we propose an unsupervised, multimodal graph-based methodology that jointly embeds semantic and geographic information into a shared representation space. The proposed methodology comprises two architectural paradigms: a mono graph (MonoGrah) model that jointly encodes both modalities, and a multi graph (MultiGraph) model that separately models semantic and geographic relationships and subsequently integrates them through multi-head attention mechanisms. A composite loss, combining contrastive, coherence, and alignment objectives, guides the learning process to produce semantically coherent and spatially compact clusters. Experiments on four real-world disaster datasets demonstrate that our models consistently outperform existing baselines in topic quality, spatial coherence, and interpretability. Inherently domain-independent, the framework can be readily extended to diverse forms of multimodal data and a wide range of downstream analysis tasks.

There are no more papers matching your filters at the moment.