social-and-information-networks

This paper develops a geometric framework for modeling belief, motivation, and influence across cognitively heterogeneous agents. Each agent is represented by a personalized value space, a vector space encoding the internal dimensions through which the agent interprets and evaluates meaning. Beliefs are formalized as structured vectors (abstract beings) whose transmission is mediated by linear interpretation maps. A belief survives communication only if it avoids the null spaces of these maps, yielding a structural criterion for intelligibility, miscommunication, and belief death.
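The survival criterion can be illustrated with a minimal sketch. It assumes, purely for illustration, that an interpretation map is a small matrix `T` and a belief is a vector `b`, so the belief "dies" when `T` sends it to the zero vector. The function names, the tolerance, and the example map are hypothetical and are not taken from the paper.

```python
def apply_map(T, b):
    """Apply a linear interpretation map T (a list of rows) to a belief vector b."""
    return [sum(t_ij * b_j for t_ij, b_j in zip(row, b)) for row in T]


def survives(T, b, tol=1e-9):
    """A belief survives if its image under T is not (numerically) the zero vector."""
    return any(abs(x) > tol for x in apply_map(T, b))


# Hypothetical receiver whose map ignores the third value dimension entirely.
T = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]

belief_a = [0.5, 0.2, 0.9]  # has components the receiver can represent
belief_b = [0.0, 0.0, 1.0]  # lies entirely in the null space of T

result_a = survives(T, belief_a)
result_b = survives(T, belief_b)
```

In this toy setting `belief_a` arrives distorted (its third component is lost) while `belief_b` does not arrive at all, which is one way to read the distinction between miscommunication and belief death.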

Within this framework, I show how belief distortion, motivational drift, counterfactual evaluation, and the limits of mutual understanding arise from purely algebraic constraints. A central result, the "No-Null-Space Leadership Condition," characterizes leadership as a property of representational reachability rather than persuasion or authority. More broadly, the model explains how abstract beings can propagate, mutate, or disappear as they traverse diverse cognitive geometries.

The account unifies insights from conceptual spaces, social epistemology, and AI value alignment by grounding meaning preservation in structural compatibility rather than shared information or rationality. I argue that this cognitive-geometric perspective clarifies the epistemic boundaries of influence in both human and artificial systems, and offers a general foundation for analyzing belief dynamics across heterogeneous agents.

This project aims to construct and analyze a comprehensive knowledge graph of Nobel Prizes and Laureates by enriching existing datasets with biographical information extracted from Wikipedia. Our approach integrates several techniques: automatic data augmentation using LLMs for Named Entity Recognition (NER) and Relation Extraction (RE) tasks, and social network analysis to uncover hidden patterns within the scientific community. We also develop a GraphRAG-based chatbot system utilizing a fine-tuned model for Text2Cypher translation, enabling natural language querying over the knowledge graph. Experimental results demonstrate that the enriched graph possesses small-world network properties, and the analysis identifies key influential figures and central organizations. The chatbot system achieves competitive accuracy on a custom multiple-choice evaluation dataset, demonstrating the effectiveness of combining LLMs with structured knowledge bases for complex reasoning tasks. Data and source code are available at: this https URL.

Multi-agent role-playing has recently shown promise for studying social behavior with language agents, but existing simulations are mostly monolingual and fail to model cross-lingual interaction, an essential property of real societies. We introduce MASim, the first multilingual agent-based simulation framework that supports multi-turn interaction among generative agents with diverse sociolinguistic profiles. MASim offers two key analyses: (i) global public opinion modeling, by simulating how attitudes toward open-domain hypotheses evolve across languages and cultures, and (ii) media influence and information diffusion, via autonomous news agents that dynamically generate content and shape user behavior. To instantiate simulations, we construct the MAPS benchmark, which combines survey questions and demographic personas drawn from global population distributions. Experiments on calibration, sensitivity, consistency, and cultural case studies show that MASim reproduces sociocultural phenomena and highlights the importance of multilingual simulation for scalable, controlled computational social science.

A Survey on Centrality and Importance Measures in Hypergraphs: Categorization and Empirical Insights

Identifying central entities and interactions is a fundamental problem in network science. While well-studied for graphs (pairwise relations), many biological and social systems exhibit higher-order interactions best modeled by hypergraphs. This has led to a proliferation of specialized hypergraph centrality measures, but the field remains fragmented and lacks a unifying framework. This paper addresses this gap by providing the first systematic survey of 39 distinct measures. We introduce a novel taxonomy classifying them as: (1) structural (topology-based), (2) functional (impact on system dynamics), or (3) contextual (incorporating external features). We also present an experimental assessment comparing their empirical similarity and computation time. Finally, we discuss applications, establishing a coherent roadmap for future research in this area.
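As a small illustration of what a structural (topology-based) measure looks like, the sketch below computes two elementary node scores on a toy hypergraph: the number of hyperedges a node belongs to, and the number of co-members it meets across those hyperedges. These are generic textbook-style quantities chosen for illustration; the function names and the toy data are hypothetical, and nothing here is claimed to be one of the 39 surveyed measures.

```python
from collections import defaultdict


def hyperdegree(hyperedges):
    """Count, for each node, how many hyperedges contain it."""
    deg = defaultdict(int)
    for edge in hyperedges:
        for node in set(edge):
            deg[node] += 1
    return dict(deg)


def comember_count(hyperedges):
    """Sum, for each node, the number of other nodes in each of its hyperedges."""
    score = defaultdict(int)
    for edge in hyperedges:
        members = set(edge)
        for node in members:
            score[node] += len(members) - 1
    return dict(score)


# Toy hypergraph: each hyperedge is one group interaction.
hyperedges = [{"a", "b", "c"}, {"a", "d"}, {"a", "b"}]

deg = hyperdegree(hyperedges)
co = comember_count(hyperedges)
```

The second score weights large group interactions more heavily than the first, which is the kind of higher-order information a pairwise graph projection tends to blur.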

This study presents a comprehensive analysis of public sentiment toward traffic management policies in Knoxville, Tennessee, utilizing social media data from Twitter and Reddit platforms. We collected and analyzed 7906 posts spanning January 2022 to December 2023, employing Valence Aware Dictionary and sEntiment Reasoner (VADER) for sentiment analysis and Latent Dirichlet Allocation (LDA) for topic modeling. Our findings reveal predominantly negative sentiment, with significant variations across platforms and topics. Twitter exhibited more negative sentiment compared to Reddit. Topic modeling identified six distinct themes, with construction-related topics showing the most negative sentiment while general traffic discussions were more positive. Spatiotemporal analysis revealed geographic and temporal patterns in sentiment expression. The research demonstrates social media's potential as a real-time public sentiment monitoring tool for transportation planning and policy evaluation.

Influence maximization in social networks plays a vital role in applications such as viral marketing, epidemiology, product recommendation, opinion mining, and counter-terrorism. A common approach identifies seed nodes by first detecting disjoint communities and subsequently selecting representative nodes from these communities. However, whether the quality of detected communities consistently affects the spread of influence under the Independent Cascade model remains unclear. This paper addresses this question by extending a previously proposed disjoint community detection method, termed α-Hierarchical Clustering, to the influence maximization problem under the Independent Cascade model. The proposed method, which leverages higher-quality community structures to guide seed selection, is termed α-Hierarchical Clustering-based Influence Maximization. It is compared with an alternative approach, referred to as Hierarchical Clustering-based Influence Maximization, that employs the same seed selection criteria but relies on communities of lower quality obtained through standard Hierarchical Clustering. Extensive experiments are performed on multiple real-world datasets to assess the effectiveness of both methods. The results demonstrate that higher-quality community structures substantially improve information diffusion under the Independent Cascade model, particularly when the propagation probability is low. These findings underscore the critical importance of community quality in guiding effective seed selection for influence maximization in complex networks.
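For readers unfamiliar with the diffusion model, the following is a minimal sketch of the Independent Cascade model with a single uniform propagation probability `p`, estimated by Monte Carlo simulation. It covers only the spread evaluation, not the community detection or seed selection steps of the paper; the function names, the toy graph, and the default number of runs are assumptions made for illustration.

```python
import random


def independent_cascade(graph, seeds, p, rng):
    """Run one cascade; graph maps each node to a list of out-neighbors."""
    active = set(seeds)
    frontier = list(seeds)
    while frontier:
        next_frontier = []
        for u in frontier:
            for v in graph.get(u, []):
                # Each newly active node gets exactly one chance per inactive neighbor.
                if v not in active and rng.random() < p:
                    active.add(v)
                    next_frontier.append(v)
        frontier = next_frontier
    return active


def expected_spread(graph, seeds, p, runs=1000, seed=0):
    """Average number of activated nodes over repeated simulations."""
    rng = random.Random(seed)
    total = sum(len(independent_cascade(graph, seeds, p, rng)) for _ in range(runs))
    return total / runs


# Toy graph: two triangles (communities) joined by the edge 2-3.
graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3],
         3: [2, 4, 5], 4: [3, 5], 5: [3, 4]}

spread_one_seed = expected_spread(graph, [0], p=0.1)
spread_two_seeds = expected_spread(graph, [0, 5], p=0.1)
```

Comparing `expected_spread` for seed sets drawn from different community partitions is one simple way to probe the effect of community quality at low propagation probability.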

Graph Neural Networks (GNNs) have demonstrated exceptional efficacy in relational learning tasks, including node classification and link prediction. However, their application raises significant fairness concerns, as GNNs can perpetuate and even amplify societal biases against protected groups defined by sensitive attributes such as race or gender. These biases are often inherent in the node features, structural topology, and message-passing mechanisms of the graph itself. A critical limitation of existing fairness-aware GNN methods is their reliance on the strong assumption that sensitive attributes are fully available for all nodes during training--a condition that poses a practical impediment due to privacy concerns and data collection constraints. To address this gap, we propose a novel, model-agnostic fairness regularization framework designed for the realistic scenario where sensitive attributes are only partially available. Our approach formalizes a fairness-aware objective function that integrates both equal opportunity and statistical parity as differentiable regularization terms. Through a comprehensive empirical evaluation across five real-world benchmark datasets, we demonstrate that the proposed method significantly mitigates bias across key fairness metrics while maintaining competitive node classification performance. Results show that our framework consistently outperforms baseline models in achieving a favorable fairness-accuracy trade-off, with minimal degradation in predictive accuracy. The datasets and source code will be publicly released at this https URL.
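To make the two fairness terms concrete, here is a minimal sketch that computes statistical parity and equal opportunity gaps from soft predictions, using only the nodes whose sensitive attribute is known (`None` marks a missing attribute). This is a plain-Python illustration under assumed definitions (absolute difference of group means); the function names, the weights `lam_sp` and `lam_eo`, and the example data are hypothetical and do not reproduce the paper's objective.

```python
def mean(xs):
    """Mean of a list, defined as 0.0 for an empty list."""
    return sum(xs) / len(xs) if xs else 0.0


def fairness_gaps(probs, labels, sensitive):
    """Return (statistical parity gap, equal opportunity gap).

    probs: predicted P(y = 1) per node; labels: 0/1 ground truth;
    sensitive: 0, 1, or None when the attribute is unavailable.
    """
    known = [(p, y, s) for p, y, s in zip(probs, labels, sensitive) if s is not None]
    # Statistical parity: compare mean predicted positive rate across groups.
    sp = abs(mean([p for p, _, s in known if s == 0])
             - mean([p for p, _, s in known if s == 1]))
    # Equal opportunity: same comparison restricted to truly positive nodes.
    eo = abs(mean([p for p, y, s in known if s == 0 and y == 1])
             - mean([p for p, y, s in known if s == 1 and y == 1]))
    return sp, eo


def regularized_loss(task_loss, sp, eo, lam_sp=1.0, lam_eo=1.0):
    """Task loss plus weighted fairness penalties."""
    return task_loss + lam_sp * sp + lam_eo * eo


probs = [0.9, 0.8, 0.3, 0.2, 0.6]
labels = [1, 1, 1, 0, 1]
sensitive = [0, 0, 1, 1, None]  # the last node's attribute is unavailable

sp_gap, eo_gap = fairness_gaps(probs, labels, sensitive)
loss = regularized_loss(0.4, sp_gap, eo_gap, lam_sp=0.5, lam_eo=0.5)
```

Because the gaps are built from predicted probabilities rather than hard decisions, the same expressions would remain differentiable if written in an autodiff framework.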

We curate the DeXposure dataset, the first large-scale dataset for inter-protocol credit exposure in decentralized financial networks, covering global markets of 43.7 million entries across 4.3 thousand protocols, 602 blockchains, and 24.3 thousand tokens, from 2020 to 2025. A new measure, value-linked credit exposure between protocols, is defined as the inferred financial dependency relationships derived from changes in Total Value Locked (TVL). We develop a token-to-protocol model using DefiLlama metadata to infer inter-protocol credit exposure from the token's stock dynamics, as reported by the protocols. Based on the curated dataset, we develop three benchmarks for machine learning research with financial applications: (1) graph clustering for global network measurement, tracking the structural evolution of credit exposure networks, (2) vector autoregression for sector-level credit exposure dynamics during major shocks (Terra and FTX), and (3) temporal graph neural networks for dynamic link prediction on temporal graphs. From the analysis, we observe (1) a rapid growth of network volume, (2) a trend of concentration to key protocols, (3) a decline of network density (the ratio of actual connections to possible connections), and (4) distinct shock propagation across sectors, such as lending platforms, trading exchanges, and asset management protocols. The DeXposure dataset and code have been released publicly. We envision they will help with research and practice in machine learning as well as financial risk monitoring, policy analysis, DeFi market modeling, amongst others. The dataset also contributes to machine learning research by offering benchmarks for graph clustering, vector autoregression, and temporal graph analysis.
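The density measure mentioned above (the ratio of actual connections to possible connections) can be sketched as follows. The example assumes a directed exposure network without self-loops, which is an assumption about the setup rather than something stated in the abstract; the function name and the toy protocol labels are hypothetical.

```python
def network_density(num_nodes, edges, directed=True):
    """Ratio of distinct non-self-loop edges to the number of possible edges."""
    unique = {(u, v) for u, v in edges if u != v}
    if not directed:
        # Treat (u, v) and (v, u) as the same connection.
        unique = {tuple(sorted(e)) for e in unique}
    possible = num_nodes * (num_nodes - 1)
    if not directed:
        possible //= 2
    return len(unique) / possible if possible else 0.0


# Toy example: four protocols with three exposure links.
edges = [("A", "B"), ("B", "C"), ("C", "A")]

density_directed = network_density(4, edges)
density_undirected = network_density(4, edges, directed=False)
```

Since the denominator grows quadratically in the number of protocols, density can decline even while the number of links grows rapidly, which is consistent with observations (1) and (3) holding together.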

Recommendation systems have become crucial to online platforms, shaping user exposure through accurate preference modeling. However, such an exposure strategy can also reinforce users' existing preferences, leading to the well-known phenomenon of filter bubbles. Given their negative effects, such as group polarization, increasing attention has been paid to developing reasonable measures of filter bubbles. However, most existing evaluation metrics simply measure the diversity of user exposure, failing to distinguish between algorithmic preference modeling and actual information confinement. In view of this, we introduce Bubble Escape Potential (BEP), a behavior-aware measure that quantifies how easily users can escape from filter bubbles. Specifically, BEP leverages a contrastive simulation framework that assigns different behavioral tendencies (e.g., positive vs. negative) to synthetic users and compares the induced exposure patterns. This design enables decoupling the effect of filter bubbles from that of preference modeling, allowing for more precise diagnosis of bubble severity. We conduct extensive experiments across multiple recommendation models to examine the relationship between predictive accuracy and bubble escape potential across different groups. To the best of our knowledge, our empirical results are the first to quantitatively validate the dilemma between preference modeling and filter bubbles. Moreover, we observe a counter-intuitive phenomenon: mild random recommendations are ineffective in alleviating filter bubbles, a finding that can offer a principled foundation for further work in this direction.

Dynamic recommendation, which models user preference from historical interactions and provides recommendations at the current time, plays a key role in many personalized services. Recent works show that pre-trained dynamic graph neural networks (GNNs) can achieve excellent performance. However, existing methods that fine-tune node representations at large scale demand significant computational resources. Additionally, the long-tail distribution of degrees leads to insufficient representations for nodes with sparse interactions, posing challenges for efficient fine-tuning. To address these issues, we introduce GraphSASA, a novel method for efficient fine-tuning in dynamic recommendation systems. GraphSASA employs test-time augmentation by leveraging the similarity of node representation distributions during hierarchical graph aggregation, which enhances node representations. It then applies singular value decomposition, freezing the original vector matrix while focusing fine-tuning on the derived singular value matrices, which reduces the parameter burden of fine-tuning and improves fine-tuning adaptability. Experimental results demonstrate that our method achieves state-of-the-art performance on three large-scale datasets.

The first comprehensive analysis of Grokipedia compared its content and citation practices against English Wikipedia, revealing it to be a hybrid platform that directly adapts large portions of Wikipedia while simultaneously rewriting other articles with an ideological bent. The study found a significantly higher reliance on unreliable and blacklisted sources within Grokipedia, especially for politically and socially sensitive topics, while 56% of its articles were highly similar and CC-licensed from Wikipedia.

Podcasts have become a central arena for shaping public opinion, making them a vital source for understanding contemporary discourse. Their typically unscripted, multi-themed, and conversational style offers a rich but complex form of data. To analyze how podcasts persuade and inform, we must examine their narrative structures -- specifically, the narrative frames they employ.

The fluid and conversational nature of podcasts presents a significant challenge for automated analysis. We show that existing large language models, typically trained on more structured text such as news articles, struggle to capture the subtle cues that human listeners rely on to identify narrative frames. As a result, current approaches fall short of accurately analyzing podcast narratives at scale.

To solve this, we develop and evaluate a fine-tuned BERT model that explicitly links narrative frames to specific entities mentioned in the conversation, effectively grounding the abstract frame in concrete details. Our approach then uses these granular frame labels and correlates them with high-level topics to reveal broader discourse trends. The primary contributions of this paper are: (i) a novel frame-labeling methodology that more closely aligns with human judgment for messy, conversational data, and (ii) a new analysis that uncovers the systematic relationship between what is being discussed (the topic) and how it is being presented (the frame), offering a more robust framework for studying influence in digital media.

DEEP, an AI-driven system developed at Northwestern University, forecasts the future volume and emotional intensity of social movement discourse on both social media and news platforms. Designed for journalists, the system leverages a transformer architecture and multi-platform data to provide probabilistic predictions and uncertainty estimates, enabling timely reporting on global issues.

As a key to assessing research impact, citation dynamics underpins research evaluation, scholarly recommendation, and the study of knowledge diffusion. Citation prediction is particularly critical for newborn papers, where early assessment must be performed without citation signals and under highly long-tailed distributions. We identify two key research gaps: (i) insufficient modeling of implicit factors of scientific impact, leading to reliance on coarse proxies; and (ii) a lack of bias-aware learning that can deliver stable predictions on low-cited papers. We address these gaps by proposing a Bias-Aware Citation Prediction Framework, which combines multi-agent feature extraction with robust graph representation learning. First, a multi-agent × graph co-learning module derives fine-grained, interpretable signals, such as reproducibility, collaboration network, and text quality, from metadata and external resources, and fuses them with heterogeneous-network embeddings to provide rich supervision even in the absence of early citation signals. Second, we incorporate a set of robust mechanisms: a two-stage forward process that routes explicit factors through an intermediate exposure estimate, GroupDRO to optimize worst-case group risk across environments, and a regularization head that performs what-if analyses on controllable factors under monotonicity and smoothness constraints. Comprehensive experiments on two real-world datasets demonstrate the effectiveness of our proposed model. Specifically, our model achieves around a 13% reduction in error metrics (MALE and RMSLE) and a notable 5.5% improvement in the ranking metric (NDCG) over the baseline methods.
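The two error metrics can be sketched as below, assuming the commonly used definitions based on `log(1 + x)`: MALE as the mean absolute log error and RMSLE as the root mean squared log error. The abstract does not spell out the formulas, so these definitions, the function names, and the example counts are assumptions for illustration only.

```python
import math


def _log_errors(y_true, y_pred):
    """Per-paper differences between log(1 + predicted) and log(1 + actual) citations."""
    return [math.log1p(p) - math.log1p(t) for t, p in zip(y_true, y_pred)]


def male(y_true, y_pred):
    """Mean absolute log error (assumed definition)."""
    errs = _log_errors(y_true, y_pred)
    return sum(abs(e) for e in errs) / len(errs)


def rmsle(y_true, y_pred):
    """Root mean squared log error (assumed definition)."""
    errs = _log_errors(y_true, y_pred)
    return math.sqrt(sum(e * e for e in errs) / len(errs))


# Hypothetical citation counts: many low-cited papers and one highly cited paper.
actual = [0, 1, 2, 150]
predicted = [1, 1, 3, 90]

male_value = male(actual, predicted)
rmsle_value = rmsle(actual, predicted)
```

Working in log space keeps the single highly cited paper from dominating the error, which matters under the long-tailed distributions the abstract describes.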

Node-ranking methods that focus on structural importance are widely used in a variety of applications, from ranking webpages in search engines to identifying key molecules in biomolecular networks. In real social, supply chain, and terrorist networks, one definition of importance considers the impact on information flow or network productivity when a given node is removed. In practice, however, a nearby node may be able to replace another node upon removal, allowing the network to continue functioning as before. This replaceability is an aspect that existing ranking methods do not consider. To address this, we introduce UniqueRank, a Markov-Chain-based approach that captures attribute uniqueness in addition to structural importance, making top-ranked nodes harder to replace. We find that UniqueRank identifies important nodes whose attributes differ from those of their neighbors in simple symmetric networks with known ground truth. Further, on real terrorist, social, and supply chain networks, we demonstrate that removing and attempting to replace top UniqueRank nodes often yields larger efficiency reductions than removing and attempting to replace top nodes ranked by competing methods. Finally, we show UniqueRank's versatility by demonstrating its potential to identify structurally critical atoms with unique chemical environments in biomolecular structures.

Large language models (LLMs) show strong potential for simulating human social behaviors and interactions, yet lack large-scale, systematically constructed benchmarks for evaluating their alignment with real-world social attitudes. To bridge this gap, we introduce SocioBench, a comprehensive benchmark derived from the annually collected, standardized survey data of the International Social Survey Programme (ISSP). The benchmark aggregates over 480,000 real respondent records from more than 30 countries, spanning 10 sociological domains and over 40 demographic attributes. Our experiments indicate that LLMs achieve only 30-40% accuracy when simulating individuals in complex survey scenarios, with statistically significant differences across domains and demographic subgroups. These findings highlight several limitations of current LLMs in survey scenarios, including insufficient individual-level data coverage, inadequate scenario diversity, and missing group-level modeling.

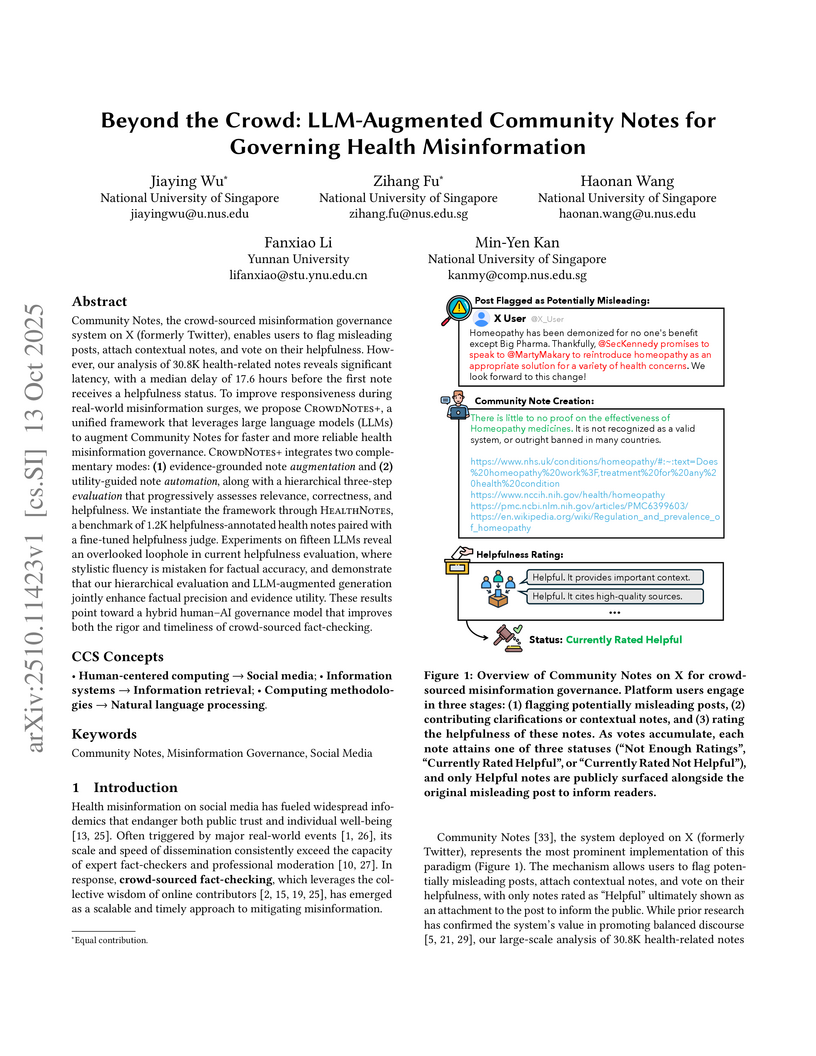

Researchers at the National University of Singapore introduced CrowdNotes+, a framework that integrates Large Language Models (LLMs) to enhance the timeliness and reliability of crowd-sourced misinformation governance on social media. The system addresses significant delays in existing platforms, with LLM-generated notes achieving higher helpfulness scores than human baselines in a rigorous evaluation, while exposing a systemic bias in human voting that often prioritizes stylistic fluency over factual accuracy.

The computational role of imagination remains debated. While classical accounts emphasize reward maximization, emerging evidence suggests imagination serves a broader function: accessing internal world models (IWMs). Here, we employ psychological network analysis to compare IWMs in humans and large language models (LLMs) through imagination vividness ratings. Using the Vividness of Visual Imagery Questionnaire (VVIQ-2) and Plymouth Sensory Imagery Questionnaire (PSIQ), we construct imagination networks from three human populations (Florida, Poland, London; N=2,743) and six LLM variants in two conversation conditions. Human imagination networks demonstrate robust correlations across centrality measures (expected influence, strength, closeness) and consistent clustering patterns, indicating shared structural organization of IWMs across populations. In contrast, LLM-derived networks show minimal clustering and weak centrality correlations, even when manipulating conversational memory. These systematic differences persist across environmental scenes (VVIQ-2) and sensory modalities (PSIQ), revealing fundamental disparities between human and artificial world models. Our network-based approach provides a quantitative framework for comparing internally-generated representations across cognitive agents, with implications for developing human-like imagination in artificial intelligence systems.

Mental health forums offer valuable insights into psychological issues, stressors, and potential solutions. We propose MHINDR, a large language model (LLM) based framework integrated with DSM-5 criteria to analyze user-generated text, diagnose mental health conditions, and generate personalized interventions and insights for mental health practitioners. Our approach emphasizes the extraction of temporal information for accurate diagnosis and symptom progression tracking, together with psychological features to create comprehensive mental health summaries of users. The framework delivers scalable, customizable, and data-driven therapeutic recommendations, adaptable to diverse clinical contexts, patient needs, and workplace well-being programs.

The study of emergent behaviors in large language model (LLM)-driven multi-agent systems is a critical research challenge, yet progress is limited by a lack of principled methodologies for controlled experimentation. To address this, we introduce Shachi, a formal methodology and modular framework that decomposes an agent's policy into core cognitive components: Configuration for intrinsic traits, Memory for contextual persistence, and Tools for expanded capabilities, all orchestrated by an LLM reasoning engine. This principled architecture moves beyond brittle, ad-hoc agent designs and enables the systematic analysis of how specific architectural choices influence collective behavior. We validate our methodology on a comprehensive 10-task benchmark and demonstrate its power through novel scientific inquiries. Critically, we establish the external validity of our approach by modeling a real-world U.S. tariff shock, showing that agent behaviors align with observed market reactions only when their cognitive architecture is appropriately configured with memory and tools. Our work provides a rigorous, open-source foundation for building and evaluating LLM agents, aimed at fostering more cumulative and scientifically grounded research.
