Saratoga High School

24 Oct 2024

Large Language Models (LLMs) have recently demonstrated impressive capabilities across various real-world applications. However, due to the current text-in-text-out paradigm, it remains challenging for LLMs to handle dynamic and complex application constraints, let alone devise general solutions that meet predefined system goals. Current common practices like model finetuning and reflection-based reasoning often address these issues case-by-case, limiting their generalizability. To address this issue, we propose a flexible framework that enables LLMs to interact with system interfaces, summarize constraint concepts, and continually optimize performance metrics by collaborating with human experts. As a case in point, we initialized a travel planner agent by establishing constraints from evaluation interfaces. Then, we employed both LLM-based and human discriminators to identify critical cases and continuously improve agent performance until the desired outcomes were achieved. After just one iteration, our framework achieved a 7.78% pass rate with the human discriminator (a 40.2% improvement over baseline) and a 6.11% pass rate with the LLM-based discriminator. Given the adaptability of our proposal, we believe this framework can be applied to a wide range of constraint-based applications and lay a solid foundation for model finetuning with performance-sensitive data samples.
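The pass-rate metric and the selection of critical cases can be shown with a minimal sketch. It assumes each constraint is a Python predicate over a plan dictionary. The constraint names, the plan fields (`cost`, `budget`, `days`, `requested_days`), and the helpers `failed_constraints`, `pass_rate`, and `critical_cases` are hypothetical. Neither the LLM-based nor the human discriminator is modeled. A plan counts as passing only if it satisfies every constraint at once, and the failing plans are the cases fed back for refinement.

```python
from typing import Any, Callable, Dict, List

Plan = Dict[str, Any]

# Hypothetical constraints for illustration; in the framework described above,
# constraints are derived from the evaluation interfaces.
CONSTRAINTS: Dict[str, Callable[[Plan], bool]] = {
    "within_budget": lambda p: p["cost"] <= p["budget"],
    "correct_length": lambda p: len(p["days"]) == p["requested_days"],
}


def failed_constraints(plan: Plan) -> List[str]:
    """Names of the constraints this plan violates."""
    return [name for name, check in CONSTRAINTS.items() if not check(plan)]


def pass_rate(plans: List[Plan]) -> float:
    """Fraction of plans that satisfy every constraint simultaneously."""
    if not plans:
        return 0.0
    return sum(1 for p in plans if not failed_constraints(p)) / len(plans)


def critical_cases(plans: List[Plan]) -> List[Plan]:
    """Failing plans, i.e. the cases handed back for the next refinement round."""
    return [p for p in plans if failed_constraints(p)]


plans = [
    {"cost": 900, "budget": 1000, "days": ["d1", "d2", "d3"], "requested_days": 3},
    {"cost": 1200, "budget": 1000, "days": ["d1", "d2", "d3"], "requested_days": 3},
    {"cost": 500, "budget": 1000, "days": ["d1", "d2"], "requested_days": 3},
]
print(pass_rate(plans))
print([failed_constraints(p) for p in critical_cases(plans)])
```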

Early detection of suicidal ideation in depressed individuals can allow for adequate medical attention and support, which in many cases is life-saving. Recent NLP research focuses on classifying, from a given piece of text, whether an individual is suicidal or clinically healthy. However, there have been no major attempts to differentiate between depression and suicidal ideation, which is an important clinical challenge. Due to the scarce availability of EHR data, suicide notes, or other similar verified sources, web query data has emerged as a promising alternative. Online sources, such as Reddit, allow for anonymity that prompts honest disclosure of symptoms, making them a plausible source even in a clinical setting. However, these online datasets also result in lower performance, which can be attributed to the inherent noise in web-scraped labels and necessitates a noise-removal process. Thus, we propose SDCNL, a suicide versus depression classification method through a deep learning approach. We utilize online content from Reddit to train our algorithm, and to verify and correct noisy labels, we propose a novel unsupervised label correction method which, unlike previous work, does not require prior noise distribution information. Our extensive experimentation with multiple deep word embedding models and classifiers displays the strong performance of the method in a new, challenging classification application. We make our code and dataset available at this https URL
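The abstract does not spell out how noisy labels are corrected. The sketch below is one generic example of unsupervised label correction that needs no prior noise distribution, and it is not necessarily the SDCNL procedure. It clusters text embeddings into two groups with k-means and relabels every sample with the majority noisy label of its cluster. The embeddings are assumed to be precomputed vectors. `kmeans2` and `correct_labels` are hypothetical helpers.

```python
import math
from collections import Counter
from typing import List, Sequence


def kmeans2(points: List[Sequence[float]], iters: int = 20) -> List[int]:
    """Two-cluster k-means with a deterministic farthest-point initialisation."""
    first = list(points[0])
    second = list(max(points, key=lambda p: math.dist(p, first)))
    centers = [first, second]
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest center for every point.
        assign = [min((0, 1), key=lambda k: math.dist(p, centers[k])) for p in points]
        # Update step: move each center to the mean of its members.
        for k in (0, 1):
            members = [p for p, a in zip(points, assign) if a == k]
            if members:
                centers[k] = [sum(col) / len(members) for col in zip(*members)]
    return assign


def correct_labels(points: List[Sequence[float]], noisy: List[int]) -> List[int]:
    """Replace each label with the majority noisy label of the point's cluster."""
    clusters = kmeans2(points)
    majority = {
        k: Counter(y for y, c in zip(noisy, clusters) if c == k).most_common(1)[0][0]
        for k in set(clusters)
    }
    return [majority[c] for c in clusters]


# Toy 2-D "embeddings": 0 = depression, 1 = suicidal ideation; index 4 is mislabeled.
points = [(0.0, 0.0), (0.5, 0.2), (0.1, 0.4), (5.0, 5.0), (5.2, 4.8), (4.9, 5.3)]
noisy = [0, 0, 0, 1, 0, 1]
print(correct_labels(points, noisy))  # [0, 0, 0, 1, 1, 1]
```

A hard majority vote overwrites every label. A more conservative variant would flip only the labels for which the clustering is confident.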

Sparse Fusion Transformers (SFT) introduce an efficient architecture for multimodal classification by leveraging the complementary nature of modalities to aggressively sparsify unimodal representations before fusion. The approach achieves up to an 11-fold reduction in computational cost and memory while maintaining or improving accuracy on benchmark datasets like VGGSound and CMU-MOSEI.
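The idea of sparsifying each modality before fusion can be sketched under stated assumptions. Tokens are plain vectors, each token is scored by its L2 norm, and the top-k tokens per modality are kept and concatenated. The scoring rule, `k`, and the helper names are hypothetical stand-ins, not the SFT sparsification operator. Self-attention over n fused tokens compares every pair, so its cost grows roughly with n². Cutting the token count therefore shrinks fusion cost quadratically.

```python
import math
from typing import List, Sequence

Token = Sequence[float]


def topk_tokens(tokens: Sequence[Token], k: int) -> List[List[float]]:
    """Keep the k tokens with the largest L2 norm, in their original order."""
    ranked = sorted(range(len(tokens)), key=lambda i: math.hypot(*tokens[i]), reverse=True)
    return [list(tokens[i]) for i in sorted(ranked[:k])]


def sparse_fuse(audio: Sequence[Token], video: Sequence[Token], k: int) -> List[List[float]]:
    """Sparsify each modality independently, then concatenate for the fusion layers."""
    return topk_tokens(audio, k) + topk_tokens(video, k)


def attention_pairs(n_tokens: int) -> int:
    """Number of token-token interactions in one full self-attention layer."""
    return n_tokens * n_tokens


audio = [[1.0, 0.0], [3.0, 4.0], [0.0, 2.0]]
video = [[1.0, 1.0], [0.0, 0.0], [6.0, 8.0], [2.0, 0.0]]
fused = sparse_fuse(audio, video, k=1)
print(fused)  # [[3.0, 4.0], [6.0, 8.0]]
print(attention_pairs(len(audio) + len(video)) / attention_pairs(len(fused)))  # 12.25
```

The 12.25× ratio is only arithmetic on these toy sizes. It is unrelated to the benchmark figures reported above.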

CT image quality is heavily reliant on radiation dose, which causes a trade-off between radiation dose and image quality that affects subsequent image-based diagnostic performance. However, high radiation can be harmful to both patients and operators. Several deep learning-based approaches have been proposed to denoise low-dose images. However, those approaches require access to large training sets, specifically full-dose CT images for reference, which can often be difficult to obtain. Self-supervised learning is an emerging alternative that lowers the reference data requirement by facilitating unsupervised learning. Currently available self-supervised CT denoising methods either depend on a foreign domain or rely on pretext tasks that are not very task-relevant. To tackle these challenges, we propose a novel self-supervised learning approach, namely Self-Supervised Window-Leveling for Image DeNoising (SSWL-IDN), leveraging an innovative, task-relevant, simple, yet effective surrogate: prediction of the window-leveled equivalent. SSWL-IDN leverages residual learning and a hybrid loss combining perceptual loss and MSE, all incorporated in a VAE framework. Our extensive (in- and cross-domain) experimentation demonstrates the effectiveness of SSWL-IDN in aggressive denoising of CT (abdomen and chest) images acquired at only a 5% dose level.
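Window-leveling is a standard CT display transform. Hounsfield units (HU) are clipped to a window of width W centred at level L and then rescaled to [0, 1]. The sketch below builds such a surrogate target, assuming the target is simply the window-leveled version of the same input slice. The soft-tissue window (L = 40, W = 400), the weight `lam`, and the helper names are illustrative assumptions. The perceptual term is passed in as a precomputed number because computing it requires a pretrained feature network, which this sketch does not model.

```python
from typing import List


def window_level(hu: List[float], level: float, width: float) -> List[float]:
    """Clip HU values to [level - width/2, level + width/2] and rescale to [0, 1]."""
    lo = level - width / 2.0
    hi = level + width / 2.0
    return [(min(max(v, lo), hi) - lo) / width for v in hu]


def mse(pred: List[float], target: List[float]) -> float:
    """Mean squared error between two equally sized pixel lists."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def hybrid_loss(pred: List[float], target: List[float], perceptual: float, lam: float = 0.1) -> float:
    """MSE plus a weighted perceptual term that is computed elsewhere."""
    return mse(pred, target) + lam * perceptual


# A toy "slice" of HU values: air, fat, soft tissue, dense bone.
slice_hu = [-1000.0, -60.0, 40.0, 1000.0]
target = window_level(slice_hu, level=40.0, width=400.0)
print(target)  # [0.0, 0.25, 0.5, 1.0]
```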

Diabetes is one of the most prevalent chronic diseases in Bangladesh, and as a result, Diabetic Retinopathy (DR) is widespread in the population. DR, an eye illness caused by diabetes, can lead to blindness if it is not identified and treated in its early stages. Unfortunately, diagnosis of DR requires medically trained professionals, but Bangladesh has limited specialists in comparison to its population. Moreover, the screening process is often expensive, prohibiting many from receiving timely and proper diagnosis. To address the problem, we introduce a deep learning algorithm which screens for different stages of DR. We use a state-of-the-art CNN architecture to diagnose patients based on retinal fundus imagery. This paper is an experimental evaluation of the algorithm we developed for DR diagnosis and screening specifically for Bangladeshi patients. We perform this validation study using separate pools of retinal image data of real patients from a hospital and field studies in Bangladesh. Our results show that the algorithm is effective at screening Bangladeshi eyes even when trained on a public dataset which is out of domain, and can accurately determine the stage of DR as well, achieving an overall accuracy of 92.27% and 93.02% on two validation sets of Bangladeshi eyes. The results confirm the ability of the algorithm to be used in real clinical settings and applications due to its high accuracy and classwise metrics. Our algorithm is implemented in the application Drishti, which is used to screen for DR in patients living in rural areas in Bangladesh, where access to professional screening is limited.
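Overall accuracy can hide weak performance on rarer DR stages, which is why the classwise metrics matter. The sketch below computes both, assuming integer stage labels. The labels and predictions are made up for illustration and are not the study's data.

```python
from collections import Counter
from typing import Dict, List, Tuple


def overall_accuracy(y_true: List[int], y_pred: List[int]) -> float:
    """Fraction of predictions that match the true stage."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_metrics(y_true: List[int], y_pred: List[int]) -> Dict[int, Tuple[float, float]]:
    """Map each class to (precision, recall) using confusion-matrix counts."""
    cm = Counter(zip(y_true, y_pred))
    metrics = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = cm[(c, c)]
        predicted = sum(n for (_, p), n in cm.items() if p == c)
        actual = sum(n for (t, _), n in cm.items() if t == c)
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        metrics[c] = (precision, recall)
    return metrics


# Hypothetical stage labels (0 = no DR, higher = more severe).
y_true = [0, 0, 1, 1, 2, 2, 2, 0]
y_pred = [0, 0, 1, 2, 2, 2, 1, 0]
print(overall_accuracy(y_true, y_pred))  # 0.75
print(per_class_metrics(y_true, y_pred))
```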

Semi-supervised learning has been gaining attention as it allows for performing image analysis tasks such as classification with limited labeled data. Some popular algorithms using Generative Adversarial Networks (GANs) for semi-supervised classification share a single architecture for classification and discrimination. However, this may require a model to converge to a separate data distribution for each task, which may reduce overall performance. While progress in semi-supervised learning has been made, less addressed are small-scale, fully-supervised tasks where even unlabeled data is unavailable and unattainable. We therefore propose a novel GAN model, namely External Classifier GAN (EC-GAN), that utilizes GANs and semi-supervised algorithms to improve classification in fully-supervised regimes. Our method leverages a GAN to generate artificial data used to supplement supervised classification. More specifically, we attach an external classifier, hence the name EC-GAN, to the GAN's generator, as opposed to sharing an architecture with the discriminator. Our experiments demonstrate that EC-GAN's performance is comparable to the shared architecture method, far superior to the standard data augmentation and regularization-based approach, and effective on a small, realistic dataset.
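The external-classifier idea can be sketched at the level of the classifier's loss. This sketch treats the following as an assumption, a plausible reading of the description above rather than a confirmed detail. Generated images are pseudo-labeled by the classifier's own argmax and kept only when its confidence exceeds a threshold. Their loss is then weighted by a factor `lam` relative to the supervised loss on real images. The threshold, the weight, and the function names are illustrative. Gradients and the GAN's own generator and discriminator updates are omitted.

```python
import math
from typing import List, Sequence


def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Negative log-likelihood of the target class, clamped for numerical safety."""
    return -math.log(max(probs[label], 1e-12))


def ec_classifier_loss(
    real_probs: List[Sequence[float]],
    real_labels: List[int],
    fake_probs: List[Sequence[float]],
    threshold: float = 0.7,
    lam: float = 0.1,
) -> float:
    """Supervised loss on real data plus a weighted pseudo-label loss on generated data."""
    real = sum(cross_entropy(p, y) for p, y in zip(real_probs, real_labels)) / len(real_labels)
    # Keep only generated samples the classifier is confident about; label them by argmax.
    confident = [(p, max(range(len(p)), key=lambda i: p[i])) for p in fake_probs if max(p) >= threshold]
    fake = sum(cross_entropy(p, y) for p, y in confident) / len(confident) if confident else 0.0
    return real + lam * fake


real_probs = [[0.9, 0.1], [0.2, 0.8]]
real_labels = [0, 1]
fake_probs = [[0.95, 0.05], [0.6, 0.4]]  # only the first passes the 0.7 threshold
print(ec_classifier_loss(real_probs, real_labels, fake_probs))
```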

The increasing size and severity of wildfires across the western United States have generated dangerous levels of PM2.5 concentrations in recent years. In a changing climate, expanding the use of prescribed fires is widely considered to be the most robust fire mitigation strategy. However, reliably forecasting the potential air quality impact from prescribed fires, which is critical in planning the prescribed fires' location and time, at hourly to daily time scales remains a challenging problem. In this paper, we introduce a spatial-temporal graph neural network (GNN) based forecasting model for hourly PM2.5 predictions across California. Using a two-step approach, we leverage our forecasting model to estimate the PM2.5 contribution of wildfires. Integrating the GNN-based PM2.5 forecasting model with prescribed fire simulations, we propose a novel framework to forecast the PM2.5 pollution of prescribed fires. This framework helps determine March as the optimal month for implementing prescribed fires in California and quantifies the potential air quality trade-offs involved in conducting more prescribed fires outside the fire season.
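Two ideas are illustrated here. The spatial part of a graph-based forecaster is shown as one fixed message-passing step over monitoring sites. The wildfire contribution is shown as the difference between a forecast with fire and a forecast without it. Both are assumptions for illustration. The model described above is a learned spatial-temporal GNN, and this summary does not give its exact two-step procedure. The blend weight, site names, and emission values are hypothetical.

```python
from typing import Dict, List

Graph = Dict[str, List[str]]


def message_pass(values: Dict[str, float], graph: Graph, self_weight: float = 0.5) -> Dict[str, float]:
    """One spatial step: blend each node's value with the mean of its neighbours."""
    out = {}
    for node, nbrs in graph.items():
        nbr_mean = sum(values[n] for n in nbrs) / len(nbrs) if nbrs else values[node]
        out[node] = self_weight * values[node] + (1.0 - self_weight) * nbr_mean
    return out


def toy_forecast(background: Dict[str, float], fire: Dict[str, float], graph: Graph) -> Dict[str, float]:
    """Hypothetical forecaster: add local fire emissions to background PM2.5, then spread spatially."""
    combined = {n: background[n] + fire.get(n, 0.0) for n in graph}
    return message_pass(combined, graph)


def fire_contribution(background: Dict[str, float], fire: Dict[str, float], graph: Graph) -> Dict[str, float]:
    """Estimate fire-attributable PM2.5 as (forecast with fire) - (forecast without fire)."""
    with_fire = toy_forecast(background, fire, graph)
    without_fire = toy_forecast(background, {}, graph)
    return {n: with_fire[n] - without_fire[n] for n in graph}


# Three sites on a line A - B - C, with a fire near A (values in µg/m³, made up).
graph = {"A": ["B"], "B": ["A", "C"], "C": ["B"]}
background = {"A": 10.0, "B": 10.0, "C": 10.0}
print(fire_contribution(background, {"A": 40.0}, graph))  # {'A': 20.0, 'B': 10.0, 'C': 0.0}
```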

Deep learning-based models, when trained in a fully-supervised manner, can be effective in performing complex image analysis tasks, although contingent upon the availability of large labeled datasets. Especially in the medical imaging domain, however, expert image annotation is expensive, time-consuming, and prone to variability. Semi-supervised learning from limited quantities of labeled data has shown promise as an alternative. Maximizing knowledge gains from copious unlabeled data benefits semi-supervised learning models. Moreover, learning multiple tasks within the same model further improves its generalizability. We propose MultiMix, a new multi-task learning model that jointly learns disease classification and anatomical segmentation in a semi-supervised manner, while preserving explainability through a novel saliency bridge between the two tasks. Our experiments with varying quantities of multi-source labeled data in the training sets confirm the effectiveness of MultiMix in the simultaneous classification of pneumonia and segmentation of the lungs in chest X-ray images. Moreover, both in-domain and cross-domain evaluations across these tasks further showcase the potential of our model to adapt to challenging generalization scenarios.
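A joint objective for classification plus segmentation can be sketched as a weighted sum of a classification loss and a segmentation loss. The sketch assumes binary cross-entropy for the pneumonia label and a soft Dice loss over flattened lung-mask pixels. The weights `w_cls` and `w_seg` are hypothetical. The semi-supervised terms for unlabeled images and the saliency bridge are not modeled.

```python
import math
from typing import Sequence


def binary_cross_entropy(prob: float, label: int, eps: float = 1e-12) -> float:
    """BCE for a single predicted probability, clamped away from 0 and 1."""
    p = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


def soft_dice_loss(pred: Sequence[float], target: Sequence[float], eps: float = 1e-6) -> float:
    """1 - Dice overlap between predicted mask probabilities and a binary mask."""
    inter = sum(p * t for p, t in zip(pred, target))
    denom = sum(pred) + sum(target)
    return 1.0 - (2.0 * inter + eps) / (denom + eps)


def multitask_loss(
    cls_prob: float,
    cls_label: int,
    seg_pred: Sequence[float],
    seg_target: Sequence[float],
    w_cls: float = 1.0,
    w_seg: float = 1.0,
) -> float:
    """Weighted sum of the classification and segmentation losses."""
    return w_cls * binary_cross_entropy(cls_prob, cls_label) + w_seg * soft_dice_loss(seg_pred, seg_target)


mask = [1.0, 1.0, 0.0, 0.0]
print(soft_dice_loss(mask, mask))  # 0.0
print(multitask_loss(0.8, 1, [0.9, 0.8, 0.1, 0.0], mask))
```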

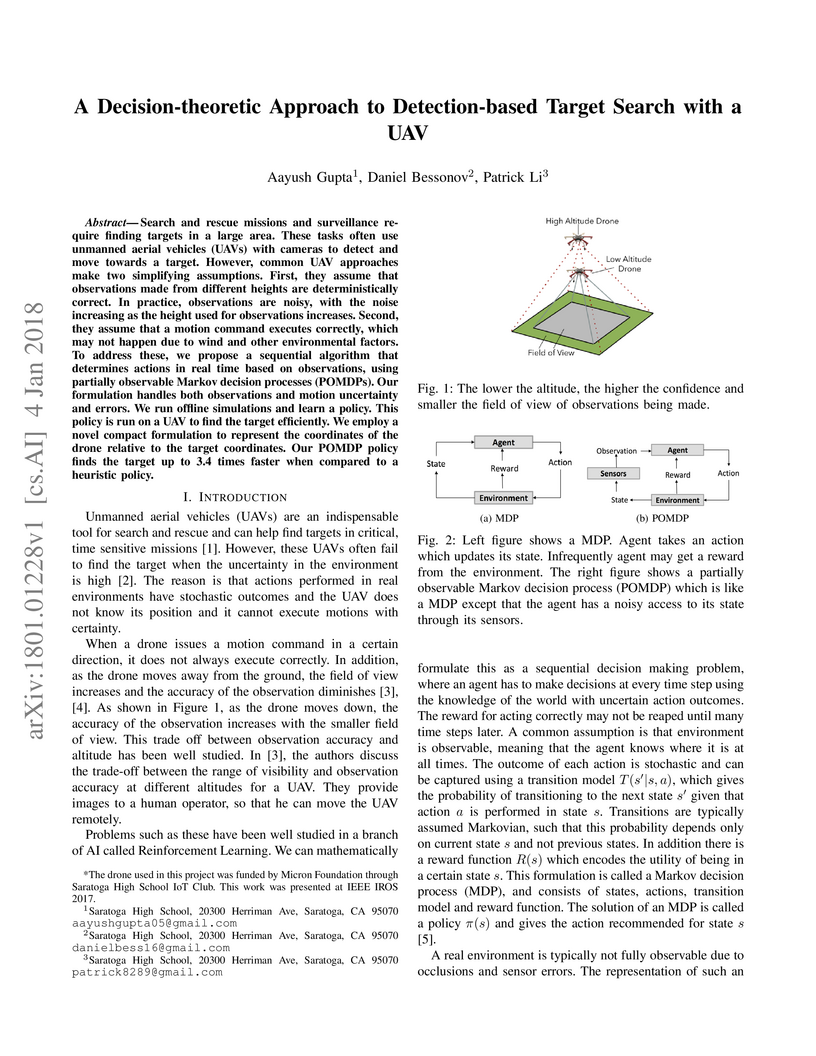

Search and rescue missions and surveillance require finding targets in a large area. These tasks often use unmanned aerial vehicles (UAVs) with cameras to detect and move towards a target. However, common UAV approaches make two simplifying assumptions. First, they assume that observations made from different heights are deterministically correct. In practice, observations are noisy, with the noise increasing as the height used for observations increases. Second, they assume that a motion command executes correctly, which may not happen due to wind and other environmental factors. To address these, we propose a sequential algorithm that determines actions in real time based on observations, using partially observable Markov decision processes (POMDPs). Our formulation handles uncertainty and errors in both observations and motion. We run offline simulations and learn a policy. This policy is run on a UAV to find the target efficiently. We employ a novel compact formulation to represent the coordinates of the drone relative to the target coordinates. Our POMDP policy finds the target up to 3.4 times faster when compared to a heuristic policy.
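At run time, a POMDP policy acts on a belief, which is a probability distribution over where the target might be. The belief is updated by Bayes' rule after each observation. The sketch below shows that update on a 1-D strip of cells. It assumes, hypothetically, that from height h the camera covers cells within distance h of the UAV and that detection accuracy falls linearly with height. The sketch omits the motion-uncertainty transition and the compact relative-coordinate formulation. It is not the authors' offline-learned policy.

```python
from typing import List


def sensor_accuracy(height: int) -> float:
    """Hypothetical model: observations get noisier as altitude increases."""
    return max(0.5, 0.95 - 0.1 * height)


def belief_update(belief: List[float], uav_cell: int, height: int, detected: bool) -> List[float]:
    """Bayes update b'(s) ∝ P(o | s) b(s) for a static target."""
    acc = sensor_accuracy(height)
    posterior = []
    for cell, prior in enumerate(belief):
        in_view = abs(cell - uav_cell) <= height  # flying higher covers a wider footprint
        p_detect = acc if in_view else 1.0 - acc  # false detections when the target is out of view
        likelihood = p_detect if detected else 1.0 - p_detect
        posterior.append(likelihood * prior)
    total = sum(posterior)
    return [p / total for p in posterior]


belief = [0.2] * 5
belief = belief_update(belief, uav_cell=2, height=1, detected=True)
print([round(b, 3) for b in belief])  # [0.053, 0.298, 0.298, 0.298, 0.053]
```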

11 Feb 2025

With increasing demands for fuel efficiency and operational adaptability in commercial aviation, this paper provides a systematic review and classification of morphing wing technologies, analyzing their aerodynamic performance characteristics and adaptability to atmospheric conditions. We first develop a comprehensive classification framework for morphing wing designs based on their scale of morphing, actuation mechanisms, and intended purposes. Through analysis of historical developments and current implementations, we evaluate two significant case studies: the Mission Adaptive Compliant Wing (MACW) and the Adaptive Aspect Ratio (AdAR) morphing wing, which demonstrate performance improvements of up to 25% in drag reduction and 40% in control authority. Our investigation reveals critical trade-offs between full-span and partial morphing approaches, particularly regarding implementation complexity, certification requirements, and operational reliability. The study concludes with an assessment of technical barriers and opportunities, providing specific recommendations for advancing morphing wing technology in commercial aviation applications. Key findings indicate that while advances in materials science and control systems enable practical implementation, certification pathways and maintenance considerations remain critical challenges for widespread adoption.
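The classical finite-wing estimate of induced drag shows why changing aspect ratio matters aerodynamically. It is C_Di = C_L² / (π e AR), where e is the Oswald efficiency factor. The sketch below evaluates this relation for two aspect ratios at fixed lift and efficiency. The numbers are illustrative, and induced drag is only one component of total drag. This textbook relation is not the analysis behind the MACW or AdAR results above.

```python
import math


def induced_drag_coefficient(cl: float, aspect_ratio: float, oswald_e: float = 0.85) -> float:
    """Classical estimate C_Di = C_L**2 / (pi * e * AR) for a finite wing."""
    return cl ** 2 / (math.pi * oswald_e * aspect_ratio)


# Same lift coefficient, wing "morphed" from AR = 8 to AR = 10 (illustrative values).
cdi_8 = induced_drag_coefficient(0.5, 8.0)
cdi_10 = induced_drag_coefficient(0.5, 10.0)
print(f"C_Di at AR=8: {cdi_8:.5f}, at AR=10: {cdi_10:.5f}, ratio {cdi_10 / cdi_8:.2f}")
```

Because C_Di is inversely proportional to AR, raising AR from 8 to 10 cuts induced drag by 20% at the same lift coefficient and efficiency factor.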

The success of deep learning for medical imaging tasks, such as classification, is heavily reliant on the availability of large-scale datasets. However, acquiring datasets with large quantities of labeled data is challenging, as labeling is expensive and time-consuming. Semi-supervised learning (SSL) is a growing alternative to fully-supervised learning, but requires unlabeled samples for training. In medical imaging, many datasets lack unlabeled data entirely, so SSL cannot be used in the conventional way. We propose 3N-GAN, or 3 Network Generative Adversarial Networks, to perform semi-supervised classification of medical images in fully-supervised settings. We incorporate a classifier into the adversarial relationship such that the generator trains adversarially against both the classifier and the discriminator. Our preliminary results show improved classification performance and GAN generations compared with various algorithms. Our work can integrate seamlessly with numerous other medical imaging model architectures and SSL methods for greater performance.

Comprehensive planning agents have been a long-term goal in the field of artificial intelligence. Recent innovations in Natural Language Processing have yielded success through the advent of Large Language Models (LLMs). We seek to improve the travel-planning capability of such LLMs by extending the work of the previous paper TravelPlanner. Our objective is to explore a new method of using LLMs to improve the travel planning experience. We focus specifically on the "sole-planning" mode of travel planning; that is, the agent is given the necessary reference information, and its goal is to create a comprehensive plan from it. While this does not simulate the real world, we feel that optimizing the sole-planning capability of a travel planning agent will still enhance the overall user experience. We propose a semi-automated prompt generation framework that combines LLM-automated prompts with a "human-in-the-loop" to iteratively refine the prompt and improve LLM performance. Our results show that LLM-automated prompts have limitations and that the "human-in-the-loop" greatly improves performance, by 139% after a single iteration.
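The semi-automated loop can be sketched as a small driver. An LLM proposes a prompt revision, a human edits it, and the result is scored on the evaluation set. Every component here is a hypothetical stand-in. `llm_revise`, `human_edit`, and `evaluate` are plain callables, stubbed with string edits and a keyword count so that the sketch runs. No real LLM API is called. Read as a relative gain, a "139%" improvement means the new score is 2.39 times the baseline. The `relative_improvement` helper shows that computation on made-up numbers.

```python
from typing import Callable, List, Tuple


def refine_prompt(
    prompt: str,
    llm_revise: Callable[[str], str],
    human_edit: Callable[[str], str],
    evaluate: Callable[[str], float],
    iterations: int = 1,
) -> Tuple[str, float, List[Tuple[str, float]]]:
    """Alternate LLM revision and human editing; return the best prompt, its score, and the history."""
    history = [(prompt, evaluate(prompt))]
    for _ in range(iterations):
        prompt = human_edit(llm_revise(prompt))
        history.append((prompt, evaluate(prompt)))
    best_prompt, best_score = max(history, key=lambda item: item[1])
    return best_prompt, best_score, history


def relative_improvement(baseline: float, new: float) -> float:
    """Percentage gain of `new` over `baseline`."""
    return (new - baseline) / baseline * 100.0


# Stubs so the sketch runs without an LLM or a human reviewer.
def llm_revise(p: str) -> str:
    return p + " Stay within the user's budget."


def human_edit(p: str) -> str:
    return p + " Use only the provided reference information."


def evaluate(p: str) -> float:
    return sum(kw in p for kw in ("budget", "reference")) / 2


best, score, history = refine_prompt("Create a travel plan.", llm_revise, human_edit, evaluate)
print(score)  # 1.0
print(relative_improvement(0.10, 0.239))  # approximately 139
```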
