Ask or search anything...

An automated design-data augmentation framework enables finetuning of open-source Large Language Models (LLMs) like Llama2 for chip design tasks, achieving state-of-the-art performance in Verilog generation, Verilog repair, and EDA script generation. The framework effectively addresses data scarcity by systematically creating high-quality, aligned datasets for these specialized tasks.

View blogResearchers introduce RealBench, a benchmark for evaluating Verilog generation models that uses real-world IP designs, multi-modal specifications, and rigorous formal verification. Evaluations demonstrate that current state-of-the-art large language models exhibit low accuracy on complex hardware design tasks, particularly for hierarchical designs and submodule instantiations.

View blogThe Cambricon-LLM architecture enables efficient on-device inference of large language models up to 70 billion parameters by integrating a Neural Processing Unit with custom flash memory featuring on-die computation capabilities. This approach achieves an inference speed of 3.44 tokens/s for a 70B parameter model, demonstrating a 22x speedup over the best existing solutions.

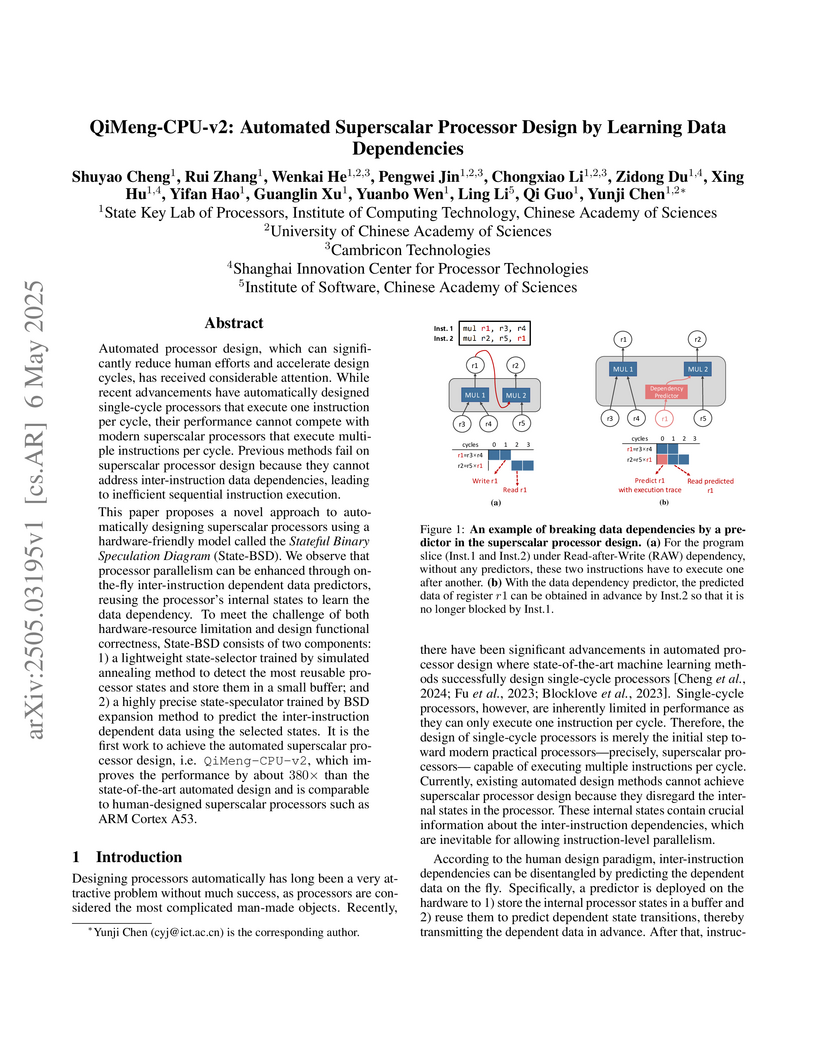

View blogA machine learning-based approach enables automated design of superscalar RISC-V processors through a novel State-BSD model for learning data dependencies, resulting in QiMeng-CPU-v2 which achieves 380x performance improvement over previous automated designs and successfully runs Linux and SPEC benchmarks on FPGA implementation.

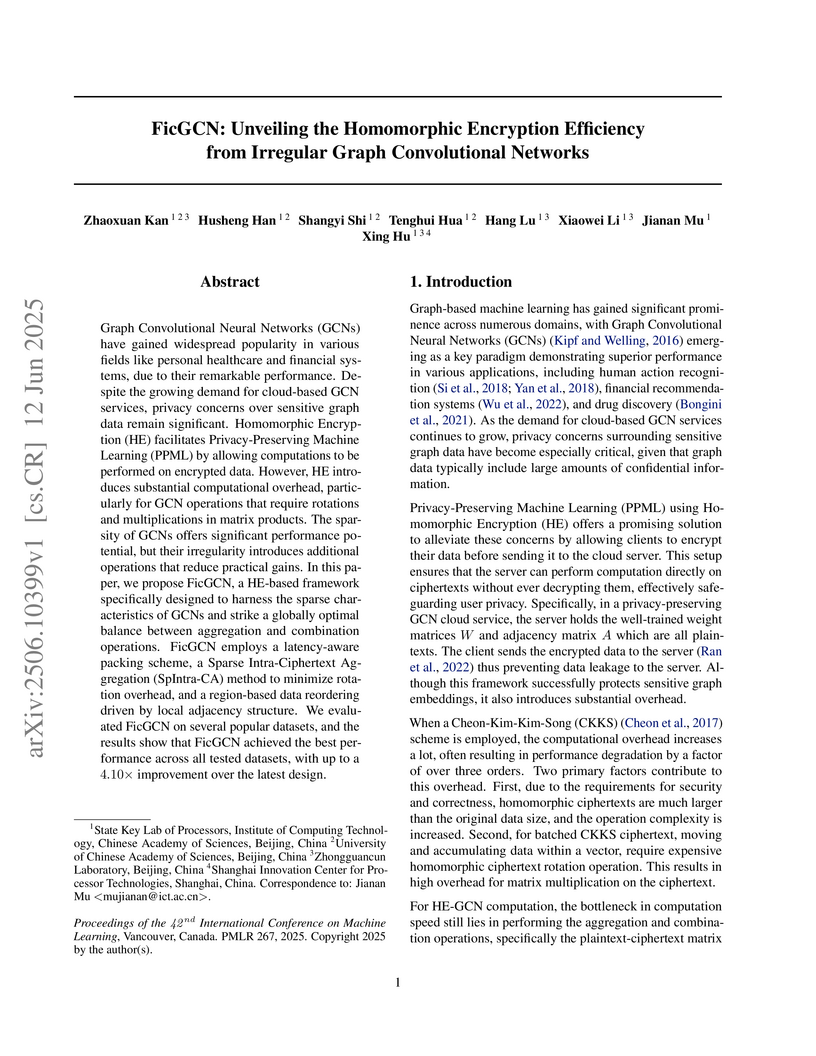

View blogResearchers from ICT Chinese Academy of Sciences develop FicGCN, a homomorphic encryption framework for privacy-preserving Graph Convolutional Network inference that achieves 4.10x speedup over CryptoGCN on large graphs (Corafull with 19,793 nodes) and up to 34.2x improvement over Gazelle by resolving the fundamental conflict between GCN's irregular sparsity and CKKS homomorphic encryption's SIMD computation model through a novel Sparse Intra-Ciphertext Aggregation (SpIntra-CA) algorithm that reduces expensive rotation operations by 66.4% on Cora dataset, combined with latency-aware packing schemes and Node Order Optimization that leverages cyclic shift properties to enable efficient neighbor aggregation within single ciphertexts rather than generating redundant encrypted copies, addressing the critical bottleneck in deploying GCNs for sensitive applications like healthcare and finance where data privacy is paramount but existing HE-GCN solutions suffer from prohibitive computational overhead that makes cloud-based inference impractical.

View blog University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign Chinese Academy of Sciences

Chinese Academy of Sciences

University of Science and Technology of China

University of Science and Technology of China

Tsinghua University

Tsinghua University