Ask or search anything...

A two-stage pipeline called D ◦ S is introduced to robustly identify artists' vocal likenesses in both authentic and deepfake audio, specifically by triaging and prioritizing high-quality forgeries. This approach improves singer identification accuracy on deepfake-containing datasets, reducing the average Equal Error Rate from 24.79% to 16.19%.

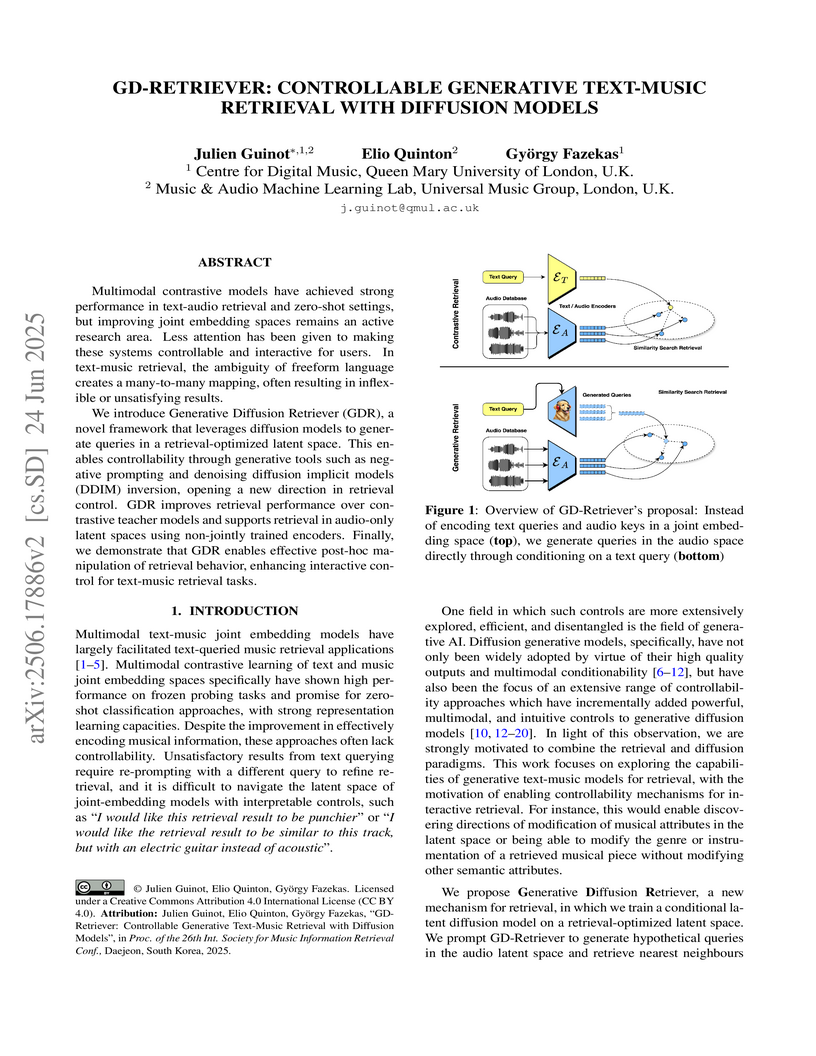

View blogA framework called GD-Retriever employs conditional latent diffusion models to synthesize hypothetical audio latent queries from text, enabling a more controllable text-music retrieval experience. The method improves in-domain retrieval performance compared to contrastive baselines and allows interactive refinement of search results through negative prompting and DDIM inversion, all while preserving the fidelity of modified queries.

View blogA unified framework from QMUL and Universal Music Group researchers evaluates learned representations along four key axes (informativeness, equivariance, invariance, and disentanglement), revealing that models with similar downstream performance can exhibit drastically different structural properties when analyzed through controlled factors of variation in image and speech domains.

View blogThe Song Describer Dataset (SDD) provides a new public corpus of 1106 human-written music captions for 706 unique 2-minute music recordings, explicitly designed for out-of-domain evaluation of music-and-language models. Benchmarking with SDD demonstrates significant performance drops for music captioning models and reveals generalization limitations for existing models when tested on unseen data distributions.

View blog

Queen Mary University of London

Queen Mary University of London

Université de Montréal

Université de Montréal ByteDance

ByteDance