audio-and-speech-processing

Lightweight, real-time text-to-speech systems are crucial for accessibility. However, the most efficient TTS models often rely on lightweight phonemizers that struggle with context-dependent challenges. In contrast, more advanced phonemizers with a deeper linguistic understanding typically incur high computational costs, which prevents real-time performance.

This paper examines the trade-off between phonemization quality and inference speed in G2P-aided TTS systems, introducing a practical framework to bridge this gap. We propose lightweight strategies for context-aware phonemization and a service-oriented TTS architecture that executes these modules as independent services. This design decouples heavy context-aware components from the core TTS engine, effectively breaking the latency barrier and enabling real-time use of high-quality phonemization models. Experimental results confirm that the proposed system improves pronunciation soundness and linguistic accuracy while maintaining real-time responsiveness, making it well-suited for offline and end-device TTS applications.

A two-stage self-supervised framework integrates the Joint-Embedding Predictive Architecture (JEPA) with Density Adaptive Attention Mechanisms (DAAM) to learn robust speech representations. This approach generates efficient, reversible discrete speech tokens at an ultra-low rate of 47.5 tokens/sec, designed for seamless integration with large language models.

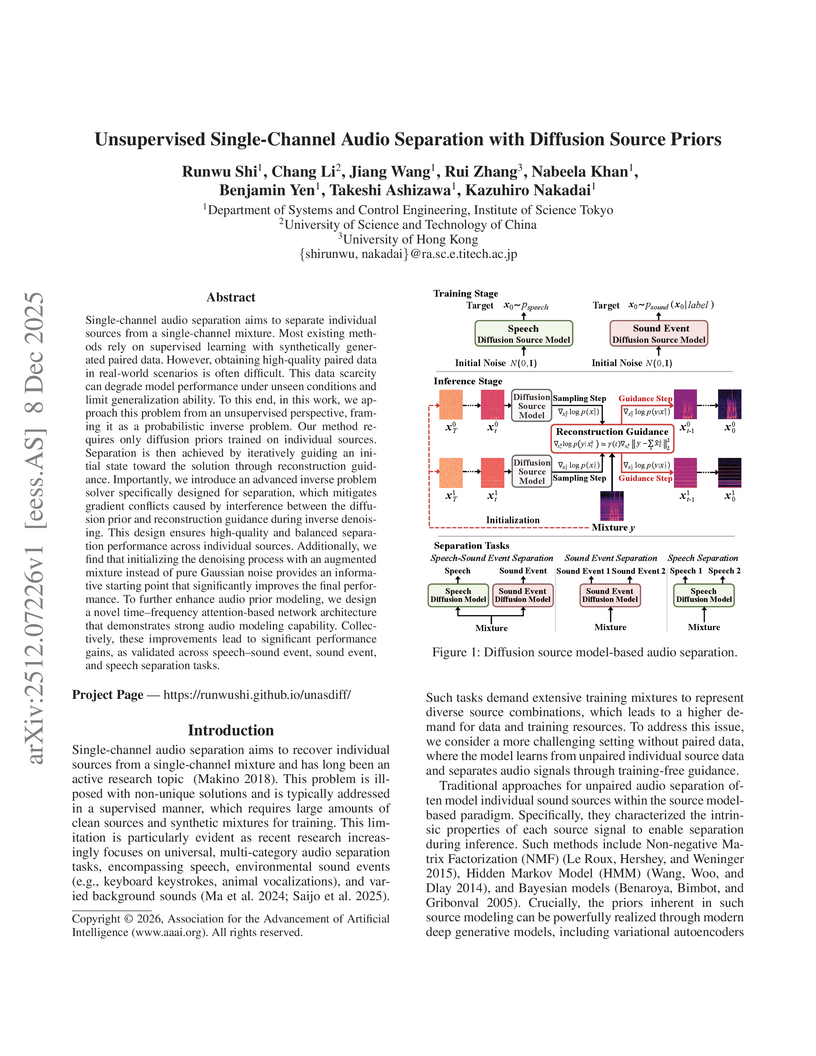

An unsupervised framework for single-channel audio separation, developed at the Institute of Science Tokyo, employs diffusion models as source priors for disentangling sound sources from a single-microphone recording. The method incorporates a novel hybrid guidance schedule and noise-augmented mixture initialization, achieving separation quality comparable to the supervised Conv-TasNet model and outperforming previous unsupervised methods without requiring paired training data.

Audio deepfakes have reached a level of realism that makes it increasingly difficult to distinguish between human and artificial voices, which poses risks such as identity theft or spread of disinformation. Despite these concerns, research on humans' ability to identify deepfakes is limited, with most studies focusing on English and very few exploring the reasons behind listeners' perceptual decisions. This study addresses this gap through a perceptual experiment in which 54 listeners (28 native Spanish speakers and 26 native Japanese speakers) classified voices as natural or synthetic, and justified their choices. The experiment included 80 stimuli (50% artificial), organized according to three variables: language (Spanish/Japanese), speech style (audiobooks/interviews), and familiarity with the voice (familiar/unfamiliar). The goal was to examine how these variables influence detection and to analyze qualitatively the reasoning behind listeners' perceptual decisions. Results indicate an average accuracy of 59.11%, with higher performance on authentic samples. Judgments of vocal naturalness rely on a combination of linguistic and non-linguistic cues. Comparing Japanese and Spanish listeners, our qualitative analysis further reveals both shared cues and notable cross-linguistic differences in how listeners conceptualize the "humanness" of speech. Overall, participants relied primarily on suprasegmental and higher-level or extralinguistic characteristics - such as intonation, rhythm, fluency, pauses, speed, breathing, and laughter - over segmental features. These findings underscore the complexity of human perceptual strategies in distinguishing natural from artificial speech and align partly with prior research emphasizing the importance of prosody and phenomena typical of spontaneous speech, such as disfluencies.

Always-on sensors are increasingly expected to host a variety of tiny neural networks and to continuously perform inference on time series of the data they sense. To meet lifetime and energy consumption requirements when operating on battery, such hardware uses microcontrollers (MCUs) with a tiny memory budget, e.g., 128 kB of RAM. In this context, optimizing data flows across neural network layers becomes crucial. In this paper, we introduce TinyDéjàVu, a new framework and novel algorithms we designed to drastically reduce the RAM footprint required by inference using various tiny ML models for sensor data time series on typical microcontroller hardware. We publish the implementation of TinyDéjàVu as open source, and we perform reproducible benchmarks on hardware. We show that TinyDéjàVu can save more than 60% of RAM usage and eliminate up to 90% of redundant compute on overlapping sliding window inputs.
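
To illustrate where redundant compute on overlapping sliding windows comes from, here is a minimal sketch that caches per-sample features so each sample is processed once, however many windows contain it. It is not the TinyDéjàVu algorithm; the names `sliding_features`, `featurize`, and `redundant_fraction` are hypothetical and chosen only for this example.

```python
from collections import deque


def sliding_features(stream, window, hop, featurize):
    """Yield one list of per-sample features per window.

    Each sample is passed to `featurize` exactly once; overlapping windows
    reuse the cached results instead of recomputing them.
    """
    if not 0 < hop <= window:
        raise ValueError("expected 0 < hop <= window")
    cache = deque(maxlen=window)  # holds only the most recent `window` features
    since_emit = 0
    for sample in stream:
        cache.append(featurize(sample))
        since_emit += 1
        if len(cache) == window and since_emit >= hop:
            since_emit = 0
            yield list(cache)


def redundant_fraction(window, hop):
    """Share of per-sample work that naive per-window recomputation repeats."""
    return max(0.0, (window - hop) / window)
```

Under these assumptions, a window of 10 samples advanced by 1 sample gives `redundant_fraction(10, 1) == 0.9`: nine of every ten per-sample computations in a naive implementation repeat earlier work.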

This paper describes the BUT submission to the ESDD 2026 Challenge, specifically focusing on Track 1: Environmental Sound Deepfake Detection with Unseen Generators. To address the critical challenge of generalizing to audio generated by unseen synthesis algorithms, we propose a robust ensemble framework leveraging diverse Self-Supervised Learning (SSL) models. We conduct a comprehensive analysis of general audio SSL models (including BEATs, EAT, and Dasheng) and speech-specific SSLs. These front-ends are coupled with a lightweight Multi-Head Factorized Attention (MHFA) back-end to capture discriminative representations. Furthermore, we introduce a feature domain augmentation strategy based on distribution uncertainty modeling to enhance model robustness against unseen spectral distortions. All models are trained exclusively on the official EnvSDD data, without using any external resources. Experimental results demonstrate the effectiveness of our approach: our best single system achieved Equal Error Rates (EER) of 0.00%, 4.60%, and 4.80% on the Development, Progress (Track 1), and Final Evaluation sets, respectively. The fusion system further improved generalization, yielding EERs of 0.00%, 3.52%, and 4.38% across the same partitions.
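
For readers unfamiliar with the metric, the following is a minimal sketch of how an Equal Error Rate can be estimated from detector scores. It is not the challenge's official scoring tool; the function name and the convention that a truthy label marks the target class (with higher scores meaning "more likely target") are assumptions made for this example.

```python
def equal_error_rate(scores, labels):
    """Estimate the EER by sweeping a decision threshold over observed scores.

    A sample is accepted as the target class when score >= threshold.
    Returns the mean of the false-acceptance and false-rejection rates at
    the threshold where the two rates are closest.
    """
    positives = [s for s, y in zip(scores, labels) if y]
    negatives = [s for s, y in zip(scores, labels) if not y]
    if not positives or not negatives:
        raise ValueError("need at least one sample of each class")

    best_gap, eer = None, None
    for threshold in sorted(set(scores)) + [float("inf")]:
        far = sum(s >= threshold for s in negatives) / len(negatives)
        frr = sum(s < threshold for s in positives) / len(positives)
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer
```

With perfectly separated scores the two error rates meet at zero, which is what an EER of 0.00% on a development set corresponds to.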

Speech Enhancement (SE) and Speech Separation (SS) have traditionally been treated as distinct tasks in speech processing. However, real-world audio often involves both background noise and overlapping speakers, motivating the need for a unified solution. While recent approaches have attempted to integrate SE and SS within multi-stage architectures, these approaches typically involve complex, parameter-heavy models and rely on supervised training, limiting scalability and generalization. In this work, we propose UniVoiceLite, a lightweight and unsupervised audio-visual framework that unifies SE and SS within a single model. UniVoiceLite leverages lip motion and facial identity cues to guide speech extraction and employs Wasserstein distance regularization to stabilize the latent space without requiring paired noisy-clean data. Experimental results demonstrate that UniVoiceLite achieves strong performance in both noisy and multi-speaker scenarios, combining efficiency with robust generalization. The source code is publicly available.

The present document is Part 1 of a 2-part introduction to ambisonics and aims at readers who would like to work practically with ambisonics. We leave out deep technical details in this part and focus on helping the reader to develop an intuitive understanding of the underlying concept. We explain what ambisonic signals are, how they can be obtained, what manipulations can be applied to them, and how they can be reproduced to a listener. We provide a variety of audio examples that illustrate the matter. Part 2 of this introduction into ambisonics is provided in a separate document and aims at readers who would like to understand the mathematical details.

Speech Activity Detection (SAD) systems often misclassify singing as speech, leading to degraded performance in applications such as dialogue enhancement and automatic speech recognition. We introduce Singing-Robust Speech Activity Detection (SR-SAD), a neural network designed to robustly detect speech in the presence of singing. Our key contributions are: i) a training strategy using controlled ratios of speech and singing samples to improve discrimination, ii) a computationally efficient model that maintains robust performance while reducing inference runtime, and iii) a new evaluation metric tailored to assess SAD robustness in mixed speech-singing scenarios. Experiments on a challenging dataset spanning multiple musical genres show that SR-SAD maintains high speech detection accuracy (AUC = 0.919) while rejecting singing. By explicitly learning to distinguish between speech and singing, SR-SAD enables more reliable SAD in mixed speech-singing scenarios.

Voice authentication systems deployed at the network edge face dual threats: a) sophisticated deepfake synthesis attacks and b) control-plane poisoning in distributed federated learning protocols. We present a framework coupling physics-guided deepfake detection with uncertainty-aware edge learning. The framework fuses interpretable physics features modeling vocal tract dynamics with representations from a self-supervised learning module. The representations are then processed via a Multi-Modal Ensemble Architecture, followed by a Bayesian ensemble providing uncertainty estimates. Incorporating physics-based feature evaluations and uncertainty estimates of audio samples allows our proposed framework to remain robust to both advanced deepfake attacks and sophisticated control-plane poisoning, addressing the complete threat model for networked voice authentication.

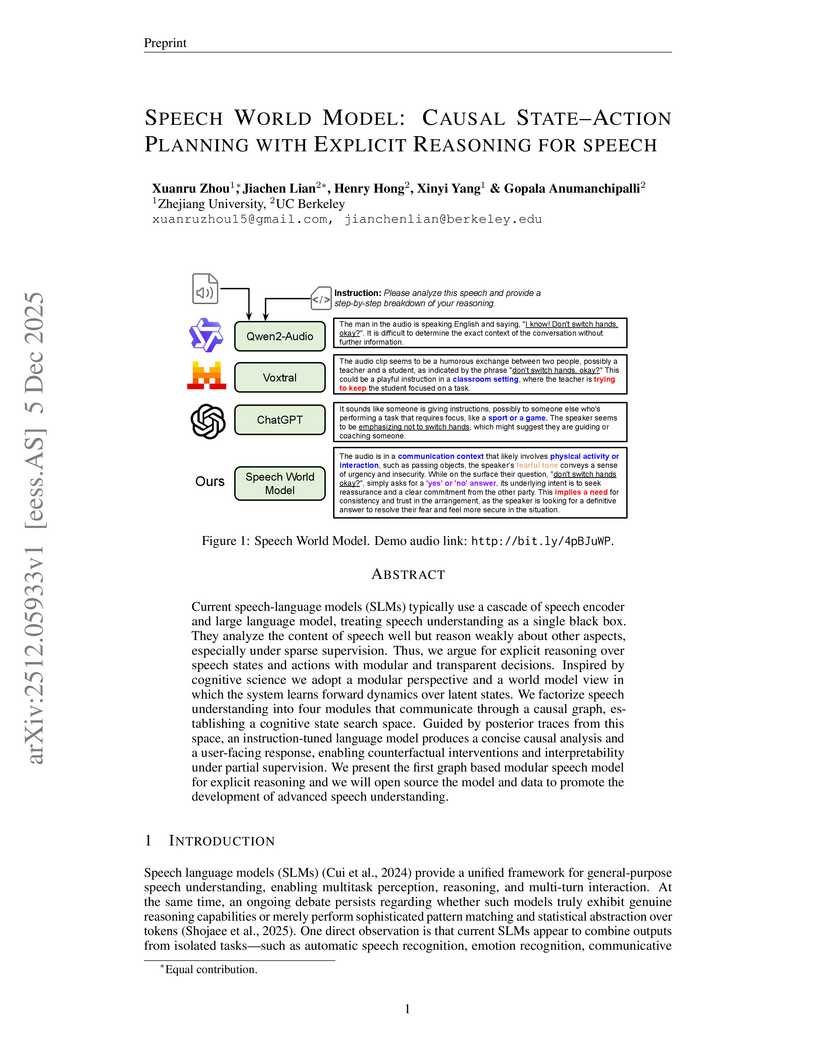

Current speech-language models (SLMs) typically use a cascade of a speech encoder and a large language model, treating speech understanding as a single black box. They analyze the content of speech well but reason weakly about other aspects, especially under sparse supervision. Thus, we argue for explicit reasoning over speech states and actions with modular and transparent decisions. Inspired by cognitive science, we adopt a modular perspective and a world-model view in which the system learns forward dynamics over latent states. We factorize speech understanding into four modules that communicate through a causal graph, establishing a cognitive state search space. Guided by posterior traces from this space, an instruction-tuned language model produces a concise causal analysis and a user-facing response, enabling counterfactual interventions and interpretability under partial supervision. We present the first graph-based modular speech model for explicit reasoning, and we will open-source the model and data to promote the development of advanced speech understanding.

Recent advances in diffusion models have positioned them as powerful generative frameworks for speech synthesis, demonstrating substantial improvements in audio quality and stability. Nevertheless, their effectiveness in vocoders conditioned on mel spectrograms remains constrained, particularly when the conditioning diverges from the training distribution. The recently proposed GLA-Grad model introduced a phase-aware extension to the WaveGrad vocoder that integrated the Griffin-Lim algorithm (GLA) into the reverse process to reduce inconsistencies between the generated signals and the conditioning mel spectrogram. In this paper, we further improve GLA-Grad by changing how the correction is applied. Specifically, we compute the correction term only once, with a single application of GLA, to accelerate the generation process. Experimental results demonstrate that our method consistently outperforms the baseline models, particularly in out-of-domain scenarios.

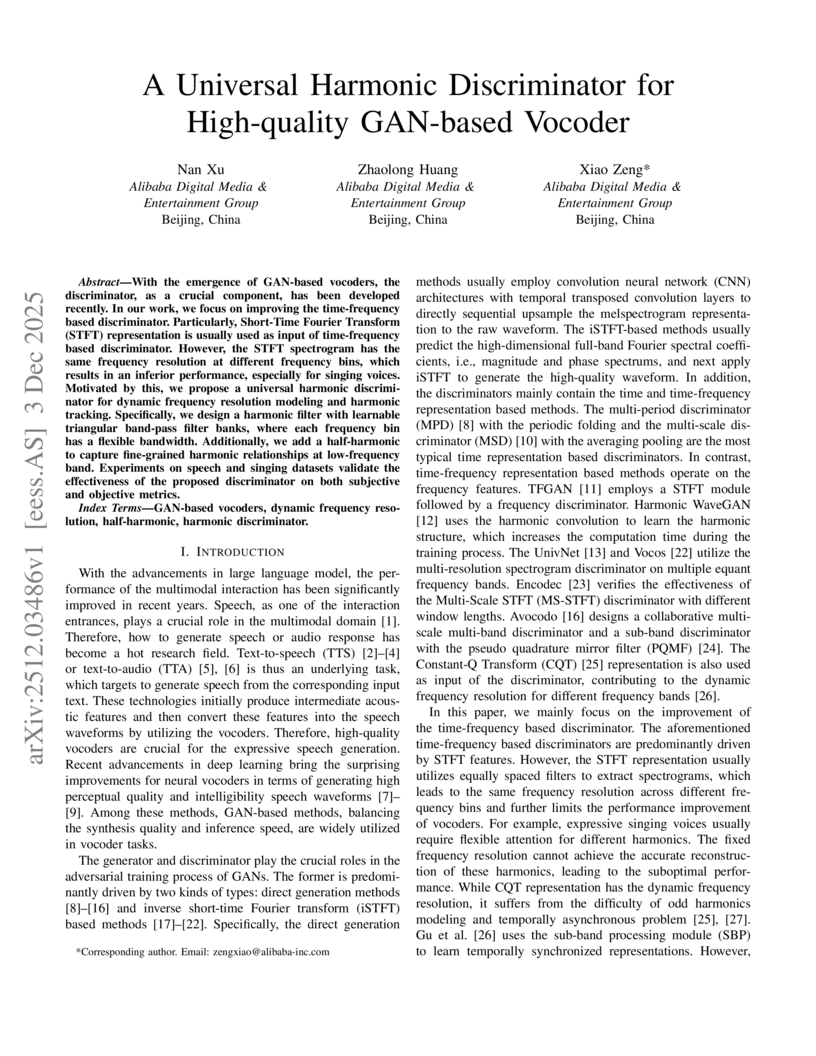

Researchers from Alibaba Digital Media & Entertainment Group developed the Universal Harmonic Discriminator (UnivHD) for GAN-based vocoders, utilizing a learnable harmonic filter bank to achieve dynamic frequency resolution and comprehensive harmonic modeling. This method substantially improved the perceptual quality of synthesized speech and singing voices, particularly for expressive and out-of-domain audio, outperforming existing time-frequency discriminators.

Speech emotion recognition (SER) is an important technology in human-computer interaction. However, achieving high performance is challenging due to emotional complexity and scarce annotated data. To tackle these challenges, we propose a multi-loss learning (MLL) framework integrating an energy-adaptive mixup (EAM) method and a frame-level attention module (FLAM). The EAM method leverages SNR-based augmentation to generate diverse speech samples capturing subtle emotional variations. FLAM enhances frame-level feature extraction for multi-frame emotional cues. Our MLL strategy combines Kullback-Leibler divergence, focal, center, and supervised contrastive loss to optimize learning, address class imbalance, and improve feature separability. We evaluate our method on four widely used SER datasets: IEMOCAP, MSP-IMPROV, RAVDESS, and SAVEE. The results demonstrate our method achieves state-of-the-art performance, suggesting its effectiveness and robustness.
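
As a rough illustration of SNR-based augmentation in general, the sketch below mixes a secondary signal into a primary one at a chosen signal-to-noise ratio. It is a generic mixing routine, not the paper's EAM method; the function name `mix_at_snr` and its signature are hypothetical.

```python
import math


def mix_at_snr(primary, secondary, snr_db):
    """Add `secondary` to `primary`, scaled so their power ratio equals `snr_db`.

    Returns the mixed samples and the gain applied to `secondary`.
    """
    if len(primary) != len(secondary) or not primary:
        raise ValueError("signals must be non-empty and of equal length")
    primary_power = sum(x * x for x in primary) / len(primary)
    secondary_power = sum(x * x for x in secondary) / len(secondary)
    if primary_power == 0.0 or secondary_power == 0.0:
        raise ValueError("both signals need non-zero power")

    # SNR(dB) = 10 * log10(primary_power / (gain**2 * secondary_power))
    gain = math.sqrt(primary_power / (secondary_power * 10 ** (snr_db / 10)))
    mixed = [a + gain * b for a, b in zip(primary, secondary)]
    return mixed, gain
```

Varying `snr_db` across training examples is one simple way to produce a range of mixtures from the same pair of recordings.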

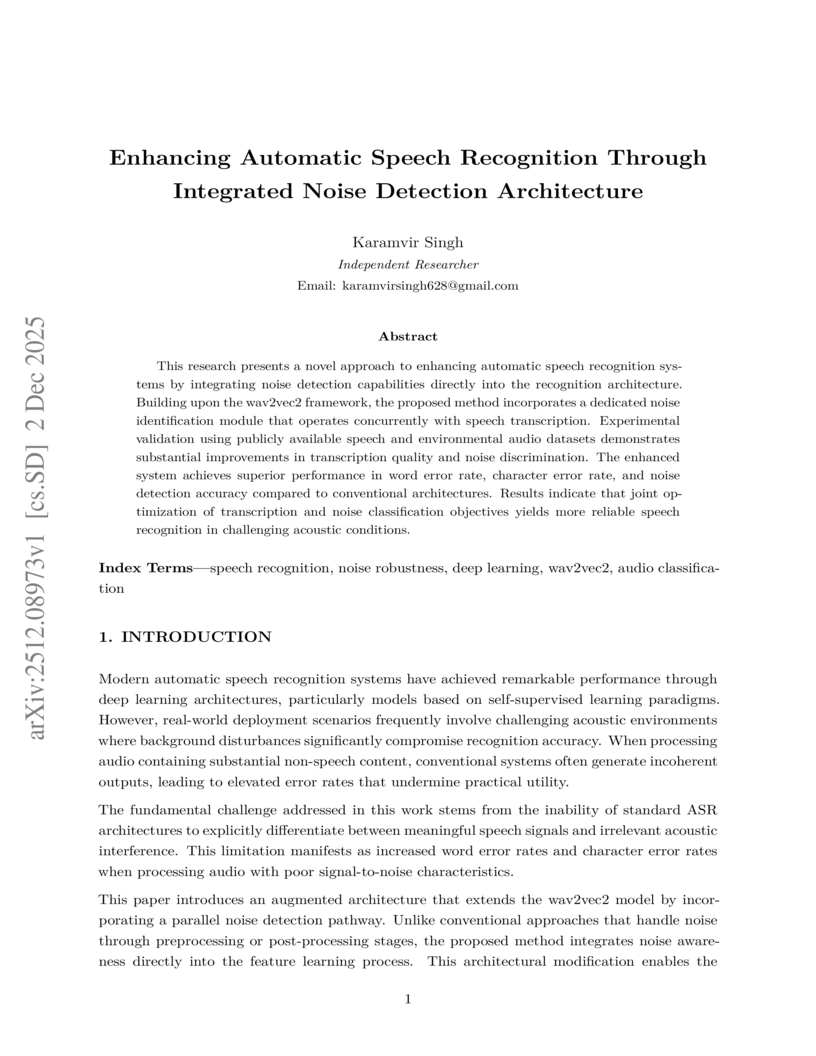

This research presents a novel approach to enhancing automatic speech recognition systems by integrating noise detection capabilities directly into the recognition architecture. Building upon the wav2vec2 framework, the proposed method incorporates a dedicated noise identification module that operates concurrently with speech transcription. Experimental validation using publicly available speech and environmental audio datasets demonstrates substantial improvements in transcription quality and noise discrimination. The enhanced system achieves superior performance in word error rate, character error rate, and noise detection accuracy compared to conventional architectures. Results indicate that joint optimization of transcription and noise classification objectives yields more reliable speech recognition in challenging acoustic conditions.

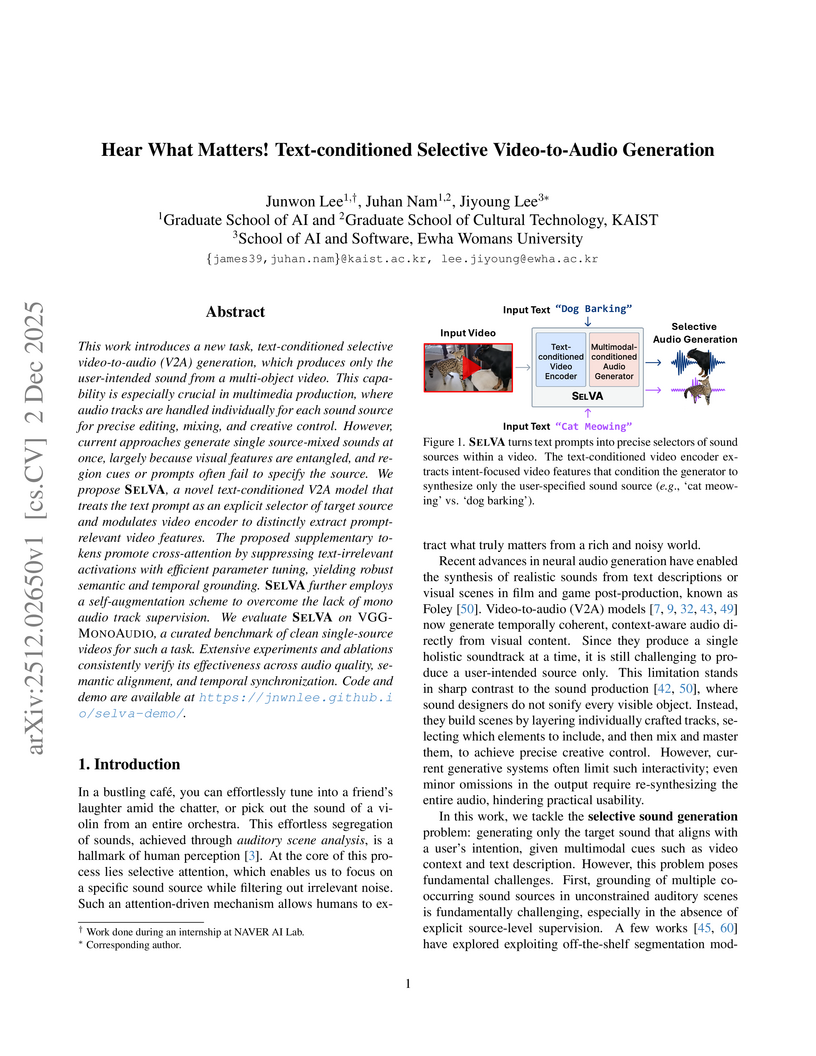

This work introduces a new task, text-conditioned selective video-to-audio (V2A) generation, which produces only the user-intended sound from a multi-object video. This capability is especially crucial in multimedia production, where audio tracks are handled individually for each sound source for precise editing, mixing, and creative control. However, current approaches generate single source-mixed sounds at once, largely because visual features are entangled, and region cues or prompts often fail to specify the source. We propose SelVA, a novel text-conditioned V2A model that treats the text prompt as an explicit selector of the target source and modulates the video encoder to distinctly extract prompt-relevant video features. The proposed supplementary tokens promote cross-attention by suppressing text-irrelevant activations with efficient parameter tuning, yielding robust semantic and temporal grounding. SelVA further employs a self-augmentation scheme to overcome the lack of mono audio track supervision. We evaluate SelVA on VGG-MONOAUDIO, a curated benchmark of clean single-source videos for such a task. Extensive experiments and ablations consistently verify its effectiveness across audio quality, semantic alignment, and temporal synchronization. Code and a demo are publicly available.

A tutorial provides a comprehensive framework for understanding the design principles, historical context, and future challenges of integrating speech modalities with Large Language Models to create spoken conversational agents. It synthesizes advancements and identifies key research directions while emphasizing ethical considerations.

Dithering is a technique commonly used to improve the perceptual quality of lossy data compression. In this work, we analytically and experimentally justify the use of dithering for ASR input compression. We formalize an understanding of optimal ASR performance under lossy input compression and leverage this to propose a parametric dithering technique for a low-complexity speech compression pipeline. The method performs well at 1-bit resolution, showing a 25% relative CER improvement, while also demonstrating improvements of 32.4% and 33.5% at 2- and 3-bit resolution, respectively, with our second dither choice yielding a reduced data rate. The proposed codec is adaptable to meet performance targets or stay within entropy constraints.
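
To make the idea of dithering before low-bit quantization concrete, here is a minimal sketch of a uniform quantizer on [-1, 1) with optional additive uniform dither, plus a helper for relative error-rate improvement. It uses plain rectangular dither, not the parametric dither proposed in the paper; `quantize`, `relative_improvement`, and all parameter names are assumptions made for this example.

```python
import random


def quantize(samples, bits, dither=0.0, seed=0):
    """Uniform mid-rise quantizer on [-1, 1) with additive uniform dither.

    `dither` scales the dither width in units of one quantization step:
    0.0 disables it, 1.0 draws noise from [-step/2, step/2).
    """
    rng = random.Random(seed)
    levels = 2 ** bits
    step = 2.0 / levels
    out = []
    for x in samples:
        noisy = x + dither * step * (rng.random() - 0.5)
        index = int((noisy + 1.0) // step)
        index = min(max(index, 0), levels - 1)  # clip to the valid level range
        out.append(-1.0 + (index + 0.5) * step)  # reconstruct at the bin centre
    return out


def relative_improvement(baseline_rate, new_rate):
    """Relative reduction of an error rate, e.g. 0.20 -> 0.15 gives 0.25."""
    return (baseline_rate - new_rate) / baseline_rate
```

At 1 bit, a constant input of 0.2 always quantizes to 0.5 without dither, whereas with `dither=1.0` the outputs alternate between -0.5 and 0.5 so that their average approaches 0.2. The error rates passed to `relative_improvement` in the docstring are hypothetical values, not results from the paper.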

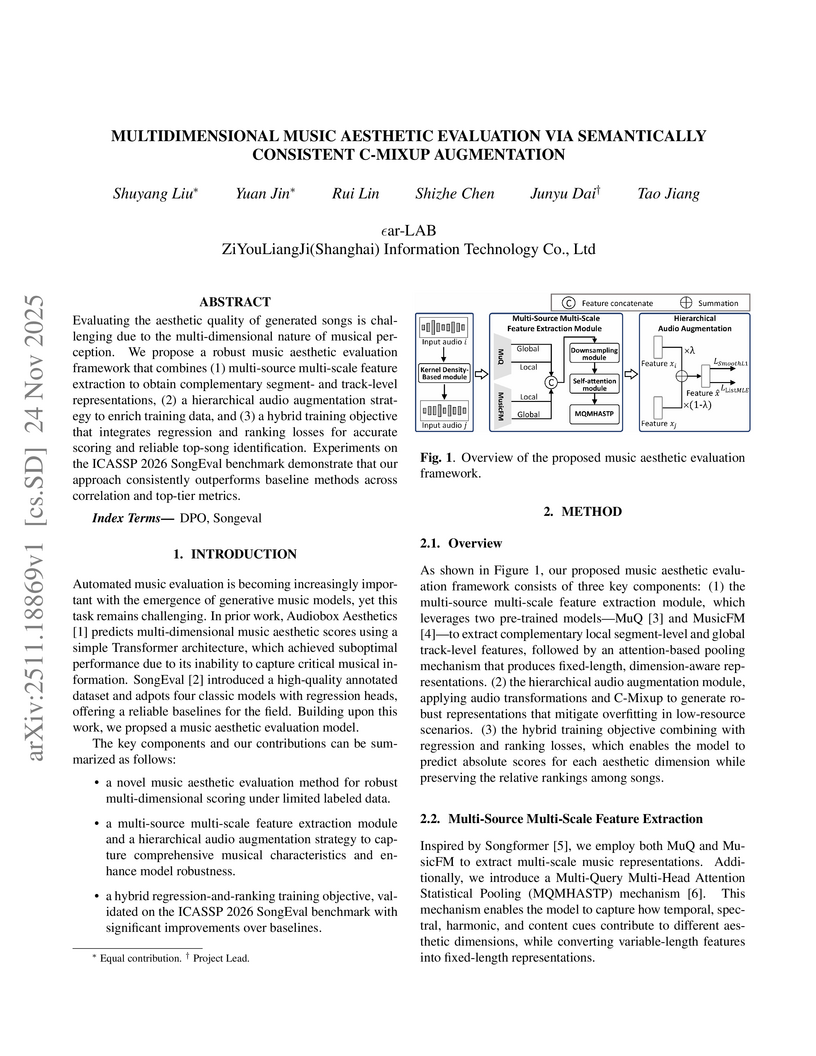

Researchers at ϵar-LAB, ZiYouLiangJi (Shanghai) Information Technology Co., Ltd developed a framework for multidimensional music aesthetic evaluation by integrating multi-source features, hierarchical augmentation, and a hybrid training objective. The approach achieved state-of-the-art performance on the ICASSP 2026 SongEval benchmark, with a 3.08-point improvement in Top-Tier Accuracy.

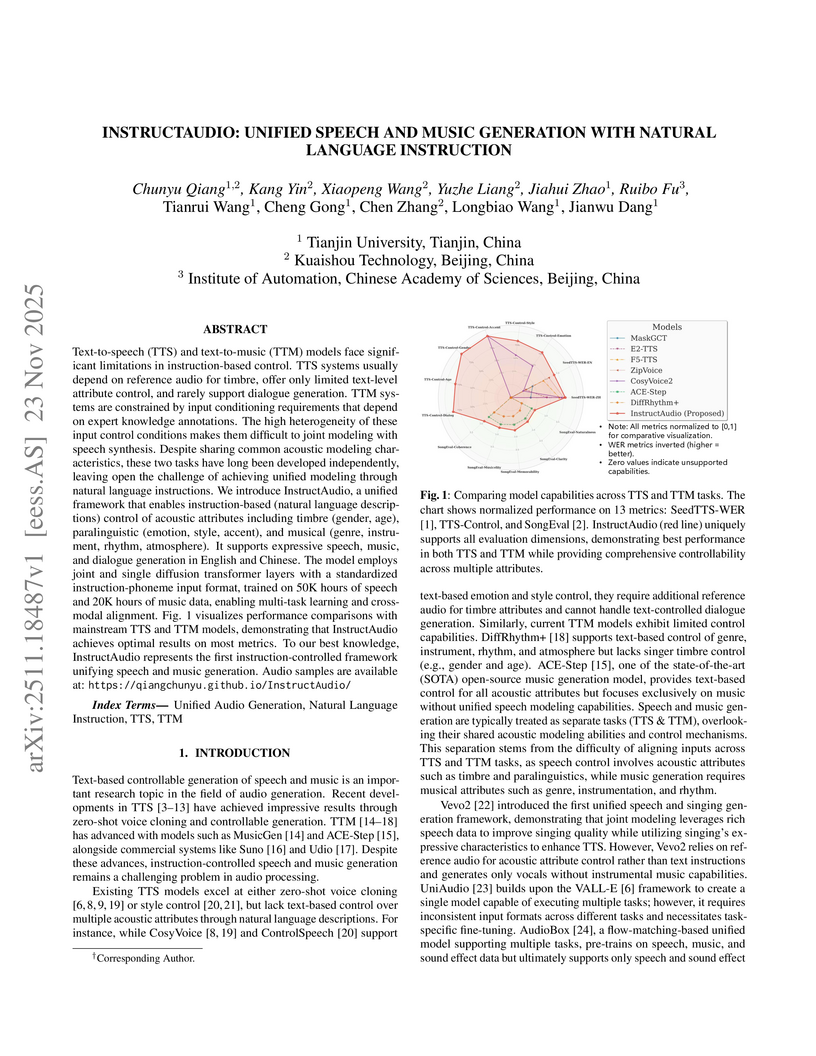

InstructAudio presents a unified framework for generating speech and music from natural language instructions, leveraging a Multimodal Diffusion Transformer to provide comprehensive control without requiring reference audio. The system achieves superior performance in instruction-based Text-to-Speech, including novel dialogue synthesis capabilities, and maintains competitive results in Text-to-Music.
