University of North Carolina Wilmington

18 Sep 2025

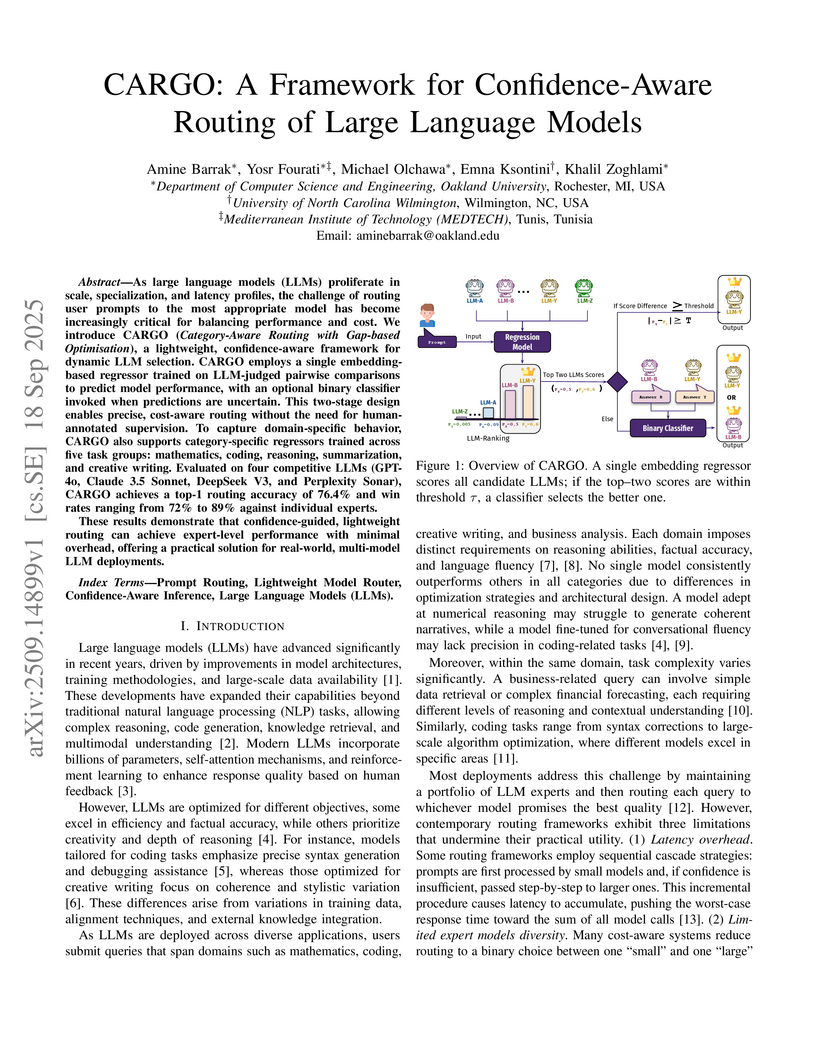

As large language models (LLMs) proliferate in scale, specialization, and latency profiles, the challenge of routing user prompts to the most appropriate model has become increasingly critical for balancing performance and cost. We introduce CARGO (Category-Aware Routing with Gap-based Optimization), a lightweight, confidence-aware framework for dynamic LLM selection. CARGO employs a single embedding-based regressor trained on LLM-judged pairwise comparisons to predict model performance, with an optional binary classifier invoked when predictions are uncertain. This two-stage design enables precise, cost-aware routing without the need for human-annotated supervision. To capture domain-specific behavior, CARGO also supports category-specific regressors trained across five task groups: mathematics, coding, reasoning, summarization, and creative writing. Evaluated on four competitive LLMs (GPT-4o, Claude 3.5 Sonnet, DeepSeek V3, and Perplexity Sonar), CARGO achieves a top-1 routing accuracy of 76.4% and win rates ranging from 72% to 89% against individual experts. These results demonstrate that confidence-guided, lightweight routing can achieve expert-level performance with minimal overhead, offering a practical solution for real-world, multi-model LLM deployments.

10 Sep 2025

Large Language Models (LLMs) are evolving from passive text generators into active agents that invoke external tools. To support this shift, scalable protocols for tool integration are essential. The Model Context Protocol (MCP), introduced by Anthropic in 2024, offers a schema-driven standard for dynamic tool discovery and invocation. Yet, building MCP servers remains manual and repetitive, requiring developers to write glue code, handle authentication, and configure schemas by hand-replicating much of the integration effort MCP aims to eliminate.

This paper investigates whether MCP server construction can be meaningfully automated. We begin by analyzing adoption trends: among 22,000+ MCP-tagged GitHub repositories created within six months of release, fewer than 5% include servers, typically small, single-maintainer projects dominated by repetitive scaffolding. To address this gap, we present AutoMCP, a compiler that generates MCP servers from OpenAPI 2.0/3.0 specifications. AutoMCP parses REST API definitions and produces complete server implementations, including schema registration and authentication handling.

We evaluate AutoMCP on 50 real-world APIs spanning 5,066 endpoints across over 10 domains. From a stratified sample of 1,023 tool calls, 76.5% succeeded out of the box. Manual failure analysis revealed five recurring issues, all attributable to inconsistencies or omissions in the OpenAPI contracts. After minor fixes, averaging 19 lines of spec changes per API, AutoMCP achieved 99.9% success.

Our findings (i) analyze MCP adoption and quantify the cost of manual server development, (ii) demonstrate that OpenAPI specifications, despite quality issues, enable near-complete MCP server automation, and (iii) contribute a corpus of 5,066 callable tools along with insights on repairing common specification flaws.

11 Aug 2025

Rensselaer Polytechnic InstituteSLAC National Accelerator Laboratory Chinese Academy of Sciences

Chinese Academy of Sciences Stanford University

Stanford University University of California, San Diego

University of California, San Diego McGill University

McGill University University of British Columbia

University of British Columbia Yale UniversityColorado State UniversityPacific Northwest National Laboratory

Yale UniversityColorado State UniversityPacific Northwest National Laboratory Brookhaven National LaboratoryCarleton UniversityMontclair State UniversityTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyUniversity of South DakotaUniversity of WindsorLawrence Livermore National LaboratoryColorado School of MinesUniversity of Hawaii at ManoaIMT AtlantiqueDrexel UniversityNational Research Center “Kurchatov Institute”University of the Western CapeInstitute of high-energy PhysicsSUBATECHSNOLABLaurentian UniversityCNRS-IN2P3Universite De SherbrookeIBS Center for Underground PhysicsNantes UniversiteSkyline CollegeInstitute of MicroelectronicsWright LaboratoryAmherst Center for Fundamental InteractionsQueens

’ University

Brookhaven National LaboratoryCarleton UniversityMontclair State UniversityTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyUniversity of South DakotaUniversity of WindsorLawrence Livermore National LaboratoryColorado School of MinesUniversity of Hawaii at ManoaIMT AtlantiqueDrexel UniversityNational Research Center “Kurchatov Institute”University of the Western CapeInstitute of high-energy PhysicsSUBATECHSNOLABLaurentian UniversityCNRS-IN2P3Universite De SherbrookeIBS Center for Underground PhysicsNantes UniversiteSkyline CollegeInstitute of MicroelectronicsWright LaboratoryAmherst Center for Fundamental InteractionsQueens

’ University

Chinese Academy of Sciences

Chinese Academy of Sciences Stanford University

Stanford University University of California, San Diego

University of California, San Diego McGill University

McGill University University of British Columbia

University of British Columbia Yale UniversityColorado State UniversityPacific Northwest National Laboratory

Yale UniversityColorado State UniversityPacific Northwest National Laboratory Brookhaven National LaboratoryCarleton UniversityMontclair State UniversityTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyUniversity of South DakotaUniversity of WindsorLawrence Livermore National LaboratoryColorado School of MinesUniversity of Hawaii at ManoaIMT AtlantiqueDrexel UniversityNational Research Center “Kurchatov Institute”University of the Western CapeInstitute of high-energy PhysicsSUBATECHSNOLABLaurentian UniversityCNRS-IN2P3Universite De SherbrookeIBS Center for Underground PhysicsNantes UniversiteSkyline CollegeInstitute of MicroelectronicsWright LaboratoryAmherst Center for Fundamental InteractionsQueens

’ University

Brookhaven National LaboratoryCarleton UniversityMontclair State UniversityTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyUniversity of South DakotaUniversity of WindsorLawrence Livermore National LaboratoryColorado School of MinesUniversity of Hawaii at ManoaIMT AtlantiqueDrexel UniversityNational Research Center “Kurchatov Institute”University of the Western CapeInstitute of high-energy PhysicsSUBATECHSNOLABLaurentian UniversityCNRS-IN2P3Universite De SherbrookeIBS Center for Underground PhysicsNantes UniversiteSkyline CollegeInstitute of MicroelectronicsWright LaboratoryAmherst Center for Fundamental InteractionsQueens

’ UniversityThe next generation of rare-event search experiments in nuclear and particle physics demand structural materials combining exceptional mechanical strength with ultra-low levels of radioactive contamination. This study evaluates chemical vapor deposition (CVD) nickel as a candidate structural material for such applications. Manufacturer-supplied CVD Ni grown on aluminum substrates underwent tensile testing before and after welding alongside standard Ni samples. CVD Ni exhibited a planar tensile strength of ~600 MPa, significantly surpassing standard nickel. However, welding and heat treatment were found to reduce the tensile strength to levels comparable to standard Ni, with observed porosity in the welds likely contributing to this reduction. Material assay via inductively coupled plasma mass spectrometry (ICP-MS) employing isotope-dilution produced measured bulk concentration of 232-Th, 238-U, and nat-K at the levels of ~70 ppq, <100 ppq, and ~900 ppt, respectively, which is the lowest reported in nickel. Surface-etch profiling uncovered higher concentrations of these contaminants extending ~10 micrometer beneath the surface, likely associated with the aluminum growth substrate. The results reported are compared to the one other well documented usage of CVD Ni in a low radioactive background physics research experiment and a discussion is provided on how the currently reported results may arise from changes in CVD fabrication or testing process. These results establish CVD Ni as a promising low-radioactivity structural material, while outlining the need for further development in welding and surface cleaning techniques to fully realize its potential in large-scale, low radioactive background rare-event search experiments.

21 Sep 2020

The use of Artificial Intelligence (AI) and Machine Learning (ML) to solve

cybersecurity problems has been gaining traction within industry and academia,

in part as a response to widespread malware attacks on critical systems, such

as cloud infrastructures, government offices or hospitals, and the vast amounts

of data they generate. AI- and ML-assisted cybersecurity offers data-driven

automation that could enable security systems to identify and respond to cyber

threats in real time. However, there is currently a shortfall of professionals

trained in AI and ML for cybersecurity. Here we address the shortfall by

developing lab-intensive modules that enable undergraduate and graduate

students to gain fundamental and advanced knowledge in applying AI and ML

techniques to real-world datasets to learn about Cyber Threat Intelligence

(CTI), malware analysis, and classification, among other important topics in

cybersecurity.

Here we describe six self-contained and adaptive modules in "AI-assisted

Malware Analysis." Topics include: (1) CTI and malware attack stages, (2)

malware knowledge representation and CTI sharing, (3) malware data collection

and feature identification, (4) AI-assisted malware detection, (5) malware

classification and attribution, and (6) advanced malware research topics and

case studies such as adversarial learning and Advanced Persistent Threat (APT)

detection.

06 Aug 2025

Sapphire has mechanical and electrical properties that are advantageous for the construction of internal components of radiation detectors such as time projection chambers and bolometers. However, it has proved difficult to assess its 232Th and 238U content down to the picogram per gram level. This work reports an experimental verification of a computational study that demonstrates γγ coincidence counting, coupled with neutron activation analysis (NAA), can reach ppt sensitivities. Combining results from γγ coincidence counting with those of earlier single-γ counting based NAA shows that a sample of Saint Gobain sapphire has 232Th and 238U concentrations of <0.26 ppt and <2.3 ppt, respectively; the best constraints on the radiopurity of sapphire.

Transformer-based models can automatically generate highly plausible fake Cyber Threat Intelligence (CTI) that deceives experienced cybersecurity professionals, with experts incorrectly identifying 78.5% of fake samples as true. Ingesting this generated CTI can directly corrupt Cybersecurity Knowledge Graphs (CKGs), leading to misleading information in cyber defense systems.

31 Jul 2025

This paper proposes a new generalized linear model with the fractional binomial distribution.

Zero-inflated Poisson/negative binomial distributions are used for count data with many zeros. To analyze the association of such a count variable with covariates, zero-inflated Poisson/negative binomial regression models are widely used. In this work, we develop a regression model with the fractional binomial distribution that can serve as an additional tool for modeling the count response variable with covariates. The consistency of maximum likelihood estimators of the proposed model is investigated theoretically and empirically with simulations. The practicality of the proposed model is examined through data analysis. The results show that our model is as versatile as or more versatile than the existing zero-inflated models, and especially, it has a better fit with left-skewed discrete data than other models. However, the proposed model faces computational obstacles and will require more work in the future to implement this model on various count data with excess zeros.

Crafting neural network architectures manually is a formidable challenge

often leading to suboptimal and inefficient structures. The pursuit of the

perfect neural configuration is a complex task, prompting the need for a

metaheuristic approach such as Neural Architecture Search (NAS). Drawing

inspiration from the ingenious mechanisms of nature, this paper introduces

Collaborative Ant-based Neural Topology Search (CANTS-N), pushing the

boundaries of NAS and Neural Evolution (NE). In this innovative approach,

ant-inspired agents meticulously construct neural network structures,

dynamically adapting within a dynamic environment, much like their natural

counterparts. Guided by Particle Swarm Optimization (PSO), CANTS-N's colonies

optimize architecture searches, achieving remarkable improvements in mean

squared error (MSE) over established methods, including BP-free CANTS, BP

CANTS, and ANTS. Scalable, adaptable, and forward-looking, CANTS-N has the

potential to reshape the landscape of NAS and NE. This paper provides detailed

insights into its methodology, results, and far-reaching implications.

13 Jul 2023

A Controlled Experiment on the Impact of Intrusion Detection False Alarm Rate on Analyst Performance

A Controlled Experiment on the Impact of Intrusion Detection False Alarm Rate on Analyst Performance

Organizations use intrusion detection systems (IDSes) to identify harmful activity among millions of computer network events. Cybersecurity analysts review IDS alarms to verify whether malicious activity occurred and to take remedial action. However, IDS systems exhibit high false alarm rates. This study examines the impact of IDS false alarm rate on human analyst sensitivity (probability of detection), precision (positive predictive value), and time on task when evaluating IDS alarms. A controlled experiment was conducted with participants divided into two treatment groups, 50% IDS false alarm rate and 86% false alarm rate, who classified whether simulated IDS alarms were true or false alarms. Results show statistically significant differences in precision and time on task. The median values for the 86% false alarm rate group were 47% lower precision and 40% slower time on task than the 50% false alarm rate group. No significant difference in analyst sensitivity was observed.

08 Nov 2025

We introduce the Axial Seamount Eruption Forecasting Experiment (EFE), a real-time initiative designed to test the predictability of volcanic eruptions through a transparent, physics-based framework. The experiment is inspired by the Financial Bubble Experiment, adapting its principles of digital authentication, timestamped archiving, and delayed disclosure to the field of volcanology. The EFE implements a reproducible protocol in which each forecast is securely timestamped and cryptographically hashed (SHA-256) before being made public. The corresponding forecast documents, containing detailed diagnostics and probabilistic analyses, will be released after the next eruption or, if the forecasts are proven incorrect, at a later date. This procedure ensures full transparency while preventing premature interpretation or controversy surrounding public predictions. Forecasts will be issued monthly, or more frequently if required, using real-time monitoring data from the Ocean Observatories Initiative's Regional Cabled Array at Axial Seamount. By committing to publish all forecasts, successful or not, the EFE establishes a scientifically rigorous, falsifiable protocol to evaluate the limits of eruption forecasting. The ultimate goal is to transform eruption prediction into a cumulative and testable science founded on open verification, reproducibility, and physical understanding.

Group-level emotion recognition (ER) is a growing research area as the

demands for assessing crowds of all sizes are becoming an interest in both the

security arena as well as social media. This work extends the earlier ER

investigations, which focused on either group-level ER on single images or

within a video, by fully investigating group-level expression recognition on

crowd videos. In this paper, we propose an effective deep feature level fusion

mechanism to model the spatial-temporal information in the crowd videos. In our

approach, the fusing process is performed on the deep feature domain by a

generative probabilistic model, Non-Volume Preserving Fusion (NVPF), that

models spatial information relationships. Furthermore, we extend our proposed

spatial NVPF approach to the spatial-temporal NVPF approach to learn the

temporal information between frames. To demonstrate the robustness and

effectiveness of each component in the proposed approach, three experiments

were conducted: (i) evaluation on AffectNet database to benchmark the proposed

EmoNet for recognizing facial expression; (ii) evaluation on EmotiW2018 to

benchmark the proposed deep feature level fusion mechanism NVPF; and, (iii)

examine the proposed TNVPF on an innovative Group-level Emotion on Crowd Videos

(GECV) dataset composed of 627 videos collected from publicly available

sources. GECV dataset is a collection of videos containing crowds of people.

Each video is labeled with emotion categories at three levels: individual

faces, group of people, and the entire video frame.

Rensselaer Polytechnic InstituteSLAC National Accelerator Laboratory Chinese Academy of Sciences

Chinese Academy of Sciences Stanford University

Stanford University McGill University

McGill University University of British Columbia

University of British Columbia Yale UniversityColorado State UniversityPacific Northwest National Laboratory

Yale UniversityColorado State UniversityPacific Northwest National Laboratory Brookhaven National LaboratoryOak Ridge National LaboratoryUniversity of IllinoisCarleton UniversityUniversity of CaliforniaTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyUniversity of South DakotaLawrence Livermore National LaboratoryColorado School of MinesDrexel UniversityUniversity of the Western CapeSUBATECHSNOLABLaurentian UniversityFriedrich-Alexander-University Erlangen-NurnbergIBS Center for Underground PhysicsSkyline CollegeInstitute of MicroelectronicsInstitute for Theoretical and Experimental Physics named by A. I. Alikhanov of National Research Center ”Kurchatov Institute”Universit

de Sherbrooke

Brookhaven National LaboratoryOak Ridge National LaboratoryUniversity of IllinoisCarleton UniversityUniversity of CaliforniaTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyUniversity of South DakotaLawrence Livermore National LaboratoryColorado School of MinesDrexel UniversityUniversity of the Western CapeSUBATECHSNOLABLaurentian UniversityFriedrich-Alexander-University Erlangen-NurnbergIBS Center for Underground PhysicsSkyline CollegeInstitute of MicroelectronicsInstitute for Theoretical and Experimental Physics named by A. I. Alikhanov of National Research Center ”Kurchatov Institute”Universit

de Sherbrooke

Chinese Academy of Sciences

Chinese Academy of Sciences Stanford University

Stanford University McGill University

McGill University University of British Columbia

University of British Columbia Yale UniversityColorado State UniversityPacific Northwest National Laboratory

Yale UniversityColorado State UniversityPacific Northwest National Laboratory Brookhaven National LaboratoryOak Ridge National LaboratoryUniversity of IllinoisCarleton UniversityUniversity of CaliforniaTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyUniversity of South DakotaLawrence Livermore National LaboratoryColorado School of MinesDrexel UniversityUniversity of the Western CapeSUBATECHSNOLABLaurentian UniversityFriedrich-Alexander-University Erlangen-NurnbergIBS Center for Underground PhysicsSkyline CollegeInstitute of MicroelectronicsInstitute for Theoretical and Experimental Physics named by A. I. Alikhanov of National Research Center ”Kurchatov Institute”Universit

de Sherbrooke

Brookhaven National LaboratoryOak Ridge National LaboratoryUniversity of IllinoisCarleton UniversityUniversity of CaliforniaTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyUniversity of South DakotaLawrence Livermore National LaboratoryColorado School of MinesDrexel UniversityUniversity of the Western CapeSUBATECHSNOLABLaurentian UniversityFriedrich-Alexander-University Erlangen-NurnbergIBS Center for Underground PhysicsSkyline CollegeInstitute of MicroelectronicsInstitute for Theoretical and Experimental Physics named by A. I. Alikhanov of National Research Center ”Kurchatov Institute”Universit

de SherbrookeWe study a possible calibration technique for the nEXO experiment using a

127Xe electron capture source. nEXO is a next-generation search for

neutrinoless double beta decay (0νββ) that will use a 5-tonne,

monolithic liquid xenon time projection chamber (TPC). The xenon, used both as

source and detection medium, will be enriched to 90% in 136Xe. To optimize

the event reconstruction and energy resolution, calibrations are needed to map

the position- and time-dependent detector response. The 36.3 day half-life of

127Xe and its small Q-value compared to that of 136Xe

0νββ would allow a small activity to be maintained continuously in

the detector during normal operations without introducing additional

backgrounds, thereby enabling in-situ calibration and monitoring of the

detector response. In this work we describe a process for producing the source

and preliminary experimental tests. We then use simulations to project the

precision with which such a source could calibrate spatial corrections to the

light and charge response of the nEXO TPC.

Rensselaer Polytechnic InstituteSLAC National Accelerator Laboratory Chinese Academy of Sciences

Chinese Academy of Sciences Stanford University

Stanford University University of California, San Diego

University of California, San Diego McGill University

McGill University University of British Columbia

University of British Columbia Yale UniversityColorado State UniversityPacific Northwest National Laboratory

Yale UniversityColorado State UniversityPacific Northwest National Laboratory Brookhaven National LaboratoryOak Ridge National LaboratoryUniversity of IllinoisCarleton UniversityTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyLawrence Livermore National LaboratoryDrexel UniversityNational Research Center “Kurchatov Institute”Laurentian UniversityFriedrich-Alexander University Erlangen-NürnbergUniversit

de Sherbrooke

Brookhaven National LaboratoryOak Ridge National LaboratoryUniversity of IllinoisCarleton UniversityTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyLawrence Livermore National LaboratoryDrexel UniversityNational Research Center “Kurchatov Institute”Laurentian UniversityFriedrich-Alexander University Erlangen-NürnbergUniversit

de Sherbrooke

Chinese Academy of Sciences

Chinese Academy of Sciences Stanford University

Stanford University University of California, San Diego

University of California, San Diego McGill University

McGill University University of British Columbia

University of British Columbia Yale UniversityColorado State UniversityPacific Northwest National Laboratory

Yale UniversityColorado State UniversityPacific Northwest National Laboratory Brookhaven National LaboratoryOak Ridge National LaboratoryUniversity of IllinoisCarleton UniversityTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyLawrence Livermore National LaboratoryDrexel UniversityNational Research Center “Kurchatov Institute”Laurentian UniversityFriedrich-Alexander University Erlangen-NürnbergUniversit

de Sherbrooke

Brookhaven National LaboratoryOak Ridge National LaboratoryUniversity of IllinoisCarleton UniversityTRIUMFUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of MassachusettsUniversity of KentuckyLawrence Livermore National LaboratoryDrexel UniversityNational Research Center “Kurchatov Institute”Laurentian UniversityFriedrich-Alexander University Erlangen-NürnbergUniversit

de SherbrookeThe nEXO neutrinoless double beta decay experiment is designed to use a time

projection chamber and 5000 kg of isotopically enriched liquid xenon to search

for the decay in 136Xe. Progress in the detector design, paired with

higher fidelity in its simulation and an advanced data analysis, based on the

one used for the final results of EXO-200, produce a sensitivity prediction

that exceeds the half-life of 1028 years. Specifically, improvements have

been made in the understanding of production of scintillation photons and

charge as well as of their transport and reconstruction in the detector. The

more detailed knowledge of the detector construction has been paired with more

assays for trace radioactivity in different materials. In particular, the use

of custom electroformed copper is now incorporated in the design, leading to a

substantial reduction in backgrounds from the intrinsic radioactivity of

detector materials. Furthermore, a number of assumptions from previous

sensitivity projections have gained further support from interim work

validating the nEXO experiment concept. Together these improvements and updates

suggest that the nEXO experiment will reach a half-life sensitivity of

1.35×1028 yr at 90% confidence level in 10 years of data taking,

covering the parameter space associated with the inverted neutrino mass

ordering, along with a significant portion of the parameter space for the

normal ordering scenario, for almost all nuclear matrix elements. The effects

of backgrounds deviating from the nominal values used for the projections are

also illustrated, concluding that the nEXO design is robust against a number of

imperfections of the model.

19 Oct 2013

We present a new type of tournament design that we call a complete mixed

doubles round robin tournament, CMDRR(n,k), that generalizes spouse-avoiding

mixed doubles round robin tournaments and strict Mitchell mixed doubles round

robin tournaments. We show that CMDRR(n,k) exist for all allowed values of n

and k apart from 4 exceptions and 31 possible exceptions. We show that a fully

resolvable CMDRR(2n,0) exists for all n ≥ 5 and a fully resolvable

CMDRR(3n,n) exists for all n ≥ 5 and n odd. We prove a product theorem for

constructing CMDRR(n,k).

06 Mar 2017

Semi-Lagrangian methods are numerical methods designed to find approximate solutions to particular time-dependent partial differential equations (PDEs) that describe the advection process. We propose semi-Lagrangian one-step methods for numerically solving initial value problems for two general systems of partial differential equations. Along the characteristic lines of the PDEs, we use ordinary differential equation (ODE) numerical methods to solve the PDEs. The main benefit of our methods is the efficient achievement of high order local truncation error through the use of Runge-Kutta methods along the characteristics. In addition, we investigate the numerical analysis of semi-Lagrangian methods applied to systems of PDEs: stability, convergence, and maximum error bounds.

14 Nov 2016

This work is motivated by a biological experiment with a split-plot design,

for the purpose of comparison of the changing patterns in seed weight from two

treatment groups as subgroups in each of the two groups subject to increasing

levels of stress. We formalize the question into a nonparametric two sample

comparison problem for changes among the sub samples, which was analyzed using

U-statistics. Zero inflated value were also considered in the construction of

the U-statistics. The U-statistics were then used in a Chi-square type test

statistics framework for hypothesis testing. Bootstrapped p-values were

obtained through simulated samples. It was proven that the distribution of the

simulated sample can be independent provided the observed samples have certain

summary statistics. Simulation results suggest that the test is consistent.

To efficiently manage plant diseases, Agriculture Cyber-Physical Systems

(A-CPS) have been developed to detect and localize disease infestations by

integrating the Internet of Agro-Things (IoAT). By the nature of plant and

pathogen interactions, the spread of a disease appears as a focus with density

of infected plants and intensity of infection diminishing outwards. This

gradient of infection needs variable rate and precision pesticide spraying to

efficiently utilize resources and effectively handle the diseases. This

article, SprayCraft presents a graph based method for disease management A-CPS

to identify disease hotspots and compute near optimal path for a spraying drone

to perform variable rate precision spraying. It uses graph to represent the

diseased locations and their spatial relation, Message Passing is performed

over the graph to compute the probability of a location to be a disease

hotspot. These probabilities also serve as disease intensity measures and are

used for variable rate spraying at each location. Whereas, the graph is

utilized to compute tour path by considering it as Traveling Salesman Problem

(TSP) for precision spraying by the drone. Proposed method has been validated

on synthetic data of locations of diseased locations in a farmland.

Reported face verification accuracy has reached a plateau on current well-known test sets. As a result, some difficult test sets have been assembled by reducing the image quality or adding artifacts to the image. However, we argue that test sets can be challenging without artificially reducing the image quality because the face recognition (FR) models suffer from correctly recognizing 1) the pairs from the same identity (i.e., genuine pairs) with a large face attribute difference, 2) the pairs from different identities (i.e., impostor pairs) with a small face attribute difference, and 3) the pairs of similar-looking identities (e.g., twins and relatives). We propose three challenging test sets to reveal important but ignored weaknesses of the existing FR algorithms. To challenge models on variation of facial attributes, we propose Hadrian and Eclipse to address facial hair differences and face exposure differences. The images in both test sets are high-quality and collected in a controlled environment. To challenge FR models on similar-looking persons, we propose twins-IND, which contains images from a dedicated twins dataset. The LFW test protocol is used to structure the proposed test sets. Moreover, we introduce additional rules to assemble "Goldilocks1" level test sets, including 1) restricted number of occurrence of hard samples, 2) equal chance evaluation across demographic groups, and 3) constrained identity overlap across validation folds. Quantitatively, without further processing the images, the proposed test sets have on-par or higher difficulties than the existing test sets. The datasets are available at: https: //github.com/HaiyuWu/SOTA-Face-Recognition-Train-and-Test.

28 Dec 2023

Michigan State UniversityUniversity of Oklahoma

Michigan State UniversityUniversity of Oklahoma University of California, San DiegoOhio State University

University of California, San DiegoOhio State University Argonne National Laboratory

Argonne National Laboratory Arizona State University

Arizona State University Purdue UniversityCase Western Reserve UniversityVirginia Commonwealth University

Purdue UniversityCase Western Reserve UniversityVirginia Commonwealth University University of VirginiaTulane UniversityTexas State UniversityFlorida Atlantic UniversityUniversity of North Carolina, Chapel HillUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of North Carolina at CharlotteUniversity of Texas at San AntonioUniversity of Hawai’iUniversity of Tennessee, KnoxvilleThe University of Texas at ArlingtonSDSCSacred Heart UniversityUniversity of Texas at Tyler

University of VirginiaTulane UniversityTexas State UniversityFlorida Atlantic UniversityUniversity of North Carolina, Chapel HillUniversity of AlabamaUniversity of North Carolina WilmingtonUniversity of North Carolina at CharlotteUniversity of Texas at San AntonioUniversity of Hawai’iUniversity of Tennessee, KnoxvilleThe University of Texas at ArlingtonSDSCSacred Heart UniversityUniversity of Texas at TylerThis document describes a two-day meeting held for the Principal Investigators (PIs) of NSF CyberTraining grants. The report covers invited talks, panels, and six breakout sessions. The meeting involved over 80 PIs and NSF program managers (PMs). The lessons recorded in detail in the report are a wealth of information that could help current and future PIs, as well as NSF PMs, understand the future directions suggested by the PI community. The meeting was held simultaneously with that of the PIs of the NSF Cyberinfrastructure for Sustained Scientific Innovation (CSSI) program. This co-location led to two joint sessions: one with NSF speakers and the other on broader impact. Further, the joint poster and refreshment sessions benefited from the interactions between CSSI and CyberTraining PIs.

23 Oct 2024

Wuhan University Carnegie Mellon University

Carnegie Mellon University Tel Aviv University

Tel Aviv University Osaka UniversityUniversity of Regina

Osaka UniversityUniversity of Regina Arizona State UniversityFlorida State University

Arizona State UniversityFlorida State University Stony Brook UniversityThe George Washington UniversityUniversity of Massachusetts Amherst

Stony Brook UniversityThe George Washington UniversityUniversity of Massachusetts Amherst Duke University

Duke University Virginia TechUniversity of GlasgowUniversity of ConnecticutFlorida International UniversityOld Dominion UniversityThe University of TennesseeMississippi State UniversityUniversity of North TexasUniversity of North Carolina WilmingtonUniversity of New HampshireTriangle Universities Nuclear LaboratoryGSI Helmholtz Centre for Heavy Ion ResearchThomas Jefferson National Accelerator FacilityLamar UniversityTomsk State UniversityCollege of William and MaryNorth Carolina Agricultural and Technical State UniversityA. I. Alikhanyan National Science LaboratoryWashington and Jefferson CollegeNational Research Nuclear University ","MEPhIRuhr-University-BochumNational Research Centre

“Kurchatov Institute”

Virginia TechUniversity of GlasgowUniversity of ConnecticutFlorida International UniversityOld Dominion UniversityThe University of TennesseeMississippi State UniversityUniversity of North TexasUniversity of North Carolina WilmingtonUniversity of New HampshireTriangle Universities Nuclear LaboratoryGSI Helmholtz Centre for Heavy Ion ResearchThomas Jefferson National Accelerator FacilityLamar UniversityTomsk State UniversityCollege of William and MaryNorth Carolina Agricultural and Technical State UniversityA. I. Alikhanyan National Science LaboratoryWashington and Jefferson CollegeNational Research Nuclear University ","MEPhIRuhr-University-BochumNational Research Centre

“Kurchatov Institute”

Carnegie Mellon University

Carnegie Mellon University Tel Aviv University

Tel Aviv University Osaka UniversityUniversity of Regina

Osaka UniversityUniversity of Regina Arizona State UniversityFlorida State University

Arizona State UniversityFlorida State University Stony Brook UniversityThe George Washington UniversityUniversity of Massachusetts Amherst

Stony Brook UniversityThe George Washington UniversityUniversity of Massachusetts Amherst Duke University

Duke University Virginia TechUniversity of GlasgowUniversity of ConnecticutFlorida International UniversityOld Dominion UniversityThe University of TennesseeMississippi State UniversityUniversity of North TexasUniversity of North Carolina WilmingtonUniversity of New HampshireTriangle Universities Nuclear LaboratoryGSI Helmholtz Centre for Heavy Ion ResearchThomas Jefferson National Accelerator FacilityLamar UniversityTomsk State UniversityCollege of William and MaryNorth Carolina Agricultural and Technical State UniversityA. I. Alikhanyan National Science LaboratoryWashington and Jefferson CollegeNational Research Nuclear University ","MEPhIRuhr-University-BochumNational Research Centre

“Kurchatov Institute”

Virginia TechUniversity of GlasgowUniversity of ConnecticutFlorida International UniversityOld Dominion UniversityThe University of TennesseeMississippi State UniversityUniversity of North TexasUniversity of North Carolina WilmingtonUniversity of New HampshireTriangle Universities Nuclear LaboratoryGSI Helmholtz Centre for Heavy Ion ResearchThomas Jefferson National Accelerator FacilityLamar UniversityTomsk State UniversityCollege of William and MaryNorth Carolina Agricultural and Technical State UniversityA. I. Alikhanyan National Science LaboratoryWashington and Jefferson CollegeNational Research Nuclear University ","MEPhIRuhr-University-BochumNational Research Centre

“Kurchatov Institute”We report on the first measurement of J/ψ photoproduction from nuclei in

the photon energy range of 7 to 10.8 GeV, extending above and below the

photoproduction threshold in the free proton of ∼8.2 GeV. The experiment

used a tagged photon beam incident on deuterium, helium, and carbon, and the

GlueX detector at Jefferson Lab to measure the semi-inclusive

A(γ,e+e−p) reaction with a dilepton invariant mass $M(e^+e^-)\sim

m_{J/\psi}=3.1GeV.TheincoherentJ/\psi$ photoproduction cross sections in

the measured nuclei are extracted as a function of the incident photon energy,

momentum transfer, and proton reconstructed missing light-cone momentum

fraction. Comparisons with theoretical predictions assuming a dipole form

factor allow extracting a gluonic radius for bound protons of $\sqrt{\langle

r^2\rangle}=0.85\pm0.14$ fm. The data also suggest an excess of the measured

cross section for sub-threshold production and for interactions with high

missing light-cone momentum fraction protons. The measured enhancement can be

explained by modified gluon structure for high-virtuality bound-protons.

There are no more papers matching your filters at the moment.