Autodesk Research

05 Jun 2025

Fabrica introduces an autonomous system for dual-arm assembly of diverse multi-part objects directly from CAD models, integrating hierarchical planning with learning-based local control. This approach enables a single generalist policy to perform contact-rich insertions, achieving an 80% step-wise success rate on real robots with zero-shot sim-to-real transfer and demonstrating robust recovery capabilities for complex assemblies.

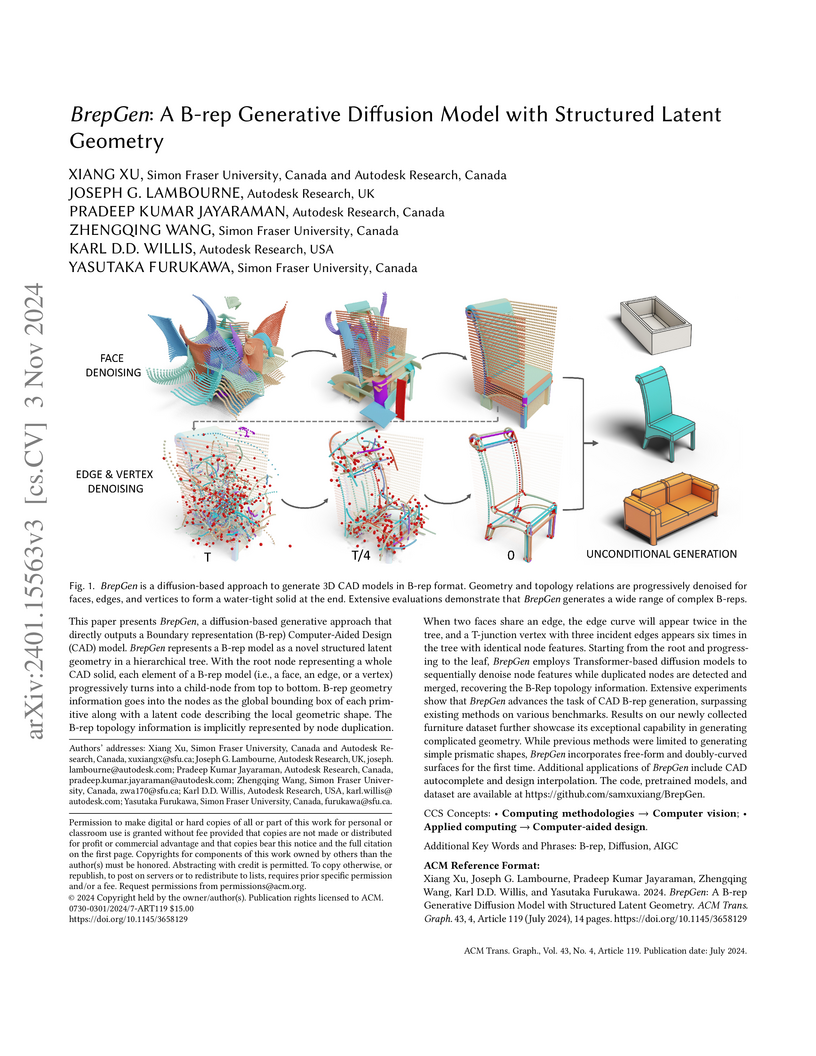

BrepGen introduces a generative diffusion model capable of directly synthesizing industrially-standard Boundary representation (B-rep) 3D models. It achieves this by employing a novel structured latent geometry representation that implicitly encodes topology, enabling the generation of complex, watertight models including free-form surfaces, and demonstrates capabilities like design autocompletion and interpolation.

Researchers from UC Berkeley and Autodesk Research developed the In-Context Robot Transformer (ICRT), a framework that allows a real robot to perform novel manipulation tasks solely from human teleoperated demonstrations provided as prompts during inference. ICRT achieved an average success rate of 79.2% on previously unseen pick-and-place and poking tasks, significantly outperforming state-of-the-art robot foundation models without requiring any fine-tuning.

08 Sep 2025

Generalizable long-horizon robotic assembly requires reasoning at multiple levels of abstraction. While end-to-end imitation learning (IL) is a promising approach, it typically requires large amounts of expert demonstration data and often struggles to achieve the high precision demanded by assembly tasks. Reinforcement learning (RL) approaches, on the other hand, have shown some success in high-precision assembly, but suffer from sample inefficiency, which limits their effectiveness in long-horizon tasks. To address these challenges, we propose a hierarchical modular approach, named Adaptive Robotic Compositional Hierarchy (ARCH), which enables long-horizon, high-precision robotic assembly in contact-rich settings. ARCH employs a hierarchical planning framework, including a low-level primitive library of parameterized skills and a high-level policy. The low-level primitive library includes essential skills for assembly tasks, such as grasping and inserting. These primitives consist of both RL and model-based policies. The high-level policy, learned via IL from a handful of demonstrations, without the need for teleoperation, selects the appropriate primitive skills and instantiates them with input parameters. We extensively evaluate our approach in simulation and on a real robotic manipulation platform. We show that ARCH generalizes well to unseen objects and outperforms baseline methods in terms of success rate and data efficiency. More details are available at: this https URL.

05 Aug 2025

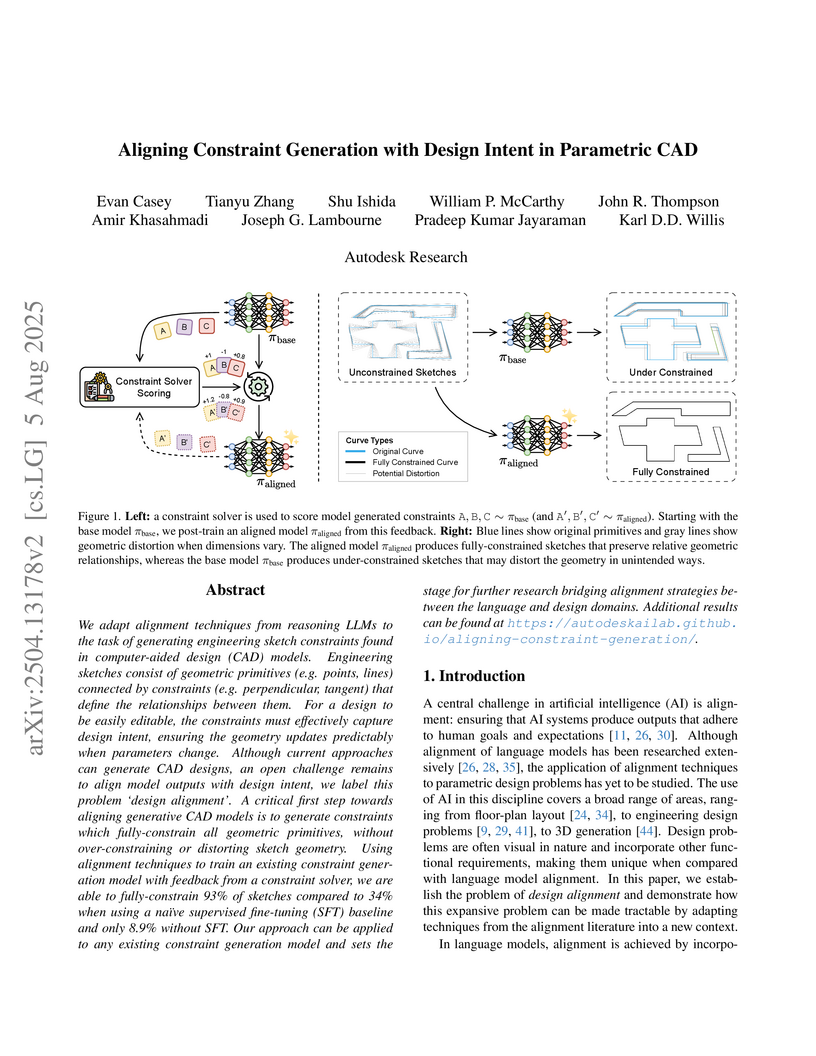

This research from Autodesk Research applies AI alignment methods to generative CAD, substantially increasing the percentage of fully-constrained and stable engineering sketches from an 8.87% baseline to over 90%, making them practically editable.

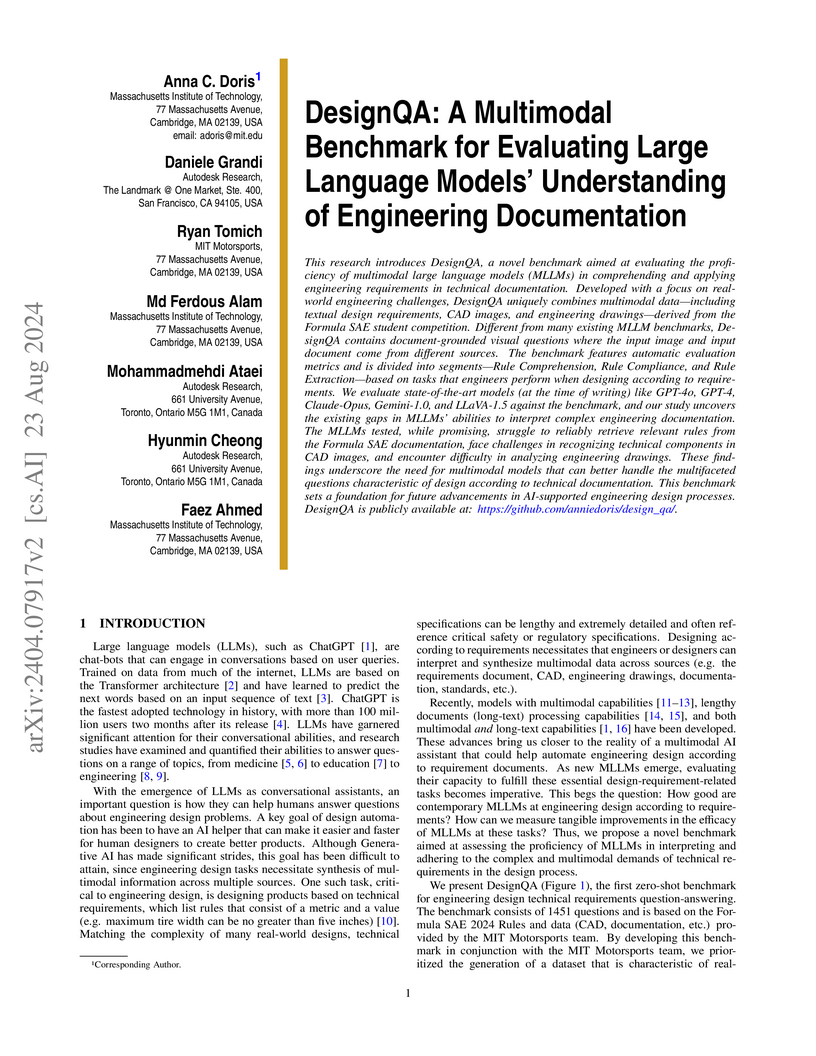

This research introduces DesignQA, a novel benchmark aimed at evaluating the

proficiency of multimodal large language models (MLLMs) in comprehending and

applying engineering requirements in technical documentation. Developed with a

focus on real-world engineering challenges, DesignQA uniquely combines

multimodal data-including textual design requirements, CAD images, and

engineering drawings-derived from the Formula SAE student competition.

Different from many existing MLLM benchmarks, DesignQA contains

document-grounded visual questions where the input image and input document

come from different sources. The benchmark features automatic evaluation

metrics and is divided into segments-Rule Comprehension, Rule Compliance, and

Rule Extraction-based on tasks that engineers perform when designing according

to requirements. We evaluate state-of-the-art models (at the time of writing)

like GPT-4o, GPT-4, Claude-Opus, Gemini-1.0, and LLaVA-1.5 against the

benchmark, and our study uncovers the existing gaps in MLLMs' abilities to

interpret complex engineering documentation. The MLLMs tested, while promising,

struggle to reliably retrieve relevant rules from the Formula SAE

documentation, face challenges in recognizing technical components in CAD

images, and encounter difficulty in analyzing engineering drawings. These

findings underscore the need for multimodal models that can better handle the

multifaceted questions characteristic of design according to technical

documentation. This benchmark sets a foundation for future advancements in

AI-supported engineering design processes. DesignQA is publicly available at:

https://github.com/anniedoris/design_qa/.

The University of Toronto and Autodesk Research introduce Physics Context Builders (PCBs), a modular framework that enhances Vision-Language Models' physical reasoning by having specialized smaller VLMs generate rich, simulation-trained textual descriptions of scenes. These descriptions provide context to larger foundation models, improving performance on tasks like stability prediction by up to 25.5% and demonstrating effective sim-to-real transfer.

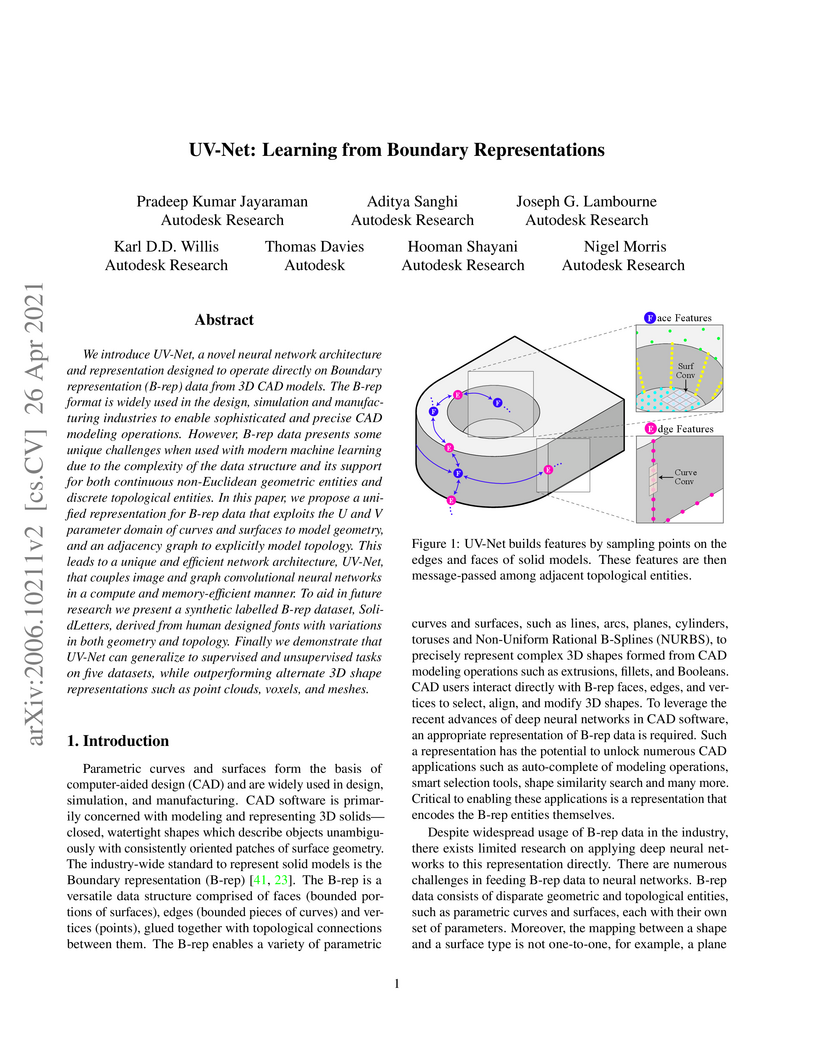

UV-Net introduces a deep learning architecture that directly processes Boundary Representations (B-reps), the industry-standard format for 3D CAD models, by combining parameterized geometric data with topological graph information. This approach enables precise learning on CAD data, demonstrating superior performance in shape classification, face segmentation, and self-supervised shape retrieval across various datasets.

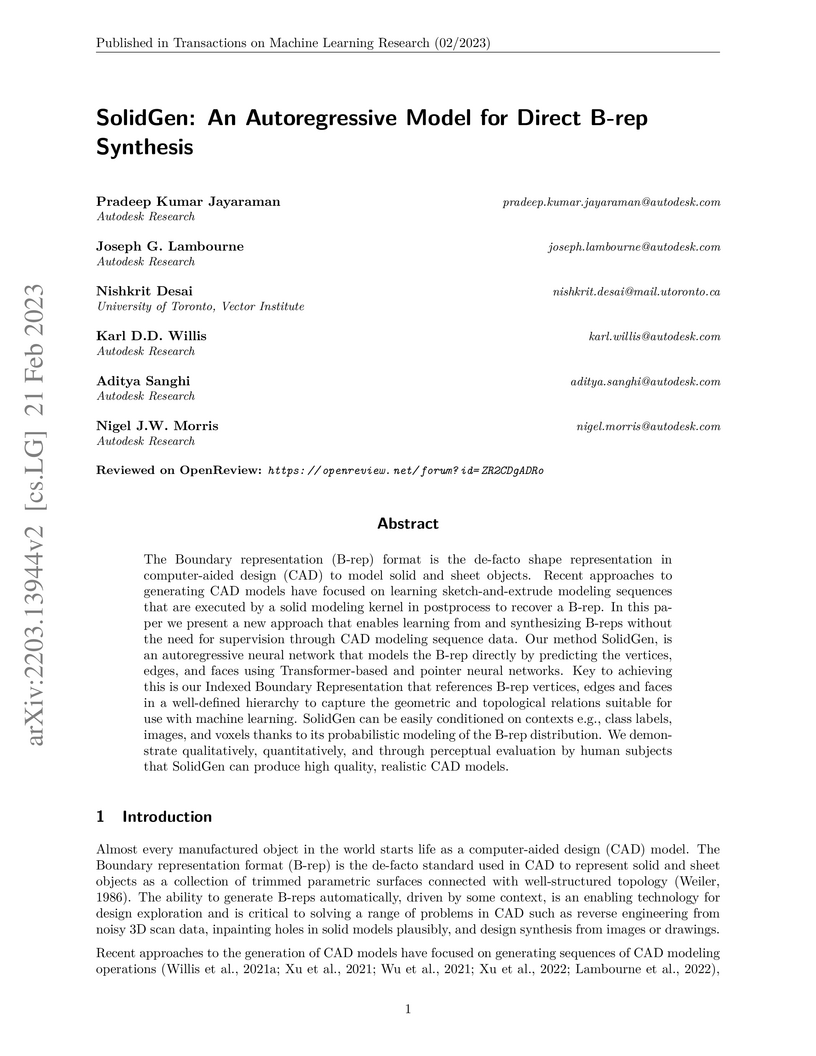

SolidGen introduces an autoregressive model capable of directly synthesizing Boundary representation (B-rep) CAD models, the industry standard for solid and sheet objects. The approach achieves high validity for generated models (83-88%) and produces outputs judged as perceptually indistinguishable from real CAD data by human evaluators.

Researchers from Autodesk Research, Mila, and Concordia University developed an unsupervised deep learning framework for 3D mesh parameterization that integrates semantic and visibility objectives. This method generates UV atlases that align with meaningful 3D parts and place cutting seams in less visible regions, achieving superior perceptual quality while maintaining geometric fidelity compared to existing baselines.

20 Apr 2023

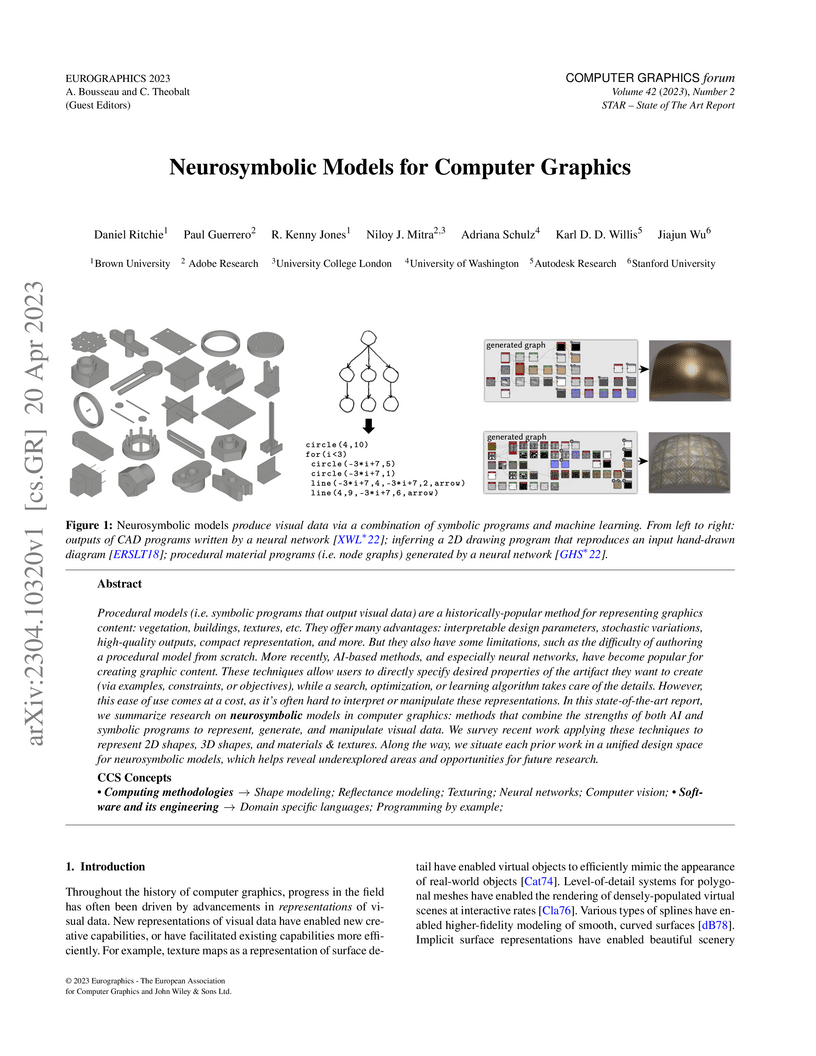

Procedural models (i.e. symbolic programs that output visual data) are a

historically-popular method for representing graphics content: vegetation,

buildings, textures, etc. They offer many advantages: interpretable design

parameters, stochastic variations, high-quality outputs, compact

representation, and more. But they also have some limitations, such as the

difficulty of authoring a procedural model from scratch. More recently,

AI-based methods, and especially neural networks, have become popular for

creating graphic content. These techniques allow users to directly specify

desired properties of the artifact they want to create (via examples,

constraints, or objectives), while a search, optimization, or learning

algorithm takes care of the details. However, this ease of use comes at a cost,

as it's often hard to interpret or manipulate these representations. In this

state-of-the-art report, we summarize research on neurosymbolic models in

computer graphics: methods that combine the strengths of both AI and symbolic

programs to represent, generate, and manipulate visual data. We survey recent

work applying these techniques to represent 2D shapes, 3D shapes, and materials

& textures. Along the way, we situate each prior work in a unified design space

for neurosymbolic models, which helps reveal underexplored areas and

opportunities for future research.

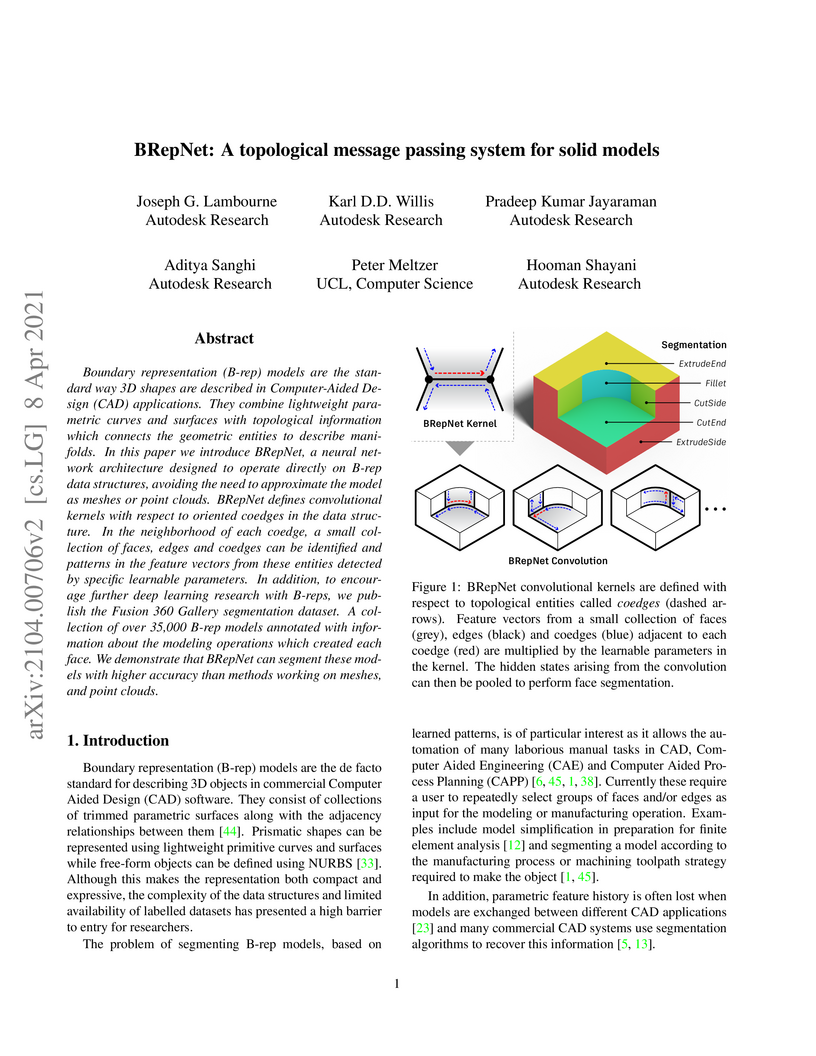

Boundary representation (B-rep) models are the standard way 3D shapes are described in Computer-Aided Design (CAD) applications. They combine lightweight parametric curves and surfaces with topological information which connects the geometric entities to describe manifolds. In this paper we introduce BRepNet, a neural network architecture designed to operate directly on B-rep data structures, avoiding the need to approximate the model as meshes or point clouds. BRepNet defines convolutional kernels with respect to oriented coedges in the data structure. In the neighborhood of each coedge, a small collection of faces, edges and coedges can be identified and patterns in the feature vectors from these entities detected by specific learnable parameters. In addition, to encourage further deep learning research with B-reps, we publish the Fusion 360 Gallery segmentation dataset. A collection of over 35,000 B-rep models annotated with information about the modeling operations which created each face. We demonstrate that BRepNet can segment these models with higher accuracy than methods working on meshes, and point clouds.

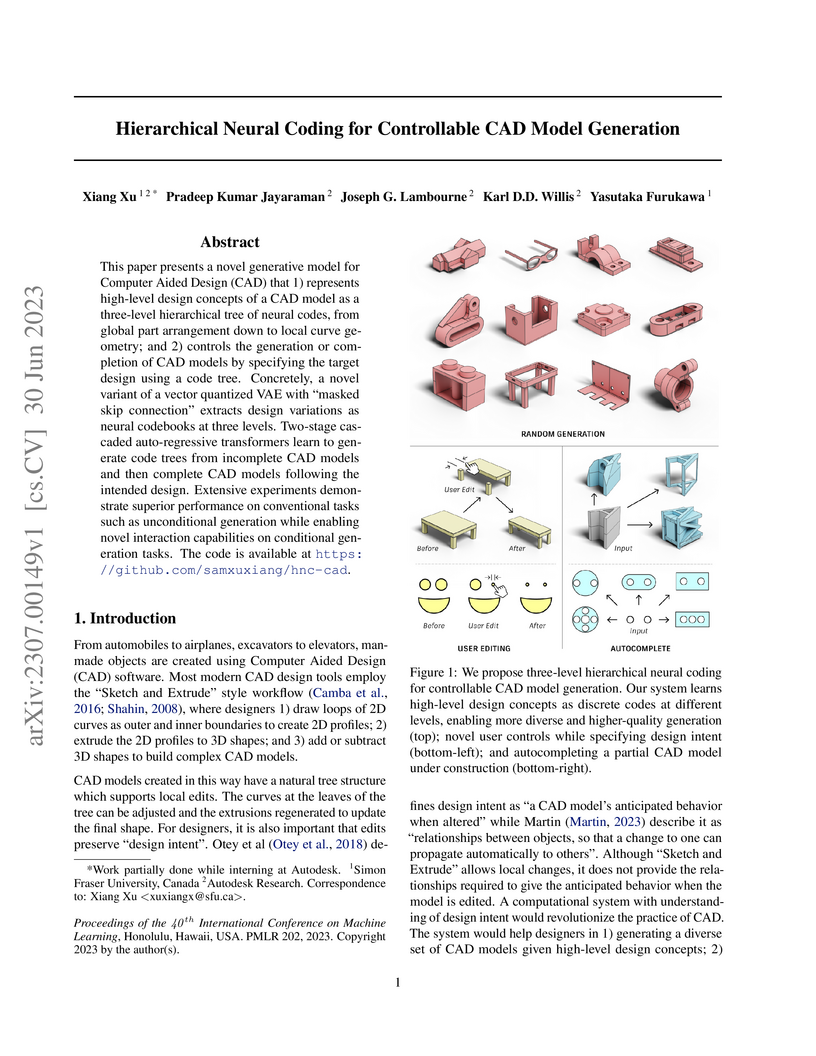

Researchers from Simon Fraser University and Autodesk Research developed a hierarchical neural coding framework that uses a three-level code tree (Solid, Profile, Loop) to capture design intent, enabling more realistic, diverse, and controllable CAD model generation with capabilities like design-preserving editing and auto-completion.

25 Oct 2025

Reduced-order modeling (ROM) of time-dependent and parameterized differential equations aims to accelerate the simulation of complex high-dimensional systems by learning a compact latent manifold representation that captures the characteristics of the solution fields and their time-dependent dynamics. Although high-fidelity numerical solvers generate the training datasets, they have thus far been excluded from the training process, causing the learned latent dynamics to drift away from the discretized governing physics. This mismatch often limits generalization and forecasting capabilities. In this work, we propose Physics-informed ROM (Φ-ROM) by incorporating differentiable PDE solvers into the training procedure. Specifically, the latent space dynamics and its dependence on PDE parameters are shaped directly by the governing physics encoded in the solver, ensuring a strong correspondence between the full and reduced systems. Our model outperforms state-of-the-art data-driven ROMs and other physics-informed strategies by accurately generalizing to new dynamics arising from unseen parameters, enabling long-term forecasting beyond the training horizon, maintaining continuity in both time and space, and reducing the data cost. Furthermore, Φ-ROM learns to recover and forecast the solution fields even when trained or evaluated with sparse and irregular observations of the fields, providing a flexible framework for field reconstruction and data assimilation. We demonstrate the framework's robustness across various PDE solvers and highlight its broad applicability by providing an open-source JAX implementation that is readily extensible to other PDE systems and differentiable solvers, available at this https URL.

Segmenting an object in a video presents significant challenges. Each pixel

must be accurately labelled, and these labels must remain consistent across

frames. The difficulty increases when the segmentation is with arbitrary

granularity, meaning the number of segments can vary arbitrarily, and masks are

defined based on only one or a few sample images. In this paper, we address

this issue by employing a pre-trained text to image diffusion model

supplemented with an additional tracking mechanism. We demonstrate that our

approach can effectively manage various segmentation scenarios and outperforms

state-of-the-art alternatives.

Elicitron, a framework developed by Autodesk Research, leverages Large Language Models to simulate diverse user experiences and automatically identify product requirements, including latent needs. This approach enables more efficient and comprehensive discovery of user needs, demonstrating an increased rate of latent need identification and high accuracy in automated analysis compared to traditional methods.

We present SkexGen, a novel autoregressive generative model for computer-aided design (CAD) construction sequences containing sketch-and-extrude modeling operations. Our model utilizes distinct Transformer architectures to encode topological, geometric, and extrusion variations of construction sequences into disentangled codebooks. Autoregressive Transformer decoders generate CAD construction sequences sharing certain properties specified by the codebook vectors. Extensive experiments demonstrate that our disentangled codebook representation generates diverse and high-quality CAD models, enhances user control, and enables efficient exploration of the design space. The code is available at this https URL.

Inductive reasoning is a core problem-solving capacity: humans can identify

underlying principles from a few examples, which robustly generalize to novel

scenarios. Recent work evaluates large language models (LLMs) on inductive

reasoning tasks by directly prompting them yielding "in context learning." This

works well for straightforward inductive tasks but performs poorly on complex

tasks such as the Abstraction and Reasoning Corpus (ARC). In this work, we

propose to improve the inductive reasoning ability of LLMs by generating

explicit hypotheses at multiple levels of abstraction: we prompt the LLM to

propose multiple abstract hypotheses about the problem, in natural language,

then implement the natural language hypotheses as concrete Python programs.

These programs can be verified by running on observed examples and generalized

to novel inputs. To reduce the hypothesis search space, we explore steps to

filter the set of hypotheses to implement: we either ask the LLM to summarize

them into a smaller set of hypotheses or ask human annotators to select a

subset. We verify our pipeline's effectiveness on the ARC visual inductive

reasoning benchmark, its variant 1D-ARC, string transformation dataset SyGuS,

and list transformation dataset List Functions. On a random 100-problem subset

of ARC, our automated pipeline using LLM summaries achieves 30% accuracy,

outperforming the direct prompting baseline (accuracy of 17%). With the minimal

human input of selecting from LLM-generated candidates, performance is boosted

to 33%. Our ablations show that both abstract hypothesis generation and

concrete program representations benefit LLMs on inductive reasoning tasks.

Advances in 3D generative AI have enabled the creation of physical objects from text prompts, but challenges remain in creating objects involving multiple component types. We present a pipeline that integrates 3D generative AI with vision-language models (VLMs) to enable the robotic assembly of multi-component objects from natural language. Our method leverages VLMs for zero-shot, multi-modal reasoning about geometry and functionality to decompose AI-generated meshes into multi-component 3D models using predefined structural and panel components. We demonstrate that a VLM is capable of determining which mesh regions need panel components in addition to structural components, based on the object's geometry and functionality. Evaluation across test objects shows that users preferred the VLM-generated assignments 90.6% of the time, compared to 59.4% for rule-based and 2.5% for random assignment. Lastly, the system allows users to refine component assignments through conversational feedback, enabling greater human control and agency in making physical objects with generative AI and robotics.

08 Nov 2022

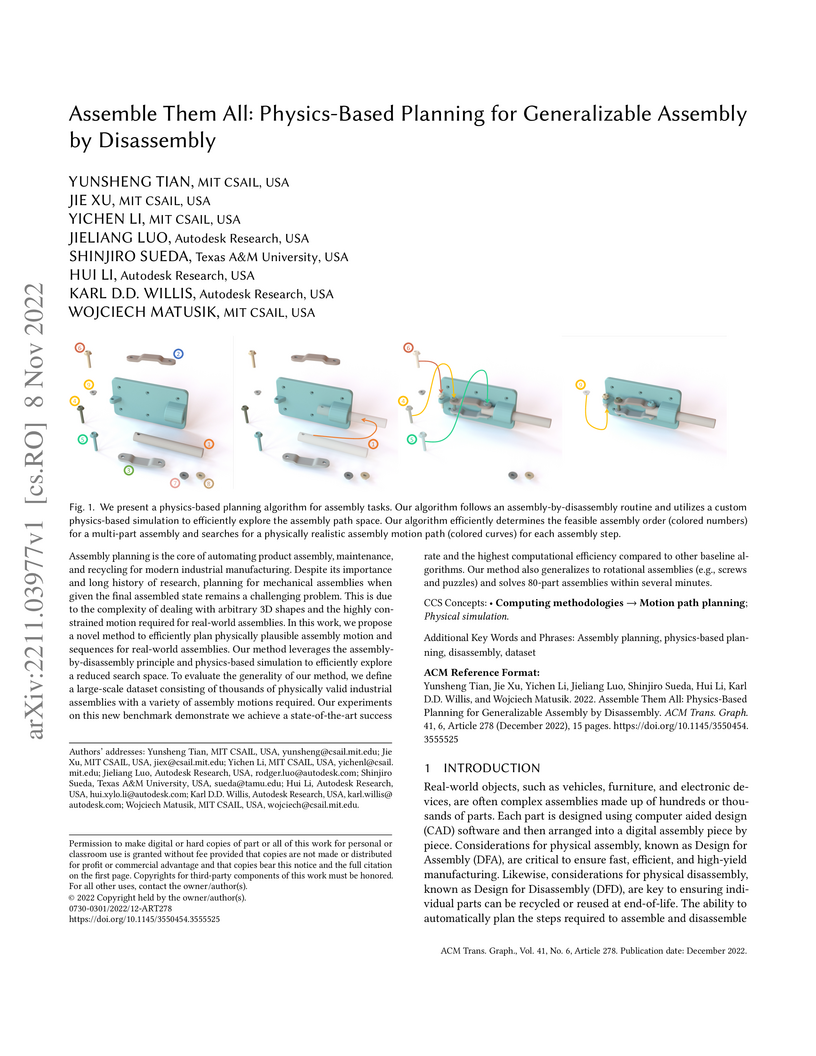

Researchers from MIT CSAIL and Autodesk Research developed "Assemble Them All," a physics-based framework for generating assembly sequences and motions by leveraging disassembly. This method achieves high success rates on a new large-scale dataset of thousands of industrial assemblies, notably disassembling a 53-part engine in 5 minutes, and outperforms geometric baselines in handling complex, constrained motions.

There are no more papers matching your filters at the moment.