Ericsson Canada

In Federated Learning (FL) with over-the-air aggregation, the quality of the signal received at the server critically depends on the receive scaling factors. While a larger scaling factor can reduce the effective noise power and improve training performance, it also compromises the privacy of devices by reducing uncertainty. In this work, we aim to adaptively design the receive scaling factors across training rounds to balance the trade-off between training convergence and privacy in an FL system under dynamic channel conditions. We formulate a stochastic optimization problem that minimizes the overall Rényi differential privacy (RDP) leakage over the entire training process, subject to a long-term constraint that ensures convergence of the global loss function. Our problem depends on unknown future information, and we observe that standard Lyapunov optimization is not applicable. Thus, we develop a new online algorithm, termed AdaScale, based on a sequence of novel per-round problems that can be solved efficiently. We further derive upper bounds on the dynamic regret and constraint violation of AdaScale, establishing that it achieves diminishing dynamic regret in terms of time-averaged RDP leakage while ensuring convergence of FL training to a stationary point. Numerical experiments on canonical classification tasks show that our approach effectively reduces RDP and DP leakages compared with state-of-the-art benchmarks without compromising learning performance.

30 May 2024

Distributed Multiple-Input and Multiple-Output (D-MIMO) is envisioned to play a significant role in future wireless communication systems as an effective means to improve coverage and capacity. In this paper, we study the impact of a practical two-level data routing scheme on radio performance in a downlink D-MIMO scenario with segmented fronthaul. At the first level, a Distributed Unit (DU) is connected to the Aggregating Radio Units (ARUs) that behave as cluster heads for the selected serving RU groups. At the second level, the selected ARUs connect with the additional serving RUs. At each route discovery level, RUs and/or ARUs share information with each other. The aim of the proposed framework is to efficiently select serving RUs and ARUs so that the practical data routing impact for each User Equipment (UE) connection is minimal. The resulting post-routing Signal-to-Interference-plus-Noise Ratio (SINR) among all UEs is analyzed after the routing constraints have been applied. The results show that limited fronthaul segment capacity causes connection failures with the serving RUs of individual UEs, especially when long routing path lengths are required. Depending on whether the failures occur at the first or the second routing level, a UE may be dropped or its SINR may be reduced. To minimize the DU-ARU connection failures, the capacity of the segments closest to the DU is set to twice that of the remaining segments. When the number of active co-scheduled UEs is kept low enough, practical segment capacities suffice to achieve a zero UE dropping rate. In addition, the choice of the maximum path length should take into account the segment capacity and its utilization, because the two are related.

It is common practice to outsource the training of machine learning models to cloud providers. Clients who do so gain from the cloud's economies of scale, but implicitly assume trust: the server should not deviate from the client's training procedure. A malicious server may, for instance, seek to insert backdoors in the model. Detecting a backdoored model without prior knowledge of both the backdoor attack and its accompanying trigger remains a challenging problem. In this paper, we show that a client with access to multiple cloud providers can replicate a subset of training steps across multiple servers to detect deviation from the training procedure in a similar manner to differential testing. Assuming some cloud-provided servers are benign, we identify malicious servers by the substantial difference between model updates required for backdooring and those resulting from clean training. Perhaps the strongest advantage of our approach is its suitability to clients that have limited-to-no local compute capability to perform training; we leverage the existence of multiple cloud providers to identify malicious updates without expensive human labeling or heavy computation. We demonstrate the capabilities of our approach on an outsourced supervised learning task where 50% of the cloud providers insert their own backdoor; our approach is able to correctly identify 99.6% of them. In essence, our approach is successful because it replaces the signature-based paradigm taken by existing approaches with an anomaly-based detection paradigm. Furthermore, our approach is robust to several attacks from adaptive adversaries utilizing knowledge of our detection scheme.
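
The replicate-and-compare idea can be illustrated with a minimal sketch. It is not the detection rule from the paper: the function name `flag_deviating_servers`, the coordinate-wise median reference, and the `threshold` value are all assumptions made for illustration, and the sketch additionally assumes that most replicas of a step are benign.

```python
import numpy as np

def flag_deviating_servers(updates, threshold=3.0):
    """Return indices of servers whose update looks anomalous.

    updates: array-like of shape (n_servers, n_params); each row is the
    model update one server returned for the same replicated step.
    threshold: hypothetical multiplier on the typical deviation.
    """
    updates = np.asarray(updates, dtype=float)
    # Coordinate-wise median serves as a robust consensus update
    # (assumption: the majority of replicas are benign).
    reference = np.median(updates, axis=0)
    dists = np.linalg.norm(updates - reference, axis=1)
    # Median distance approximates the spread among benign updates.
    scale = np.median(dists) + 1e-12
    return [i for i, d in enumerate(dists) if d > threshold * scale]

# Four near-identical updates and one that differs substantially.
example = [[1.0, 1.0], [1.01, 0.99], [0.99, 1.01], [1.0, 1.02], [5.0, -3.0]]
flagged = flag_deviating_servers(example)
```

Because the comparison is made between servers rather than against a known trigger, the sketch needs no signature of the backdoor, which mirrors the anomaly-based framing of the abstract.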

We propose to control handoffs (HOs) in user-centric cell-free massive MIMO networks through a partially observable Markov decision process (POMDP) with the state space representing the discrete versions of the large-scale fading (LSF) and the action space representing the association decisions of the user with the access points. Our proposed formulation accounts for the temporal evolution and the partial observability of the channel states. This allows us to consider future rewards when performing HO decisions, and hence obtain a robust HO policy. To alleviate the high complexity of solving our POMDP, we follow a divide-and-conquer approach by breaking down the POMDP formulation into sub-problems, each solved individually. Then, the policy and the candidate cluster of access points for the best solved sub-problem are used to perform HOs within a specific time horizon. We control the number of HOs by determining when to use the HO policy. Our simulation results show that our proposed solution reduces HOs by 47% compared to time-triggered LSF-based HOs and by 70% compared to data rate threshold-triggered LSF-based HOs. The number of HOs can be further reduced by increasing the time horizon of the POMDP.
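
Partial observability in a POMDP is usually handled by tracking a belief (a probability distribution over the hidden states). The following is a minimal sketch of the textbook belief update for a discrete POMDP, not the paper's solver; the matrices `T` and `O` and the two-state example are hypothetical placeholders for discretized LSF states.

```python
import numpy as np

def belief_update(belief, T, O, obs):
    """One Bayes-filter step for a discrete POMDP under a fixed action.

    belief: shape (S,), current distribution over hidden states.
    T: shape (S, S), T[s, s2] = P(next state s2 | state s, action).
    O: shape (S, num_obs), O[s2, o] = P(observation o | next state s2).
    obs: index of the observation actually received.
    """
    predicted = belief @ T           # prediction step
    unnorm = predicted * O[:, obs]   # weight by observation likelihood
    total = unnorm.sum()
    if total == 0.0:
        raise ValueError("observation has zero probability under the model")
    return unnorm / total

# Hypothetical two-state example (e.g. "weak" vs "strong" LSF level).
T = np.array([[0.9, 0.1], [0.2, 0.8]])
O = np.array([[0.8, 0.2], [0.3, 0.7]])
b_next = belief_update(np.array([0.5, 0.5]), T, O, obs=0)
```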

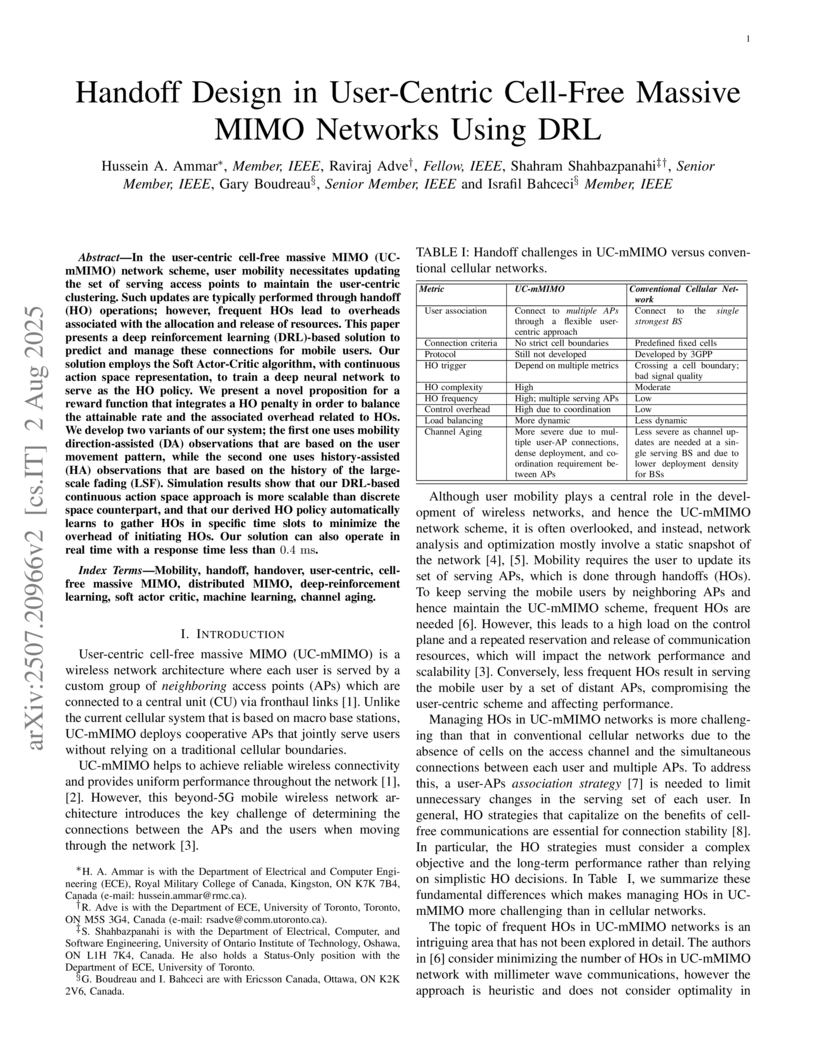

In the user-centric cell-free massive MIMO (UC-mMIMO) network scheme, user mobility necessitates updating the set of serving access points to maintain the user-centric clustering. Such updates are typically performed through handoff (HO) operations; however, frequent HOs lead to overheads associated with the allocation and release of resources. This paper presents a deep reinforcement learning (DRL)-based solution to predict and manage these connections for mobile users. Our solution employs the Soft Actor-Critic algorithm, with continuous action space representation, to train a deep neural network to serve as the HO policy. We propose a novel reward function that integrates an HO penalty in order to balance the attainable rate against the overhead associated with HOs. We develop two variants of our system: the first uses mobility direction-assisted (DA) observations that are based on the user movement pattern, while the second uses history-assisted (HA) observations that are based on the history of the large-scale fading (LSF). Simulation results show that our DRL-based continuous action space approach is more scalable than its discrete-space counterpart, and that our derived HO policy automatically learns to gather HOs in specific time slots to minimize the overhead of initiating HOs. Our solution can also operate in real time, with a response time of less than 0.4 ms.
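
To make the rate-versus-overhead trade-off concrete, here is a minimal sketch of one possible penalized reward. The abstract does not give the functional form, so the linear penalty, the name `ho_reward`, the default `penalty` weight, and the choice to count every added or released access point as one HO are all assumptions.

```python
def ho_reward(rate, prev_cluster, new_cluster, penalty=0.5):
    """Hypothetical reward: attainable rate minus a per-HO penalty.

    prev_cluster / new_cluster: iterables of access point IDs serving
    the user before and after the decision.
    """
    # Access points that were added or released (symmetric difference).
    num_hos = len(set(prev_cluster) ^ set(new_cluster))
    return rate - penalty * num_hos

# Swapping AP 1 for AP 4 counts as two changes in this sketch.
r_change = ho_reward(4.0, {1, 2, 3}, {2, 3, 4})
r_keep = ho_reward(4.0, {1, 2, 3}, {1, 2, 3})
```

With such a reward, a larger `penalty` pushes a learned policy toward keeping the current cluster unless the rate gain justifies the change.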

This paper studies power-efficient uplink transmission design for federated learning (FL) that employs over-the-air analog aggregation and multi-antenna beamforming at the server. We jointly optimize device transmit weights and receive beamforming at each FL communication round to minimize the total device transmit power while ensuring convergence in FL training. Through our convergence analysis, we establish sufficient conditions on the aggregation error to guarantee FL training convergence. Utilizing these conditions, we reformulate the power minimization problem into a unique bi-convex structure that contains a transmit beamforming optimization subproblem and a receive beamforming feasibility subproblem. Despite this unconventional structure, we propose a novel alternating optimization approach that guarantees monotonic decrease of the objective value, to allow convergence to a partial optimum. We further consider imperfect channel state information (CSI), which requires accounting for the channel estimation errors in the power minimization problem and FL convergence analysis. We propose a CSI-error-aware joint beamforming algorithm, which can substantially outperform one that does not account for channel estimation errors. Simulation with canonical classification datasets demonstrates that our proposed methods achieve significant power reduction compared to existing benchmarks across a wide range of parameter settings, while attaining the same target accuracy under the same convergence rate.

This paper studies the design of wireless federated learning (FL) for simultaneously training multiple machine learning models. We consider round-robin device-model assignment and downlink beamforming for concurrent multiple model updates. After formulating the joint downlink-uplink transmission process, we derive the per-model global update expression over communication rounds, capturing the effect of beamforming and noisy reception. To maximize the multi-model training convergence rate, we derive an upper bound on the optimality gap of the global model update and use it to formulate a multi-group multicast beamforming problem. We show that this problem can be converted to minimizing the sum of inverse received signal-to-interference-plus-noise ratios, which can be solved efficiently by projected gradient descent. Simulation shows that our proposed multi-model FL solution outperforms other alternatives, including conventional single-model sequential training and multi-model zero-forcing beamforming.

03 Oct 2025

We consider downlink broadcast design for federated learning (FL) in a wireless network with imperfect channel state information (CSI). Aiming to reduce transmission latency, we propose a segmented analog broadcast (SegAB) scheme, where the parameter server, hosted by a multi-antenna base station, partitions the global model parameter vector into segments and transmits multiple parameters from these segments simultaneously over a common downlink channel. We formulate the SegAB transmission and reception processes to characterize FL training convergence, capturing the effects of downlink beamforming and imperfect CSI. To maximize the FL training convergence rate, we establish an upper bound on the expected model optimality gap and show that it can be minimized separately over the training rounds in online optimization, without requiring knowledge of the future channel states. We solve the per-round problem to achieve robust downlink beamforming by minimizing the worst-case objective via an epigraph representation and a feasibility subproblem that ensures monotone convergence. Simulation with standard classification tasks under typical wireless network settings shows that the proposed SegAB substantially outperforms conventional full-model per-parameter broadcast and other alternatives.

17 Aug 2024

This paper presents our experience developing a Llama-based chatbot for question answering about continuous integration and continuous delivery (CI/CD) at Ericsson, a multinational telecommunications company. Our chatbot is designed to handle the specificities of CI/CD documents at Ericsson, employing a retrieval-augmented generation (RAG) model to enhance accuracy and relevance. Our empirical evaluation of the chatbot on industrial CI/CD-related questions indicates that an ensemble retriever, combining BM25 and embedding retrievers, yields the best performance. When evaluated against a ground truth of 72 CI/CD questions and answers at Ericsson, our most accurate chatbot configuration provides fully correct answers for 61.11% of the questions, partially correct answers for 26.39%, and incorrect answers for 12.50%. Through an error analysis of the partially correct and incorrect answers, we discuss the underlying causes of inaccuracies and provide insights for further refinement. We also reflect on lessons learned and suggest future directions for further improving our chatbot's accuracy.
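
One common way to combine a lexical retriever such as BM25 with an embedding retriever is to fuse their ranked lists. The sketch below uses reciprocal rank fusion; the abstract does not say which fusion rule the chatbot uses, so the rule itself, the function name, the constant `k=60`, and the document IDs are assumptions for illustration only.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    rankings: list of lists, each ordered from most to least relevant
    (e.g. one list from BM25 and one from an embedding retriever).
    k: smoothing constant; 60 is a commonly used default.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID.
    return sorted(scores, key=lambda d: (-scores[d], d))

# Hypothetical outputs of the two retrievers for one question.
bm25_hits = ["a", "b", "c"]
embedding_hits = ["b", "c", "d"]
fused = reciprocal_rank_fusion([bm25_hits, embedding_hits])
```

Documents that both retrievers rank highly rise to the top, while documents found by only one retriever are still kept as candidates for the generation step.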

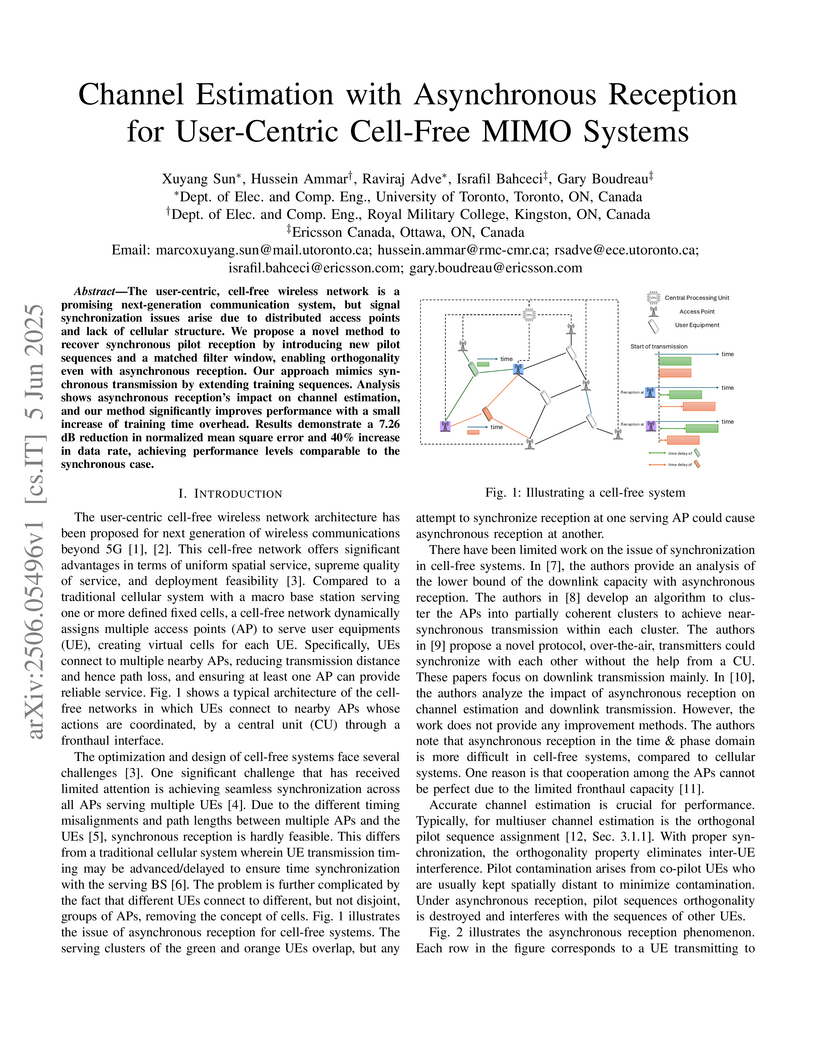

The user-centric, cell-free wireless network is a promising next-generation communication system, but signal synchronization issues arise due to distributed access points and lack of cellular structure. We propose a novel method to recover synchronous pilot reception by introducing new pilot sequences and a matched filter window, enabling orthogonality even with asynchronous reception. Our approach mimics synchronous transmission by extending training sequences. Analysis shows asynchronous reception's impact on channel estimation, and our method significantly improves performance with a small increase of training time overhead. Results demonstrate a 7.26 dB reduction in normalized mean square error and 40% increase in data rate, achieving performance levels comparable to the synchronous case.

Error accumulation is an essential component of the Top-k sparsification method in distributed gradient descent. It implicitly scales the learning rate and prevents the slow-down of lateral movement, but it can also deteriorate convergence. This paper proposes a novel sparsification algorithm called regularized Top-k (RegTop-k) that controls the learning rate scaling of error accumulation. The algorithm is developed by looking at the gradient sparsification as an inference problem and determining a Bayesian optimal sparsification mask via maximum-a-posteriori estimation. It utilizes past aggregated gradients to evaluate posterior statistics, based on which it prioritizes the local gradient entries. Numerical experiments with ResNet-18 on CIFAR-10 show that at 0.1% sparsification, RegTop-k achieves about 8% higher accuracy than standard Top-k.
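
For reference, the baseline that RegTop-k modifies can be sketched as follows. This is standard Top-k sparsification with error accumulation, not the RegTop-k algorithm itself; the function and variable names are illustrative.

```python
import numpy as np

def topk_with_error_accumulation(grad, error, k):
    """One step of standard Top-k sparsification with error feedback.

    grad: local gradient of shape (d,).
    error: residual left over from previous rounds, shape (d,).
    k: number of entries to transmit, with 1 <= k <= d.
    Returns the sparse vector to send and the updated residual.
    """
    acc = grad + error                            # add back what was dropped earlier
    idx = np.argpartition(np.abs(acc), -k)[-k:]   # k largest magnitudes
    sparse = np.zeros_like(acc)
    sparse[idx] = acc[idx]
    new_error = acc - sparse                      # entries not sent this round
    return sparse, new_error

# Round 1: only the largest entry is sent, the rest is accumulated.
g1 = np.array([0.1, -2.0, 0.5, 0.3])
s1, e1 = topk_with_error_accumulation(g1, np.zeros(4), k=1)
# Round 2: the accumulated 0.5 now wins even though entry 1 was larger before.
g2 = np.array([0.1, 0.0, 0.5, 0.3])
s2, e2 = topk_with_error_accumulation(g2, e1, k=1)
```

In round 2 the transmitted value is 1.0 rather than 0.5, which shows how accumulation implicitly scales the step taken along a delayed coordinate.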

Improving Wireless Federated Learning via Joint Downlink-Uplink Beamforming over Analog Transmission

Federated learning (FL) over wireless networks using analog transmission can efficiently utilize the communication resource but is susceptible to errors caused by noisy wireless links. In this paper, assuming a multi-antenna base station, we jointly design downlink-uplink beamforming to maximize FL training convergence over time-varying wireless channels. We derive the round-trip model updating equation and use it to analyze the FL training convergence to capture the effects of downlink and uplink beamforming and the local model training on the global model update. Aiming to maximize the FL training convergence rate, we propose a low-complexity joint downlink-uplink beamforming (JDUBF) algorithm, which adopts a greedy approach to decompose the multi-round joint optimization and convert it into per-round online joint optimization problems. The per-round problem is further decomposed into three subproblems over a block coordinate descent framework, where we show that each subproblem can be efficiently solved by projected gradient descent with fast closed-form updates. An efficient initialization method that leads to a closed-form initial point is also proposed to accelerate the convergence of JDUBF. Simulation demonstrates that JDUBF substantially outperforms the conventional separate-link beamforming design.

Federated learning (FL) with over-the-air computation efficiently utilizes the communication resources, but it can still experience significant latency when each device transmits a large number of model parameters to the server. This paper proposes the Segmented Over-The-Air (SegOTA) method for FL, which reduces latency by partitioning devices into groups and letting each group transmit only one segment of the model parameters in each communication round. Considering a multi-antenna server, we model the SegOTA transmission and reception process to establish an upper bound on the expected model learning optimality gap. We minimize this upper bound by formulating the per-round online optimization of device grouping and joint transmit-receive beamforming, for which we derive efficient closed-form solutions. Simulation results show that our proposed SegOTA substantially outperforms the conventional full-model OTA approach and other common alternatives.
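
The segmentation idea can be sketched in a few lines. The paper optimizes the device grouping, whereas this sketch uses a simple round-robin assignment as a placeholder; the function name and the example sizes are hypothetical.

```python
import numpy as np

def assign_segments(num_devices, model_dim, num_segments):
    """Split devices into groups and the parameter vector into segments.

    Group g is responsible for transmitting segment g only.
    Round-robin grouping is a placeholder (assumption), not the
    optimized grouping described in the abstract.
    """
    groups = [list(range(g, num_devices, num_segments))
              for g in range(num_segments)]
    # Near-equal contiguous index ranges of the parameter vector.
    segments = np.array_split(np.arange(model_dim), num_segments)
    return groups, segments

groups, segments = assign_segments(num_devices=6, model_dim=10, num_segments=3)
# Largest number of parameters any device sends per round.
max_payload = max(len(s) for s in segments)
```

In this toy setting each device sends at most 4 parameters per round instead of all 10, which is the source of the latency reduction; the cost is that each segment is aggregated over fewer devices.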

29 Apr 2025

Ambitions for the next generation of wireless communication include high data rates, low latency, ubiquitous access, and sustainability (in terms of consumption of energy and natural resources), all while maintaining a reasonable level of implementation complexity. Achieving these goals necessitates reforms in cellular networks, specifically in the physical layer and antenna design. The deployment of transmissive metasurfaces at base stations (BSs) presents an appealing solution, enabling beamforming in the radiated wave domain and minimizing the need for energy-hungry RF chains. Among various metasurface-based antenna designs, we propose using Huygens' metasurface-based antennas (HMAs) at BSs. Huygens' metasurfaces offer an attractive solution for antennas because, by utilizing Huygens' equivalence principle, they allow independent control over both the amplitude and phase of the transmitted electromagnetic wave. In this paper, we investigate the fundamental limits of HMAs in wireless networks by integrating electromagnetic theory and information theory within a unified analytical framework. Specifically, we model the unique electromagnetic characteristics of HMAs and incorporate them into an information-theoretic optimization framework to determine their maximum achievable sum rate. By formulating an optimization problem that captures the impact of HMAs' hardware constraints and electromagnetic properties, we quantify the channel capacity of HMA-assisted systems. We then compare the performance of HMAs against phased arrays and other metasurface-based antennas in both rich scattering and realistic 3GPP channels, highlighting their potential in improving spectral and energy efficiency.

We develop two distributed downlink resource allocation algorithms for user-centric, cell-free, spatially-distributed, multiple-input multiple-output (MIMO) networks. In such networks, each user is served by a subset of nearby transmitters that we call distributed units or DUs. The operation of the DUs in a region is controlled by a central unit (CU). Our first scheme is implemented at the DUs, while the second is implemented at the CUs controlling these DUs. We define a hybrid quality of service metric that enables distributed optimization of system resources in a proportional fair manner. Specifically, each of our algorithms performs user scheduling, beamforming, and power control while accounting for channel estimation errors. Importantly, our algorithms do not require information exchange amongst DUs (CUs) for the DU-distributed (CU-distributed) system, and they converge smoothly. Our results show that our CU-distributed system provides 1.3- to 1.8-fold network throughput compared to the DU-distributed system, with minor increases in complexity and front-haul load, and substantial gains over benchmark schemes like local zero-forcing. We also analyze the trade-offs provided by the CU-distributed system, hence highlighting the significance of deploying multiple CUs in user-centric cell-free networks.

11 Oct 2025

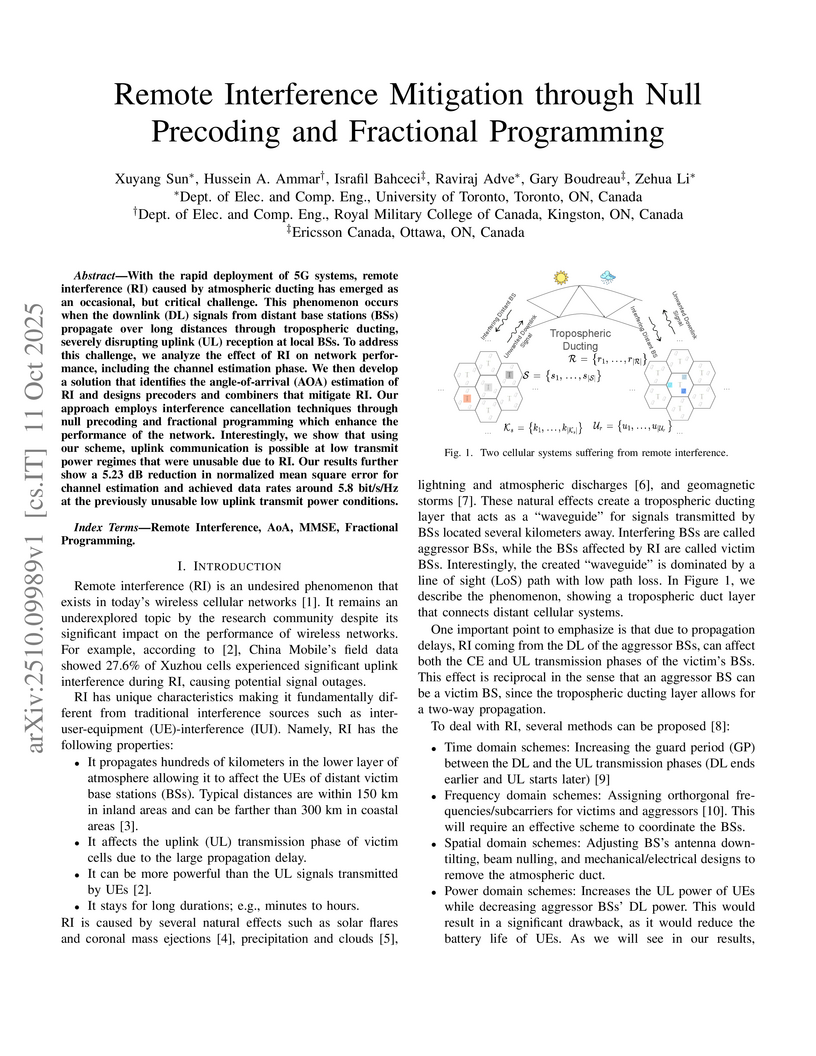

With the rapid deployment of 5G systems, remote interference (RI) caused by atmospheric ducting has emerged as an occasional but critical challenge. This phenomenon occurs when the downlink (DL) signals from distant base stations (BSs) propagate over long distances through tropospheric ducting, severely disrupting uplink (UL) reception at local BSs. To address this challenge, we analyze the effect of RI on network performance, including the channel estimation phase. We then develop a solution that estimates the angle of arrival (AOA) of RI and designs precoders and combiners that mitigate RI. Our approach employs interference cancellation techniques through null precoding and fractional programming, which enhance the performance of the network. Interestingly, we show that using our scheme, uplink communication is possible at low transmit power regimes that were unusable due to RI. Our results further show a 5.23 dB reduction in normalized mean square error for channel estimation and achieved data rates of around 5.8 bit/s/Hz at the previously unusable low uplink transmit power conditions.

01 Jul 2023

We study joint downlink-uplink beamforming design for wireless federated learning (FL) with a multi-antenna base station. Considering analog transmission over noisy channels and uplink over-the-air aggregation, we derive the global model update expression over communication rounds. We then obtain an upper bound on the expected global loss function, capturing the downlink and uplink beamforming and receiver noise effect. We propose a low-complexity joint beamforming algorithm to minimize this upper bound, which employs alternating optimization to break down the problem into three subproblems, each solved via closed-form gradient updates. Simulation under a practical wireless system setup shows that our proposed joint beamforming design solution substantially outperforms the conventional separate-link design approach and nearly attains the performance of ideal FL with error-free communication links.

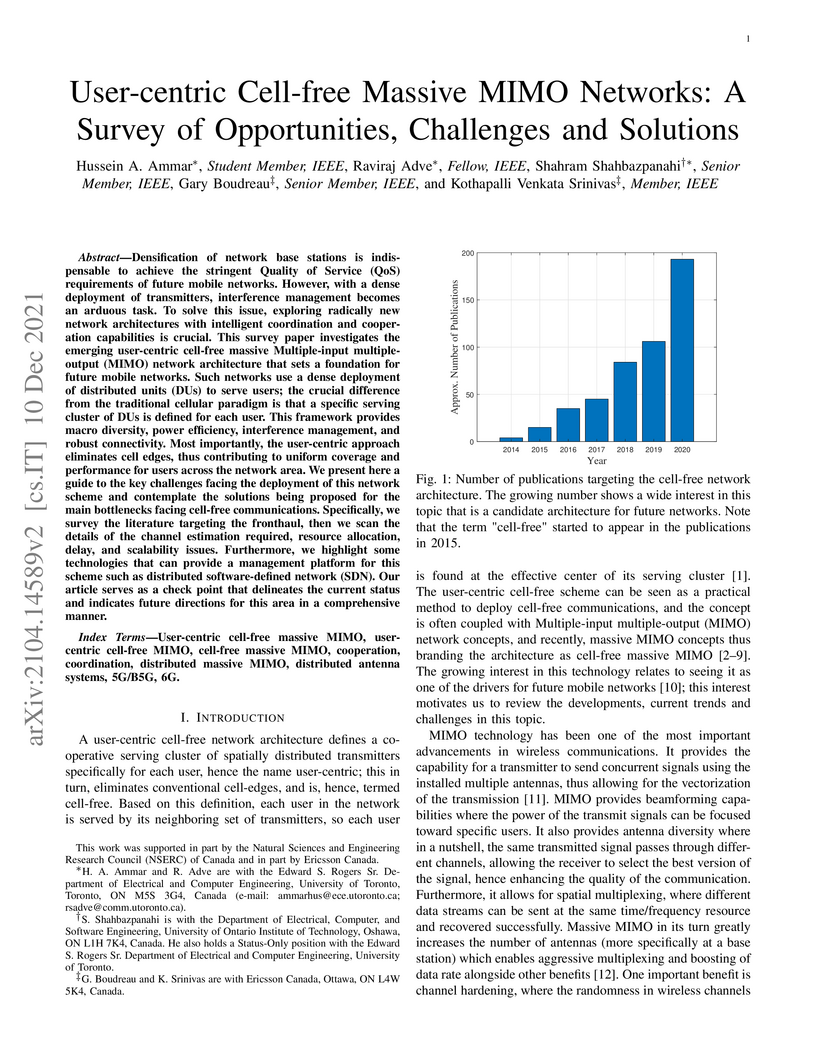

Densification of network base stations is indispensable to achieve the stringent Quality of Service (QoS) requirements of future mobile networks. However, with a dense deployment of transmitters, interference management becomes an arduous task. To solve this issue, exploring radically new network architectures with intelligent coordination and cooperation capabilities is crucial. This survey paper investigates the emerging user-centric cell-free massive multiple-input multiple-output (MIMO) network architecture that sets a foundation for future mobile networks. Such networks use a dense deployment of distributed units (DUs) to serve users; the crucial difference from the traditional cellular paradigm is that a specific serving cluster of DUs is defined for each user. This framework provides macro diversity, power efficiency, interference management, and robust connectivity. Most importantly, the user-centric approach eliminates cell edges, thus contributing to uniform coverage and performance for users across the network area. We present here a guide to the key challenges facing the deployment of this network scheme and contemplate the solutions being proposed for the main bottlenecks facing cell-free communications. Specifically, we survey the literature targeting the fronthaul, then we scan the details of the channel estimation required, resource allocation, delay, and scalability issues. Furthermore, we highlight some technologies that can provide a management platform for this scheme, such as distributed software-defined networking (SDN). Our article serves as a checkpoint that delineates the current status and indicates future directions for this area in a comprehensive manner.
