Fraunhofer IOSB

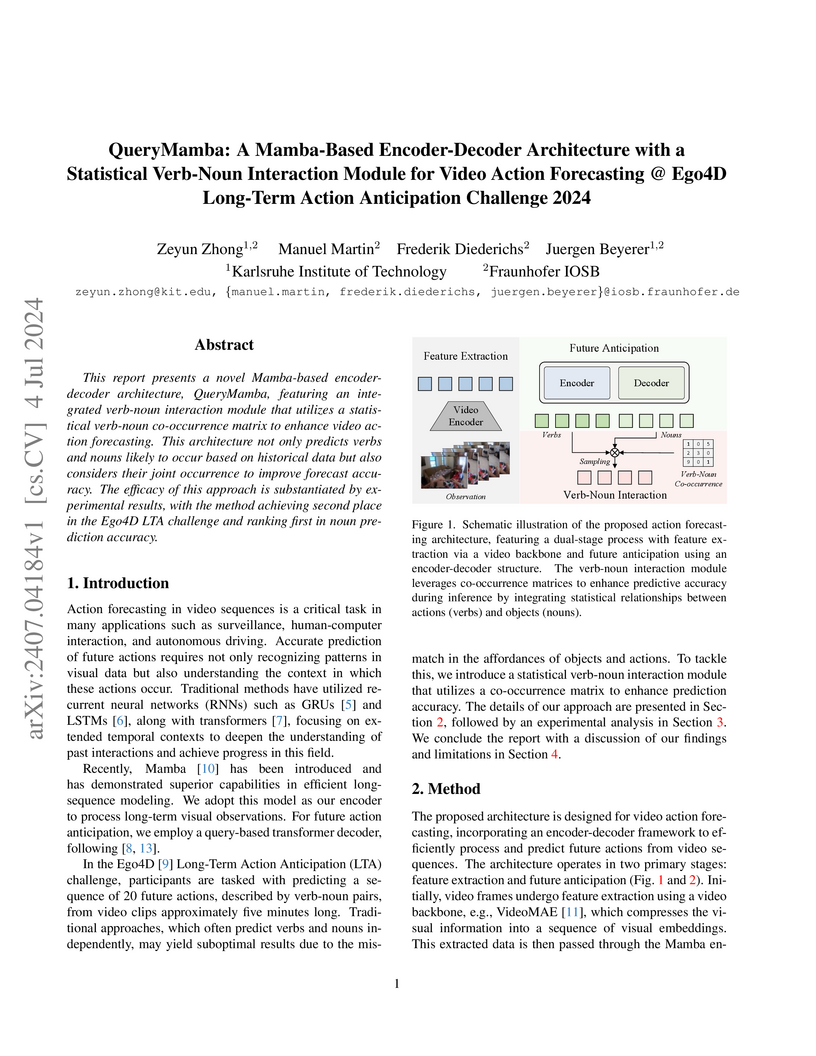

This report presents a novel Mamba-based encoder-decoder architecture,

QueryMamba, featuring an integrated verb-noun interaction module that utilizes

a statistical verb-noun co-occurrence matrix to enhance video action

forecasting. This architecture not only predicts verbs and nouns likely to

occur based on historical data but also considers their joint occurrence to

improve forecast accuracy. The efficacy of this approach is substantiated by

experimental results, with the method achieving second place in the Ego4D LTA

challenge and ranking first in noun prediction accuracy.

Driven by the increasing demand for accurate and efficient representation of 3D data in various domains, point cloud sampling has emerged as a pivotal research topic in 3D computer vision. Recently, learning-to-sample methods have garnered growing interest from the community, particularly for their ability to be jointly trained with downstream tasks. However, previous learning-based sampling methods either lead to unrecognizable sampling patterns by generating a new point cloud or biased sampled results by focusing excessively on sharp edge details. Moreover, they all overlook the natural variations in point distribution across different shapes, applying a similar sampling strategy to all point clouds. In this paper, we propose a Sparse Attention Map and Bin-based Learning method (termed SAMBLE) to learn shape-specific sampling strategies for point cloud shapes. SAMBLE effectively achieves an improved balance between sampling edge points for local details and preserving uniformity in the global shape, resulting in superior performance across multiple common point cloud downstream tasks, even in scenarios with few-point sampling.

Although human action anticipation is a task which is inherently multi-modal,

state-of-the-art methods on well known action anticipation datasets leverage

this data by applying ensemble methods and averaging scores of unimodal

anticipation networks. In this work we introduce transformer based modality

fusion techniques, which unify multi-modal data at an early stage. Our

Anticipative Feature Fusion Transformer (AFFT) proves to be superior to popular

score fusion approaches and presents state-of-the-art results outperforming

previous methods on EpicKitchens-100 and EGTEA Gaze+. Our model is easily

extensible and allows for adding new modalities without architectural changes.

Consequently, we extracted audio features on EpicKitchens-100 which we add to

the set of commonly used features in the community.

MeshVPR introduces a feature alignment framework that enables citywide visual place recognition by matching real-world query images against synthetic image databases rendered from 3D textured meshes. The framework effectively mitigates the synthetic-to-real domain shift, recovering most of the performance loss, reducing the Recall@5 drop to less than 4% for the SALAD model compared to using a real-image database.

21 Sep 2025

Conventional optical imaging is limited by diffraction, preventing discrimination of closely spaced incoherent sources. Inspired by quantum parameter estimation, this thesis explores spatial-mode demultiplexing (SPADE) as a method to overcome this limit by projecting light onto an orthonormal mode basis. In this work, we design and experimentally validate a practical implementation of spatial-mode demultiplexing via Multi-Plane Light Conversion (MPLC).

New York UniversityUniversity of LjubljanaJD Explore AcademyThe University of SydneyBeijing University of Posts and TelecommunicationsFondazione Bruno KesslerKitwareUniversity of CagliariUniversity of LisbonUniversity of SeoulUniversity of JyvaskylaUniversity of South-Eastern NorwayUniversity of TuebingenFraunhofer IOSBHelmut Schmidt UniversityTU KaiserslauternGranular AI

New York UniversityUniversity of LjubljanaJD Explore AcademyThe University of SydneyBeijing University of Posts and TelecommunicationsFondazione Bruno KesslerKitwareUniversity of CagliariUniversity of LisbonUniversity of SeoulUniversity of JyvaskylaUniversity of South-Eastern NorwayUniversity of TuebingenFraunhofer IOSBHelmut Schmidt UniversityTU KaiserslauternGranular AIThe 1st Workshop on Maritime Computer Vision (MaCVi) 2023 focused on maritime computer vision for Unmanned Aerial Vehicles (UAV) and Unmanned Surface Vehicle (USV), and organized several subchallenges in this domain: (i) UAV-based Maritime Object Detection, (ii) UAV-based Maritime Object Tracking, (iii) USV-based Maritime Obstacle Segmentation and (iv) USV-based Maritime Obstacle Detection. The subchallenges were based on the SeaDronesSee and MODS benchmarks. This report summarizes the main findings of the individual subchallenges and introduces a new benchmark, called SeaDronesSee Object Detection v2, which extends the previous benchmark by including more classes and footage. We provide statistical and qualitative analyses, and assess trends in the best-performing methodologies of over 130 submissions. The methods are summarized in the appendix. The datasets, evaluation code and the leaderboard are publicly available at this https URL.

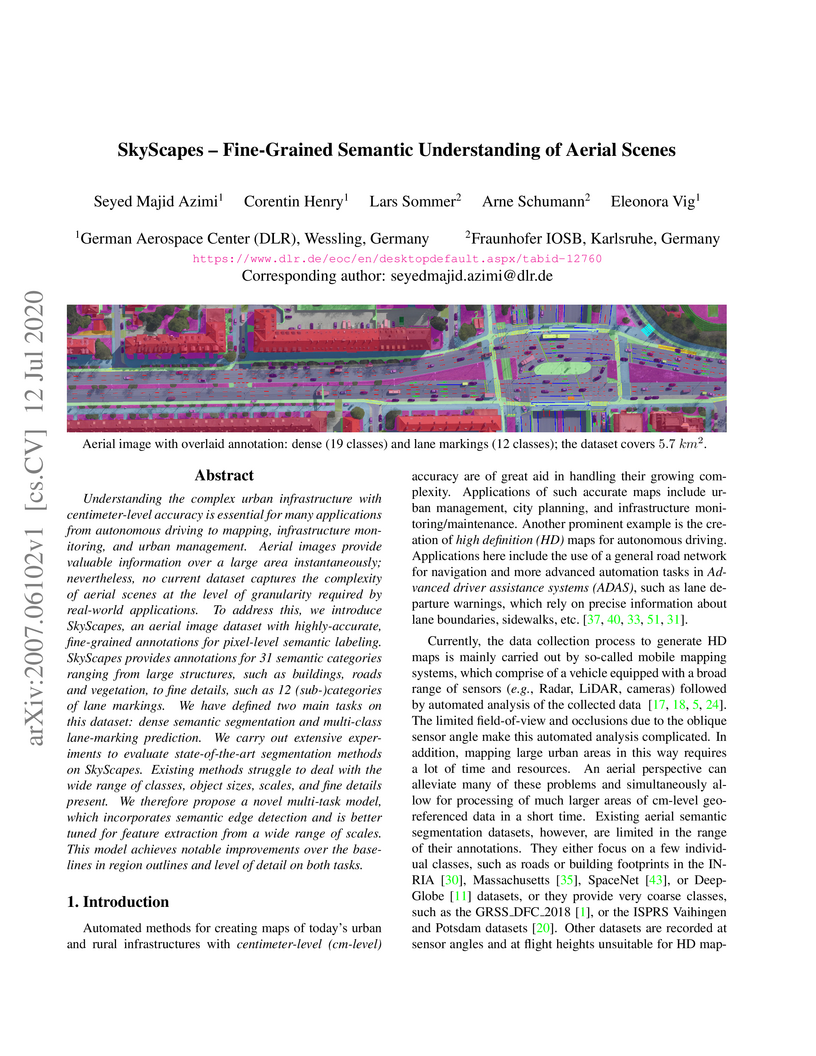

Understanding the complex urban infrastructure with centimeter-level accuracy

is essential for many applications from autonomous driving to mapping,

infrastructure monitoring, and urban management. Aerial images provide valuable

information over a large area instantaneously; nevertheless, no current dataset

captures the complexity of aerial scenes at the level of granularity required

by real-world applications. To address this, we introduce SkyScapes, an aerial

image dataset with highly-accurate, fine-grained annotations for pixel-level

semantic labeling. SkyScapes provides annotations for 31 semantic categories

ranging from large structures, such as buildings, roads and vegetation, to fine

details, such as 12 (sub-)categories of lane markings. We have defined two main

tasks on this dataset: dense semantic segmentation and multi-class lane-marking

prediction. We carry out extensive experiments to evaluate state-of-the-art

segmentation methods on SkyScapes. Existing methods struggle to deal with the

wide range of classes, object sizes, scales, and fine details present. We

therefore propose a novel multi-task model, which incorporates semantic edge

detection and is better tuned for feature extraction from a wide range of

scales. This model achieves notable improvements over the baselines in region

outlines and level of detail on both tasks.

An appropriate data basis grants one of the most important aspects for

training and evaluating probabilistic trajectory prediction models based on

neural networks. In this regard, a common shortcoming of current benchmark

datasets is their limitation to sets of sample trajectories and a lack of

actual ground truth distributions, which prevents the use of more expressive

error metrics, such as the Wasserstein distance for model evaluation. Towards

this end, this paper proposes a novel approach to synthetic dataset generation

based on composite probabilistic B\'ezier curves, which is capable of

generating ground truth data in terms of probability distributions over full

trajectories. This allows the calculation of arbitrary posterior distributions.

The paper showcases an exemplary trajectory prediction model evaluation using

generated ground truth distribution data.

26 May 2023

A frequent starting point of quantum computation platforms are two-state

quantum systems, i.e., qubits. However, in the context of integer optimization

problems, relevant to scheduling optimization and operations research, it is

often more resource-efficient to employ quantum systems with more than two

basis states, so-called qudits. Here, we discuss the quantum approximate

optimization algorithm (QAOA) for qudit systems. We illustrate how the QAOA can

be used to formulate a variety of integer optimization problems such as graph

coloring problems or electric vehicle (EV) charging optimization. In addition,

we comment on the implementation of constraints and describe three methods to

include these into a quantum circuit of a QAOA by penalty contributions to the

cost Hamiltonian, conditional gates using ancilla qubits, and a dynamical

decoupling strategy. Finally, as a showcase of qudit-based QAOA, we present

numerical results for a charging optimization problem mapped onto a

max-k-graph coloring problem. Our work illustrates the flexibility of qudit

systems to solve integer optimization problems.

Nanjing University of Science and Technology Nanjing UniversityUniversity of LjubljanaUniversity of LiverpoolBeijing University of Posts and Telecommunications

Nanjing UniversityUniversity of LjubljanaUniversity of LiverpoolBeijing University of Posts and Telecommunications Karlsruhe Institute of TechnologyGwangju Institute of Science and TechnologyDalian Maritime UniversityNational Cheng Kung UniversityMichigan Technological UniversityYancheng Institute of TechnologyHong Kong University of Science and Technology (Guangzhou)Queensland University of TechnologyXi'an Jiaotong Liverpool UniversityUniversity of TuebingenUniversity of Zagreb Faculty of Electrical Engineering and ComputingLuxonisKLE Technological UniversityShield AIFraunhofer IOSBLOOKOUT

Karlsruhe Institute of TechnologyGwangju Institute of Science and TechnologyDalian Maritime UniversityNational Cheng Kung UniversityMichigan Technological UniversityYancheng Institute of TechnologyHong Kong University of Science and Technology (Guangzhou)Queensland University of TechnologyXi'an Jiaotong Liverpool UniversityUniversity of TuebingenUniversity of Zagreb Faculty of Electrical Engineering and ComputingLuxonisKLE Technological UniversityShield AIFraunhofer IOSBLOOKOUT

Nanjing UniversityUniversity of LjubljanaUniversity of LiverpoolBeijing University of Posts and Telecommunications

Nanjing UniversityUniversity of LjubljanaUniversity of LiverpoolBeijing University of Posts and Telecommunications Karlsruhe Institute of TechnologyGwangju Institute of Science and TechnologyDalian Maritime UniversityNational Cheng Kung UniversityMichigan Technological UniversityYancheng Institute of TechnologyHong Kong University of Science and Technology (Guangzhou)Queensland University of TechnologyXi'an Jiaotong Liverpool UniversityUniversity of TuebingenUniversity of Zagreb Faculty of Electrical Engineering and ComputingLuxonisKLE Technological UniversityShield AIFraunhofer IOSBLOOKOUT

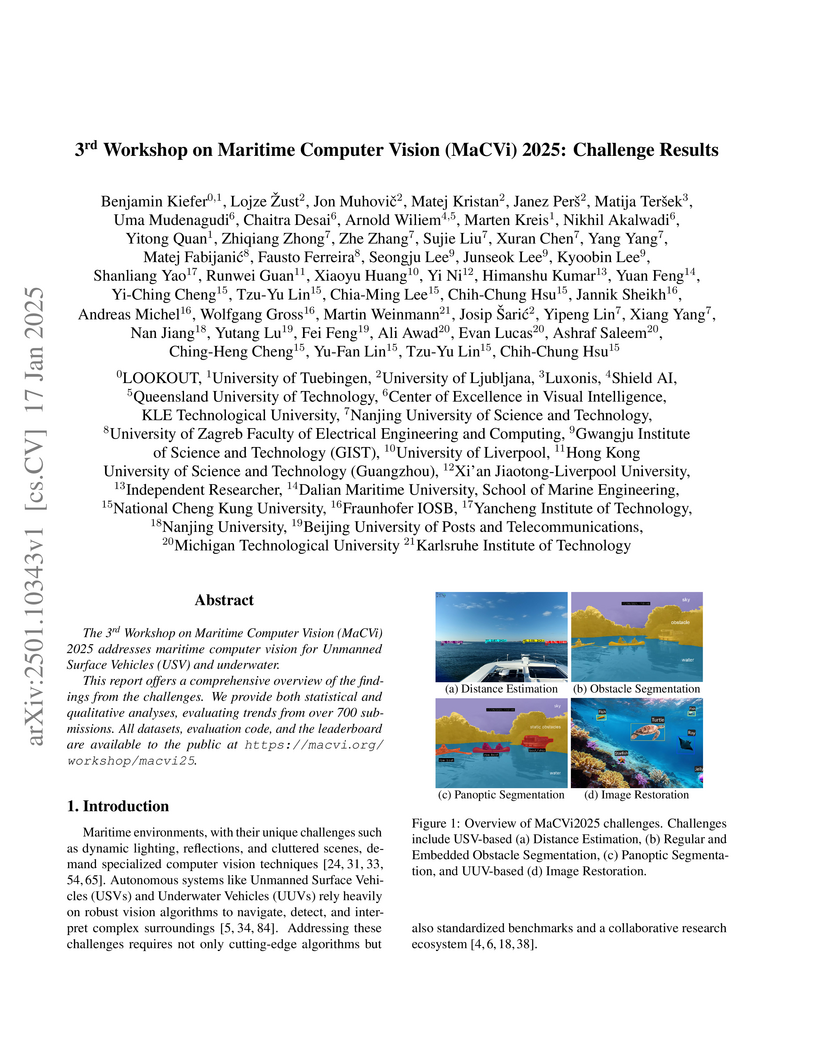

Karlsruhe Institute of TechnologyGwangju Institute of Science and TechnologyDalian Maritime UniversityNational Cheng Kung UniversityMichigan Technological UniversityYancheng Institute of TechnologyHong Kong University of Science and Technology (Guangzhou)Queensland University of TechnologyXi'an Jiaotong Liverpool UniversityUniversity of TuebingenUniversity of Zagreb Faculty of Electrical Engineering and ComputingLuxonisKLE Technological UniversityShield AIFraunhofer IOSBLOOKOUTThe 3rd Workshop on Maritime Computer Vision (MaCVi) 2025 addresses maritime computer vision for Unmanned Surface Vehicles (USV) and underwater. This report offers a comprehensive overview of the findings from the challenges. We provide both statistical and qualitative analyses, evaluating trends from over 700 submissions. All datasets, evaluation code, and the leaderboard are available to the public at this https URL.

Current logo retrieval research focuses on closed set scenarios. We argue

that the logo domain is too large for this strategy and requires an open set

approach. To foster research in this direction, a large-scale logo dataset,

called Logos in the Wild, is collected and released to the public. A typical

open set logo retrieval application is, for example, assessing the

effectiveness of advertisement in sports event broadcasts. Given a query sample

in shape of a logo image, the task is to find all further occurrences of this

logo in a set of images or videos. Currently, common logo retrieval approaches

are unsuitable for this task because of their closed world assumption. Thus, an

open set logo retrieval method is proposed in this work which allows searching

for previously unseen logos by a single query sample. A two stage concept with

separate logo detection and comparison is proposed where both modules are based

on task specific CNNs. If trained with the Logos in the Wild data, significant

performance improvements are observed, especially compared with

state-of-the-art closed set approaches.

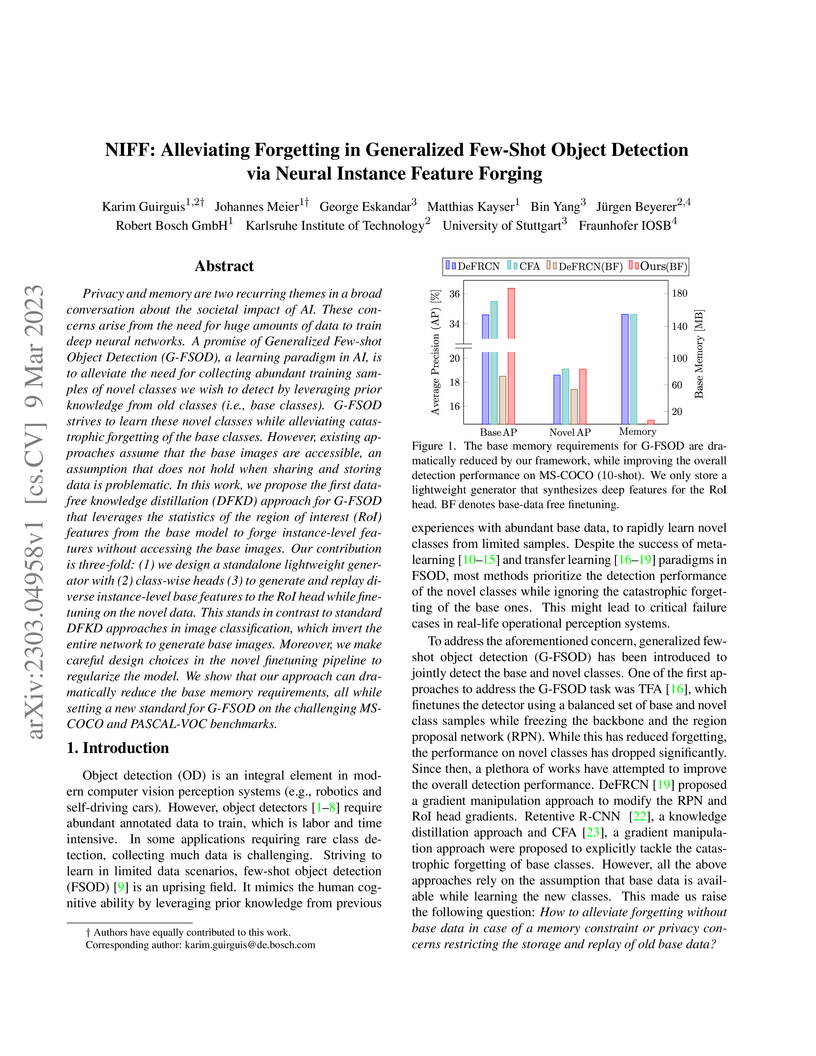

Privacy and memory are two recurring themes in a broad conversation about the

societal impact of AI. These concerns arise from the need for huge amounts of

data to train deep neural networks. A promise of Generalized Few-shot Object

Detection (G-FSOD), a learning paradigm in AI, is to alleviate the need for

collecting abundant training samples of novel classes we wish to detect by

leveraging prior knowledge from old classes (i.e., base classes). G-FSOD

strives to learn these novel classes while alleviating catastrophic forgetting

of the base classes. However, existing approaches assume that the base images

are accessible, an assumption that does not hold when sharing and storing data

is problematic. In this work, we propose the first data-free knowledge

distillation (DFKD) approach for G-FSOD that leverages the statistics of the

region of interest (RoI) features from the base model to forge instance-level

features without accessing the base images. Our contribution is three-fold: (1)

we design a standalone lightweight generator with (2) class-wise heads (3) to

generate and replay diverse instance-level base features to the RoI head while

finetuning on the novel data. This stands in contrast to standard DFKD

approaches in image classification, which invert the entire network to generate

base images. Moreover, we make careful design choices in the novel finetuning

pipeline to regularize the model. We show that our approach can dramatically

reduce the base memory requirements, all while setting a new standard for

G-FSOD on the challenging MS-COCO and PASCAL-VOC benchmarks.

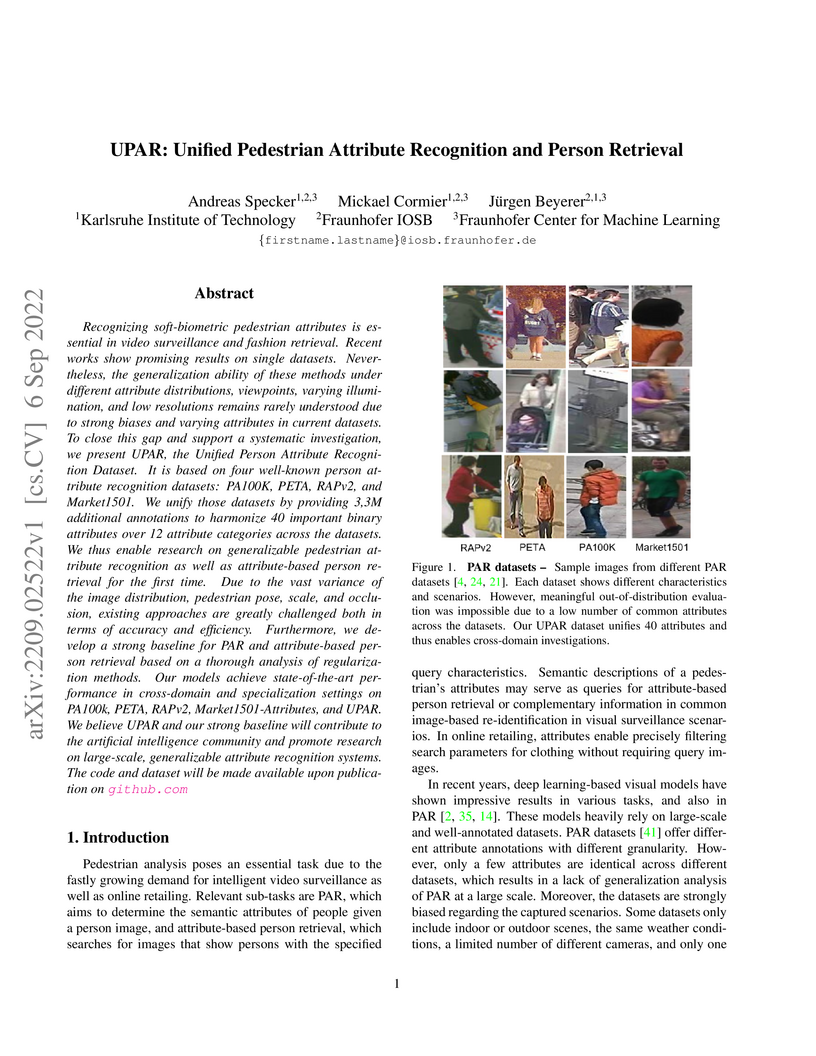

Recognizing soft-biometric pedestrian attributes is essential in video surveillance and fashion retrieval. Recent works show promising results on single datasets. Nevertheless, the generalization ability of these methods under different attribute distributions, viewpoints, varying illumination, and low resolutions remains rarely understood due to strong biases and varying attributes in current datasets. To close this gap and support a systematic investigation, we present UPAR, the Unified Person Attribute Recognition Dataset. It is based on four well-known person attribute recognition datasets: PA100K, PETA, RAPv2, and Market1501. We unify those datasets by providing 3,3M additional annotations to harmonize 40 important binary attributes over 12 attribute categories across the datasets. We thus enable research on generalizable pedestrian attribute recognition as well as attribute-based person retrieval for the first time. Due to the vast variance of the image distribution, pedestrian pose, scale, and occlusion, existing approaches are greatly challenged both in terms of accuracy and efficiency. Furthermore, we develop a strong baseline for PAR and attribute-based person retrieval based on a thorough analysis of regularization methods. Our models achieve state-of-the-art performance in cross-domain and specialization settings on PA100k, PETA, RAPv2, Market1501-Attributes, and UPAR. We believe UPAR and our strong baseline will contribute to the artificial intelligence community and promote research on large-scale, generalizable attribute recognition systems.

Object tracking is an essential task for autonomous systems. With the

advancement of 3D sensors, these systems can better perceive their surroundings

using effective 3D Extended Object Tracking (EOT) methods. Based on the

observation that common road users are symmetrical on the right and left sides

in the traveling direction, we focus on the side view profile of the object. In

order to leverage of the development in 2D EOT and balance the number of

parameters of a shape model in the tracking algorithms, we propose a method for

3D extended object tracking (EOT) by describing the side view profile of the

object with B-spline curves and forming an extrusion to obtain a 3D extent. The

use of B-spline curves exploits their flexible representation power by allowing

the control points to move freely. The algorithm is developed into an Extended

Kalman Filter (EKF). For a through evaluation of this method, we use simulated

traffic scenario of different vehicle models and realworld open dataset

containing both radar and lidar data.

Research in the field of Continual Semantic Segmentation is mainly

investigating novel learning algorithms to overcome catastrophic forgetting of

neural networks. Most recent publications have focused on improving learning

algorithms without distinguishing effects caused by the choice of neural

architecture.Therefore, we study how the choice of neural network architecture

affects catastrophic forgetting in class- and domain-incremental semantic

segmentation. Specifically, we compare the well-researched CNNs to recently

proposed Transformers and Hybrid architectures, as well as the impact of the

choice of novel normalization layers and different decoder heads. We find that

traditional CNNs like ResNet have high plasticity but low stability, while

transformer architectures are much more stable. When the inductive biases of

CNN architectures are combined with transformers in hybrid architectures, it

leads to higher plasticity and stability. The stability of these models can be

explained by their ability to learn general features that are robust against

distribution shifts. Experiments with different normalization layers show that

Continual Normalization achieves the best trade-off in terms of adaptability

and stability of the model. In the class-incremental setting, the choice of the

normalization layer has much less impact. Our experiments suggest that the

right choice of architecture can significantly reduce forgetting even with

naive fine-tuning and confirm that for real-world applications, the

architecture is an important factor in designing a continual learning model.

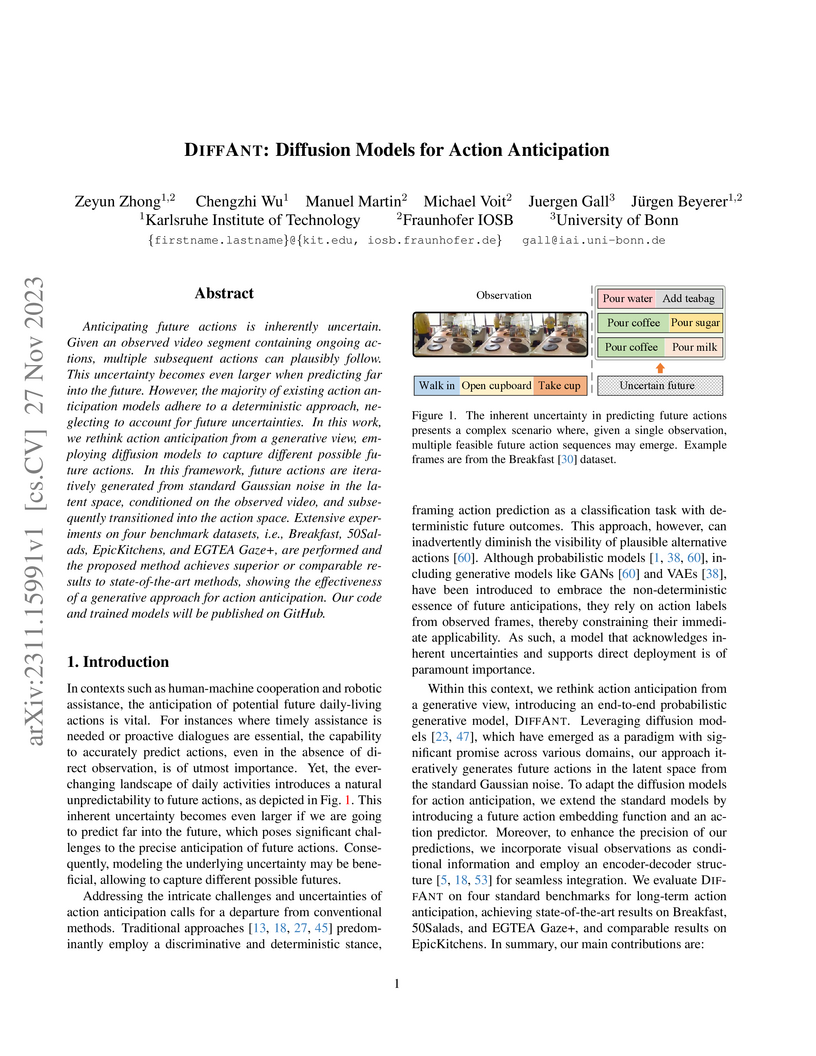

Anticipating future actions is inherently uncertain. Given an observed video

segment containing ongoing actions, multiple subsequent actions can plausibly

follow. This uncertainty becomes even larger when predicting far into the

future. However, the majority of existing action anticipation models adhere to

a deterministic approach, neglecting to account for future uncertainties. In

this work, we rethink action anticipation from a generative view, employing

diffusion models to capture different possible future actions. In this

framework, future actions are iteratively generated from standard Gaussian

noise in the latent space, conditioned on the observed video, and subsequently

transitioned into the action space. Extensive experiments on four benchmark

datasets, i.e., Breakfast, 50Salads, EpicKitchens, and EGTEA Gaze+, are

performed and the proposed method achieves superior or comparable results to

state-of-the-art methods, showing the effectiveness of a generative approach

for action anticipation. Our code and trained models will be published on

GitHub.

03 Jul 2024

Intelligent Transportation Systems (ITS) can benefit from roadside 4D mmWave radar sensors for large-scale traffic monitoring due to their weatherproof functionality, long sensing range and low manufacturing cost. However, the localization method using external measurement devices has limitations in urban environments. Furthermore, if the sensor mount exhibits changes due to environmental influences, they cannot be corrected when the measurement is performed only during the installation. In this paper, we propose self-localization of roadside radar data using Extended Object Tracking (EOT). The method analyses both the tracked trajectories of the vehicles observed by the sensor and the aerial laser scan of city streets, assigns labels of driving behaviors such as "straight ahead", "left turn", "right turn" to trajectory sections and road segments, and performs Semantic Iterative Closest Points (SICP) algorithm to register the point cloud. The method exploits the result from a down stream task -- object tracking -- for localization. We demonstrate high accuracy in the sub-meter range along with very low orientation error. The method also shows good data efficiency. The evaluation is done in both simulation and real-world tests.

The field of continual deep learning is an emerging field and a lot of progress has been made. However, concurrently most of the approaches are only tested on the task of image classification, which is not relevant in the field of intelligent vehicles. Only recently approaches for class-incremental semantic segmentation were proposed. However, all of those approaches are based on some form of knowledge distillation. At the moment there are no investigations on replay-based approaches that are commonly used for object recognition in a continual setting. At the same time while unsupervised domain adaption for semantic segmentation gained a lot of traction, investigations regarding domain-incremental learning in an continual setting is not well-studied. Therefore, the goal of our work is to evaluate and adapt established solutions for continual object recognition to the task of semantic segmentation and to provide baseline methods and evaluation protocols for the task of continual semantic segmentation. We firstly introduce evaluation protocols for the class- and domain-incremental segmentation and analyze selected approaches. We show that the nature of the task of semantic segmentation changes which methods are most effective in mitigating forgetting compared to image classification. Especially, in class-incremental learning knowledge distillation proves to be a vital tool, whereas in domain-incremental learning replay methods are the most effective method.

With the technological advancements of aerial imagery and accurate 3d reconstruction of urban environments, more and more attention has been paid to the automated analyses of urban areas. In our work, we examine two important aspects that allow live analysis of building structures in city models given oblique aerial imagery, namely automatic building extraction with convolutional neural networks (CNNs) and selective real-time depth estimation from aerial imagery. We use transfer learning to train the Faster R-CNN method for real-time deep object detection, by combining a large ground-based dataset for urban scene understanding with a smaller number of images from an aerial dataset. We achieve an average precision (AP) of about 80% for the task of building extraction on a selected evaluation dataset. Our evaluation focuses on both dataset-specific learning and transfer learning. Furthermore, we present an algorithm that allows for multi-view depth estimation from aerial imagery in real-time. We adopt the semi-global matching (SGM) optimization strategy to preserve sharp edges at object boundaries. In combination with the Faster R-CNN, it allows a selective reconstruction of buildings, identified with regions of interest (RoIs), from oblique aerial imagery.

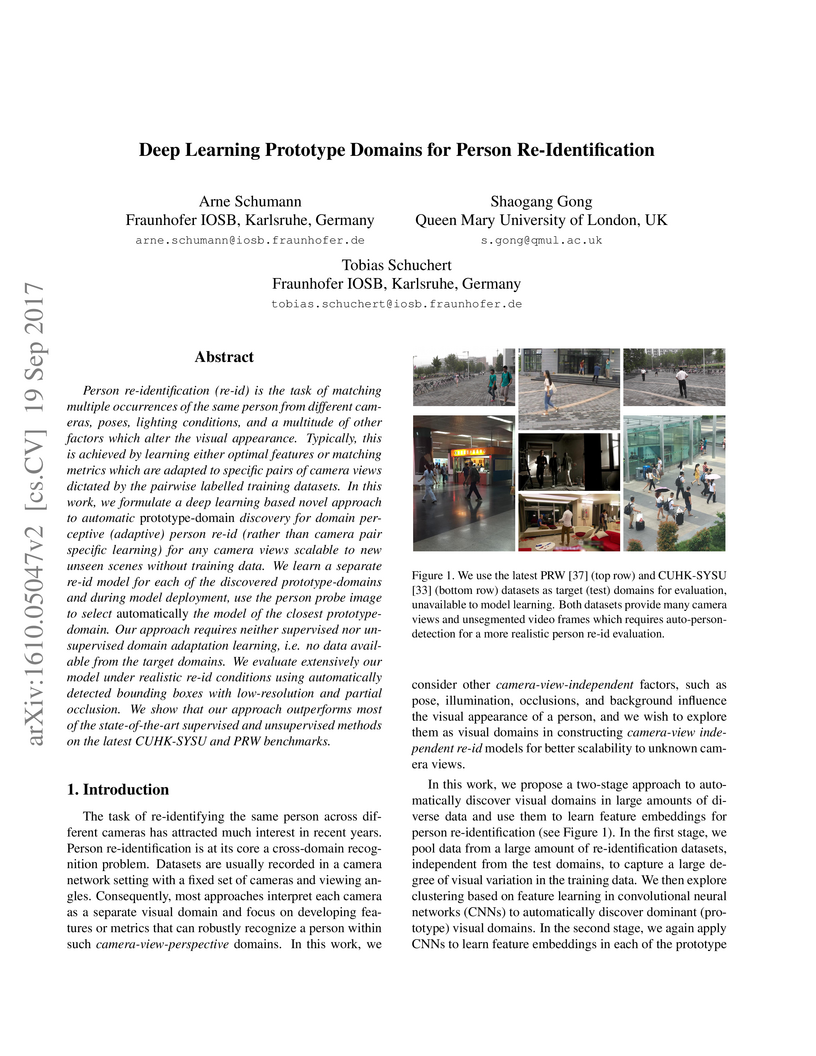

Person re-identification (re-id) is the task of matching multiple occurrences of the same person from different cameras, poses, lighting conditions, and a multitude of other factors which alter the visual appearance. Typically, this is achieved by learning either optimal features or matching metrics which are adapted to specific pairs of camera views dictated by the pairwise labelled training datasets. In this work, we formulate a deep learning based novel approach to automatic prototype-domain discovery for domain perceptive (adaptive) person re-id (rather than camera pair specific learning) for any camera views scalable to new unseen scenes without training data. We learn a separate re-id model for each of the discovered prototype-domains and during model deployment, use the person probe image to select automatically the model of the closest prototype domain. Our approach requires neither supervised nor unsupervised domain adaptation learning, i.e. no data available from the target domains. We evaluate extensively our model under realistic re-id conditions using automatically detected bounding boxes with low-resolution and partial occlusion. We show that our approach outperforms most of the state-of-the-art supervised and unsupervised methods on the latest CUHK-SYSU and PRW benchmarks.

There are no more papers matching your filters at the moment.