Galbot

DreamVLA, a Vision-Language-Action (VLA) model from a collaboration including Shanghai Jiao Tong University and Tsinghua University, enhances robot manipulation by forecasting comprehensive future world knowledge, including dynamic regions, depth, and semantics. It achieves this by integrating these predictions into a unified transformer, leading to improved generalization and higher success rates across various robotic tasks while maintaining efficient inference.

16 Sep 2025

NavFoM, an embodied navigation foundation model, achieves cross-task and cross-embodiment generalization by leveraging a dual-branch architecture that integrates multimodal inputs with an LLM. This model demonstrates state-of-the-art or competitive performance across seven diverse benchmarks, including VLN, object searching, tracking, and autonomous driving, achieving a 5.6% increase in success rate on single-view VLN-CE RxR and a 78% success rate in real-world VLN tasks.

30 Sep 2025

A two-stage Reinforcement Learning framework for humanoids, called Any2Track, enables robust motion tracking across diverse, highly dynamic, and contact-rich movements, coupled with online adaptation to real-world disturbances. The system demonstrates superior tracking quality and stable operation on both simulation and a physical Unitree G1 robot, with successful zero-shot transfer.

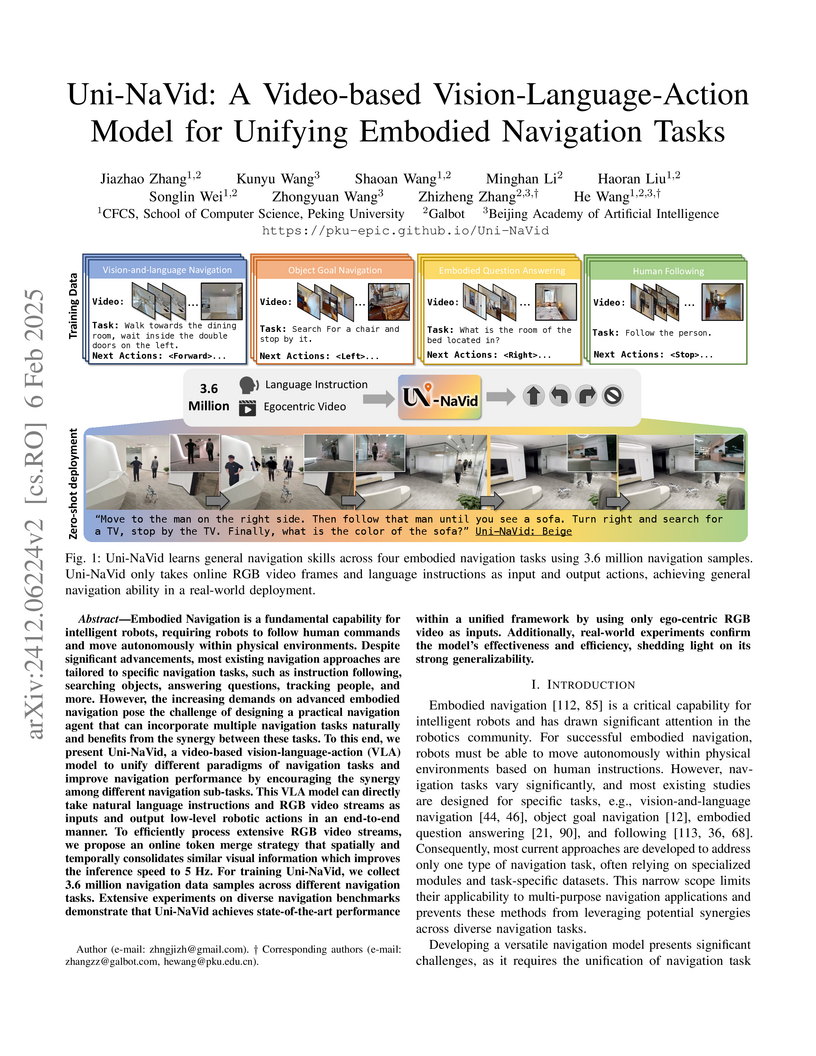

Uni-NaVid presents a unified Vision-Language-Action (VLA) model capable of addressing four distinct embodied navigation tasks by processing ego-centric video and natural language instructions. The model employs a novel online visual token merging mechanism to achieve a 5 Hz inference speed, demonstrating state-of-the-art performance in simulation and strong real-world generalization.

27 Aug 2025

GraspVLA, a grasping foundation model from Galbot and Peking University, demonstrates robust zero-shot sim-to-real transfer and open-vocabulary grasping by pre-training exclusively on SynGrasp-1B, a billion-scale synthetic action dataset, achieving around 90% success rates on diverse real-world object categories.

Researchers from Peking University, BAAI, and Galbot develop TrackVLA, a unified Vision-Language-Action model that integrates target recognition and trajectory planning within a single LLM backbone for embodied visual tracking, achieving 10 FPS inference speed while outperforming existing methods on zero-shot tracking benchmarks and demonstrating robust sim-to-real transfer on a quadruped robot through joint training on 855K tracking samples and 855K video question-answering samples using an anchor-based diffusion action model that generates continuous waypoint trajectories from natural language instructions.

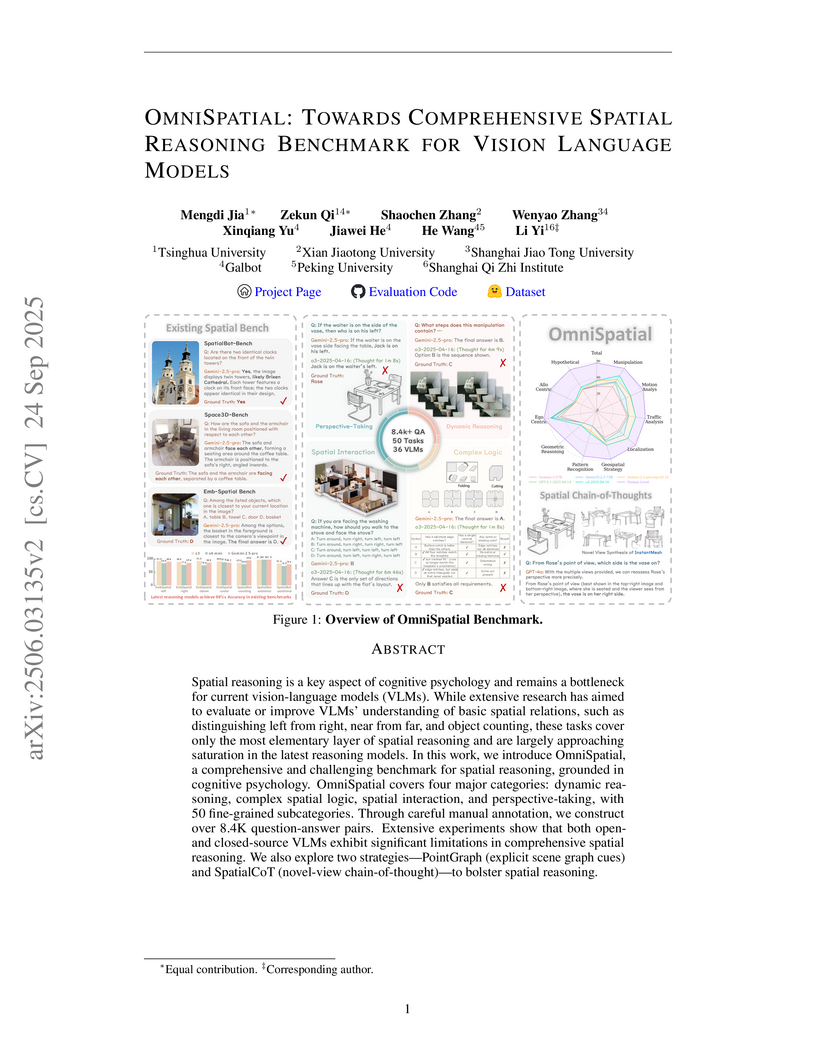

A new benchmark, OmniSpatial, offers a comprehensive evaluation of Vision-Language Models' spatial reasoning by integrating principles from cognitive psychology into 50 fine-grained subtasks. The benchmark reveals that state-of-the-art VLMs perform over 30 percentage points below human accuracy, particularly struggling with complex spatial logic and perspective-taking.

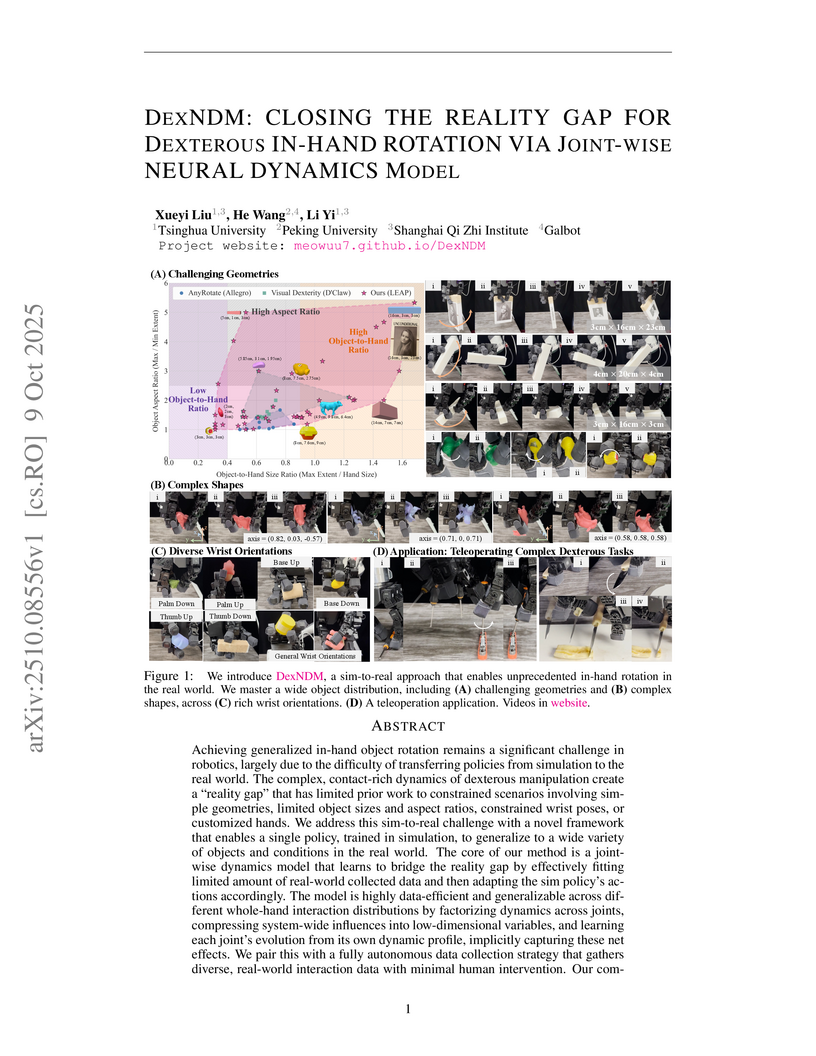

DexNDM introduces a sim-to-real framework that leverages a joint-wise neural dynamics model and autonomous data collection to achieve generalized in-hand object rotation. This approach enables robust manipulation of diverse objects, including those with challenging geometries, under various wrist orientations and rotation axes in real-world scenarios.

08 Oct 2025

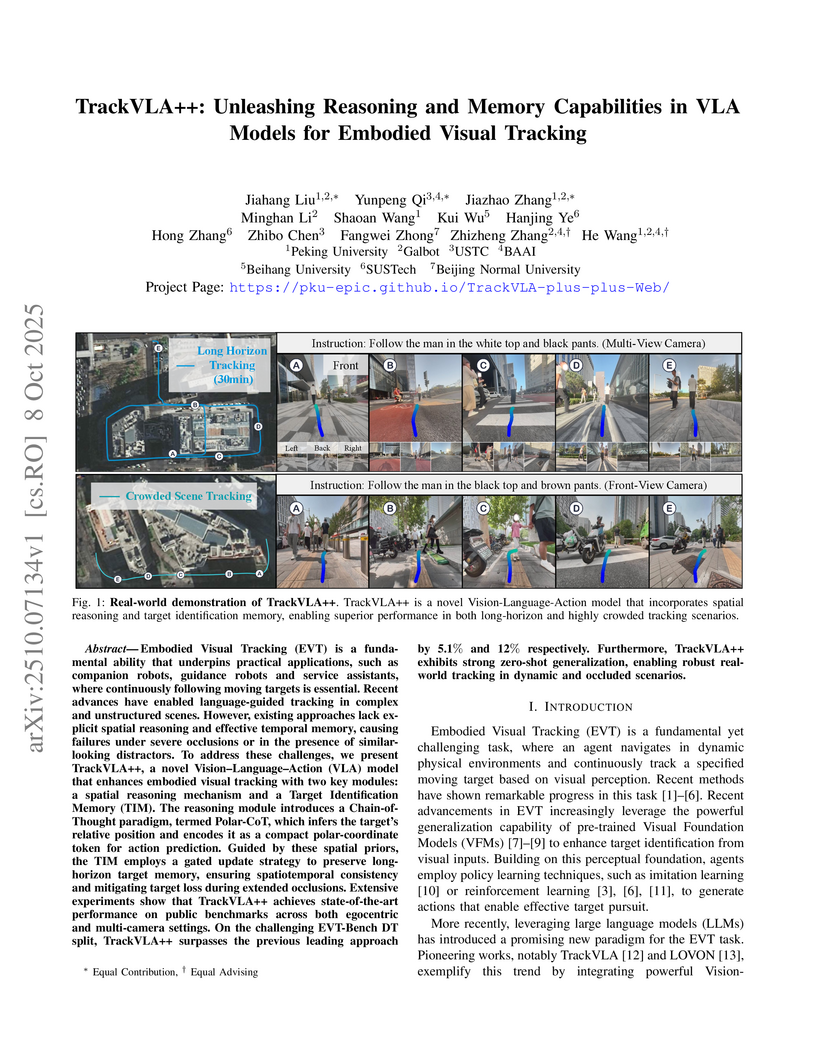

TrackVLA++ advances embodied visual tracking by integrating an efficient spatial reasoning mechanism and a robust, confidence-gated long-term memory into Vision-Language-Action models. It achieves state-of-the-art performance on multiple simulation benchmarks and demonstrates improved real-world tracking robustness against occlusions and distractors.

NaVid is a video-based Vision-Language Model that enables navigation in continuous environments using only monocular RGB video streams. It achieves state-of-the-art performance on R2R Val-Unseen with 35.9% SPL and demonstrates a 66% success rate in real-world scenarios, eliminating reliance on depth, odometry, or maps.

While spatial reasoning has made progress in object localization relationships, it often overlooks object orientation-a key factor in 6-DoF fine-grained manipulation. Traditional pose representations rely on pre-defined frames or templates, limiting generalization and semantic grounding. In this paper, we introduce the concept of semantic orientation, which defines object orientations using natural language in a reference-frame-free manner (e.g., the "plug-in" direction of a USB or the "handle" direction of a cup). To support this, we construct OrienText300K, a large-scale dataset of 3D objects annotated with semantic orientations, and develop PointSO, a general model for zero-shot semantic orientation prediction. By integrating semantic orientation into VLM agents, our SoFar framework enables 6-DoF spatial reasoning and generates robotic actions. Extensive experiments demonstrated the effectiveness and generalization of our SoFar, e.g., zero-shot 48.7% successful rate on Open6DOR and zero-shot 74.9% successful rate on SIMPLER-Env.

A new framework introduces DexGraspNet 3.0, the largest synthetic dataset for dexterous grasping with 170 million semantically-annotated poses, and DexVLG, a large vision-language-grasp model. The model predicts language-aligned dexterous grasp poses from single-view RGBD input, achieving 80% success and 75% part accuracy in real-world experiments.

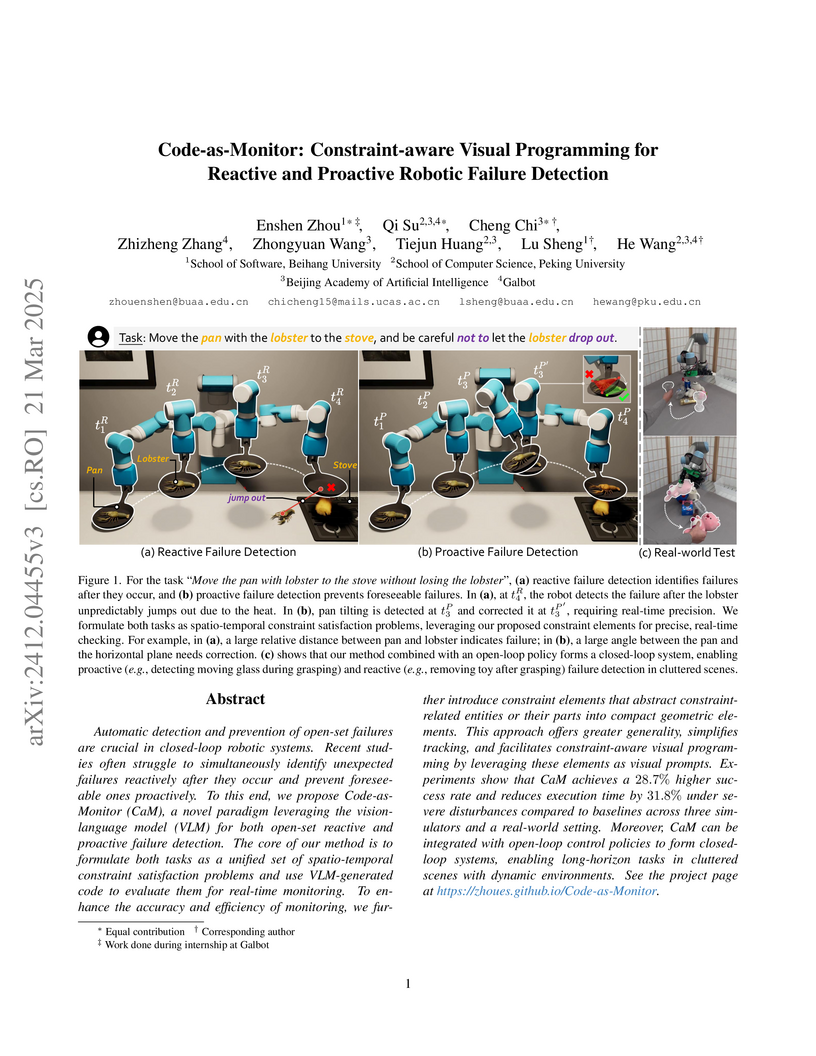

The Code-as-Monitor (CaM) framework, developed by researchers from Beihang University, Peking University, BAAI, and Galbot, introduces a unified approach for reactive and proactive robotic failure detection. It uses Vision-Language Models to generate executable monitor code based on dynamically extracted "constraint elements" for real-time spatio-temporal constraint checking, achieving improved task success rates (e.g., 17.5% higher in "Stack in Order") and reduced execution times (e.g., 34.8% less in Omnigibson) across diverse robotic tasks.

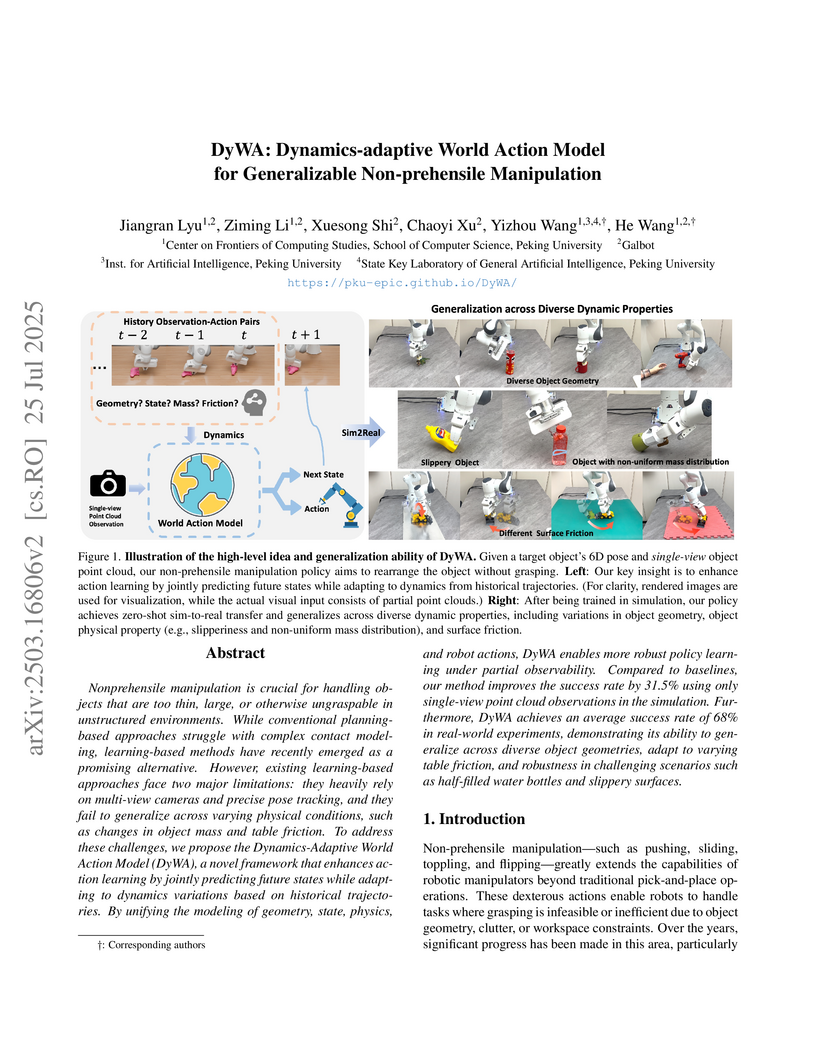

A framework from Peking University and Galbot, DyWA, enables generalizable non-prehensile robotic manipulation using only a single-view camera and without explicit pose tracking. It achieves an 82.2% success rate on seen objects and 75.0% on unseen objects in challenging simulation scenarios, while adapting to varying physical properties like mass and friction.

UrbanVLA introduces a Vision-Language-Action (VLA) model capable of interpreting high-level route instructions from consumer navigation apps and visual observations to perform long-horizon urban micromobility tasks. The model achieves a 97% success rate in unseen simulated environments and demonstrates robust real-world navigation, including social compliance and obstacle avoidance, on a Unitree Go2 robot.

Legged robots with advanced manipulation capabilities have the potential to

significantly improve household duties and urban maintenance. Despite

considerable progress in developing robust locomotion and precise manipulation

methods, seamlessly integrating these into cohesive whole-body control for

real-world applications remains challenging. In this paper, we present a

modular framework for robust and generalizable whole-body loco-manipulation

controller based on a single arm-mounted camera. By using reinforcement

learning (RL), we enable a robust low-level policy for command execution over 5

dimensions (5D) and a grasp-aware high-level policy guided by a novel metric,

Generalized Oriented Reachability Map (GORM). The proposed system achieves

state-of-the-art one-time grasping accuracy of 89% in the real world, including

challenging tasks such as grasping transparent objects. Through extensive

simulations and real-world experiments, we demonstrate that our system can

effectively manage a large workspace, from floor level to above body height,

and perform diverse whole-body loco-manipulation tasks.

Researchers introduce DexGraspNet 2.0, a large-scale benchmark comprising 427 million dexterous grasp annotations for cluttered scenes. They also present a two-stage generative grasping method that achieves robust grasping of diverse objects in cluttered environments, demonstrating successful zero-shot sim-to-real transfer.

Generalizable dexterous grasping with suitable grasp types is a fundamental skill for intelligent robots. Developing such skills requires a large-scale and high-quality dataset that covers numerous grasp types (i.e., at least those categorized by the GRASP taxonomy), but collecting such data is extremely challenging. Existing automatic grasp synthesis methods are often limited to specific grasp types or object categories, hindering scalability. This work proposes an efficient pipeline capable of synthesizing contact-rich, penetration-free, and physically plausible grasps for any grasp type, object, and articulated hand. Starting from a single human-annotated template for each hand and grasp type, our pipeline tackles the complicated synthesis problem with two stages: optimize the object to fit the hand template first, and then locally refine the hand to fit the object in simulation. To validate the synthesized grasps, we introduce a contact-aware control strategy that allows the hand to apply the appropriate force at each contact point to the object. Those validated grasps can also be used as new grasp templates to facilitate future synthesis. Experiments show that our method significantly outperforms previous type-unaware grasp synthesis baselines in simulation. Using our algorithm, we construct a dataset containing 10.7k objects and 9.5M grasps, covering 31 grasp types in the GRASP taxonomy. Finally, we train a type-conditional generative model that successfully performs the desired grasp type from single-view object point clouds, achieving an 82.3% success rate in real-world experiments. Project page: this https URL.

03 Sep 2025

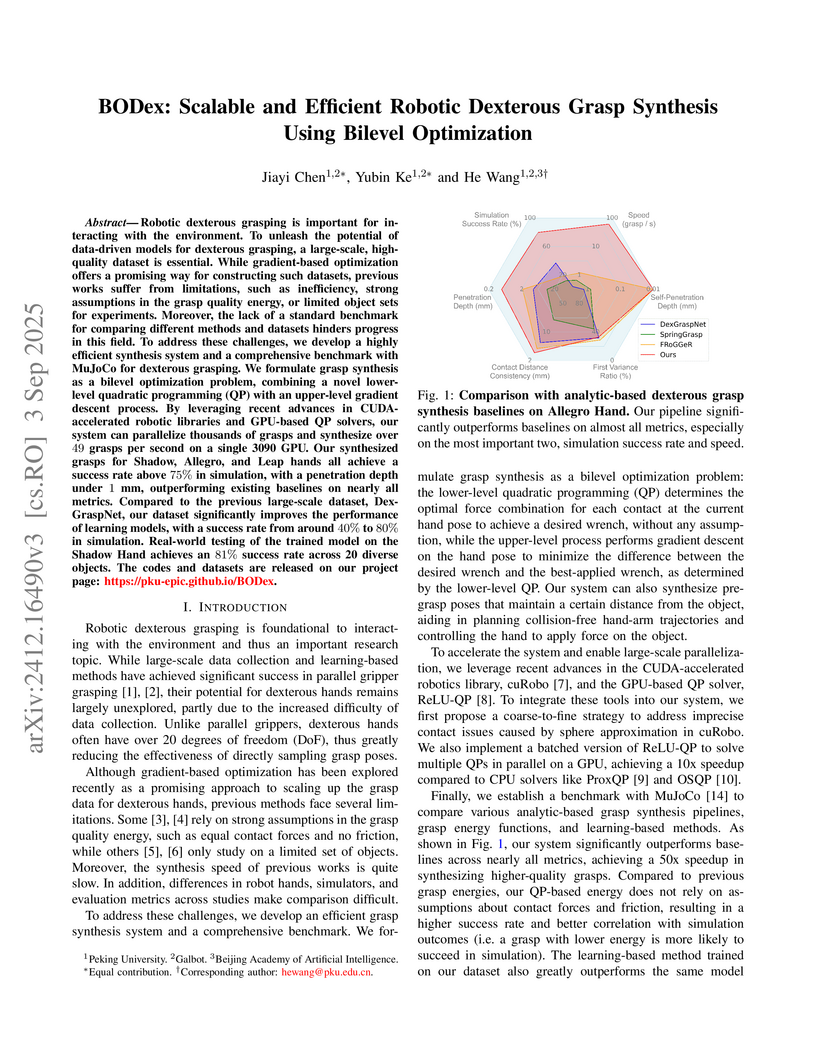

BODex presents a GPU-accelerated bilevel optimization framework for synthesizing high-quality, large-scale dexterous grasps, achieving over 49 grasps per second and a 50x speedup over prior methods. This approach enables learning models trained on its dataset to reach an 80.1% simulation success rate and an 81% real-world success rate on a Shadow Hand.

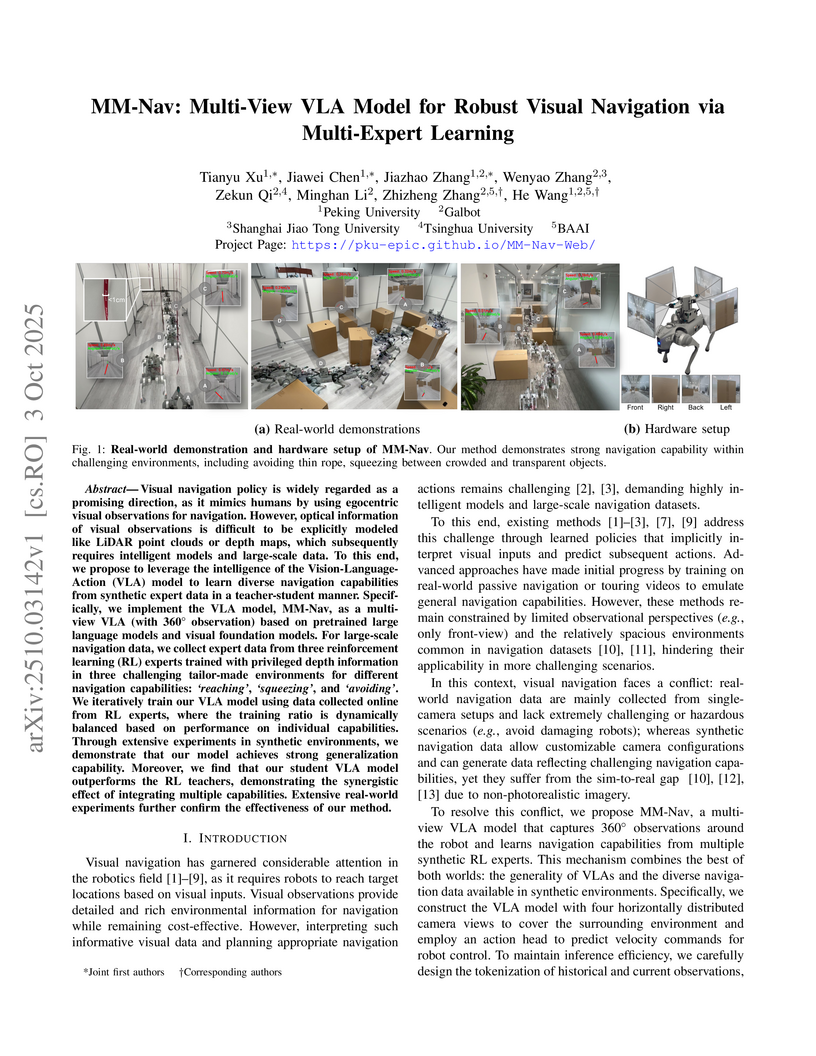

Visual navigation policy is widely regarded as a promising direction, as it mimics humans by using egocentric visual observations for navigation. However, optical information of visual observations is difficult to be explicitly modeled like LiDAR point clouds or depth maps, which subsequently requires intelligent models and large-scale data. To this end, we propose to leverage the intelligence of the Vision-Language-Action (VLA) model to learn diverse navigation capabilities from synthetic expert data in a teacher-student manner. Specifically, we implement the VLA model, MM-Nav, as a multi-view VLA (with 360 observations) based on pretrained large language models and visual foundation models. For large-scale navigation data, we collect expert data from three reinforcement learning (RL) experts trained with privileged depth information in three challenging tailor-made environments for different navigation capabilities: reaching, squeezing, and avoiding. We iteratively train our VLA model using data collected online from RL experts, where the training ratio is dynamically balanced based on performance on individual capabilities. Through extensive experiments in synthetic environments, we demonstrate that our model achieves strong generalization capability. Moreover, we find that our student VLA model outperforms the RL teachers, demonstrating the synergistic effect of integrating multiple capabilities. Extensive real-world experiments further confirm the effectiveness of our method.

There are no more papers matching your filters at the moment.