KuaiShou Inc.

KuaiShou Inc.26 Feb 2025

Researchers at KuaiShou Inc. developed OneRec, a unified generative recommender system designed to replace traditional retrieve-and-rank pipelines by leveraging a Mixture-of-Experts architecture and iterative preference alignment. Online A/B tests on Kuaishou's platform showed OneRec-1B+IPA increased total watch time by 1.68% and average view duration by 6.56%.

11 Nov 2025

Kuaishou Inc. developed OneRec-Think, a generative recommendation framework that integrates explicit, in-text reasoning into large language models to provide transparent and accurate recommendations. The framework achieved a 0.159% gain in APP Stay Time during A/B testing on Kuaishou's platform and outperformed state-of-the-art models on public benchmarks.

04 Jun 2025

ETEGRec is a generative recommender framework that enables end-to-end learning of item tokenization alongside the recommendation task. It addresses the decoupling issue in existing models by integrating an item tokenizer and a generative recommender with specific alignment objectives and an alternating optimization strategy, consistently outperforming traditional and generative baselines on various Amazon datasets.

26 Feb 2025

Recently, generative retrieval-based recommendation systems have emerged as a promising paradigm. However, most modern recommender systems adopt a retrieve-and-rank strategy, where the generative model functions only as a selector during the retrieval stage. In this paper, we propose OneRec, which replaces the cascaded learning framework with a unified generative model. To the best of our knowledge, this is the first end-to-end generative model that significantly surpasses current complex and well-designed recommender systems in real-world scenarios. Specifically, OneRec includes: 1) an encoder-decoder structure, which encodes the user's historical behavior sequences and gradually decodes the videos that the user may be interested in. We adopt sparse Mixture-of-Experts (MoE) to scale model capacity without proportionally increasing computational FLOPs. 2) a session-wise generation approach. In contrast to traditional next-item prediction, we propose a session-wise generation, which is more elegant and contextually coherent than point-by-point generation that relies on hand-crafted rules to properly combine the generated results. 3) an Iterative Preference Alignment module combined with Direct Preference Optimization (DPO) to enhance the quality of the generated results. Unlike DPO in NLP, a recommendation system typically has only one opportunity to display results for each user's browsing request, making it impossible to obtain positive and negative samples simultaneously. To address this limitation, We design a reward model to simulate user generation and customize the sampling strategy. Extensive experiments have demonstrated that a limited number of DPO samples can align user interest preferences and significantly improve the quality of generated results. We deployed OneRec in the main scene of Kuaishou, achieving a 1.6\% increase in watch-time, which is a substantial improvement.

08 Nov 2022

Short video applications have attracted billions of users in recent years,

fulfilling their various needs with diverse content. Users usually watch short

videos on many topics on mobile devices in a short period of time, and give

explicit or implicit feedback very quickly to the short videos they watch. The

recommender system needs to perceive users' preferences in real-time in order

to satisfy their changing interests. Traditionally, recommender systems

deployed at server side return a ranked list of videos for each request from

client. Thus it cannot adjust the recommendation results according to the

user's real-time feedback before the next request. Due to client-server

transmitting latency, it is also unable to make immediate use of users'

real-time feedback. However, as users continue to watch videos and feedback,

the changing context leads the ranking of the server-side recommendation system

inaccurate. In this paper, we propose to deploy a short video recommendation

framework on mobile devices to solve these problems. Specifically, we design

and deploy a tiny on-device ranking model to enable real-time re-ranking of

server-side recommendation results. We improve its prediction accuracy by

exploiting users' real-time feedback of watched videos and client-specific

real-time features. With more accurate predictions, we further consider

interactions among candidate videos, and propose a context-aware re-ranking

method based on adaptive beam search. The framework has been deployed on

Kuaishou, a billion-user scale short video application, and improved effective

view, like and follow by 1.28%, 8.22% and 13.6% respectively.

Researchers from the University of Science and Technology of China and Kuaishou Inc. developed DimeRec, a framework that integrates generative diffusion models with sequential recommendation by separating user interest extraction from recommendation generation. This approach, which includes a novel Geodesic Random Walk, yielded improvements of 10-15% in Hit Rate and was successfully deployed in a real-world system, increasing user engagement and recommendation diversity.

24 Sep 2025

User behavior sequences in search systems resemble "interest fossils", capturing genuine intent yet eroded by exposure bias, category drift, and contextual noise. Current methods predominantly follow an "identify-aggregate" paradigm, assuming sequences immutably reflect user preferences while overlooking the organic entanglement of noise and genuine interest. Moreover, they output static, context-agnostic representations, failing to adapt to dynamic intent shifts under varying Query-User-Item-Context conditions.

To resolve this dual challenge, we propose the Contextual Diffusion Purifier (CDP). By treating category-filtered behaviors as "contaminated observations", CDP employs a forward noising and conditional reverse denoising process guided by cross-interaction features (Query x User x Item x Context), controllably generating pure, context-aware interest representations that dynamically evolve with scenarios. Extensive offline/online experiments demonstrate the superiority of CDP over state-of-the-art methods.

09 Apr 2024

KuaiShou Inc. developed EM3, an industrial framework enabling end-to-end training of multimodal and ranking models for large-scale recommender systems. This approach yielded offline AUC gains of up to 0.256% on e-commerce CTR and 0.242% on advertising CVR, along with significant online A/B test improvements like +3.22% GMV and +2.64% RPM, while also increasing cold-start item impressions by +2.07%.

14 Aug 2024

The diversity of recommendation is equally crucial as accuracy in improving user experience. Existing studies, e.g., Determinantal Point Process (DPP) and Maximal Marginal Relevance (MMR), employ a greedy paradigm to iteratively select items that optimize both accuracy and diversity. However, prior methods typically exhibit quadratic complexity, limiting their applications to the re-ranking stage and are not applicable to other recommendation stages with a larger pool of candidate items, such as the pre-ranking and ranking stages. In this paper, we propose Contextual Distillation Model (CDM), an efficient recommendation model that addresses diversification, suitable for the deployment in all stages of industrial recommendation pipelines. Specifically, CDM utilizes the candidate items in the same user request as context to enhance the diversification of the results. We propose a contrastive context encoder that employs attention mechanisms to model both positive and negative contexts. For the training of CDM, we compare each target item with its context embedding and utilize the knowledge distillation framework to learn the win probability of each target item under the MMR algorithm, where the teacher is derived from MMR outputs. During inference, ranking is performed through a linear combination of the recommendation and student model scores, ensuring both diversity and efficiency. We perform offline evaluations on two industrial datasets and conduct online A/B test of CDM on the short-video platform KuaiShou. The considerable enhancements observed in both recommendation quality and diversity, as shown by metrics, provide strong superiority for the effectiveness of CDM.

Graph Neural Networks (GNNs) have demonstrated promising results on exploiting node representations for many downstream tasks through supervised end-to-end training. To deal with the widespread label scarcity issue in real-world applications, Graph Contrastive Learning (GCL) is leveraged to train GNNs with limited or even no labels by maximizing the mutual information between nodes in its augmented views generated from the original graph. However, the distribution of graphs remains unconsidered in view generation, resulting in the ignorance of unseen edges in most existing literature, which is empirically shown to be able to improve GCL's performance in our experiments. To this end, we propose to incorporate graph generative adversarial networks (GANs) to learn the distribution of views for GCL, in order to i) automatically capture the characteristic of graphs for augmentations, and ii) jointly train the graph GAN model and the GCL model. Specifically, we present GACN, a novel Generative Adversarial Contrastive learning Network for graph representation learning. GACN develops a view generator and a view discriminator to generate augmented views automatically in an adversarial style. Then, GACN leverages these views to train a GNN encoder with two carefully designed self-supervised learning losses, including the graph contrastive loss and the Bayesian personalized ranking Loss. Furthermore, we design an optimization framework to train all GACN modules jointly. Extensive experiments on seven real-world datasets show that GACN is able to generate high-quality augmented views for GCL and is superior to twelve state-of-the-art baseline methods. Noticeably, our proposed GACN surprisingly discovers that the generated views in data augmentation finally conform to the well-known preferential attachment rule in online networks.

Cross-domain recommendation (CDR) has been proven as a promising way to tackle the user cold-start problem, which aims to make recommendations for users in the target domain by transferring the user preference derived from the source domain. Traditional CDR studies follow the embedding and mapping (EMCDR) paradigm, which transfers user representations from the source to target domain by learning a user-shared mapping function, neglecting the user-specific preference. Recent CDR studies attempt to learn user-specific mapping functions in meta-learning paradigm, which regards each user's CDR as an individual task, but neglects the preference correlations among users, limiting the beneficial information for user representations. Moreover, both of the paradigms neglect the explicit user-item interactions from both domains during the mapping process. To address the above issues, this paper proposes a novel CDR framework with neural process (NP), termed as CDRNP. Particularly, it develops the meta-learning paradigm to leverage user-specific preference, and further introduces a stochastic process by NP to capture the preference correlations among the overlapping and cold-start users, thus generating more powerful mapping functions by mapping the user-specific preference and common preference correlations to a predictive probability distribution. In addition, we also introduce a preference remainer to enhance the common preference from the overlapping users, and finally devises an adaptive conditional decoder with preference modulation to make prediction for cold-start users with items in the target domain. Experimental results demonstrate that CDRNP outperforms previous SOTA methods in three real-world CDR scenarios.

Nowadays, reading or writing comments on captivating videos has emerged as a critical part of the viewing experience on online video platforms. However, existing recommender systems primarily focus on users' interaction behaviors with videos, neglecting comment content and interaction in user preference modeling. In this paper, we propose a novel recommendation approach called LSVCR that utilizes user interaction histories with both videos and comments to jointly perform personalized video and comment recommendation. Specifically, our approach comprises two key components: sequential recommendation (SR) model and supplemental large language model (LLM) recommender. The SR model functions as the primary recommendation backbone (retained in deployment) of our method for efficient user preference modeling. Concurrently, we employ a LLM as the supplemental recommender (discarded in deployment) to better capture underlying user preferences derived from heterogeneous interaction behaviors. In order to integrate the strengths of the SR model and the supplemental LLM recommender, we introduce a two-stage training paradigm. The first stage, personalized preference alignment, aims to align the preference representations from both components, thereby enhancing the semantics of the SR model. The second stage, recommendation-oriented fine-tuning, involves fine-tuning the alignment-enhanced SR model according to specific objectives. Extensive experiments in both video and comment recommendation tasks demonstrate the effectiveness of LSVCR. Moreover, online A/B testing on KuaiShou platform verifies the practical benefits of our approach. In particular, we attain a cumulative gain of 4.13% in comment watch time.

Driven by curiosity, humans have continually sought to explore and understand the world around them, leading to the invention of various tools to satiate this inquisitiveness. Despite not having the capacity to process and memorize vast amounts of information in their brains, humans excel in critical thinking, planning, reflection, and harnessing available tools to interact with and interpret the world, enabling them to find answers efficiently. The recent advancements in large language models (LLMs) suggest that machines might also possess the aforementioned human-like capabilities, allowing them to exhibit powerful abilities even with a constrained parameter count. In this paper, we introduce KwaiAgents, a generalized information-seeking agent system based on LLMs. Within KwaiAgents, we propose an agent system that employs LLMs as its cognitive core, which is capable of understanding a user's query, behavior guidelines, and referencing external documents. The agent can also update and retrieve information from its internal memory, plan and execute actions using a time-aware search-browse toolkit, and ultimately provide a comprehensive response. We further investigate the system's performance when powered by LLMs less advanced than GPT-4, and introduce the Meta-Agent Tuning (MAT) framework, designed to ensure even an open-sourced 7B or 13B model performs well among many agent systems. We exploit both benchmark and human evaluations to systematically validate these capabilities. Extensive experiments show the superiority of our agent system compared to other autonomous agents and highlight the enhanced generalized agent-abilities of our fine-tuned LLMs.

Researchers at Kuaishou Inc. developed an end-to-end framework that directly optimizes marketing effectiveness under budget constraints by integrating a non-differentiable budget allocation process into an S-Learner's training objective. This approach resulted in a 1.24% increase in per-capita response with a 0.369% lower per-capita cost in a large-scale A/B test on their short video platform, where it is currently deployed to allocate budgets for hundreds of millions of users.

08 May 2024

The Probability Ranking Principle (PRP) has been considered as the foundational standard in the design of information retrieval (IR) systems. The principle requires an IR module's returned list of results to be ranked with respect to the underlying user interests, so as to maximize the results' utility.

Nevertheless, we point out that it is inappropriate to indiscriminately apply PRP through every stage of a contemporary IR system. Such systems contain multiple stages (e.g., retrieval, pre-ranking, ranking, and re-ranking stages, as examined in this paper). The \emph{selection bias} inherent in the model of each stage significantly influences the results that are ultimately presented to users.

To address this issue, we propose an improved ranking principle for multi-stage systems, namely the Generalized Probability Ranking Principle (GPRP), to emphasize both the selection bias in each stage of the system pipeline as well as the underlying interest of users.

We realize GPRP via a unified algorithmic framework named Full Stage Learning to Rank. Our core idea is to first estimate the selection bias in the subsequent stages and then learn a ranking model that best complies with the downstream modules' selection bias so as to deliver its top ranked results to the final ranked list in the system's output.

We performed extensive experiment evaluations of our developed Full Stage Learning to Rank solution, using both simulations and online A/B tests in one of the leading short-video recommendation platforms. The algorithm is proved to be effective in both retrieval and ranking stages. Since deployed, the algorithm has brought consistent and significant performance gain to the platform.

Current advances in recommender systems have been remarkably successful in optimizing immediate engagement. However, long-term user engagement, a more desirable performance metric, remains difficult to improve. Meanwhile, recent reinforcement learning (RL) algorithms have shown their effectiveness in a variety of long-term goal optimization tasks. For this reason, RL is widely considered as a promising framework for optimizing long-term user engagement in recommendation. Though promising, the application of RL heavily relies on well-designed rewards, but designing rewards related to long-term user engagement is quite difficult. To mitigate the problem, we propose a novel paradigm, recommender systems with human preferences (or Preference-based Recommender systems), which allows RL recommender systems to learn from preferences about users historical behaviors rather than explicitly defined rewards. Such preferences are easily accessible through techniques such as crowdsourcing, as they do not require any expert knowledge. With PrefRec, we can fully exploit the advantages of RL in optimizing long-term goals, while avoiding complex reward engineering. PrefRec uses the preferences to automatically train a reward function in an end-to-end manner. The reward function is then used to generate learning signals to train the recommendation policy. Furthermore, we design an effective optimization method for PrefRec, which uses an additional value function, expectile regression and reward model pre-training to improve the performance. We conduct experiments on a variety of long-term user engagement optimization tasks. The results show that PrefRec significantly outperforms previous state-of-the-art methods in all the tasks.

Offering incentives (e.g., coupons at Amazon, discounts at Uber and video bonuses at Tiktok) to user is a common strategy used by online platforms to increase user engagement and platform revenue. Despite its proven effectiveness, these marketing incentives incur an inevitable cost and might result in a low ROI (Return on Investment) if not used properly. On the other hand, different users respond differently to these incentives, for instance, some users never buy certain products without coupons, while others do anyway. Thus, how to select the right amount of incentives (i.e. treatment) to each user under budget constraints is an important research problem with great practical implications. In this paper, we call such problem as a budget-constrained treatment selection (BTS) problem.

The challenge is how to efficiently solve BTS problem on a Large-Scale dataset and achieve improved results over the existing techniques. We propose a novel tree-based treatment selection technique under budget constraints, called Large-Scale Budget-Constrained Causal Forest (LBCF) algorithm, which is also an efficient treatment selection algorithm suitable for modern distributed computing systems. A novel offline evaluation method is also proposed to overcome an intrinsic challenge in assessing solutions' performance for BTS problem in randomized control trials (RCT) data. We deploy our approach in a real-world scenario on a large-scale video platform, where the platform gives away bonuses in order to increase users' campaign engagement duration. The simulation analysis, offline and online experiments all show that our method outperforms various tree-based state-of-the-art baselines. The proposed approach is currently serving over hundreds of millions of users on the platform and achieves one of the most tremendous improvements over these months.

15 May 2025

Video-based dialogue systems, such as education assistants, have compelling

application value, thereby garnering growing interest. However, the current

video-based dialogue systems are limited by their reliance on a single dialogue

type, which hinders their versatility in practical applications across a range

of scenarios, including question-answering, emotional dialog, etc. In this

paper, we identify this challenge as how to generate video-driven multilingual

mixed-type dialogues. To mitigate this challenge, we propose a novel task and

create a human-to-human video-driven multilingual mixed-type dialogue corpus,

termed KwaiChat, containing a total of 93,209 videos and 246,080 dialogues,

across 4 dialogue types, 30 domains, 4 languages, and 13 topics. Additionally,

we establish baseline models on KwaiChat. An extensive analysis of 7 distinct

LLMs on KwaiChat reveals that GPT-4o achieves the best performance but still

cannot perform well in this situation even with the help of in-context learning

and fine-tuning, which indicates that the task is not trivial and needs further

research.

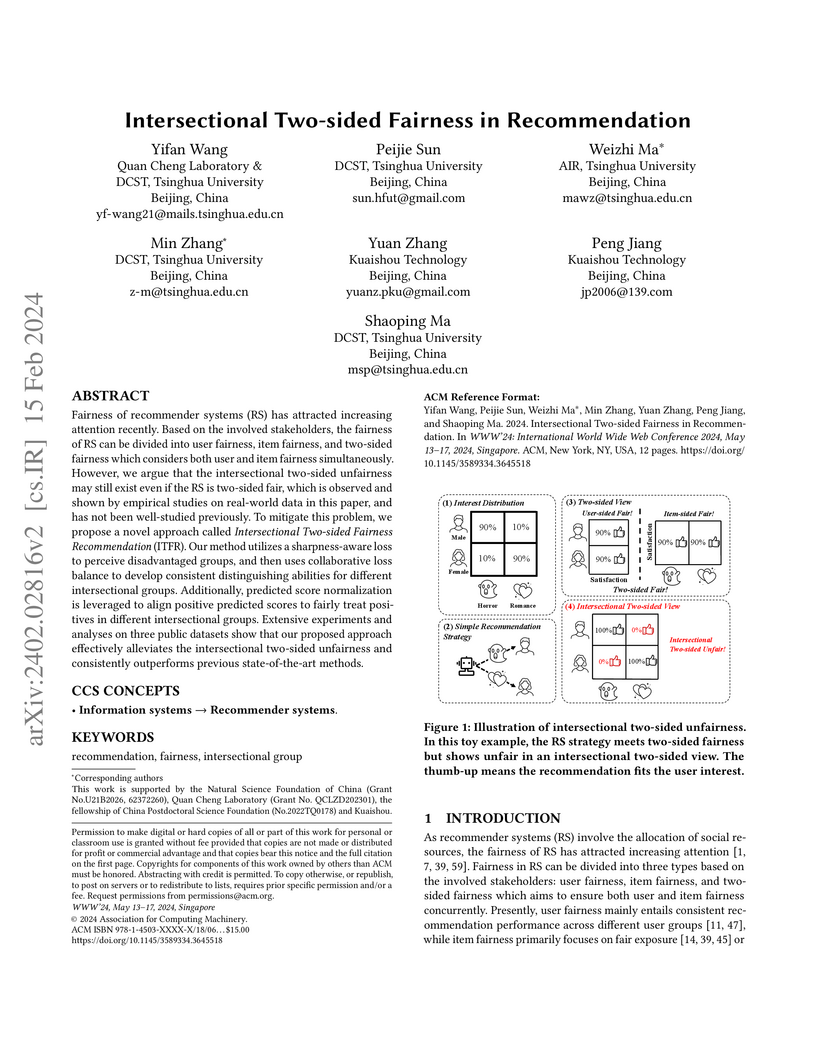

Fairness of recommender systems (RS) has attracted increasing attention

recently. Based on the involved stakeholders, the fairness of RS can be divided

into user fairness, item fairness, and two-sided fairness which considers both

user and item fairness simultaneously. However, we argue that the

intersectional two-sided unfairness may still exist even if the RS is two-sided

fair, which is observed and shown by empirical studies on real-world data in

this paper, and has not been well-studied previously. To mitigate this problem,

we propose a novel approach called Intersectional Two-sided Fairness

Recommendation (ITFR). Our method utilizes a sharpness-aware loss to perceive

disadvantaged groups, and then uses collaborative loss balance to develop

consistent distinguishing abilities for different intersectional groups.

Additionally, predicted score normalization is leveraged to align positive

predicted scores to fairly treat positives in different intersectional groups.

Extensive experiments and analyses on three public datasets show that our

proposed approach effectively alleviates the intersectional two-sided

unfairness and consistently outperforms previous state-of-the-art methods.

ContentCTR, a multimodal transformer architecture, predicts Click-Through Rate (CTR) at the frame level for live streaming content by integrating visual, audio, and text features with streamer identity. This approach achieves superior performance in identifying engaging moments, yielding a +2.9% CTR increase and +5.9% live play duration in production on KuaiShou's platform.

There are no more papers matching your filters at the moment.