Louisiana Tech University

This study applies Bayesian models to predict hotel booking cancellations, a key challenge affecting resource allocation, revenue, and customer satisfaction in the hospitality industry. Using a Kaggle dataset with 36,285 observations and 17 features, Bayesian Logistic Regression and Beta-Binomial models were implemented. The logistic model, applied to 12 features and 5,000 randomly selected observations, outperformed the Beta-Binomial model in predictive accuracy. Key predictors included the number of adults, children, stay duration, lead time, car parking space, room type, and special requests. Model evaluation using Leave-One-Out Cross-Validation (LOO-CV) confirmed strong alignment between observed and predicted outcomes, demonstrating the model's robustness. Special requests and parking availability were found to be the strongest predictors of cancellation. This Bayesian approach provides a valuable tool for improving booking management and operational efficiency in the hotel industry.
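The abstract names two model families without stating priors or the inference engine. Below is a minimal sketch of each under loudly stated assumptions:

- The logistic model uses independent Normal(0, 2.5²) priors on its coefficients and a random-walk Metropolis sampler.
- The Beta-Binomial model uses a uniform Beta(1, 1) prior on a single cancellation rate.

`log_posterior`, `metropolis_logistic`, and `beta_binomial_posterior` are hypothetical helpers for illustration, not the authors' code.

```python
import numpy as np


def log_posterior(beta, X, y, prior_sd=2.5):
    """Log posterior (up to a constant) of a logistic regression with
    independent Normal(0, prior_sd^2) priors on every coefficient (assumed)."""
    eta = X @ beta
    # Bernoulli log-likelihood with a logit link; logaddexp(0, eta) = log(1 + e^eta)
    log_lik = np.sum(y * eta - np.logaddexp(0.0, eta))
    log_prior = -0.5 * np.sum((beta / prior_sd) ** 2)
    return log_lik + log_prior


def metropolis_logistic(X, y, n_iter=4000, step=0.1, seed=0):
    """Random-walk Metropolis sampler; returns an (n_iter, p) array of draws."""
    rng = np.random.default_rng(seed)
    beta = np.zeros(X.shape[1])
    current = log_posterior(beta, X, y)
    draws = np.empty((n_iter, X.shape[1]))
    for t in range(n_iter):
        proposal = beta + step * rng.standard_normal(beta.shape)
        candidate = log_posterior(proposal, X, y)
        # Accept with probability min(1, posterior ratio)
        if np.log(rng.uniform()) < candidate - current:
            beta, current = proposal, candidate
        draws[t] = beta
    return draws


def beta_binomial_posterior(cancelled, total, a=1.0, b=1.0):
    """Conjugate update of a Beta(a, b) prior on one cancellation rate:
    returns the posterior Beta parameters."""
    return a + cancelled, b + (total - cancelled)
```

Usage: pass a design matrix whose first column is all ones (the intercept) and a 0/1 cancellation vector. Discard early draws as burn-in before summarizing. For real work, a probabilistic-programming library such as PyMC or Stan is the more typical choice. Those tools estimate LOO-CV by Pareto-smoothed importance sampling rather than by refitting the model once per observation.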

University of Mississippi; California Institute of Technology; Kyungpook National University; SLAC National Accelerator Laboratory; Imperial College London; University of Notre Dame; University of Chicago; Ghent University; Nanjing University; University of Bonn; University of Michigan; University of Melbourne; Cornell University; Boston University; Kansas State University; Rutherford Appleton Laboratory; CERN; Argonne National Laboratory; University of Minnesota; Brookhaven National Laboratory; University of Colorado; Purdue University; University of Helsinki; University of California, Davis; University of Massachusetts Amherst; University of Iowa; Fermi National Accelerator Laboratory; MIT; Princeton University; University of Delhi; University of New Mexico; University of Oregon; Lawrence Livermore National Laboratory; Moscow State University; University of Montenegro; Ewha Womans University; University of California, Santa Cruz; GSI; University of Hawai’i; Max Planck Institute for Physics; University of Barcelona; CEA Saclay; Northern Illinois University; Louisiana Tech University; LPNHE; Bristol University; SUNY, Stony Brook; Laboratoire d’Annecy-le-Vieux de Physique des Particules; Institute of Microelectronics of Barcelona, IMB-CNM (CSIC); Institute of Nuclear Research (Atomki); University of Indiana; Molecular Biology Consortium; IPPP; Gomel State Technical University; Instituto de Fisica Corpuscular (IFIC); IHEP Beijing; Instituto de Fisica de Cantabria (IFCA); Institute of Physics, Prague; Obninsk State Technical University for Nuclear Power Engineering; Birla Institute for Technology and Science, Pilani; IPHC-IN2P3/CNRS; Université Pierre et Marie Curie

California Institute of TechnologyKyungpook National UniversitySLAC National Accelerator Laboratory

California Institute of TechnologyKyungpook National UniversitySLAC National Accelerator Laboratory Imperial College London

Imperial College London University of Notre Dame

University of Notre Dame University of ChicagoGhent University

University of ChicagoGhent University Nanjing UniversityUniversity of Bonn

Nanjing UniversityUniversity of Bonn University of MichiganUniversity of Melbourne

University of MichiganUniversity of Melbourne Cornell University

Cornell University Boston UniversityKansas State UniversityRutherford Appleton Laboratory

Boston UniversityKansas State UniversityRutherford Appleton Laboratory CERN

CERN Argonne National Laboratory

Argonne National Laboratory University of Minnesota

University of Minnesota Brookhaven National LaboratoryUniversity of Colorado

Brookhaven National LaboratoryUniversity of Colorado Purdue UniversityUniversity of Helsinki

Purdue UniversityUniversity of Helsinki University of California, DavisUniversity of Massachusetts AmherstUniversity of IowaFermi National Accelerator Laboratory

University of California, DavisUniversity of Massachusetts AmherstUniversity of IowaFermi National Accelerator Laboratory MIT

MIT Princeton UniversityUniversity of DelhiUniversity of New MexicoUniversity of OregonLawrence Livermore National LaboratoryMoscow State UniversityUniversity of MontenegroEwha Womans University

Princeton UniversityUniversity of DelhiUniversity of New MexicoUniversity of OregonLawrence Livermore National LaboratoryMoscow State UniversityUniversity of MontenegroEwha Womans University University of California, Santa CruzGSIUniversity of Hawai’iMax Planck Institute for PhysicsUniversity of BarcelonaCEA SaclayNorthern Illinois UniversityLouisiana Tech UniversityLPNHEBristol UniversitySUNY, Stony BrookLaboratoire d’Annecy-le-Vieux de Physique des ParticulesInstitute of Microelectronics of Barcelona, IMB-CNM (CSIC)Institute of Nuclear Research (Atomki)University of IndianaMolecular Biology ConsortiumIPPPGomel State Technical UniversityInstituto de Fisica Corpuscular (IFIC)IHEP BeijingInstituto de Fisica de Cantabria (IFCA)Institute of Physics, PragueObninsk State Technical University for Nuclear Power EngineeringBirla Institute for Technology and Science, PilaniIPHC-IN2P3/CNRSUniversite´ Pierre et Marie Curie

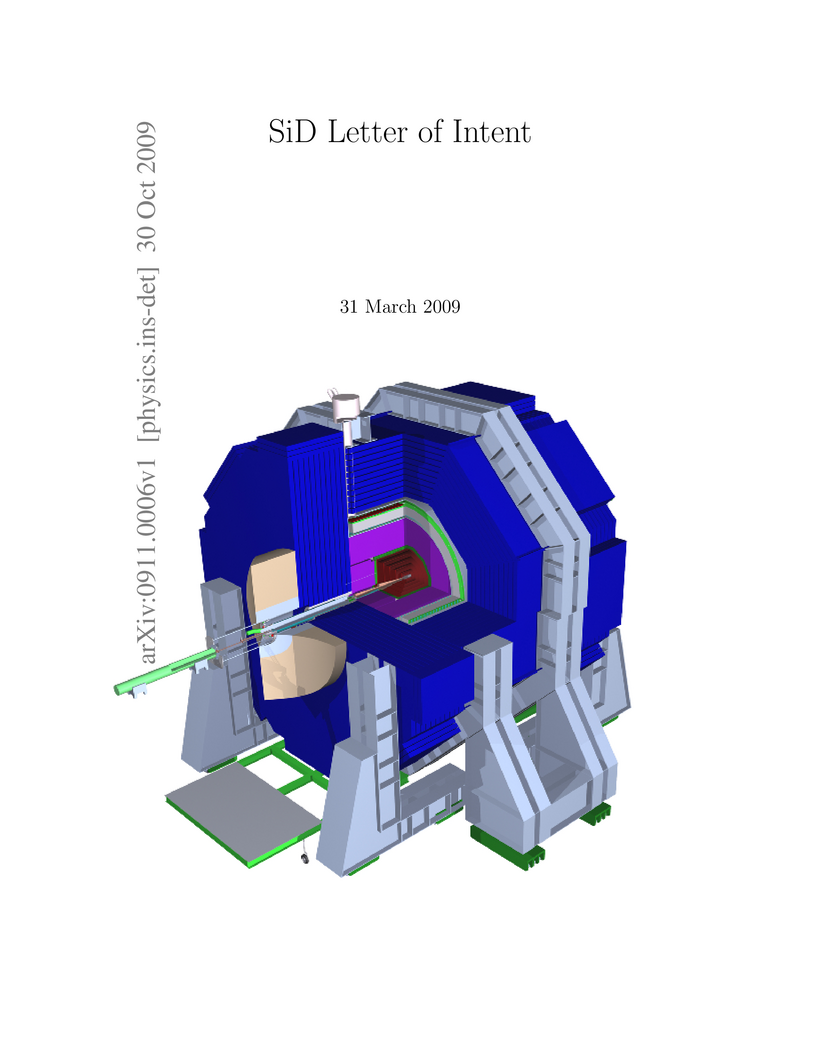

Letter of intent describing SiD (Silicon Detector) for consideration by the International Linear Collider IDAG panel. This detector concept is founded on the use of silicon detectors for vertexing, tracking, and electromagnetic calorimetry. The detector has been cost-optimized as a general-purpose detector for a 500 GeV electron-positron linear collider.

In the standard approach, predictions of perturbative Quantum Chromodynamics for ratios of cross sections are computed as the ratio of fixed-order predictions for the numerator and the denominator. Beyond the lowest order in the perturbative expansion, however, this result does not correspond to a fixed-order prediction for the ratio. This article describes how exact fixed-order results for ratios of arbitrary cross sections can be obtained. The general method for computations at any order of the perturbative expansion is derived, and results for next-to-leading-order and next-to-next-to-leading-order calculations are given. The approach is applied to theory predictions for various multi-jet cross-section ratios measured at hadron colliders. The two methods are compared with each other and with the experimental data. Recommendations are made on how to obtain improved theory predictions with more realistic uncertainty estimates.
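Suppose the numerator is $a_0 + a_1\alpha_s + \dots$ and the denominator is $b_0 + b_1\alpha_s + \dots$. Expanding their ratio as a power series in $\alpha_s$ and truncating gives a fixed-order ratio. At NLO this is $a_0/b_0 + \alpha_s\,(a_1/b_0 - a_0 b_1/b_0^2)$.

Below is a minimal sketch of that generic series division. It is not necessarily the article's formulation, and `ratio_coefficients` is a hypothetical helper.

```python
def ratio_coefficients(num, den):
    """Coefficients r_k of the power series num(x)/den(x) = sum_k r_k x^k,
    truncated at the order of the shorter input. num[k] and den[k] are the
    coefficients of x^k (e.g. x = alpha_s) in numerator and denominator."""
    if den[0] == 0:
        raise ValueError("leading denominator coefficient must be non-zero")
    order = min(len(num), len(den))
    r = []
    for k in range(order):
        # Match the x^k terms of num = r * den and solve for r_k
        s = num[k] - sum(r[j] * den[k - j] for j in range(k))
        r.append(s / den[0])
    return r
```

For example, the inputs `[2, 3]` and `[1, 1]` give the expanded ratio $2 + \alpha_s$. The ratio of the two NLO predictions is instead $(2 + 3\alpha_s)/(1 + \alpha_s)$. At $\alpha_s = 0.1$ the two approaches evaluate to 2.1 and about 2.091, which illustrates the difference the abstract describes.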

Accurate and quick prediction of wood chip moisture content is critical for optimizing biofuel production and ensuring energy efficiency. The currently most widely used direct method, oven drying, is limited by its long processing time and its destructiveness to the sample. On the other hand, existing indirect methods, including near-infrared spectroscopy-based, electrical capacitance-based, and image-based approaches, are quick but not accurate when wood chips come from various sources. Variability in the source material can alter data distributions, undermining the performance of data-driven models. Therefore, there is a need for a robust approach that effectively mitigates the impact of source variability. Previous studies show that manually extracted texture features have the potential to predict wood chip moisture class. Building on this, we conduct a comprehensive analysis of five distinct texture feature types extracted from wood chip images to predict moisture content. Our findings reveal that a combined feature set incorporating all five texture features achieves an accuracy of 95% and consistently outperforms individual texture features in predicting moisture content. To ensure robust moisture prediction, we propose a domain adaptation method named AdaptMoist that uses the texture features to transfer knowledge from one source of wood chip data to another, addressing variability across domains. We also propose a criterion for model saving based on adjusted mutual information. AdaptMoist improves cross-domain prediction accuracy by 23 percentage points, achieving an average accuracy of 80% compared to 57% for non-adapted models. These results highlight the effectiveness of AdaptMoist as a robust solution for wood chip moisture content estimation across domains, making it a potential solution for wood chip-reliant industries.
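The abstract does not name its five texture feature types. As one illustration of a manually extracted texture feature, here is a minimal sketch of the grey-level co-occurrence matrix (GLCM) and three classic statistics computed from it. This assumes 8-bit greyscale images. `glcm` and `glcm_features` are hypothetical helpers, and whether GLCM is among the study's five feature types is an assumption.

```python
import numpy as np


def glcm(image, levels=8, dx=1, dy=0):
    """Symmetric, normalised grey-level co-occurrence matrix for an 8-bit
    greyscale image and a non-negative pixel offset (dx, dy)."""
    img = np.asarray(image, dtype=float)
    # Quantise 0..255 intensities into `levels` grey levels
    q = np.clip((img * levels / 256.0).astype(int), 0, levels - 1)
    h, w = q.shape
    ref = q[: h - dy, : w - dx]      # reference pixels
    nbr = q[dy:, dx:]                # neighbours at offset (dx, dy)
    counts = np.zeros((levels, levels))
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1)
    counts = counts + counts.T       # count each pair in both directions
    return counts / counts.sum()


def glcm_features(p):
    """Haralick-style statistics of a normalised GLCM."""
    i, j = np.indices(p.shape)
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "energy": float(np.sum(p ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + np.abs(i - j)))),
    }
```

A uniform patch yields zero contrast and unit energy. Fine alternating texture yields high contrast. Feature vectors like these can then be concatenated across feature types before classification.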

In a cloud of m-dimensional data points, how would we spot, as well as rank, both single-point and group anomalies? We are the first to generalize anomaly detection in two dimensions. The first dimension is that we handle both point anomalies and group anomalies under a unified view; we refer to them as generalized anomalies. The second dimension is that gen2Out not only detects but also ranks anomalies in order of suspiciousness. Detection and ranking of anomalies have numerous applications: for example, in EEG recordings of an epileptic patient, an anomaly may indicate a seizure; in computer network traffic data, it may signify a power failure or a DoS/DDoS attack. We start by setting some reasonable axioms; surprisingly, none of the earlier methods pass all the axioms. Our main contribution is the gen2Out algorithm, which has the following desirable properties: (a) Principled and sound: its anomaly scoring obeys the axioms for detectors. (b) Doubly general: it detects, as well as ranks, generalized anomalies, both point and group anomalies. (c) Scalable: it is fast, with run time linear in the input size. (d) Effective: experiments on real-world epileptic recordings (200 GB) demonstrate the effectiveness of gen2Out, as confirmed by clinicians. Experiments on 27 real-world benchmark datasets show that gen2Out detects ground-truth groups, matches or outperforms point-anomaly baseline algorithms in accuracy, has no competition for group anomalies, and requires about 2 minutes for 1 million data points on a stock machine.

08 Aug 2003

Kyungpook National University; University of Maryland; Duke University; MIT; University of Virginia; Florida International University; Old Dominion University; Kent State University; Thomas Jefferson National Accelerator Facility; Norfolk State University; The College of William and Mary; Johannes Gutenberg-Universität; Ohio University; Louisiana Tech University; Hampton University; Yerevan Physics Institute; Rheinische Friedrich-Wilhelms-Universität; Southern University at New Orleans; North Carolina A&T State University

We report new measurements of the ratio of the electric form factor to the magnetic form factor of the neutron, $G_E^n/G_M^n$, obtained via recoil polarimetry from the quasielastic $^2\mathrm{H}(\vec{e},e'\vec{n})^1\mathrm{H}$ reaction at $Q^2$ values of 0.45, 1.13, and 1.45 $(\mathrm{GeV}/c)^2$, with relative statistical uncertainties of 7.6% and 8.4% at the two higher $Q^2$ points, which were not reached previously via polarization measurements. Scale and systematic uncertainties are small.

13 Nov 2005

California Institute of Technology; Kyungpook National University; Joint Institute for Nuclear Research; University of Maryland; Duke University; University of Virginia; Florida International University; Old Dominion University; Kent State University; Thomas Jefferson National Accelerator Facility; Norfolk State University; The College of William and Mary; Johannes Gutenberg-Universität; Ohio University; Northern Michigan University; Louisiana Tech University; Hampton University; Yerevan Physics Institute; Rheinische Friedrich-Wilhelms-Universität; Southern University at New Orleans; North Carolina A&T State University

We report values for the neutron electric to magnetic form factor ratio, $G_E^n/G_M^n$, deduced from measurements of the neutron's recoil polarization in the quasielastic $^2\mathrm{H}(\vec{e},e'\vec{n})^1\mathrm{H}$ reaction, at three $Q^2$ values of 0.45, 1.13, and 1.45 $(\mathrm{GeV}/c)^2$. The data at $Q^2 = 1.13$ and 1.45 $(\mathrm{GeV}/c)^2$ are the first direct experimental measurements of $G_E^n$ employing polarization degrees of freedom in the $Q^2 > 1\,(\mathrm{GeV}/c)^2$ region and stand as the most precise determinations of $G_E^n$ for all values of $Q^2$.
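For orientation, here is the relation commonly used to extract a form-factor ratio from recoil polarization. It holds in the one-photon-exchange approximation for elastic scattering on a free nucleon of mass $M$. It is a textbook simplification rather than the analysis used in these measurements: the quasielastic deuteron reaction requires nuclear corrections. The overall sign also depends on the axis conventions for the transverse ($P_t$) and longitudinal ($P_l$) recoil-polarization components.

$$
\frac{G_E^n}{G_M^n} = -\frac{P_t}{P_l}\,\frac{E_e + E_e'}{2M}\,\tan\frac{\theta_e}{2}
$$

Here $E_e$ and $E_e'$ are the incident and scattered electron energies, and $\theta_e$ is the electron scattering angle. Only the ratio $P_t/P_l$ enters, so the beam polarization and the polarimeter analyzing power cancel to first order. This is one reason the technique's scale uncertainties are small.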

18 Aug 2019

We consider a distributed computing framework in which the distributed nodes have different communication capabilities, motivated by the heterogeneous networks in data centers and mobile edge computing systems. Following the structure of MapReduce, the framework consists of a Map computation phase, a Shuffle phase, and a Reduce computation phase. The Shuffle phase allows distributed nodes to exchange intermediate values in the presence of heterogeneous communication bottlenecks for different nodes (heterogeneous communication load constraints). For this setting, we characterize, in some cases, the minimum total computation load and the minimum worst-case computation load under the heterogeneous communication load constraints. The total computation load depends on the sum of the computation loads of all the nodes, whereas the worst-case computation load depends on the computation load of the node with the heaviest job. We show that, in some cases, there is a tradeoff between the minimum total computation load and the minimum worst-case computation load, in the sense that both cannot be achieved at the same time. The proposed achievability schemes rely on a careful design of the file assignment and the data shuffling. Beyond the cut-set bound, a novel converse is established using proof by contradiction. For the general case, we identify two extreme regimes in which the scheme with coding and the scheme without coding, respectively, are optimal.
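The two load measures defined above can be made concrete with a small sketch. It assumes a node's computation load is the fraction of the `num_files` input files it maps; this normalization is an assumption for illustration. `computation_loads` is a hypothetical helper and does not encode the paper's communication constraints.

```python
def computation_loads(assignment, num_files):
    """Total and worst-case computation loads of a Map-phase file assignment.

    `assignment` maps each node to the set of file indices it maps; a node's
    load is taken to be the fraction of the num_files files it maps."""
    covered = set().union(*assignment.values())
    if covered != set(range(num_files)):
        raise ValueError("every file must be mapped by at least one node")
    loads = [len(files) / num_files for files in assignment.values()]
    # Total load sums over nodes; worst-case load is the heaviest node
    return sum(loads), max(loads)
```

Consider three nodes and three files. Mapping each file at two nodes (`{0: {0, 1}, 1: {1, 2}, 2: {2, 0}}`) gives a total load of 2 and a worst-case load of 2/3. Mapping everything at a single node gives a total of 1 but a worst case of 1. In this toy example, lowering one measure raises the other.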

17 Oct 2018

Motivated by mobile edge computing and wireless data centers, we study a wireless distributed computing framework in which the distributed nodes exchange information over a wireless interference network. The framework follows the structure of MapReduce and consists of Map, Shuffle, and Reduce phases, where Map and Reduce are computation phases and Shuffle is a data transmission phase carried out over the wireless interference network. We demonstrate that, by duplicating the computation work at a cluster of distributed nodes in the Map phase, one can reduce the transmission load required for the Shuffle phase. In this work, we characterize the fundamental tradeoff between computation load and communication load under the assumption of one-shot linear schemes. The proposed scheme is based on side-information cancellation and zero-forcing, and we prove that it is optimal in terms of the computation-communication tradeoff. In terms of this tradeoff, the proposed scheme outperforms both the naive TDMA scheme, in which a single node transmits at a time, and the coded TDMA scheme, which allows coding across data.

09 Feb 2024

Thermal resonance, in which the temperature amplitude attains a maximum value (peak) in response to an external exciting frequency source, is a phenomenon associated with underdamped thermal oscillations and an explicitly finite speed of thermal wave propagation. The present work investigates the condition for the occurrence of thermal resonance during the electron-phonon interaction process in metals, based on the hyperbolic two-temperature model. First, a sufficient condition for underdamped electron and lattice temperature oscillations is discussed by deriving a critical frequency (a material characteristic). It is shown that the critical frequency of thermal waves near room temperature, during electron-phonon interactions, may be on the order of terahertz (10–20 THz for Cu and Au, i.e., lying within the terahertz gap). Whenever the natural frequency of the metal temperature exceeds this threshold, the temperature oscillations are underdamped. However, this condition is not necessary, since there is a small frequency domain below this threshold in which the underdamped thermal wave solution exists but is not effective. In addition, the critical-damping and overdamping conditions of the temperature waves are determined numerically for a sample of pure metals. The thermal resonance conditions in both electron and lattice temperatures are investigated. Resonance in both the electron and lattice temperatures occurs only when the natural frequency exceeds two distinct critical frequencies. When the natural frequency of the system becomes larger than these two critical values, an applied frequency equal to this natural frequency can drive both electron and lattice temperatures to resonate together, with different amplitudes and behaviors. However, the electron temperature resonates earlier than the lattice temperature.
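The idea of a critical frequency separating damping regimes can be seen in the simpler single-temperature analogue. This is the Cattaneo-type equation $\tau\,\partial_t^2 T + \partial_t T = \alpha\nabla^2 T$, not the paper's coupled hyperbolic two-temperature model.

A spatial mode with wavenumber $k$ obeys $T'' + T'/\tau + \omega_n^2 T = 0$ with $\omega_n^2 = \alpha k^2/\tau$. Its damping ratio is $\zeta = 1/(2\tau\omega_n)$, so the mode is underdamped exactly when $\omega_n > 1/(2\tau)$.

Below is a minimal sketch of that classification. The function names are hypothetical, and the paper's own critical frequencies also depend on the electron-phonon coupling and both relaxation times.

```python
def critical_frequency(tau):
    """Angular natural frequency above which a mode of
    T'' + T'/tau + omega_n**2 * T = 0 is underdamped: 1/(2*tau)."""
    return 1.0 / (2.0 * tau)


def damping_regime(tau, omega_n, tol=1e-9):
    """Classify the mode from its damping ratio zeta = 1/(2*tau*omega_n)."""
    zeta = 1.0 / (2.0 * tau * omega_n)
    if abs(zeta - 1.0) <= tol:
        return "critically damped"
    return "underdamped" if zeta < 1.0 else "overdamped"
```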

University of California, Santa Barbara; University of California, San Diego; Louisiana State University; Los Alamos National Laboratory; Temple University; University of New Mexico; Embry-Riddle Aeronautical University; University of California, Riverside; Louisiana Tech University; Southern University; Linfield College

A search for $\nu_\mu \to \nu_e$ oscillations has been conducted with the LSND apparatus at the Los Alamos Meson Physics Facility. Using $\nu_\mu$ from $\pi^+$ decay in flight, the $\nu_e$ appearance is detected via the charged-current reaction $\mathrm{C}(\nu_e, e^-)X$. Two independent analyses observe a total of 40 beam-on high-energy electron events ($60 < E_e < 200$ MeV) consistent with the above signature. This number is significantly above the $21.9 \pm 2.1$ events expected from the $\nu_e$ contamination in the beam and the beam-off background. If interpreted as an oscillation signal, the observed oscillation probability of $(2.6 \pm 1.0 \pm 0.5) \times 10^{-3}$ is consistent with the previously reported $\bar{\nu}_\mu \to \bar{\nu}_e$ oscillation evidence from LSND.
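An oscillation probability is usually interpreted through the standard two-flavour approximation:

$P = \sin^2 2\theta\,\sin^2(1.267\,\Delta m^2 L/E)$

Here $\Delta m^2$ is in eV², $L$ in metres, and $E$ in MeV. A measured probability therefore constrains a band in the $(\sin^2 2\theta, \Delta m^2)$ plane rather than a single point.

Below is a minimal sketch of that formula. `two_flavor_probability` is a hypothetical helper, and any baseline or energy values passed to it are illustrative, not LSND's.

```python
import math


def two_flavor_probability(sin2_2theta, dm2_ev2, length_m, energy_mev):
    """Two-flavour appearance probability
    P = sin^2(2 theta) * sin^2(1.267 * dm^2[eV^2] * L[m] / E[MeV])."""
    phase = 1.267 * dm2_ev2 * length_m / energy_mev
    return sin2_2theta * math.sin(phase) ** 2
```

When the phase reaches $\pi/2$, the probability equals $\sin^2 2\theta$. For a phase much smaller than 1, it reduces to roughly $\sin^2 2\theta\,(1.267\,\Delta m^2 L/E)^2$.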

19 Dec 2017

One of the most important developments in Monte Carlo simulation of collider events for the LHC has been the arrival of schemes and codes for matching parton showers to matrix elements calculated at next-to-leading order in the QCD coupling. The POWHEG scheme, and particularly its implementation in the POWHEG-BOX code, has attracted the most attention owing to its ease of use and effective portability between parton shower algorithms.

But formal accuracy to NLO does not guarantee predictivity, and the beyond-fixed-order corrections associated with the shower may be large. Further, there are open questions over which is the "best" variant of the POWHEG matching procedure to use, and how to evaluate systematic uncertainties due to the degrees of freedom in the scheme.

In this paper we empirically explore the scheme variations allowed in Pythia8 matching to POWHEG-BOX dijet events, demonstrating the effects of both discrete and continuous freedoms in emission-vetoing details, both for tuning to data and for estimating systematic uncertainties from the matching and parton-shower aspects of the POWHEG-BOX+Pythia8 generator combination.

This paper introduces EdgeProfiler, a fast profiling framework designed for evaluating lightweight Large Language Models (LLMs) on edge systems. While LLMs offer remarkable capabilities in natural language understanding and generation, their high computational, memory, and power requirements often confine them to cloud environments. EdgeProfiler addresses these challenges by providing a systematic methodology for assessing LLM performance in resource-constrained edge settings. The framework profiles compact LLMs, including TinyLLaMA, Gemma3.1B, Llama3.2-1B, and DeepSeek-r1-1.5B, using aggressive quantization techniques and strict memory constraints. Analytical modeling is used to estimate latency, FLOPs, and energy consumption. The profiling reveals that 4-bit quantization reduces model memory usage by approximately 60-70%, while maintaining accuracy within 2-5% of full-precision baselines. Inference speeds are observed to improve by 2-3x compared to FP16 baselines across various edge devices. Power modeling estimates a 35-50% reduction in energy consumption for INT4 configurations, enabling practical deployment on hardware such as Raspberry Pi 4/5 and Jetson Orin Nano Super. Our findings emphasize the importance of efficient profiling tailored to lightweight LLMs in edge environments, balancing accuracy, energy efficiency, and computational feasibility.
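To see why 4-bit quantization shrinks memory and speeds up decoding, consider a back-of-the-envelope analytical model. It is not EdgeProfiler's actual model, and it makes two assumptions:

- Weight storage scales with bits per weight.
- Token generation is memory-bandwidth bound, so every generated token streams all weights once.

Both functions are hypothetical helpers.

```python
def weight_memory_bytes(num_params, bits_per_weight):
    """Bytes needed to store the weights alone (ignores activations,
    KV cache, and quantisation scale/zero-point storage)."""
    return num_params * bits_per_weight / 8


def decode_tokens_per_second(num_params, bits_per_weight, bandwidth_bytes_per_s):
    """Idealised upper bound for memory-bound decoding: each generated
    token reads every weight from memory once."""
    return bandwidth_bytes_per_s / weight_memory_bytes(num_params, bits_per_weight)
```

Under these assumptions, INT4 cuts weight memory by 75% and raises the throughput bound 4× relative to FP16. The abstract reports 60–70% and 2–3× instead. Plausible reasons for the gap are tensors kept in higher precision, quantization metadata, and dequantization overhead.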

Although speech recognition is the most important human-machine interfacing tool, far less work has been carried out on Bangla speech recognition than on English. Motivated by this, in this work, speaker-independent isolated speech recognition systems are implemented and their performance is analyzed on a newly created dataset containing both isolated Bangla and English spoken words. An approach using Mel Frequency Cepstral Coefficients (MFCC) as features and a 7-layer Deep Feed-Forward Fully Connected Neural Network (DFFNN) as the classifier is proposed to recognize isolated spoken words. The approach achieves 93.42% recognition accuracy, which is better than most previous work on Bangla speech recognition, considering the number of classes and the dataset size.
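The MFCC front end mentioned above follows a well-known textbook recipe:

1. Frame the signal and apply a Hamming window.
2. Compute the power spectrum.
3. Apply a triangular mel filterbank.
4. Take the log.
5. Apply a DCT-II.

Below is a minimal NumPy/SciPy sketch. The frame sizes, FFT length, and filter counts are common defaults assumed here, not parameters stated by the authors, and `mfcc` is a hypothetical helper.

```python
import numpy as np
from scipy.fft import dct


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mfcc(signal, sample_rate=16000, frame_len=0.025, frame_step=0.010,
         n_fft=512, n_mels=26, n_ceps=13):
    """Textbook MFCC pipeline; returns an (n_frames, n_ceps) array."""
    signal = np.asarray(signal, dtype=float)
    flen = int(round(frame_len * sample_rate))
    fstep = int(round(frame_step * sample_rate))
    if len(signal) < flen:  # pad very short clips to one full frame
        signal = np.pad(signal, (0, flen - len(signal)))
    n_frames = 1 + (len(signal) - flen) // fstep
    idx = np.arange(flen)[None, :] + fstep * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(flen)
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft

    # Filter edges equally spaced on the mel scale, mapped to FFT bins
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):   # rising slope of the triangle
            fbank[m - 1, k] = (k - left) / (center - left)
        for k in range(center, right):  # falling slope of the triangle
            fbank[m - 1, k] = (right - k) / (right - center)

    energies = power @ fbank.T
    log_energies = np.log(np.maximum(energies, np.finfo(float).eps))
    return dct(log_energies, type=2, axis=1, norm="ortho")[:, :n_ceps]
```

For an isolated-word classifier, the per-frame coefficients are typically padded or resampled to a fixed number of frames. They are then flattened before being fed to a fully connected network.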

Argonne National Laboratory; Stony Brook University; Karlsruhe Institute of Technology; Syracuse University; University of Virginia; University of Manitoba; Thomas Jefferson National Accelerator Facility; College of William and Mary; Helmholtz Institute Mainz; Louisiana Tech University; Universidad Nacional Autonoma de Mexico; Virginia Tech University; Johannes Gutenberg Universität Mainz

This article describes the future P2 parity-violating electron scattering facility at the upcoming MESA accelerator in Mainz. The physics program of the facility comprises an indirect, high-precision search for physics beyond the Standard Model, measurement of the neutron distribution in nuclear physics, single-spin asymmetries stemming from two-photon exchange, and a possible future extension to the measurement of hadronic parity violation. The first measurement of the P2 experiment aims for a determination of the weak mixing angle to a precision of 0.14% at a four-momentum transfer of $Q^2 = 4.5 \times 10^{-3}\,\mathrm{GeV}^2$. This accuracy is comparable to that of existing measurements at the $Z$ pole. The measurement constitutes a sensitive test of the Standard Model up to a mass scale of 50 TeV, extendable to 70 TeV. It requires a measurement of the parity-violating cross-section asymmetry of $-39.94 \times 10^{-9}$ in elastic electron-proton scattering with a total accuracy of $0.56 \times 10^{-9}$ (1.4%), using 10,000 h of a 150 μA polarized electron beam impinging on a 60 cm liquid H$_2$ target; this allows extraction of the weak charge of the proton, which is directly connected to the weak mixing angle. Contributions from $\gamma Z$-box graphs become small at the low beam energy of 155 MeV. This will be the smallest asymmetry ever measured in electron scattering, with an unprecedented accuracy goal. We report here on the conceptual design of the P2 spectrometer, its Cherenkov detectors, the integrating read-out electronics, and the ultra-thin, fast tracking detectors. Substantial theoretical work has been done in preparation for the determination of the weak mixing angle. The further physics program in particle and nuclear physics is described as well.

George Washington University; University of Wisconsin; MIT; University of Connecticut; Syracuse University; University of Virginia; University of Manitoba; Dartmouth College; Mississippi State University; TRIUMF; University of Winnipeg; University of New Hampshire; Thomas Jefferson National Accelerator Facility; Christopher Newport University; College of William and Mary; Ohio University; Louisiana Tech University; Hampton University; Idaho State University; Yerevan Physics Institute; University of Northern British Columbia; Virginia Polytechnic Institute; Hendrix College; Instituto de Fisica, Universidad Nacional Autonoma de Mexico; Cockcroft Institute of Accelerator Science and Technology

We propose a new precision measurement of parity-violating electron scattering on the proton at very low $Q^2$ and forward angles to challenge predictions of the Standard Model and search for new physics. A unique opportunity exists to carry out the first precision measurement of the proton's weak charge, $Q_W^p = 1 - 4\sin^2\theta_W$. A 2200-hour measurement of the parity-violating asymmetry in elastic $ep$ scattering at $Q^2 = 0.03\,(\mathrm{GeV}/c)^2$, employing 180 μA of 85% polarized beam on a 35 cm liquid hydrogen target, will determine the proton's weak charge with approximately 4% combined statistical and systematic uncertainty. The Standard Model makes a firm prediction of $Q_W^p$, based on the running of the weak mixing angle from the $Z^0$ pole down to low energies, corresponding to a 10σ effect in this experiment.
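The tree-level relation $Q_W^p = 1 - 4\sin^2\theta_W$ gives $|d\sin^2\theta_W / dQ_W^p| = 1/4$. Because $Q_W^p$ is small, a percent-level measurement of it translates into a sub-percent determination of $\sin^2\theta_W$.

Below is a minimal sketch of that propagation. The input $Q_W^p \approx 0.0710$ and the low-energy value $\sin^2\theta_W \approx 0.24$ are illustrative assumptions, and radiative corrections are ignored.

```python
def weak_charge_tree(sin2_theta_w):
    """Tree-level proton weak charge Q_W^p = 1 - 4 sin^2(theta_W)."""
    return 1.0 - 4.0 * sin2_theta_w


def sin2_uncertainty(qw_uncertainty):
    """Propagate an absolute uncertainty on Q_W^p to sin^2(theta_W);
    at tree level |d sin^2 / d Q_W| = 1/4."""
    return qw_uncertainty / 4.0
```

With a 4% uncertainty on $Q_W^p \approx 0.071$, `sin2_uncertainty(0.04 * 0.0710)` gives about 0.0007. That is roughly 0.3% of $\sin^2\theta_W \approx 0.24$.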

02 Sep 2013

George Washington University; University of Zagreb; MIT; University of Connecticut; University of Virginia; University of Manitoba; Mississippi State University; TRIUMF; University of Adelaide; University of Winnipeg; University of New Hampshire; Thomas Jefferson National Accelerator Facility; Christopher Newport University; College of William and Mary; Ohio University; Louisiana Tech University; Hampton University; Idaho State University; University of Northern British Columbia; Virginia Polytechnic Institute & State University; A. I. Alikhanyan National Science Laboratory; Hendrix College; Southern University at New Orleans

MITUniversity of Connecticut

MITUniversity of Connecticut University of VirginiaUniversity of ManitobaMississippi State UniversityTRIUMFUniversity of AdelaideUniversity of WinnipegUniversity of New HampshireThomas Jefferson National Accelerator FacilityChristopher Newport UniversityCollege of William and MaryOhio UniversityLouisiana Tech UniversityHampton UniversityIdaho State UniversityUniversity of Northern British ColumbiaVirginia Polytechnic Institute & State UniversityA. I. Alikhanyan National Science LaboratoryHendrix CollegeSouthern University at New Orleans

The Qweak experiment has measured the parity-violating asymmetry in polarized e-p elastic scattering at $Q^2 = 0.025$ (GeV/c)$^2$, using 145 μA of 89% longitudinally polarized electrons on a 34.4 cm liquid hydrogen target at Jefferson Lab. We report the results of the experiment's commissioning run, which constitutes approximately 4% of the data collected in the experiment. From these initial results, the measured asymmetry is $A_{ep} = -279 \pm 35$ (stat) $\pm 31$ (syst) ppb, the smallest and most precise asymmetry ever measured in polarized e-p scattering. The small $Q^2$ of this experiment made possible the first determination of the weak charge of the proton, $Q_W^p$, by incorporating earlier parity-violating electron scattering (PVES) data at higher $Q^2$ to constrain hadronic corrections. The value obtained in this way is $Q_W^p(\mathrm{PVES}) = 0.064 \pm 0.012$, in good agreement with the Standard Model prediction $Q_W^p(\mathrm{SM}) = 0.0710 \pm 0.0007$. When this result is further combined with the Cs atomic parity violation (APV) measurement, significant constraints on the weak charges of the up and down quarks can also be extracted. That PVES+APV analysis yields the neutron's weak charge, $Q_W^n(\mathrm{PVES+APV}) = -0.975 \pm 0.010$.
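The role of the small $Q^2$ can be illustrated with the leading-order forward-angle expansion often written for this kind of measurement:

$A_{ep}/A_0 = Q_W^p + Q^2 B(Q^2, \theta)$, with $A_0 = -G_F Q^2/(4\pi\alpha\sqrt{2})$.

Here $B$ collects the hadronic structure that the higher-$Q^2$ PVES data constrain. The following are our assumptions for illustration: this expansion, the numerical values of $G_F$ and $\alpha$, and the helper names `a0_ppb` and `hadronic_term`. The sketch is not the collaboration's fit. It plugs the commissioning numbers from the abstract into the expansion to show how much of the reduced asymmetry must come from the $Q^2 B$ term.

```python
import math

# Assumed constants (not given in the abstract).
G_F = 1.1663787e-5       # Fermi constant in GeV^-2
ALPHA = 1 / 137.035999   # fine-structure constant at Q^2 -> 0


def a0_ppb(q2_gev2: float) -> float:
    """Leading-order normalization A_0 = -G_F Q^2 / (4 pi alpha sqrt(2)), in ppb."""
    a0 = -G_F * q2_gev2 / (4 * math.pi * ALPHA * math.sqrt(2))
    return a0 * 1e9


def hadronic_term(a_ep_ppb: float, q2_gev2: float, qw_p: float) -> float:
    """Hypothetical helper: the Q^2*B implied by A_ep/A_0 = Q_W^p + Q^2*B."""
    reduced = a_ep_ppb / a0_ppb(q2_gev2)
    return reduced - qw_p


# Commissioning-run numbers quoted in the abstract.
Q2 = 0.025        # (GeV/c)^2
A_EP = -279.0     # ppb
QW_PVES = 0.064

if __name__ == "__main__":
    print(f"A_0        = {a0_ppb(Q2):.1f} ppb")
    print(f"A_ep / A_0 = {A_EP / a0_ppb(Q2):.4f}")
    print(f"Q^2 * B    = {hadronic_term(A_EP, Q2, QW_PVES):.4f}")
```

With these inputs, $A_0$ is roughly $-2250$ ppb and the implied $Q^2 B$ is about 0.06, comparable in size to $Q_W^p$ itself. This is why an independent handle on $B$ from higher-$Q^2$ data is needed.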

20 May 2019

George Washington University; University of Zagreb; MIT; William & Mary; University of Connecticut; Syracuse University; University of Virginia; University of Manitoba; Mississippi State University; TRIUMF; University of Adelaide; University of Winnipeg; University of New Hampshire; Thomas Jefferson National Accelerator Facility; Christopher Newport University; Virginia Polytechnic Institute and State University; Ohio University; Louisiana Tech University; Hampton University; Idaho State University; University of Northern British Columbia; Hendrix College; Southern University at New Orleans; A. I. Alikhanyan National Science Laboratory, Yerevan Physics Institute

The fields of particle and nuclear physics have undertaken extensive programs to search for evidence of physics beyond that explained by current theories. The observation of the Higgs boson at the Large Hadron Collider completed the set of particles predicted by the Standard Model (SM), currently the best description of fundamental particles and forces. However, the theory's limitations include a failure to predict fundamental parameters and the inability to account for dark matter/energy, gravity, and the matter-antimatter asymmetry in the universe, among other phenomena. Given the lack of additional particles found so far through direct searches in the post-Higgs era, indirect searches using precise measurements of well-predicted SM observables allow highly targeted alternative tests for physics beyond the SM. Indirect searches have the potential to reach mass/energy scales beyond those directly accessible by today's high-energy accelerators. A measurement of the weak charge of the proton, $Q_W^p$, is an example of such an indirect search, as $Q_W^p$ sets the strength of the proton's interaction with particles via the well-predicted neutral electroweak force. Parity symmetry (invariance under spatial inversion $(x, y, z) \to (-x, -y, -z)$) is violated only in the weak interaction, which provides a unique tool to isolate the weak interaction in order to measure the proton's weak charge.
Here we report $Q_W^p = 0.0719 \pm 0.0045$, as extracted from our measured parity-violating (PV) polarized electron-proton scattering asymmetry, $A_{ep} = -226.5 \pm 9.3$ ppb. Our value of $Q_W^p$ is in excellent agreement with the SM and sets multi-TeV-scale constraints on any semi-leptonic PV physics not described within the SM.
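"Good" and "excellent" agreement can be made quantitative with a simple pull: the difference between measurement and prediction divided by their combined uncertainty. The sketch below makes two assumptions:

- The uncertainties are independent and Gaussian, so they add in quadrature.
- The SM prediction is $0.0710 \pm 0.0007$, as quoted in the 2013 Qweak commissioning abstract on this page.

The newer analysis may use an updated SM value, so the numbers are indicative only. The `pull` helper is a hypothetical name.

```python
import math


def pull(measured: float, sigma_meas: float, predicted: float, sigma_pred: float) -> float:
    """Hypothetical helper: (measured - predicted) in units of the quadrature-combined uncertainty."""
    return (measured - predicted) / math.hypot(sigma_meas, sigma_pred)


# SM prediction as quoted in the 2013 Qweak commissioning abstract.
QW_SM, SIGMA_SM = 0.0710, 0.0007

results = {
    "2013 commissioning (PVES fit)": (0.064, 0.012),
    "full data set": (0.0719, 0.0045),
}

if __name__ == "__main__":
    for label, (value, sigma) in results.items():
        print(f"{label}: {pull(value, sigma, QW_SM, SIGMA_SM):+.2f} sigma, "
              f"relative precision {sigma / value:.1%}")
```

Both values come out well within one standard deviation of the prediction: about −0.6σ for the commissioning result and about +0.2σ for the full data set. Meanwhile the relative precision improves from roughly 19% to about 6%.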

28 Aug 2024

This paper investigates the performance of various Border Gateway Protocol (BGP) security policies against multiple attack scenarios under different deployment strategies. Through extensive simulations, we evaluate the effectiveness of defensive mechanisms such as Route Origin Validation (ROV), Autonomous System Provider Authorization (ASPA), and PeerROV across distinct Autonomous System (AS) deployment types. Our findings highlight the strengths and limitations of current BGP security measures and provide guidance for future policy development and implementation.
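For readers unfamiliar with ROV, the core check is the prefix-origin validation procedure of RFC 6811, which sorts a route into one of three states:

- **NotFound:** no ROA covers its prefix.
- **Valid:** some covering ROA names its origin AS with a `maxLength` at least as long as the announced prefix.
- **Invalid:** otherwise.

The sketch below implements only that classification, using Python's standard `ipaddress` module. The `Roa` class, `make_roa`, and `validate` are hypothetical names. The sketch does not model ASPA, PeerROV, or the filtering policy (such as dropping Invalid routes) that the simulations in the paper may apply.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass
from typing import Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


@dataclass(frozen=True)
class Roa:
    """Hypothetical ROA record: authorized prefix, maxLength, and origin AS."""
    prefix: Network
    max_length: int
    asn: int


def make_roa(prefix: str, max_length: int, asn: int) -> Roa:
    return Roa(ipaddress.ip_network(prefix), max_length, asn)


def validate(route: str, origin_as: int, roas: list[Roa]) -> str:
    """Classify a (prefix, origin AS) pair as 'Valid', 'Invalid' or 'NotFound'."""
    net = ipaddress.ip_network(route)
    # Covering ROAs: same address family and the ROA prefix contains the route prefix.
    covering = [
        roa for roa in roas
        if roa.prefix.version == net.version and net.subnet_of(roa.prefix)
    ]
    if not covering:
        return "NotFound"
    for roa in covering:
        # A ROA matches if it names this origin AS and the announced prefix is
        # no more specific than maxLength. AS 0 ROAs never authorize an origin.
        if roa.asn != 0 and roa.asn == origin_as and net.prefixlen <= roa.max_length:
            return "Valid"
    return "Invalid"


if __name__ == "__main__":
    roas = [make_roa("192.0.2.0/24", 24, 64500)]
    print(validate("192.0.2.0/24", 64500, roas))     # → Valid (authorized origin)
    print(validate("192.0.2.0/24", 64501, roas))     # → Invalid (wrong origin AS)
    print(validate("192.0.2.128/25", 64500, roas))   # → Invalid (longer than maxLength)
    print(validate("198.51.100.0/24", 64500, roas))  # → NotFound (no covering ROA)
```

The example uses documentation prefixes and private-use AS numbers. The /25 case shows why `maxLength` matters: a more-specific announcement from the legitimate AS number is still Invalid. ROV therefore blocks naive sub-prefix hijacks, although it cannot detect a forged origin AS in an otherwise valid path.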

16 Jun 2022

Carnegie Mellon University; Indiana University; University of Ljubljana; Argonne National Laboratory; University of Zagreb; University of Massachusetts Amherst; Virginia Tech; Shandong University; William & Mary; University of Connecticut; Temple University; Syracuse University; University of Virginia; Old Dominion University; University of Manitoba; Istituto Nazionale di Fisica Nucleare; Mississippi State University; Jozef Stefan Institute; University of Winnipeg; Thomas Jefferson National Accelerator Facility; Christopher Newport University; Ohio University; Louisiana Tech University; INFN Sezione di Roma; Hampton University; Idaho State University; California State University, Los Angeles; A. I. Alikhanyan National Science Laboratory; Johannes Gutenberg Universität Mainz; Stony Brook, State University of New York; Center for Frontiers in Nuclear Science; Veer Kunwar Singh University; Texas A&M University, Kingsville

We report a precise measurement of the parity-violating asymmetry $A_{PV}$ in the elastic scattering of longitudinally polarized electrons from $^{48}$Ca. We measure $A_{PV} = 2668 \pm 106$ (stat) $\pm 40$ (syst) parts per billion, leading to an extraction of the neutral weak form factor $F_W(q = 0.8733~\mathrm{fm}^{-1}) = 0.1304 \pm 0.0052$ (stat) $\pm 0.0020$ (syst) and the charge minus the weak form factor $F_{ch} - F_W = 0.0277 \pm 0.0055$. The resulting neutron skin thickness $R_n - R_p = 0.121 \pm 0.026$ (exp) $\pm 0.024$ (model) fm is relatively thin yet consistent with many model calculations. The combined CREX and PREX results will have implications for future energy density functional calculations and for the density dependence of the symmetry energy of nuclear matter.
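As a quick check on the quoted precision, the separate uncertainty components can be combined into a single total. The sketch below adds them in quadrature, which assumes the components are independent. That assumption is ours; the collaboration may treat the experimental and model uncertainties on $R_n - R_p$ differently. The `combine` helper is a hypothetical name.

```python
import math


def combine(*errors: float) -> float:
    """Hypothetical helper: add independent uncertainty components in quadrature."""
    return math.sqrt(sum(e * e for e in errors))


# (central value, uncertainty components) as quoted in the abstract.
quantities = {
    "A_PV [ppb]": (2668.0, (106.0, 40.0)),
    "F_W(q = 0.8733 fm^-1)": (0.1304, (0.0052, 0.0020)),
    "R_n - R_p [fm]": (0.121, (0.026, 0.024)),
}

if __name__ == "__main__":
    for name, (value, parts) in quantities.items():
        total = combine(*parts)
        print(f"{name}: {value} +/- {total:.4g} ({total / value:.1%})")
```

This gives about ±113 ppb (4.2%) on $A_{PV}$, about ±0.0056 (4.3%) on $F_W$, and about ±0.035 fm (29%) on $R_n - R_p$. So although the skin thickness is extracted from a roughly 4% asymmetry measurement, it carries a much larger relative uncertainty.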
