Max Planck Computing and Data Facility

15 Sep 2025

Elliptic equations play a crucial role in turbulence models for magnetic confinement fusion. Regardless of the chosen modeling approach - whether gyrokinetic, gyrofluid, or drift-fluid - the Poisson equation and Ampère's law lead to elliptic problems that must be solved on 2D planes perpendicular to the magnetic field. In this work, we present an efficient solver for such generalised elliptic problems, especially suited for the conditions in the boundary region. A finite difference discretisation is employed, and the solver is based on a flexible generalised minimal residual method (fGMRES) with a geometric multigrid preconditioner. We present implementations with OpenMP parallelisation and GPU acceleration, with backends in CUDA and HIP. On the node level, significant speed-ups are achieved with the GPU implementation, exceeding external library solutions such as rocALUTION. In accordance with theoretical scaling laws for multigrid methods, we observe linear scaling of the solver with problem size, O(N). This solver is implemented in the PARALLAX/PAccX libraries and serves as a central component of the plasma boundary turbulence codes GRILLIX and GENE-X.

Graph neural networks (GNN) have shown promising results for several domains such as materials science, chemistry, and the social sciences. GNN models often contain millions of parameters, and like other neural network (NN) models, are often fed only a fraction of the graphs that make up the training dataset in batches to update model parameters. The effect of batching algorithms on training time and model performance has been thoroughly explored for NNs but not yet for GNNs. We analyze two different batching algorithms for graph based models, namely static and dynamic batching for two datasets, the QM9 dataset of small molecules and the AFLOW materials database. Our experiments show that changing the batching algorithm can provide up to a 2.7x speedup, but the fastest algorithm depends on the data, model, batch size, hardware, and number of training steps run. Experiments show that for a select number of combinations of batch size, dataset, and model, significant differences in model learning metrics are observed between static and dynamic batching algorithms.

10 Jun 2024

Three-dimensional dust density maps are crucial for understanding the

structure of the interstellar medium of the Milky Way and the processes that

shape it. However, constructing these maps requires large datasets and the

methods used to analyse them are computationally expensive and difficult to

scale up. As a result it is has only recently become possible to map

kiloparsec-scale regions of our Galaxy at parsec-scale grid sampling. We

present all-sky three-dimensional dust density and extinction maps of the Milky

Way out to 2.8~kpc in distance from the Sun using the fast and scalable

Gaussian Process algorithm \DustT. The sampling of the three-dimensional map is

l,b,d=1∘×1∘×1.7~pc. The input extinction and

distance catalogue contains 120 million stars with photometry and astrometry

from Gaia DR2, 2MASS and AllWISE. This combines the strengths of optical and

infrared data to probe deeper into the dusty regions of the Milky Way. We

compare our maps with other published 3D dust maps. All maps quantitatively

agree at the 0.001~mag~pc−1 scale with many qualitatively similar

features, although each map also has its own features. We recover Galactic

features previously identified in the literature. Moreover, we also see a large

under-density that may correspond to an inter-arm or -spur gap towards the

Galactic Centre.

The segmentation of cells and neurites in microscopy images of neuronal networks provides valuable quantitative information about neuron growth and neuronal differentiation, including the number of cells, neurites, neurite length and neurite orientation. This information is essential for assessing the development of neuronal networks in response to extracellular stimuli, which is useful for studying neuronal structures, for example, the study of neurodegenerative diseases and pharmaceuticals. However, automatic and accurate analysis of neuronal structures from phase contrast images has remained challenging. To address this, we have developed NeuroQuantify, an open-source software that uses deep learning to efficiently and quickly segment cells and neurites in phase contrast microscopy images. NeuroQuantify offers several key features: (i) automatic detection of cells and neurites; (ii) post-processing of the images for the quantitative neurite length measurement based on segmentation of phase contrast microscopy images, and (iii) identification of neurite orientations. The user-friendly NeuroQuantify software can be installed and freely downloaded from GitHub this https URL.

03 Nov 2018

The solution of (generalized) eigenvalue problems for symmetric or Hermitian

matrices is a common subtask of many numerical calculations in electronic

structure theory or materials science. Solving the eigenvalue problem can

easily amount to a sizeable fraction of the whole numerical calculation. For

researchers in the field of computational materials science, an efficient and

scalable solution of the eigenvalue problem is thus of major importance. The

ELPA-library is a well-established dense direct eigenvalue solver library,

which has proven to be very efficient and scalable up to very large core

counts. In this paper, we describe the latest optimizations of the ELPA-library

for new HPC architectures of the Intel Skylake processor family with an AVX-512

SIMD instruction set, or for HPC systems accelerated with recent GPUs. We also

describe a complete redesign of the API in a modern modular way, which, apart

from a much simpler and more flexible usability, leads to a new path to access

system-specific performance optimizations. In order to ensure optimal

performance for a particular scientific setting or a specific HPC system, the

new API allows the user to influence in straightforward way the internal

details of the algorithms and of performance-critical parameters used in the

ELPA-library. On top of that, we introduced an autotuning functionality, which

allows for finding the best settings in a self-contained automated way. In

situations where many eigenvalue problems with similar settings have to be

solved consecutively, the autotuning process of the ELPA-library can be done

"on-the-fly". Practical applications from materials science which rely on

so-called self-consistency iterations can profit from the autotuning. On some

examples of scientific interest, simulated with the FHI-aims application, the

advantages of the latest optimizations of the ELPA-library are demonstrated.

12 Jan 2025

Data compression plays a key role in reducing storage and I/O costs. Traditional lossy methods primarily target data on rectilinear grids and cannot leverage the spatial coherence in unstructured mesh data, leading to suboptimal compression ratios. We present a multi-component, error-bounded compression framework designed to enhance the compression of floating-point unstructured mesh data, which is common in scientific applications. Our approach involves interpolating mesh data onto a rectilinear grid and then separately compressing the grid interpolation and the interpolation residuals. This method is general, independent of mesh types and typologies, and can be seamlessly integrated with existing lossy compressors for improved performance. We evaluated our framework across twelve variables from two synthetic datasets and two real-world simulation datasets. The results indicate that the multi-component framework consistently outperforms state-of-the-art lossy compressors on unstructured data, achieving, on average, a 2.3−3.5× improvement in compression ratios, with error bounds ranging from \num1e−6 to \num1e−2. We further investigate the impact of hyperparameters, such as grid spacing and error allocation, to deliver optimal compression ratios in diverse datasets.

10 Oct 2024

Three-dimensional simulations usually fail to cover the entire dynamical common-envelope phase of gravitational wave progenitor systems due to the vast range of spatial and temporal scales involved. We investigated the common-envelope interactions of a 10M⊙ red supergiant primary star with a black hole and a neutron star companion, respectively, until full envelope ejection (≳97% of the envelope mass). We find that the dynamical plunge-in of the systems determines largely the orbital separations of the core binary system, while the envelope ejection by recombination acts only at later stages of the evolution and fails to harden the core binaries down to orbital frequencies where they qualify as progenitors of gravitational-wave-emitting double-compact object mergers. As opposed to the conventional picture of a spherically symmetric envelope ejection, our simulations show a new mechanism: The rapid plunge-in of the companion transforms the spherical morphology of the giant primary star into a disk-like structure. During this process, magnetic fields are amplified, and the subsequent transport of material through the disk around the core binary system drives a fast jet-like outflow in the polar directions. While most of the envelope material is lost through a recombination-driven wind from the outer edge of the disk, about 7% of the envelope leaves the system via the magnetically driven outflows. We further explored the potential evolutionary pathways of the post-common-envelope systems given the expected remaining lifetime of the primary core (2.97M⊙) until core collapse (6×104yr), most likely forming a neutron star. We find that the interaction of the core binary system with the circumbinary disk increases the likelihood of giving rise to a double-neutron star merger. (abridged)

Modern HPC systems are increasingly relying on greater core counts and wider vector registers. Thus, applications need to be adapted to fully utilize these hardware capabilities. One class of applications that can benefit from this increase in parallelism are molecular dynamics simulations. In this paper, we describe our efforts at modernizing the ESPResSo++ molecular dynamics simulation package by restructuring its particle data layout for efficient memory accesses and applying vectorization techniques to benefit the calculation of short-range non-bonded forces, which results in an overall three times speedup and serves as a baseline for further optimizations. We also implement fine-grained parallelism for multi-core CPUs through HPX, a C++ runtime system which uses lightweight threads and an asynchronous many-task approach to maximize concurrency. Our goal is to evaluate the performance of an HPX-based approach compared to the bulk-synchronous MPI-based implementation. This requires the introduction of an additional layer to the domain decomposition scheme that defines the task granularity. On spatially inhomogeneous systems, which impose a corresponding load-imbalance in traditional MPI-based approaches, we demonstrate that by choosing an optimal task size, the efficient work-stealing mechanisms of HPX can overcome the overhead of communication resulting in an overall 1.4 times speedup compared to the baseline MPI version.

21 Aug 2020

Chinese Academy of Sciences

Chinese Academy of Sciences University of Maryland, College Park

University of Maryland, College Park Peking University

Peking University Columbia UniversityThe Hebrew UniversityUniversity of Heidelberg

Columbia UniversityThe Hebrew UniversityUniversity of Heidelberg Princeton UniversityRIKEN Center for Computational ScienceMax Planck Institute for AstrophysicsNicolaus Copernicus Astronomical Centre, Polish Academy of SciencesKavli Institute for Astronomy and AstrophysicsMax Planck Computing and Data FacilityArgelander-Institut für AstronomieMain Astronomical Observatory of Ukrainian National Academy of Sciences

Princeton UniversityRIKEN Center for Computational ScienceMax Planck Institute for AstrophysicsNicolaus Copernicus Astronomical Centre, Polish Academy of SciencesKavli Institute for Astronomy and AstrophysicsMax Planck Computing and Data FacilityArgelander-Institut für AstronomieMain Astronomical Observatory of Ukrainian National Academy of SciencesYoung dense massive star clusters are a promising environment for the formation of intermediate mass black holes (IMBHs) through collisions. We present a set of 80 simulations carried out with Nbody6++GPU of 10 initial conditions for compact ∼7×104M⊙ star clusters with half-mass radii Rh≲1pc, central densities ρcore≳105M⊙pc−3, and resolved stellar populations with 10\% primordial binaries. Very massive stars (VMSs) with masses up to ∼400M⊙ grow rapidly by binary exchange and three-body scattering events with main sequences stars in hard binaries. Assuming that in VMS - stellar BH collisions all stellar material is accreted onto the BH, IMBHs with masses up to MBH∼350M⊙ can form on timescales of ≲15 Myr. This process was qualitatively predicted from Monte Carlo MOCCA simulations. Despite the stochastic nature of the process - typically not more than 3/8 cluster realisations show IMBH formation - we find indications for higher formation efficiencies in more compact clusters. Assuming a lower accretion fraction of 0.5 for VMS - BH collisions, IMBHs can also form. The process might not work for accretion fractions as low as 0.1. After formation, the IMBHs can experience occasional mergers with stellar mass BHs in intermediate mass-ratio inspiral events (IMRIs) on a 100 Myr timescale. Realised with more than 105 stars, 10 \% binaries, the assumed stellar evolution model with all relevant evolution processes included and 300 Myr simulation time, our large suite of simulations indicates that IMBHs of several hundred solar masses might form rapidly in massive star clusters right after their birth while they are still compact.

University of TorontoHeidelberg University

University of TorontoHeidelberg University Monash University

Monash University Imperial College LondonTechnical University of Berlin

Imperial College LondonTechnical University of Berlin Northwestern University

Northwestern University University of Arizona

University of Arizona Duke University

Duke University Technical University of MunichCardiff UniversityHumboldt-Universität zu BerlinMax Planck Institute for the Structure and Dynamics of MatterEcole Polytechnique Fédérale de LausanneMax Planck Institute for the Physics of Complex SystemsMax Planck Institute for Polymer ResearchThe Barcelona Institute of Science and TechnologyFritz-Haber-Institut der Max-Planck-GesellschaftMax-Planck Institute for InformaticsMax Planck Institute for Dynamics of Complex Technical SystemsSTFC Hartree CentreMax Planck Computing and Data FacilityDaresbury LaboratoryHelmholtz AIMolecular Simulations from First Principles e.V.Max Planck Institute of Colloids and InterfacesMax-Planck-Institut für Eisenforschung GmbHLenovo HPC Innovation CenterFriedrich-Alexander Universität, Erlangen-NürnbergICFO

Institut de Ciencies FotoniquesThe NOMAD LaboratoryMax Planck Centre on the Fundamentals of Heterogeneous Catalysis (FUNCAT)

Technical University of MunichCardiff UniversityHumboldt-Universität zu BerlinMax Planck Institute for the Structure and Dynamics of MatterEcole Polytechnique Fédérale de LausanneMax Planck Institute for the Physics of Complex SystemsMax Planck Institute for Polymer ResearchThe Barcelona Institute of Science and TechnologyFritz-Haber-Institut der Max-Planck-GesellschaftMax-Planck Institute for InformaticsMax Planck Institute for Dynamics of Complex Technical SystemsSTFC Hartree CentreMax Planck Computing and Data FacilityDaresbury LaboratoryHelmholtz AIMolecular Simulations from First Principles e.V.Max Planck Institute of Colloids and InterfacesMax-Planck-Institut für Eisenforschung GmbHLenovo HPC Innovation CenterFriedrich-Alexander Universität, Erlangen-NürnbergICFO

Institut de Ciencies FotoniquesThe NOMAD LaboratoryMax Planck Centre on the Fundamentals of Heterogeneous Catalysis (FUNCAT)Science is and always has been based on data, but the terms "data-centric" and the "4th paradigm of" materials research indicate a radical change in how information is retrieved, handled and research is performed. It signifies a transformative shift towards managing vast data collections, digital repositories, and innovative data analytics methods. The integration of Artificial Intelligence (AI) and its subset Machine Learning (ML), has become pivotal in addressing all these challenges. This Roadmap on Data-Centric Materials Science explores fundamental concepts and methodologies, illustrating diverse applications in electronic-structure theory, soft matter theory, microstructure research, and experimental techniques like photoemission, atom probe tomography, and electron microscopy. While the roadmap delves into specific areas within the broad interdisciplinary field of materials science, the provided examples elucidate key concepts applicable to a wider range of topics. The discussed instances offer insights into addressing the multifaceted challenges encountered in contemporary materials research.

Understanding plasma instabilities is essential for achieving sustainable

fusion energy, with large-scale plasma simulations playing a crucial role in

both the design and development of next-generation fusion energy devices and

the modelling of industrial plasmas. To achieve sustainable fusion energy, it

is essential to accurately model and predict plasma behavior under extreme

conditions, requiring sophisticated simulation codes capable of capturing the

complex interaction between plasma dynamics, magnetic fields, and material

surfaces. In this work, we conduct a comprehensive HPC analysis of two

prominent plasma simulation codes, BIT1 and JOREK, to advance understanding of

plasma behavior in fusion energy applications. Our focus is on evaluating

JOREK's computational efficiency and scalability for simulating non-linear MHD

phenomena in tokamak fusion devices. The motivation behind this work stems from

the urgent need to advance our understanding of plasma instabilities in

magnetically confined fusion devices. Enhancing JOREK's performance on

supercomputers improves fusion plasma code predictability, enabling more

accurate modelling and faster optimization of fusion designs, thereby

contributing to sustainable fusion energy. In prior studies, we analysed BIT1,

a massively parallel Particle-in-Cell (PIC) code for studying plasma-material

interactions in fusion devices. Our investigations into BIT1's computational

requirements and scalability on advanced supercomputing architectures yielded

valuable insights. Through detailed profiling and performance analysis, we have

identified the primary bottlenecks and implemented optimization strategies,

significantly enhancing parallel performance. This previous work serves as a

foundation for our present endeavours.

Stellar mergers are one important path to highly magnetised stars. Mergers of two low-mass white dwarfs may create up to every third hot subdwarf star. The merging process is usually assumed to dramatically amplify magnetic fields. However, so far only four highly magnetised hot subdwarf stars have been found, suggesting a fraction of less than 1%.

We present two high-resolution magnetohydrodynamical (MHD) simulations of the merger of two helium white dwarfs in a binary system with the same total mass of 0.6M⊙. We analysed an equal-mass merger with two 0.3M⊙ white dwarfs, and an unequal-mass merger with white dwarfs of 0.25M⊙ and 0.35M⊙. We simulated the inspiral, merger, and further evolution of the merger remnant for about 50 rotations.

We found efficient magnetic field amplification in both mergers via a small-scale dynamo, reproducing previous results of stellar merger simulations. The magnetic field saturates at a similar strength for both simulations.

We then identified a second phase of magnetic field amplification in both merger remnants that happens on a timescale of several tens of rotational periods of the merger remnant. This phase generates a large-scale ordered azimuthal field via a large-scale dynamo driven by the magneto-rotational instability.

Finally, we speculate that in the unequal-mass merger remnant, helium burning will initially start in a shell around a cold core, rather than in the centre. This forms a convection zone that coincides with the region that contains most of the magnetic energy, and likely destroys the strong, ordered field. Ohmic resistivity might then quickly erase the remaining small-scale field. Therefore, the mass ratio of the initial merger could be the selecting factor that decides if a merger remnant will stay highly magnetised long after the merger.

The computational power of High-Performance Computing (HPC) systems is

constantly increasing, however, their input/output (IO) performance grows

relatively slowly, and their storage capacity is also limited. This unbalance

presents significant challenges for applications such as Molecular Dynamics

(MD) and Computational Fluid Dynamics (CFD), which generate massive amounts of

data for further visualization or analysis. At the same time, checkpointing is

crucial for long runs on HPC clusters, due to limited walltimes and/or failures

of system components, and typically requires the storage of large amount of

data. Thus, restricted IO performance and storage capacity can lead to

bottlenecks for the performance of full application workflows (as compared to

computational kernels without IO). In-situ techniques, where data is further

processed while still in memory rather to write it out over the I/O subsystem,

can help to tackle these problems. In contrast to traditional post-processing

methods, in-situ techniques can reduce or avoid the need to write or read data

via the IO subsystem. They offer a promising approach for applications aiming

to leverage the full power of large scale HPC systems. In-situ techniques can

also be applied to hybrid computational nodes on HPC systems consisting of

graphics processing units (GPUs) and central processing units (CPUs). On one

node, the GPUs would have significant performance advantages over the CPUs.

Therefore, current approaches for GPU-accelerated applications often focus on

maximizing GPU usage, leaving CPUs underutilized. In-situ tasks using CPUs to

perform data analysis or preprocess data concurrently to the running

simulation, offer a possibility to improve this underutilization.

25 May 2016

A new parallel equilibrium reconstruction code for tokamak plasmas is

presented. GPEC allows to compute equilibrium flux distributions sufficiently

accurate to derive parameters for plasma control within 1 ms of runtime which

enables real-time applications at the ASDEX Upgrade experiment (AUG) and other

machines with a control cycle of at least this size. The underlying algorithms

are based on the well-established offline-analysis code CLISTE, following the

classical concept of iteratively solving the Grad-Shafranov equation and

feeding in diagnostic signals from the experiment. The new code adopts a hybrid

parallelization scheme for computing the equilibrium flux distribution and

extends the fast, shared-memory-parallel Poisson solver which we have described

previously by a distributed computation of the individual Poisson problems

corresponding to different basis functions. The code is based entirely on

open-source software components and runs on standard server hardware and

software environments. The real-time capability of GPEC is demonstrated by

performing an offline-computation of a sequence of 1000 flux distributions

which are taken from one second of operation of a typical AUG discharge and

deriving the relevant control parameters with a time resolution of a

millisecond. On current server hardware the new code allows employing a grid

size of 32x64 zones for the spatial discretization and up to 15 basis

functions. It takes into account about 90 diagnostic signals while using up to

4 equilibrium iterations and computing more than 20 plasma-control parameters,

including the computationally expensive safety-factor q on at least 4 different

levels of the normalized flux.

20 Sep 2024

We report the first ab initio, non-relativistic QED method that couples light

and matter self-consistently beyond the electric dipole approximation and

without multipolar truncations. This method is based on an extension of the

Maxwell-Pauli-Kohn-Sham approach to a full minimal coupling Hamiltonian, where

the space- and time-dependent vector potential is coupled to the matter system,

and its back-reaction to the radiated fields is generated by the full current

density. The implementation in the open-source Octopus code is designed for

massively-parallel multiscale simulations considering different grid spacings

for the Maxwell and matter subsystems. Here, we show the first applications of

this framework to simulate renormalized Cherenkov radiation of an electronic

wavepacket, magnetooptical effects with non-chiral light in non-chiral

molecular systems, and renormalized plasmonic modes in a nanoplasmonic dimer.

We show that in some cases the beyond-dipole effects can not be captured by a

multipolar expansion Hamiltonian in the length gauge. Finally, we discuss

further opportunities enabled by the framework in the field of twisted light

and orbital angular momentum, inelastic light scattering and strong field

physics.

The high-performance computing (HPC) community has recently seen a

substantial diversification of hardware platforms and their associated

programming models. From traditional multicore processors to highly specialized

accelerators, vendors and tool developers back up the relentless progress of

those architectures. In the context of scientific programming, it is

fundamental to consider performance portability frameworks, i.e., software

tools that allow programmers to write code once and run it on different

computer architectures without sacrificing performance. We report here on the

benefits and challenges of performance portability using a field-line tracing

simulation and a particle-in-cell code, two relevant applications in

computational plasma physics with applications to magnetically-confined

nuclear-fusion energy research. For these applications we report performance

results obtained on four HPC platforms with server-class CPUs from Intel (Xeon)

and AMD (EPYC), and high-end GPUs from Nvidia and AMD, including the latest

Nvidia H100 GPU and the novel AMD Instinct MI300A APU. Our results show that

both Kokkos and OpenMP are powerful tools to achieve performance portability

and decent "out-of-the-box" performance, even for the very latest hardware

platforms. For our applications, Kokkos provided performance portability to the

broadest range of hardware architectures from different vendors.

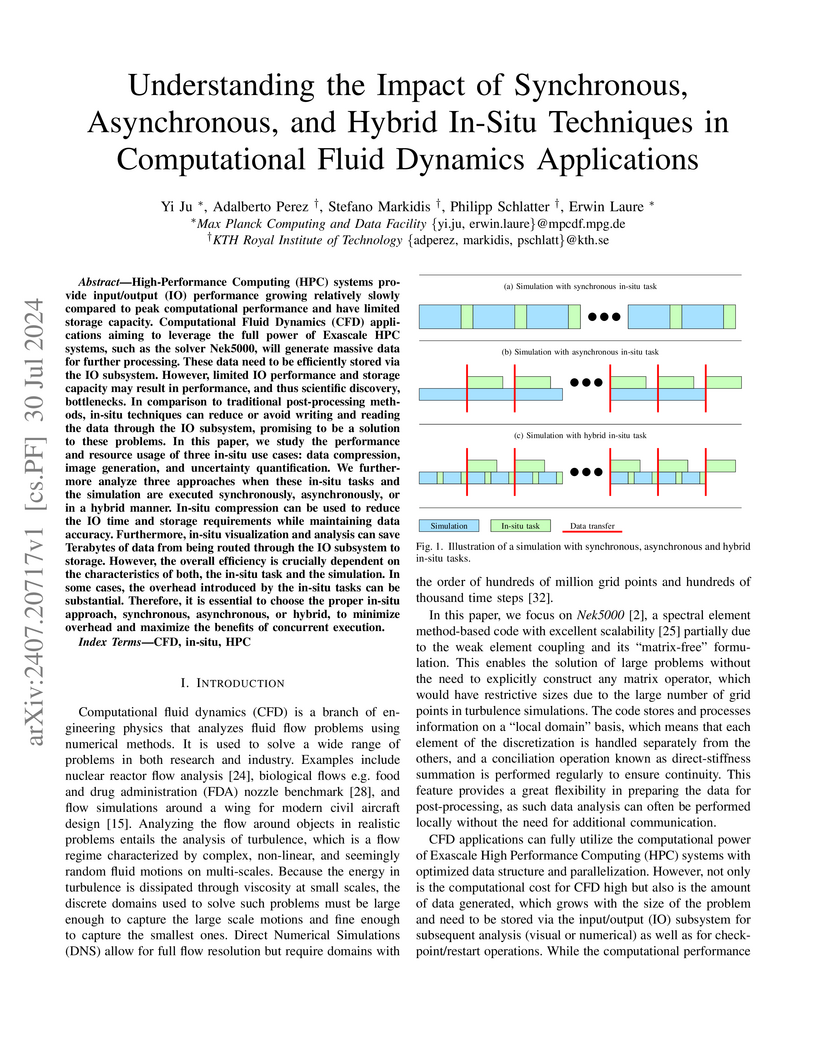

High-Performance Computing (HPC) systems provide input/output (IO) performance growing relatively slowly compared to peak computational performance and have limited storage capacity. Computational Fluid Dynamics (CFD) applications aiming to leverage the full power of Exascale HPC systems, such as the solver Nek5000, will generate massive data for further processing. These data need to be efficiently stored via the IO subsystem. However, limited IO performance and storage capacity may result in performance, and thus scientific discovery, bottlenecks. In comparison to traditional post-processing methods, in-situ techniques can reduce or avoid writing and reading the data through the IO subsystem, promising to be a solution to these problems. In this paper, we study the performance and resource usage of three in-situ use cases: data compression, image generation, and uncertainty quantification. We furthermore analyze three approaches when these in-situ tasks and the simulation are executed synchronously, asynchronously, or in a hybrid manner. In-situ compression can be used to reduce the IO time and storage requirements while maintaining data accuracy. Furthermore, in-situ visualization and analysis can save Terabytes of data from being routed through the IO subsystem to storage. However, the overall efficiency is crucially dependent on the characteristics of both, the in-situ task and the simulation. In some cases, the overhead introduced by the in-situ tasks can be substantial. Therefore, it is essential to choose the proper in-situ approach, synchronous, asynchronous, or hybrid, to minimize overhead and maximize the benefits of concurrent execution.

In this contribution, we give an overview of the ELPA library and ELSI interface, which are crucial elements for large-scale electronic structure calculations in FHI-aims.

ELPA is a key solver library that provides efficient solutions for both standard and generalized eigenproblems, which are central to the Kohn-Sham formalism in density functional theory (DFT). It supports CPU and GPU architectures, with full support for NVIDIA and AMD GPUs, and ongoing development for Intel GPUs. Here we also report the results of recent optimizations, leading to significant improvements in GPU performance for the generalized eigenproblem.

ELSI is an open-source software interface layer that creates a well-defined connection between "user" electronic structure codes and "solver" libraries for the Kohn-Sham problem, abstracting the step between Hamilton and overlap matrices (as input to ELSI and the respective solvers) and eigenvalues and eigenvectors or density matrix solutions (as output to be passed back to the "user" electronic structure code). In addition to ELPA, ELSI supports solvers including LAPACK and MAGMA, the PEXSI and NTPoly libraries (which bypass an explicit eigenvalue solution), and several others.

27 Feb 2025

SISSO (sure-independence screening and sparsifying operator) is an artificial

intelligence (AI) method based on symbolic regression and compressed sensing

widely used in materials science research. SISSO++ is its C++ implementation

that employs MPI and OpenMP for parallelization, rendering it well-suited for

high-performance computing (HPC) environments. As heterogeneous hardware

becomes mainstream in the HPC and AI fields, we chose to port the SISSO++ code

to GPUs using the Kokkos performance-portable library. Kokkos allows us to

maintain a single codebase for both Nvidia and AMD GPUs, significantly reducing

the maintenance effort. In this work, we summarize the necessary code changes

we did to achieve hardware and performance portability. This is accompanied by

performance benchmarks on Nvidia and AMD GPUs. We demonstrate the speedups

obtained from using GPUs across the three most time-consuming parts of our

code.

Recent three-dimensional magnetohydrodynamical simulations of the

common-envelope interaction revealed the self-consistent formation of bipolar

magnetically driven outflows launched from a toroidal structure resembling a

circumbinary disk. So far, the dynamical impact of bipolar outflows on the

common-envelope phase remains uncertain and we aim to quantify its importance.

We illustrate the impact on common-envelope evolution by comparing two

simulations -- one with magnetic fields and one without -- using the

three-dimensional moving-mesh hydrodynamics code AREPO. We focus on the

specific case of a 10M⊙ red supergiant star with a 5M⊙ black

hole companion. By the end of the magnetohydrodynamic simulations (after $\sim

1220orbitsofthecorebinarysystem),about6.4 \%$ of the envelope mass is

ejected via the bipolar outflow, contributing to angular momentum extraction

from the disk structure and core binary. The resulting enhanced torques reduce

the final orbital separation by about 24% compared to the hydrodynamical

scenario, while the overall envelope ejection remains dominated by

recombination-driven equatorial winds. We analyze field amplification and

outflow launching mechanisms, confirming consistency with earlier studies:

magnetic fields are amplified by shear flows, and outflows are launched by a

magneto-centrifugal process, supported by local shocks and magnetic pressure

gradients. These outflows originate from ∼1.1 times the orbital

separation. We conclude that the magnetically driven outflows and their role in

the dynamical interaction are a universal aspect, and we further propose an

adaptation of the αCE-formalism by adjusting the final orbital

energy with a factor of 1+Mout/μ, where Mout is the

mass ejected through the outflows and μ the reduced mass of the core

binary. (abridged)

There are no more papers matching your filters at the moment.