Max Planck Institute of Molecular Cell Biology and Genetics

We provide a comprehensive overview of meshfree collocation methods for numerically approximating differential operators on continuously labeled unstructured point clouds. Meshfree collocation methods do not require a computational grid or mesh. Instead, they approximate smooth functions and their derivatives at potentially irregularly distributed collocation points, often called particles, to a desired order of consistency. We review several meshfree collocation methods from the literature, trace the historical development of key concepts, and propose a classification of methods according to their principle of derivation. Although some of the methods reviewed are similar or identical, there are subtle yet important differences between many, which we highlight and discuss. We present a unifying formulation of meshfree collocation methods that renders these differences apparent and show how each method can be derived from this formulation. Finally, we propose a generalized derivation for meshfree collocation methods going forward.
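As a rough illustration of the idea (a minimal sketch, not any specific method from the review), the derivative of a function sampled at irregular points can be estimated by fitting a local Taylor polynomial in the least-squares sense. The function name `collocation_derivative`, the stencil size, and the polynomial order are assumptions made for this example.

```python
import numpy as np


def collocation_derivative(x_nodes, f_values, x0, order=2, n_neighbors=7):
    """Estimate f'(x0) from scattered 1D samples by a local least-squares fit."""
    # Use the n_neighbors collocation points closest to x0 as the stencil.
    idx = np.argsort(np.abs(x_nodes - x0))[:n_neighbors]
    dx = x_nodes[idx] - x0
    h = np.max(np.abs(dx))  # local length scale, keeps the fit well conditioned
    # Columns are the scaled Taylor monomials (dx / h)**k for k = 0..order.
    basis = np.vander(dx / h, N=order + 1, increasing=True)
    coeffs, *_ = np.linalg.lstsq(basis, f_values[idx], rcond=None)
    # The coefficient of the linear monomial equals h * f'(x0).
    return coeffs[1] / h


rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0.0, 1.0, 200))  # irregularly spaced points
estimate = collocation_derivative(x, np.sin(x), x0=0.5)
print(estimate, np.cos(0.5))  # estimate next to the exact derivative
```

With a quadratic basis the estimate is exact for polynomials up to degree two, which is one way to read "order of consistency" in this setting.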

We establish a large deviation principle (LDP) for probability graphons, which are symmetric functions from the unit square into the space of probability measures. This notion extends classical graphons and provides a flexible framework for studying the limit behavior of large dense weighted graphs. In particular, our result generalizes the seminal work of Chatterjee and Varadhan (2011), who derived an LDP for Erdős-Rényi random graphs via graphon theory. We move beyond their binary (Bernoulli) setting to encompass arbitrary edge-weight distributions. Specifically, we analyze the distribution on probability graphons induced by random weighted graphs in which edges are sampled independently from a common reference probability measure supported on a compact Polish space. We prove that this distribution satisfies an LDP with a good rate function, expressed as an extension of the Kullback-Leibler divergence between probability graphons and the reference measure. This theorem can also be viewed as a Sanov-type result in the graphon setting. Our work provides a rigorous foundation for analyzing rare events in weighted networks and supports statistical inference in structured random graph models under distributional edge uncertainty.

Mammalian tissue architecture is central to biological function, and its disruption is a hallmark of disease. Medical imaging techniques can generate large point cloud datasets that capture changes in the cellular composition of such tissues with disease progression. However, regions of interest (ROIs) are usually defined by quadrat-based methods that ignore intrinsic structure and risk fragmenting meaningful features. Here, we introduce TopROI, a topology-informed, network-based method for partitioning point clouds into ROIs that preserves both local geometry and higher-order architecture. TopROI integrates geometry-informed networks with persistent homology, combining cell neighbourhoods and multiscale cycles to guide community detection. Applied to synthetic point clouds that mimic glandular structure, TopROI outperforms quadrat-based and purely geometric partitions by maintaining biologically plausible ROI geometry and better preserving ground-truth structures. Applied to cellular point clouds obtained from human colorectal cancer biopsies, TopROI generates ROIs that preserve crypt-like structures and enable persistent homology analysis of individual regions. This study reveals a continuum of architectural changes from healthy mucosa to carcinoma, reflecting progressive disorganisation in tissue structure. TopROI thus provides a principled and flexible framework for defining biologically meaningful ROIs in large point clouds, enabling more accurate quantification of tissue organization and new insights into structural changes associated with disease progression.

Across development, the morphology of fluid-filled lumina enclosed by epithelial tissues arises from an interplay of lumen pressure, mechanics of the cell cortex, and cell-cell adhesion. Here, we explore the mechanical basis for the control of this interplay using the shape space of MDCK cysts and the instability of their apical surfaces under tight junction perturbations [Mukenhirn et al., Dev. Cell 59, 2886 (2024)]. We discover that the cysts respond to these perturbations by significantly modulating their lateral and basal tensions, in addition to the known modulations of pressure and apical belt tension. We develop a mean-field three-dimensional vertex model of these cysts that reproduces the experimental shape instability quantitatively. This reveals that the observed increase of lateral contractility is a cellular response that counters the instability. Our work thus shows how regulation of the mechanics of all cell surfaces conspires to control lumen morphology.

28 Aug 2025

Efficient information processing is crucial for both living organisms and engineered systems. The mutual information rate, a core concept of information theory, quantifies the amount of information shared between the trajectories of input and output signals, and enables the quantification of information flow in dynamic systems. A common approach for estimating the mutual information rate is the Gaussian approximation which assumes that the input and output trajectories follow Gaussian statistics. However, this method is limited to linear systems, and its accuracy in nonlinear or discrete systems remains unclear. In this work, we assess the accuracy of the Gaussian approximation for non-Gaussian systems by leveraging Path Weight Sampling (PWS), a recent technique for exactly computing the mutual information rate. In two case studies, we examine the limitations of the Gaussian approximation. First, we focus on discrete linear systems and demonstrate that, even when the system's statistics are nearly Gaussian, the Gaussian approximation fails to accurately estimate the mutual information rate. Second, we explore a continuous diffusive system with a nonlinear transfer function, revealing significant deviations between the Gaussian approximation and the exact mutual information rate as nonlinearity increases. Our results provide a quantitative evaluation of the Gaussian approximation's performance across different stochastic models and highlight when more computationally intensive methods, such as PWS, are necessary.
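For orientation, the Gaussian approximation can be sketched for trajectories sampled at a finite number of time points: under a joint-Gaussian assumption the mutual information follows from covariance determinants, and dividing by the trajectory duration gives a rate estimate. This is a textbook formula, not the paper's code. The function name `gaussian_mutual_information` and the toy data are assumptions of this example.

```python
import numpy as np


def gaussian_mutual_information(x_samples, y_samples):
    """Mutual information in nats, assuming (X, Y) is jointly Gaussian.

    Rows are independent realizations; columns are time points of a trajectory.
    """
    nx = x_samples.shape[1]
    # Joint covariance of the stacked input and output trajectories.
    cov = np.cov(np.hstack([x_samples, y_samples]), rowvar=False)
    _, logdet_xx = np.linalg.slogdet(cov[:nx, :nx])
    _, logdet_yy = np.linalg.slogdet(cov[nx:, nx:])
    _, logdet_joint = np.linalg.slogdet(cov)
    # I(X;Y) = 0.5 * ln(det C_xx * det C_yy / det C)
    return 0.5 * (logdet_xx + logdet_yy - logdet_joint)


rng = np.random.default_rng(1)
x_in = rng.normal(size=(200_000, 1))         # toy input, one time point
y_out = x_in + rng.normal(size=(200_000, 1))  # output = input + unit noise
mi = gaussian_mutual_information(x_in, y_out)
print(mi, 0.5 * np.log(2.0))  # estimate next to the exact value for this toy case
```

For non-Gaussian statistics this estimator is in general only an approximation, which is the regime the abstract investigates.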

CNRS; Harvard University; Sorbonne Université; Institut Curie; Max Planck Institute of Molecular Cell Biology and Genetics; Center for Systems Biology Dresden; Max Planck Institute for the Physics of Complex Systems; Dresden University of Technology; Université Paris Sciences et Lettres; Physics of Life Cluster of Excellence

We present a topology grounded, multiscale simulation platform for morphogenesis and biological active matter. Morphogenesis and biological active matter represent keystone problems in biology with additional, far-reaching implications across the biomedical sciences. Addressing these problems will require flexible, cross-scale models of tissue shape, development, and dysfunction that can be tuned to understand, model, and predict relevant individual cases. Current approaches to simulating anatomical or cellular subsystems tend to rely on static, assumed shapes. Meanwhile, the potential for topology to provide natural dimensionality reduction and organization of shape and dynamical outcomes is not fully exploited. TopoSPAM combines ease of use with powerful simulation algorithms and methodological advances, including active nematic gels, topological-defect-driven shape dynamics, and an active 3D vertex model of tissues. It is capable of determining emergent flows and shapes across scales.

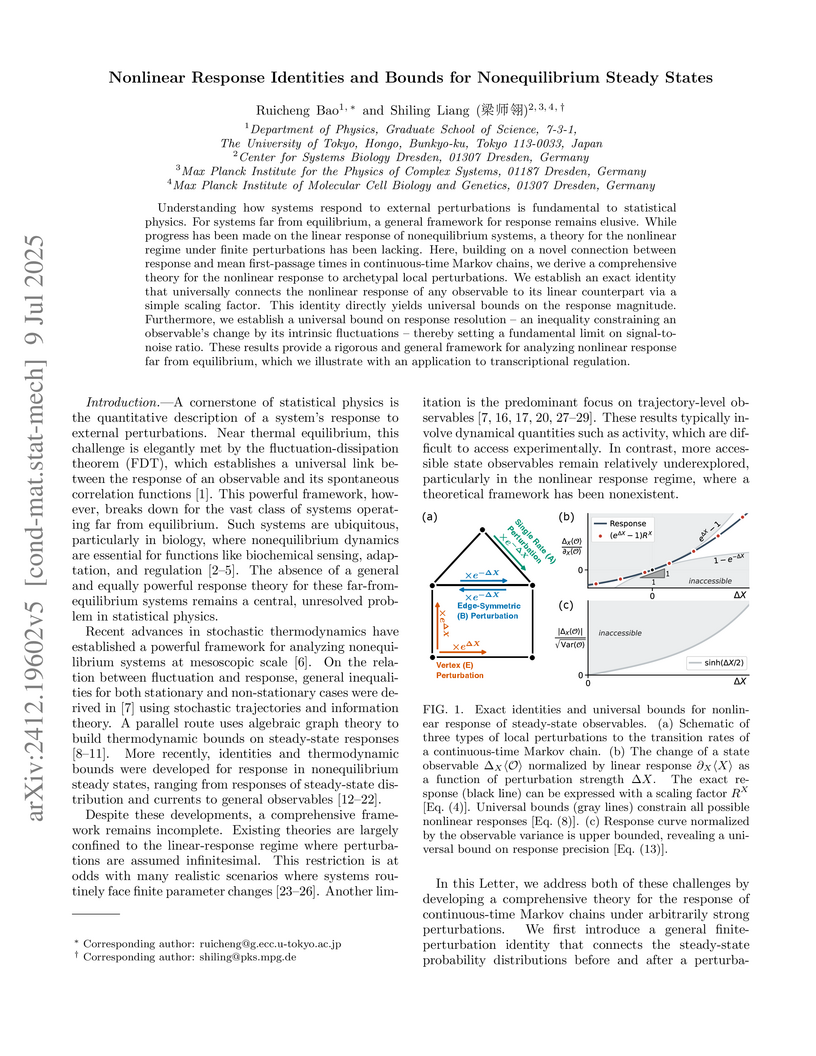

Understanding how systems respond to external perturbations is fundamental to statistical physics. For systems far from equilibrium, a general framework for response remains elusive. While progress has been made on the linear response of nonequilibrium systems, a theory for the nonlinear regime under finite perturbations has been lacking. Here, building on a novel connection between response and mean first-passage times in continuous-time Markov chains, we derive a comprehensive theory for the nonlinear response to archetypal local perturbations. We establish an exact identity that universally connects the nonlinear response of any observable to its linear counterpart via a simple scaling factor. This identity directly yields universal bounds on the response magnitude. Furthermore, we establish a universal bound on response resolution -- an inequality constraining an observable's change by its intrinsic fluctuations -- thereby setting a fundamental limit on signal-to-noise ratio. These results provide a rigorous and general framework for analyzing nonlinear response far from equilibrium, which we illustrate with an application to transcriptional regulation.

We introduce the Push-Forward Signed Distance Morphometric (PF-SDM), a novel method for shape quantification in biomedical imaging that is continuous, interpretable, and invariant to shape-preserving transformations. PF-SDM effectively captures the geometric properties of shapes, including their topological skeletons and radial symmetries. This results in a robust and interpretable shape descriptor that generalizes to capture temporal shape dynamics. Importantly, PF-SDM avoids certain issues of previous geometric morphometrics, like Elliptical Fourier Analysis and Generalized Procrustes Analysis, such as coefficient correlations and landmark choices. We present the PF-SDM theory, provide a practically computable algorithm, and benchmark it on synthetic data.

Bio-image analysis is challenging due to inhomogeneous intensity distributions and high levels of noise in the images. Bayesian inference provides a principled way for regularizing the problem using prior knowledge. A fundamental choice is how one measures "distances" between shapes in an image. It has been shown that the straightforward geometric L2 distance is degenerate and leads to pathological situations. This is avoided when using Sobolev gradients, rendering the segmentation problem less ill-posed. The high computational cost and implementation overhead of Sobolev gradients, however, have hampered practical applications. We show how particle methods as applied to image segmentation allow for a simple and computationally efficient implementation of Sobolev gradients. We show that the evaluation of Sobolev gradients amounts to particle-particle interactions along the contour in an image. We extend an existing particle-based segmentation algorithm to using Sobolev gradients. Using synthetic and real-world images, we benchmark the results for both 2D and 3D images using piecewise smooth and piecewise constant region models. The present particle approximation of Sobolev gradients is 2.8 to 10 times faster than the previous reference implementation, but retains the known favorable properties of Sobolev gradients. This speedup is achieved by using local particle-particle interactions instead of solving a global Poisson equation at each iteration. The computational time per iteration is higher for Sobolev gradients than for L2 gradients. Since Sobolev gradients precondition the optimization problem, however, a smaller number of overall iterations may be necessary for the algorithm to converge, which can in some cases amortize the higher per-iteration cost.

Dynamical systems with polynomial right-hand sides are very important in various applications, e.g., in biochemistry and population dynamics. The mathematical study of these dynamical systems is challenging due to the possibility of multistability, oscillations, and chaotic dynamics. One important tool for this study is the concept of reaction systems, which are dynamical systems generated by reaction networks for some choices of parameter values. Among these, disguised toric systems are remarkably stable: they have a unique attracting fixed point, and cannot give rise to oscillations or chaotic dynamics. The computation of the set of parameter values for which a network gives rise to disguised toric systems (i.e., the disguised toric locus of the network) is an important but difficult task. We introduce new ideas based on network fluxes for studying the disguised toric locus. We prove that the disguised toric locus of any network G is a contractible manifold with boundary, and introduce an associated graph Gmax that characterizes its interior. These theoretical tools allow us, for the first time, to compute the full disguised toric locus for many networks of interest.

Automatic detection and segmentation of objects in 2D and 3D microscopy data is important for countless biomedical applications. In the natural image domain, spatial embedding-based instance segmentation methods are known to yield high-quality results, but their utility for segmenting microscopy data is currently little researched. Here we introduce EmbedSeg, an embedding-based instance segmentation method which outperforms existing state-of-the-art baselines on 2D as well as 3D microscopy datasets. Additionally, we show that EmbedSeg has a GPU memory footprint small enough to train even on laptop GPUs, making it accessible to virtually everyone. Finally, we introduce four new 3D microscopy datasets, which we make publicly available alongside ground truth training labels. Our open-source implementation is available at this https URL.

30 Nov 2016

Max Planck Institute of Molecular Cell Biology and Genetics; Lipotype GmbH; German Center for Diabetes Research (DZD e.V.); Paul Langerhans Institute Dresden of the Helmholtz Centre Munich at the University Clinic Carl Gustav Carus, TU Dresden; Medical Clinic and Polyclinic I, University Hospital TU Dresden

The pathogenesis and progression of many tumors, including hematologic malignancies, is highly dependent on enhanced lipogenesis. De novo fatty-acid synthesis permits accelerated proliferation of tumor cells by providing structural components to build the membranes. It may also lead to alterations of physicochemical properties of the formed membranes, which can have an impact on signaling or even increase resistance to drugs in cancer cells. Cancer type-specific lipid profiles would allow understanding the actual effects of lipid changes and therefore could potentially serve as fingerprints for individual tumors and be explored as diagnostic markers. We have used a shotgun MS approach to identify lipid patterns in different types of acute myeloid leukemia (AML) patients that either show no karyotype changes or belong to t(8;21) or inv(16) types. The observed differences in lipidomes of t(8;21) and inv(16) patients, as compared to AML patients without karyotype changes, concentrate mostly on substantial modulation of ceramide/sphingolipid synthesis. Significant changes in the physicochemical properties of the membranes were also noted between the t(8;21) patients and the other patients; these were related to a marked alteration in the saturation levels of lipids. The revealed differences in lipid profiles of various AML types increase our understanding of the affected biochemical pathways and can potentially serve as diagnostic tools.

Rensselaer Polytechnic Institute; Leipzig University; UCLA; UC Berkeley; University College London; Boston University; Technische Universität Dresden; University of Maryland; Stockholm University; King’s College London; Uppsala University; Oak Ridge National Laboratory; The University of Queensland; Chalmers University of Technology; University of Wisconsin–Madison; University of Tennessee; Institute of Physics of the Czech Academy of Sciences; Max Planck Institute of Molecular Cell Biology and Genetics; Helmholtz-Zentrum Dresden-Rossendorf; University of Gothenburg; Institute of Science and Technology Austria; Technion – IIT; Roche Diagnostics GmbH; Albany Medical College; Salk Institute for Biological Studies; Universitat de Vic – Universitat Central de Catalunya; Artificial Intelligence for Life Sciences CIC; Cherry Biotech SAS; Elephas; Université Franche-Comté

Through digital imaging, microscopy has evolved from primarily being a means for visual observation of life at the micro- and nano-scale, to a quantitative tool with ever-increasing resolution and throughput. Artificial intelligence, deep neural networks, and machine learning are all niche terms describing computational methods that have gained a pivotal role in microscopy-based research over the past decade. This Roadmap is written collectively by prominent researchers and encompasses selected aspects of how machine learning is applied to microscopy image data, with the aim of gaining scientific knowledge by improved image quality, automated detection, segmentation, classification and tracking of objects, and efficient merging of information from multiple imaging modalities. We aim to give the reader an overview of the key developments and an understanding of possibilities and limitations of machine learning for microscopy. It will be of interest to a wide cross-disciplinary audience in the physical sciences and life sciences.

University of Oxford; Technische Universität Dresden; Queen Mary University of London; Max Planck Institute of Molecular Cell Biology and Genetics; Centre de Recherches Mathématiques et Institut des sciences mathématiques, Laboratoire de combinatoire et d’informatique mathématique de l’Université du Québec à Montréal; Centre for Systems Biology; Université de Sherbrooke

Classical unsupervised learning methods like clustering and linear dimensionality reduction parametrize large-scale geometry when it is discrete or linear, while more modern methods from manifold learning find low dimensional representation or infer local geometry by constructing a graph on the input data. More recently, topological data analysis popularized the use of simplicial complexes to represent data topology with two main methodologies: topological inference with geometric complexes and large-scale topology visualization with Mapper graphs -- central to these is the nerve construction from topology, which builds a simplicial complex given a cover of a space by subsets. While successful, these have limitations: geometric complexes scale poorly with data size, and Mapper graphs can be hard to tune and only contain low dimensional information. In this paper, we propose to study the problem of learning covers in its own right, and from the perspective of optimization. We describe a method for learning topologically-faithful covers of geometric datasets, and show that the simplicial complexes thus obtained can outperform standard topological inference approaches in terms of size, and Mapper-type algorithms in terms of representation of large-scale topology.

22 Apr 2020

Life science today involves computational analysis of a large amount and variety of data, such as volumetric data acquired by state-of-the-art microscopes, or mesh data from analysis of such data or simulations. Visualization is often the first step in making sense of data, and a crucial part of building and debugging analysis pipelines. It is therefore important that visualizations can be quickly prototyped, as well as developed or embedded into full applications. In order to better judge spatiotemporal relationships, immersive hardware, such as Virtual or Augmented Reality (VR/AR) headsets and associated controllers are becoming invaluable tools. In this work we introduce scenery, a flexible VR/AR visualization framework for the Java VM that can handle mesh and large volumetric data, containing multiple views, timepoints, and color channels. scenery is free and open-source software, works on all major platforms, and uses the Vulkan or OpenGL rendering APIs. We introduce scenery's main features and example applications, such as its use in VR for microscopy, in the biomedical image analysis software Fiji, or for visualizing agent-based simulations.

How epithelial cells coordinate their polarity to form functional tissues is an open question in cell biology. Here, we characterize a unique type of polarity found in liver tissue, nematic cell polarity, which is different from vectorial cell polarity in simple, sheet-like epithelia. We propose a conceptual and algorithmic framework to characterize complex patterns of polarity proteins on the surface of a cell in terms of a multipole expansion. To rigorously quantify previously observed tissue-level patterns of nematic cell polarity (Morales-Navarrete et al., eLife 8:e44860, 2019), we introduce the concept of co-orientational order parameters, which generalize the known biaxial order parameters of the theory of liquid crystals. Applying these concepts to three-dimensional reconstructions of single cells from high-resolution imaging data of mouse liver tissue, we show that the axes of nematic cell polarity of hepatocytes exhibit local coordination and are aligned with the biaxially anisotropic sinusoidal network for blood transport. Our study characterizes liver tissue as a biological example of a biaxial liquid crystal. The general methodology developed here could be applied to other tissues or in-vitro organoids.
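As background for the order parameters mentioned above, the following sketch computes the standard uniaxial nematic order parameter from a set of cell axes using the Q-tensor of liquid-crystal theory. It is the textbook quantity, not the co-orientational order parameters introduced in the paper. The function name `nematic_order` and the synthetic axes are assumptions of this example.

```python
import numpy as np


def nematic_order(axes):
    """Return (S, director) for a set of 3D axes; n and -n count as the same axis."""
    axes = np.asarray(axes, dtype=float)
    axes = axes / np.linalg.norm(axes, axis=1, keepdims=True)
    # Q = < 3/2 n n^T - 1/2 I >, averaged over all axes; invariant under n -> -n.
    q = 1.5 * np.einsum("ni,nj->ij", axes, axes) / len(axes) - 0.5 * np.eye(3)
    eigenvalues, eigenvectors = np.linalg.eigh(q)  # eigenvalues in ascending order
    # The largest eigenvalue is taken as the scalar order parameter S,
    # and its eigenvector as the mean axis (director).
    return eigenvalues[-1], eigenvectors[:, -1]


aligned = [[0, 0, 1], [0, 0, -1], [0, 0, 2]]  # same axis up to sign and length
s_aligned, director = nematic_order(aligned)

rng = np.random.default_rng(2)
s_random, _ = nematic_order(rng.normal(size=(20_000, 3)))  # isotropic axes
print(s_aligned, s_random)
```

Perfect alignment gives S = 1 and isotropic axes give S close to 0. Oblate (disc-like) distributions, where the negative eigenvalue dominates, would need separate handling that this sketch omits.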

04 Oct 2016

We study the statistics of infima, stopping times and passage probabilities of entropy production in nonequilibrium steady states, and show that they are universal. We consider two examples of stopping times: first-passage times of entropy production and waiting times of stochastic processes, which are the times when a system reaches for the first time a given state. Our main results are: (i) the distribution of the global infimum of entropy production is exponential with mean equal to minus Boltzmann's constant; (ii) we find the exact expressions for the passage probabilities of entropy production to reach a given value; (iii) we derive a fluctuation theorem for stopping-time distributions of entropy production. These results have interesting implications for stochastic processes that can be discussed in simple colloidal systems and in active molecular processes. In particular, we show that the timing and statistics of discrete chemical transitions of molecular processes, such as the steps of molecular motors, are governed by the statistics of entropy production. We also show that the extreme-value statistics of active molecular processes are governed by entropy production; for example, the infimum of entropy production of a motor can be related to the maximal excursion of a motor against the direction of an external force. Using this relation, we make predictions for the distribution of the maximum backtrack depth of RNA polymerases, which follows from our universal results for entropy-production infima.

Technische Universität Dresden; Max Planck Institute of Molecular Cell Biology and Genetics; Center for Systems Biology Dresden; Cluster of Excellence, Physics of Life; Cluster of Excellence Physics of Life, Technische Universität Dresden; Center for Scalable Data Analytics and Artificial Intelligence ScaDS.AI

We present data structures and algorithms for native implementations of discrete convolution operators over Adaptive Particle Representations (APR) of images on parallel computer architectures. The APR is a content-adaptive image representation that locally adapts the sampling resolution to the image signal. It has been developed as an alternative to pixel representations for large, sparse images as they typically occur in fluorescence microscopy. It has been shown to reduce the memory and runtime costs of storing, visualizing, and processing such images. This, however, requires that image processing natively operates on APRs, without intermediately reverting to pixels. Designing efficient and scalable APR-native image processing primitives, however, is complicated by the APR's irregular memory structure. Here, we provide the algorithmic building blocks required to efficiently and natively process APR images using a wide range of algorithms that can be formulated in terms of discrete convolutions. We show that APR convolution naturally leads to scale-adaptive algorithms that efficiently parallelize on multi-core CPU and GPU architectures. We quantify the speedups in comparison to pixel-based algorithms and convolutions on evenly sampled data. We achieve pixel-equivalent throughputs of up to 1 TB/s on a single Nvidia GeForce RTX 2080 gaming GPU, requiring up to two orders of magnitude less memory than a pixel-based implementation.

University of Freiburg; Leibniz University Hannover; University of Goettingen; University of Stuttgart; LMU Munich; Louisiana State University; Technische Universität Dresden; Bielefeld University; Technical University of Munich; Eindhoven University of Technology; University of Potsdam; Ulm University; Humboldt-Universität zu Berlin; Technische Universität Braunschweig; Universität Würzburg; University of Münster; Technische Universität München; Federal Institute for Materials Research and Testing; University of Tennessee; Max Planck Institute of Molecular Cell Biology and Genetics; Linköping University; German Aerospace Center (DLR); Karlsruhe Institute of Technology (KIT); Friedrich-Schiller-University Jena; Universität Duisburg-Essen; Universität Bremen; European Molecular Biology Laboratory; FIZ Karlsruhe - Leibniz Institute for Information Infrastructure; Robert Koch Institute; Helmholtz Zentrum Munich; Deutsches Krebsforschungszentrum; Alfred Wegener Institute, Helmholtz Center for Polar and Marine Research Bremerhaven; Leibniz Institute of Agricultural Development in Transition Economies (IAMO); University Heart Centre Freiburg Bad Krozingen; Julius Kühn-Institut (JKI); Konrad-Zuse-Zentrum für Informationstechnik Berlin ZIB

Research software has become a central asset in academic research. It optimizes existing and enables new research methods, implements and embeds research knowledge, and constitutes an essential research product in itself. Research software must be sustainable in order to understand, replicate, reproduce, and build upon existing research or conduct new research effectively. In other words, software must be available, discoverable, usable, and adaptable to new needs, both now and in the future. Research software therefore requires an environment that supports sustainability. Hence, a change is needed in the way research software development and maintenance are currently motivated, incentivized, funded, structurally and infrastructurally supported, and legally treated. Failing to do so will threaten the quality and validity of research. In this paper, we identify challenges for research software sustainability in Germany and beyond, in terms of motivation, selection, research software engineering personnel, funding, infrastructure, and legal aspects. Besides researchers, we specifically address political and academic decision-makers to increase awareness of the importance and needs of sustainable research software practices. In particular, we recommend strategies and measures to create an environment for sustainable research software, with the ultimate goal to ensure that software-driven research is valid, reproducible and sustainable, and that software is recognized as a first class citizen in research. This paper is the outcome of two workshops run in Germany in 2019, at deRSE19 - the first International Conference of Research Software Engineers in Germany - and a dedicated DFG-supported follow-up workshop in Berlin.
