Microsoft Research New England

07 Sep 2025

FlexMDMs enable discrete diffusion models to generate sequences of variable lengths and perform token insertions by extending the stochastic interpolant framework with a novel joint interpolant for continuous-time Markov chains. The approach more accurately models length distributions, boosts planning task success rates by nearly 60%, and improves performance on math and code infilling tasks by up to 13% after efficient retrofitting of existing large-scale MDMs.

Adjoint Sampling presents a highly scalable and efficient method for generating samples from unnormalized distributions, such as those defined by energy functions. This approach significantly reduces the number of expensive energy evaluations needed per gradient update by utilizing a Reciprocal Adjoint Matching technique, enabling superior exploration of molecular conformer spaces.

17 Aug 2025

Solving stochastic optimal control problems with quadratic control costs can be viewed as approximating a target path space measure, e.g. via gradient-based optimization. In practice, however, this optimization is challenging in particular if the target measure differs substantially from the prior. In this work, we therefore approach the problem by iteratively solving constrained problems incorporating trust regions that aim for approaching the target measure gradually in a systematic way. It turns out that this trust region based strategy can be understood as a geometric annealing from the prior to the target measure, where, however, the incorporated trust regions lead to a principled and educated way of choosing the time steps in the annealing path. We demonstrate in multiple optimal control applications that our novel method can improve performance significantly, including tasks in diffusion-based sampling, transition path sampling, and fine-tuning of diffusion models.

Discrete diffusion has emerged as a powerful framework for generative modeling in discrete domains, yet efficiently sampling from these models remains challenging. Existing sampling strategies often struggle to balance computation and sample quality when the number of sampling steps is reduced, even when the model has learned the data distribution well. To address these limitations, we propose a predictor-corrector sampling scheme where the corrector is informed by the diffusion model to more reliably counter the accumulating approximation errors. To further enhance the effectiveness of our informed corrector, we introduce complementary architectural modifications based on hollow transformers and a simple tailored training objective that leverages more training signal. We use a synthetic example to illustrate the failure modes of existing samplers and show how informed correctors alleviate these problems. On the text8 and tokenized ImageNet 256x256 datasets, our informed corrector consistently produces superior samples with fewer errors or improved FID scores for discrete diffusion models. These results underscore the potential of informed correctors for fast and high-fidelity generation using discrete diffusion. Our code is available at this https URL.

Research demonstrates that large language models can internally identify their own hallucinated references using self-consistency checks, with larger models like GPT-4 showing a 46.8% hallucination rate and an ensemble detection AUC of 0.927. This work indicates that a model's 'knowledge' about a reference correlates with its ability to consistently recall associated details.

25 Sep 2019

We propose the orthogonal random forest, an algorithm that combines Neyman-orthogonality to reduce sensitivity with respect to estimation error of nuisance parameters with generalized random forests (Athey et al., 2017)--a flexible non-parametric method for statistical estimation of conditional moment models using random forests. We provide a consistency rate and establish asymptotic normality for our estimator. We show that under mild assumptions on the consistency rate of the nuisance estimator, we can achieve the same error rate as an oracle with a priori knowledge of these nuisance parameters. We show that when the nuisance functions have a locally sparse parametrization, then a local ℓ1-penalized regression achieves the required rate. We apply our method to estimate heterogeneous treatment effects from observational data with discrete treatments or continuous treatments, and we show that, unlike prior work, our method provably allows to control for a high-dimensional set of variables under standard sparsity conditions. We also provide a comprehensive empirical evaluation of our algorithm on both synthetic and real data.

Adaptive Bias Correction (ABC), a hybrid machine learning framework, enhances subseasonal forecasts by correcting persistent systematic errors in operational dynamical models. The method significantly boosts temperature and precipitation forecasting skill by 40-289% and identifies climate-dependent "windows of opportunity" for targeted deployment.

Gradient estimation -- approximating the gradient of an expectation with respect to the parameters of a distribution -- is central to the solution of many machine learning problems. However, when the distribution is discrete, most common gradient estimators suffer from excessive variance. To improve the quality of gradient estimation, we introduce a variance reduction technique based on Stein operators for discrete distributions. We then use this technique to build flexible control variates for the REINFORCE leave-one-out estimator. Our control variates can be adapted online to minimize variance and do not require extra evaluations of the target function. In benchmark generative modeling tasks such as training binary variational autoencoders, our gradient estimator achieves substantially lower variance than state-of-the-art estimators with the same number of function evaluations.

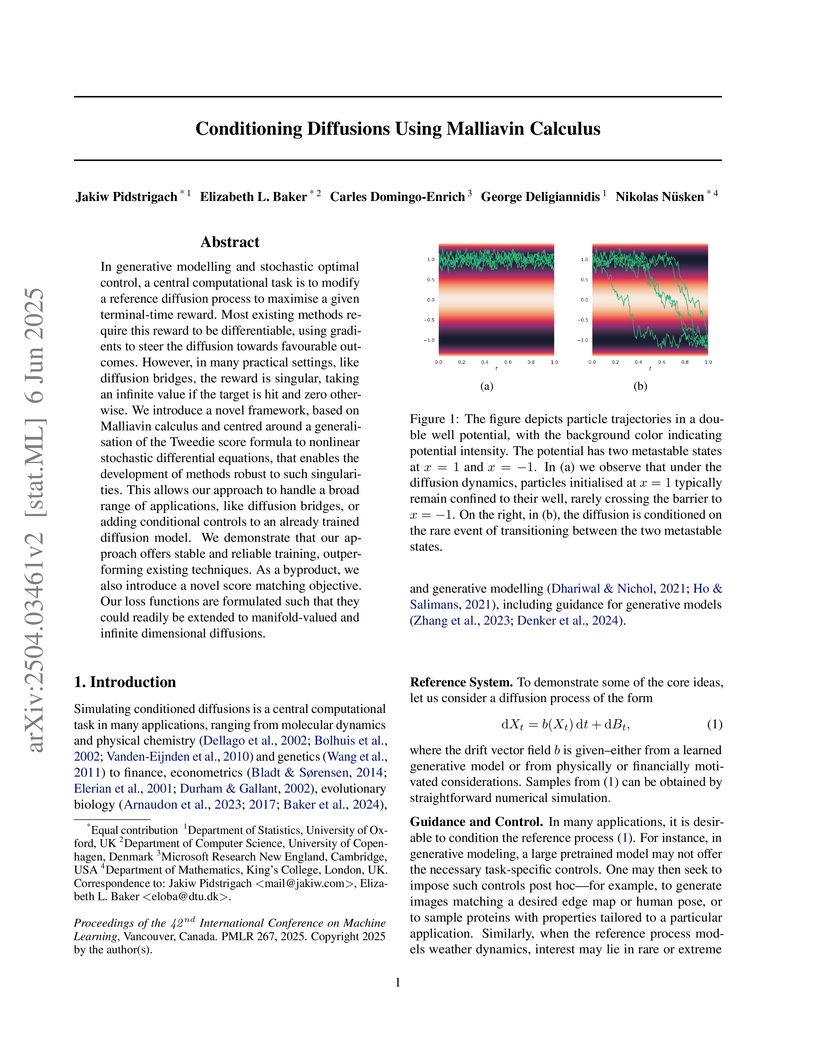

This paper introduces a framework for conditioning diffusion processes using Malliavin calculus to robustly handle singular or non-differentiable terminal-time reward functions. This approach enables precise, amortized conditional generation in diffusion models and accurate rare-event simulation, outperforming prior methods on complex tasks like stochastic shape processes and double-well potential transitions.

We describe a technique that automatically generates plausible depth maps

from videos using non-parametric depth sampling. We demonstrate our technique

in cases where past methods fail (non-translating cameras and dynamic scenes).

Our technique is applicable to single images as well as videos. For videos, we

use local motion cues to improve the inferred depth maps, while optical flow is

used to ensure temporal depth consistency. For training and evaluation, we use

a Kinect-based system to collect a large dataset containing stereoscopic videos

with known depths. We show that our depth estimation technique outperforms the

state-of-the-art on benchmark databases. Our technique can be used to

automatically convert a monoscopic video into stereo for 3D visualization, and

we demonstrate this through a variety of visually pleasing results for indoor

and outdoor scenes, including results from the feature film Charade.

25 Mar 2025

Permutation tests are a popular choice for distinguishing distributions and

testing independence, due to their exact, finite-sample control of false

positives and their minimax optimality when paired with U-statistics. However,

standard permutation tests are also expensive, requiring a test statistic to be

computed hundreds or thousands of times to detect a separation between

distributions. In this work, we offer a simple approach to accelerate testing:

group your datapoints into bins and permute only those bins. For U and

V-statistics, we prove that these cheap permutation tests have two remarkable

properties. First, by storing appropriate sufficient statistics, a cheap test

can be run in time comparable to evaluating a single test statistic. Second,

cheap permutation power closely approximates standard permutation power. As a

result, cheap tests inherit the exact false positive control and minimax

optimality of standard permutation tests while running in a fraction of the

time. We complement these findings with improved power guarantees for standard

permutation testing and experiments demonstrating the benefits of cheap

permutations over standard maximum mean discrepancy (MMD), Hilbert-Schmidt

independence criterion (HSIC), random Fourier feature, Wilcoxon-Mann-Whitney,

cross-MMD, and cross-HSIC tests.

Researchers introduced tuning-free and self-tuning online learning algorithms that integrate optimism to effectively handle delayed feedback, achieving minimax optimal regret rates. These algorithms demonstrated superior performance in subseasonal climate forecasting tasks, often outperforming the best single models over a decade.

01 Jun 2024

Traditionally, AI has been modeled within economics as a technology that

impacts payoffs by reducing costs or refining information for human agents. Our

position is that, in light of recent advances in generative AI, it is

increasingly useful to model AI itself as an economic agent. In our framework,

each user is augmented with an AI agent and can consult the AI prior to taking

actions in a game. The AI agent and the user have potentially different

information and preferences over the communication, which can result in

equilibria that are qualitatively different than in settings without AI.

Stochastic gradient-based optimisation for discrete latent variable models is challenging due to the high variance of gradients. We introduce a variance reduction technique for score function estimators that makes use of double control variates. These control variates act on top of a main control variate, and try to further reduce the variance of the overall estimator. We develop a double control variate for the REINFORCE leave-one-out estimator using Taylor expansions. For training discrete latent variable models, such as variational autoencoders with binary latent variables, our approach adds no extra computational cost compared to standard training with the REINFORCE leave-one-out estimator. We apply our method to challenging high-dimensional toy examples and training variational autoencoders with binary latent variables. We show that our estimator can have lower variance compared to other state-of-the-art estimators.

In several applications of real-time matching of demand to supply in online marketplaces, the platform allows for some latency to batch the demand and improve the efficiency. Motivated by these applications, we study the optimal trade-off between batching and inefficiency under adversarial arrival. As our base model, we consider K-stage variants of the vertex weighted b-matching in the adversarial setting, where online vertices arrive stage-wise and in K batches -- in contrast to online arrival. Our main result for this problem is an optimal (1-(1-1/K)^K)- competitive (fractional) matching algorithm, improving the classic (1-1/e) competitive ratio bound known for its online variant (Mehta et al., 2007; Aggarwal et al., 2011). We also extend this result to the rich model of multi-stage configuration allocation with free-disposals (Devanur et al., 2016), which is motivated by the display advertising in video streaming platforms.

Our main technique is developing tools to vary the trade-off between "greedy-ness" and "hedging" of the algorithm across stages. We rely on a particular family of convex-programming based matchings that distribute the demand in a specifically balanced way among supply in different stages, while carefully modifying the balancedness of the resulting matching across stages. More precisely, we identify a sequence of polynomials with decreasing degrees to be used as strictly concave regularizers of the maximum weight matching linear program to form these convex programs. At each stage, our algorithm returns the corresponding regularized optimal solution as the matching of this stage (by solving the convex program). Using structural properties of these convex programs and recursively connecting the regularizers together, we develop a new multi-stage primal-dual framework to analyze the competitive ratio. We further show this algorithm is optimally competitive.

07 Nov 2024

We prove that the kissing numbers in 17, 18, 19, 20, and 21 dimensions are at least 5730, 7654, 11692, 19448, and 29768, respectively. The previous records were set by Leech in 1967, and we improve on them by 384, 256, 1024, 2048, and 2048. Unlike the previous constructions, the new configurations are not cross sections of the Leech lattice minimal vectors. Instead, they are constructed by modifying the signs in the lattice vectors to open up more space for additional spheres.

21 Dec 2013

The sphere packing problem asks for the greatest density of a packing of

congruent balls in Euclidean space. The current best upper bound in all

sufficiently high dimensions is due to Kabatiansky and Levenshtein in 1978. We

revisit their argument and improve their bound by a constant factor using a

simple geometric argument, and we extend the argument to packings in hyperbolic

space, for which it gives an exponential improvement over the previously known

bounds. Additionally, we show that the Cohn-Elkies linear programming bound is

always at least as strong as the Kabatiansky-Levenshtein bound; this result is

analogous to Rodemich's theorem in coding theory. Finally, we develop

hyperbolic linear programming bounds and prove the analogue of Rodemich's

theorem there as well.

We provide the first finite-particle convergence rate for Stein variational gradient descent (SVGD), a popular algorithm for approximating a probability distribution with a collection of particles. Specifically, whenever the target distribution is sub-Gaussian with a Lipschitz score, SVGD with n particles and an appropriate step size sequence drives the kernel Stein discrepancy to zero at an order 1/sqrt(log log n) rate. We suspect that the dependence on n can be improved, and we hope that our explicit, non-asymptotic proof strategy will serve as a template for future refinements.

25 Jun 2022

Spike-and-slab priors are commonly used for Bayesian variable selection, due

to their interpretability and favorable statistical properties. However,

existing samplers for spike-and-slab posteriors incur prohibitive computational

costs when the number of variables is large. In this article, we propose

Scalable Spike-and-Slab (S3), a scalable Gibbs sampling implementation for

high-dimensional Bayesian regression with the continuous spike-and-slab prior

of George and McCulloch (1993). For a dataset with n observations and p

covariates, S3 has order max{n2pt,np} computational cost at

iteration t where pt never exceeds the number of covariates switching

spike-and-slab states between iterations t and t−1 of the Markov chain.

This improves upon the order n2p per-iteration cost of state-of-the-art

implementations as, typically, pt is substantially smaller than p. We

apply S3 on synthetic and real-world datasets, demonstrating orders of

magnitude speed-ups over existing exact samplers and significant gains in

inferential quality over approximate samplers with comparable cost.

Researchers introduced SubseasonalClimateUSA, a curated and regularly updated dataset designed for machine learning-based subseasonal weather forecasting in the contiguous U.S. Benchmarking efforts demonstrated that adaptive bias correction models consistently outperformed leading operational dynamical models and complex deep learning methods in predicting temperature and precipitation for weeks 3-6 ahead.

There are no more papers matching your filters at the moment.