Middle Tennessee State University

Large Language Models (LLMs) have demonstrated impressive capabilities across various tasks, but fine-tuning them for domain-specific applications often requires substantial domain-specific data that may be distributed across multiple organizations. Federated Learning (FL) offers a privacy-preserving solution, but faces challenges with computational constraints when applied to LLMs. Low-Rank Adaptation (LoRA) has emerged as a parameter-efficient fine-tuning approach, though a single LoRA module often struggles with heterogeneous data across diverse domains. This paper addresses two critical challenges in federated LoRA fine-tuning: 1. determining the optimal number and allocation of LoRA experts across heterogeneous clients, and 2. enabling clients to selectively utilize these experts based on their specific data characteristics. We propose FedLEASE (Federated adaptive LoRA Expert Allocation and SElection), a novel framework that adaptively clusters clients based on representation similarity to allocate and train domain-specific LoRA experts. It also introduces an adaptive top-M Mixture-of-Experts mechanism that allows each client to select the optimal number of utilized experts. Our extensive experiments on diverse benchmark datasets demonstrate that FedLEASE significantly outperforms existing federated fine-tuning approaches in heterogeneous client settings while maintaining communication efficiency.

One-step shortcut diffusion models [Frans, Hafner, Levine and Abbeel, ICLR 2025] have shown potential in vision generation, but their reliance on first-order trajectory supervision is fundamentally limited. The Shortcut model's simplistic velocity-only approach fails to capture intrinsic manifold geometry, leading to erratic trajectories, poor geometric alignment, and instability-especially in high-curvature regions. These shortcomings stem from its inability to model mid-horizon dependencies or complex distributional features, leaving it ill-equipped for robust generative modeling. In this work, we introduce HOMO (High-Order Matching for One-Step Shortcut Diffusion), a game-changing framework that leverages high-order supervision to revolutionize distribution transportation. By incorporating acceleration, jerk, and beyond, HOMO not only fixes the flaws of the Shortcut model but also achieves unprecedented smoothness, stability, and geometric precision. Theoretically, we prove that HOMO's high-order supervision ensures superior approximation accuracy, outperforming first-order methods. Empirically, HOMO dominates in complex settings, particularly in high-curvature regions where the Shortcut model struggles. Our experiments show that HOMO delivers smoother trajectories and better distributional alignment, setting a new standard for one-step generative models.

Diffusion Transformers have emerged as the preeminent models for a wide array

of generative tasks, demonstrating superior performance and efficacy across

various applications. The promising results come at the cost of slow inference,

as each denoising step requires running the whole transformer model with a

large amount of parameters. In this paper, we show that performing the full

computation of the model at each diffusion step is unnecessary, as some

computations can be skipped by lazily reusing the results of previous steps.

Furthermore, we show that the lower bound of similarity between outputs at

consecutive steps is notably high, and this similarity can be linearly

approximated using the inputs. To verify our demonstrations, we propose the

\textbf{LazyDiT}, a lazy learning framework that efficiently leverages cached

results from earlier steps to skip redundant computations. Specifically, we

incorporate lazy learning layers into the model, effectively trained to

maximize laziness, enabling dynamic skipping of redundant computations.

Experimental results show that LazyDiT outperforms the DDIM sampler across

multiple diffusion transformer models at various resolutions. Furthermore, we

implement our method on mobile devices, achieving better performance than DDIM

with similar latency. Code: this https URL

In-context learning has been recognized as a key factor in the success of

Large Language Models (LLMs). It refers to the model's ability to learn

patterns on the fly from provided in-context examples in the prompt during

inference. Previous studies have demonstrated that the Transformer architecture

used in LLMs can implement a single-step gradient descent update by processing

in-context examples in a single forward pass. Recent work has further shown

that, during in-context learning, a looped Transformer can implement multi-step

gradient descent updates in forward passes. However, their theoretical results

require an exponential number of in-context examples, n=exp(Ω(T)),

where T is the number of loops or passes, to achieve a reasonably low error.

In this paper, we study linear looped Transformers in-context learning on

linear vector generation tasks. We show that linear looped Transformers can

implement multi-step gradient descent efficiently for in-context learning. Our

results demonstrate that as long as the input data has a constant condition

number, e.g., n=O(d), the linear looped Transformers can achieve a small

error by multi-step gradient descent during in-context learning. Furthermore,

our preliminary experiments validate our theoretical analysis. Our findings

reveal that the Transformer architecture possesses a stronger in-context

learning capability than previously understood, offering new insights into the

mechanisms behind LLMs and potentially guiding the better design of efficient

inference algorithms for LLMs.

Numerical Pruning introduces a training-free structural pruning method for autoregressive Transformer models, effective across both language (LLaMA) and image generation (LlamaGen) tasks. The approach consistently achieves state-of-the-art performance, evidenced by superior perplexity (e.g., LLaMA-65B perplexity on WikiText2: Ours 9.65 vs. FLAP Nan at 70% pruning) and improved image quality (e.g., LlamaGen-3B FID of 3.97 vs. FLAP's 7.57 at 10% pruning), while also reducing GPU memory usage and accelerating generation speed.

A theoretical analysis of Visual Autoregressive Transformers establishes a fundamental lower bound of Ω(n²d) on the memory required for the Key-Value (KV) cache during inference, where n² is the number of tokens and d is the embedding dimension. This finding indicates that quadratic memory scaling is an inherent property of these models, limiting sub-quadratic compression without imposing additional structural constraints or approximations.

This paper introduces Force Matching (ForM), a novel framework for generative

modeling that represents an initial exploration into leveraging special

relativistic mechanics to enhance the stability of the sampling process. By

incorporating the Lorentz factor, ForM imposes a velocity constraint, ensuring

that sample velocities remain bounded within a constant limit. This constraint

serves as a fundamental mechanism for stabilizing the generative dynamics,

leading to a more robust and controlled sampling process. We provide a rigorous

theoretical analysis demonstrating that the velocity constraint is preserved

throughout the sampling procedure within the ForM framework. To validate the

effectiveness of our approach, we conduct extensive empirical evaluations. On

the \textit{half-moons} dataset, ForM significantly outperforms baseline

methods, achieving the lowest Euclidean distance loss of \textbf{0.714}, in

contrast to vanilla first-order flow matching (5.853) and first- and

second-order flow matching (5.793). Additionally, we perform an ablation study

to further investigate the impact of our velocity constraint, reaffirming the

superiority of ForM in stabilizing the generative process. The theoretical

guarantees and empirical results underscore the potential of integrating

special relativity principles into generative modeling. Our findings suggest

that ForM provides a promising pathway toward achieving stable, efficient, and

flexible generative processes. This work lays the foundation for future

advancements in high-dimensional generative modeling, opening new avenues for

the application of physical principles in machine learning.

This study introduces a training-free conditional diffusion model for learning unknown stochastic differential equations (SDEs) using data. The proposed approach addresses key challenges in computational efficiency and accuracy for modeling SDEs by utilizing a score-based diffusion model to approximate their stochastic flow map. Unlike the existing methods, this technique is based on an analytically derived closed-form exact score function, which can be efficiently estimated by Monte Carlo method using the trajectory data, and eliminates the need for neural network training to learn the score function. By generating labeled data through solving the corresponding reverse ordinary differential equation, the approach enables supervised learning of the flow map. Extensive numerical experiments across various SDE types, including linear, nonlinear, and multi-dimensional systems, demonstrate the versatility and effectiveness of the method. The learned models exhibit significant improvements in predicting both short-term and long-term behaviors of unknown stochastic systems, often surpassing baseline methods like GANs in estimating drift and diffusion coefficients.

Researchers from multiple institutions provide mathematical proof that Transformer language models fundamentally fail to learn majority Boolean logic functions through gradient descent, even with exponential training samples, demonstrating a gap between model expressivity and learnability that persists regardless of training set size.

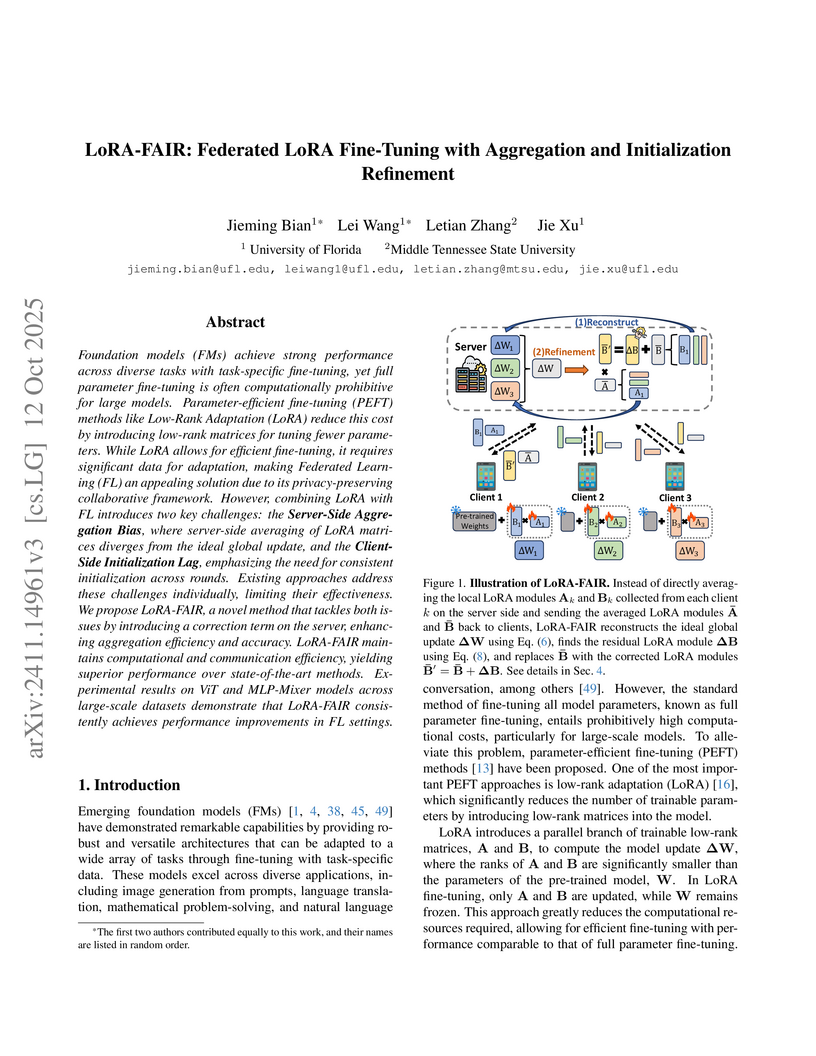

Foundation models (FMs) achieve strong performance across diverse tasks with task-specific fine-tuning, yet full parameter fine-tuning is often computationally prohibitive for large models. Parameter-efficient fine-tuning (PEFT) methods like Low-Rank Adaptation (LoRA) reduce this cost by introducing low-rank matrices for tuning fewer parameters. While LoRA allows for efficient fine-tuning, it requires significant data for adaptation, making Federated Learning (FL) an appealing solution due to its privacy-preserving collaborative framework. However, combining LoRA with FL introduces two key challenges: the \textbf{Server-Side Aggregation Bias}, where server-side averaging of LoRA matrices diverges from the ideal global update, and the \textbf{Client-Side Initialization Lag}, emphasizing the need for consistent initialization across rounds. Existing approaches address these challenges individually, limiting their effectiveness. We propose LoRA-FAIR, a novel method that tackles both issues by introducing a correction term on the server, enhancing aggregation efficiency and accuracy. LoRA-FAIR maintains computational and communication efficiency, yielding superior performance over state-of-the-art methods. Experimental results on ViT and MLP-Mixer models across large-scale datasets demonstrate that LoRA-FAIR consistently achieves performance improvements in FL settings.

Large Language Models (LLMs) have demonstrated remarkable capabilities across

various applications, but their performance on long-context tasks is often

limited by the computational complexity of attention mechanisms. We introduce a

novel approach to accelerate attention computation in LLMs, particularly for

long-context scenarios. We leverage the inherent sparsity within attention

mechanisms, both in conventional Softmax attention and ReLU attention (with

ReLUα activation, α∈N+), to significantly

reduce the running time complexity. Our method employs a Half-Space Reporting

(HSR) data structure to identify non-zero or ``massively activated'' entries in

the attention matrix. We present theoretical analyses for two key scenarios:

generation decoding and prompt prefilling. Our approach achieves a running time

of O(mn4/5) significantly faster than the naive approach O(mn) for

generation decoding, where n is the context length, m is the query length,

and d is the hidden dimension. We can also reduce the running time for prompt

prefilling from O(mn) to O(mn1−1/⌊d/2⌋+mn4/5). Our

method introduces only provably negligible error for Softmax attention. This

work represents a significant step towards enabling efficient long-context

processing in LLMs.

Characterizing the express power of the Transformer architecture is critical

to understanding its capacity limits and scaling law. Recent works provide the

circuit complexity bounds to Transformer-like architecture. On the other hand,

Rotary Position Embedding (RoPE) has emerged as a crucial technique

in modern large language models, offering superior performance in capturing

positional information compared to traditional position embeddings, which shows

great potential in application prospects, particularly for the long context

scenario. Empirical evidence also suggests that RoPE-based

Transformer architectures demonstrate greater generalization capabilities

compared to conventional Transformer models. In this work, we establish a

circuit complexity bound for Transformers with RoPE attention. Our

key contribution is that we show that unless TC0=NC1, a

RoPE-based Transformer with poly(n)-precision, O(1)

layers, hidden dimension d≤O(n) cannot solve the Arithmetic formula

evaluation problem or the Boolean formula value problem. This result

significantly demonstrates the fundamental limitation of the expressivity of

the RoPE-based Transformer architecture, although it achieves giant

empirical success. Our theoretical result not only establishes the complexity

bound but also may instruct further work on the RoPE-based

Transformer.

11 May 2024

This systematic literature review paper explores the use of extended reality

{(XR)} technology for smart built environments and particularly for smart

lighting systems design. Smart lighting is a novel concept that has emerged

over a decade now and is being used and tested in commercial and industrial

built environments. We used PRISMA methodology to review 270 research papers

published from 1968 to 2023. Following a discussion of historical advances and

key modeling techniques, a description of lighting simulation in the context of

extended reality and smart built environment is given, followed by a discussion

of the current trends and challenges.

16 Sep 2025

Passive resonators have been widely used in MRI to manipulate RF field distributions. However, optimizing these structures using full-wave electromagnetic simulations is computationally prohibitive, particularly for large passive resonator arrays with many degrees of freedom. This work presents a co-simulation framework tailored specifically for the analysis and optimization of passive resonators. The framework performs a single full-wave electromagnetic simulation in which the resonator's lumped components are replaced by ports, followed by circuit-level computations to evaluate arbitrary capacitor and inductor configurations. This allows integration with a genetic algorithm to rapidly optimize resonator parameters and enhance the B1 field in a targeted region of interest (ROI). We validated the method in three scenarios: (1) a single-loop passive resonator on a spherical phantom, (2) a two-loop array on a cylindrical phantom, and (3) a two-loop array on a human head model. In all cases, the co-simulation results showed excellent agreement with full-wave simulations, with relative errors below 1%. The genetic-algorithm-driven optimization, involving tens of thousands of capacitor combinations, completed in under 5 minutes, whereas equivalent full-wave EM sweeps would require an impractically long computation time. This work extends co-simulation methodology to passive resonator design for first time, enabling the fast, accurate, and scalable optimization. The approach significantly reduces computational burden while preserving full-wave accuracy, making it a powerful tool for passive RF structure development in MRI.

Fine-tuning large language models (LLMs) in federated settings enables

privacy-preserving adaptation but suffers from cross-client interference due to

model aggregation. Existing federated LoRA fine-tuning methods, primarily based

on FedAvg, struggle with data heterogeneity, leading to harmful cross-client

interference and suboptimal personalization. In this work, we propose

\textbf{FedALT}, a novel personalized federated LoRA fine-tuning algorithm that

fundamentally departs from FedAvg. Instead of using an aggregated model to

initialize local training, each client continues training its individual LoRA

while incorporating shared knowledge through a separate Rest-of-World (RoW)

LoRA component. To effectively balance local adaptation and global information,

FedALT introduces an adaptive mixer that dynamically learns input-specific

weightings between the individual and RoW LoRA components, drawing conceptual

foundations from the Mixture-of-Experts (MoE) paradigm. Through extensive

experiments on NLP benchmarks, we demonstrate that FedALT significantly

outperforms state-of-the-art personalized federated LoRA fine-tuning methods,

achieving superior local adaptation without sacrificing computational

efficiency.

Federated learning (FL) allows collaborative machine learning training without sharing private data. While most FL methods assume identical data domains across clients, real-world scenarios often involve heterogeneous data domains. Federated Prototype Learning (FedPL) addresses this issue, using mean feature vectors as prototypes to enhance model generalization. However, existing FedPL methods create the same number of prototypes for each client, leading to cross-domain performance gaps and disparities for clients with varied data distributions. To mitigate cross-domain feature representation variance, we introduce FedPLVM, which establishes variance-aware dual-level prototypes clustering and employs a novel α-sparsity prototype loss. The dual-level prototypes clustering strategy creates local clustered prototypes based on private data features, then performs global prototypes clustering to reduce communication complexity and preserve local data privacy. The α-sparsity prototype loss aligns samples from underrepresented domains, enhancing intra-class similarity and reducing inter-class similarity. Evaluations on Digit-5, Office-10, and DomainNet datasets demonstrate our method's superiority over existing approaches.

Motivated by the experimental identification of magnetic compounds consisting of zigzag chains, we analyze the band structure topology of magnons in ferromagnets on a zigzag lattice. We account for the general lattice geometry by including spatially anisotropic Heisenberg exchange interactions and by Dzyaloshinskii-Moriya interaction on inversion asymmetric bonds. Within the linear spin-wave theory, we find two magnon branches, whose band structure topology (i.e., Chern numbers) we map out in a comprehensive phase diagram. Notably, besides topologically trivial and gapless phases, we identify topologically nontrivial phases that support chiral edge magnons. We show that these edge states are robust against elastic defect scattering.

25 Jun 2021

A quadrangular embedding of a graph in a surface Σ, also known as a quadrangulation of Σ, is a cellular embedding in which every face is bounded by a 4-cycle. A quadrangulation of Σ is minimal if there is no quadrangular embedding of a (simple) graph of smaller order in Σ. In this paper we determine n(Σ), the order of a minimal quadrangulation of a surface Σ, for all surfaces, both orientable and nonorientable. Letting S0 denote the sphere and N2 the Klein bottle, we prove that n(S0)=4,n(N2)=6, and n(Σ)=⌈(5+25−16χ(Σ))/2⌉ for all other surfaces Σ, where χ(Σ) is the Euler characteristic. Our proofs use a `diagonal technique', introduced by Hartsfield in 1994. We explain the general features of this method.

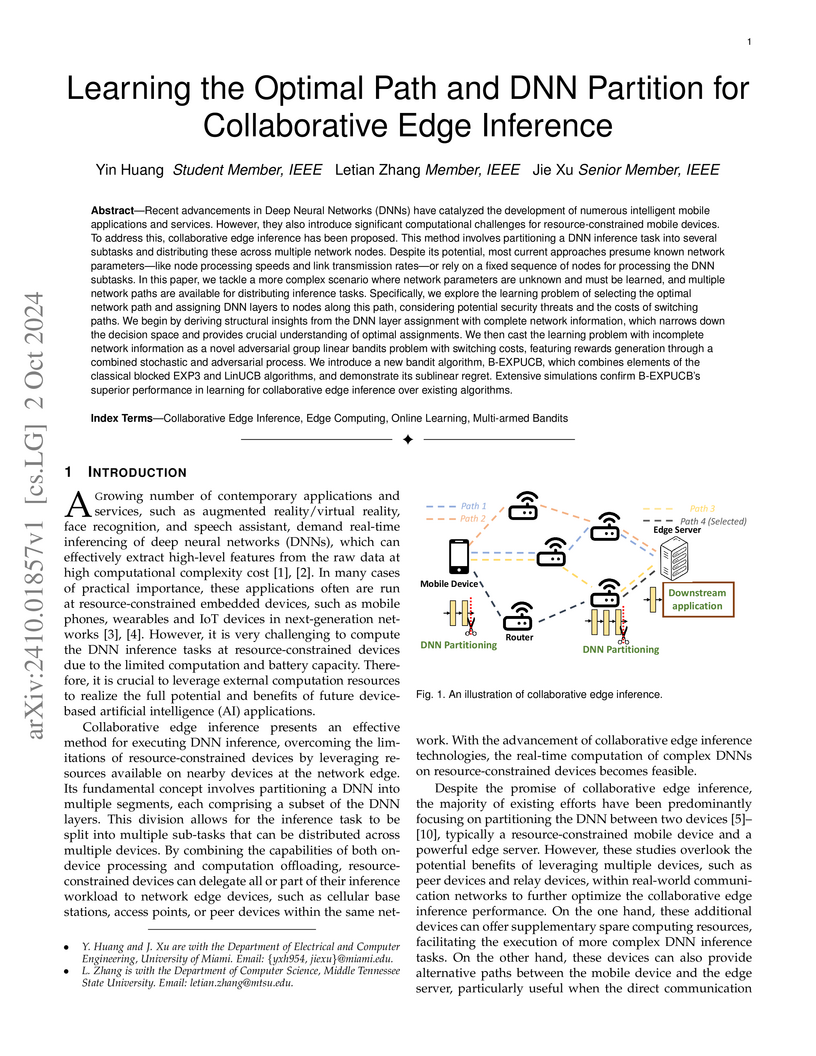

Recent advancements in Deep Neural Networks (DNNs) have catalyzed the development of numerous intelligent mobile applications and services. However, they also introduce significant computational challenges for resource-constrained mobile devices. To address this, collaborative edge inference has been proposed. This method involves partitioning a DNN inference task into several subtasks and distributing these across multiple network nodes. Despite its potential, most current approaches presume known network parameters -- like node processing speeds and link transmission rates -- or rely on a fixed sequence of nodes for processing the DNN subtasks. In this paper, we tackle a more complex scenario where network parameters are unknown and must be learned, and multiple network paths are available for distributing inference tasks. Specifically, we explore the learning problem of selecting the optimal network path and assigning DNN layers to nodes along this path, considering potential security threats and the costs of switching paths. We begin by deriving structural insights from the DNN layer assignment with complete network information, which narrows down the decision space and provides crucial understanding of optimal assignments. We then cast the learning problem with incomplete network information as a novel adversarial group linear bandits problem with switching costs, featuring rewards generation through a combined stochastic and adversarial process. We introduce a new bandit algorithm, B-EXPUCB, which combines elements of the classical blocked EXP3 and LinUCB algorithms, and demonstrate its sublinear regret. Extensive simulations confirm B-EXPUCB's superior performance in learning for collaborative edge inference over existing algorithms.

The phenomenon of Anderson localization in various disordered media has sustained significant interest over many decades. Specifically, the Anderson localization of phonons has been viewed as a potential mechanism for creating fascinating thermal transport properties in materials. However, despite extensive work, the influence of the vector nature of phonons on the Anderson localization transition has not been well explored. In order to achieve such an understanding, we extend a recently developed phonon dynamical cluster approximation (DCA) and its typical medium variant (TMDCA) to investigate spectra and localization of multi-branch phonons in the presence of pure mass disorder. We validate the new formalism against several limiting cases and exact diagonalization results. A comparison of results for the single-branch versus multi-branch case shows that the vector nature of the phonons does not affect the Anderson transition of phonons significantly. The developed multi-branch TMDCA formalism can be employed for studying phonon localization in real materials.

There are no more papers matching your filters at the moment.