Pontifical Catholic University of Rio de Janeiro

27 Feb 2024

CNRS

CNRS University of Southern California

University of Southern California National University of Singapore

National University of Singapore Georgia Institute of Technology

Georgia Institute of Technology Beihang University

Beihang University Osaka University

Osaka University Zhejiang University

Zhejiang University Cornell University

Cornell University Northwestern University

Northwestern University University of Texas at Austin

University of Texas at Austin Nanyang Technological University

Nanyang Technological University Purdue UniversityUniversity of Illinois at ChicagoUniversity of ViennaUniversity of Texas at DallasVirginia Commonwealth UniversityUniversity of California at Los Angeles

Purdue UniversityUniversity of Illinois at ChicagoUniversity of ViennaUniversity of Texas at DallasVirginia Commonwealth UniversityUniversity of California at Los Angeles University of VirginiaUniversity of MessinaPontifical Catholic University of Rio de JaneiroUniversity of South CarolinaKanazawa UniversityIndian Institute of Technology RoorkeeUniversity of GothenburgFederal University of Rio de JaneiroThalesPolitecnico di BariUniversity of California at Santa BarbaraTechnical University DelftUniversity of California at San DiegoToshiba CorporationA* STARUniversity of Duisberg-EssenInteruniversity Microelectronics Center (IMEC)Laboratoire d'Informatique, de Robotique et de Microélectronique de Montpellier

University of VirginiaUniversity of MessinaPontifical Catholic University of Rio de JaneiroUniversity of South CarolinaKanazawa UniversityIndian Institute of Technology RoorkeeUniversity of GothenburgFederal University of Rio de JaneiroThalesPolitecnico di BariUniversity of California at Santa BarbaraTechnical University DelftUniversity of California at San DiegoToshiba CorporationA* STARUniversity of Duisberg-EssenInteruniversity Microelectronics Center (IMEC)Laboratoire d'Informatique, de Robotique et de Microélectronique de MontpellierIn the "Beyond Moore's Law" era, with increasing edge intelligence, domain-specific computing embracing unconventional approaches will become increasingly prevalent. At the same time, adopting a variety of nanotechnologies will offer benefits in energy cost, computational speed, reduced footprint, cyber resilience, and processing power. The time is ripe for a roadmap for unconventional computing with nanotechnologies to guide future research, and this collection aims to fill that need. The authors provide a comprehensive roadmap for neuromorphic computing using electron spins, memristive devices, two-dimensional nanomaterials, nanomagnets, and various dynamical systems. They also address other paradigms such as Ising machines, Bayesian inference engines, probabilistic computing with p-bits, processing in memory, quantum memories and algorithms, computing with skyrmions and spin waves, and brain-inspired computing for incremental learning and problem-solving in severely resource-constrained environments. These approaches have advantages over traditional Boolean computing based on von Neumann architecture. As the computational requirements for artificial intelligence grow 50 times faster than Moore's Law for electronics, more unconventional approaches to computing and signal processing will appear on the horizon, and this roadmap will help identify future needs and challenges. In a very fertile field, experts in the field aim to present some of the dominant and most promising technologies for unconventional computing that will be around for some time to come. Within a holistic approach, the goal is to provide pathways for solidifying the field and guiding future impactful discoveries.

University of Pittsburgh

University of Pittsburgh University of Cambridge

University of Cambridge Imperial College London

Imperial College London National University of Singapore

National University of Singapore University College London

University College London University of Oxford

University of Oxford Shanghai Jiao Tong UniversityUniversity of Ljubljana

Shanghai Jiao Tong UniversityUniversity of Ljubljana Yale University

Yale University Northwestern UniversityRutherford Appleton Laboratory

Northwestern UniversityRutherford Appleton Laboratory University of SouthamptonKorea Institute for Advanced Study

University of SouthamptonKorea Institute for Advanced Study Perimeter Institute for Theoretical PhysicsThe University of SydneyOkinawa Institute of Science and Technology Graduate University

Perimeter Institute for Theoretical PhysicsThe University of SydneyOkinawa Institute of Science and Technology Graduate University University of WarwickUniversity of Sussex

University of WarwickUniversity of Sussex University of GroningenPontifical Catholic University of Rio de JaneiroBen-Gurion University of the NegevRoyal Holloway, University of LondonQueen's University BelfastINRIMShiv Nadar Institution of EminenceNational Quantum Computing CentreUniversit

degli Studi di Palermo

University of GroningenPontifical Catholic University of Rio de JaneiroBen-Gurion University of the NegevRoyal Holloway, University of LondonQueen's University BelfastINRIMShiv Nadar Institution of EminenceNational Quantum Computing CentreUniversit

degli Studi di PalermoA key open problem in physics is the correct way to combine gravity (described by general relativity) with everything else (described by quantum mechanics). This problem suggests that general relativity and possibly also quantum mechanics need fundamental corrections. Most physicists expect that gravity should be quantum in character, but gravity is fundamentally different to the other forces because it alone is described by spacetime geometry. Experiments are needed to test whether gravity, and hence space-time, is quantum or classical. We propose an experiment to test the quantum nature of gravity by checking whether gravity can entangle two micron-sized crystals. A pathway to this is to create macroscopic quantum superpositions of each crystal first using embedded spins and Stern-Gerlach forces. These crystals could be nanodiamonds containing nitrogen-vacancy (NV) centres. The spins can subsequently be measured to witness the gravitationally generated entanglement. This is based on extensive theoretical feasibility studies and experimental progress in quantum technology. The eventual experiment will require a medium-sized consortium with excellent suppression of decoherence including vibrations and gravitational noise. In this white paper, we review the progress and plans towards realizing this. While implementing these plans, we will further explore the most macroscopic superpositions that are possible, which will test theories that predict a limit to this.

03 May 2024

Panoptic-SLAM integrates panoptic segmentation with ORB-SLAM3 to enable robust visual localization and mapping in dynamic environments, specifically addressing unknown and unlabeled moving objects by using geometric checks against a static background. This system demonstrated superior accuracy and lower trajectory errors on public benchmarks and real-world quadruped robot experiments, particularly when encountering previously unseen dynamic elements.

25 Jun 2025

A systematic mapping study consolidated existing evidence on how domain knowledge supports Requirements Engineering (RE), analyzing 75 primary studies to identify prevalent methods, techniques, challenges, and future directions. The analysis revealed a strong focus on explicit domain knowledge and functional requirements, alongside key technical challenges in integration and acquisition, pointing towards AI-driven techniques and enhanced empirical validation as future research avenues.

11 Feb 2025

In recent years, Software Engineering (SE) scholars and practitioners have

emphasized the importance of integrating soft skills into SE education.

However, teaching and learning soft skills are complex, as they cannot be

acquired passively through raw knowledge acquisition. On the other hand,

hackathons have attracted increasing attention due to their experiential,

collaborative, and intensive nature, which certain tasks could be similar to

real-world software development. This paper aims to discuss the idea of

hackathons as an educational strategy for shaping SE students' soft skills in

practice. Initially, we overview the existing literature on soft skills and

hackathons in SE education. Then, we report preliminary empirical evidence from

a seven-day hybrid hackathon involving 40 students. We assess how the hackathon

experience promoted innovative and creative thinking, collaboration and

teamwork, and knowledge application among participants through a structured

questionnaire designed to evaluate students' self-awareness. Lastly, our

findings and new directions are analyzed through the lens of Self-Determination

Theory, which offers a psychological lens to understand human behavior. This

paper contributes to academia by advocating the potential of hackathons in SE

education and proposing concrete plans for future research within SDT. For

industry, our discussion has implications around developing soft skills in

future SE professionals, thereby enhancing their employability and readiness in

the software market.

This work presents an unsupervised method for automatically constructing and expanding topic taxonomies using instruction-based fine-tuned LLMs (Large Language Models). We apply topic modeling and keyword extraction techniques to create initial topic taxonomies and LLMs to post-process the resulting terms and create a hierarchy. To expand an existing taxonomy with new terms, we use zero-shot prompting to find out where to add new nodes, which, to our knowledge, is the first work to present such an approach to taxonomy tasks. We use the resulting taxonomies to assign tags that characterize merchants from a retail bank dataset. To evaluate our work, we asked 12 volunteers to answer a two-part form in which we first assessed the quality of the taxonomies created and then the tags assigned to merchants based on that taxonomy. The evaluation revealed a coherence rate exceeding 90% for the chosen taxonomies. The taxonomies' expansion with LLMs also showed exciting results for parent node prediction, with an f1-score above 70% in our taxonomies.

In this paper, we propose iterative interference cancellation schemes with access points selection (APs-Sel) for cell-free massive multiple-input multiple-output (CF-mMIMO) systems. Closed-form expressions for centralized and decentralized linear minimum mean square error (LMMSE) receive filters with APs-Sel are derived assuming imperfect channel state information (CSI). Furthermore, we develop a list-based detector based on LMMSE receive filters that exploits interference cancellation and the constellation points. A message-passing-based iterative detection and decoding (IDD) scheme that employs low-density parity-check (LDPC) codes is then developed. Moreover, log-likelihood ratio (LLR) refinement strategies based on censoring and a linear combination of local LLRs are proposed to improve the network performance. We compare the cases with centralized and decentralized processing in terms of bit error rate (BER) performance, complexity, and signaling under perfect CSI (PCSI) and imperfect CSI (ICSI) and verify the superiority of the distributed architecture with LLR refinements.

This systematic mapping study categorizes and analyzes the use of Large Language Models (LLMs) in qualitative research, showing that LLM-assisted analysis frequently achieves comparable results to traditional human methods. The findings highlight the critical role of prompt engineering and human oversight, alongside common challenges such as hallucinations and biases, across a field primarily developed in 2023-2024.

Large language models (LLMs) have taken centre stage in debates on Artificial Intelligence. Yet there remains a gap in how to assess LLMs' conformity to important human values. In this paper, we investigate whether state-of-the-art LLMs, GPT-4 and Claude 2.1 (Gemini Pro and LLAMA 2 did not generate valid results) are moral hypocrites. We employ two research instruments based on the Moral Foundations Theory: (i) the Moral Foundations Questionnaire (MFQ), which investigates which values are considered morally relevant in abstract moral judgements; and (ii) the Moral Foundations Vignettes (MFVs), which evaluate moral cognition in concrete scenarios related to each moral foundation. We characterise conflicts in values between these different abstractions of moral evaluation as hypocrisy. We found that both models displayed reasonable consistency within each instrument compared to humans, but they displayed contradictory and hypocritical behaviour when we compared the abstract values present in the MFQ to the evaluation of concrete moral violations of the MFV.

Reconfigurable intelligent surfaces (RIS) can actively perform beamforming and have become a crucial enabler for wireless systems in the future. The direction-of-arrival (DOA) estimates of RIS received signals can help design the reflection control matrix and improve communication quality. In this paper, we design a RIS-assisted system and propose a robust Lawson norm-based multiple-signal-classification (LN-MUSIC) DOA estimation algorithm for impulsive noise, which is divided into two parts. The first one, the non-convex Lawson norm is used as the error criterion along with a regularization constraint to formulate the optimization problem. Then, a Bregman distance based alternating direction method of multipliers is used to solve the problem and recover the desired signal. The second part is to use the multiple signal classification (MUSIC) to find out the DOAs of targets based on their sparsity in the spatial domain. In addition, we also propose a RIS control matrix optimization strategy that requires no channel state information, which effectively enhances the desired signals and improves the performance of the LN-MUSIC algorithm. A Cramer-Rao-lower-bound (CRLB) of the proposed DOA estimation algorithm is presented and verifies its feasibility. Simulated results show that the proposed robust DOA estimation algorithm based on the Lawson norm can effectively suppress the impact of large outliers caused by impulsive noise on the estimation results, outperforming existing methods.

07 Oct 2024

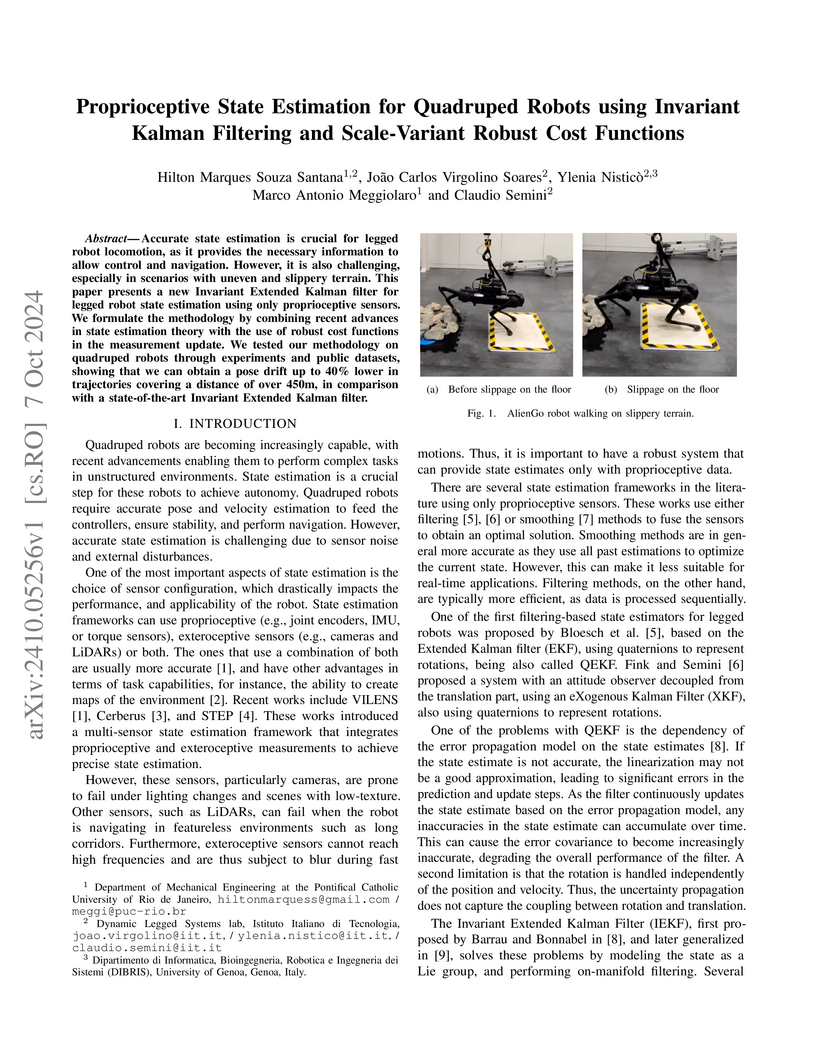

Accurate state estimation is crucial for legged robot locomotion, as it provides the necessary information to allow control and navigation. However, it is also challenging, especially in scenarios with uneven and slippery terrain. This paper presents a new Invariant Extended Kalman filter for legged robot state estimation using only proprioceptive sensors. We formulate the methodology by combining recent advances in state estimation theory with the use of robust cost functions in the measurement update. We tested our methodology on quadruped robots through experiments and public datasets, showing that we can obtain a pose drift up to 40% lower in trajectories covering a distance of over 450m, in comparison with a state-of-the-art Invariant Extended Kalman filter.

Configuring the Linux kernel to meet specific requirements, such as binary

size, is highly challenging due to its immense complexity-with over 15,000

interdependent options evolving rapidly across different versions. Although

several studies have explored sampling strategies and machine learning methods

to understand and predict the impact of configuration options, the literature

still lacks a comprehensive and large-scale dataset encompassing multiple

kernel versions along with detailed quantitative measurements. To bridge this

gap, we introduce LinuxData, an accessible collection of kernel configurations

spanning several kernel releases, specifically from versions 4.13 to 5.8. This

dataset, gathered through automated tools and build processes, comprises over

240,000 kernel configurations systematically labeled with compilation outcomes

and binary sizes. By providing detailed records of configuration evolution and

capturing the intricate interplay among kernel options, our dataset enables

innovative research in feature subset selection, prediction models based on

machine learning, and transfer learning across kernel versions. Throughout this

paper, we describe how the dataset has been made easily accessible via OpenML

and illustrate how it can be leveraged using only a few lines of Python code to

evaluate AI-based techniques, such as supervised machine learning. We

anticipate that this dataset will significantly enhance reproducibility and

foster new insights into configuration-space analysis at a scale that presents

unique opportunities and inherent challenges, thereby advancing our

understanding of the Linux kernel's configurability and evolution.

In this paper, channel estimation problem for extremely large-scale

multi-input multi-output (XL-MIMO) systems is investigated with the

considerations of the spherical wavefront effect and the spatially

non-stationary (SnS) property. Due to the diversities of SnS characteristics

among different propagation paths, the concurrent channel estimation of

multiple paths becomes intractable. To address this challenge, we propose a

two-phase channel estimation scheme. In the first phase, the angles of

departure (AoDs) on the user side are estimated, and a carefully designed pilot

transmission scheme enables the decomposition of the received signal from

different paths. In the second phase, the subchannel estimation corresponding

to different paths is formulated as a three-layer Bayesian inference problem.

Specifically, the first layer captures block sparsity in the angular domain,

the second layer promotes SnS property in the antenna domain, and the third

layer decouples the subchannels from the observed signals. To efficiently

facilitate Bayesian inference, we propose a novel three-layer generalized

approximate message passing (TL-GAMP) algorithm based on structured variational

massage passing and belief propagation rules. Simulation results validate the

convergence and effectiveness of the proposed algorithm, showcasing its

robustness to different channel scenarios.

The application of deep learning algorithms to Earth observation (EO) in recent years has enabled substantial progress in fields that rely on remotely sensed data. However, given the data scale in EO, creating large datasets with pixel-level annotations by experts is expensive and highly time-consuming. In this context, priors are seen as an attractive way to alleviate the burden of manual labeling when training deep learning methods for EO. For some applications, those priors are readily available. Motivated by the great success of contrastive-learning methods for self-supervised feature representation learning in many computer-vision tasks, this study proposes an online deep clustering method using crop label proportions as priors to learn a sample-level classifier based on government crop-proportion data for a whole agricultural region. We evaluate the method using two large datasets from two different agricultural regions in Brazil. Extensive experiments demonstrate that the method is robust to different data types (synthetic-aperture radar and optical images), reporting higher accuracy values considering the major crop types in the target regions. Thus, it can alleviate the burden of large-scale image annotation in EO applications.

We show that the self-interactions present in the effective field theory formulation of general relativity can couple gravitational wave modes and generate nonclassical states. The output of gravitational nonlinear processes can also be sensitive to quantum features of the input states, indicating that nonlinearities can act both as sources and detectors of quantum features of gravitational waves. Due to gauge and quantization issues in strongly curved spacetimes, we work in the geometric optics limit of gravitational radiation, but we expect the key ideas extend to situations of astrophysical interest. This offers a new direction for probing the quantum nature of gravity, analogous to how the quantumness of electrodynamics was established through quantum optics.

03 Sep 2022

Factor and sparse models are two widely used methods to impose a low-dimensional structure in high-dimensions. However, they are seemingly mutually exclusive. We propose a lifting method that combines the merits of these two models in a supervised learning methodology that allows for efficiently exploring all the information in high-dimensional datasets. The method is based on a flexible model for high-dimensional panel data, called factor-augmented regression model with observable and/or latent common factors, as well as idiosyncratic components. This model not only includes both principal component regression and sparse regression as specific models but also significantly weakens the cross-sectional dependence and facilitates model selection and interpretability. The method consists of several steps and a novel test for (partial) covariance structure in high dimensions to infer the remaining cross-section dependence at each step. We develop the theory for the model and demonstrate the validity of the multiplier bootstrap for testing a high-dimensional (partial) covariance structure. The theory is supported by a simulation study and applications.

12 Nov 2013

We perform a proof-of-principle demonstration of the measurement-device-independent quantum key distribution (MDI-QKD) protocol using weak coherent states and polarization-encoded qubits over two optical fiber links of 8.5 km each. Each link was independently stabilized against polarization drifts using a full-polarization control system employing two wavelength-multiplexed control channels. A linear-optics-based polarization Bell-state analyzer was built into the intermediate station, Charlie, which is connected to both Alice and Bob via the optical fiber links. Using decoy-states, a lower bound for the secret-key generation rate of 1.04x10^-6 bits/pulse is computed.

Decision diagrams for classification have some notable advantages over decision trees, as their internal connections can be determined at training time and their width is not bound to grow exponentially with their depth. Accordingly, decision diagrams are usually less prone to data fragmentation in internal nodes. However, the inherent complexity of training these classifiers acted as a long-standing barrier to their widespread adoption. In this context, we study the training of optimal decision diagrams (ODDs) from a mathematical programming perspective. We introduce a novel mixed-integer linear programming model for training and demonstrate its applicability for many datasets of practical importance. Further, we show how this model can be easily extended for fairness, parsimony, and stability notions. We present numerical analyses showing that our model allows training ODDs in short computational times, and that ODDs achieve better accuracy than optimal decision trees, while allowing for improved stability without significant accuracy losses.

07 Feb 2025

[Background] Emotional Intelligence (EI) can impact Software Engineering (SE)

outcomes through improved team communication, conflict resolution, and stress

management. SE workers face increasing pressure to develop both technical and

interpersonal skills, as modern software development emphasizes collaborative

work and complex team interactions. Despite EI's documented importance in

professional practice, SE education continues to prioritize technical knowledge

over emotional and social competencies. [Objective] This paper analyzes SE

students' self-perceptions of their EI after a two-month cooperative learning

project, using Mayer and Salovey's four-ability model to examine how students

handle emotions in collaborative development. [Method] We conducted a case

study with 29 SE students organized into four squads within a project-based

learning course, collecting data through questionnaires and focus groups that

included brainwriting and sharing circles, then analyzing the data using

descriptive statistics and open coding. [Results] Students demonstrated

stronger abilities in managing their own emotions compared to interpreting

others' emotional states. Despite limited formal EI training, they developed

informal strategies for emotional management, including structured planning and

peer support networks, which they connected to improved productivity and

conflict resolution. [Conclusion] This study shows how SE students perceive EI

in a collaborative learning context and provides evidence-based insights into

the important role of emotional competencies in SE education.

03 Feb 2022

We provide a new theory for nodewise regression when the residuals from a

fitted factor model are used. We apply our results to the analysis of the

consistency of Sharpe ratio estimators when there are many assets in a

portfolio. We allow for an increasing number of assets as well as time

observations of the portfolio. Since the nodewise regression is not feasible

due to the unknown nature of idiosyncratic errors, we provide a

feasible-residual-based nodewise regression to estimate the precision matrix of

errors which is consistent even when number of assets, p, exceeds the time span

of the portfolio, n. In another new development, we also show that the

precision matrix of returns can be estimated consistently, even with an

increasing number of factors and p>n. We show that: (1) with p>n, the Sharpe

ratio estimators are consistent in global minimum-variance and mean-variance

portfolios; and (2) with p>n, the maximum Sharpe ratio estimator is consistent

when the portfolio weights sum to one; and (3) with p<

There are no more papers matching your filters at the moment.