Shirley Ryan AbilityLab

Advances in markerless motion capture are expanding access to biomechanical movement analysis, making it feasible to obtain high-quality movement data from outpatient clinics, inpatient hospitals, therapy sessions, and even the home. Expanding access to movement data in these diverse contexts makes the challenge of performing downstream analytics all the more acute. Creating separate bespoke analysis code for all the tasks end users might want is intractable and fails to take advantage of the common features of human movement underlying them all. Recent studies have shown that fine-tuning language models to accept tokenized movement as an additional modality enables successful descriptive captioning of movement. Here, we explore whether such a multimodal motion-language model can answer detailed, clinically meaningful questions about movement. We collected over 30 hours of biomechanics from nearly 500 participants, many with movement impairments from a variety of etiologies, performing a range of movements used in clinical outcomes assessments. After tokenizing these movement trajectories, we created a multimodal dataset of motion-related questions and answers spanning a range of tasks. We developed BiomechGPT, a multimodal biomechanics-language model, on this dataset. Our results show that BiomechGPT demonstrates high performance across a range of tasks such as activity recognition, identifying movement impairments, diagnosis, scoring clinical outcomes, and measuring walking. BiomechGPT provides an important step towards a foundation model for rehabilitation movement data.

11 Jul 2025

Portable Biomechanics Laboratory: Clinically Accessible Movement Analysis from a Handheld Smartphone


The way a person moves is a direct reflection of their neurological and musculoskeletal health, yet it remains one of the most underutilized vital signs in clinical practice. Although clinicians visually observe movement impairments, they lack accessible and validated methods to objectively measure movement in routine care. This gap prevents wider use of biomechanical measurements in practice, which could enable more sensitive outcome measures or earlier identification of impairment. We present our Portable Biomechanics Laboratory (PBL), which includes a secure, cloud-enabled smartphone app for data collection and a novel algorithm for fitting biomechanical models to this data. We extensively validated PBL's biomechanical measures using a large, clinically representative dataset. Next, we tested the usability and utility of our system in neurosurgery and sports medicine clinics. We found joint angle errors within 3 degrees across participants with neurological injury, lower-limb prosthesis users, pediatric inpatients, and controls. In addition to being easy to use, gait metrics computed from the PBL showed high reliability and were sensitive to clinical differences. For example, in individuals undergoing decompression surgery for cervical myelopathy, the mJOA score is a common patient-reported outcome measure; we found that PBL gait metrics correlated with mJOA scores and demonstrated greater responsiveness to surgical intervention than the patient-reported outcomes. These findings support the use of handheld smartphone video as a scalable, low-burden tool for capturing clinically meaningful biomechanical data, offering a promising path toward accessible monitoring of mobility impairments. We release the first clinically validated method for measuring whole-body kinematics from handheld smartphone video at this https URL .

Northwestern University researchers develop KinTwin, an imitation learning framework that accurately replicates human movement from markerless motion capture data using biomechanical models, achieving joint angle tracking errors under 1 degree while inferring muscle activations and ground reaction forces across both able-bodied and movement-impaired individuals.

27 Aug 2021

Human locomotion involves continuously variable activities including walking, running, and stair climbing over a range of speeds and inclinations as well as sit-stand, walk-run, and walk-stairs transitions. Understanding the kinematics and kinetics of the lower limbs during continuously varying locomotion is fundamental to developing robotic prostheses and exoskeletons that assist in community ambulation. However, available datasets on human locomotion neglect transitions between activities and/or continuous variations in speed and inclination during these activities. This data paper reports a new dataset that includes the lower-limb kinematics and kinetics of ten able-bodied participants walking at multiple inclines (0°, ±5°, ±10°) and speeds (0.8, 1, 1.2 m/s), running at multiple speeds (1.8, 2, 2.2, 2.4 m/s), walking and running with constant acceleration (±0.2, ±0.5 m/s²), and stair ascent/descent with multiple stair inclines (20°, 25°, 30°, 35°). This dataset also includes sit-stand transitions, walk-run transitions, and walk-stairs transitions. Data were recorded by a Vicon motion capture system and, for applicable tasks, a Bertec instrumented treadmill.

20 Jul 2023

Physical interaction between individuals plays an important role in human motor learning and performance during shared tasks. Using robotic devices, researchers have studied the effects of dyadic haptic interaction, focusing mostly on the upper limb. Developing infrastructure that enables physical interactions between multiple individuals' lower limbs can extend the previous work and facilitate investigation of new dyadic lower-limb rehabilitation schemes.

We designed a system to render haptic interactions between two users while they walk in multi-joint lower-limb exoskeletons. Specifically, we developed an infrastructure where desired interaction torques are commanded to the individual lower-limb exoskeletons based on the users' kinematics and the properties of the virtual coupling. In this pilot study, we demonstrated the capacity of the platform to render different haptic properties (e.g., soft and hard), different haptic connection types (e.g., bidirectional and unidirectional), and connections expressed in joint space and in task space. With haptic connection, dyads generated synchronized movement, and the difference between joint angles decreased as the virtual stiffness increased. This is the first study where multi-joint dyadic haptic interactions are created between lower-limb exoskeletons. This platform will be used to investigate effects of haptic interaction on motor learning and task performance during walking, a complex and meaningful task for gait rehabilitation.
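The virtual coupling described above can be illustrated with a minimal sketch. It assumes a linear spring-damper acting on a single joint in joint space; the class name, parameter names, and the convention that the unidirectional case applies torque only to the first user are illustrative assumptions, not the authors' controller.

```python
from dataclasses import dataclass


@dataclass
class VirtualCoupling:
    """Hypothetical spring-damper coupling between one joint of two users."""
    stiffness: float           # N*m/rad ("soft" vs "hard" connection)
    damping: float             # N*m*s/rad
    bidirectional: bool = True

    def torques(self, q_a, dq_a, q_b, dq_b):
        """Return (tau_a, tau_b), the interaction torques commanded to each exoskeleton.

        q_* are joint angles in rad, dq_* are joint velocities in rad/s.
        """
        # Pull user A toward user B's joint angle and velocity.
        tau_a = self.stiffness * (q_b - q_a) + self.damping * (dq_b - dq_a)
        # Bidirectional: B feels the equal and opposite torque.
        # Unidirectional (assumed convention): B feels nothing.
        tau_b = -tau_a if self.bidirectional else 0.0
        return tau_a, tau_b


soft = VirtualCoupling(stiffness=10.0, damping=1.0)
hard = VirtualCoupling(stiffness=50.0, damping=1.0)
print(soft.torques(0.1, 0.0, 0.3, 0.0))
print(hard.torques(0.1, 0.0, 0.3, 0.0))
```

With the same angle difference, the stiffer coupling commands a larger torque, which is consistent with the reported decrease in joint-angle difference as virtual stiffness increases.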

Markerless motion capture using computer vision and human pose estimation (HPE) has the potential to expand access to precise movement analysis. This could greatly benefit rehabilitation by enabling more accurate tracking of outcomes and providing more sensitive tools for research. There are numerous steps between obtaining videos and extracting accurate biomechanical results, and limited research to guide many critical design decisions in these pipelines. In this work, we analyze several of these steps, including the algorithm used to detect keypoints and the keypoint set, the approach to reconstructing trajectories for biomechanical inverse kinematics (IK), and the optimization of the IK process. Several features we find important are: 1) using a recent algorithm trained on many datasets that produces a dense set of biomechanically motivated keypoints, 2) using an implicit representation to reconstruct smooth, anatomically constrained marker trajectories for IK, 3) iteratively optimizing the biomechanical model to match the dense markers, and 4) appropriate regularization of the IK process. Our pipeline makes it easy to obtain accurate biomechanical estimates of movement in a rehabilitation hospital.

This paper aims to detect the potential injury risk of the anterior cruciate ligament (ACL) by proposing an ACL potential injury risk assessment algorithm based on key points of the human body detected using computer vision technology. To obtain the key point data of the human body in each frame, OpenPose, an open-source computer vision algorithm, was employed. The obtained data underwent preprocessing and were then fed into an ACL potential injury feature extraction model based on the Landing Error Scoring System (LESS). This model extracted several important parameters, including the knee flexion angle, the trunk flexion angle in the sagittal plane, the trunk flexion angle in the frontal plane, the ankle-knee horizontal distance, and the ankle-shoulder horizontal distance. Each of these features was assigned a threshold interval, and a segmented evaluation function was used to score them accordingly. To calculate the final score of the participant, the score values were input into a weighted scoring model designed based on the Analytic Hierarchy Process (AHP). The AHP-based model takes into account the relative importance of each feature in the overall assessment. The results demonstrate that the proposed algorithm effectively detects the potential risk of ACL injury, offering valuable insights for injury prevention and intervention strategies in sports and related fields. Code is available at: this https URL
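The scoring pipeline described above (feature extraction, segmented scoring, AHP weighting) can be sketched roughly as follows. This is an illustration under stated assumptions, not the released code: the threshold values, the two-feature comparison matrix, and all function names are hypothetical, and the AHP weights use the common geometric-mean approximation rather than whatever method the authors used.

```python
import math


def knee_flexion_deg(hip, knee, ankle):
    """Knee flexion in degrees from 2D keypoints; 0 means a fully extended leg."""
    thigh = (hip[0] - knee[0], hip[1] - knee[1])
    shank = (ankle[0] - knee[0], ankle[1] - knee[1])
    dot = thigh[0] * shank[0] + thigh[1] * shank[1]
    norm = math.hypot(*thigh) * math.hypot(*shank)
    interior = math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))
    return 180.0 - interior


def segmented_score(value, good_min, fair_min):
    """Piecewise score: 1.0 at or above good_min, 0.5 at or above fair_min, else 0.0."""
    if value >= good_min:
        return 1.0
    if value >= fair_min:
        return 0.5
    return 0.0


def ahp_weights(pairwise):
    """Approximate AHP priority weights via normalized row geometric means."""
    n = len(pairwise)
    geo_means = [math.prod(row) ** (1.0 / n) for row in pairwise]
    total = sum(geo_means)
    return [g / total for g in geo_means]


def weighted_score(scores, weights):
    """Combine per-feature scores into one overall score."""
    return sum(s * w for s, w in zip(scores, weights))


# Hypothetical example with two features; thresholds are placeholders.
flexion = knee_flexion_deg(hip=(0.0, 0.0), knee=(0.0, 1.0), ankle=(1.0, 1.0))
scores = [segmented_score(flexion, good_min=30.0, fair_min=15.0), 0.5]
weights = ahp_weights([[1.0, 3.0], [1.0 / 3.0, 1.0]])  # feature 1 judged 3x as important
print(flexion, weights, weighted_score(scores, weights))
```

In a full AHP workflow the comparison matrix would also be checked for consistency before the weights are used; that step is omitted here.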

Single camera 3D pose estimation is an ill-defined problem due to inherent ambiguities from depth, occlusion or keypoint noise. Multi-hypothesis pose estimation accounts for this uncertainty by providing multiple 3D poses consistent with the 2D measurements. Current research has predominantly concentrated on generating multiple hypotheses for single frame static pose estimation or single hypothesis motion estimation. In this study we focus on the new task of multi-hypothesis motion estimation. Multi-hypothesis motion estimation is not simply multi-hypothesis pose estimation applied to multiple frames, which would ignore temporal correlation across frames. Instead, it requires distributions which are capable of generating temporally consistent samples, which is significantly more challenging than multi-hypothesis pose estimation or single-hypothesis motion estimation. To this end, we introduce Platypose, a framework that uses a diffusion model pretrained on 3D human motion sequences for zero-shot 3D pose sequence estimation. Platypose outperforms baseline methods on multiple hypotheses for motion estimation. Additionally, Platypose also achieves state-of-the-art calibration and competitive joint error when tested on static poses from Human3.6M, MPI-INF-3DHP and 3DPW. Finally, because it is zero-shot, our method generalizes flexibly to different settings such as multi-camera inference.

Advances in multiview markerless motion capture (MMMC) promise high-quality movement analysis for clinical practice and research. While prior validation studies show MMMC performs well on average, they do not provide what is needed in clinical practice or for large-scale utilization of MMMC: confidence intervals over specific kinematic estimates from a specific individual analyzed using a possibly unique camera configuration. We extend our previous work using an implicit representation of trajectories optimized end-to-end through a differentiable biomechanical model to learn the posterior probability distribution over pose given all the detected keypoints. This posterior probability is learned through a variational approximation and estimates confidence intervals for individual joints at each moment in a trial, showing confidence intervals generally within 10-15 mm of spatial error for virtual marker locations, consistent with our prior validation studies. Confidence intervals over joint angles are typically only a few degrees and widen for more distal joints. The posterior also models the correlation structure over joint angles, such as correlations between hip and pelvis angles. The confidence intervals estimated through this method allow us to identify times and trials where kinematic uncertainty is high.
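As a rough illustration of how such intervals might be used downstream, the sketch below assumes that samples of a joint angle have already been drawn from the learned posterior for each frame. It computes an empirical interval per frame and flags frames whose interval is wide. The function names and the width threshold are hypothetical, and the order-statistic interval is a simple stand-in, not the paper's variational method.

```python
import math


def credible_interval(samples, level=0.95):
    """Empirical interval from posterior samples using order statistics."""
    ordered = sorted(samples)
    n = len(ordered)
    lo_idx = int(math.floor((1.0 - level) / 2.0 * (n - 1)))
    hi_idx = int(math.ceil((1.0 + level) / 2.0 * (n - 1)))
    return ordered[lo_idx], ordered[hi_idx]


def high_uncertainty_frames(samples_per_frame, max_width_deg, level=0.95):
    """Indices of frames whose interval width exceeds max_width_deg."""
    flagged = []
    for frame, samples in enumerate(samples_per_frame):
        lo, hi = credible_interval(samples, level)
        if hi - lo > max_width_deg:
            flagged.append(frame)
    return flagged


# Hypothetical knee-angle samples (degrees) for two frames.
frames = [[10.0, 10.5, 11.0], [0.0, 20.0, 40.0]]
print(high_uncertainty_frames(frames, max_width_deg=5.0))
```

The index rounding is deliberately conservative: with few samples the interval widens to the nearest enclosing order statistics rather than interpolating.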

Markerless pose estimation allows reconstructing human movement from multiple synchronized and calibrated views, and has the potential to make movement analysis, including gait analysis, easy and quick. This could enable much more frequent and quantitative characterization of gait impairments, allowing better monitoring of outcomes and responses to interventions. However, the impact of different keypoint detectors and reconstruction algorithms on markerless pose estimation accuracy has not been thoroughly evaluated. We tested these algorithmic choices on data acquired with a multicamera system from a heterogeneous sample of 25 individuals seen in a rehabilitation hospital. We found that using a top-down keypoint detector and reconstructing trajectories with an implicit function enabled accurate, smooth, and anatomically plausible trajectories, with noise in the step width estimates of only 8 mm relative to a GaitRite walkway.

Video and wearable sensor data provide complementary information about human movement. Video provides a holistic understanding of the entire body in the world while wearable sensors provide high-resolution measurements of specific body segments. A robust method to fuse these modalities and obtain biomechanically accurate kinematics would have substantial utility for clinical assessment and monitoring. While multiple video-sensor fusion methods exist, most assume that a time-intensive, and often brittle, sensor-body calibration process has already been performed. In this work, we present a method to combine handheld smartphone video and uncalibrated wearable sensor data at their full temporal resolution. Our monocular, video-only, biomechanical reconstruction already performs well, with only several degrees of error at the knee during walking compared to markerless motion capture. Reconstructing from a fusion of video and wearable sensor data further reduces this error. We validate this in a mixture of people with no gait impairments, lower limb prosthesis users, and individuals with a history of stroke. We also show that sensor data allows tracking through periods of visual occlusion.

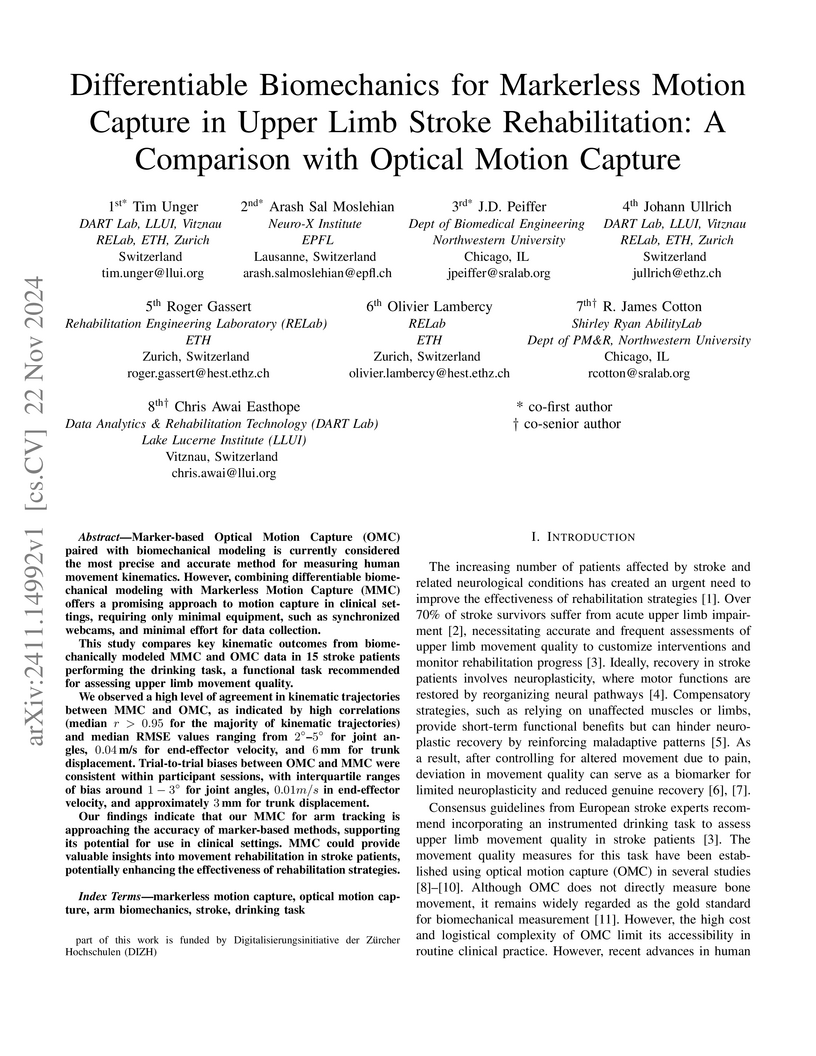

Marker-based Optical Motion Capture (OMC) paired with biomechanical modeling is currently considered the most precise and accurate method for measuring human movement kinematics. However, combining differentiable biomechanical modeling with Markerless Motion Capture (MMC) offers a promising approach to motion capture in clinical settings, requiring only minimal equipment, such as synchronized webcams, and minimal effort for data collection. This study compares key kinematic outcomes from biomechanically modeled MMC and OMC data in 15 stroke patients performing the drinking task, a functional task recommended for assessing upper limb movement quality. We observed a high level of agreement in kinematic trajectories between MMC and OMC, as indicated by high correlations (median r above 0.95 for the majority of kinematic trajectories) and median RMSE values ranging from 2-5 degrees for joint angles, 0.04 m/s for end-effector velocity, and 6 mm for trunk displacement. Trial-to-trial biases between OMC and MMC were consistent within participant sessions, with interquartile ranges of bias around 1-3 degrees for joint angles, 0.01 m/s in end-effector velocity, and approximately 3mm for trunk displacement. Our findings indicate that our MMC for arm tracking is approaching the accuracy of marker-based methods, supporting its potential for use in clinical settings. MMC could provide valuable insights into movement rehabilitation in stroke patients, potentially enhancing the effectiveness of rehabilitation strategies.

22 Apr 2025

Partial-assistance exoskeletons hold significant potential for gait rehabilitation by promoting active participation during (re)learning of normative walking patterns. Typically, the control of interaction torques in partial-assistance exoskeletons relies on a hierarchical control structure. These approaches require extensive calibration due to the complexity of the controller and user-specific parameter tuning, especially for activities like stair or ramp navigation. To address the limitations of hierarchical control in exoskeletons, this work proposes a three-step, data-driven approach: (1) using recent sensor data to probabilistically infer locomotion states (landing step length, landing step height, walking velocity, step clearance, gait phase), (2) allowing therapists to modify these features via a user interface, and (3) using the adjusted locomotion features to predict the desired joint posture and model stiffness in a spring-damper system based on prediction uncertainty. We evaluated the proposed approach with two healthy participants engaging in treadmill walking and stair ascent and descent at varying speeds, with and without external modification of the gait features through a user interface. Results showed a variation in kinematics according to the gait characteristics and a negative interaction power suggesting exoskeleton assistance across the different conditions.

23 Jul 2025

University of Cincinnati, Northeastern University, Northwestern University, University of Genoa, Politecnico di Milano, Chalmers University of Technology, Universidad Carlos III de Madrid, University of Basel, Newcastle University, Swiss Federal Institute of Technology, Shirley Ryan AbilityLab, Università Campus Bio-Medico di Roma, Fondazione Santa Lucia, Hospital Los Madroños, Canarian Foundation Institute of Neurological Sciences, University of Illinois in Chicago

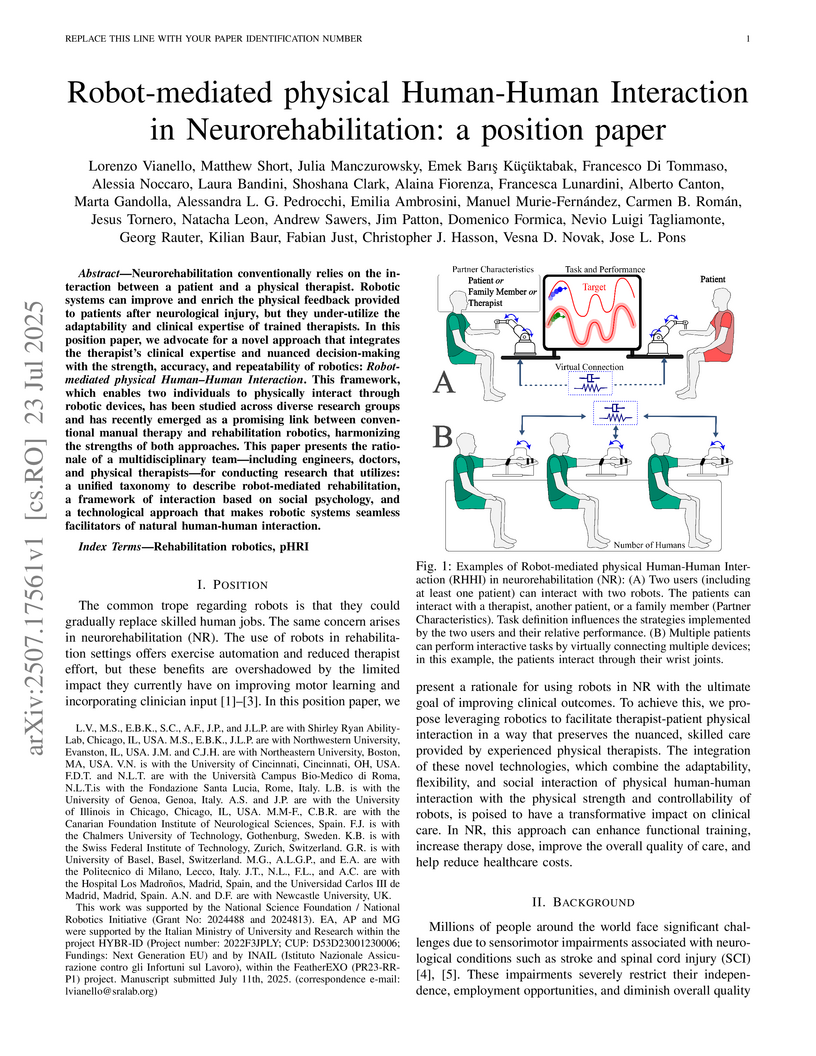

Neurorehabilitation conventionally relies on the interaction between a patient and a physical therapist. Robotic systems can improve and enrich the physical feedback provided to patients after neurological injury, but they under-utilize the adaptability and clinical expertise of trained therapists. In this position paper, we advocate for a novel approach that integrates the therapist's clinical expertise and nuanced decision-making with the strength, accuracy, and repeatability of robotics: Robot-mediated physical Human-Human Interaction. This framework, which enables two individuals to physically interact through robotic devices, has been studied across diverse research groups and has recently emerged as a promising link between conventional manual therapy and rehabilitation robotics, harmonizing the strengths of both approaches. This paper presents the rationale of a multidisciplinary team (including engineers, doctors, and physical therapists) for conducting research that utilizes a unified taxonomy to describe robot-mediated rehabilitation, a framework of interaction based on social psychology, and a technological approach that makes robotic systems seamless facilitators of natural human-human interaction.

Gait analysis from videos obtained from a smartphone would open up many clinical opportunities for detecting and quantifying gait impairments. However, existing approaches for estimating gait parameters from videos can produce physically implausible results. To overcome this, we train a policy using reinforcement learning to control a physics simulation of human movement to replicate the movement seen in video. This forces the inferred movements to be physically plausible, while improving the accuracy of the inferred step length and walking velocity.

Recent developments have created differentiable physics simulators designed for machine learning pipelines that can be accelerated on a GPU. While these can simulate biomechanical models, these opportunities have not been exploited for biomechanics research or markerless motion capture. We show that these simulators can be used to fit inverse kinematics to markerless motion capture data, including scaling the model to fit the anthropometric measurements of an individual. This is performed end-to-end with an implicit representation of the movement trajectory, which is propagated through the forward kinematic model to minimize the error from the 3D markers reprojected into the images. The differentiable optimization yields other opportunities, such as adding bundle adjustment during trajectory optimization to refine the extrinsic camera parameters, or meta-optimization to improve the base model jointly over trajectories from multiple participants. This approach improves the reprojection error from markerless motion capture over prior methods and produces accurate spatial step parameters compared to an instrumented walkway for control and clinical populations.
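The reprojection objective mentioned above can be made concrete with a small sketch. It assumes an ideal pinhole camera without lens distortion and computes the mean pixel distance between projected 3D markers and detected 2D keypoints. The camera dictionary layout and function names are illustrative assumptions, and the differentiable optimizer that would minimize this quantity is not shown.

```python
import math


def project(point_world, rotation, translation, fx, fy, cx, cy):
    """Project a 3D world point into pixel coordinates with a pinhole model."""
    # Transform into the camera frame: p_cam = R @ p_world + t.
    x, y, z = (
        sum(rotation[i][j] * point_world[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return fx * x / z + cx, fy * y / z + cy


def mean_reprojection_error(markers_world, detections, cameras):
    """Mean pixel error; detections[c][k] is keypoint k as seen by camera c."""
    total, count = 0.0, 0
    for cam, cam_detections in zip(cameras, detections):
        for marker, (u, v) in zip(markers_world, cam_detections):
            pu, pv = project(marker, **cam)
            total += math.hypot(pu - u, pv - v)
            count += 1
    return total / count


# Hypothetical single camera at the world origin looking down +z.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
camera = dict(rotation=identity, translation=[0.0, 0.0, 0.0],
              fx=1000.0, fy=1000.0, cx=320.0, cy=240.0)
markers = [(0.1, -0.2, 2.0)]
detections = [[(373.0, 144.0)]]
print(mean_reprojection_error(markers, detections, [camera]))
```

In the end-to-end setting described in the abstract, the 3D markers would come from the forward kinematic model, so gradients of this error could flow back to joint angles, body scaling, and, for bundle adjustment, the camera extrinsics.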

26 May 2019

We describe a shared control methodology that can, without knowledge of the task, be used to improve a human's control of a dynamic system, be used as a training mechanism, and be used in conjunction with Imitation Learning to generate autonomous policies that recreate novel behaviors. Our algorithm introduces autonomy that assists the human partner by enforcing safety and stability constraints. The autonomous agent has no a priori knowledge of the desired task and therefore only adds control information when there is concern for the safety of the system. We evaluate the efficacy of our approach with a human subjects study consisting of 20 participants. We find that our shared control algorithm significantly improves the rate at which users are able to successfully execute novel behaviors. Experimental results suggest that the benefits of our safety-aware shared control algorithm also extend to the human partner's understanding of the system and their control skill. Finally, we demonstrate how a combination of our safety-aware shared control algorithm and Imitation Learning can be used to autonomously recreate the demonstrated behaviors.

31 Jul 2020

Assistive robotic devices can increase the independence of individuals with motor impairments. However, each person is unique in their level of injury, preferences, and skills, all of which can change over time. Further, the amount of assistance required can vary throughout the day due to pain or fatigue, or over longer periods due to rehabilitation, debilitating conditions, or aging. Therefore, in order to become an effective team member, the assistive machine should be able to learn from and adapt to the human user. To do so, we need to be able to characterize the user's control commands to determine when and how autonomy should change to best assist the user. We perform a 20-person pilot study in order to establish a set of meaningful performance measures which can be used to characterize the user's control signals and as cues for the autonomy to modify the level and amount of assistance. Our study includes 8 spinal cord injured and 12 uninjured individuals. The results unveil a set of objective, runtime-computable metrics that are correlated with user-perceived task difficulty, and thus could be used by an autonomy system when deciding whether assistance is required. The results further show that metrics which evaluate the user interaction with the robotic device, robot execution, and the perceived task difficulty differ between the spinal cord injured and uninjured groups, and are affected by the type of control interface used. The results will be used to develop adaptable, user-centered, and individually customized shared-control algorithms.

Markerless motion capture (MMC) is revolutionizing gait analysis in clinical settings by making it more accessible, raising the question of how to extract the most clinically meaningful information from gait data. In multiple fields ranging from image processing to natural language processing, self-supervised learning (SSL) from large amounts of unannotated data produces very effective representations for downstream tasks. However, there has only been limited use of SSL to learn effective representations of gait and movement, and it has not been applied to gait analysis with MMC. One SSL objective that has not been applied to gait is contrastive learning, which finds representations that place similar samples closer together in the learned space. If the learned similarity metric captures clinically meaningful differences, this could produce a useful representation for many downstream clinical tasks. Contrastive learning can also be combined with causal masking to predict future timesteps, which is an appealing SSL objective given the dynamical nature of gait. We applied these techniques to gait analyses performed with MMC in a rehabilitation hospital from a diverse clinical population. We find that contrastive learning on unannotated gait data learns a representation that captures clinically meaningful information. We probe this learned representation using the framework of biomarkers and show it holds promise as both a diagnostic and response biomarker, by showing it can accurately classify diagnosis from gait and is responsive to inpatient therapy, respectively. We ultimately hope these learned representations will enable predictive and prognostic gait-based biomarkers that can facilitate precision rehabilitation through greater use of MMC to quantify movement in rehabilitation.
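To make the contrastive objective concrete, the following is a minimal sketch of one common formulation, the InfoNCE loss with cosine similarity. The abstract does not state which loss the authors used, so this choice, the temperature value, and the function names are assumptions for illustration. Each anchor embedding is paired with one positive (for example, another segment of gait from the same trial) and treats the other positives in the batch as negatives.

```python
import math


def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss; positives[i] is the match for anchors[i]."""
    losses = []
    for i, anchor in enumerate(anchors):
        logits = [cosine(anchor, p) / temperature for p in positives]
        # Numerically stable log-sum-exp over all candidates.
        peak = max(logits)
        log_sum = peak + math.log(sum(math.exp(l - peak) for l in logits))
        # Negative log-probability of picking the true positive.
        losses.append(log_sum - logits[i])
    return sum(losses) / len(losses)


# Hypothetical 2D embeddings: aligned pairs vs. deliberately mismatched pairs.
anchors = [[1.0, 0.0], [0.0, 1.0]]
print(info_nce(anchors, [[1.0, 0.0], [0.0, 1.0]]))
print(info_nce(anchors, [[0.0, 1.0], [1.0, 0.0]]))
```

The loss is small when each anchor is most similar to its own positive and large when it is more similar to another sample's positive, which is what drives similar gait samples together in the learned space.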

Controlling the interaction forces between a human and an exoskeleton is crucial for providing transparency or adjusting assistance or resistance levels. However, it is an open problem to control the interaction forces of lower-limb exoskeletons designed for unrestricted overground walking. For these types of exoskeletons, it is challenging to implement force/torque sensors at every contact between the user and the exoskeleton for direct force measurement. Moreover, it is important to compensate for the exoskeleton's whole-body gravitational and dynamical forces, especially for heavy lower-limb exoskeletons. Previous works either simplified the dynamic model by treating the legs as independent double pendulums, or they did not close the loop with interaction force feedback.

The proposed whole-exoskeleton closed-loop compensation (WECC) method calculates the interaction torques during the complete gait cycle by using whole-body dynamics and joint torque measurements on a hip-knee exoskeleton. Furthermore, it uses a constrained optimization scheme to track desired interaction torques in a closed loop while considering physical and safety constraints. We evaluated the haptic transparency and dynamic interaction torque tracking of WECC control on three subjects. We also compared the performance of WECC with a controller based on a simplified dynamic model and a passive version of the exoskeleton. The WECC controller results in a consistently low absolute interaction torque error during the whole gait cycle for both zero and nonzero desired interaction torques. In contrast, the simplified controller yields poor performance in tracking desired interaction torques during the stance phase.
