TH Köln

07 Oct 2025

Research and development on conversational recommender systems (CRSs) critically depends on sound and reliable evaluation methodologies. However, the interactive nature of these systems poses significant challenges for automatic evaluation. This paper critically examines current evaluation practices and identifies two key limitations: the over-reliance on static test collections and the inadequacy of existing evaluation metrics. To substantiate this critique, we analyze real user interactions with nine existing CRSs and demonstrate a striking disconnect between self-reported user satisfaction and performance scores reported in prior literature. To address these limitations, this work explores the potential of user simulation to generate dynamic interaction data, offering a departure from static datasets. Furthermore, we propose novel evaluation metrics, based on a general reward/cost framework, designed to better align with real user satisfaction. Our analysis of different simulation approaches provides valuable insights into their effectiveness and reveals promising initial results, showing improved correlation with system rankings compared to human evaluation. While these findings indicate a significant step forward in CRS evaluation, we also identify areas for future research and refinement in both simulation techniques and evaluation metrics.

Motivated by recent work on monotone additive statistics and questions regarding optimal risk sharing for return-based risk measures, we investigate the existence, structure, and applications of Meyer risk measures. Those are monetary risk measures consistent with fractional stochastic orders suggested by Meyer (1977a,b) as refinement of second-order stochastic dominance (SSD). These so-called v-SD orders are based on a threshold utility function v. The test utilities defining the associated order are those at least as risk averse in absolute terms as v. The generality of v allows to subsume SSD and other examples from the literature. The structure of risk measures respecting the v-SD order is clarified by two types of representations. The existence of nontrivial examples is more subtle: for many choices of v outside the exponential (CARA) class, they do not exist. Additional properties like convexity or positive homogeneity further restrict admissible examples, even within the CARA class. We present impossibility theorems that demonstrate a deeper link between the axiomatic structure of monetary risk measures and SSD than previously acknowledged. The study concludes with two applications: portfolio optimisation under a Meyer risk measure as objective, and risk assessment of financial time series data.

Researchers at TH Köln developed highly accurate machine learning techniques for distinguishing human-written from ChatGPT-generated Python code, achieving up to 98% detection accuracy on unseen programming tasks. Their work demonstrates that detectable stylistic differences persist even after uniform code formatting, primarily driven by subtle statistical patterns in token usage.

Despite the growing interest in Explainable Artificial Intelligence (XAI),

explainability is rarely considered during hyperparameter tuning or neural

architecture optimization, where the focus remains primarily on minimizing

predictive loss. In this work, we introduce the novel concept of XAI

consistency, defined as the agreement among different feature attribution

methods, and propose new metrics to quantify it. For the first time, we

integrate XAI consistency directly into the hyperparameter tuning objective,

creating a multi-objective optimization framework that balances predictive

performance with explanation robustness. Implemented within the Sequential

Parameter Optimization Toolbox (SPOT), our approach uses both weighted

aggregation and desirability-based strategies to guide model selection. Through

our proposed framework and supporting tools, we explore the impact of

incorporating XAI consistency into the optimization process. This enables us to

characterize distinct regions in the architecture configuration space: one

region with poor performance and comparatively low interpretability, another

with strong predictive performance but weak interpretability due to low

\gls{xai} consistency, and a trade-off region that balances both objectives by

offering high interpretability alongside competitive performance. Beyond

introducing this novel approach, our research provides a foundation for future

investigations into whether models from the trade-off zone-balancing

performance loss and XAI consistency-exhibit greater robustness by avoiding

overfitting to training performance, thereby leading to more reliable

predictions on out-of-distribution data.

22 Apr 2025

In an increasingly data-driven world, the ability to understand, interpret,

and use data - data literacy - is emerging as a critical competence across all

academic disciplines. The Data Literacy Initiative (DaLI) at TH K\"oln

addresses this need by developing a comprehensive competence model for

promoting data literacy in higher education. Based on interdisciplinary

collaboration and empirical research, the DaLI model defines seven overarching

competence areas: "Establish Data Culture", "Provide Data", "Manage Data",

"Analyze Data", "Evaluate Data", "Interpret Data", and "Publish Data". Each

area is further detailed by specific competence dimensions and progression

levels, providing a structured framework for curriculum design, teaching, and

assessment. Intended for use across disciplines, the model supports the

strategic integration of data literacy into university programs. By providing a

common language and orientation for educators and institutions, the DaLI model

contributes to the broader goal of preparing students to navigate and shape a

data-informed society.

Artificial intelligence is considered as a key technology. It has a huge impact on our society. Besides many positive effects, there are also some negative effects or threats. Some of these threats to society are well-known, e.g., weapons or killer robots. But there are also threats that are ignored. These unknown-knowns or blind spots affect privacy, and facilitate manipulation and mistaken identities. We cannot trust data, audio, video, and identities any more. Democracies are able to cope with known threats, the known-knowns. Transforming unknown-knowns to known-knowns is one important cornerstone of resilient societies. An AI-resilient society is able to transform threats caused by new AI tecchnologies such as generative adversarial networks. Resilience can be seen as a positive adaptation of these threats. We propose three strategies how this adaptation can be achieved: awareness, agreements, and red flags. This article accompanies the TEDx talk "Why we urgently need an AI-resilient society", see this https URL.

07 Jul 2025

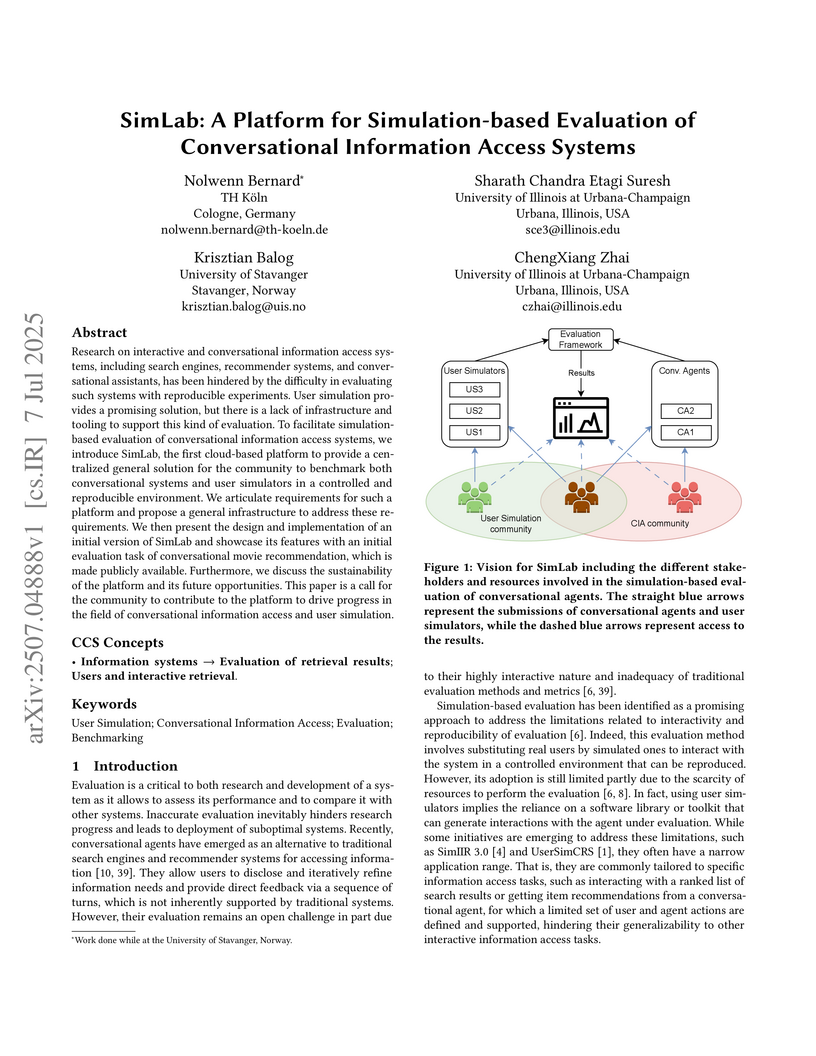

Research on interactive and conversational information access systems, including search engines, recommender systems, and conversational assistants, has been hindered by the difficulty in evaluating such systems with reproducible experiments. User simulation provides a promising solution, but there is a lack of infrastructure and tooling to support this kind of evaluation. To facilitate simulation-based evaluation of conversational information access systems, we introduce SimLab, the first cloud-based platform to provide a centralized general solution for the community to benchmark both conversational systems and user simulators in a controlled and reproducible environment. We articulate requirements for such a platform and propose a general infrastructure to address these requirements. We then present the design and implementation of an initial version of SimLab and showcase its features with an initial evaluation task of conversational movie recommendation, which is made publicly available. Furthermore, we discuss the sustainability of the platform and its future opportunities. This paper is a call for the community to contribute to the platform to drive progress in the field of conversational information access and user simulation.

Artificial intelligence is considered as a key technology. It has a huge impact on our society. Besides many positive effects, there are also some negative effects or threats. Some of these threats to society are well-known, e.g., weapons or killer robots. But there are also threats that are ignored. These unknown-knowns or blind spots affect privacy, and facilitate manipulation and mistaken identities. We cannot trust data, audio, video, and identities any more. Democracies are able to cope with known threats, the known-knowns. Transforming unknown-knowns to known-knowns is one important cornerstone of resilient societies. An AI-resilient society is able to transform threats caused by new AI tecchnologies such as generative adversarial networks. Resilience can be seen as a positive adaptation of these threats. We propose three strategies how this adaptation can be achieved: awareness, agreements, and red flags. This article accompanies the TEDx talk "Why we urgently need an AI-resilient society", see this https URL.

Surrogate-based optimization relies on so-called infill criteria (acquisition

functions) to decide which point to evaluate next. When Kriging is used as the

surrogate model of choice (also called Bayesian optimization), one of the most

frequently chosen criteria is expected improvement. We argue that the

popularity of expected improvement largely relies on its theoretical properties

rather than empirically validated performance. Few results from the literature

show evidence, that under certain conditions, expected improvement may perform

worse than something as simple as the predicted value of the surrogate model.

We benchmark both infill criteria in an extensive empirical study on the `BBOB'

function set. This investigation includes a detailed study of the impact of

problem dimensionality on algorithm performance. The results support the

hypothesis that exploration loses importance with increasing problem

dimensionality. A statistical analysis reveals that the purely exploitative

search with the predicted value criterion performs better on most problems of

five or higher dimensions. Possible reasons for these results are discussed. In

addition, we give an in-depth guide for choosing the infill criteria based on

prior knowledge about the problem at hand, its dimensionality, and the

available budget.

This survey compiles ideas and recommendations from more than a dozen

researchers with different backgrounds and from different institutes around the

world. Promoting best practice in benchmarking is its main goal. The article

discusses eight essential topics in benchmarking: clearly stated goals,

well-specified problems, suitable algorithms, adequate performance measures,

thoughtful analysis, effective and efficient designs, comprehensible

presentations, and guaranteed reproducibility. The final goal is to provide

well-accepted guidelines (rules) that might be useful for authors and

reviewers. As benchmarking in optimization is an active and evolving field of

research this manuscript is meant to co-evolve over time by means of periodic

updates.

Surrogate-based optimization, nature-inspired metaheuristics, and hybrid

combinations have become state of the art in algorithm design for solving

real-world optimization problems. Still, it is difficult for practitioners to

get an overview that explains their advantages in comparison to a large number

of available methods in the scope of optimization. Available taxonomies lack

the embedding of current approaches in the larger context of this broad field.

This article presents a taxonomy of the field, which explores and matches

algorithm strategies by extracting similarities and differences in their search

strategies. A particular focus lies on algorithms using surrogates,

nature-inspired designs, and those created by design optimization. The

extracted features of components or operators allow us to create a set of

classification indicators to distinguish between a small number of classes. The

features allow a deeper understanding of components of the search strategies

and further indicate the close connections between the different algorithm

designs. We present intuitive analogies to explain the basic principles of the

search algorithms, particularly useful for novices in this research field.

Furthermore, this taxonomy allows recommendations for the applicability of the

corresponding algorithms.

In previous research, we developed methods to train decision trees (DT) as agents for reinforcement learning tasks, based on deep reinforcement learning (DRL) networks. The samples from which the DTs are built, use the environment's state as features and the corresponding action as label. To solve the nontrivial task of selecting samples, which on one hand reflect the DRL agent's capabilities of choosing the right action but on the other hand also cover enough state space to generalize well, we developed an algorithm to iteratively train DTs.

In this short paper, we apply this algorithm to a real-world implementation of a robotic task for the first time. Real-world tasks pose additional challenges compared to simulations, such as noise and delays. The task consists of a physical pendulum attached to a cart, which moves on a linear track. By movements to the left and to the right, the pendulum is to be swung in the upright position and balanced in the unstable equilibrium. Our results demonstrate the applicability of the algorithm to real-world tasks by generating a DT whose performance matches the performance of the DRL agent, while consisting of fewer parameters. This research could be a starting point for distilling DTs from DRL agents to obtain transparent, lightweight models for real-world reinforcement learning tasks.

01 Oct 2025

In this paper, we consider a finite-dimensional optimization problem minimizing a continuous objective on a compact domain subject to a multi-dimensional constraint function. For the latter, we assume the availability of a global Lipschitz constant. In recent literature, methods based on non-convex outer approximation are proposed for tackling one-dimensional equality constraints that are Lipschitz with respect to the maximum norm. To the best of our knowledge, however, there does not exist a non-convex outer approximation method for a general problem class. We introduce a meta-level solution framework to solve such problems and tackle the underlying theoretical foundations. Considering the feasible domain without the constraint function as manageable, our method relaxes the multidimensional constraint and iteratively refines the feasible region by means of norm-induced cuts, relying on an oracle for the resulting subproblems. We show the method's correctness and investigate the problem complexity. In order to account for discussions about functionality, limits, and extensions, we present computational examples including illustrations.

29 Apr 2020

University of CologneTH KölnLooking at the provided text:THOUGHTThe user wants me to identify the organizations the authors of the paper are affiliated with.I should look for email domains as a strong indicator of the organization.I must only return organization names, one per line.The author names are "FRANZISKA K ÜHN" and "REN ´E L. SCHILLING".Immediately below their names, there is no explicit affiliation listed.There are no email addresses provided in this snippet.The text focuses on the abstract and introduction, and then jumps into the body of the paper.Sometimes affiliation information is at the very bottom of the first page or on a separate page, but based on the provided text, there is no affiliation information visible for the authors.":

Consider the following stochastic differential equation (SDE) dXt=b(t,Xt−)dt+dLt,X0=x, driven by a d-dimensional Lévy process (Lt)t≥0. We establish conditions on the Lévy process and the drift coefficient b such that the Euler--Maruyama approximation converges strongly to a solution of the SDE with an explicitly given rate. The convergence rate depends on the regularity of b and the behaviour of the Lévy measure at the origin. As a by-product of the proof, we obtain that the SDE has a pathwise unique solution. Our result covers many important examples of Lévy processes, e.g. isotropic stable, relativistic stable, tempered stable and layered stable.

Box-constraints limit the domain of decision variables and are common in real-world optimization problems, for example, due to physical, natural or spatial limitations. Consequently, solutions violating a box-constraint may not be evaluable. This assumption is often ignored in the literature, e.g., existing benchmark suites, such as COCO/BBOB, allow the optimizer to evaluate infeasible solutions. This paper presents an initial study on the strict-box-constrained benchmarking suite (SBOX-COST), which is a variant of the well-known BBOB benchmark suite that enforces box-constraints by returning an invalid evaluation value for infeasible solutions. Specifically, we want to understand the performance difference between BBOB and SBOX-COST as a function of two initialization methods and six constraint-handling strategies all tested with modular CMA-ES. We find that, contrary to what may be expected, handling box-constraints by saturation is not always better than not handling them at all. However, across all BBOB functions, saturation is better than not handling, and the difference increases with the number of dimensions. Strictly enforcing box-constraints also has a clear negative effect on the performance of classical CMA-ES (with uniform random initialization and no constraint handling), especially as problem dimensionality increases.

In the last years, reinforcement learning received a lot of attention. One

method to solve reinforcement learning tasks is Neuroevolution, where neural

networks are optimized by evolutionary algorithms. A disadvantage of

Neuroevolution is that it can require numerous function evaluations, while not

fully utilizing the available information from each fitness evaluation. This is

especially problematic when fitness evaluations become expensive. To reduce the

cost of fitness evaluations, surrogate models can be employed to partially

replace the fitness function. The difficulty of surrogate modeling for

Neuroevolution is the complex search space and how to compare different

networks. To that end, recent studies showed that a kernel based approach,

particular with phenotypic distance measures, works well. These kernels compare

different networks via their behavior (phenotype) rather than their topology or

encoding (genotype). In this work, we discuss the use of surrogate model-based

Neuroevolution (SMB-NE) using a phenotypic distance for reinforcement learning.

In detail, we investigate a) the potential of SMB-NE with respect to evaluation

efficiency and b) how to select adequate input sets for the phenotypic distance

measure in a reinforcement learning problem. The results indicate that we are

able to considerably increase the evaluation efficiency using dynamic input

sets.

False theta functions form a family of functions with intriguing modular properties and connections to mock modular forms. In this paper, we take the first step towards investigating modular transformations of higher rank false theta functions, following the example of higher depth mock modular forms. In particular, we prove that under quite general conditions, a rank two false theta function is determined in terms of iterated, holomorphic, Eichler-type integrals. This provides a new method for examining their modular properties and we apply it in a variety of situations where rank two false theta functions arise. We first consider generic parafermion characters of vertex algebras of type A2 and B2. This requires a fairly non-trivial analysis of Fourier coefficients of meromorphic Jacobi forms of negative index, which is of independent interest. Then we discuss modularity of rank two false theta functions coming from superconformal Schur indices. Lastly, we analyze Z^-invariants of Gukov, Pei, Putrov, and Vafa for certain plumbing H-graphs. Along the way, our method clarifies previous results on depth two quantum modularity.

Stochastic optimization algorithms have been successfully applied in several domains to find optimal solutions. Because of the ever-growing complexity of the integrated systems, novel stochastic algorithms are being proposed, which makes the task of the performance analysis of the algorithms extremely important. In this paper, we provide a novel ranking scheme to rank the algorithms over multiple single-objective optimization problems. The results of the algorithms are compared using a robust bootstrapping-based hypothesis testing procedure that is based on the principles of severity. Analogous to the football league scoring scheme, we propose pairwise comparison of algorithms as in league competition. Each algorithm accumulates points and a performance metric of how good or bad it performed against other algorithms analogous to goal differences metric in football league scoring system. The goal differences performance metric can not only be used as a tie-breaker but also be used to obtain a quantitative performance of each algorithm. The key novelty of the proposed ranking scheme is that it takes into account the performance of each algorithm considering the magnitude of the achieved performance improvement along with its practical relevance and does not have any distributional assumptions. The proposed ranking scheme is compared to classical hypothesis testing and the analysis of the results shows that the results are comparable and our proposed ranking showcases many additional benefits.

28 Jun 2018

Contemporary web pages with increasingly sophisticated interfaces rival

traditional desktop applications for interface complexity and are often called

web applications or RIA (Rich Internet Applications). They often require the

execution of JavaScript in a web browser and can call AJAX requests to

dynamically generate the content, reacting to user interaction. From the

automatic data acquisition point of view, thus, it is essential to be able to

correctly render web pages and mimic user actions to obtain relevant data from

the web page content. Briefly, to obtain data through existing Web interfaces

and transform it into structured form, contemporary wrappers should be able to:

1) interact with sophisticated interfaces of web applications; 2) precisely

acquire relevant data; 3) scale with the number of crawled web pages or states

of web application; 4) have an embeddable programming API for integration with

existing web technologies. OXPath is a state-of-the-art technology, which is

compliant with these requirements and demonstrated its efficiency in

comprehensive experiments. OXPath integrates Firefox for correct rendering of

web pages and extends XPath 1.0 for the DOM node selection, interaction, and

extraction. It provides means for converting extracted data into different

formats, such as XML, JSON, CSV, and saving data into relational databases.

This tutorial explains main features of the OXPath language and the setup of

a suitable working environment. The guidelines for using OXPath are provided in

the form of prototypical examples.

01 Jul 2024

Information retrieval systems have been evaluated using the Cranfield paradigm for many years. This paradigm allows a systematic, fair, and reproducible evaluation of different retrieval methods in fixed experimental environments. However, real-world retrieval systems must cope with dynamic environments and temporal changes that affect the document collection, topical trends, and the individual user's perception of what is considered relevant. Yet, the temporal dimension in IR evaluations is still understudied.

To this end, this work investigates how the temporal generalizability of effectiveness evaluations can be assessed. As a conceptual model, we generalize Cranfield-type experiments to the temporal context by classifying the change in the essential components according to the create, update, and delete operations of persistent storage known from CRUD. From the different types of change different evaluation scenarios are derived and it is outlined what they imply. Based on these scenarios, renowned state-of-the-art retrieval systems are tested and it is investigated how the retrieval effectiveness changes on different levels of granularity.

We show that the proposed measures can be well adapted to describe the changes in the retrieval results. The experiments conducted confirm that the retrieval effectiveness strongly depends on the evaluation scenario investigated. We find that not only the average retrieval performance of single systems but also the relative system performance are strongly affected by the components that change and to what extent these components changed.

There are no more papers matching your filters at the moment.