TIB—Leibniz Information Centre for Science and Technology

This work introduces EMONET-VOICE, a novel suite of open-access synthetic speech datasets and models for fine-grained Speech Emotion Recognition. It presents EMONET-VOICEBIG, a large-scale corpus for pre-training, and EMONET-VOICEBENCH, an expert-verified benchmark with 40 emotion categories, and introduces EMPATHICINSIGHT-VOICE models, which demonstrate state-of-the-art alignment with human expert judgments.

Effective human-AI interaction relies on AI's ability to accurately perceive and interpret human emotions. Current benchmarks for vision and vision-language models are severely limited, offering a narrow emotional spectrum that overlooks nuanced states (e.g., bitterness, intoxication) and fails to distinguish subtle differences between related feelings (e.g., shame vs. embarrassment). Existing datasets also often use uncontrolled imagery with occluded faces and lack demographic diversity, risking significant bias. To address these critical gaps, we introduce EmoNet Face, a comprehensive benchmark suite. EmoNet Face features: (1) a novel 40-category emotion taxonomy, meticulously derived from foundational research to capture finer details of human emotional experiences; (2) three large-scale, AI-generated datasets (EmoNet HQ, Binary, and Big) with explicit, full-face expressions and controlled demographic balance across ethnicity, age, and gender; (3) rigorous, multi-expert annotations for training and high-fidelity evaluation; and (4) EmpathicInsight-Face, a model achieving human-expert-level performance on our benchmark. The publicly released EmoNet Face suite (taxonomy, datasets, and model) provides a robust foundation for developing and evaluating AI systems with a deeper understanding of human emotions.
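To make "controlled demographic balance" concrete, here is a minimal sketch, assuming balance is obtained by enumerating every combination of emotion and demographic attributes before image generation. The attribute values (`ETHNICITIES`, `AGE_GROUPS`, `GENDERS`) and the function `balanced_cells` are hypothetical placeholders; the abstract does not list the actual categories or describe the generation pipeline.

```python
from itertools import product

# Hypothetical placeholder categories; the real EmoNet Face groups are not given here.
ETHNICITIES = ["group_a", "group_b", "group_c"]
AGE_GROUPS = ["young adult", "middle-aged", "older adult"]
GENDERS = ["female", "male"]


def balanced_cells(emotions, per_cell=1):
    """Return (emotion, ethnicity, age, gender) tuples covering the full grid.

    Enumerating the Cartesian product means every demographic combination
    appears the same number of times for every emotion.
    """
    cells = []
    for combo in product(emotions, ETHNICITIES, AGE_GROUPS, GENDERS):
        cells.extend([combo] * per_cell)
    return cells


cells = balanced_cells(["shame", "embarrassment"])
print(len(cells))  # 2 emotions x 3 x 3 x 2 = 36 cells
```

Each tuple would then be turned into one generation prompt. Because the grid grows multiplicatively, the number of cells rises quickly with 40 emotions and more attribute values.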

Paywalls, licenses, and copyright rules often restrict the broad dissemination and reuse of scientific knowledge. We take the position that it is both legally and technically feasible to extract the scientific knowledge in scholarly texts. Current methods, like text embeddings, fail to reliably preserve factual content, and simple paraphrasing may not be legally sound. We propose a new idea for the community to adopt: use LLMs to convert scholarly documents into knowledge-preserving but style-agnostic representations, which we term Knowledge Units. These units use structured data capturing entities, attributes, and relationships without stylistic content. We provide evidence that Knowledge Units (1) form a legally defensible framework for sharing knowledge from copyrighted research texts, based on legal analyses of German copyright law and the U.S. Fair Use doctrine, and (2) preserve most (~95%) of the factual knowledge in the original text, as measured by multiple-choice question (MCQ) performance on facts from the original copyrighted text across four research domains. Freeing scientific knowledge from copyright promises transformative benefits for scientific research and education by allowing language models to reuse important facts from copyrighted text. To support this, we share open-source tools for converting research documents into Knowledge Units. Overall, our work argues for the feasibility of democratizing access to scientific knowledge while respecting copyright.
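The abstract describes Knowledge Units as structured data capturing entities, attributes, and relationships. The following minimal sketch shows one possible shape for such a record. The class and field names (`Entity`, `Relationship`, `KnowledgeUnit`, `facts`) are assumptions for illustration, not the authors' schema, and the LLM extraction step that would populate them is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    """A named thing mentioned in the text, with key/value attributes (hypothetical schema)."""
    name: str
    attributes: dict[str, str] = field(default_factory=dict)


@dataclass
class Relationship:
    """A directed link between two entities (hypothetical schema)."""
    subject: str
    predicate: str
    obj: str


@dataclass
class KnowledgeUnit:
    """Style-agnostic container for the facts of one text chunk (hypothetical schema)."""
    entities: list[Entity] = field(default_factory=list)
    relationships: list[Relationship] = field(default_factory=list)

    def facts(self) -> list[str]:
        """Flatten the unit into plain subject-predicate-object statements."""
        out = [f"{e.name} {k} {v}" for e in self.entities for k, v in e.attributes.items()]
        out += [f"{r.subject} {r.predicate} {r.obj}" for r in self.relationships]
        return out


ku = KnowledgeUnit(
    entities=[Entity("OpenAlex", {"type": "bibliographic platform"})],
    relationships=[Relationship("OpenAlex", "sources metadata from", "Crossref")],
)
print(ku.facts())
```

Flattening to bare statements is one way to feed such units into an MCQ-style retention check, since only the facts, not the original wording, survive.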

25 Mar 2025

Scientific hypothesis generation is a fundamentally challenging task in research, requiring the synthesis of novel and empirically grounded insights. Traditional approaches rely on human intuition and domain expertise, while purely large language model (LLM)-based methods often struggle to produce hypotheses that are both innovative and reliable. To address these limitations, we propose the Monte Carlo Nash Equilibrium Self-Refine Tree (MC-NEST), a novel framework that integrates Monte Carlo Tree Search with Nash Equilibrium strategies to iteratively refine and validate hypotheses. MC-NEST dynamically balances exploration and exploitation through adaptive sampling strategies, which prioritize high-potential hypotheses while maintaining diversity in the search space. We demonstrate the effectiveness of MC-NEST through comprehensive experiments across multiple domains, including biomedicine, social science, and computer science. MC-NEST achieves average scores of 2.65, 2.74, and 2.80 (on a 1-3 scale) across the novelty, clarity, significance, and verifiability metrics on the social science, computer science, and biomedicine datasets, respectively, outperforming state-of-the-art prompt-based methods, which achieve 2.36, 2.51, and 2.52 on the same datasets. These results underscore MC-NEST's ability to generate high-quality, empirically grounded hypotheses across diverse domains. Furthermore, MC-NEST facilitates structured human-AI collaboration, ensuring that LLMs augment human creativity rather than replace it. By addressing key challenges such as iterative refinement and the exploration-exploitation balance, MC-NEST sets a new benchmark in automated hypothesis generation. Additionally, MC-NEST's ethical design supports responsible AI use, emphasizing transparency and human supervision in hypothesis generation.
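The reported averages can be compared directly. This small sketch computes the absolute and relative gain of MC-NEST over the prompt-based baselines per dataset, using only the numbers stated in the abstract; the dictionary and function names are ours.

```python
# Average scores (1-3 scale) exactly as reported in the abstract.
MC_NEST = {"social science": 2.65, "computer science": 2.74, "biomedicine": 2.80}
PROMPT_BASELINE = {"social science": 2.36, "computer science": 2.51, "biomedicine": 2.52}


def gains(ours, baseline):
    """Per-dataset (absolute gain, relative gain in %), rounded for display."""
    result = {}
    for name, score in ours.items():
        diff = score - baseline[name]
        result[name] = (round(diff, 2), round(100 * diff / baseline[name], 1))
    return result


for name, (abs_gain, rel_gain) in gains(MC_NEST, PROMPT_BASELINE).items():
    print(f"{name}: +{abs_gain} points ({rel_gain}%)")
```

This prints gains of 0.29, 0.23, and 0.28 points (about 12.3%, 9.2%, and 11.1%). Relative gains on a bounded 1-3 scale should be read with care, since the lowest possible score is 1 rather than 0.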

14 Sep 2025

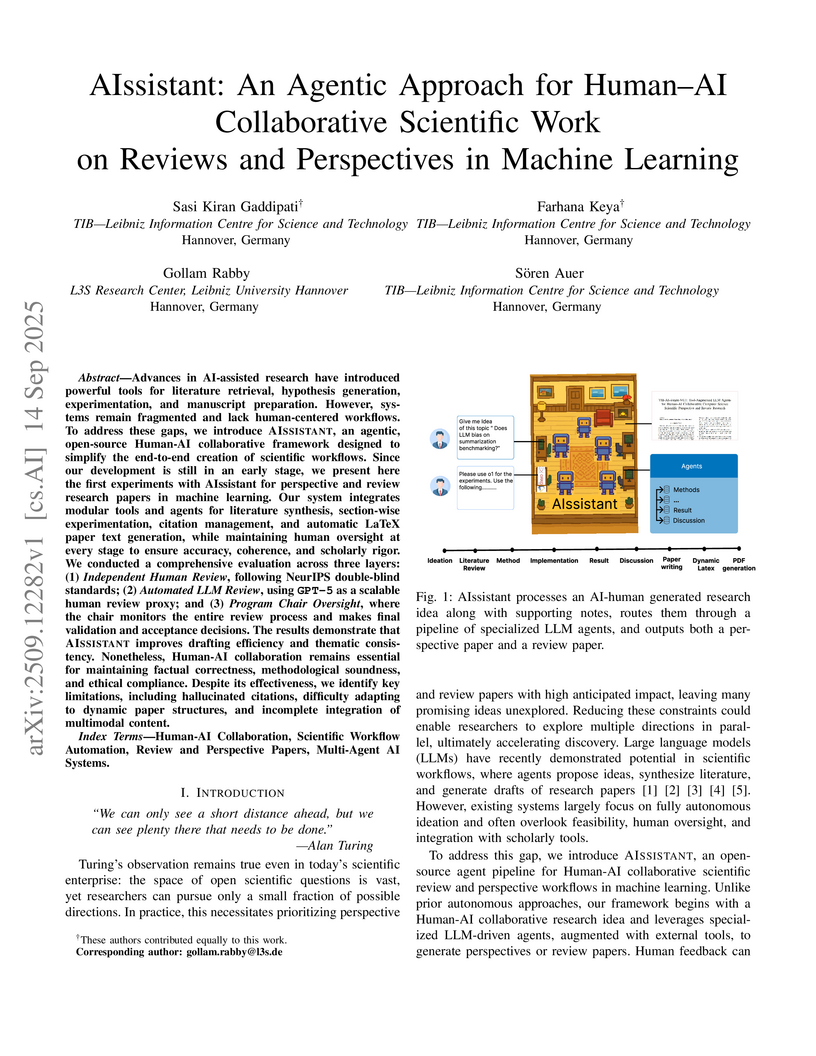

Advances in AI-assisted research have introduced powerful tools for literature retrieval, hypothesis generation, experimentation, and manuscript preparation. However, systems remain fragmented and lack human-centred workflows. To address these gaps, we introduce AIssistant, an agentic, open-source Human-AI collaborative framework designed to simplify the end-to-end creation of scientific workflows. Since development is still at an early stage, we present here the first experiments with AIssistant for perspective and review research papers in machine learning. Our system integrates modular tools and agents for literature synthesis, section-wise experimentation, citation management, and automatic generation of LaTeX paper text, while maintaining human oversight at every stage to ensure accuracy, coherence, and scholarly rigour. We conducted a comprehensive evaluation across three layers: (1) Independent Human Review, following NeurIPS double-blind standards; (2) Automated LLM Review, using GPT-5 as a scalable human review proxy; and (3) Program Chair Oversight, where the chair monitors the entire review process and makes final validation and acceptance decisions. The results demonstrate that AIssistant improves drafting efficiency and thematic consistency. Nonetheless, Human-AI collaboration remains essential for maintaining factual correctness, methodological soundness, and ethical compliance. Despite its effectiveness, we identify key limitations, including hallucinated citations, difficulty adapting to dynamic paper structures, and incomplete integration of multimodal content.

SCI-IDEA introduces an iterative, human-in-the-loop framework that leverages large language models and semantic embeddings to generate context-aware and innovative scientific ideas. The system identifies potential "Aha Moments" and refines research concepts through a multi-dimensional evaluation across novelty, excitement, feasibility, and effectiveness.

01 Jun 2025

Mathematical reasoning presents significant challenges for large language models (LLMs). To enhance their capabilities, we propose Monte Carlo Self-Refine Tree (MC-NEST), an extension of Monte Carlo Tree Search that integrates LLM-based self-refinement and self-evaluation for improved decision-making in complex reasoning tasks. MC-NEST balances exploration and exploitation using Upper Confidence Bound for Trees (UCT) scores combined with diverse selection policies. Through iterative critique and refinement, LLMs learn to reason more strategically. Empirical results demonstrate that MC-NEST with an importance sampling policy substantially improves GPT-4o's performance, achieving state-of-the-art pass@1 scores on Olympiad-level benchmarks. Specifically, MC-NEST attains a pass@1 of 38.6 on AIME and 12.6 on MathOdyssey. The solution quality for MC-NEST using GPT-4o and Phi-3-mini reaches 84.0% and 82.08%, respectively, indicating robust consistency across different LLMs. MC-NEST performs strongly across Algebra, Geometry, and Number Theory, benefiting from its ability to handle abstraction, logical deduction, and multi-step reasoning, which are core skills in mathematical problem solving.
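MC-NEST's selection step builds on UCT. As a reference point, here is the textbook UCT rule (UCB1 applied to tree nodes): a child's mean reward plus an exploration bonus that shrinks as the child is visited more. This is a minimal sketch, not MC-NEST itself: the `Node` class, the exploration constant `c = sqrt(2)`, and treating rewards as LLM self-evaluation scores are assumptions, and the paper's importance-sampling policy is not reproduced.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    """A candidate answer in the search tree (hypothetical structure)."""
    answer: str
    visits: int = 0
    total_reward: float = 0.0
    children: list["Node"] = field(default_factory=list)


def uct_score(child, parent_visits, c=math.sqrt(2)):
    """Standard UCT: mean reward (exploitation) plus an exploration bonus."""
    if child.visits == 0:
        return math.inf  # unvisited children are tried first
    exploit = child.total_reward / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore


def select_child(parent, c=math.sqrt(2)):
    """Pick the child with the highest UCT score."""
    return max(parent.children, key=lambda ch: uct_score(ch, parent.visits, c))


root = Node("root", visits=10, children=[
    Node("a", visits=5, total_reward=4.0),  # mean reward 0.8
    Node("b", visits=2, total_reward=1.0),  # mean reward 0.5, rarely visited
])
print(select_child(root).answer)  # b: its exploration bonus outweighs a's higher mean
```

Raising `c` shifts the search toward less-visited refinements; lowering it favours answers that have already scored well.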

15 Dec 2022

A plethora of scientific software packages are published in repositories such as Zenodo and figshare. These software packages are crucial for the reproducibility of published research. As an additional route to scholarly knowledge graph construction, we propose an approach for automated extraction of machine-actionable (structured) scholarly knowledge from published software packages by static analysis of their (meta)data and contents (in particular, scripts in languages such as Python). The approach can be summarized as follows. First, we extract metadata (software description, programming languages, related references) from software packages by leveraging the Software Metadata Extraction Framework (SOMEF) and the GitHub API. Second, we analyze the extracted metadata to find the research articles associated with the corresponding software repository. Third, for software contained in published packages, we create and analyze the Abstract Syntax Tree (AST) representation to extract information about the procedures performed on data. Fourth, we search for the extracted information in the full text of related articles to constrain it to scholarly knowledge, i.e., information published in the scholarly literature. Finally, we publish the extracted machine-actionable scholarly knowledge in the Open Research Knowledge Graph (ORKG).
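The third step (AST analysis of Python scripts) can be illustrated with Python's standard `ast` module. This minimal sketch, which is ours rather than the authors' implementation, parses a script without running it and lists the functions it calls, a simplified stand-in for "procedures performed on data". The helper names `_dotted_name` and `extract_calls` are hypothetical.

```python
import ast


def _dotted_name(func):
    """Turn a call target such as `pd.read_csv` into a dotted string, if possible."""
    parts = []
    while isinstance(func, ast.Attribute):
        parts.append(func.attr)
        func = func.value
    if isinstance(func, ast.Name):
        parts.append(func.id)
    return ".".join(reversed(parts)) if parts else None


def extract_calls(source):
    """Statically list the functions a Python script calls, without executing it.

    Calls are ordered by where they end in the source, which roughly follows
    evaluation order for chained and nested calls.
    """
    tree = ast.parse(source)
    calls = [node for node in ast.walk(tree) if isinstance(node, ast.Call)]
    calls.sort(key=lambda n: (n.end_lineno, n.end_col_offset))
    names = (_dotted_name(n.func) for n in calls)
    return [name for name in names if name is not None]


script = '''
import pandas as pd
df = pd.read_csv("data.csv").dropna()
model = fit(df)
print(model)
'''
print(extract_calls(script))  # ['pd.read_csv', 'dropna', 'fit', 'print']
```

Because only the syntax tree is inspected, `pandas` need not be installed and `fit` need not exist; the resulting names would then be matched against the article full text, as in the fourth step.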

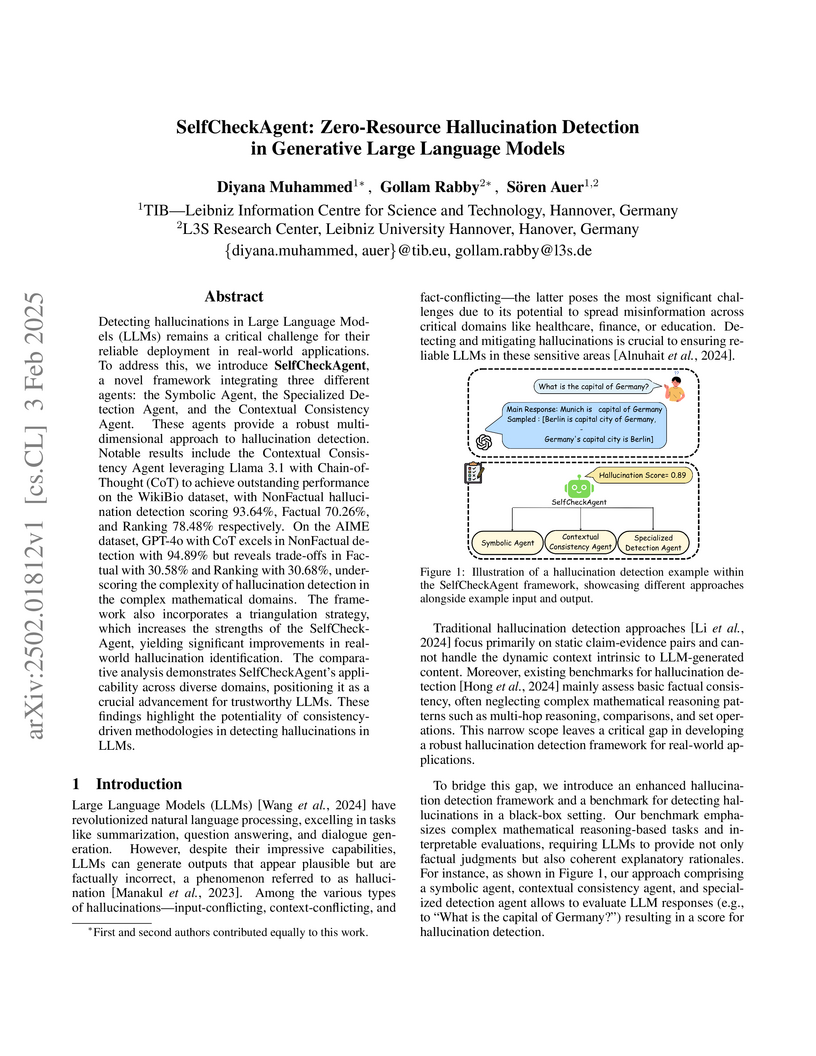

03 Feb 2025

The SelfCheckAgent framework introduces a zero-resource, black-box method for detecting hallucinations in large language models, particularly in complex mathematical reasoning. It combines three specialized agents (Symbolic, Specialized Detection, and Contextual Consistency) via a triangulation strategy and demonstrates improved detection performance on datasets such as WikiBio and the newly introduced AIME dataset.
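The summary names three agents but does not state how their outputs are combined. A minimal sketch, assuming each agent returns a hallucination score in [0, 1], combines them with a weighted mean and a threshold. This is our assumption for illustration, not the paper's triangulation rule, and the agent keys and `triangulate` function are hypothetical.

```python
def triangulate(scores, weights=None, threshold=0.5):
    """Combine per-agent hallucination scores into one verdict.

    scores:    agent name -> score in [0, 1], higher meaning "more likely hallucinated"
    weights:   optional agent name -> non-negative weight (equal weights by default)
    threshold: combined score at or above which the output is flagged
    """
    if weights is None:
        weights = {name: 1.0 for name in scores}
    total = sum(weights[name] for name in scores)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    combined = sum(weights[name] * scores[name] for name in scores) / total
    return combined, combined >= threshold


# Hypothetical outputs from the three agents for one generated answer.
combined, flagged = triangulate({"symbolic": 0.9, "specialized": 0.6, "contextual": 0.3})
print(round(combined, 2), flagged)  # 0.6 True
```

Passing unequal `weights` lets a more trusted agent (for instance, a symbolic checker on arithmetic) dominate the verdict.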

20 Mar 2024

The proliferation of scholarly publications underscores the necessity for reliable tools to navigate scientific literature. OpenAlex, an emerging platform amalgamating data from diverse academic sources, holds promise in meeting these evolving demands. Nonetheless, our investigation uncovered a flaw in OpenAlex's portrayal of publication status, particularly concerning retractions. Despite accurate metadata sourced from the Crossref database, OpenAlex consolidated this information into a single boolean field, "is_retracted," leading to misclassifications of papers. This challenge not only impacts OpenAlex users but also extends to users of other academic resources integrating the OpenAlex API. The issue affects data provided by OpenAlex in the period between 22 Dec 2023 and 19 Mar 2024. Anyone using data from this period should urgently check it and replace it if necessary.
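For users with locally cached OpenAlex records, a minimal sketch of the recommended check flags every record downloaded within the affected period. It assumes the user stored a download date per record; the `retrieved_on` field, the example IDs, and the `needs_recheck` function are hypothetical, and treating both period boundaries as inclusive is an assumption.

```python
from datetime import date

# Affected period named in the abstract; treating both ends as inclusive is an assumption.
AFFECTED_START = date(2023, 12, 22)
AFFECTED_END = date(2024, 3, 19)


def needs_recheck(records):
    """Return IDs of cached OpenAlex works that were downloaded in the affected period.

    Each record is assumed to be a dict holding an `id`, the OpenAlex
    `is_retracted` flag, and a hypothetical `retrieved_on` date stored by the
    user at download time. Records are flagged regardless of `is_retracted`,
    since the abstract does not say in which direction the errors go.
    """
    return [r["id"] for r in records
            if AFFECTED_START <= r["retrieved_on"] <= AFFECTED_END]


cache = [
    {"id": "W1", "is_retracted": True, "retrieved_on": date(2024, 1, 10)},
    {"id": "W2", "is_retracted": False, "retrieved_on": date(2024, 4, 1)},
    {"id": "W3", "is_retracted": False, "retrieved_on": date(2023, 12, 22)},
]
print(needs_recheck(cache))  # ['W1', 'W3']
```

Flagged records could then be re-fetched and their retraction status compared against the Crossref metadata.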

Content-based information retrieval relies on the information contained in documents rather than on metadata such as keywords. Most information retrieval methods are based on either text or images. In this paper, we investigate the usefulness of multimodal features for cross-lingual news search in various domains: politics, health, environment, sport, and finance. To this end, we consider five feature types for image and text and compare the performance of the retrieval system using different combinations. Experimental results show that retrieval results can be improved when considering both visual and textual information. In addition, we observe that, among textual features, entity overlap outperforms word embeddings, while among visual features, geolocation embeddings achieve the best performance in the retrieval task.
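Combining textual and visual evidence can be sketched with late fusion: score each document by a weighted sum of a text similarity and an image similarity. In this minimal sketch, entity overlap is computed as Jaccard similarity and the visual score is assumed to be precomputed (for example, from geolocation embeddings). The Jaccard choice, the weight `alpha`, and the document keys are our assumptions, not the paper's exact formulation.

```python
def entity_overlap(query_entities, doc_entities):
    """Jaccard overlap of two entity sets; 0.0 when both are empty."""
    q, d = set(query_entities), set(doc_entities)
    union = q | d
    return len(q & d) / len(union) if union else 0.0


def rank(query_entities, docs, alpha=0.5):
    """Rank documents by a weighted sum of text and image similarity (late fusion).

    Each doc is a dict with hypothetical keys `id`, `entities` (textual feature)
    and `image_sim` (a precomputed visual similarity in [0, 1]).
    """
    def score(doc):
        text_sim = entity_overlap(query_entities, doc["entities"])
        return alpha * text_sim + (1 - alpha) * doc["image_sim"]

    return [doc["id"] for doc in sorted(docs, key=score, reverse=True)]


docs = [
    {"id": "A", "entities": {"Geneva", "UN"}, "image_sim": 0.9},
    {"id": "B", "entities": {"WHO", "Geneva"}, "image_sim": 0.2},
    {"id": "C", "entities": set(), "image_sim": 0.1},
]
query = {"WHO", "Geneva"}
print(rank(query, docs))             # ['A', 'B', 'C']
print(rank(query, docs, alpha=0.8))  # ['B', 'A', 'C']
```

The two calls show how the text/image weighting changes the ranking; in practice `alpha` would be tuned on held-out queries.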

The growing volume of biomedical scholarly document abstracts presents an increasing challenge in efficiently retrieving accurate and relevant information. To address this, we introduce a novel approach that integrates an optimized topic modelling framework, OVB-LDA, with the BI-POP CMA-ES optimization technique for enhanced scholarly document abstract categorization. Complementing this, we employ the distilled MiniLM model, fine-tuned on domain-specific data, for high-precision answer extraction. Our approach is evaluated across three configurations: scholarly document abstract retrieval, gold-standard scholarly document abstracts, and gold-standard snippets, consistently outperforming established methods such as RYGH and bio-answer finder. Notably, we demonstrate that extracting answers from scholarly document abstracts alone can yield high accuracy, underscoring the sufficiency of abstracts for many biomedical queries. Despite its compact size, MiniLM exhibits competitive performance, challenging the prevailing notion that only large, resource-intensive models can handle such complex tasks. Our results, validated across various question types and evaluation batches, highlight the robustness and adaptability of our method in real-world biomedical applications. While our approach shows promise, we identify challenges in handling complex list-type questions and inconsistencies in evaluation metrics. Future work will focus on refining the topic model with more extensive domain-specific datasets, further optimizing MiniLM, and utilizing large language models (LLMs) to improve both precision and efficiency in biomedical question answering.

The consumption of news has changed significantly as the Web has become the most influential medium for information. To analyze and contextualize the large amount of news published every day, the geographic focus of an article is an important aspect in order to enable content-based news retrieval. There are methods and datasets for geolocation estimation from text or photos, but they are typically considered as separate tasks. However, the photo might lack geographical cues and text can include multiple locations, making it challenging to recognize the focus location using a single modality. In this paper, a novel dataset called Multimodal Focus Location of News (MM-Locate-News) is introduced. We evaluate state-of-the-art methods on the new benchmark dataset and suggest novel models to predict the focus location of news using both textual and image content. The experimental results show that the multimodal model outperforms unimodal models.

17 Jul 2025

In research, measuring instruments play a crucial role in producing the data that underpin scientific discoveries. Information about instruments is essential in data interpretation and, thus, knowledge production. However, if at all available and accessible, such information is scattered across numerous data sources. Relating the relevant details, e.g. instrument specifications or calibrations, with associated research assets (data, but also operating infrastructures) is challenging. Moreover, understanding the (possible) use of instruments is essential for researchers in experiment design and execution. To address these challenges, we propose a Knowledge Graph (KG) based approach for representing, publishing, and using information, extracted from various data sources, about instruments and associated scholarly artefacts. The resulting KG serves as a foundation for exploring and gaining a deeper understanding of the use and role of instruments in research, discovering relations between instruments and associated artefacts (articles and datasets), and opens the possibility to quantify the impact of instruments in research.
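The proposed KG relates instruments to articles and datasets and opens the possibility of quantifying instrument impact. A minimal sketch with plain Python triples illustrates both ideas: finding artefacts linked to an instrument, and counting usage as a crude impact indicator. The identifiers and predicates (`usedIn`, `produced`, `citedBy`) are made up for illustration and are not the vocabulary the authors use.

```python
from collections import Counter

# Toy (subject, predicate, object) triples; every identifier is hypothetical.
TRIPLES = [
    ("instrument:lidar-1", "usedIn", "article:A"),
    ("instrument:lidar-1", "produced", "dataset:D1"),
    ("instrument:lidar-1", "usedIn", "article:C"),
    ("instrument:spectrometer-2", "usedIn", "article:B"),
    ("dataset:D1", "citedBy", "article:C"),
]


def related(triples, node):
    """All nodes directly linked to `node`, in either direction, sorted."""
    outgoing = {o for s, _, o in triples if s == node}
    incoming = {s for s, _, o in triples if o == node}
    return sorted(outgoing | incoming)


def usage_counts(triples):
    """Articles per instrument: a crude, illustrative proxy for instrument impact."""
    return Counter(s for s, p, _ in triples if p == "usedIn" and s.startswith("instrument:"))


print(related(TRIPLES, "instrument:lidar-1"))  # ['article:A', 'article:C', 'dataset:D1']
print(usage_counts(TRIPLES).most_common(1))     # [('instrument:lidar-1', 2)]
```

A production KG would typically use RDF and SPARQL for the same queries; plain tuples keep the sketch dependency-free.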

Leveraging a GraphQL-based federated query service that integrates multiple scholarly communication infrastructures (specifically, DataCite, ORCID, ROR, OpenAIRE, Semantic Scholar, Wikidata and Altmetric), we develop a novel web widget based approach for the presentation of scholarly knowledge with rich contextual information. We implement the proposed approach in the Open Research Knowledge Graph (ORKG) and showcase it on three kinds of widgets. First, we devise a widget for the ORKG paper view that presents contextual information about related datasets, software, project information, topics, and metrics. Second, we extend the ORKG contributor profile view with contextual information including authored articles, developed software, linked projects, and research interests. Third, we advance ORKG comparison faceted search by introducing contextual facets (e.g. citations). As a result, the devised approach enables presenting ORKG scholarly knowledge flexibly enriched with contextual information sourced in a federated manner from numerous technologically heterogeneous scholarly communication infrastructures.