Université Jean Monnet

19 Sep 2025

We consider constrained-degree percolation on the hypercubic lattice. Initially, all edges are closed, and each edge independently attempts to open at a uniformly distributed random time; the attempt succeeds if, at that instant, both end-vertices have degrees strictly less than a prescribed parameter. The absence of the FKG inequality and the finite energy property, as well as the infinite range of dependency, make the rigorous analysis of the model particularly challenging. In this work, we show that the one-arm probability exhibits exponential decay in its entire subcritical phase. The proof relies on the Duminil-Copin--Raoufi--Tassion randomized algorithm method and resolves a problem of dos Santos and the second author. At the heart of the argument lies an intricate combinatorial transformation of pivotality in the spirit of Aizenman--Grimmett essential enhancements, but with unbounded range. This technique may be of use in other dynamical settings.
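As a rough illustration of the dynamics described above, the following sketch simulates the model on a finite n × n box of the square lattice. The finite box, the function name `constrained_degree_percolation`, and the time horizon `t` are assumptions made for illustration; the abstract itself concerns the infinite hypercubic lattice.

```python
import random

def constrained_degree_percolation(n, k, t, seed=0):
    """Simulate constrained-degree percolation on an n x n grid up to time t.

    Hypothetical helper: every edge gets an independent uniform clock in [0, 1);
    when its clock rings, the edge opens only if both end-vertices currently
    have degree strictly less than k.
    """
    rng = random.Random(seed)
    edges = []
    for x in range(n):
        for y in range(n):
            if x + 1 < n:
                edges.append(((x, y), (x + 1, y)))
            if y + 1 < n:
                edges.append(((x, y), (x, y + 1)))

    # Attach a uniform opening time to each edge and process in time order.
    clocks = sorted((rng.random(), e) for e in edges)
    degree = {(x, y): 0 for x in range(n) for y in range(n)}
    open_edges = []
    for time, (u, v) in clocks:
        if time > t:
            break  # later attempts happen after the observation time
        if degree[u] < k and degree[v] < k:
            degree[u] += 1
            degree[v] += 1
            open_edges.append((u, v))
    return open_edges, degree
```

With `k` equal to the full lattice degree (4 on the square grid) the constraint never blocks an attempt, so at `t = 1` every edge is open; smaller `k` makes the outcome depend on the order of the clocks, which is the source of the long-range dependency mentioned in the abstract.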

19 Oct 2025

We study a scalar conservation law on the torus in which the flux $j$ is composed of a Coulomb interaction and a nonlinear mobility: $j = -u^m \nabla g * u$. We prove existence of entropy solutions and a weak-strong uniqueness principle. We also prove several properties shared among entropy solutions, in particular a lower barrier in the fast diffusion regime $m < 1$. In the porous media regime $m \geq 1$, we study the decreasing rearrangement of solutions, which allows us to prove an instantaneous growth of the support and a waiting time phenomenon. We also show exponential convergence of the solutions towards the spatial average in several topologies.

Individual identification plays a pivotal role in ecology and ethology, notably as a tool for understanding complex social structures. However, traditional identification methods often involve invasive physical tags and can prove both disruptive for animals and time-intensive for researchers. In recent years, the integration of deep learning in research has offered new methodological perspectives through the automation of complex tasks. Researchers increasingly harness object detection and recognition technologies to identify individuals in video footage. This study represents a preliminary exploration into the development of a non-invasive tool for face detection and individual identification of Japanese macaques (Macaca fuscata) through deep learning. The ultimate goal of this research is to use the identifications made on the dataset to automatically generate a social network representation of the studied population. The current main results are promising: (i) the creation of a face detector for Japanese macaques (Faster-RCNN model), reaching an accuracy of 82.2%, and (ii) the creation of an individual recognizer for the Kōjima island macaque population (YOLOv8n model), reaching an accuracy of 83%. We also created a social network of the Kōjima population by traditional methods, based on co-occurrences in videos. We thus provide a benchmark against which the automatically generated network will be assessed for reliability. These preliminary results show the potential of this approach to provide the scientific community with a tool for tracking individuals and studying social networks in Japanese macaques.

18 Feb 2025

The JKO scheme is a time-discrete scheme of implicit Euler type that allows one to construct weak solutions of evolution PDEs which have a Wasserstein gradient structure. The purpose of this work is to study the effect of replacing the classical quadratic optimal transport problem by the Schrödinger problem (a.k.a. the entropic regularization of optimal transport, efficiently computed by the Sinkhorn algorithm) at each step of this scheme. We find that if $\epsilon$ is the regularization parameter of the Schrödinger problem, and $\tau$ is the time step parameter, considering the limit $\tau, \epsilon \to 0$ with $\frac{\epsilon}{\tau} \to \alpha \in \mathbb{R}_+$ results in adding the term $\frac{\alpha}{2} \Delta \rho$ on the right-hand side of the limiting PDE. In the case $\alpha = 0$ we improve a previous result by Carlier, Duval, Peyré and Schmitzer (2017).
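To make the Sinkhorn step mentioned above concrete, here is a minimal sketch of the standard Sinkhorn iteration for entropic optimal transport between two discrete measures. It illustrates only the inner solver, not the JKO scheme or the analysis of the paper; the function name `sinkhorn`, the list-based representation and the fixed iteration count are assumptions made for illustration.

```python
import math

def sinkhorn(mu, nu, cost, eps, n_iter=500):
    """Entropic optimal transport plan between discrete measures mu and nu.

    cost[i][j] is the transport cost from point i to point j, and eps is the
    regularization parameter. Returns the plan as a nested list.
    """
    n, m = len(mu), len(nu)
    # Gibbs kernel associated with the cost and the regularization eps.
    K = [[math.exp(-cost[i][j] / eps) for j in range(m)] for i in range(n)]
    a = [1.0] * n
    b = [1.0] * m
    for _ in range(n_iter):
        # Rescale rows so that the first marginal of the plan matches mu.
        a = [mu[i] / sum(K[i][j] * b[j] for j in range(m)) for i in range(n)]
        # Rescale columns so that the second marginal matches nu.
        b = [nu[j] / sum(K[i][j] * a[i] for i in range(n)) for j in range(m)]
    return [[a[i] * K[i][j] * b[j] for j in range(m)] for i in range(n)]
```

Smaller values of `eps` bring the plan closer to an unregularized optimal plan but slow down convergence of the iteration, so the iteration count would need to grow accordingly.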

3D human body shape and pose estimation from RGB images is a challenging problem with potential applications in augmented/virtual reality, healthcare and fitness technology, and virtual retail. Recent solutions have focused on three types of inputs: i) single images, ii) multi-view images and iii) videos. In this study, we surveyed and compared 3D body shape and pose estimation methods for contemporary dance and performing arts, with a special focus on human body pose and clothing, camera viewpoint, illumination conditions and background conditions. We demonstrated that multi-frame methods, such as PHALP, provide better pose estimation results than single-frame methods when dancers are performing contemporary dances.

22 May 2023

Public Procurement refers to governments' purchasing activities of goods, services, and construction of public works. In the European Union (EU), it is an essential sector, corresponding to 15% of the GDP. EU public procurement generates large amounts of data, because award notices related to contracts exceeding a predefined threshold must be published on the TED (EU's official journal). Under the framework of the DeCoMaP project, which aims at leveraging such data in order to predict fraud in public procurement, we constitute the FOPPA (French Open Public Procurement Award notices) database. It contains the description of 1,380,965 lots obtained from the TED, covering the 2010--2020 period for France. We detect a number of substantial issues in these data, and propose a set of automated and semi-automated methods to solve them and produce a usable database. It can be leveraged to study public procurement in an academic setting, but also to facilitate the monitoring of public policies, and to improve the quality of the data offered to buyers and suppliers.

26 Aug 2025

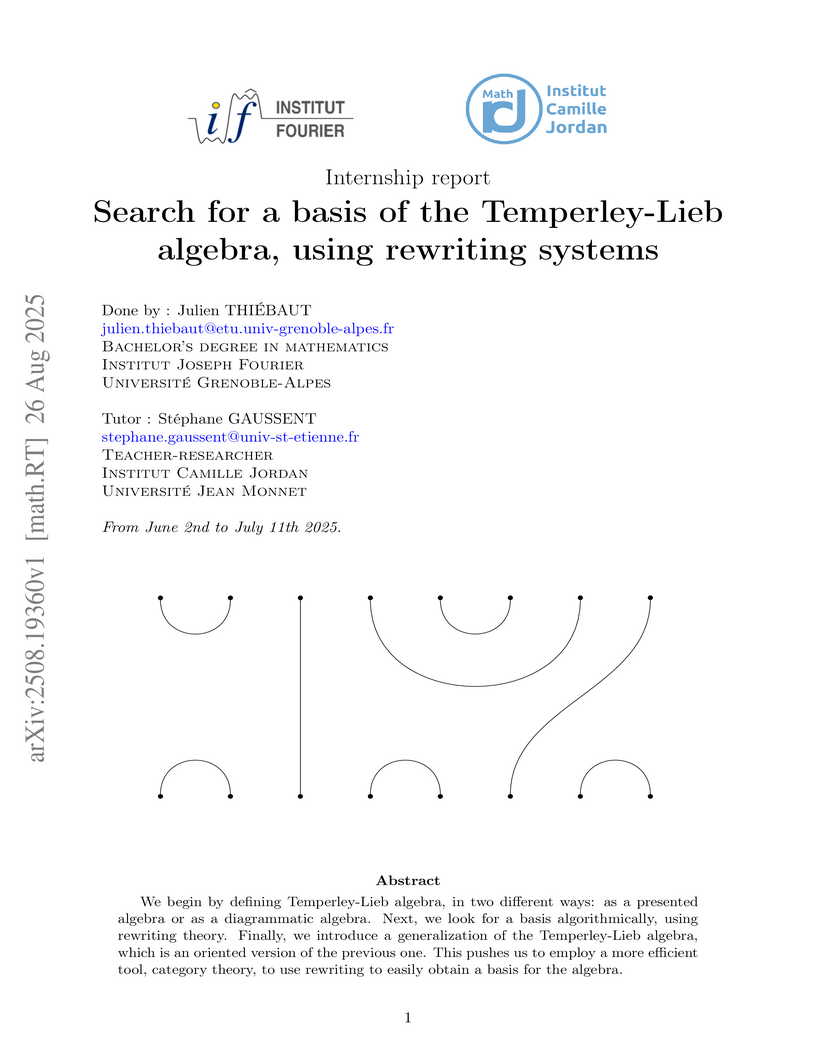

We begin by defining the Temperley-Lieb algebra in two different ways: as a presented algebra and as a diagrammatic algebra. Next, we look for a basis algorithmically, using rewriting theory. Finally, we introduce a generalization of the Temperley-Lieb algebra, which is an oriented version of the previous one. This pushes us to employ a more efficient tool, category theory, so that rewriting can be used to easily obtain a basis for the algebra.

08 May 2024

Recent developments in deep learning have established some theoretical properties of deep neural network estimators. However, most of the existing works on this topic are restricted to bounded loss functions or (sub)-Gaussian or bounded input. This paper considers robust deep learning from weakly dependent observations, with unbounded loss function and unbounded input/output. It is only assumed that the output variable has a finite moment of order r, with r>1. Non-asymptotic bounds for the expected excess risk of the deep neural network estimator are established under strong mixing and ψ-weak dependence assumptions on the observations. We derive a relationship between these bounds and r, and when the data have moments of any order (that is, r=∞), the convergence rate is close to some well-known results. When the target predictor belongs to the class of Hölder smooth functions with sufficiently large smoothness index, the rate of the expected excess risk for exponentially strongly mixing data is close to or the same as that obtained with i.i.d. samples. Applications to robust nonparametric regression and robust nonparametric autoregression are considered. The simulation study for models with heavy-tailed errors shows that robust estimators with absolute loss and Huber loss functions outperform the least squares method.

13 Mar 2025

We discuss non-reversible Markov-chain Monte Carlo algorithms that, for particle systems, rigorously sample the positional Boltzmann distribution and that have faster than physical dynamics. These algorithms all feature a non-thermal velocity distribution. They are exemplified by the lifted TASEP (totally asymmetric simple exclusion process), a one-dimensional lattice reduction of event-chain Monte Carlo. We analyze its dynamics in terms of a velocity trapping that arises from correlations between the local density and the particle velocities. This allows us to formulate a conjecture for its out-of-equilibrium mixing time scale, and to rationalize its equilibrium superdiffusive time scale. Both scales are faster than for the (unlifted) TASEP. They are further justified by our analysis of the lifted TASEP in terms of many-particle realizations of true self-avoiding random walks. We discuss velocity trapping beyond the case of one-dimensional lattice models and in more than one physical dimension. Possible applications beyond physics are pointed out.

16 Sep 2025

We develop, simulate and extend an initial proposition by Chaves et al. concerning a random incompressible vector field able to reproduce key ingredients of three-dimensional turbulence in both space and time. In this first article, we focus on the important underlying Gaussian structure that will be generalized in a second article to account for higher-order statistics. Presently, the statistical spatial structure of this velocity field is consistent with a divergence-free fractional Gaussian vector field that encodes all known properties of homogeneous and isotropic fluid turbulence at a given finite Reynolds number, up to second-order statistics. The temporal structure of the velocity field is introduced through a stochastic evolution of the respective Fourier modes. In the simplest picture, Fourier modes evolve according to an Ornstein-Uhlenbeck process, where the characteristic time scale depends on the wave-vector amplitude. For consistency with direct numerical simulations (DNSs) of the Navier-Stokes equations, this time scale is inversely proportional to the wave vector amplitude. As a consequence, the characteristic velocity that governs the eddies is independent of their size and is related to the velocity standard deviation, which is consistent with some features of the so-called sweeping effect. To ensure differentiability in time while respecting the causal nature of the evolution, we use the methodology developed by Viggiano et al. to propose a fully consistent stochastic picture, predicting in particular proper temporal covariance of the Fourier modes. We finally derive analytically all statistical quantities in a continuous setup and develop precise and efficient numerical schemes of the corresponding periodic framework. Both exact predictions and numerical estimations of the model are compared to DNSs provided by the Johns Hopkins database.
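The "simplest picture" mentioned above, in which each Fourier mode follows an Ornstein-Uhlenbeck process whose time scale is inversely proportional to the wave-vector amplitude, can be sketched as follows. This is only an illustration under stated assumptions: the mode is taken real-valued for simplicity, and the names `correlation_time`, `ou_step` and the constant `c` are hypothetical. It does not implement the time-differentiable construction of Viggiano et al. used in the paper.

```python
import math
import random

def correlation_time(k_norm, c=1.0):
    """Time scale inversely proportional to the wave-vector amplitude.

    c is an assumed characteristic velocity (same units as length / time).
    """
    return 1.0 / (c * k_norm)

def ou_step(x, dt, T, sigma, rng):
    """Exact one-step update of a stationary Ornstein-Uhlenbeck process.

    T is the correlation time and sigma the stationary standard deviation.
    """
    decay = math.exp(-dt / T)
    noise = sigma * math.sqrt(1.0 - decay ** 2) * rng.gauss(0.0, 1.0)
    return x * decay + noise

def simulate_mode(k_norm, sigma, dt, n_steps, c=1.0, seed=0):
    """Trajectory of one real-valued mode, started from zero."""
    rng = random.Random(seed)
    T = correlation_time(k_norm, c)
    x, path = 0.0, []
    for _ in range(n_steps):
        x = ou_step(x, dt, T, sigma, rng)
        path.append(x)
    return path
```

Because the update is the exact transition of the process rather than an Euler discretization, the stationary variance stays equal to `sigma ** 2` for any time step `dt`.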

Nowadays, IoT devices have an expanding scope of activities, ranging from sensing and computing to acting and, beyond that, learning, reasoning and planning. As the number of IoT applications increases, these objects are becoming more and more ubiquitous. Therefore, they need to adapt their functionality in response to the uncertainties of their environment to achieve their goals. In Human-centered IoT, objects and devices have direct interactions with human beings and have access to online contextual information. Self-adaptation of such applications is a crucial subject that needs to be addressed in a way that respects human goals and human values. Hence, IoT applications must be equipped with self-adaptation techniques to manage their run-time uncertainties locally or in cooperation with each other. This paper presents SMASH: a multi-agent approach for self-adaptation of IoT applications in human-centered environments. In this paper, we have considered the Smart Home as the case study of smart environments. SMASH agents are provided with a 4-layer architecture based on the BDI agent model that integrates human values with goal-reasoning, planning, and acting. It also takes advantage of a semantic-enabled platform called Home'In to address interoperability issues among non-identical agents and devices with heterogeneous protocols and data formats. This approach is compared with the literature and is validated by developing a scenario as the proof of concept. The timely responses of SMASH agents show the feasibility of the proposed approach in human-centered environments.

Recent research considers few-shot intent detection as a meta-learning problem: the model is learning to learn from a consecutive set of small tasks named episodes. In this work, we propose ProtAugment, a meta-learning algorithm for short-text classification (the intent detection task). ProtAugment is a novel extension of Prototypical Networks that limits overfitting on the bias introduced by the few-shot classification objective at each episode. It relies on diverse paraphrasing: a conditional language model is first fine-tuned for paraphrasing, and diversity is later introduced at the decoding stage at each meta-learning episode. The diverse paraphrasing is unsupervised as it is applied to unlabelled data, and is then fed into the Prototypical Network training objective as a consistency loss. ProtAugment is the state-of-the-art method for intent detection meta-learning, at no extra labeling effort and without the need to fine-tune a conditional language model on a given application domain.

Recent advances in depth imaging sensors provide easy access to depth synchronized with color, called an RGB-D image. In this paper, we propose an unsupervised method for indoor RGB-D image segmentation and analysis. We consider a statistical image generation model based on the color and geometry of the scene. Our method consists of a joint color-spatial-directional clustering method followed by a statistical planar region merging method. We evaluate our method on the NYU depth database and compare it with existing unsupervised RGB-D segmentation methods. Results show that it is comparable with the state-of-the-art methods and that it needs less computation time. Moreover, it opens interesting perspectives to fuse color and geometry in an unsupervised manner.

20 Jul 2020

We consider the contact process on the model of hyperbolic random graph, in the regime when the degree distribution obeys a power law with exponent $\chi \in (1,2)$ (so that the degree distribution has finite mean and infinite second moment). We show that the probability of non-extinction as the rate of infection goes to zero decays as a power law with an exponent that only depends on $\chi$ and which is the same as in the configuration model, suggesting some universality of this critical exponent. We also consider finite versions of the hyperbolic graph and prove metastability results, as the size of the graph goes to infinity.

We prove an existence result for a large class of PDEs with a nonlinear Wasserstein gradient flow structure. We use the classical theory of Wasserstein gradient flow to derive an EDI formulation of our PDE and prove that under some integrability assumptions on the initial condition the PDE is satisfied in the sense of distributions.

27 Mar 2023

We study a class of time-inhomogeneous diffusions: the self-interacting ones. We show a convergence result with a rate of convergence that does not depend on the diffusion coefficient. Finally, we establish a so-called Kramers' type law for the first exit-time of the process from domains of attraction when the landscapes are uniformly convex.

Light propagation in semiconductors is the cornerstone of emerging disruptive technologies holding considerable potential to revolutionize telecommunications, sensors, quantum engineering, healthcare, and artificial intelligence. Sky-high optical nonlinearities make these materials ideal platforms for photonic integrated circuits. The fabrication of such complex devices could greatly benefit from in-volume ultrafast laser writing for monolithic and contactless integration. Ironically, as exemplified for Si, nonlinearities act as an efficient immune system self-protecting the material from internal permanent modifications that ultrashort laser pulses could potentially produce. While nonlinear propagation of high-intensity ultrashort laser pulses has been extensively investigated in Si, other semiconductors remain uncharted. In this work, we demonstrate that filamentation universally dictates ultrashort laser pulse propagation in various semiconductors. The effective key nonlinear parameters obtained strongly differ from standard measurements with low-intensity pulses. Furthermore, the temporal scaling laws for these key parameters are extracted. Temporal-spectral shaping is finally proposed to optimize energy deposition inside semiconductors. The whole set of results lays the foundations for future improvements, up to the point where semiconductors can be selectively tailored internally by ultrafast laser writing, thus leading to countless applications for in-chip processing and functionalization, and opening new markets in various sectors including technology, photonics, and semiconductors.

The ability of a human being to extrapolate previously gained knowledge to other domains inspired a new family of methods in machine learning called transfer learning. Transfer learning is often based on the assumption that objects in both target and source domains share some common feature and/or data space. In this paper, we propose a simple and intuitive approach that iteratively minimizes the distance between source and target task distributions by optimizing the kernel target alignment (KTA). We show that this procedure is suitable for transfer learning by relating it to the Hilbert-Schmidt Independence Criterion (HSIC) and Quadratic Mutual Information (QMI) maximization. We run our method on benchmark computer vision data sets and show that it can outperform some state-of-the-art methods.
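For readers unfamiliar with the quantity being optimized, the sketch below computes the standard kernel target alignment between a kernel matrix K and a label vector y, namely the normalized Frobenius inner product between K and the ideal kernel $y y^\top$. It shows only this definition, not the iterative transfer procedure of the paper; the function name and the list-based representation are assumptions made for illustration.

```python
import math

def kernel_target_alignment(K, y):
    """Alignment <K, yy^T>_F / (||K||_F * ||yy^T||_F) for a square kernel K."""
    n = len(y)
    # Frobenius inner product between K and the ideal kernel y y^T.
    inner = sum(K[i][j] * y[i] * y[j] for i in range(n) for j in range(n))
    norm_K = math.sqrt(sum(K[i][j] ** 2 for i in range(n) for j in range(n)))
    # The Frobenius norm of y y^T equals the squared Euclidean norm of y.
    norm_target = sum(v * v for v in y)
    return inner / (norm_K * norm_target)
```

The alignment equals 1 when K is proportional to $y y^\top$, and for labels in {-1, +1} an identity kernel on n points gives $1/\sqrt{n}$.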

12 May 2025

We describe an algorithm that computes the minimal list of inequalities for the moment cone of any representation of a complex reductive group. We give more details for the Kronecker cone (that is, the asymptotic support of the Kronecker coefficients) and the fermionic cone. These two cases correspond to the action of $\mathrm{GL}_{d_1}(\mathbb{C}) \times \cdots \times \mathrm{GL}_{d_s}(\mathbb{C})$ on $\mathbb{C}^{d_1} \otimes \cdots \otimes \mathbb{C}^{d_s}$ and of $\mathrm{GL}_d(\mathbb{C})$ on $\bigwedge^r \mathbb{C}^d$, respectively. These two cases are implemented in Python-Sage. Available at this https URL, our algorithm improves in several directions on the one given by Vergne-Walter in Inequalities for Moment Cones of Finite-Dimensional Representations. For example, it can compute the minimal list of 463 inequalities for the Kronecker cone (5,5,5) in 20 minutes. An important ingredient is an algorithm that decides whether a map is birational or not. It could be of independent interest in effective algebraic geometry.

07 Oct 2024

In 2019, D. Muthiah proposed a strategy to define affine Kazhdan-Lusztig R-polynomials for Kac-Moody groups. Since then, Bardy-Panse, the first author and Rousseau have introduced the formalism of twin masures, and the authors have extended combinatorial results from affine root systems to general Kac-Moody root systems in a previous article. In this paper, we use these results to explicitly define affine Kazhdan-Lusztig R-polynomials for Kac-Moody groups. The construction is based on a path model lifting to twin masures. Conjecturally, these polynomials give the cardinality of intersections of opposite affine Schubert cells, as in the case of reductive groups.