University of Monastir

A novel knowledge distillation framework enables transfer of Transformer-based language model capabilities to xLSTM architectures through ∆-distillation and Frobenius norm regularization, achieving comparable performance with significantly reduced computational complexity and parameter count while training on only 512M tokens.

MoxE (Mixture of xLSTM Experts) introduces a language model architecture that combines xLSTM-based sequence mixing with a sparse mixture of experts and an entropy-aware routing mechanism. This approach enables efficient computation by intelligently allocating resources based on token difficulty, achieving lower perplexity on generalization tasks like Lambada OpenAI while maintaining efficiency.

To improve the segmentation of diabetic retinopathy lesions (microaneurysms, hemorrhages, exudates, and soft exudates), we implemented a binary segmentation method specific to each type of lesion. As a post-segmentation step, we combined the individual model outputs into a single image to better analyze the lesion types. This approach facilitated parameter optimization and improved accuracy, effectively overcoming challenges related to dataset limitations and annotation complexity. Specific preprocessing steps included cropping and applying contrast-limited adaptive histogram equalization to the L channel of the LAB image. Additionally, we employed targeted data augmentation techniques to further refine the model's efficacy. Our methodology utilized the DeepLabv3+ model, achieving a segmentation accuracy of 99%. These findings highlight the efficacy of innovative strategies in advancing medical image analysis, particularly in the precise segmentation of diabetic retinopathy lesions. The IDRiD dataset was utilized to validate and demonstrate the robustness of our approach.

The evil twin attack is a major security threat to WLANs. An evil twin is a rogue AP installed by a malicious user to impersonate legitimate APs. It aims to attract victims in order to intercept their credentials, steal their sensitive information, eavesdrop on their data, etc. In this paper, we study the security mechanisms of wireless networks and introduce the different authentication methods, including 802.1X authentication. We show that 802.1X has improved security through the use of digital certificates but does not define any practical technique for the user to check the network certificate. Therefore, it remains vulnerable to the evil twin attack. To repair this vulnerability, we introduce the Robust Certificate Management System (RCMS), which takes advantage of the digital certificates of 802.1X to protect users against rogue APs. RCMS defines a new verification code that allows the user device to check the network certificate. This practical verification, combined with the reliability of digital certificates, provides perfect protection against rogue APs. RCMS requires a small software update on the user terminal and does not need any modification of IEEE 802.11. It offers significant flexibility, since trusting a single AP is enough to trust all the APs of the extended network. This allows administrators to extend their networks easily without the need to update any database of trusted APs on the user devices.

22 Oct 2015

In this work, we have theoretically investigated the intermixing effect in a highly strained In0.3Ga0.7As/GaAs quantum well (QW), taking into consideration the composition profile change resulting from in-situ indium surface segregation. To study the impact of the segregation effects on postgrowth intermixing, the one-dimensional steady-state Schrödinger equation and Fick's second law of diffusion have been solved numerically using finite difference methods. The impact of the In/Ga interdiffusion on the QW emission energy is considered for different In segregation coefficients. Our results show that the intermixed QW emission energy is strongly dependent on the segregation effects. The interdiffusion-enhanced energy shift is found to be considerably reduced for higher segregation coefficients. This work adds considerable insight into the understanding and modelling of the effects of interdiffusion in semiconductor nanostructures.
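
As a rough illustration of the diffusion step mentioned above, the following sketch integrates Fick's second law, dC/dt = D d²C/dz², with an explicit finite-difference scheme and zero-flux boundaries. It is not the authors' code: the function names, the 7 nm well width, the 1 nm diffusion length and the square as-grown profile (which ignores segregation) are all assumptions chosen for the example.

```python
import numpy as np


def square_well_profile(z_nm, width_nm=7.0, x_in=0.3):
    """Hypothetical as-grown indium profile: x_in inside the well, 0 outside."""
    return np.where(np.abs(z_nm) <= width_nm / 2.0, x_in, 0.0)


def interdiffuse(profile, dz_nm, diffusion_length_nm, n_steps=2000):
    """Explicit (FTCS) solution of Fick's second law with zero-flux ends.

    diffusion_length_nm is sqrt(D * t); splitting the total D * t over
    n_steps gives the dimensionless step r = D * dt / dz**2.
    """
    c = np.asarray(profile, dtype=float).copy()
    r = diffusion_length_nm ** 2 / (n_steps * dz_nm ** 2)
    if r > 0.5:
        raise ValueError("unstable step: increase n_steps or dz_nm")
    for _ in range(n_steps):
        padded = np.pad(c, 1, mode="edge")  # mirror end values: zero flux
        c = c + r * (padded[2:] - 2.0 * c + padded[:-2])
    return c


# Assumed grid: 30 nm window sampled every 0.25 nm.
z = np.arange(-15.0, 15.0 + 1e-9, 0.25)
as_grown = square_well_profile(z)
intermixed = interdiffuse(as_grown, dz_nm=0.25, diffusion_length_nm=1.0)
```

The intermixed composition profile would then feed the potential of the Schrödinger solver; a segregated as-grown profile could be substituted for `square_well_profile` without changing the diffusion routine.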

Ocular pathology detection from fundus images presents an important challenge in health care. Each pathology has different severity stages that may be deduced by verifying the existence of specific lesions. Each lesion is characterized by morphological features. Moreover, several lesions of different pathologies have similar features, and a patient may be affected simultaneously by several pathologies. Consequently, ocular pathology detection is a multi-class classification problem with a complex resolution principle. Several methods for detecting ocular pathologies from fundus images have been proposed. The methods based on deep learning are distinguished by higher detection performance, due to their capability to configure the network with respect to the detection objective. This work proposes a survey of ocular pathology detection methods based on deep learning. First, we study the existing methods for either lesion segmentation or pathology classification. Afterwards, we extract the principal processing steps and analyze the proposed neural network structures. Subsequently, we identify the hardware and software environment required to employ the deep learning architecture. Thereafter, we investigate the experimentation principles involved in evaluating the methods and the databases used for the training and testing phases. The detection performance ratios and execution times are also reported and discussed.

In this paper, we introduce a novel concept called the Graph Geometric Control Condition (GGCC). It turns out to be a simple, geometric rewriting of many of the frameworks in which the controllability of PDEs on graphs has been studied. We prove that (GGCC) is a necessary and sufficient condition for the exact controllability of the wave equation on metric graphs with internal controls and Dirichlet boundary conditions. We then investigate the internal exact controllability of the wave equation with mixed boundary conditions and that of the Schrödinger equation, as well as the internal null-controllability of the heat equation. We show that (GGCC) provides a sufficient condition for the controllability of these equations, and we provide explicit examples proving that (GGCC) is not necessary in these cases.

09 Oct 2024

This paper deals with explicit upper and lower bounds for principal eigenvalues and the maximum principle associated with generalized Lane-Emden systems (GLE systems, for short). Regarding the bounds, we generalize the upper estimate of Berestycki, Nirenberg and Varadhan [Comm. Pure Appl. Math. (1994), 47-92] for the first eigenvalue of linear scalar problems on general domains to the case of strongly coupled GLE systems with m⩾2 equations on smooth domains. The explicit lower estimate we obtain is also used to derive a maximum principle for GLE systems in terms of quantitative ingredients. Furthermore, as applications of the previous results, upper and lower estimates for the first eigenvalue of weighted poly-Laplacian eigenvalue problems with Lp weights (p>n) and Navier boundary condition are obtained. Moreover, a strong maximum principle depending on the domain and the weight function for scalar problems involving the poly-Laplacian operator is also established.

Deep convolutional neural networks (Deep CNN) have achieved promising performance for single image super-resolution. In particular, the Deep CNN with Skip Connection and Network in Network (DCSCN) architecture has been successfully applied to natural image super-resolution. In this work we propose an approach called SDT-DCSCN that jointly performs super-resolution and deblurring of low-resolution blurry text images based on DCSCN. Our approach uses subsampled blurry images as input and the original sharp images as ground truth. The architecture uses a higher number of filters in the input CNN layer to better analyze the text details. The quantitative and qualitative evaluations on different datasets demonstrate the high performance of our model in reconstructing high-resolution, sharp text images. In addition, in terms of computational time, our proposed method gives competitive performance compared to state-of-the-art methods.
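
The training setup described above (subsampled blurry inputs paired with sharp ground truth) can be sketched as follows. This is only an illustration under stated assumptions: the Gaussian blur, its `sigma`, the subsampling factor `scale`, the blur-then-subsample order and the function names are hypothetical choices, not details taken from the paper.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel with radius of about 3 * sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def make_training_pair(sharp, scale=2, sigma=1.0):
    """Return (low_res_blurry_input, sharp_target) for a grayscale image."""
    sharp = np.asarray(sharp, dtype=float)
    kernel = gaussian_kernel(sigma)
    pad = len(kernel) // 2
    padded = np.pad(sharp, pad, mode="edge")
    # Separable blur: filter every row, then every column.
    blurred = np.apply_along_axis(
        lambda row: np.convolve(row, kernel, mode="valid"), 1, padded)
    blurred = np.apply_along_axis(
        lambda col: np.convolve(col, kernel, mode="valid"), 0, blurred)
    # Subsample by keeping every scale-th pixel in both directions.
    low_res = blurred[::scale, ::scale]
    return low_res, sharp
```

A network trained on such pairs sees the degraded image as input and is penalized against the untouched sharp image, which is what lets a single model learn super-resolution and deblurring jointly.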

Biometric recognition is the process of verifying or classifying human characteristics in images or videos. It is a complex task that requires machine learning algorithms, including convolutional neural networks (CNNs) and Siamese networks. However, there are several limitations to consider when using these algorithms for image verification and classification tasks. Training may be computationally intensive, requiring specialized hardware and significant computational resources to train and deploy. Moreover, it necessitates a large amount of labeled data, which can be time-consuming and costly to obtain. The main advantage of the proposed TinySiamese compared to the standard Siamese is that it does not require the whole CNN for training. In fact, using a pre-trained CNN as a feature extractor and the TinySiamese to learn the extracted features gave almost the same performance and efficiency as the standard Siamese for biometric verification. In this way, the TinySiamese solves the problems of memory and computational time with a small number of layers, not exceeding 7. It can run on low-power machines that have a standard GPU and cannot allocate a large amount of RAM. Using TinySiamese with only 8 GB of memory, the matching time decreased by 76.78% on the B2F (Biometric images of Fingerprints and Faces), FVC2000, FVC2002 and FVC2004 datasets, while the training time for 10 epochs went down by approximately 93.14% on the B2F, FVC2002, THDD-part1 and CASIA-B datasets. The accuracy of the fingerprint, gait (NM-angle 180 degree) and face verification tasks was better than the accuracy of a standard Siamese by 0.87%, 20.24% and 3.85% respectively. TinySiamese achieved comparable accuracy with related works for the fingerprint and gait classification tasks.

07 Dec 2023

In this paper, we consider a flocculation model in a chemostat where one species is present in two forms, planktonic and aggregated bacteria, in the presence of a single resource. The removal rates of isolated and attached bacteria are distinct and include the specific death rates. Considering distinct yield coefficients with a large class of growth rates, we present a mathematical analysis of the model by establishing the necessary and sufficient conditions for the existence and local asymptotic stability of all steady states according to the two operating parameters, which are the dilution rate and the inflowing concentration of the substrate. Using these conditions, we first determine theoretically the operating diagram of the flocculation process, describing the asymptotic behavior of the system with respect to the two control parameters. The bifurcation analysis shows a rich set of possible types of bifurcations: transcritical bifurcation or branch points of steady states, saddle-node bifurcation or limit points of steady states, Hopf, and homoclinic bifurcations. Using a numerical method with the MATCONT software, based on a continuation and correction algorithm, we find the same operating diagram obtained theoretically. However, MATCONT detects other types of two-parameter bifurcations such as Bogdanov-Takens and Cusp bifurcations.

Left ventricular ejection fraction (LVEF) is the most important clinical parameter of cardiovascular function. The accuracy in estimating this parameter is highly dependent upon the precise segmentation of the left ventricle (LV) structure at the end diastole and systole phases. Therefore, it is crucial to develop robust algorithms for the precise segmentation of the heart structure during different phases. Methodology: In this work, an improved 3D UNet model is introduced to segment the myocardium and LV, while excluding papillary muscles, as per the recommendation of the Society for Cardiovascular Magnetic Resonance. For the practical testing of the proposed framework, a total of 8,400 cardiac MRI images were collected and analysed from the military hospital in Tunis (HMPIT), as well as the popular ACDC public dataset. As performance metrics, we used the Dice coefficient and the F1 score for validation/testing of the LV and the myocardium segmentation. Results: The data was split into 70%, 10%, and 20% for training, validation, and testing, respectively. It is worth noting that the proposed segmentation model was tested across three axis views: basal, medio basal and apical at two different cardiac phases: end diastole and end systole instances. The experimental results showed a Dice index of 0.965 and 0.945, and an F1 score of 0.801 and 0.799, at the end diastolic and systolic phases, respectively. Additionally, clinical evaluation outcomes revealed a significant difference in the LVEF and other clinical parameters when the papillary muscles were included or excluded.
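
For readers unfamiliar with the quantities above, here is a minimal sketch of the Dice coefficient for binary masks and of the standard ejection fraction formula, LVEF = (EDV - ESV) / EDV × 100. The function names and the convention of returning 1.0 for two empty masks are assumptions made for this example, not details from the paper.

```python
import numpy as np


def dice_coefficient(pred_mask, true_mask):
    """Dice overlap 2|A ∩ B| / (|A| + |B|) between two binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    total = pred.sum() + true.sum()
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * np.logical_and(pred, true).sum() / total


def ejection_fraction(edv_ml, esv_ml):
    """LVEF in percent from end-diastolic and end-systolic volumes (mL)."""
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return 100.0 * (edv_ml - esv_ml) / edv_ml
```

Because the volumes come from the segmented LV cavity at end diastole and end systole, any change in what the mask includes (for instance the papillary muscles) propagates directly into the computed LVEF.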

Diabetic retinopathy (DR) is a leading cause of blindness worldwide, underscoring the importance of early detection for effective treatment. However, automated DR classification remains challenging due to variations in image quality, class imbalance, and pixel-level similarities that hinder model training. To address these issues, we propose a robust preprocessing pipeline incorporating image cropping, Contrast-Limited Adaptive Histogram Equalization (CLAHE), and targeted data augmentation to improve model generalization and resilience. Our approach leverages the Swin Transformer, which utilizes hierarchical token processing and shifted window attention to efficiently capture fine-grained features while maintaining linear computational complexity. We validate our method on the APTOS and IDRiD datasets for multi-class DR classification, achieving accuracy rates of 89.65% and 97.40%, respectively. These results demonstrate the effectiveness of our model, particularly in detecting early-stage DR, highlighting its potential for improving automated retinal screening in clinical settings.

14 Jun 2025

This work presents a rigorous framework for understanding ergodicity and mixing in time-inhomogeneous quantum systems. By analyzing quantum processes driven by sequences of quantum channels, we distinguish between forward and backward dynamics and reveal their fundamental asymmetries. A key contribution is the development of a quantum Markov-Dobrushin approach to characterize mixing behavior, offering clear criteria for convergence and exponential stability. The framework not only generalizes classical and homogeneous quantum models but also applies to non-translation-invariant matrix product states, highlighting its relevance to real-world quantum systems. These results contribute to the mathematical foundations of quantum dynamics and support the broader goal of advancing quantum information science.
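
To give some intuition for the classical setting that this framework generalizes, the sketch below computes the Dobrushin ergodicity coefficient of a row-stochastic matrix, δ(P) = ½ max over i, j of Σ_k |P_ik − P_jk|, and checks its submultiplicativity along a time-inhomogeneous chain. It illustrates only the classical Markov chain notion; the quantum Markov-Dobrushin construction of the paper is not reproduced here, and the function names and matrices are hypothetical.

```python
import numpy as np


def dobrushin_coefficient(P):
    """Classical Dobrushin coefficient of a row-stochastic matrix P.

    Equals the largest total-variation distance between two rows, so
    delta(P) < 1 means one step strictly contracts distributions.
    """
    P = np.asarray(P, dtype=float)
    row_gaps = np.abs(P[:, None, :] - P[None, :, :]).sum(axis=2)
    return 0.5 * row_gaps.max()


def forward_product(matrices):
    """Product P_1 P_2 ... P_n of a sequence of transition matrices."""
    result = np.eye(len(matrices[0]))
    for P in matrices:
        result = result @ P
    return result


# Two different steps of an inhomogeneous chain (made-up numbers).
steps = [
    np.array([[0.9, 0.1], [0.2, 0.8]]),
    np.array([[0.5, 0.5], [0.3, 0.7]]),
]
bound = np.prod([dobrushin_coefficient(P) for P in steps])
actual = dobrushin_coefficient(forward_product(steps))
```

In the classical case, `actual` never exceeds `bound`, so a sequence of steps whose coefficients stay bounded away from 1 forces exponential loss of memory of the initial distribution.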

01 Apr 2021

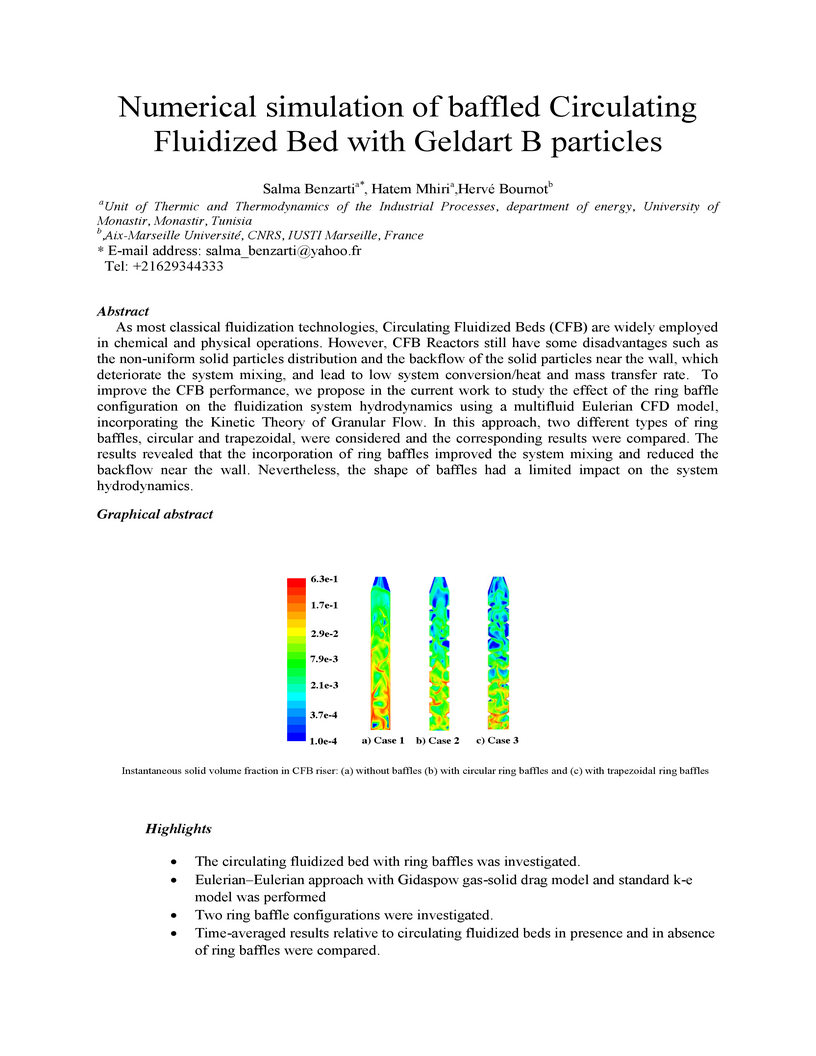

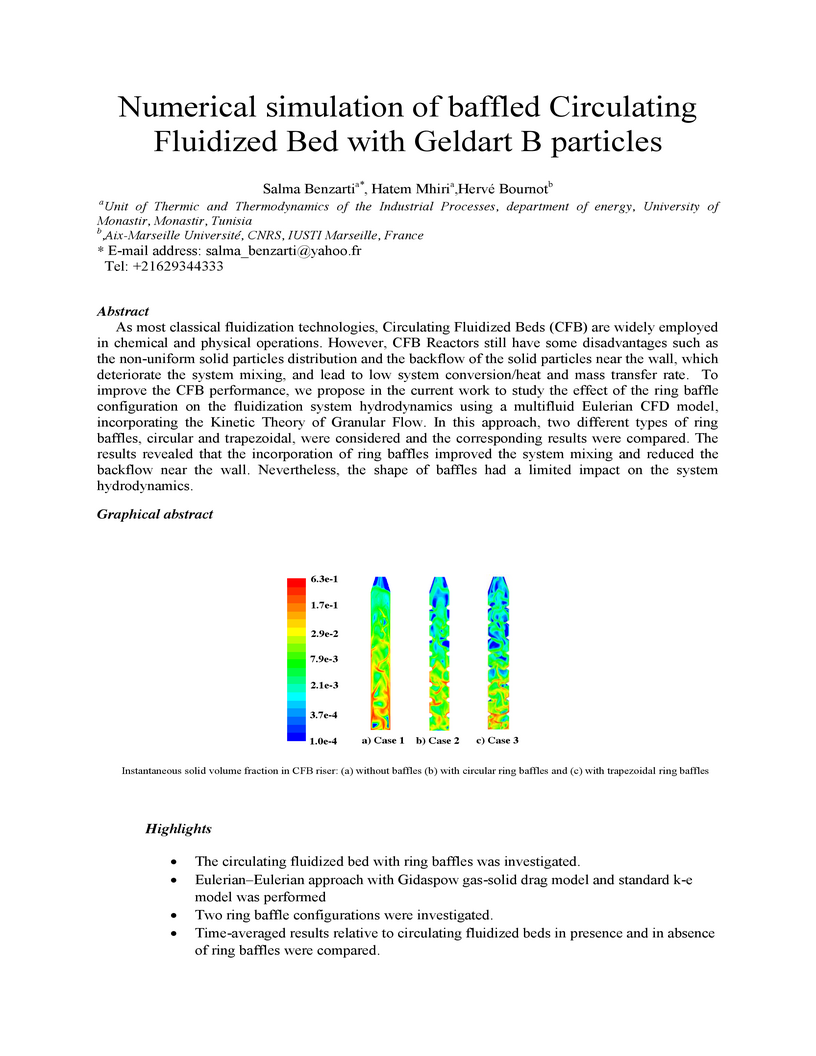

Like most classical fluidization technologies, Circulating Fluidized Beds (CFB) are widely employed in chemical and physical operations. However, CFB reactors still have some disadvantages, such as the non-uniform distribution of solid particles and the backflow of solid particles near the wall, which degrade system mixing and lead to low conversion and low heat and mass transfer rates. To improve CFB performance, we study the effect of the ring baffle configuration on the hydrodynamics of the fluidization system using a multifluid Eulerian CFD model incorporating the Kinetic Theory of Granular Flow. Two different types of ring baffles, circular and trapezoidal, were considered and the corresponding results were compared. The results revealed that the incorporation of ring baffles improved system mixing and reduced the backflow near the wall. Nevertheless, the shape of the baffles had a limited impact on the system hydrodynamics.

19 Sep 2025

In this paper, we introduce a new class of De Giorgi type functions, denoted by BG(x,t), and establish the Hölder continuity of its elements under suitable additional assumptions on the generalized N-function G(x,t). As an application, we prove the Hölder continuity of solutions to quasilinear equations whose principal part is in divergence form with G(x,t)-growth conditions, including both critical and standard growth cases. The novelty of our work lies in the generalization of the Hölder continuity results previously known for variable exponent [X. Fan and D. Zhao, 1999] and Orlicz [G. M. Lieberman, 1991] problems. Moreover, our results encompass a wide variety of quasilinear equations.

Falls are a common problem affecting older adults and a major public health issue. The Centers for Disease Control and Prevention and the World Health Organization report that one in three adults over the age of 65 and half of the adults over 80 fall each year. In recent years, an ever-increasing range of applications has been developed to help deliver more effective fall prevention interventions. All these applications rely on a huge database of personal information about the elderly, collected from hospitals, mutual health organizations, and other organizations caring for the elderly. The information describing an elderly person is continually evolving and may become obsolete at a given moment and contradict what we already know about the same person. It therefore needs to be continuously checked and updated in order to restore database consistency and provide better service. This paper provides an outline of an Obsolete personal Information Update System (OIUS) designed in the context of the elderly-fall prevention project. Our OIUS aims to control and update in real time the information acquired about each older adult, provide consistent information on demand, and supply tailored interventions to caregivers and fall-risk patients. The approach outlined for this purpose is based on a polynomial-time algorithm built on top of a causal Bayesian network representing the elderly data. The result is given as a recommendation tree with some accuracy level. We conduct a thorough empirical study of this model on an elderly personal information base. Experiments confirm the viability and effectiveness of our OIUS.

Imperial College London, Sichuan University, Fudan University, Beihang University, Nanjing University of Science and Technology, University of Copenhagen, King’s College London, University of Houston, Erasmus MC, University of Virginia, University of Burgundy, University of Franche Comté, Charité – Universitätsmedizin Berlin, German Centre for Cardiovascular Research, University Hospital of Dijon, University of Monastir, Fraunhofer MEVIS, University of Algarve, University of Sousse, CASIS Company, National Engineering School of Monastir, National Engineering School of Sousse

A key factor for assessing the state of the heart after myocardial infarction (MI) is to measure whether the myocardium segment is viable after reperfusion or revascularization therapy. Delayed enhancement-MRI or DE-MRI, which is performed several minutes after injection of the contrast agent, provides high contrast between viable and nonviable myocardium and is therefore a method of choice to evaluate the extent of MI. To automatically assess myocardial status, the results of the EMIDEC challenge that focused on this task are presented in this paper. The challenge's main objectives were twofold: first, to evaluate whether deep learning methods can distinguish between normal and pathological cases; second, to automatically calculate the extent of myocardial infarction. The publicly available database consists of 150 exams divided into 50 cases with normal MRI after injection of a contrast agent and 100 cases with myocardial infarction (and therefore with a hyperenhanced area on DE-MRI), regardless of their inclusion in the cardiac emergency department. Along with MRI, clinical characteristics are also provided. The results from several works show that the automatic classification of an exam is an achievable task (the best method providing an accuracy of 0.92), and that the automatic segmentation of the myocardium is possible. However, the segmentation of the diseased area needs to be improved, mainly due to the small size of these areas and the lack of contrast with the surrounding structures.

16 Sep 2021

An extended Pólya urn model with two colors, black and white, is studied, with some strong law of large numbers (SLLN) and central limit theorem (CLT) results on the proportion of white balls.
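
As a point of reference, the sketch below simulates the classical two-color Pólya urn, in which each drawn ball is returned together with `added` extra balls of the same color, and records the proportion of white balls after every draw. It covers only the classical scheme; the extended replacement rule studied in the paper is not specified here, and the function name and parameters are assumptions.

```python
import random


def polya_urn_proportions(white, black, n_draws, added=1, seed=None):
    """Simulate a classical Polya urn and return the white-ball proportions."""
    rng = random.Random(seed)
    proportions = []
    for _ in range(n_draws):
        # Draw a ball uniformly at random; reinforce the drawn color.
        if rng.random() < white / (white + black):
            white += added
        else:
            black += added
        proportions.append(white / (white + black))
    return proportions


# One trajectory; in the classical urn the proportion settles to a random limit.
trajectory = polya_urn_proportions(white=1, black=1, n_draws=10_000, seed=0)
```

Running several seeds side by side shows each trajectory stabilizing at a different value, which is the kind of almost-sure convergence that law-of-large-numbers results for urn models make precise.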
