Blekinge Institute of Technology

22 Sep 2025

This research outlines a multi-phase agenda for treating prompts for Large Language Models as first-class software engineering artifacts, with preliminary survey findings revealing that while LLMs are heavily used in development, prompt management remains largely unsystematic, lacking reuse and consistent guidelines.

In the tourism domain, Large Language Models (LLMs) often struggle to mine

implicit user intentions from tourists' ambiguous inquiries and lack the

capacity to proactively guide users toward clarifying their needs. A critical

bottleneck is the scarcity of high-quality training datasets that facilitate

proactive questioning and implicit intention mining. While recent advances

leverage LLM-driven data synthesis to generate such datasets and transfer

specialized knowledge to downstream models, existing approaches suffer from

several shortcomings: (1) lack of adaptation to the tourism domain, (2) skewed

distributions of detail levels in initial inquiries, (3) contextual redundancy

in the implicit intention mining module, and (4) lack of explicit thinking

about tourists' emotions and intention values. Therefore, we propose SynPT (A

Data Synthesis Method Driven by LLMs for Proactive Mining of Implicit User

Intentions in the Tourism), which constructs an LLM-driven user agent and

assistant agent to simulate dialogues based on seed data collected from Chinese

tourism websites. This approach addresses the aforementioned limitations and

generates SynPT-Dialog, a training dataset containing explicit reasoning. The

dataset is utilized to fine-tune a general LLM, enabling it to proactively mine

implicit user intentions. Experimental evaluations, conducted from both human

and LLM perspectives, demonstrate the superiority of SynPT compared to existing

methods. Furthermore, we analyze key hyperparameters and present case studies

to illustrate the practical applicability of our method, including discussions

on its adaptability to English-language scenarios. All code and data are

publicly available.

07 Oct 2025

The COVID-19 pandemic has permanently altered workplace structures, normalizing remote work. However, critical evidence highlights challenges with fully remote arrangements, particularly for software teams. This study investigates employee resignation patterns at Ericsson, a global developer of software-intensive systems, before, during, and after the pandemic. Using HR data from 2016-2025 in Ericsson Sweden, we analyze how different work modalities (onsite, remote, and hybrid) influence employee retention. Our findings show a marked increase in resignations from summer 2021 to summer 2023, especially among employees with less than five years of tenure. Employees onboarded remotely during the pandemic were significantly more likely to resign within their first three years, even after returning to the office. Exit surveys suggest that remote onboarding may fail to establish the necessary organizational attachment, the feeling of belonging and long-term retention. By contrast, the company's eventual successful return to pre-pandemic retention rates illustrates the value of differentiated work policies and supports reconsidering selective return-to-office (RTO) mandates. Our study demonstrates the importance of employee integration practices in hybrid environments where the requirement for in-office presence for recent hires shall be accompanied by in-office presence from their team members and more senior staff whose mentoring and social interactions contribute to integration into the corporate work environment. We hope these actionable insights will inform HR leaders and policymakers in shaping post-pandemic work practices, demonstrating that carefully crafted hybrid models anchored in organizational attachment and mentorship can sustain retention in knowledge-intensive companies.

25 Sep 2025

Background. The widespread adoption of hybrid work following the COVID-19 pandemic has fundamentally transformed software development practices, introducing new challenges in communication and collaboration as organizations transition from traditional office-based structures to flexible working arrangements. This shift has established a new organizational norm where even traditionally office-first companies now embrace hybrid team structures. While remote participation in meetings has become commonplace in this new environment, it may lead to isolation, alienation, and decreased engagement among remote team members. Aims. This study aims to identify and characterize engagement patterns in hybrid meetings through objective measurements, focusing on the differences between co-located and remote participants. Method. We studied professionals from three software companies over several weeks, employing a multimodal approach to measure engagement. Data were collected through self-reported questionnaires and physiological measurements using biometric devices during hybrid meetings to understand engagement dynamics. Results. The regression analyses revealed comparable engagement levels between onsite and remote participants, though remote participants show lower engagement in long meetings regardless of participation mode. Active roles positively correlate with higher engagement, while larger meetings and afternoon sessions are associated with lower engagement. Conclusions. Our results offer insights into factors associated with engagement and disengagement in hybrid meetings, as well as potential meeting improvement recommendations. These insights are potentially relevant not only for software teams but also for knowledge-intensive organizations across various sectors facing similar hybrid collaboration challenges.

07 Jul 2025

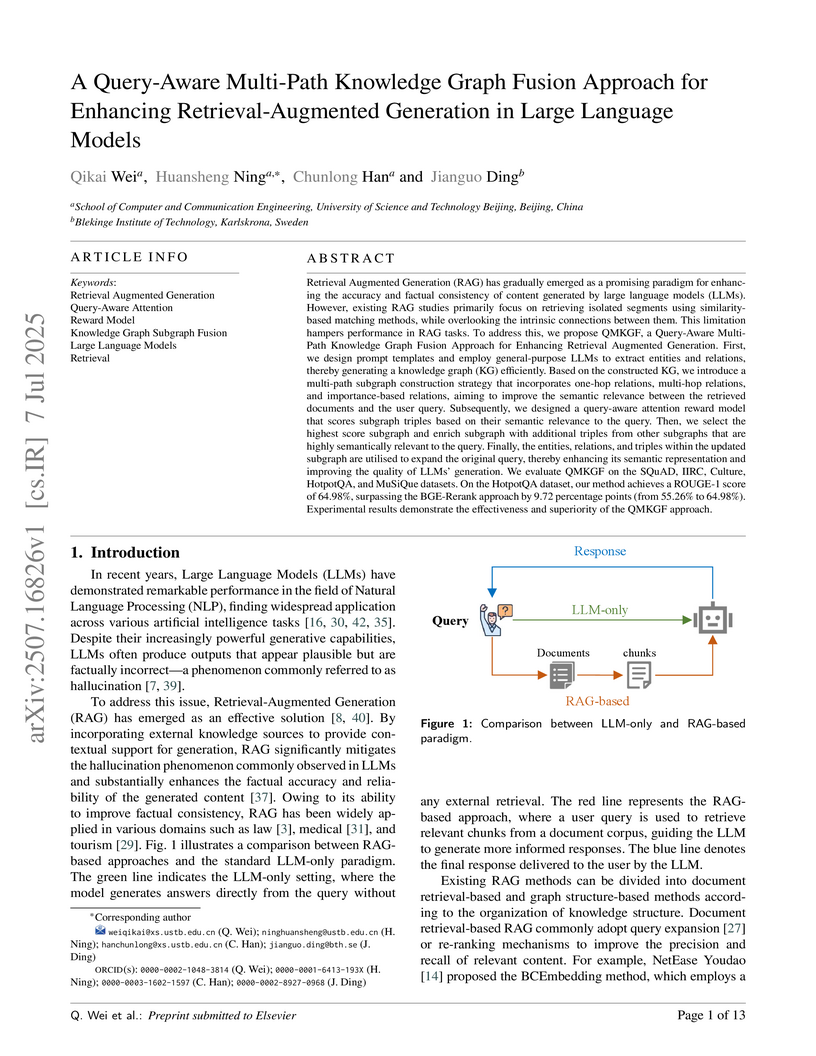

Retrieval Augmented Generation (RAG) has gradually emerged as a promising paradigm for enhancing the accuracy and factual consistency of content generated by large language models (LLMs). However, existing RAG studies primarily focus on retrieving isolated segments using similarity-based matching methods, while overlooking the intrinsic connections between them. This limitation hampers performance in RAG tasks. To address this, we propose QMKGF, a Query-Aware Multi-Path Knowledge Graph Fusion Approach for Enhancing Retrieval Augmented Generation. First, we design prompt templates and employ general-purpose LLMs to extract entities and relations, thereby generating a knowledge graph (KG) efficiently. Based on the constructed KG, we introduce a multi-path subgraph construction strategy that incorporates one-hop relations, multi-hop relations, and importance-based relations, aiming to improve the semantic relevance between the retrieved documents and the user query. Subsequently, we designed a query-aware attention reward model that scores subgraph triples based on their semantic relevance to the query. Then, we select the highest score subgraph and enrich subgraph with additional triples from other subgraphs that are highly semantically relevant to the query. Finally, the entities, relations, and triples within the updated subgraph are utilised to expand the original query, thereby enhancing its semantic representation and improving the quality of LLMs' generation. We evaluate QMKGF on the SQuAD, IIRC, Culture, HotpotQA, and MuSiQue datasets. On the HotpotQA dataset, our method achieves a ROUGE-1 score of 64.98\%, surpassing the BGE-Rerank approach by 9.72 percentage points (from 55.26\% to 64.98\%). Experimental results demonstrate the effectiveness and superiority of the QMKGF approach.

22 Feb 2025

The generation of synthetic network traffic data is essential for network

security testing, machine learning model training, and performance analysis.

However, existing methods for synthetic data generation differ significantly in

their ability to maintain statistical fidelity, utility for classification

tasks, and class balance. This study presents a comparative analysis of twelve

synthetic network traffic data generation methods, encompassing non-AI

(statistical), classical AI, and generative AI techniques. Using NSL-KDD and

CIC-IDS2017 datasets, we evaluate the fidelity, utility, class balance, and

scalability of these methods under standardized performance metrics. Results

demonstrate that GAN-based models, particularly CTGAN and CopulaGAN, achieve

superior fidelity and utility, making them ideal for high-quality synthetic

data generation. Statistical methods such as SMOTE and Cluster Centroid

effectively maintain class balance but fail to capture complex traffic

structures. Meanwhile, diffusion models exhibit computational inefficiencies,

limiting their scalability. Our findings provide a structured benchmarking

framework for selecting the most suitable synthetic data generation techniques

for network traffic analysis and cybersecurity applications.

01 Aug 2022

Non-robust (fragile) test execution is a commonly reported challenge in GUI-based test automation, despite much research and several proposed solutions. A test script needs to be resilient to (minor) changes in the tested application but, at the same time, fail when detecting potential issues that require investigation. Test script fragility is a multi-faceted problem, but one crucial challenge is reliably identifying and locating the correct target web elements when the website evolves between releases or otherwise fails and reports an issue. This paper proposes and evaluates a novel approach called similarity-based web element localization (Similo), which leverages information from multiple web element locator parameters to identify a target element using a weighted similarity score. The experimental study compares Similo to a baseline approach for web element localization. To get an extensive empirical basis, we target 40 of the most popular websites on the Internet in our evaluation. Robustness is considered by counting the number of web elements found in a recent website version compared to how many of these existed in an older version. Results of the experiment show that Similo outperforms the baseline representing the current state-of-the-art; it failed to locate the correct target web element in 72 out of 598 considered cases compared to 146 failed cases for the baseline approach. This study presents evidence that quantifying the similarity between multiple attributes of web elements when trying to locate them, as in our proposed Similo approach, is beneficial. With acceptable efficiency, Similo gives significantly higher effectiveness (i.e., robustness) than the baseline web element localization approach.

21 Oct 2025

This paper explores the challenges of cyberattack attribution, specifically APTs, applying the case study approach for the WhisperGate cyber operation of January 2022 executed by the Russian military intelligence service (GRU) and targeting Ukrainian government entities. The study provides a detailed review of the threat actor identifiers and taxonomies used by leading cybersecurity vendors, focusing on the evolving attribution from Microsoft, ESET, and CrowdStrike researchers. Once the attribution to Ember Bear (GRU Unit 29155) is established through technical and intelligence reports, we use both traditional machine learning classifiers and a large language model (ChatGPT) to analyze the indicators of compromise (IoCs), tactics, and techniques to statistically and semantically attribute the WhisperGate attack. Our findings reveal overlapping indicators with the Sandworm group (GRU Unit 74455) but also strong evidence pointing to Ember Bear, especially when the LLM is fine-tuned or contextually augmented with additional intelligence. Thus, showing how AI/GenAI with proper fine-tuning are capable of solving the attribution challenge.

13 Oct 2022

Acceptance testing is crucial to determine whether a system fulfills end-user requirements. However, the creation of acceptance tests is a laborious task entailing two major challenges: (1) practitioners need to determine the right set of test cases that fully covers a requirement, and (2) they need to create test cases manually due to insufficient tool support. Existing approaches for automatically deriving test cases require semi-formal or even formal notations of requirements, though unrestricted natural language is prevalent in practice. In this paper, we present our tool-supported approach CiRA (Conditionals in Requirements Artifacts) capable of creating the minimal set of required test cases from conditional statements in informal requirements. We demonstrate the feasibility of CiRA in a case study with three industry partners. In our study, out of 578 manually created test cases, 71.8 % can be generated automatically. Additionally, CiRA discovered 80 relevant test cases that were missed in manual test case design. CiRA is publicly available at this http URL.

Causal relations (If A, then B) are prevalent in requirements artifacts. Automatically extracting causal relations from requirements holds great potential for various RE activities (e.g., automatic derivation of suitable test cases). However, we lack an approach capable of extracting causal relations from natural language with reasonable performance. In this paper, we present our tool CATE (CAusality Tree Extractor), which is able to parse the composition of a causal relation as a tree structure. CATE does not only provide an overview of causes and effects in a sentence, but also reveals their semantic coherence by translating the causal relation into a binary tree. We encourage fellow researchers and practitioners to use CATE at this https URL

23 Apr 2025

Multi-label requirements classification is a challenging task, especially

when dealing with numerous classes at varying levels of abstraction. The

difficulties increases when a limited number of requirements is available to

train a supervised classifier. Zero-shot learning (ZSL) does not require

training data and can potentially address this problem. This paper investigates

the performance of zero-shot classifiers (ZSCs) on a multi-label industrial

dataset. We focuse on classifying requirements according to a taxonomy designed

to support requirements tracing. We compare multiple variants of ZSCs using

different embeddings, including 9 language models (LMs) with a reduced number

of parameters (up to 3B), e.g., BERT, and 5 large LMs (LLMs) with a large

number of parameters (up to 70B), e.g., Llama. Our ground truth includes 377

requirements and 1968 labels from 6 output spaces. For the evaluation, we adopt

traditional metrics, i.e., precision, recall, F1, and Fβ, as well as a

novel label distance metric Dn. This aims to better capture the

classification's hierarchical nature and provides a more nuanced evaluation of

how far the results are from the ground truth. 1) The top-performing model on 5

out of 6 output spaces is T5-xl, with maximum Fβ = 0.78 and Dn = 0.04,

while BERT base outperformed the other models in one case, with maximum

Fβ = 0.83 and Dn = 0.04. 2) LMs with smaller parameter size produce the

best classification results compared to LLMs. Thus, addressing the problem in

practice is feasible as limited computing power is needed. 3) The model

architecture (autoencoding, autoregression, and sentence-to-sentence)

significantly affects the classifier's performance. We conclude that using ZSL

for multi-label requirements classification offers promising results. We also

present a novel metric that can be used to select the top-performing model for

this problem

Addressing women's under-representation in the software industry, a widely

recognized concern, requires attracting as well as retaining more women.

Hearing from women practitioners, particularly those positioned in

multi-cultural settings, about their challenges and and adopting their lived

experienced solutions can support the design of programs to resolve the

under-representation issue.

Goal: We investigated the challenges women face in global software

development teams, particularly what motivates women to leave their company;

how those challenges might break down according to demographics; and strategies

to mitigate the identified challenges.

Method: To achieve this goal, we conducted an exploratory case study in

Ericsson, a global technology company. We surveyed 94 women and employed

mixed-methods to analyze the data.

Results: Our findings reveal that women face socio-cultural challenges,

including work-life balance issues, benevolent and hostile sexism, lack of

recognition and peer parity, impostor syndrome, glass ceiling bias effects, the

prove-it-again phenomenon, and the maternal wall. The participants of our

research provided different suggestions to address/mitigate the reported

challenges, including sabbatical policies, flexibility of location and time,

parenthood support, soft skills training for managers, equality of payment and

opportunities between genders, mentoring and role models to support career

growth, directives to hire more women, inclusive groups and events, women's

empowerment, and recognition for women's success. The framework of challenges

and suggestions can inspire further initiatives both in academia and industry

to onboard and retain women.

25 Dec 2020

Context: More than 50 countries have developed COVID contact-tracing apps to limit the spread of coronavirus. However, many experts and scientists cast doubt on the effectiveness of those apps. For each app, a large number of reviews have been entered by end-users in app stores. Objective: Our goal is to gain insights into the user reviews of those apps, and to find out the main problems that users have reported. Our focus is to assess the "software in society" aspects of the apps, based on user reviews. Method: We selected nine European national apps for our analysis and used a commercial app-review analytics tool to extract and mine the user reviews. For all the apps combined, our dataset includes 39,425 user reviews. Results: Results show that users are generally dissatisfied with the nine apps under study, except the Scottish ("Protect Scotland") app. Some of the major issues that users have complained about are high battery drainage and doubts on whether apps are really working. Conclusion: Our results show that more work is needed by the stakeholders behind the apps (e.g., app developers, decision-makers, public health experts) to improve the public adoption, software quality and public perception of these apps.

Labeling issues with the skills required to complete them can help contributors to choose tasks in Open Source Software projects. However, manually labeling issues is time-consuming and error-prone, and current automated approaches are mostly limited to classifying issues as bugs/non-bugs. We investigate the feasibility and relevance of automatically labeling issues with what we call "API-domains," which are high-level categories of APIs. Therefore, we posit that the APIs used in the source code affected by an issue can be a proxy for the type of skills (e.g., DB, security, UI) needed to work on the issue. We ran a user study (n=74) to assess API-domain labels' relevancy to potential contributors, leveraged the issues' descriptions and the project history to build prediction models, and validated the predictions with contributors (n=20) of the projects. Our results show that (i) newcomers to the project consider API-domain labels useful in choosing tasks, (ii) labels can be predicted with a precision of 84% and a recall of 78.6% on average, (iii) the results of the predictions reached up to 71.3% in precision and 52.5% in recall when training with a project and testing in another (transfer learning), and (iv) project contributors consider most of the predictions helpful in identifying needed skills. These findings suggest our approach can be applied in practice to automatically label issues, assisting developers in finding tasks that better match their skills.

21 Oct 2022

Context: Developing software-intensive products or services usually involves a plethora of software artefacts. Assets are artefacts intended to be used more than once and have value for organisations; examples include test cases, code, requirements, and documentation. During the development process, assets might degrade, affecting the effectiveness and efficiency of the development process. Therefore, assets are an investment that requires continuous management. Identifying assets is the first step for their effective management. However, there is a lack of awareness of what assets and types of assets are common in software-developing organisations. Most types of assets are understudied, and their state of quality and how they degrade over time have not been well-understood. Method: We perform a systematic literature review and a field study at five companies to study and identify assets to fill the gap in research. The results were analysed qualitatively and summarised in a taxonomy. Results: We create the first comprehensive, structured, yet extendable taxonomy of assets, containing 57 types of assets. Conclusions: The taxonomy serves as a foundation for identifying assets that are relevant for an organisation and enables the study of asset management and asset degradation concepts.

26 Aug 2002

Classical Lie group theory provides a universal tool for calculating symmetry groups for systems of differential equations. However Lie's method is not as much effective in the case of integral or integro-differential equations as well as in the case of infinite systems of differential equations. This paper is aimed to survey the modern approaches to symmetries of integro-differential equations. As an illustration, an infinite symmetry Lie algebra is calculated for a system of integro-differential equations, namely the well-known Benney equations. The crucial idea is to look for symmetry generators in the form of canonical Lie-Backlund operators.

13 Dec 2018

Deep Neural Networks (DNN) will emerge as a cornerstone in automotive

software engineering. However, developing systems with DNNs introduces novel

challenges for safety assessments. This paper reviews the state-of-the-art in

verification and validation of safety-critical systems that rely on machine

learning. Furthermore, we report from a workshop series on DNNs for perception

with automotive experts in Sweden, confirming that ISO 26262 largely

contravenes the nature of DNNs. We recommend aerospace-to-automotive knowledge

transfer and systems-based safety approaches, e.g., safety cage architectures

and simulated system test cases.

22 May 2024

Cyberbullying has been a significant challenge in the digital era world, given the huge number of people, especially adolescents, who use social media platforms to communicate and share information. Some individuals exploit these platforms to embarrass others through direct messages, electronic mail, speech, and public posts. This behavior has direct psychological and physical impacts on victims of bullying. While several studies have been conducted in this field and various solutions proposed to detect, prevent, and monitor cyberbullying instances on social media platforms, the problem continues. Therefore, it is necessary to conduct intensive studies and provide effective solutions to address the situation. These solutions should be based on detection, prevention, and prediction criteria methods. This paper presents a comprehensive systematic review of studies conducted on cyberbullying detection. It explores existing studies, proposed solutions, identified gaps, datasets, technologies, approaches, challenges, and recommendations, and then proposes effective solutions to address research gaps in future studies.

01 May 2025

From Requirements to Test Cases: An NLP-Based Approach for High-Performance ECU Test Case Automation

From Requirements to Test Cases: An NLP-Based Approach for High-Performance ECU Test Case Automation

Automating test case specification generation is vital for improving the

efficiency and accuracy of software testing, particularly in complex systems

like high-performance Electronic Control Units (ECUs). This study investigates

the use of Natural Language Processing (NLP) techniques, including Rule-Based

Information Extraction and Named Entity Recognition (NER), to transform natural

language requirements into structured test case specifications. A dataset of

400 feature element documents from the Polarion tool was used to evaluate both

approaches for extracting key elements such as signal names and values. The

results reveal that the Rule-Based method outperforms the NER method, achieving

95% accuracy for more straightforward requirements with single signals, while

the NER method, leveraging SVM and other machine learning algorithms, achieved

77.3% accuracy but struggled with complex scenarios. Statistical analysis

confirmed that the Rule-Based approach significantly enhances efficiency and

accuracy compared to manual methods. This research highlights the potential of

NLP-driven automation in improving quality assurance, reducing manual effort,

and expediting test case generation, with future work focused on refining NER

and hybrid models to handle greater complexity.

02 Jan 2025

This paper reports an analysis of aspects of the project planning stage. The object of research is the decision-making processes that take place at this stage. This work considers the problem of building a hierarchy of tasks, their distribution among performers, taking into account restrictions on financial costs and duration of project implementation. Verbal and mathematical models of the task of constructing a hierarchy of tasks and other tasks that take place at the stage of project planning were constructed. Such indicators of the project implementation process efficiency were introduced as the time, cost, and cost-time efficiency. In order to be able to apply these criteria, the tasks of estimating the minimum value of the duration of the project and its minimum required cost were considered. Appropriate methods have been developed to solve them. The developed iterative method for assessing the minimum duration of project implementation is based on taking into account the possibility of simultaneous execution of various tasks. The method of estimating the minimum cost of the project is to build and solve the problem of Boolean programming. The values obtained as a result of solving these problems form an «ideal point», approaching which is enabled by the developed iterative method of constructing a hierarchy of tasks based on the method of sequential concessions. This method makes it possible to devise options for management decisions to obtain valid solutions to the problem. According to them, the decision maker can introduce a concession on the value of one or both components of the «ideal point» or change the input data to the task. The models and methods built can be used when planning projects in education, science, production, etc.

There are no more papers matching your filters at the moment.