Bogazici University

Metabolic energy consumption of a powered lower-limb exoskeleton user mainly

comes from the upper body effort since the lower body is considered to be

passive. However, the upper body effort of the users is largely ignored in the

literature when designing motion controllers. In this work, we use deep

reinforcement learning to develop a locomotion controller that minimizes ground

reaction forces (GRF) on crutches. The rationale for minimizing GRF is to

reduce the upper body effort of the user. Accordingly, we design a model and a

learning framework for a human-exoskeleton system with crutches. We formulate a

reward function to encourage the forward displacement of a human-exoskeleton

system while satisfying the predetermined constraints of a physical robot. We

evaluate our new framework using Proximal Policy Optimization, a

state-of-the-art deep reinforcement learning (RL) method, on the MuJoCo physics

simulator with different hyperparameters and network architectures over

multiple trials. We empirically show that our learning model can generate joint

torques based on the joint angle, velocities, and the GRF on the feet and

crutch tips. The resulting exoskeleton model can directly generate joint

torques from states in line with the RL framework. Finally, we empirically show

that policy trained using our method can generate a gait with a 35% reduction

in GRF with respect to the baseline.

the University of TokyoKeio University

the University of TokyoKeio University Queen Mary University of LondonThe University of Electro-CommunicationsRitsumeikan UniversityUniversity of EssexDonders Institute for Brain, Cognition, and BehaviourBogazici UniversityNational Autonomous University of MexicoCajal International Neuroscience CenterUniversità Milano-BicoccaUniversidad Autónoma del Estado de MorelosInstitute of Cognitive Sciences and Technologies, National Research Council of Italy

Queen Mary University of LondonThe University of Electro-CommunicationsRitsumeikan UniversityUniversity of EssexDonders Institute for Brain, Cognition, and BehaviourBogazici UniversityNational Autonomous University of MexicoCajal International Neuroscience CenterUniversità Milano-BicoccaUniversidad Autónoma del Estado de MorelosInstitute of Cognitive Sciences and Technologies, National Research Council of ItalyAn international consortium of researchers provides a comprehensive survey unifying the concepts of world models from AI and predictive coding from neuroscience, highlighting their shared principles for how agents build and use internal representations to predict and interact with their environment. The paper outlines six key research frontiers for integrating these two fields to advance cognitive and developmental robotics, offering a roadmap for creating more intelligent and adaptable robots.

Generative Large Language Models (LLMs)inevitably produce untruthful responses. Accurately predicting the truthfulness of these outputs is critical, especially in high-stakes settings. To accelerate research in this domain and make truthfulness prediction methods more accessible, we introduce TruthTorchLM an open-source, comprehensive Python library featuring over 30 truthfulness prediction methods, which we refer to as Truth Methods. Unlike existing toolkits such as Guardrails, which focus solely on document-grounded verification, or LM-Polygraph, which is limited to uncertainty-based methods, TruthTorchLM offers a broad and extensible collection of techniques. These methods span diverse tradeoffs in computational cost, access level (e.g., black-box vs white-box), grounding document requirements, and supervision type (self-supervised or supervised). TruthTorchLM is seamlessly compatible with both HuggingFace and LiteLLM, enabling support for locally hosted and API-based models. It also provides a unified interface for generation, evaluation, calibration, and long-form truthfulness prediction, along with a flexible framework for extending the library with new methods. We conduct an evaluation of representative truth methods on three datasets, TriviaQA, GSM8K, and FactScore-Bio. The code is available at this https URL

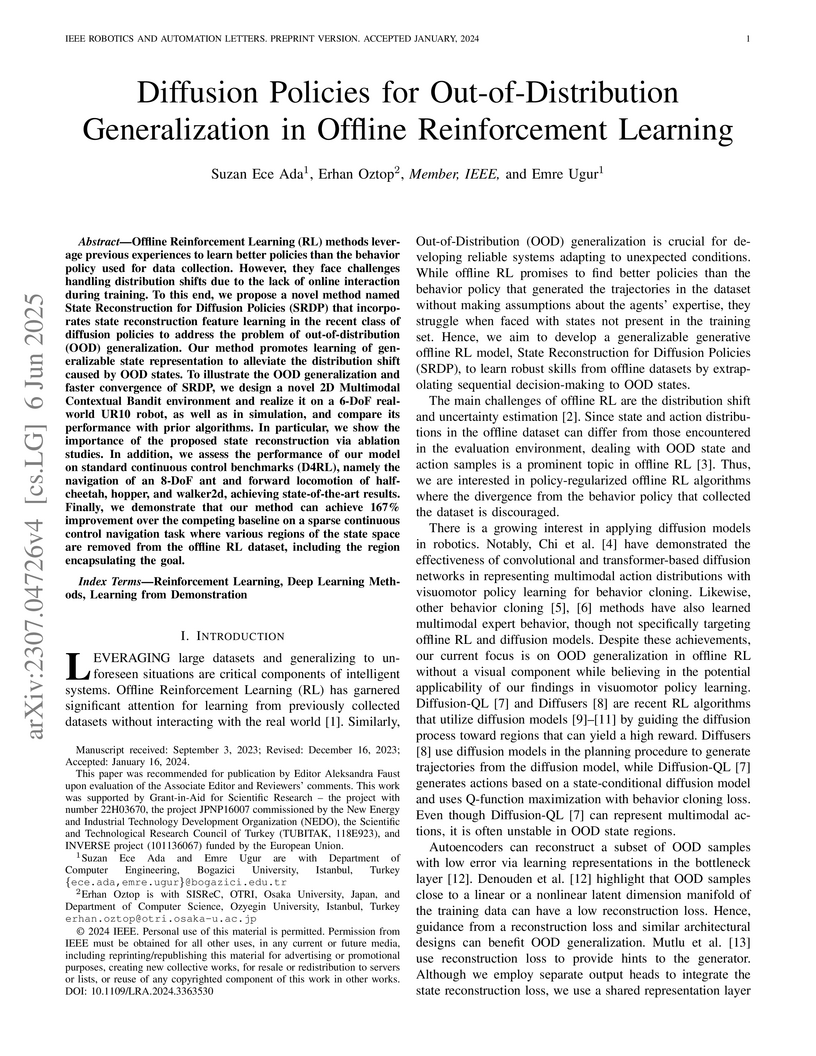

State Reconstruction for Diffusion Policies (SRDP) improves out-of-distribution generalization in offline reinforcement learning by integrating an auxiliary state reconstruction objective into diffusion policies. This method demonstrates a 167% performance improvement over Diffusion-QL in maze navigation with missing data and achieves a Chamfer distance of 0.071

0.02 against ground truth in real-world UR10 robot experiments.

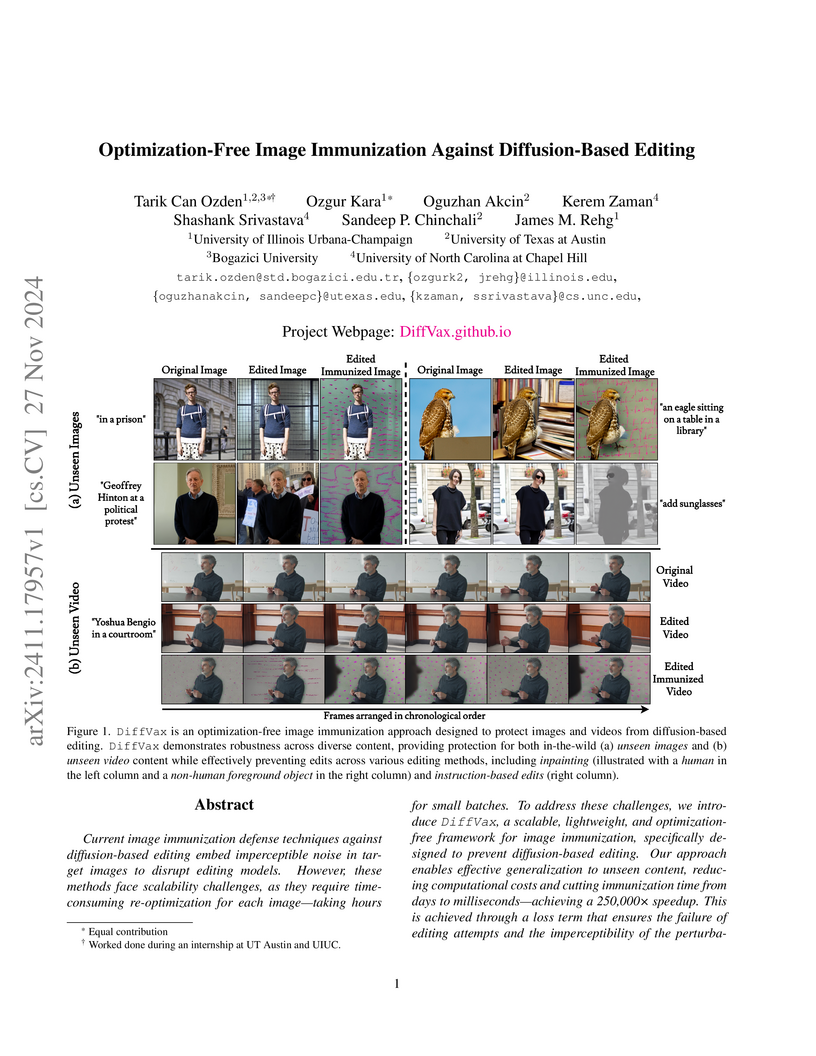

Current image immunization defense techniques against diffusion-based editing embed imperceptible noise in target images to disrupt editing models. However, these methods face scalability challenges, as they require time-consuming re-optimization for each image-taking hours for small batches. To address these challenges, we introduce DiffVax, a scalable, lightweight, and optimization-free framework for image immunization, specifically designed to prevent diffusion-based editing. Our approach enables effective generalization to unseen content, reducing computational costs and cutting immunization time from days to milliseconds-achieving a 250,000x speedup. This is achieved through a loss term that ensures the failure of editing attempts and the imperceptibility of the perturbations. Extensive qualitative and quantitative results demonstrate that our model is scalable, optimization-free, adaptable to various diffusion-based editing tools, robust against counter-attacks, and, for the first time, effectively protects video content from editing. Our code is provided in our project webpage.

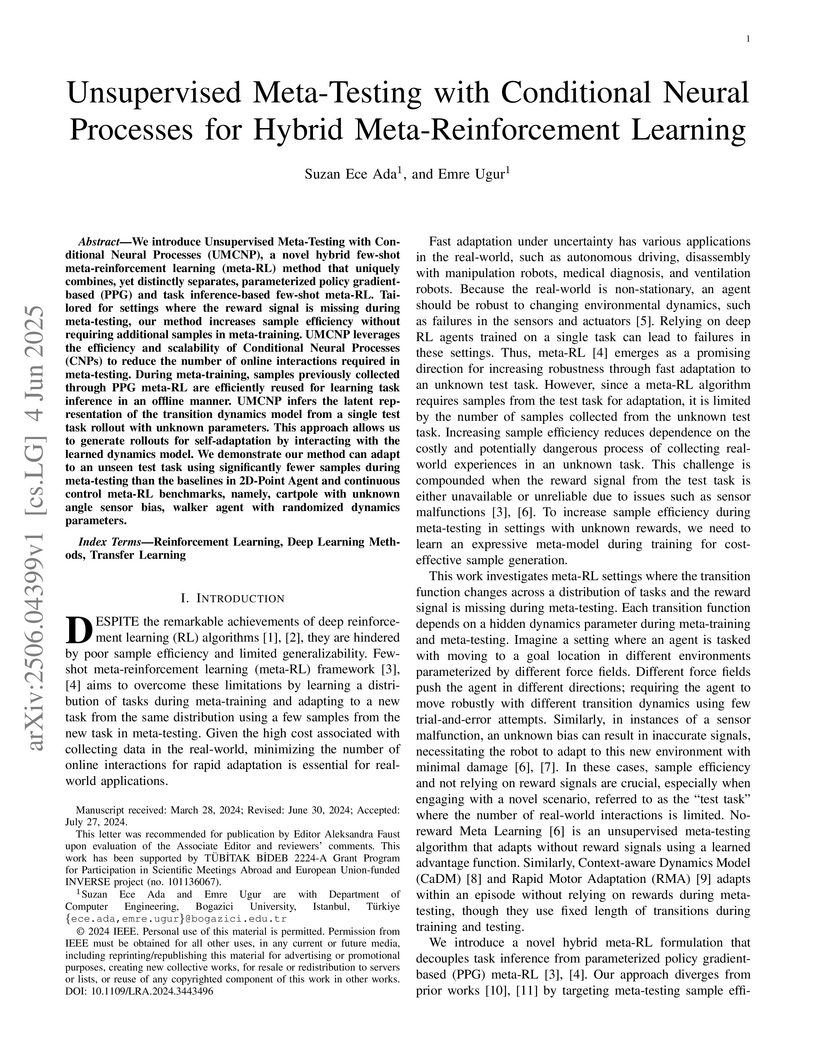

We introduce Unsupervised Meta-Testing with Conditional Neural Processes

(UMCNP), a novel hybrid few-shot meta-reinforcement learning (meta-RL) method

that uniquely combines, yet distinctly separates, parameterized policy

gradient-based (PPG) and task inference-based few-shot meta-RL. Tailored for

settings where the reward signal is missing during meta-testing, our method

increases sample efficiency without requiring additional samples in

meta-training. UMCNP leverages the efficiency and scalability of Conditional

Neural Processes (CNPs) to reduce the number of online interactions required in

meta-testing. During meta-training, samples previously collected through PPG

meta-RL are efficiently reused for learning task inference in an offline

manner. UMCNP infers the latent representation of the transition dynamics model

from a single test task rollout with unknown parameters. This approach allows

us to generate rollouts for self-adaptation by interacting with the learned

dynamics model. We demonstrate our method can adapt to an unseen test task

using significantly fewer samples during meta-testing than the baselines in

2D-Point Agent and continuous control meta-RL benchmarks, namely, cartpole with

unknown angle sensor bias, walker agent with randomized dynamics parameters.

The ENTIRe-ID dataset addresses a core limitation in person re-identification by offering an extensive and diverse collection of over 4.45 million images from 37 cameras across four continents. This dataset aims to facilitate the development of more generalized ReID models capable of performing robustly in varied real-world conditions, while also incorporating privacy-preserving facial blurring.

Panoramic X-rays are frequently used in dentistry for treatment planning, but their interpretation can be both time-consuming and prone to error. Artificial intelligence (AI) has the potential to aid in the analysis of these X-rays, thereby improving the accuracy of dental diagnoses and treatment plans. Nevertheless, designing automated algorithms for this purpose poses significant challenges, mainly due to the scarcity of annotated data and variations in anatomical structure. To address these issues, the Dental Enumeration and Diagnosis on Panoramic X-rays Challenge (DENTEX) has been organized in association with the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI) in 2023. This challenge aims to promote the development of algorithms for multi-label detection of abnormal teeth, using three types of hierarchically annotated data: partially annotated quadrant data, partially annotated quadrant-enumeration data, and fully annotated quadrant-enumeration-diagnosis data, inclusive of four different diagnoses. In this paper, we present the results of evaluating participant algorithms on the fully annotated data, additionally investigating performance variation for quadrant, enumeration, and diagnosis labels in the detection of abnormal teeth. The provision of this annotated dataset, alongside the results of this challenge, may lay the groundwork for the creation of AI-powered tools that can offer more precise and efficient diagnosis and treatment planning in the field of dentistry. The evaluation code and datasets can be accessed at this https URL

In this work, we investigate new solutions to the decoration transformation in terms of various special functions, including the hyperbolic gamma function, the basic hypergeometric function, and the Euler gamma function. These solutions to the symmetry transformation are important to decorate Ising-like integrable lattice spin models obtained via the gauge/YBE correspondence. The integral identities represented as the solution of the decoration transformation are derived from the three-dimensional partition functions and superconformal index for the dual supersymmetric gauge theories.

22 Oct 2025

We study a two-dimensional N=(0,2) supersymmetric duality and construct novel Bailey pairs for the associated elliptic genera. This framework provides a systematic method to establish the equivalence of the elliptic genera of quiver gauge theories generated via iterative applications of the seed duality.

We construct the lens hyperbolic modular double, a new algebraic structure whose intertwining operator produces a lens hyperbolic hypergeometric solution of the Yang--Baxter equation.

CNRS

CNRS California Institute of TechnologyUniversity of Oslo

California Institute of TechnologyUniversity of Oslo University of CambridgeHeidelberg UniversityINFN Sezione di Napoli

University of CambridgeHeidelberg UniversityINFN Sezione di Napoli University of Waterloo

University of Waterloo University of Manchester

University of Manchester University College London

University College London University of CopenhagenUniversity of Edinburgh

University of CopenhagenUniversity of Edinburgh NASA Goddard Space Flight Center

NASA Goddard Space Flight Center Université Paris-SaclayHelsinki Institute of Physics

Université Paris-SaclayHelsinki Institute of Physics Stockholm UniversityUniversity of Helsinki

Stockholm UniversityUniversity of Helsinki Perimeter Institute for Theoretical PhysicsUniversité de Genève

Perimeter Institute for Theoretical PhysicsUniversité de Genève Sorbonne UniversitéInstitut Polytechnique de Paris

Sorbonne UniversitéInstitut Polytechnique de Paris Leiden University

Leiden University CEAÉcole Polytechnique Fédérale de Lausanne (EPFL)Universitat Politècnica de CatalunyaUniversity of PortsmouthLudwig-Maximilians-Universität MünchenUniversidad Complutense de MadridUniversität BonnUniversidade do PortoPolitecnico di TorinoUniversity of OuluObservatoire de ParisTechnical University of DenmarkINAF - Osservatorio Astrofisico di TorinoUniversité Côte d’Azur

CEAÉcole Polytechnique Fédérale de Lausanne (EPFL)Universitat Politècnica de CatalunyaUniversity of PortsmouthLudwig-Maximilians-Universität MünchenUniversidad Complutense de MadridUniversität BonnUniversidade do PortoPolitecnico di TorinoUniversity of OuluObservatoire de ParisTechnical University of DenmarkINAF - Osservatorio Astrofisico di TorinoUniversité Côte d’Azur Durham University

Durham University University of GroningenInstituto de Astrofísica e Ciências do EspaçoNiels Bohr InstituteLund UniversityTélécom ParisJet Propulsion LaboratoryInstituto de Astrofísica de CanariasUniversity of NottinghamEuropean Space AgencyRuhr-Universität BochumUniversity of Central LancashireSISSACNESINFN, Sezione di TorinoUniversidad de ValparaísoUniversidad de La LagunaObservatoire de la Côte d’AzurUniversity of Hawai’iINFN, Sezione di MilanoThe University of ArizonaKapteyn Astronomical InstituteBogazici UniversityMax Planck Institute for AstronomyObservatoire astronomique de StrasbourgThe Barcelona Institute of Science and TechnologyLaboratoire d’Astrophysique de MarseilleCNRS/IN2P3INAF – Osservatorio Astronomico di RomaIKERBASQUE-Basque Foundation for ScienceDonostia International Physics Center DIPCInstitut d'Astrophysique de ParisBarcelona Supercomputing CenterUniversidad de SalamancaInstitut de Física d’Altes Energies (IFAE)Institució Catalana de Recerca i Estudis AvançatsINFN - Sezione di PadovaINAF- Osservatorio Astronomico di CagliariINAF-IASF MilanoInstitute of Space ScienceThe Oskar Klein Centre for Cosmoparticle PhysicsUniversitäts-Sternwarte MünchenINFN Sezione di RomaArgelander-Institut für AstronomieUniversidad Politécnica de CartagenaLeiden ObservatoryAIMAgenzia Spaziale Italiana (ASI)INAF, Istituto di Astrofisica Spaziale e Fisica Cosmica di BolognaUniversité LyonESACPort d’Informació CientíficaUniversité de LisboaCentre de Calcul de l’IN2P3Centre de Données astronomiques de Strasbourg (CDS)Aurora Technology for ESAUniversit di CataniaUniversit PSLINFN-Sezione di Roma TreINFN-Sezione di FerraraLaboratoire d’Astrophysique de Bordeaux (LAB)The Institute of Basic Science (IBS)Universit degli Studi di FerraraUniversit

degli Studi di GenovaUniversit

Claude Bernard Lyon 1INAF

Osservatorio Astronomico di CapodimonteAix-Marseille Universit",Universit

degli Studi di PadovaUniversit

de BordeauxUniversit

Roma TreUniversit

Paris CitUniversit

de StrasbourgRWTH Aachen UniversityMax Planck-Institute for Extraterrestrial PhysicsUniversit

Clermont AuvergneUniversit

degli Studi di MilanoINAF

Osservatorio Astronomico di PadovaUniversit

degli Studi di TorinoUniversit

degli Studi di Napoli

Federico IIINAF Osservatorio di Astrofisica e Scienza dello Spazio di BolognaUniversit

Di BolognaIFPU

Institute for fundamental physics of the UniverseINFN

Sezione di TriesteINAF

` Osservatorio Astronomico di TriesteUniversit

degli Studi di TriesteINAF

Osservatorio Astronomico di Brera

University of GroningenInstituto de Astrofísica e Ciências do EspaçoNiels Bohr InstituteLund UniversityTélécom ParisJet Propulsion LaboratoryInstituto de Astrofísica de CanariasUniversity of NottinghamEuropean Space AgencyRuhr-Universität BochumUniversity of Central LancashireSISSACNESINFN, Sezione di TorinoUniversidad de ValparaísoUniversidad de La LagunaObservatoire de la Côte d’AzurUniversity of Hawai’iINFN, Sezione di MilanoThe University of ArizonaKapteyn Astronomical InstituteBogazici UniversityMax Planck Institute for AstronomyObservatoire astronomique de StrasbourgThe Barcelona Institute of Science and TechnologyLaboratoire d’Astrophysique de MarseilleCNRS/IN2P3INAF – Osservatorio Astronomico di RomaIKERBASQUE-Basque Foundation for ScienceDonostia International Physics Center DIPCInstitut d'Astrophysique de ParisBarcelona Supercomputing CenterUniversidad de SalamancaInstitut de Física d’Altes Energies (IFAE)Institució Catalana de Recerca i Estudis AvançatsINFN - Sezione di PadovaINAF- Osservatorio Astronomico di CagliariINAF-IASF MilanoInstitute of Space ScienceThe Oskar Klein Centre for Cosmoparticle PhysicsUniversitäts-Sternwarte MünchenINFN Sezione di RomaArgelander-Institut für AstronomieUniversidad Politécnica de CartagenaLeiden ObservatoryAIMAgenzia Spaziale Italiana (ASI)INAF, Istituto di Astrofisica Spaziale e Fisica Cosmica di BolognaUniversité LyonESACPort d’Informació CientíficaUniversité de LisboaCentre de Calcul de l’IN2P3Centre de Données astronomiques de Strasbourg (CDS)Aurora Technology for ESAUniversit di CataniaUniversit PSLINFN-Sezione di Roma TreINFN-Sezione di FerraraLaboratoire d’Astrophysique de Bordeaux (LAB)The Institute of Basic Science (IBS)Universit degli Studi di FerraraUniversit

degli Studi di GenovaUniversit

Claude Bernard Lyon 1INAF

Osservatorio Astronomico di CapodimonteAix-Marseille Universit",Universit

degli Studi di PadovaUniversit

de BordeauxUniversit

Roma TreUniversit

Paris CitUniversit

de StrasbourgRWTH Aachen UniversityMax Planck-Institute for Extraterrestrial PhysicsUniversit

Clermont AuvergneUniversit

degli Studi di MilanoINAF

Osservatorio Astronomico di PadovaUniversit

degli Studi di TorinoUniversit

degli Studi di Napoli

Federico IIINAF Osservatorio di Astrofisica e Scienza dello Spazio di BolognaUniversit

Di BolognaIFPU

Institute for fundamental physics of the UniverseINFN

Sezione di TriesteINAF

` Osservatorio Astronomico di TriesteUniversit

degli Studi di TriesteINAF

Osservatorio Astronomico di BreraThe Euclid satellite will provide data on the clustering of galaxies and on the distortion of their measured shapes, which can be used to constrain and test the cosmological model. However, the increase in precision places strong requirements on the accuracy of the theoretical modelling for the observables and of the full analysis pipeline. In this paper, we investigate the accuracy of the calculations performed by the Cosmology Likelihood for Observables in Euclid (CLOE), a software able to handle both the modelling of observables and their fit against observational data for both the photometric and spectroscopic surveys of Euclid, by comparing the output of CLOE with external codes used as benchmark. We perform such a comparison on the quantities entering the calculations of the observables, as well as on the final outputs of these calculations. Our results highlight the high accuracy of CLOE when comparing its calculation against external codes for Euclid observables on an extended range of operative cases. In particular, all the summary statistics of interest always differ less than 0.1σ from the chosen benchmark, and CLOE predictions are statistically compatible with simulated data obtained from benchmark codes. The same holds for the comparison of correlation function in configuration space for spectroscopic and photometric observables.

We introduce the concrete autoencoder, an end-to-end differentiable method for global feature selection, which efficiently identifies a subset of the most informative features and simultaneously learns a neural network to reconstruct the input data from the selected features. Our method is unsupervised, and is based on using a concrete selector layer as the encoder and using a standard neural network as the decoder. During the training phase, the temperature of the concrete selector layer is gradually decreased, which encourages a user-specified number of discrete features to be learned. During test time, the selected features can be used with the decoder network to reconstruct the remaining input features. We evaluate concrete autoencoders on a variety of datasets, where they significantly outperform state-of-the-art methods for feature selection and data reconstruction. In particular, on a large-scale gene expression dataset, the concrete autoencoder selects a small subset of genes whose expression levels can be use to impute the expression levels of the remaining genes. In doing so, it improves on the current widely-used expert-curated L1000 landmark genes, potentially reducing measurement costs by 20%. The concrete autoencoder can be implemented by adding just a few lines of code to a standard autoencoder.

For safety, medical AI systems undergo thorough evaluations before deployment, validating their predictions against a ground truth which is assumed to be fixed and certain. However, this ground truth is often curated in the form of differential diagnoses. While a single differential diagnosis reflects the uncertainty in one expert assessment, multiple experts introduce another layer of uncertainty through disagreement. Both forms of uncertainty are ignored in standard evaluation which aggregates these differential diagnoses to a single label. In this paper, we show that ignoring uncertainty leads to overly optimistic estimates of model performance, therefore underestimating risk associated with particular diagnostic decisions. To this end, we propose a statistical aggregation approach, where we infer a distribution on probabilities of underlying medical condition candidates themselves, based on observed annotations. This formulation naturally accounts for the potential disagreements between different experts, as well as uncertainty stemming from individual differential diagnoses, capturing the entire ground truth uncertainty. Our approach boils down to generating multiple samples of medical condition probabilities, then evaluating and averaging performance metrics based on these sampled probabilities. In skin condition classification, we find that a large portion of the dataset exhibits significant ground truth uncertainty and standard evaluation severely over-estimates performance without providing uncertainty estimates. In contrast, our framework provides uncertainty estimates on common metrics of interest such as top-k accuracy and average overlap, showing that performance can change multiple percentage points. We conclude that, while assuming a crisp ground truth can be acceptable for many AI applications, a more nuanced evaluation protocol should be utilized in medical diagnosis.

Large annotated datasets in NLP are overwhelmingly in English. This is an obstacle to progress in other languages. Unfortunately, obtaining new annotated resources for each task in each language would be prohibitively expensive. At the same time, commercial machine translation systems are now robust. Can we leverage these systems to translate English-language datasets automatically? In this paper, we offer a positive response for natural language inference (NLI) in Turkish. We translated two large English NLI datasets into Turkish and had a team of experts validate their translation quality and fidelity to the original labels. Using these datasets, we address core issues of representation for Turkish NLI. We find that in-language embeddings are essential and that morphological parsing can be avoided where the training set is large. Finally, we show that models trained on our machine-translated datasets are successful on human-translated evaluation sets. We share all code, models, and data publicly.

In this paper, we consider the lens hyperbolic gamma solution to the star-star relation and the flipping relation from three-dimensional N=2 supersymmetric gauge theories on Sb3/Zr. We explore that a certain limit of the star-star relation yields the latter symmetry transformation, which exchanges the edge interactions of two outer spins with a centrally sited spin. Furthermore, we obtain more solutions to the flipping relation in terms of the hyperbolic gamma, basic hypergeometric, and the Euler gamma functions.

Current capsule endoscopes and next-generation robotic capsules for diagnosis

and treatment of gastrointestinal diseases are complex cyber-physical platforms

that must orchestrate complex software and hardware functions. The desired

tasks for these systems include visual localization, depth estimation, 3D

mapping, disease detection and segmentation, automated navigation, active

control, path realization and optional therapeutic modules such as targeted

drug delivery and biopsy sampling. Data-driven algorithms promise to enable

many advanced functionalities for capsule endoscopes, but real-world data is

challenging to obtain. Physically-realistic simulations providing synthetic

data have emerged as a solution to the development of data-driven algorithms.

In this work, we present a comprehensive simulation platform for capsule

endoscopy operations and introduce VR-Caps, a virtual active capsule

environment that simulates a range of normal and abnormal tissue conditions

(e.g., inflated, dry, wet etc.) and varied organ types, capsule endoscope

designs (e.g., mono, stereo, dual and 360{\deg}camera), and the type, number,

strength, and placement of internal and external magnetic sources that enable

active locomotion. VR-Caps makes it possible to both independently or jointly

develop, optimize, and test medical imaging and analysis software for the

current and next-generation endoscopic capsule systems. To validate this

approach, we train state-of-the-art deep neural networks to accomplish various

medical image analysis tasks using simulated data from VR-Caps and evaluate the

performance of these models on real medical data. Results demonstrate the

usefulness and effectiveness of the proposed virtual platform in developing

algorithms that quantify fractional coverage, camera trajectory, 3D map

reconstruction, and disease classification.

Deep neural network models owe their representational power to the high number of learnable parameters. It is often infeasible to run these largely parametrized deep models in limited resource environments, like mobile phones. Network models employing conditional computing are able to reduce computational requirements while achieving high representational power, with their ability to model hierarchies. We propose Conditional Information Gain Networks, which allow the feed forward deep neural networks to execute conditionally, skipping parts of the model based on the sample and the decision mechanisms inserted in the architecture. These decision mechanisms are trained using cost functions based on differentiable Information Gain, inspired by the training procedures of decision trees. These information gain based decision mechanisms are differentiable and can be trained end-to-end using a unified framework with a general cost function, covering both classification and decision losses. We test the effectiveness of the proposed method on MNIST and recently introduced Fashion MNIST datasets and show that our information gain based conditional execution approach can achieve better or comparable classification results using significantly fewer parameters, compared to standard convolutional neural network baselines.

We present several known solutions to the two-dimensional Ising model. This

review originated from the ``Ising 100'' seminar series held at

Bo\u{g}azi\c{c}i University, Istanbul, in 2024.

28 Sep 2017

University of OsloNikhefPanjab University University of Copenhagen

University of Copenhagen INFNYonsei UniversityJoint Institute for Nuclear Research

INFNYonsei UniversityJoint Institute for Nuclear Research Yale University

Yale University Lawrence Berkeley National LaboratoryOak Ridge National LaboratoryUniversity of HoustonCentral China Normal UniversityUtrecht UniversityUniversidade Federal do ABCPolitecnico di TorinoUniversity of BirminghamUniversity of TsukubaNiels Bohr InstituteLund UniversityCzech Technical University in PragueUniversity of JyväskyläUniversidad Nacional Autónoma de MéxicoSaha Institute of Nuclear PhysicsUniversity of Cape TownLaboratori Nazionali di FrascatiUniversity of BergenPolish Academy of SciencesEUROPEAN ORGANIZATION FOR NUCLEAR RESEARCHFrankfurt Institute for Advanced StudiesBenemérita Universidad Autónoma de PueblaSt. Petersburg State UniversityKharkov Institute of Physics and TechnologyComenius UniversityCINVESTAVAligarh Muslim UniversityBogazici UniversityUniversità di BariUniversidad de OviedoUniversità degli Studi di CagliariGSI Helmholtzzentrum für SchwerionenforschungHoria Hulubei National Institute for R&D in Physics and Nuclear EngineeringInstitute of Physics, BhubaneswarInstitute for Nuclear Research, Russian Academy of SciencesCreighton UniversityPolitecnico di BariUniversidad de HuelvaInstitute for Theoretical and Experimental PhysicsUniversidad de Santiago de CompostelaVariable Energy Cyclotron CentreUniversity of AthensRuprecht-Karls-Universität HeidelbergCEA IrfuUniversidad Autónoma de SinaloaJohann Wolfgang Goethe-UniversitätMoscow Engineering Physics InstituteInstitute for High Energy PhysicsNuclear Physics Institute, Academy of Sciences of the Czech RepublicCNRS-IN2P3Laboratoire de Physique CorpusculaireNational Institute for R&D of Isotopic and Molecular TechnologiesInstitute of Experimental PhysicsUniversity of JammuKFKI Research Institute for Particle and Nuclear PhysicsInstitut de Physique Nucléaire d'OrsayEcole des Mines de NantesTechnical University of KošiceUniversità del Piemonte Orientale “A. Avogadro”Russian Research Centre, Kurchatov InstituteCentre de Recherches SubatomiquesLaboratorio de Aplicaciones NuclearesRudjer Boškovic´ InstituteLaboratoire de Physique Subatomique et et de CosmologieV. Ulyanov-Lenin Kazan Federal UniversityHenryk Niewodnicza´nski Institute of Nuclear PhysicsSt.Petersburg State Polytechnical UniversityUniversit

Paris-SudUniversit

de NantesUniversit

Claude Bernard Lyon 1Universit

di SalernoUniversit

Joseph FourierUniversit

degli Studi di TorinoUniversit

Di BolognaUniversit

degli Studi di Trieste

Lawrence Berkeley National LaboratoryOak Ridge National LaboratoryUniversity of HoustonCentral China Normal UniversityUtrecht UniversityUniversidade Federal do ABCPolitecnico di TorinoUniversity of BirminghamUniversity of TsukubaNiels Bohr InstituteLund UniversityCzech Technical University in PragueUniversity of JyväskyläUniversidad Nacional Autónoma de MéxicoSaha Institute of Nuclear PhysicsUniversity of Cape TownLaboratori Nazionali di FrascatiUniversity of BergenPolish Academy of SciencesEUROPEAN ORGANIZATION FOR NUCLEAR RESEARCHFrankfurt Institute for Advanced StudiesBenemérita Universidad Autónoma de PueblaSt. Petersburg State UniversityKharkov Institute of Physics and TechnologyComenius UniversityCINVESTAVAligarh Muslim UniversityBogazici UniversityUniversità di BariUniversidad de OviedoUniversità degli Studi di CagliariGSI Helmholtzzentrum für SchwerionenforschungHoria Hulubei National Institute for R&D in Physics and Nuclear EngineeringInstitute of Physics, BhubaneswarInstitute for Nuclear Research, Russian Academy of SciencesCreighton UniversityPolitecnico di BariUniversidad de HuelvaInstitute for Theoretical and Experimental PhysicsUniversidad de Santiago de CompostelaVariable Energy Cyclotron CentreUniversity of AthensRuprecht-Karls-Universität HeidelbergCEA IrfuUniversidad Autónoma de SinaloaJohann Wolfgang Goethe-UniversitätMoscow Engineering Physics InstituteInstitute for High Energy PhysicsNuclear Physics Institute, Academy of Sciences of the Czech RepublicCNRS-IN2P3Laboratoire de Physique CorpusculaireNational Institute for R&D of Isotopic and Molecular TechnologiesInstitute of Experimental PhysicsUniversity of JammuKFKI Research Institute for Particle and Nuclear PhysicsInstitut de Physique Nucléaire d'OrsayEcole des Mines de NantesTechnical University of KošiceUniversità del Piemonte Orientale “A. Avogadro”Russian Research Centre, Kurchatov InstituteCentre de Recherches SubatomiquesLaboratorio de Aplicaciones NuclearesRudjer Boškovic´ InstituteLaboratoire de Physique Subatomique et et de CosmologieV. Ulyanov-Lenin Kazan Federal UniversityHenryk Niewodnicza´nski Institute of Nuclear PhysicsSt.Petersburg State Polytechnical UniversityUniversit

Paris-SudUniversit

de NantesUniversit

Claude Bernard Lyon 1Universit

di SalernoUniversit

Joseph FourierUniversit

degli Studi di TorinoUniversit

Di BolognaUniversit

degli Studi di Trieste

University of Copenhagen

University of Copenhagen INFNYonsei UniversityJoint Institute for Nuclear Research

INFNYonsei UniversityJoint Institute for Nuclear Research Yale University

Yale University Lawrence Berkeley National LaboratoryOak Ridge National LaboratoryUniversity of HoustonCentral China Normal UniversityUtrecht UniversityUniversidade Federal do ABCPolitecnico di TorinoUniversity of BirminghamUniversity of TsukubaNiels Bohr InstituteLund UniversityCzech Technical University in PragueUniversity of JyväskyläUniversidad Nacional Autónoma de MéxicoSaha Institute of Nuclear PhysicsUniversity of Cape TownLaboratori Nazionali di FrascatiUniversity of BergenPolish Academy of SciencesEUROPEAN ORGANIZATION FOR NUCLEAR RESEARCHFrankfurt Institute for Advanced StudiesBenemérita Universidad Autónoma de PueblaSt. Petersburg State UniversityKharkov Institute of Physics and TechnologyComenius UniversityCINVESTAVAligarh Muslim UniversityBogazici UniversityUniversità di BariUniversidad de OviedoUniversità degli Studi di CagliariGSI Helmholtzzentrum für SchwerionenforschungHoria Hulubei National Institute for R&D in Physics and Nuclear EngineeringInstitute of Physics, BhubaneswarInstitute for Nuclear Research, Russian Academy of SciencesCreighton UniversityPolitecnico di BariUniversidad de HuelvaInstitute for Theoretical and Experimental PhysicsUniversidad de Santiago de CompostelaVariable Energy Cyclotron CentreUniversity of AthensRuprecht-Karls-Universität HeidelbergCEA IrfuUniversidad Autónoma de SinaloaJohann Wolfgang Goethe-UniversitätMoscow Engineering Physics InstituteInstitute for High Energy PhysicsNuclear Physics Institute, Academy of Sciences of the Czech RepublicCNRS-IN2P3Laboratoire de Physique CorpusculaireNational Institute for R&D of Isotopic and Molecular TechnologiesInstitute of Experimental PhysicsUniversity of JammuKFKI Research Institute for Particle and Nuclear PhysicsInstitut de Physique Nucléaire d'OrsayEcole des Mines de NantesTechnical University of KošiceUniversità del Piemonte Orientale “A. Avogadro”Russian Research Centre, Kurchatov InstituteCentre de Recherches SubatomiquesLaboratorio de Aplicaciones NuclearesRudjer Boškovic´ InstituteLaboratoire de Physique Subatomique et et de CosmologieV. Ulyanov-Lenin Kazan Federal UniversityHenryk Niewodnicza´nski Institute of Nuclear PhysicsSt.Petersburg State Polytechnical UniversityUniversit

Paris-SudUniversit

de NantesUniversit

Claude Bernard Lyon 1Universit

di SalernoUniversit

Joseph FourierUniversit

degli Studi di TorinoUniversit

Di BolognaUniversit

degli Studi di Trieste

Lawrence Berkeley National LaboratoryOak Ridge National LaboratoryUniversity of HoustonCentral China Normal UniversityUtrecht UniversityUniversidade Federal do ABCPolitecnico di TorinoUniversity of BirminghamUniversity of TsukubaNiels Bohr InstituteLund UniversityCzech Technical University in PragueUniversity of JyväskyläUniversidad Nacional Autónoma de MéxicoSaha Institute of Nuclear PhysicsUniversity of Cape TownLaboratori Nazionali di FrascatiUniversity of BergenPolish Academy of SciencesEUROPEAN ORGANIZATION FOR NUCLEAR RESEARCHFrankfurt Institute for Advanced StudiesBenemérita Universidad Autónoma de PueblaSt. Petersburg State UniversityKharkov Institute of Physics and TechnologyComenius UniversityCINVESTAVAligarh Muslim UniversityBogazici UniversityUniversità di BariUniversidad de OviedoUniversità degli Studi di CagliariGSI Helmholtzzentrum für SchwerionenforschungHoria Hulubei National Institute for R&D in Physics and Nuclear EngineeringInstitute of Physics, BhubaneswarInstitute for Nuclear Research, Russian Academy of SciencesCreighton UniversityPolitecnico di BariUniversidad de HuelvaInstitute for Theoretical and Experimental PhysicsUniversidad de Santiago de CompostelaVariable Energy Cyclotron CentreUniversity of AthensRuprecht-Karls-Universität HeidelbergCEA IrfuUniversidad Autónoma de SinaloaJohann Wolfgang Goethe-UniversitätMoscow Engineering Physics InstituteInstitute for High Energy PhysicsNuclear Physics Institute, Academy of Sciences of the Czech RepublicCNRS-IN2P3Laboratoire de Physique CorpusculaireNational Institute for R&D of Isotopic and Molecular TechnologiesInstitute of Experimental PhysicsUniversity of JammuKFKI Research Institute for Particle and Nuclear PhysicsInstitut de Physique Nucléaire d'OrsayEcole des Mines de NantesTechnical University of KošiceUniversità del Piemonte Orientale “A. Avogadro”Russian Research Centre, Kurchatov InstituteCentre de Recherches SubatomiquesLaboratorio de Aplicaciones NuclearesRudjer Boškovic´ InstituteLaboratoire de Physique Subatomique et et de CosmologieV. Ulyanov-Lenin Kazan Federal UniversityHenryk Niewodnicza´nski Institute of Nuclear PhysicsSt.Petersburg State Polytechnical UniversityUniversit

Paris-SudUniversit

de NantesUniversit

Claude Bernard Lyon 1Universit

di SalernoUniversit

Joseph FourierUniversit

degli Studi di TorinoUniversit

Di BolognaUniversit

degli Studi di TriesteThe first measurement of the charged-particle multiplicity density at mid-rapidity in Pb-Pb collisions at a centre-of-mass energy per nucleon pair sNN = 2.76 TeV is presented. For an event sample corresponding to the most central 5% of the hadronic cross section the pseudo-rapidity density of primary charged particles at mid-rapidity is 1584 ± 4 (stat) ± 76 (sys.), which corresponds to 8.3 ± 0.4 (sys.) per participating nucleon pair. This represents an increase of about a factor 1.9 relative to pp collisions at similar collision energies, and about a factor 2.2 to central Au-Au collisions at sNN = 0.2 TeV. This measurement provides the first experimental constraint for models of nucleus-nucleus collisions at LHC energies.

There are no more papers matching your filters at the moment.