Complexity Science Hub Vienna

This research introduces a co-evolutionary framework where individual strategies and the game environments they embody undergo simultaneous evolutionary selection. It demonstrates how such dynamic environments, particularly when structured by complex networks, foster the emergence and maintenance of cooperative behavior from various initial conditions.

Researchers from Graz University of Technology, Complexity Science Hub Vienna, and ETH Zurich developed "model folding," a data-free and fine-tuning-free compression technique that reduces neural network size by merging structurally similar neurons across layers while preserving internal data statistics. This method consistently outperforms other data-free baselines and traditional pruning at high sparsity levels across CNNs and LLaMA-7B, achieving significant compression and efficiency without compromising performance.

Measuring similarity of neural networks to understand and improve their

behavior has become an issue of great importance and research interest. In this

survey, we provide a comprehensive overview of two complementary perspectives

of measuring neural network similarity: (i) representational similarity, which

considers how activations of intermediate layers differ, and (ii) functional

similarity, which considers how models differ in their outputs. In addition to

providing detailed descriptions of existing measures, we summarize and discuss

results on the properties of and relationships between these measures, and

point to open research problems. We hope our work lays a foundation for more

systematic research on the properties and applicability of similarity measures

for neural network models.

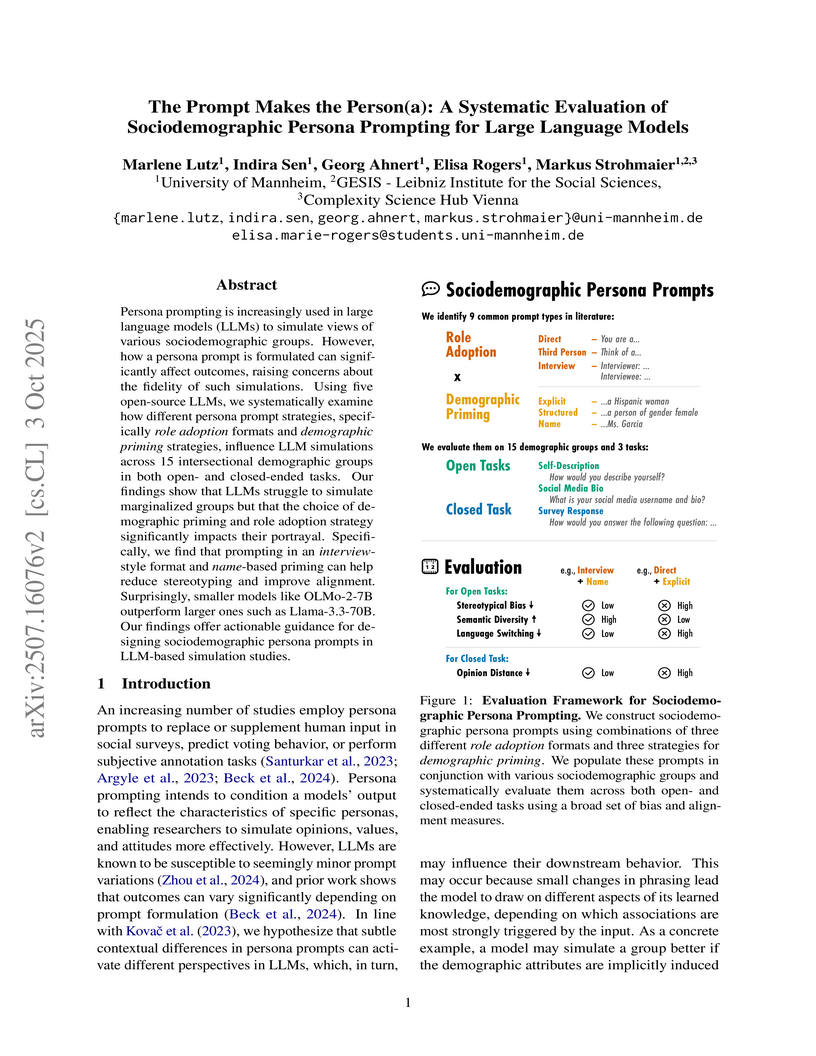

Researchers from the University of Mannheim systematically evaluated how different prompting strategies affect large language models' ability to simulate sociodemographic personas. The study identified that name-based priming and interview-style prompts reduced stereotyping, increased semantic diversity, and improved opinion alignment, with smaller models often outperforming larger ones in these tasks.

Researchers from ETH Zurich investigated the voting behaviors of Large Language Models (LLMs) like GPT-4 and LLaMA-2 in a simulated participatory budgeting scenario, comparing them to human voting patterns. The study revealed that current LLMs demonstrate biases, limited preference diversity, and susceptibility to order effects, highlighting their inadequacy for fully replicating complex human preferences in democratic contexts.

09 Jul 2018

A computational agent-based model demonstrates that extreme success is more strongly correlated with exceptional luck than with extreme talent, despite a normal distribution of talent. The model also shows that egalitarian funding strategies are more efficient at fostering successful talented individuals compared to elitist approaches.

14 Oct 2025

Researchers from ETH Zurich and Complexity Science Hub Vienna established a data-driven model demonstrating that the growth advantage of large cities diminishes as urban systems mature, unifying prior conflicting theories of urban development. Their analysis predicts a substantial slowdown in global urban population concentration, with the share of urban residents in cities over one million increasing at less than half the rate between 2025 and 2075 compared to the preceding five decades.

17 Jan 2025

Diversified economies are critical for cities to sustain their growth and development, but they are also costly because diversification often requires expanding a city's capability base. We analyze how cities manage this trade-off by measuring the coherence of the economic activities they support, defined as the technological distance between randomly sampled productive units in a city. We use this framework to study how the US urban system developed over almost two centuries, from 1850 to today. To do so, we rely on historical census data, covering over 600M individual records to describe the economic activities of cities between 1850 and 1940, and 8 million patent records as well as detailed occupational and industrial profiles of cities for more recent decades. Despite massive shifts in the economic geography of the U.S. over this 170-year period, average coherence in its urban system remains unchanged. Moreover, across different time periods, datasets and relatedness measures, coherence falls with city size at the exact same rate, pointing to constraints to diversification that are governed by a city's size in universal ways.

Learning on temporal graphs has become a central topic in graph representation learning, with numerous benchmarks indicating the strong performance of state-of-the-art models. However, recent work has raised concerns about the reliability of benchmark results, noting issues with commonly used evaluation protocols and the surprising competitiveness of simple heuristics. This contrast raises the question of which properties of the underlying graphs temporal graph learning models actually use to form their predictions. We address this by systematically evaluating seven models on their ability to capture eight fundamental attributes related to the link structure of temporal graphs. These include structural characteristics such as density, temporal patterns such as recency, and edge formation mechanisms such as homophily. Using both synthetic and real-world datasets, we analyze how well models learn these attributes. Our findings reveal a mixed picture: models capture some attributes well but fail to reproduce others. With this, we expose important limitations. Overall, we believe that our results provide practical insights for the application of temporal graph learning models, and motivate more interpretability-driven evaluations in temporal graph learning research.

Dataset distillation has emerged as a strategy to compress real-world datasets for efficient training. However, it struggles with large-scale and high-resolution datasets, limiting its practicality. This paper introduces a novel resolution-independent dataset distillation method Focus ed Dataset Distillation (FocusDD), which achieves diversity and realism in distilled data by identifying key information patches, thereby ensuring the generalization capability of the distilled dataset across different network architectures. Specifically, FocusDD leverages a pre-trained Vision Transformer (ViT) to extract key image patches, which are then synthesized into a single distilled image. These distilled images, which capture multiple targets, are suitable not only for classification tasks but also for dense tasks such as object detection. To further improve the generalization of the distilled dataset, each synthesized image is augmented with a downsampled view of the original image. Experimental results on the ImageNet-1K dataset demonstrate that, with 100 images per class (IPC), ResNet50 and MobileNet-v2 achieve validation accuracies of 71.0% and 62.6%, respectively, outperforming state-of-the-art methods by 2.8% and 4.7%. Notably, FocusDD is the first method to use distilled datasets for object detection tasks. On the COCO2017 dataset, with an IPC of 50, YOLOv11n and YOLOv11s achieve 24.4% and 32.1% mAP, respectively, further validating the effectiveness of our approach.

08 Oct 2025

Chronic diseases frequently co-occur in patterns that are unlikely to arise by chance, a phenomenon known as multimorbidity. This growing challenge for patients and healthcare systems is amplified by demographic aging and the rising burden of chronic conditions. However, our understanding of how individuals transition from a disease-free-state to accumulating diseases as they age is limited. Recently, data-driven methods have been developed to characterize morbidity trajectories using electronic health records; however, their generalizability across healthcare settings remains largely unexplored. In this paper, we conduct a cross-country validation of a data-driven multimorbidity trajectory model using population-wide health data from Denmark and Austria. Despite considerable differences in healthcare organization, we observe a high degree of similarity in disease cluster structures. The Adjusted Rand Index (0.998) and the Normalized Mutual Information (0.88) both indicate strong alignment between the two clusterings. These findings suggest that multimorbidity trajectories are shaped by robust, shared biological and epidemiological mechanisms that transcend national healthcare contexts.

Identifying patterns of relations among the units of a complex system from

measurements of their activities in time is a fundamental problem with many

practical applications. Here, we introduce a method that detects dependencies

of any order in multivariate time series data. The method first transforms a

multivariate time series into a symbolic sequence, and then extract

statistically significant strings of symbols through a Bayesian approach. Such

motifs are finally modelled as the hyperedges of a hypergraph, allowing us to

use network theory to study higher-order interactions in the original data.

When applied to neural and social systems, our method reveals meaningful

higher-order dependencies, highlighting their importance in both brain function

and social behaviour.

20 Jul 2022

After Big Data and Artificial Intelligence (AI), the subject of Digital Twins

has emerged as another promising technology, advocated, built, and sold by

various IT companies. The approach aims to produce highly realistic models of

real systems. In the case of dynamically changing systems, such digital twins

would have a life, i.e. they would change their behaviour over time and, in

perspective, take decisions like their real counterparts \textemdash so the

vision. In contrast to animated avatars, however, which only imitate the

behaviour of real systems, like deep fakes, digital twins aim to be accurate

"digital copies", i.e. "duplicates" of reality, which may interact with reality

and with their physical counterparts. This chapter explores, what are possible

applications and implications, limitations, and threats.

Successful collective action on issues from climate change to the maintenance of democracy depends on societal properties such as cultural tightness and social cohesion. How these properties evolve is not well understood because they emerge from a complex interplay between beliefs and behaviors that are usually modeled separately. Here we address this challenge by developing a game-theoretical framework incorporating norm-utility models to study the coevolutionary dynamics of cooperative action, expressed belief, and norm-utility preferences. We show that the introduction of evolving beliefs and preferences into the Snowdrift game and Prisoner's Dilemma leads to a proliferation of evolutionary stable equilibria, each with different societal properties. In particular, we find that a declining material environment can simultaneously be associated with increased cultural tightness (defined as the degree to which individuals behave in accordance with widely held beliefs) and reduced social cohesion (defined as the degree of social homogeneity i.e. the extent to which individuals belong to a single well-defined group). Loss of social homogeneity occurs via a process of evolutionary branching, in which a population fragments into two distinct social groups with strikingly different characteristics. The groups that emerge differ not only in their willingness to cooperate, but also in their beliefs about cooperation and in their preferences for conformity and coherence of their actions and beliefs. These results have implications for our understanding of the resilience of cooperation and collective action in times of crisis.

We introduce a novel proxy for firm linkages, Characteristic Vector Linkages (CVLs). We use this concept to estimate firm linkages, first through Euclidean similarity, and then by applying Quantum Cognition Machine Learning (QCML) to similarity learning. We demonstrate that both methods can be used to construct profitable momentum spillover trading strategies, but QCML similarity outperforms the simpler Euclidean similarity.

The spread of information through socio-technical systems determines which individuals are the first to gain access to opportunities and insights. Yet, the pathways through which information flows can be skewed, leading to systematic differences in access across social groups. These inequalities remain poorly characterized in settings involving nonlinear social contagion and higher-order interactions that exhibit homophily. We introduce a enerative model for hypergraphs with hyperedge homophily, a hyperedge size-dependent property, and tunable degree distribution, called the H3 model, along with a model for nonlinear social contagion that incorporates asymmetric transmission between in-group and out-group nodes. Using stochastic simulations of a social contagion process on hypergraphs from the H3 model and diverse empirical datasets, we show that the interaction between social contagion dynamics and hyperedge homophily -- an effect unique to higher-order networks due to its dependence on hyperedge size -- can critically shape group-level differences in information access. By emphasizing how hyperedge homophily shapes interaction patterns, our findings underscore the need to rethink socio-technical system design through a higher-order perspective and suggest that dynamics-informed, targeted interventions at specific hyperedge sizes, embedded in a platform architecture, offer a powerful lever for reducing inequality.

Northwestern Polytechnical University University of Cambridge

University of Cambridge Beijing Normal University

Beijing Normal University National University of Singapore

National University of Singapore Boston UniversityUniversidad Rey Juan CarlosMoscow Institute of Physics and TechnologyUniversity of MariborRuđer Bošković InstituteTokyo Institute of TechnologyComplexity Science Hub ViennaUniversity of RijekaChina Medical UniversityPotsdam Institute for Climate Impact Research (PIK)Japan Science and Technology Agencythe University of TsukubaYunnan University of Finance and EconomicsCNR—Institute for Complex Systems

Boston UniversityUniversidad Rey Juan CarlosMoscow Institute of Physics and TechnologyUniversity of MariborRuđer Bošković InstituteTokyo Institute of TechnologyComplexity Science Hub ViennaUniversity of RijekaChina Medical UniversityPotsdam Institute for Climate Impact Research (PIK)Japan Science and Technology Agencythe University of TsukubaYunnan University of Finance and EconomicsCNR—Institute for Complex Systems

University of Cambridge

University of Cambridge Beijing Normal University

Beijing Normal University National University of Singapore

National University of Singapore Boston UniversityUniversidad Rey Juan CarlosMoscow Institute of Physics and TechnologyUniversity of MariborRuđer Bošković InstituteTokyo Institute of TechnologyComplexity Science Hub ViennaUniversity of RijekaChina Medical UniversityPotsdam Institute for Climate Impact Research (PIK)Japan Science and Technology Agencythe University of TsukubaYunnan University of Finance and EconomicsCNR—Institute for Complex Systems

Boston UniversityUniversidad Rey Juan CarlosMoscow Institute of Physics and TechnologyUniversity of MariborRuđer Bošković InstituteTokyo Institute of TechnologyComplexity Science Hub ViennaUniversity of RijekaChina Medical UniversityPotsdam Institute for Climate Impact Research (PIK)Japan Science and Technology Agencythe University of TsukubaYunnan University of Finance and EconomicsCNR—Institute for Complex SystemsRecent decades have seen a rise in the use of physics methods to study different societal phenomena. This development has been due to physicists venturing outside of their traditional domains of interest, but also due to scientists from other disciplines taking from physics the methods that have proven so successful throughout the 19th and the 20th century. Here we dub this field 'social physics' and pay our respect to intellectual mavericks who nurtured it to maturity. We do so by reviewing the current state of the art. Starting with a set of topics that are at the heart of modern human societies, we review research dedicated to urban development and traffic, the functioning of financial markets, cooperation as the basis for our evolutionary success, the structure of social networks, and the integration of intelligent machines into these networks. We then shift our attention to a set of topics that explore potential threats to society. These include criminal behaviour, large-scale migrations, epidemics, environmental challenges, and climate change. We end the coverage of each topic with promising directions for future research. Based on this, we conclude that the future for social physics is bright. Physicists studying societal phenomena are no longer a curiosity, but rather a force to be reckoned with. Notwithstanding, it remains of the utmost importance that we continue to foster constructive dialogue and mutual respect at the interfaces of different scientific disciplines.

Adversarial attacks pose a significant threat to the robustness and

reliability of machine learning systems, particularly in computer vision

applications. This study investigates the performance of adversarial patches

for the YOLO object detection network in the physical world. Two attacks were

tested: a patch designed to be placed anywhere within the scene - global patch,

and another patch intended to partially overlap with specific object targeted

for removal from detection - local patch. Various factors such as patch size,

position, rotation, brightness, and hue were analyzed to understand their

impact on the effectiveness of the adversarial patches. The results reveal a

notable dependency on these parameters, highlighting the challenges in

maintaining attack efficacy in real-world conditions. Learning to align

digitally applied transformation parameters with those measured in the real

world still results in up to a 64\% discrepancy in patch performance. These

findings underscore the importance of understanding environmental influences on

adversarial attacks, which can inform the development of more robust defenses

for practical machine learning applications.

Social dialogue, the foundation of our democracies, is currently threatened by disinformation and partisanship, with their disrupting role on individual and collective awareness and detrimental effects on decision-making processes. Despite a great deal of attention to the news sphere itself, little is known about the subtle interplay between the offer and the demand for information. Still, a broader perspective on the news ecosystem, including both the producers and the consumers of information, is needed to build new tools to assess the health of the infosphere. Here, we combine in the same framework news supply, as mirrored by a fairly complete Italian news database - partially annotated for fake news, and news demand, as captured through the Google Trends data for Italy. Our investigation focuses on the temporal and semantic interplay of news, fake news, and searches in several domains, including the virus SARS-CoV-2 pandemic. Two main results emerge. First, disinformation is extremely reactive to people's interests and tends to thrive, especially when there is a mismatch between what people are interested in and what news outlets provide. Second, a suitably defined index can assess the level of disinformation only based on the available volumes of news and searches. Although our results mainly concern the Coronavirus subject, we provide hints that the same findings can have more general applications. We contend these results can be a powerful asset in informing campaigns against disinformation and providing news outlets and institutions with potentially relevant strategies.

16 May 2025

Walking is the most sustainable form of urban mobility, but is compromised by

uncomfortable or unhealthy sun exposure, which is an increasing problem due to

global warming. Shade from buildings can provide cooling and protection for

pedestrians, but the extent of this potential benefit is unknown. Here we

explore the potential for shaded walking, using building footprints and street

networks from both synthetic and real cities. We introduce a route choice model

with a sun avoidance parameter α and define the CoolWalkability metric

to measure opportunities for walking in shade. We derive analytically that on a

regular grid with constant building heights, CoolWalkability is independent of

α, and that the grid provides no CoolWalkability benefit for

shade-seeking individuals compared to the shortest path. However, variations in

street geometry and building heights create such benefits. We further uncover

that the potential for shaded routing differs between grid-like and irregular

street networks, forms local clusters, and is sensitive to the mapped network

geometry. Our research identifies the limitations and potential of shade for

cool, active travel, and is a first step towards a rigorous understanding of

shade provision for sustainable mobility in cities.

There are no more papers matching your filters at the moment.