Digital Futures

17 Jul 2024

Useful robot control algorithms should not only achieve performance objectives but also adhere to hard safety constraints. Control Barrier Functions (CBFs) have been developed to provably ensure system safety through forward invariance. However, because they are purely reactive, they often sacrifice performance unnecessarily for safety. Receding horizon control (RHC), on the other hand, considers planned trajectories to account for the future evolution of a system. This work provides a new perspective on safety-critical control by introducing Forward Invariance in Trajectory Spaces (FITS). We lift the problem of safe RHC into the trajectory space and describe the evolution of planned trajectories as a controlled dynamical system. Safety constraints defined over states can be converted into sets in the trajectory space, which we render forward invariant via a CBF framework. We derive an efficient quadratic program (QP) to synthesize trajectories that provably satisfy safety constraints. Our experiments indicate that FITS improves adherence to safety specifications without sacrificing performance compared with alternative CBF and NMPC methods.
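To make the "purely reactive" CBF baseline mentioned above concrete, here is a minimal sketch of a classical CBF safety filter. It is not the FITS method. It assumes a hypothetical 1D single integrator `x' = u` with safe set `h(x) = x_max - x >= 0`; the names `cbf_filter`, `simulate`, `x_max`, and `alpha` are illustrative choices, not taken from the paper. For this scalar case the QP `min (u - u_nom)^2` subject to `dh/dt >= -alpha * h` has a closed-form solution, so no solver is needed.

```python
def cbf_filter(x, u_nom, x_max=1.0, alpha=2.0):
    """Closed-form solution of the scalar CBF-QP for x' = u.

    Barrier: h(x) = x_max - x, so dh/dt = -u.
    CBF condition: -u >= -alpha * h  <=>  u <= alpha * h.
    Minimizing (u - u_nom)^2 under that bound clips u_nom from above.
    """
    h = x_max - x
    return min(u_nom, alpha * h)


def simulate(x0=0.0, u_nom=1.0, dt=0.01, steps=1000):
    """Forward-Euler rollout with the filter applied at every step."""
    x = x0
    trajectory = [x]
    for _ in range(steps):
        u = cbf_filter(x, u_nom)
        x += dt * u
        trajectory.append(x)
    return trajectory


if __name__ == "__main__":
    traj = simulate()
    print("max state:", max(traj))
```

The filter only intervenes once the nominal input would violate the barrier condition at the current state, which is the reactive behavior that trajectory-level approaches aim to improve on.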

An iterative training framework called IFeF-PINN enhances Physics-Informed Neural Networks by integrating Fourier-enhanced features and a bi-level optimization scheme. This approach effectively addresses spectral bias, achieving significantly lower L2-errors of 0.0092 on high-frequency Helmholtz and 0.0025 on high-frequency convection problems, where conventional methods often fail.

29 Sep 2025

We present a data-driven framework for assessing the attack resilience of linear time-invariant systems against malicious false data injection sensor attacks. Based on the concept of sparse observability, data-driven resilience metrics are proposed. First, we derive a data-driven necessary and sufficient condition for assessing the system's resilience against sensor attacks, using data collected without any attacks. If we obtain attack-free data that satisfy a specific rank condition, we can exactly evaluate the attack resilience level even in a model-free setting. We then extend this analysis to a scenario where only poisoned data are available. Given the poisoned data, we can only conservatively assess the system's resilience. In both scenarios, we also provide polynomial-time algorithms to assess the system resilience under specific conditions. Finally, numerical examples illustrate the efficacy and limitations of the proposed framework.

22 Jan 2025

Robust Data-Driven Kalman Filtering for Unknown Linear Systems using Maximum Likelihood Optimization


This paper investigates the state estimation problem for unknown linear systems subject to both process and measurement noise. Based on a prior input-output trajectory sampled at a higher frequency and a prior state trajectory sampled at a lower frequency, we propose a novel robust data-driven Kalman filter (RDKF) that integrates model identification with state estimation for the unknown system. Specifically, the state estimation problem is formulated as a non-convex maximum likelihood optimization problem. We then slightly modify this problem so that it can be solved with a recursive algorithm. Based on the optimal solution to the modified problem, we design the RDKF, which can estimate the state of a given but unknown state-space model. The performance gap between the RDKF and the optimal Kalman filter based on known system matrices is quantified through a sample complexity bound. In particular, when the number of pre-collected states tends to infinity, this gap converges to zero. Finally, the effectiveness of the theoretical results is illustrated by numerical simulations.

21 Aug 2023

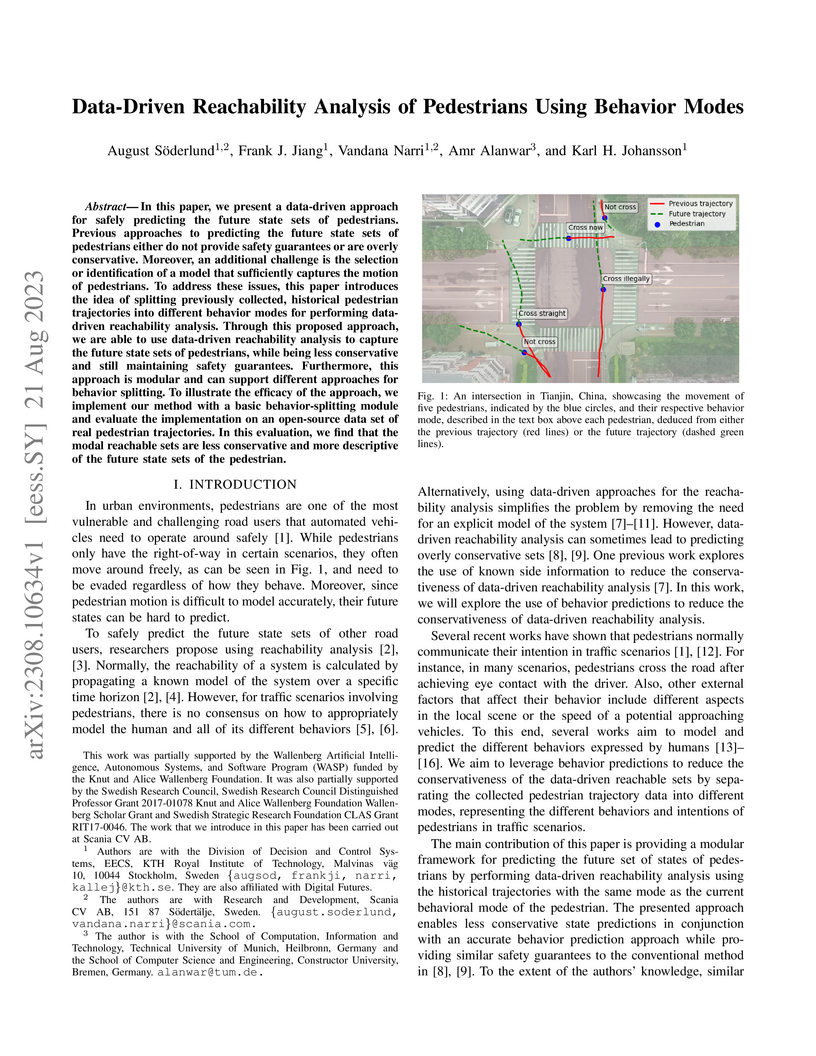

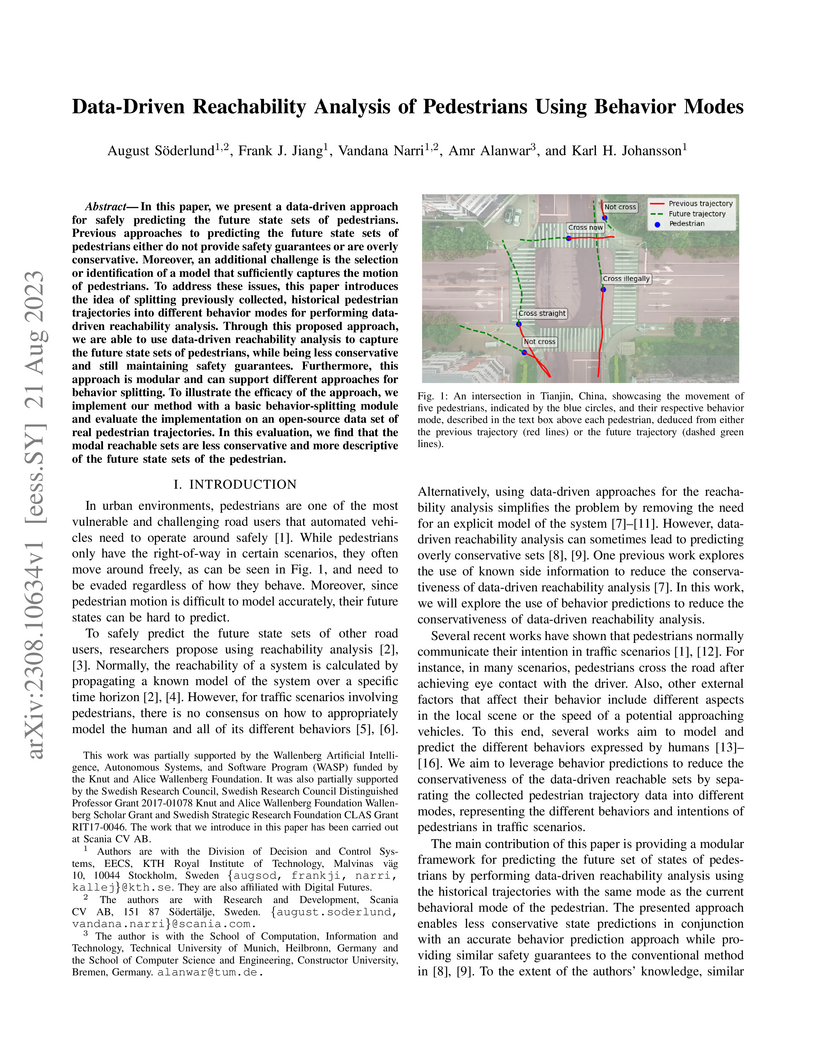

In this paper, we present a data-driven approach for safely predicting the future state sets of pedestrians. Previous approaches to predicting the future state sets of pedestrians either do not provide safety guarantees or are overly conservative. Moreover, an additional challenge is the selection or identification of a model that sufficiently captures the motion of pedestrians. To address these issues, this paper introduces the idea of splitting previously collected, historical pedestrian trajectories into different behavior modes for performing data-driven reachability analysis. Through this proposed approach, we are able to use data-driven reachability analysis to capture the future state sets of pedestrians, while being less conservative and still maintaining safety guarantees. Furthermore, this approach is modular and can support different approaches for behavior splitting. To illustrate the efficacy of the approach, we implement our method with a basic behavior-splitting module and evaluate the implementation on an open-source data set of real pedestrian trajectories. In this evaluation, we find that the modal reachable sets are less conservative and more descriptive of the future state sets of the pedestrian.

Distributed Optimization and Learning for Automated Stepsize Selection with Finite Time Coordination


Distributed optimization and learning algorithms are designed to operate over large-scale networks, enabling effective and efficient processing of vast amounts of data. One of the main challenges for ensuring a smooth learning process in gradient-based methods is the appropriate selection of a learning stepsize. Most current distributed approaches let individual nodes adapt their stepsizes locally. However, this may introduce stepsize heterogeneity in the network, thus disrupting the learning process and potentially leading to divergence. In this paper, we propose a distributed learning algorithm that incorporates a novel mechanism for automating stepsize selection among nodes. Our main idea relies on implementing a finite-time coordination algorithm for eliminating stepsize heterogeneity among nodes. We analyze the operation of our algorithm and establish its convergence to the optimal solution. We conclude with numerical simulations for a linear regression problem, showing that eliminating stepsize heterogeneity improves convergence speed and accuracy compared with current approaches.
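The abstract does not specify which finite-time coordination algorithm is used. As an illustration only, the sketch below uses min-consensus, a standard primitive that makes all nodes agree on the smallest proposed value after a number of rounds equal to the graph diameter. The function name `min_consensus`, the undirected path graph, and the candidate stepsizes are assumptions made for this example.

```python
def min_consensus(values, neighbors, rounds):
    """Synchronous min-consensus on a connected undirected graph.

    values:    list with one locally proposed stepsize per node
    neighbors: dict mapping node index -> list of neighbor indices
    rounds:    number of exchange rounds (graph diameter is enough;
               number of nodes minus one is always a safe upper bound)
    """
    current = list(values)
    for _ in range(rounds):
        # Each node keeps the minimum of its own value and its neighbors' values.
        current = [
            min([current[i]] + [current[j] for j in neighbors[i]])
            for i in range(len(current))
        ]
    return current


if __name__ == "__main__":
    # Hypothetical 4-node path graph 0 - 1 - 2 - 3 with heterogeneous stepsizes.
    graph = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
    local_stepsizes = [0.5, 0.1, 0.3, 0.05]
    print(min_consensus(local_stepsizes, graph, rounds=len(graph) - 1))
```

Agreeing on the minimum is a conservative choice: every node ends up with a stepsize no larger than the one it would have picked locally.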

02 Oct 2025

In this paper, we investigate data-driven attack detection and identification in a model-free setting. Unlike existing studies, we consider the case where the available output data include malicious false-data injections. We aim to detect and identify such attacks solely from the compromised data. We address this problem in two scenarios: (1) when the system operator is aware of the system's sparse observability condition, and (2) when the data are partially clean (i.e., attack-free). In both scenarios, we derive conditions and algorithms for detecting and identifying attacks using only the compromised data. Finally, we demonstrate the effectiveness of the proposed framework via numerical simulations on a three-inertia system.

Networked Control Systems (NCSs) are integral in critical infrastructures such as power grids, transportation networks, and production systems. Ensuring the resilient operation of these large-scale NCSs against cyber-attacks is crucial for societal well-being. Over the past two decades, extensive research has been focused on developing metrics to quantify the vulnerabilities of NCSs against attacks. Once the vulnerabilities are quantified, mitigation strategies can be employed to enhance system resilience. This article provides a comprehensive overview of methods developed for assessing NCS vulnerabilities and the corresponding mitigation strategies. Furthermore, we emphasize the importance of probabilistic risk metrics to model vulnerabilities under adversaries with imperfect process knowledge. The article concludes by outlining promising directions for future research.

This paper presents a novel observer-based approach to detect and isolate faulty sensors in nonlinear systems. The proposed sensor fault detection and isolation (s-FDI) method applies to a general class of nonlinear systems. Our focus is on s-FDI for two types of faults: complete failure and sensor degradation. The key aspect of this approach lies in the use of a neural network-based Kazantzis-Kravaris/Luenberger (KKL) observer. The neural network is trained to learn the dynamics of the observer, enabling accurate output predictions of the system. Sensor faults are detected by comparing the actual output measurements with the predicted values. If the difference surpasses a theoretical threshold, a sensor fault is detected. To identify and isolate which sensor is faulty, we compare the numerical difference of each sensor measurement with an empirically derived threshold. We derive both theoretical and empirical thresholds for detection and isolation, respectively. Notably, the proposed approach is robust to measurement noise and system uncertainties. Its effectiveness is demonstrated through numerical simulations of sensor faults in a network of Kuramoto oscillators.
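The detect-then-isolate logic described above can be sketched as a residual test. This is a simplified illustration, not the paper's implementation: the predicted outputs are taken as given (in the paper they come from the learned KKL observer), the Euclidean norm for detection is an assumption, and the threshold values and the name `detect_and_isolate` are hypothetical.

```python
import math


def detect_and_isolate(measured, predicted, detect_threshold, isolate_thresholds):
    """Return (fault_detected, indices_of_suspect_sensors).

    measured, predicted: per-sensor output values at one time instant
    detect_threshold:    bound on the residual norm (assumed Euclidean here)
    isolate_thresholds:  one per-sensor bound on the absolute residual
    """
    residuals = [m - p for m, p in zip(measured, predicted)]
    residual_norm = math.sqrt(sum(r * r for r in residuals))
    detected = residual_norm > detect_threshold
    if not detected:
        return False, []
    # Isolation: flag each sensor whose own residual exceeds its threshold.
    faulty = [
        i for i, r in enumerate(residuals) if abs(r) > isolate_thresholds[i]
    ]
    return True, faulty


if __name__ == "__main__":
    y_measured = [1.0, 2.0, 5.0]     # third sensor is off
    y_predicted = [1.05, 1.95, 3.0]
    print(detect_and_isolate(y_measured, y_predicted, 0.5, [0.3, 0.3, 0.3]))
```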

15 Mar 2024

This paper considers risk-averse learning in convex games involving multiple agents that aim to minimize their individual risk of incurring significantly high costs. Specifically, the agents adopt the conditional value at risk (CVaR) as a risk measure with possibly different risk levels. To solve this problem, we propose a first-order risk-averse learning algorithm, in which the CVaR gradient estimate depends on an estimate of the Value at Risk (VaR) combined with the gradient of the stochastic cost function. Although estimation of the CVaR gradients using finitely many samples is generally biased, we show that the accumulated error of the CVaR gradient estimates is bounded with high probability. Moreover, assuming that the risk-averse game is strongly monotone, we show that the proposed algorithm converges to the risk-averse Nash equilibrium. We present numerical experiments on a Cournot game example to illustrate the performance of the proposed method.
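As a rough illustration of how a VaR estimate feeds into a CVaR gradient estimate, the sketch below uses the standard Rockafellar-Uryasev sample formulas: CVaR is VaR plus the scaled mean excess over VaR, and the gradient averages the cost gradients of samples whose cost exceeds VaR. This is a generic estimator, not necessarily the one analyzed in the paper; the function names and the scalar-gradient setup are assumptions.

```python
import math


def empirical_var(costs, alpha):
    """Empirical alpha-quantile of the costs (the VaR estimate)."""
    ordered = sorted(costs)
    # Small tolerance guards against floating-point overshoot in alpha * n.
    k = math.ceil(alpha * len(ordered) - 1e-12)
    k = min(max(k, 1), len(ordered))
    return ordered[k - 1]


def empirical_cvar(costs, alpha):
    """CVaR = VaR + E[(cost - VaR)^+] / (1 - alpha), on the sample."""
    var = empirical_var(costs, alpha)
    excess = sum(max(c - var, 0.0) for c in costs)
    return var + excess / ((1.0 - alpha) * len(costs))


def empirical_cvar_gradient(costs, grads, alpha):
    """Sample CVaR gradient: scaled sum of gradients in the tail above VaR.

    grads[i] is the (scalar) gradient of the i-th sampled cost with
    respect to the agent's action.
    """
    var = empirical_var(costs, alpha)
    tail_sum = sum(g for c, g in zip(costs, grads) if c > var)
    return tail_sum / ((1.0 - alpha) * len(costs))


if __name__ == "__main__":
    sample_costs = [float(c) for c in range(1, 11)]
    print(empirical_var(sample_costs, 0.8), empirical_cvar(sample_costs, 0.8))
```

With finitely many samples the VaR estimate is itself noisy, which is one source of the bias in the gradient estimate that the abstract refers to.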

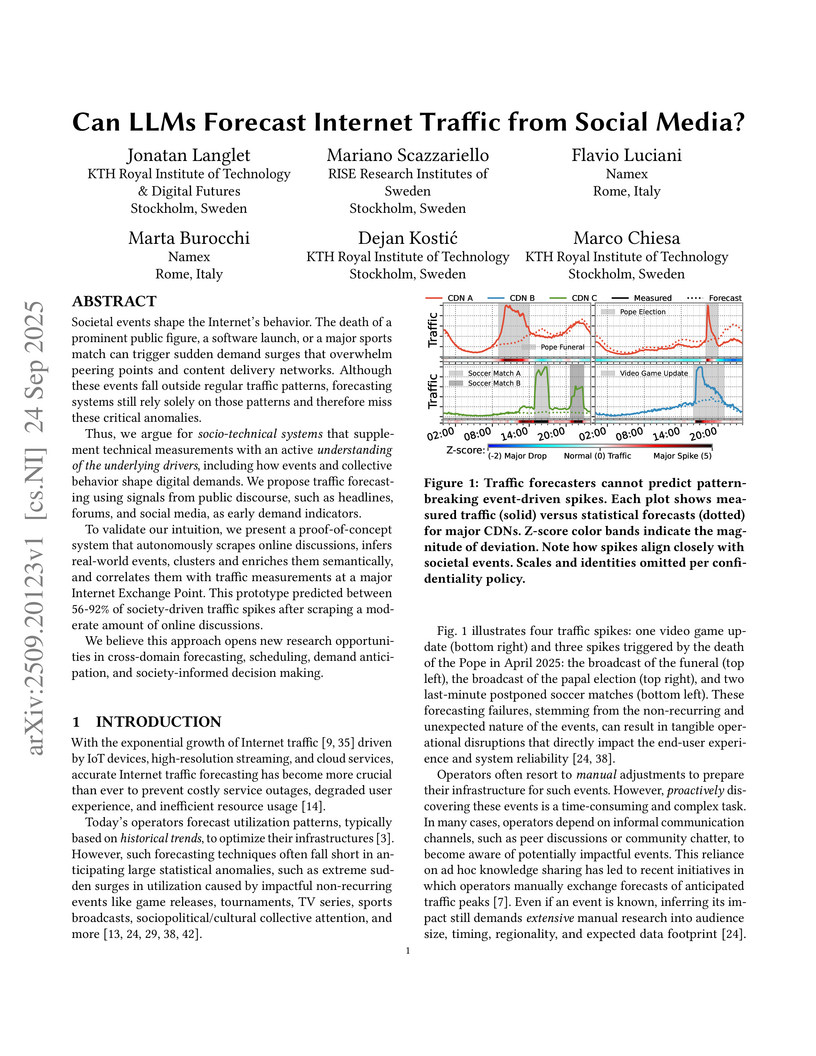

Societal events shape the Internet's behavior. The death of a prominent public figure, a software launch, or a major sports match can trigger sudden demand surges that overwhelm peering points and content delivery networks. Although these events fall outside regular traffic patterns, forecasting systems still rely solely on those patterns and therefore miss these critical anomalies.

Thus, we argue for socio-technical systems that supplement technical measurements with an active understanding of the underlying drivers, including how events and collective behavior shape digital demands. We propose traffic forecasting using signals from public discourse, such as headlines, forums, and social media, as early demand indicators.

To validate our intuition, we present a proof-of-concept system that autonomously scrapes online discussions, infers real-world events, clusters and enriches them semantically, and correlates them with traffic measurements at a major Internet Exchange Point. This prototype predicted between 56% and 92% of society-driven traffic spikes after scraping a moderate amount of online discussions.

We believe this approach opens new research opportunities in cross-domain forecasting, scheduling, demand anticipation, and society-informed decision making.

In this paper, we analyze the problem of optimally allocating resources in a distributed and privacy-preserving manner. We propose a novel distributed optimal resource allocation algorithm with privacy-preserving guarantees, which operates over a directed communication network. Our algorithm allows each node to process and transmit quantized messages, and it combines a distributed quantized average consensus strategy with a privacy-preserving mechanism. We show that the algorithm converges in finite time, and we prove that, under specific conditions on the network topology, nodes are able to preserve the privacy of their initial state. Finally, to illustrate the results, we consider an example where test kits need to be optimally allocated in proportion to the number of infections in a region. The example shows that the proposed privacy-preserving resource allocation algorithm performs well, with an appropriate convergence rate, under privacy guarantees.


12 Sep 2023

Ensuring safety in real-world robotic systems is often challenging due to unmodeled disturbances and noisy sensor measurements. To account for such stochastic uncertainties, many robotic systems leverage probabilistic state estimators such as Kalman filters to obtain a robot's belief, i.e., a probability distribution over possible states. We propose belief control barrier functions (BCBFs) to enable risk-aware control synthesis, leveraging all information provided by state estimators. This allows robots to stay in predefined safety regions with desired confidence under these stochastic uncertainties. BCBFs are general and can be applied to a variety of robotic systems that use extended Kalman filters as state estimators. We demonstrate BCBFs on a quadrotor that is exposed to external disturbances and varying sensing conditions. Our results show improved safety compared to traditional state-based approaches while allowing control frequencies of up to 1 kHz.
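To illustrate what "staying in a safety region with desired confidence" can mean for a Gaussian belief, the sketch below evaluates a scalar chance constraint. For a belief `x ~ N(mu, sigma^2)`, the requirement `P(x <= x_max) >= 1 - delta` is equivalent to `mu + z * sigma <= x_max`, where `z` is the standard normal quantile at `1 - delta`. This is only a building block that a belief-space barrier could use; it is not the BCBF construction from the paper, and `belief_safety_margin` is a hypothetical name.

```python
from statistics import NormalDist


def belief_safety_margin(mu, sigma, x_max, delta):
    """Margin of the chance constraint P(x <= x_max) >= 1 - delta.

    A nonnegative return value means the Gaussian belief N(mu, sigma^2)
    satisfies the constraint; the margin shrinks as uncertainty grows.
    """
    z = NormalDist().inv_cdf(1.0 - delta)  # standard normal quantile
    return x_max - (mu + z * sigma)


if __name__ == "__main__":
    # Same mean, growing uncertainty: the margin decreases.
    for sigma in (0.1, 0.5, 1.0):
        print(sigma, belief_safety_margin(mu=0.0, sigma=sigma, x_max=1.0, delta=0.05))
```

Because the margin depends on both the mean and the standard deviation, a controller that uses it reacts to degraded sensing as well as to the estimated position, which a purely state-based constraint cannot do.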

21 Jul 2024

We study the split Conformal Prediction method when applied to Markovian data. We quantify the gap in terms of coverage induced by the correlations in the data (compared to exchangeable data). This gap strongly depends on the mixing properties of the underlying Markov chain, and we prove that it typically scales as $t_{\mathrm{mix}}\ln(n)/n$ (where $t_{\mathrm{mix}}$ is the mixing time of the chain). We also derive upper bounds on the impact of the correlations on the size of the prediction set. We then present K-split CP, a method that consists in thinning the calibration dataset and that adapts to the mixing properties of the chain. Its coverage gap is reduced to $t_{\mathrm{mix}}/(n\ln(n))$ without substantially affecting the size of the prediction set. Finally, we test our algorithms on synthetic and real-world datasets.
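For readers unfamiliar with the calibration step, here is a minimal sketch of the standard split conformal quantile, together with a naive thinning of the calibration scores. The quantile rule (rank `ceil((n + 1)(1 - alpha))`) is the usual one for exchangeable data. The thinning function simply keeps every `k`-th score and is only a stand-in for the idea of reducing correlation; how K-split CP actually chooses and uses its splits is not described in the abstract, so `thin` and `k` are assumptions.

```python
import math


def conformal_quantile(scores, alpha):
    """Split-conformal threshold from calibration nonconformity scores.

    Returns the ceil((n + 1) * (1 - alpha))-th smallest score, or infinity
    when the calibration set is too small for the requested level.
    """
    n = len(scores)
    # Small tolerance guards against floating-point overshoot before ceil.
    rank = math.ceil((n + 1) * (1.0 - alpha) - 1e-9)
    if rank > n:
        return math.inf
    return sorted(scores)[rank - 1]


def thin(scores, k):
    """Keep every k-th calibration score (scores assumed in time order)."""
    return scores[::k]


if __name__ == "__main__":
    calibration_scores = [float(s) for s in range(1, 100)]
    print(conformal_quantile(calibration_scores, alpha=0.1))
    print(conformal_quantile(thin(calibration_scores, 3), alpha=0.1))
```

A new point is then included in the prediction set whenever its nonconformity score is at most the returned threshold.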

We consider risk-averse learning in repeated unknown games where the goal of the agents is to minimize their individual risk of incurring significantly high cost. Specifically, the agents use the conditional value at risk (CVaR) as a risk measure and rely on bandit feedback in the form of the cost values of the selected actions at every episode to estimate their CVaR values and update their actions. A major challenge in using bandit feedback to estimate CVaR is that the agents can only access their own cost values, which, however, depend on the actions of all agents. To address this challenge, we propose a new risk-averse learning algorithm with momentum that utilizes the full historical information on the cost values. We show that this algorithm achieves sub-linear regret and matches the best known algorithms in the literature. We provide numerical experiments for a Cournot game that show that our method outperforms existing methods.

07 Aug 2025

Distributed Quantized Average Consensus in Open Multi-Agent Systems with Dynamic Communication Links


In this paper, we focus on the distributed quantized average consensus problem in open multi-agent systems whose communication links change dynamically over time. We refer to open multi-agent systems with this characteristic as *open dynamic multi-agent systems*. We present a distributed algorithm that enables active nodes in the open dynamic multi-agent system to calculate the quantized average of their initial states. Our algorithm has the following advantages: (i) it ensures efficient communication by enabling nodes to exchange quantized-valued messages, and (ii) it exhibits finite-time convergence to the desired solution. We establish the correctness of our algorithm and present necessary and sufficient topological conditions for it to successfully solve the quantized average consensus problem in an open dynamic multi-agent system. Finally, we illustrate the performance of our algorithm with numerical simulations.

Neural networks that can capture key principles underlying brain computation offer exciting new opportunities for developing artificial intelligence and brain-like computing algorithms. Such networks remain biologically plausible while leveraging localized forms of synaptic learning rules and modular network architecture found in the neocortex. Compared to backprop-driven deep learning approaches, they provide more suitable models for deployment on neuromorphic hardware and have greater potential for scalability on large-scale computing clusters. The development of such brain-like neural networks depends on having a learning procedure that can build effective internal representations from data. In this work, we introduce and evaluate a brain-like neural network model capable of unsupervised representation learning. It builds on the Bayesian Confidence Propagation Neural Network (BCPNN), which has earlier been implemented as abstract as well as biophysically detailed recurrent attractor neural networks explaining various cortical associative memory phenomena. Here we developed a feedforward BCPNN model to perform representation learning by incorporating a range of brain-like attributes derived from neocortical circuits such as cortical columns, divisive normalization, Hebbian synaptic plasticity, structural plasticity, sparse activity, and sparse patchy connectivity. The model was tested on a diverse set of popular machine learning benchmarks: grayscale images (MNIST, F-MNIST), RGB natural images (SVHN, CIFAR-10), QSAR (MUV, HIV), and malware detection (EMBER). When a linear classifier was used to predict the class labels, the model performed competitively with conventional multi-layer perceptrons and other state-of-the-art brain-like neural networks.

We consider the problem of learning an ε-optimal policy in controlled dynamical systems with low-rank latent structure. For this problem, we present LoRa-PI (Low-Rank Policy Iteration), a model-free learning algorithm alternating between policy improvement and policy evaluation steps. In the latter, the algorithm estimates the low-rank matrix corresponding to the (state, action) value function of the current policy using the following two-phase procedure. The entries of the matrix are first sampled uniformly at random to estimate, via a spectral method, the leverage scores of its rows and columns. These scores are then used to extract a few important rows and columns whose entries are further sampled. The algorithm exploits these new samples to complete the matrix estimation using a CUR-like method. For this leveraged matrix estimation procedure, we establish entry-wise guarantees that, remarkably, do not depend on the coherence of the matrix but only on its spikiness. These guarantees imply that LoRa-PI learns an ε-optimal policy using $O\!\left(\frac{S+A}{\mathrm{poly}(1-\gamma)\,\varepsilon^{2}}\right)$ samples, where S (resp. A) denotes the number of states (resp. actions) and γ the discount factor. Our algorithm achieves this order-optimal (in S, A and ε) sample complexity under milder conditions than those assumed in previously proposed approaches.

19 Jun 2025

Over-the-air computation (OAC) leverages the physical superposition property of wireless multiple access channels (MACs) to compute functions while communication occurs, enabling scalable and low-latency processing in distributed networks. While analog OAC methods suffer from noise sensitivity and hardware constraints, existing digital approaches are often limited in design complexity, which may hinder scalability and fail to exploit spectral efficiency fully. This two-part paper revisits and extends the ChannelComp framework, a general methodology for computing arbitrary finite-valued functions using digital modulation. In Part I, we develop a novel constellation design approach that is aware of the noise distribution and formulates the encoder design as a max-min optimization problem using noise-tailored distance metrics. Our design supports noise models, including Gaussian, Laplace, and heavy-tailed distributions. We further demonstrate that, for heavy-tailed noise, the optimal ChannelComp setup coincides with the solution to the corresponding max-min criterion for the channel noise with heavy-tailed distributions. Numerical experiments confirm that our noise-aware design achieves a substantially lower mean-square error than leading digital OAC methods over noisy MACs. In Part II, we consider a constellation design with a quantization-based sampling scheme to enhance modulation scalability and computational accuracy for large-scale digital OAC.
