Ford

29 Jul 2025

This paper develops a game-theoretic decision-making framework for autonomous driving in multi-agent scenarios. A novel hierarchical game-based decision framework is developed for the ego vehicle. This framework features an interaction graph, which characterizes the interaction relationships between the ego and its surrounding traffic agents (including AVs, human driven vehicles, pedestrians, and bicycles, and others), and enables the ego to smartly select a limited number of agents as its game players. Compared to the standard multi-player games, where all surrounding agents are considered as game players, the hierarchical game significantly reduces the computational complexity. In addition, compared to pairwise games, the most popular approach in the literature, the hierarchical game promises more efficient decisions for the ego (in terms of less unnecessary waiting and yielding). To further reduce the computational cost, we then propose an improved hierarchical game, which decomposes the hierarchical game into a set of sub-games. Decision safety and efficiency are analyzed in both hierarchical games. Comprehensive simulation studies are conducted to verify the effectiveness of the proposed frameworks, with an intersection-crossing scenario as a case study.

We describe a Deep-Geometric Localizer that is able to estimate the full 6

Degree of Freedom (DoF) global pose of the camera from a single image in a

previously mapped environment. Our map is a topo-metric one, with discrete

topological nodes whose 6 DoF poses are known. Each topo-node in our map also

comprises of a set of points, whose 2D features and 3D locations are stored as

part of the mapping process. For the mapping phase, we utilise a stereo camera

and a regular stereo visual SLAM pipeline. During the localization phase, we

take a single camera image, localize it to a topological node using Deep

Learning, and use a geometric algorithm (PnP) on the matched 2D features (and

their 3D positions in the topo map) to determine the full 6 DoF globally

consistent pose of the camera. Our method divorces the mapping and the

localization algorithms and sensors (stereo and mono), and allows accurate 6

DoF pose estimation in a previously mapped environment using a single camera.

With potential VR/AR and localization applications in single camera devices

such as mobile phones and drones, our hybrid algorithm compares favourably with

the fully Deep-Learning based Pose-Net that regresses pose from a single image

in simulated as well as real environments.

The task of 2D human pose estimation is challenging as the number of

keypoints is typically large (~ 17) and this necessitates the use of robust

neural network architectures and training pipelines that can capture the

relevant features from the input image. These features are then aggregated to

make accurate heatmap predictions from which the final keypoints of human body

parts can be inferred. Many papers in literature use CNN-based architectures

for the backbone, and/or combine it with a transformer, after which the

features are aggregated to make the final keypoint predictions [1]. In this

paper, we consider the recently proposed Bottleneck Transformers [2], which

combine CNN and multi-head self attention (MHSA) layers effectively, and we

integrate it with a Transformer encoder and apply it to the task of 2D human

pose estimation. We consider different backbone architectures and pre-train

them using the DINO self-supervised learning method [3], this pre-training is

found to improve the overall prediction accuracy. We call our model BTranspose,

and experiments show that on the COCO validation set, our model achieves an AP

of 76.4, which is competitive with other methods such as [1] and has fewer

network parameters. Furthermore, we also present the dependencies of the final

predicted keypoints on both the MHSA block and the Transformer encoder layers,

providing clues on the image sub-regions the network attends to at the mid and

high levels.

In the burgeoning field of intelligent transportation systems, enhancing vehicle-driver interaction through facial attribute recognition, such as facial expression, eye gaze, age, etc., is of paramount importance for safety, personalization, and overall user experience. However, the scarcity of comprehensive large-scale, real-world datasets poses a significant challenge for training robust multi-task models. Existing literature often overlooks the potential of synthetic datasets and the comparative efficacy of state-of-the-art vision foundation models in such constrained settings. This paper addresses these gaps by investigating the utility of synthetic datasets for training complex multi-task models that recognize facial attributes of passengers of a vehicle, such as gaze plane, age, and facial expression. Utilizing transfer learning techniques with both pre-trained Vision Transformer (ViT) and Residual Network (ResNet) models, we explore various training and adaptation methods to optimize performance, particularly when data availability is limited. We provide extensive post-evaluation analysis, investigating the effects of synthetic data distributions on model performance in in-distribution data and out-of-distribution inference. Our study unveils counter-intuitive findings, notably the superior performance of ResNet over ViTs in our specific multi-task context, which is attributed to the mismatch in model complexity relative to task complexity. Our results highlight the challenges and opportunities for enhancing the use of synthetic data and vision foundation models in practical applications.

Engineering design is traditionally performed by hand: an expert makes design proposals based on past experience, and these proposals are then tested for compliance with certain target specifications. Testing for compliance is performed first by computer simulation using what is called a discipline model. Such a model can be implemented by a finite element analysis, multibody systems approach, etc. Designs passing this simulation are then considered for physical prototyping. The overall process may take months, and is a significant cost in practice. We have developed a Bayesian optimization system for partially automating this process by directly optimizing compliance with the target specification with respect to the design parameters. The proposed method is a general framework for computing a generalized inverse of a high-dimensional non-linear function that does not require e.g. gradient information, which is often unavailable from discipline models. We furthermore develop a two-tier convergence criterion based on (i) convergence to a solution optimally satisfying all specified design criteria, or (ii) convergence to a minimum-norm solution. We demonstrate the proposed approach on a vehicle chassis design problem motivated by an industry setting using a state-of-the-art commercial discipline model. We show that the proposed approach is general, scalable, and efficient, and that the novel convergence criteria can be implemented straightforwardly based on existing concepts and subroutines in popular Bayesian optimization software packages.

Reaction-diffusion systems are ubiquitous in nature and in engineering applications, and are often modeled using a non-linear system of governing equations. While robust numerical methods exist to solve them, deep learning-based reduced ordermodels (ROMs) are gaining traction as they use linearized dynamical models to advance the solution in time. One such family of algorithms is based on Koopman theory, and this paper applies this numerical simulation strategy to reaction-diffusion systems. Adversarial and gradient losses are introduced, and are found to robustify the predictions. The proposed model is extended to handle missing training data as well as recasting the problem from a control perspective. The efficacy of these developments are demonstrated for two different reaction-diffusion problems: (1) the Kuramoto-Sivashinsky equation of chaos and (2) the Turing instability using the Gray-Scott model.

We present a Deep Learning based system for the twin tasks of localization

and obstacle avoidance essential to any mobile robot. Our system learns from

conventional geometric SLAM, and outputs, using a single camera, the

topological pose of the camera in an environment, and the depth map of

obstacles around it. We use a CNN to localize in a topological map, and a

conditional VAE to output depth for a camera image, conditional on this

topological location estimation. We demonstrate the effectiveness of our

monocular localization and depth estimation system on simulated and real

datasets.

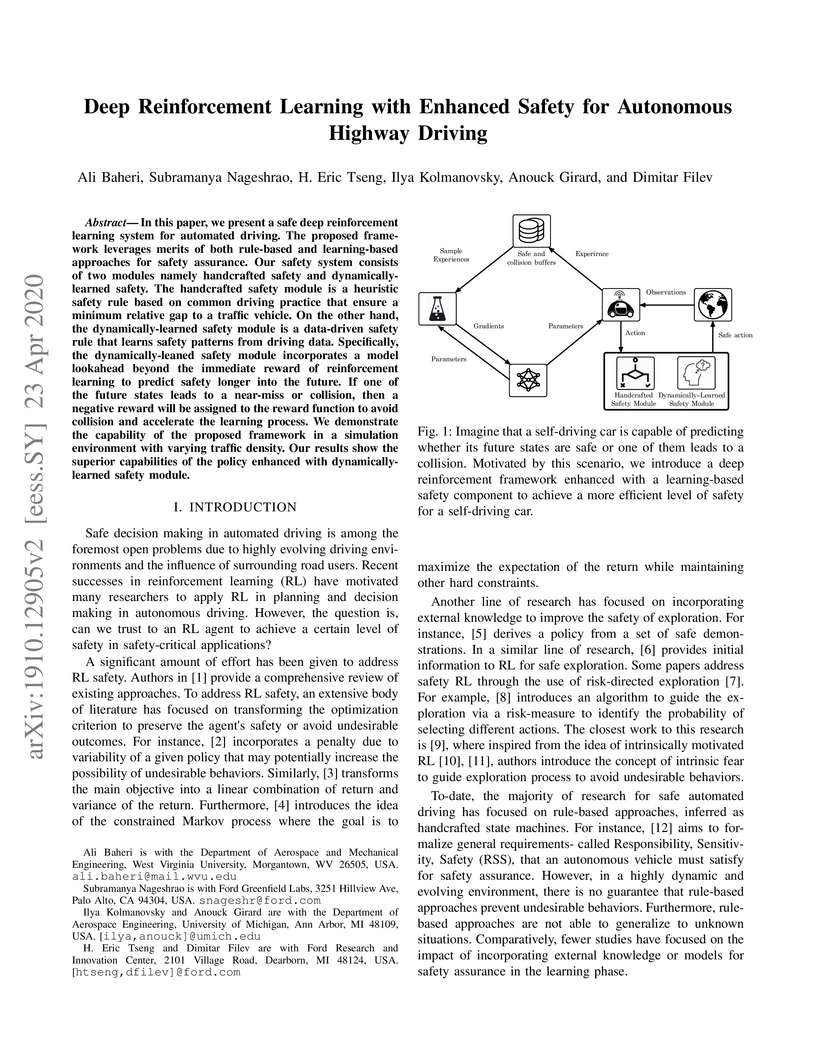

In this paper, we present a safe deep reinforcement learning system for automated driving. The proposed framework leverages merits of both rule-based and learning-based approaches for safety assurance. Our safety system consists of two modules namely handcrafted safety and dynamically-learned safety. The handcrafted safety module is a heuristic safety rule based on common driving practice that ensure a minimum relative gap to a traffic vehicle. On the other hand, the dynamically-learned safety module is a data-driven safety rule that learns safety patterns from driving data. Specifically, the dynamically-leaned safety module incorporates a model lookahead beyond the immediate reward of reinforcement learning to predict safety longer into the future. If one of the future states leads to a near-miss or collision, then a negative reward will be assigned to the reward function to avoid collision and accelerate the learning process. We demonstrate the capability of the proposed framework in a simulation environment with varying traffic density. Our results show the superior capabilities of the policy enhanced with dynamically-learned safety module.

Dynamical systems are ubiquitous and are often modeled using a non-linear

system of governing equations. Numerical solution procedures for many dynamical

systems have existed for several decades, but can be slow due to

high-dimensional state space of the dynamical system. Thus, deep learning-based

reduced order models (ROMs) are of interest and one such family of algorithms

along these lines are based on the Koopman theory. This paper extends a

recently developed adversarial Koopman model (Balakrishnan \& Upadhyay,

arXiv:2006.05547) to stochastic space, where the Koopman operator applies on

the probability distribution of the latent encoding of an encoder.

Specifically, the latent encoding of the system is modeled as a Gaussian, and

is advanced in time by using an auxiliary neural network that outputs two

Koopman matrices Kμ and Kσ. Adversarial and gradient losses

are used and this is found to lower the prediction errors. A reduced Koopman

formulation is also undertaken where the Koopman matrices are assumed to have a

tridiagonal structure, and this yields predictions comparable to the baseline

model with full Koopman matrices. The efficacy of the stochastic Koopman model

is demonstrated on different test problems in chaos, fluid dynamics,

combustion, and reaction-diffusion models. The proposed model is also applied

in a setting where the Koopman matrices are conditioned on other input

parameters for generalization and this is applied to simulate the state of a

Lithium-ion battery in time. The Koopman models discussed in this study are

very promising for the wide range of problems considered.

There are no more papers matching your filters at the moment.