Gdańsk University of Technology

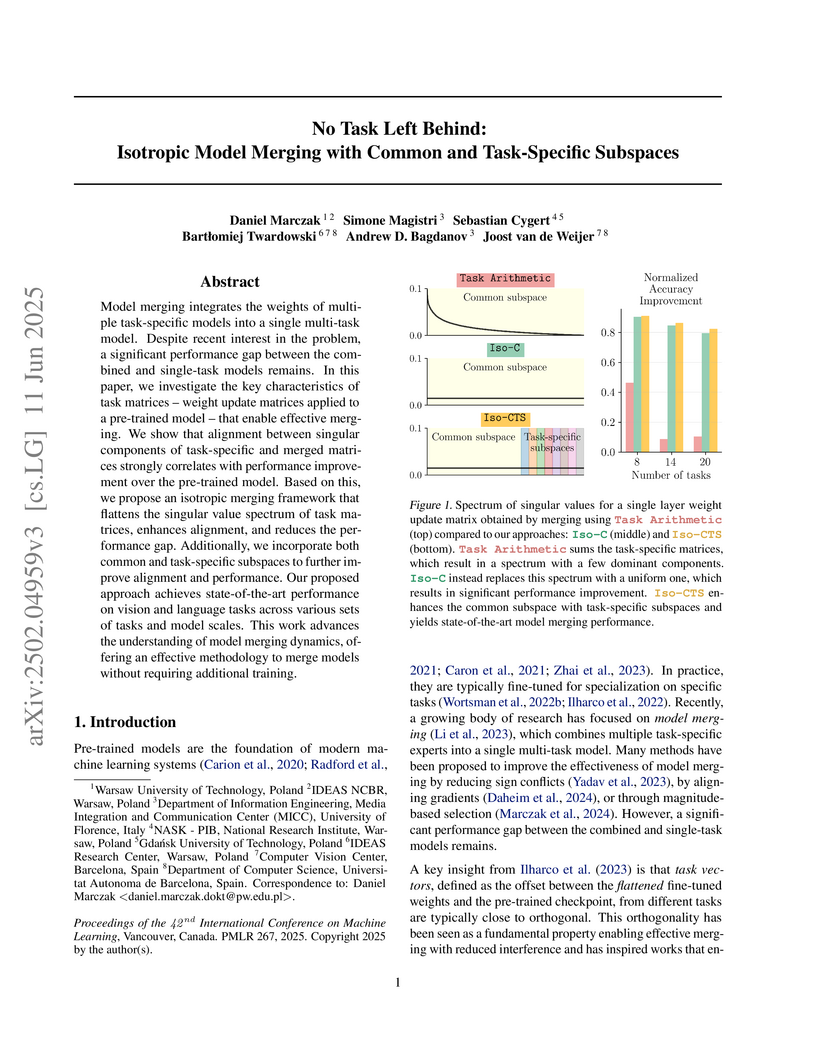

Researchers from Warsaw University of Technology and other European institutions developed Isotropic Model Merging, a framework that integrates specialized model weights into a single multi-task model by focusing on subspace alignment and isotropic singular value spectra. The approach achieves state-of-the-art performance across diverse vision and language tasks while maintaining efficiency.

MagMax introduces a model merging strategy for continual learning by combining sequential fine-tuning with maximum magnitude weight selection to mitigate catastrophic forgetting in large pre-trained models. The method consistently outperforms existing continual learning approaches and other merging strategies, achieving an average 2.1% improvement in class-incremental learning, while also demonstrating that sequential fine-tuning universally enhances model merging performance.
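
The selection step named in this summary can be illustrated with a minimal sketch. It assumes each model is a flat list of floats and that "maximum magnitude" is measured on the change from the pre-trained weights; the function name `merge_max_magnitude` and the toy numbers are hypothetical and not taken from the paper.

```python
def merge_max_magnitude(pretrained, finetuned_models):
    """Per parameter, keep the change from the pre-trained value
    that has the largest absolute value (assumed reading of the summary)."""
    merged = []
    for i, base in enumerate(pretrained):
        # How far each fine-tuned model moved this parameter.
        deltas = [model[i] - base for model in finetuned_models]
        # Select the change with the largest magnitude.
        chosen = max(deltas, key=abs)
        merged.append(base + chosen)
    return merged


# Toy example: two fine-tuned checkpoints of a three-parameter model.
pretrained = [0.0, 1.0, -1.0]
after_task_1 = [0.5, 1.0, -1.2]
after_task_2 = [0.25, 0.0, -1.25]
merged = merge_max_magnitude(pretrained, [after_task_1, after_task_2])
print(merged)
```

In the setting described above, the checkpoints would come from sequential fine-tuning, so `after_task_2` would be trained starting from `after_task_1`; the sketch covers only the merging step.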

07 Oct 2025

Certifying random number generators is challenging, especially in security-critical fields like cryptography. Here, we demonstrate a measurement-device-independent quantum random number generator (MDI-QRNG) using high-dimensional photonic path states. Our setup extends the standard qubit beam-splitter QRNG to a three-output version with tunable fiber-optic interferometers acting as tunable beam splitters and superconducting detectors. This setup generates over 1.2 bits per round and 1.77 Mbits per second of certifiably secure private randomness without requiring any trust in the measurement apparatus, a critical requirement for the security of real-world cryptographic applications. Our results demonstrate certifiably secure high-dimensional quantum random-number generation, paving the way for practical, scalable QRNGs without the need for complex devices.

CNRS, University of Pittsburgh, University of Chicago, University of Oxford, INFN, Yale University, Northwestern University, CONICET, CERN, Argonne National Laboratory, University of Wisconsin-Madison, Purdue University, University of Arizona, University of Rochester, Deutsches Elektronen-Synchrotron DESY, CEA, University of Glasgow, Universidad Complutense de Madrid, University of Sussex, Universidad Nacional de La Plata, University of Tartu, University Paris-Saclay, Jagiellonian University, University of Trieste, Oklahoma State University, University of Gdańsk, Gdańsk University of Technology, University of Hawai’i, Georg-August Universität Göttingen, University of Dortmund, IFT UAM/CSIC, University of Łódź, KBFI, Université catholique de Louvain, Université Grenoble Alpes, Sapienza Università di Roma, Università degli Studi di Milano-Bicocca, Università di Bologna

Some of the most astonishing and prominent properties of Quantum Mechanics, such as entanglement and Bell nonlocality, have only been studied extensively in dedicated low-energy laboratory setups. The feasibility of these studies in the high-energy regime explored by particle colliders was only recently shown and has gathered the attention of the scientific community. For the range of particles and fundamental interactions involved, particle colliders provide a novel environment where quantum information theory can be probed, with energies exceeding by about 12 orders of magnitude those employed in dedicated laboratory setups. Furthermore, collider detectors have inherent advantages in performing certain quantum information measurements, and allow for the reconstruction of the state of the system under consideration via quantum state tomography. Here, we elaborate on the potential, challenges, and goals of this innovative and rapidly evolving line of research and discuss its expected impact on both quantum information theory and high-energy physics.

The article presents a new multi-label comprehensive image dataset from flexible endoscopy, colonoscopy and capsule endoscopy, named ERS. The collection has been labeled according to the full medical specification of 'Minimum Standard Terminology 3.0' (MST 3.0), describing all possible findings in the gastrointestinal tract (104 possible labels), extended with an additional 19 labels useful in common machine learning applications.

The dataset contains around 6000 precisely and 115,000 approximately labeled frames from endoscopy videos, 3600 precise and 22,600 approximate segmentation masks, and 1.23 million unlabeled frames from flexible and capsule endoscopy videos. The labeled data cover almost entirely the MST 3.0 standard. The data came from 1520 videos of 1135 patients.

Additionally, this paper proposes and describes four exemplary experiments on gastrointestinal image classification performed using the created dataset. The obtained results indicate the high usefulness and flexibility of the dataset in training and testing machine learning algorithms in the field of endoscopic data analysis.

16 May 2024

This paper presents a comprehensive overview of Quantum Machine Learning (QML) applications in quantitative finance, examining their connection to various use cases. It explores how QML, leveraging quantum computing principles, can be applied to areas such as portfolio optimization, market prediction, option pricing, and risk management, while also detailing current limitations and future research directions.

The very shallow marine basin of Puck Lagoon in the southern Baltic Sea, on the northern coast of Poland, hosts valuable benthic habitats and cultural heritage sites. These include, among others, protected Zostera marina meadows, one of the Baltic's major medieval harbours, a ship graveyard, and likely other submerged features that are yet to be discovered. Prior to this project, no comprehensive high-resolution remote sensing data were available for this area. This article describes the first Digital Elevation Models (DEMs) derived from a combination of airborne bathymetric LiDAR, multibeam echosounder, airborne photogrammetry and satellite imagery. These datasets also include multibeam echosounder backscatter and LiDAR intensity, allowing determination of the character and properties of the seafloor. Combined, these datasets are a vital resource for assessing and understanding seafloor morphology, benthic habitats, cultural heritage, and submerged landscapes. Given the significance of Puck Lagoon's hydrographical, ecological, geological, and archaeological environs, the high-resolution bathymetry acquired by our project can provide the foundation for sustainable management and informed decision-making for this area of interest.

30 Jul 2025

We introduce a new methodology for the analysis of the phenomenon of chaotic itinerancy in a dynamical system using the notion of entropy and a clustering algorithm. We determine systems likely to experience chaotic itinerancy by means of local Shannon entropy and local permutation entropy. In such systems, we find quasi-stable states (attractor ruins) and chaotic transition states using a density-based clustering algorithm. Our approach then focuses on examining the chaotic itinerancy dynamics through the characterization of residence times within these states and chaotic transitions between them with the help of some statistical tests. The effectiveness of these methods is demonstrated on two systems that serve as well-known models exhibiting chaotic itinerancy: globally coupled logistic maps (GCM) and mutually coupled Gaussian maps. In particular, we conduct comprehensive computations for a large number of parameters in the GCM system and algorithmically identify itinerant dynamics observed previously by Kaneko in numerical simulations as the coherent and intermittent phases.
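
As a rough illustration of one of the quantities mentioned above, the following sketch computes permutation entropy (the Shannon entropy of ordinal patterns) and evaluates it over sliding windows. Interpreting "local" as a sliding window is an assumption, as are the function names, the window length, the pattern order, and the single logistic map used as test input; the paper's exact definitions are not given in this summary.

```python
import math
from collections import Counter


def permutation_entropy(series, order=3):
    """Shannon entropy (in bits) of the ordinal patterns of length `order`."""
    patterns = Counter()
    for start in range(len(series) - order + 1):
        window = series[start:start + order]
        # Ordinal pattern: the index order that sorts the window.
        pattern = tuple(sorted(range(order), key=lambda k: window[k]))
        patterns[pattern] += 1
    total = sum(patterns.values())
    return -sum((c / total) * math.log2(c / total) for c in patterns.values())


def local_permutation_entropy(series, window=50, order=3):
    """Permutation entropy over sliding windows (assumed meaning of 'local')."""
    return [
        permutation_entropy(series[i:i + window], order)
        for i in range(len(series) - window + 1)
    ]


# Hypothetical input: one logistic map x -> 4x(1-x), not the coupled systems of the paper.
x, orbit = 0.3, []
for _ in range(200):
    orbit.append(x)
    x = 4.0 * x * (1.0 - x)

local_values = local_permutation_entropy(orbit)
print(min(local_values), max(local_values))
```

Low values indicate ordered stretches of the trajectory and high values indicate irregular ones, which is the kind of contrast the methodology above relies on to flag candidate systems.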

In this work, we investigate exemplar-free class incremental learning (CIL) with knowledge distillation (KD) as a regularization strategy, aiming to prevent forgetting. KD-based methods are successfully used in CIL, but they often struggle to regularize the model without access to exemplars of the training data from previous tasks. Our analysis reveals that this issue originates from substantial representation shifts in the teacher network when dealing with out-of-distribution data. This causes large errors in the KD loss component, leading to performance degradation in CIL models. Inspired by recent test-time adaptation methods, we introduce Teacher Adaptation (TA), a method that concurrently updates the teacher and the main models during incremental training. Our method seamlessly integrates with KD-based CIL approaches and allows for consistent enhancement of their performance across multiple exemplar-free CIL benchmarks. The source code for our method is available at this https URL.

In the field of continual learning, models are designed to learn tasks one after the other. While most research has centered on supervised continual learning, there is a growing interest in unsupervised continual learning, which makes use of the vast amounts of unlabeled data. Recent studies have highlighted the strengths of unsupervised methods, particularly self-supervised learning, in providing robust representations. The improved transferability of those representations built with self-supervised methods is often associated with the role played by the multi-layer perceptron projector. In this work, we depart from this observation and reexamine the role of supervision in continual representation learning. We reckon that additional information, such as human annotations, should not deteriorate the quality of representations. Our findings show that supervised models, when enhanced with a multi-layer perceptron head, can outperform self-supervised models in continual representation learning. This highlights the importance of the multi-layer perceptron projector in shaping feature transferability across a sequence of tasks in continual learning. The code is available on GitHub: this https URL.

14 Jul 2025

We reveal key connections between non-locality and advantage in correlation-assisted classical communication. First, using the wire-cutting technique, we provide a Bell inequality tailored to any correlation-assisted bounded classical communication task. The violation of this inequality by a quantum correlation is equivalent to its quantum-assisted advantage in the corresponding communication task. Next, we introduce wire-reading, which leverages the readability of classical messages to demonstrate advantageous assistance of non-local correlations in setups where no such advantage can be otherwise observed. Building on this, we introduce families of classical communication tasks in a Bob-without-input prepare-and-measure scenario, where non-local correlation enhances bounded classical communication while shared randomness assistance yields strictly suboptimal payoff. For the first family of tasks, assistance from any non-local facet leads to optimal payoff, while each task in the second family is tailored to a non-local facet. We reveal quantum advantage in these tasks, including qutrit over qubit entanglement advantage.

We introduce temporal flows on temporal networks, i.e., networks whose links exist only at certain moments of time. Such networks are ephemeral in the sense that no link exists after some time. Our flow model is new and differs from the "flows over time" model, also called "dynamic flows" in the literature. We show that the problem of finding the maximum amount of flow that can pass from a source vertex s to a sink vertex t up to a given time is solvable in polynomial time, even when node buffers are bounded. We then examine mainly the case of unbounded node buffers. We provide a simplified static Time-Extended network (STEG), which is of size polynomial in the input and whose static flow rates are equivalent to the respective temporal flow of the temporal network. Using STEG, we prove that the maximum temporal flow is equal to the minimum temporal s-t cut. We further show that temporal flows can always be decomposed into flows, each of which moves only through a journey, i.e., a directed path whose successive edges have strictly increasing moments of existence. We partially characterise networks with random edge availabilities that tend to eliminate the s-t temporal flow. We then consider mixed temporal networks, which have some edges with specified availabilities and some edges with random availabilities. We show that it is #P-hard to compute the tails and expectations of the maximum temporal flow (which is now a random variable) in a mixed temporal network.
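
The definition of a journey given above can be made concrete with a small check. This is a sketch under assumptions: a walk is represented as a list of hypothetical `(tail, head, time)` triples in traversal order, and the check covers edge chaining and strictly increasing times only, not whether vertices repeat.

```python
def is_journey(timed_edges):
    """Return True if consecutive edges chain head-to-tail and
    their moments of existence strictly increase."""
    for (u1, v1, t1), (u2, v2, t2) in zip(timed_edges, timed_edges[1:]):
        if v1 != u2:
            # The next edge must start where the previous one ended.
            return False
        if t2 <= t1:
            # Times must strictly increase along the journey.
            return False
    return True


# s -> a at time 1, then a -> t at time 3: a valid journey.
print(is_journey([("s", "a", 1), ("a", "t", 3)]))
# Both edges exist only at time 3: not a journey, since times do not strictly increase.
print(is_journey([("s", "a", 3), ("a", "t", 3)]))
```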

24 Apr 2025

Applications such as Device-Independent Quantum Key Distribution (DIQKD) require loophole-free certification of long-distance quantum correlations. However, these distances remain severely constrained by detector inefficiencies and unavoidable transmission losses. To overcome this challenge, we consider parallel repetitions of the recently proposed routed Bell experiments, where transmissions from the source are actively directed either to a nearby or a distant measurement device. We analytically show that the threshold detection efficiency of the distant device (needed to certify non-jointly-measurable measurements, a prerequisite of secure DIQKD) decreases exponentially, optimally, and robustly, following η* = 1/2^N, with the number N of parallel repetitions.
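
To give a feel for the scaling, the short sketch below tabulates the threshold efficiency, reading the formula in the abstract as η* = 1/2^N (consistent with the stated exponential decrease). The function name is hypothetical and the snippet is only arithmetic on that formula, not a model of the experiment.

```python
from fractions import Fraction


def threshold_efficiency(n_repetitions):
    """Threshold detection efficiency 1 / 2**N for N parallel repetitions."""
    return Fraction(1, 2 ** n_repetitions)


# Each extra parallel repetition halves the required efficiency.
for n in range(1, 6):
    eta = threshold_efficiency(n)
    print(f"N = {n}: eta* = {eta} = {float(eta):.5f}")
```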

In this work, we improve the generative replay in a continual learning setting to perform well on challenging scenarios. Current generative rehearsal methods are usually benchmarked on small and simple datasets as they are not powerful enough to generate more complex data with a greater number of classes. We notice that in VAE-based generative replay, this could be attributed to the fact that the generated features are far from the original ones when mapped to the latent space. Therefore, we propose three modifications that allow the model to learn and generate complex data. More specifically, we incorporate the distillation in latent space between the current and previous models to reduce feature drift. Additionally, a latent matching for the reconstruction and original data is proposed to improve generated features alignment. Further, based on the observation that the reconstructions are better for preserving knowledge, we add the cycling of generations through the previously trained model to make them closer to the original data. Our method outperforms other generative replay methods in various scenarios. Code available at this https URL

Exemplar-Free Class Incremental Learning (EFCIL) tackles the problem of training a model on a sequence of tasks without access to past data. Existing state-of-the-art methods represent classes as Gaussian distributions in the feature extractor's latent space, enabling Bayes classification or training the classifier by replaying pseudo features. However, we identify two critical issues that compromise their efficacy when the feature extractor is updated on incremental tasks. First, they do not consider that classes' covariance matrices change and must be adapted after each task. Second, they are susceptible to a task-recency bias caused by dimensionality collapse occurring during training. In this work, we propose AdaGauss -- a novel method that adapts covariance matrices from task to task and mitigates the task-recency bias owing to the additional anti-collapse loss function. AdaGauss yields state-of-the-art results on popular EFCIL benchmarks and datasets when training from scratch or starting from a pre-trained backbone. The code is available at: this https URL.

27 Jun 2025

The exploration of entanglement and Bell non-locality among multi-particle quantum systems offers a profound avenue for testing and understanding the limits of quantum mechanics and local real hidden variable theories. In this work, we examine non-local correlations among three massless spin-1/2 particles generated from the three-body decay of a massive particle, utilizing a framework based on general four-fermion interactions. By analyzing several inequalities, we address the detection of deviations from quantum mechanics as well as violations of two key hidden variable theories: fully local-real and bipartite local-real theories. Our approach encompasses the standard Mermin inequality and the tight 4×4×2 inequality, providing a comprehensive framework for probing three-partite non-local correlations. Our findings provide deeper insights into the boundaries of classical and quantum theories in three-particle systems, advancing the understanding of non-locality in particle decays and its relevance to particle physics and quantum foundations.

We consider the following generalization of the binary search problem. A search strategy is required to locate an unknown target node t in a given tree T. Upon querying a node v of the tree, the strategy receives as a reply an indication of the connected component of T∖{v} containing the target t. The cost of querying each node is given by a known non-negative weight function, and the considered objective is to minimize the total query cost for a worst-case choice of the target. Designing an optimal strategy for a weighted tree search instance is known to be strongly NP-hard, in contrast to the unweighted variant of the problem which can be solved optimally in linear time. Here, we show that weighted tree search admits a quasi-polynomial time approximation scheme: for any 0 < ε < 1, there exists a (1+ε)-approximation strategy with a computation time of n^{O(log n / ε^2)}. Thus, the problem is not APX-hard, unless NP ⊆ DTIME(n^{O(log n)}). By applying a generic reduction, we obtain as a corollary that the studied problem admits a polynomial-time O(log n)-approximation. This improves previous Ô(log n)-approximation approaches, where the Ô-notation disregards O(poly log log n)-factors.
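
The query model described above can be sketched as a small oracle. The representation is an assumption: the tree is a hypothetical adjacency dictionary, the component of T∖{v} containing the target is identified by the neighbour of v that lies in it, and a query on the target itself returns `None`. The sketch shows only what a single query reveals, not the approximation scheme.

```python
from collections import deque


def query(tree, v, target):
    """Reply to a query on node v: None if v is the target, otherwise the
    neighbour of v whose component of T minus v contains the target."""
    if v == target:
        return None
    for neighbour in tree[v]:
        # Explore the component hanging off this neighbour, never crossing v.
        seen = {v, neighbour}
        queue = deque([neighbour])
        while queue:
            node = queue.popleft()
            if node == target:
                return neighbour
            for nxt in tree[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
    raise ValueError("target is not in the tree")


# Path a - b - c - d, queried at b with the target at d.
tree = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
print(query(tree, "b", "d"))
```

On a path this reduces to ordinary binary search: the reply says whether the target lies to the left or to the right of the queried node.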

Test-time adaptation is a promising research direction that allows the source model to adapt itself to changes in data distribution without any supervision. Yet, current methods are usually evaluated on benchmarks that are only a simplification of real-world scenarios. Hence, we propose to validate test-time adaptation methods using the recently introduced datasets for autonomous driving, namely CLAD-C and SHIFT. We observe that current test-time adaptation methods struggle to effectively handle varying degrees of domain shift, often resulting in degraded performance that falls below that of the source model. We noticed that the root of the problem lies in the inability to preserve the knowledge of the source model and adapt to dynamically changing, temporally correlated data streams. Therefore, we enhance the well-established self-training framework by incorporating a small memory buffer to increase model stability and at the same time perform dynamic adaptation based on the intensity of domain shift. The proposed method, named AR-TTA, outperforms existing approaches on both synthetic and more real-world benchmarks and shows robustness across a variety of TTA scenarios. The code is available at this https URL

This paper presents a Finite Element Model Updating framework for identifying heterogeneous material distributions in planar Bernoulli-Euler beams based on a rotation-free isogeometric formulation. The procedure follows two steps: First, the elastic properties are identified from quasi-static displacements; then, the density is determined from modal data (low frequencies and mode shapes), given the previously obtained elastic properties. The identification relies on three independent discretizations: the isogeometric finite element mesh, a high-resolution grid of experimental measurements, and a material mesh composed of low-order Lagrange elements. The material mesh approximates the unknown material distributions, with its nodal values serving as design variables. The error between experiments and numerical model is expressed in a least squares manner. The objective is minimized using local optimization with the trust-region method, providing analytical derivatives to accelerate computations. Several numerical examples exhibiting large displacements are provided to test the proposed approach. To alleviate membrane locking, the B2M1 discretization is employed when necessary. Quasi-experimental data is generated using refined finite element models with random noise applied up to 4%. The method yields satisfactory results as long as a sufficient amount of experimental data is available, even for high measurement noise. Regularization is used to ensure a stable solution for dense material meshes. The density can be accurately reconstructed based on the previously identified elastic properties. The proposed framework can be straightforwardly extended to shells and 3D continua.

In 2020, Behr defined the problem of edge coloring of signed graphs and showed that every signed graph (G,σ) can be colored using exactly Δ(G) or Δ(G)+1 colors, where Δ(G) is the maximum degree in graph G.

In this paper, we focus on products of signed graphs. We recall the definitions of the Cartesian, tensor, strong, and corona products of signed graphs and prove results for them. In particular, we show that (1) the Cartesian product of Δ-edge-colorable signed graphs is Δ-edge-colorable, (2) the tensor product of a Δ-edge-colorable signed graph and a signed tree requires only Δ colors and (3) the corona product of almost any two signed graphs is Δ-edge-colorable. We also prove some results related to the coloring of products of signed paths and cycles.
