IIT Jodhpur

13 Mar 2025

Researchers at IIT Patna and IIT Jodhpur present a comprehensive survey analyzing reasoning strategies in Large Language Models (LLMs), organizing techniques into three paradigms - reinforcement learning, test-time computation, and self-training - while examining their effectiveness across complex problem-solving tasks and identifying key challenges in automating process supervision and managing computational overhead.

Existing unlearning algorithms in text-to-image generative models often fail

to preserve the knowledge of semantically related concepts when removing

specific target concepts: a challenge known as adjacency. To address this, we

propose FADE (Fine grained Attenuation for Diffusion Erasure), introducing

adjacency aware unlearning in diffusion models. FADE comprises two components:

(1) the Concept Neighborhood, which identifies an adjacency set of related

concepts, and (2) Mesh Modules, employing a structured combination of

Expungement, Adjacency, and Guidance loss components. These enable precise

erasure of target concepts while preserving fidelity across related and

unrelated concepts. Evaluated on datasets like Stanford Dogs, Oxford Flowers,

CUB, I2P, Imagenette, and ImageNet1k, FADE effectively removes target concepts

with minimal impact on correlated concepts, achieving atleast a 12% improvement

in retention performance over state-of-the-art methods.

Existing vision-language models (VLMs) treat text descriptions as a unit, confusing individual concepts in a prompt and impairing visual semantic matching and reasoning. An important aspect of reasoning in logic and language is negations. This paper highlights the limitations of popular VLMs such as CLIP, at understanding the implications of negations, i.e., the effect of the word "not" in a given prompt. To enable evaluation of VLMs on fluent prompts with negations, we present CC-Neg, a dataset containing 228,246 images, true captions and their corresponding negated captions. Using CC-Neg along with modifications to the contrastive loss of CLIP, our proposed CoN-CLIP framework, has an improved understanding of negations. This training paradigm improves CoN-CLIP's ability to encode semantics reliably, resulting in 3.85% average gain in top-1 accuracy for zero-shot image classification across 8 datasets. Further, CoN-CLIP outperforms CLIP on challenging compositionality benchmarks such as SugarCREPE by 4.4%, showcasing emergent compositional understanding of objects, relations, and attributes in text. Overall, our work addresses a crucial limitation of VLMs by introducing a dataset and framework that strengthens semantic associations between images and text, demonstrating improved large-scale foundation models with significantly reduced computational cost, promoting efficiency and accessibility.

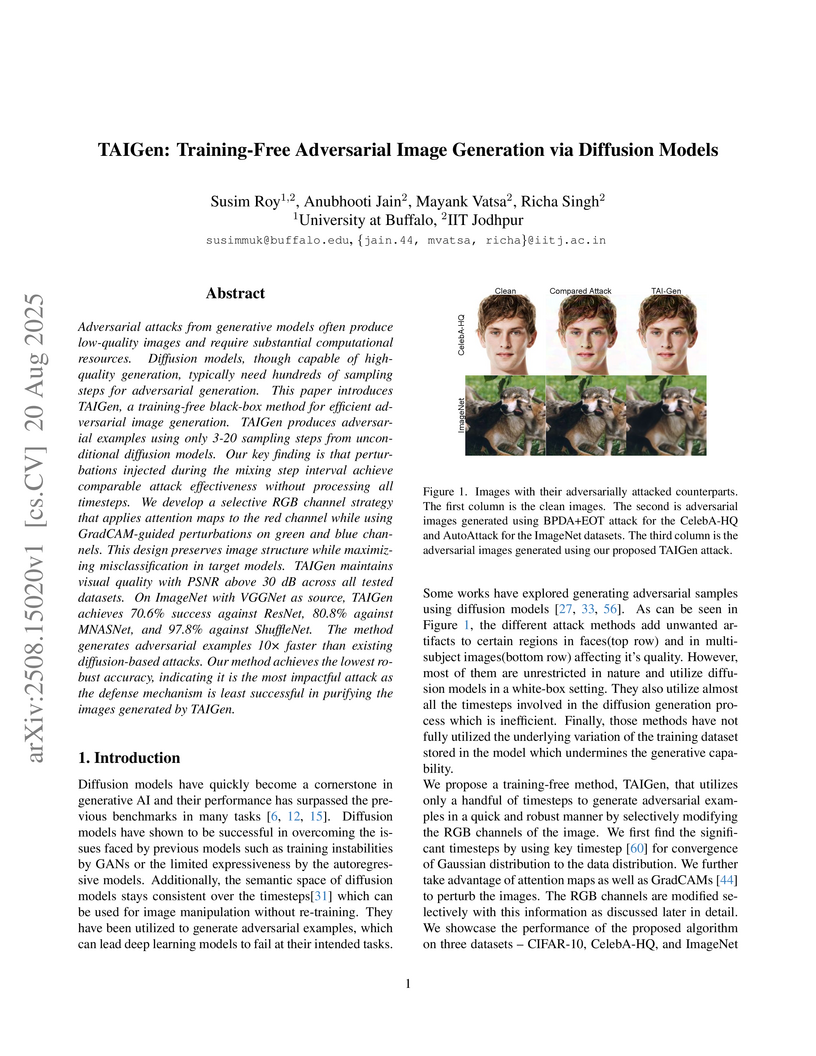

Adversarial attacks from generative models often produce low-quality images and require substantial computational resources. Diffusion models, though capable of high-quality generation, typically need hundreds of sampling steps for adversarial generation. This paper introduces TAIGen, a training-free black-box method for efficient adversarial image generation. TAIGen produces adversarial examples using only 3-20 sampling steps from unconditional diffusion models. Our key finding is that perturbations injected during the mixing step interval achieve comparable attack effectiveness without processing all timesteps. We develop a selective RGB channel strategy that applies attention maps to the red channel while using GradCAM-guided perturbations on green and blue channels. This design preserves image structure while maximizing misclassification in target models. TAIGen maintains visual quality with PSNR above 30 dB across all tested datasets. On ImageNet with VGGNet as source, TAIGen achieves 70.6% success against ResNet, 80.8% against MNASNet, and 97.8% against ShuffleNet. The method generates adversarial examples 10x faster than existing diffusion-based attacks. Our method achieves the lowest robust accuracy, indicating it is the most impactful attack as the defense mechanism is least successful in purifying the images generated by TAIGen.

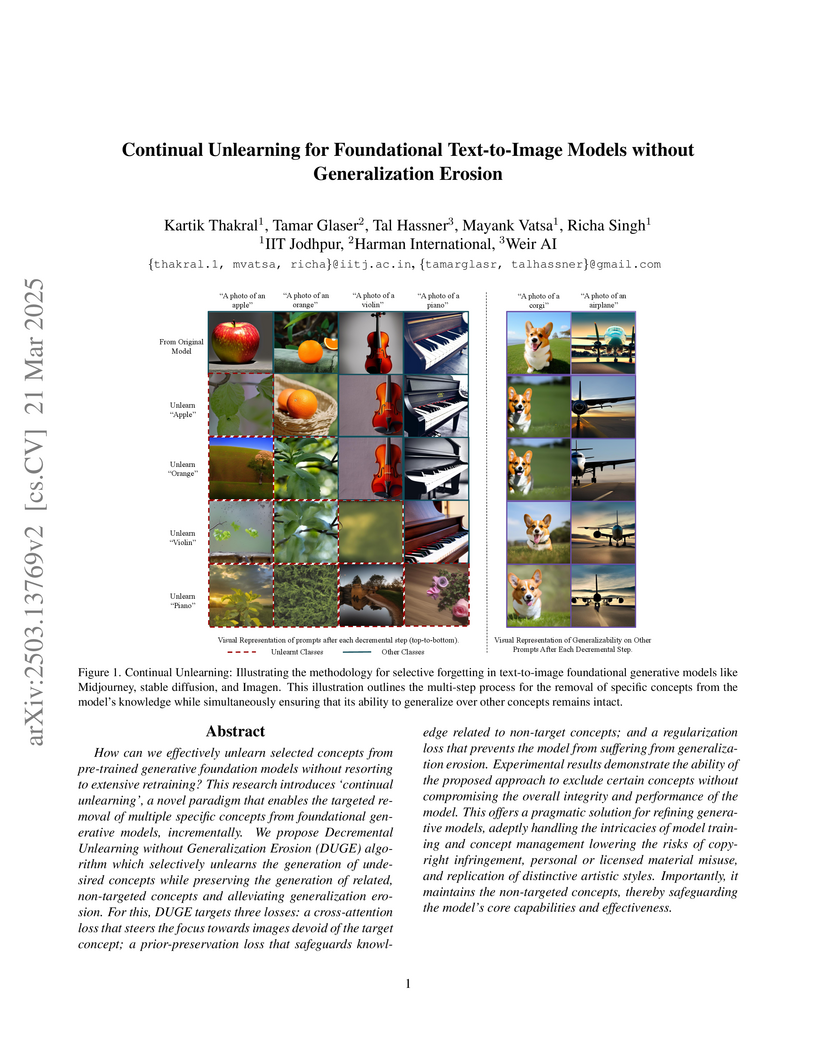

How can we effectively unlearn selected concepts from pre-trained generative

foundation models without resorting to extensive retraining? This research

introduces `continual unlearning', a novel paradigm that enables the targeted

removal of multiple specific concepts from foundational generative models,

incrementally. We propose Decremental Unlearning without Generalization Erosion

(DUGE) algorithm which selectively unlearns the generation of undesired

concepts while preserving the generation of related, non-targeted concepts and

alleviating generalization erosion. For this, DUGE targets three losses: a

cross-attention loss that steers the focus towards images devoid of the target

concept; a prior-preservation loss that safeguards knowledge related to

non-target concepts; and a regularization loss that prevents the model from

suffering from generalization erosion. Experimental results demonstrate the

ability of the proposed approach to exclude certain concepts without

compromising the overall integrity and performance of the model. This offers a

pragmatic solution for refining generative models, adeptly handling the

intricacies of model training and concept management lowering the risks of

copyright infringement, personal or licensed material misuse, and replication

of distinctive artistic styles. Importantly, it maintains the non-targeted

concepts, thereby safeguarding the model's core capabilities and effectiveness.

19 Mar 2025

Entropy has emerged as a dynamic, interdisciplinary, and widely accepted

quantitative measure of uncertainty across different disciplines. A unified

understanding of entropy measures, supported by a detailed review of their

theoretical foundations and practical applications, is crucial to advance

research across disciplines. This review article provides motivation,

fundamental properties, and constraints of various entropy measures. These

measures are categorized with time evolution ranging from Shannon entropy

generalizations, distribution function theory, fuzzy theory, fractional

calculus to graph theory, all explained in a simplified and accessible manner.

These entropy measures are selected on the basis of their usability, with

descriptions arranged chronologically. We have further discussed the

applicability of these measures across different domains, including

thermodynamics, communication theory, financial engineering, categorical data,

artificial intelligence, signal processing, and chemical and biological

systems, highlighting their multifaceted roles. A number of examples are

included to demonstrate the prominence of specific measures in terms of their

applicability. The article also focuses on entropy-based applications in

different disciplines, emphasizing openly accessible resources. Furthermore,

this article emphasizes the applicability of various entropy measures in the

field of finance. The article may provide a good insight to the researchers and

experts working to quantify uncertainties, along with potential future

directions.

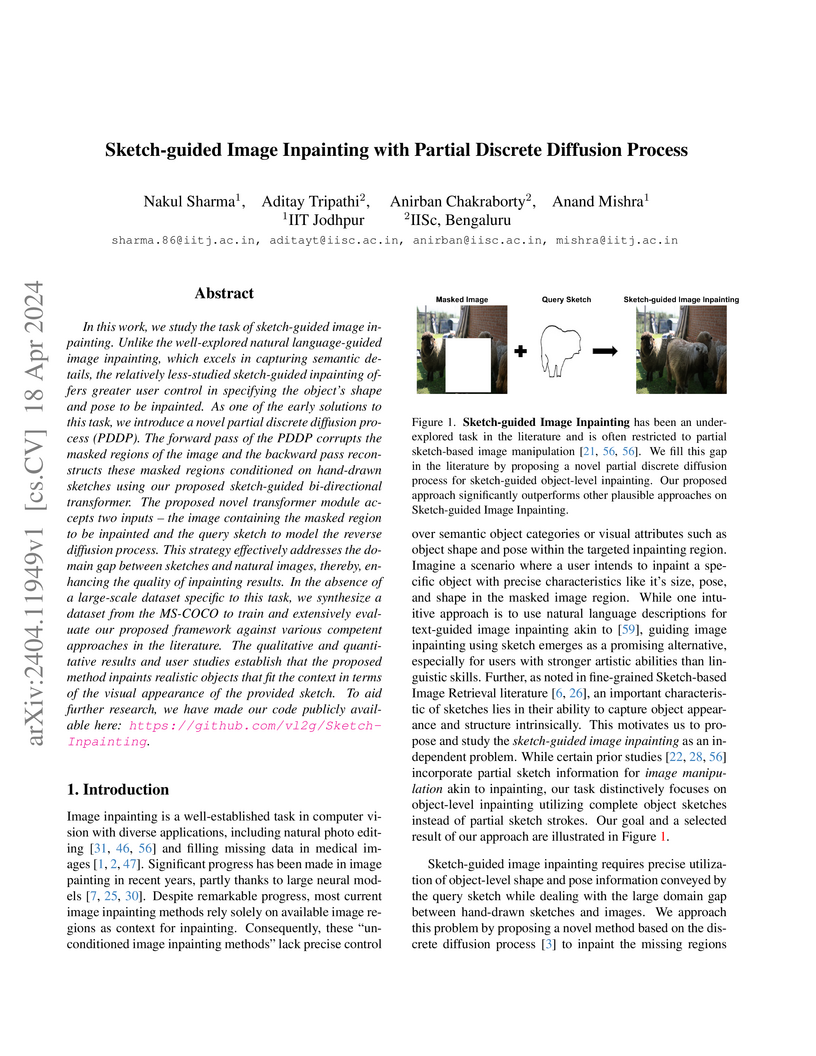

In this work, we study the task of sketch-guided image inpainting. Unlike the

well-explored natural language-guided image inpainting, which excels in

capturing semantic details, the relatively less-studied sketch-guided

inpainting offers greater user control in specifying the object's shape and

pose to be inpainted. As one of the early solutions to this task, we introduce

a novel partial discrete diffusion process (PDDP). The forward pass of the PDDP

corrupts the masked regions of the image and the backward pass reconstructs

these masked regions conditioned on hand-drawn sketches using our proposed

sketch-guided bi-directional transformer. The proposed novel transformer module

accepts two inputs -- the image containing the masked region to be inpainted

and the query sketch to model the reverse diffusion process. This strategy

effectively addresses the domain gap between sketches and natural images,

thereby, enhancing the quality of inpainting results. In the absence of a

large-scale dataset specific to this task, we synthesize a dataset from the

MS-COCO to train and extensively evaluate our proposed framework against

various competent approaches in the literature. The qualitative and

quantitative results and user studies establish that the proposed method

inpaints realistic objects that fit the context in terms of the visual

appearance of the provided sketch. To aid further research, we have made our

code publicly available at this https URL .

Due to the COVID-19 pandemic, wearing face masks has become a mandate in

public places worldwide. Face masks occlude a significant portion of the facial

region. Additionally, people wear different types of masks, from simple ones to

ones with graphics and prints. These pose new challenges to face recognition

algorithms. Researchers have recently proposed a few masked face datasets for

designing algorithms to overcome the challenges of masked face recognition.

However, existing datasets lack the cultural diversity and collection in the

unrestricted settings. Country like India with attire diversity, people are not

limited to wearing traditional masks but also clothing like a thin cotton

printed towel (locally called as ``gamcha''), ``stoles'', and ``handkerchiefs''

to cover their faces. In this paper, we present a novel \textbf{Indian Masked

Faces in the Wild (IMFW)} dataset which contains images with variations in

pose, illumination, resolution, and the variety of masks worn by the subjects.

We have also benchmarked the performance of existing face recognition models on

the proposed IMFW dataset. Experimental results demonstrate the limitations of

existing algorithms in presence of diverse conditions.

Facial recognition technology has made significant advances, yet its effectiveness across diverse ethnic backgrounds, particularly in specific Indian demographics, is less explored. This paper presents a detailed evaluation of both traditional and deep learning-based facial recognition models using the established LFW dataset and our newly developed IITJ Faces of Academia Dataset (JFAD), which comprises images of students from IIT Jodhpur. This unique dataset is designed to reflect the ethnic diversity of India, providing a critical test bed for assessing model performance in a focused academic environment. We analyze models ranging from holistic approaches like Eigenfaces and SIFT to advanced hybrid models that integrate CNNs with Gabor filters, Laplacian transforms, and segmentation techniques. Our findings reveal significant insights into the models' ability to adapt to the ethnic variability within Indian demographics and suggest modifications to enhance accuracy and inclusivity in real-world applications. The JFAD not only serves as a valuable resource for further research but also highlights the need for developing facial recognition systems that perform equitably across diverse populations.

'Quis custodiet ipsos custodes?' Who will watch the watchmen? On Detecting AI-generated peer-reviews

'Quis custodiet ipsos custodes?' Who will watch the watchmen? On Detecting AI-generated peer-reviews

Researchers developed two methods, Term Frequency (TF) and Review Regeneration (RR), for identifying AI-generated peer reviews, outperforming existing detectors. The RR model, when combined with a specific defense, demonstrated superior robustness against adversarial paraphrasing attacks, making it suitable for real-world application in academic publishing.

The research from IIT Jodhpur introduces FloCo-T5, a transformer-based framework, and FloCo, a large-scale dataset, to convert digitized flowchart images into executable Python code. FloCo-T5 achieved a CodeBLEU score of 75.7 and an Exact Match of 20.0% on the FloCo dataset, outperforming other baselines.

Electroencephalography (EEG) is a widely used tool for diagnosing brain disorders due to its high temporal resolution, non-invasive nature, and affordability. Manual analysis of EEG is labor-intensive and requires expertise, making automatic EEG interpretation crucial for reducing workload and accurately assessing seizures. In epilepsy diagnosis, prolonged EEG monitoring generates extensive data, often spanning hours, days, or even weeks. While machine learning techniques for automatic EEG interpretation have advanced significantly in recent decades, there remains a gap in its ability to efficiently analyze large datasets with a balance of accuracy and computational efficiency. To address the challenges mentioned above, an Attention Recurrent Neural Network (ARNN) is proposed that can process a large amount of data efficiently and accurately. This ARNN cell recurrently applies attention layers along a sequence and has linear complexity with the sequence length and leverages parallel computation by processing multi-channel EEG signals rather than single-channel signals. In this architecture, the attention layer is a computational unit that efficiently applies self-attention and cross-attention mechanisms to compute a recurrent function over a wide number of state vectors and input signals. This framework is inspired in part by the attention layer and long short-term memory (LSTM) cells, but it scales this typical cell up by several orders to parallelize for multi-channel EEG signals. It inherits the advantages of attention layers and LSTM gate while avoiding their respective drawbacks. The model's effectiveness is evaluated through extensive experiments with heterogeneous datasets, including the CHB-MIT and UPenn and Mayo's Clinic datasets.

Negation, a linguistic construct conveying absence, denial, or contradiction,

poses significant challenges for multilingual multimodal foundation models.

These models excel in tasks like machine translation, text-guided generation,

image captioning, audio interactions, and video processing but often struggle

to accurately interpret negation across diverse languages and cultural

contexts. In this perspective paper, we propose a comprehensive taxonomy of

negation constructs, illustrating how structural, semantic, and cultural

factors influence multimodal foundation models. We present open research

questions and highlight key challenges, emphasizing the importance of

addressing these issues to achieve robust negation handling. Finally, we

advocate for specialized benchmarks, language-specific tokenization,

fine-grained attention mechanisms, and advanced multimodal architectures. These

strategies can foster more adaptable and semantically precise multimodal

foundation models, better equipped to navigate and accurately interpret the

complexities of negation in multilingual, multimodal environments.

11 Oct 2023

In this paper, we propose a quasi Newton method to solve the robust counterpart of an uncertain multiobjective optimization problem under an arbitrary finite uncertainty set. Here the robust counterpart of an uncertain multiobjective optimization problem is the minimum of objective-wise worst case, which is a nonsmooth deterministic multiobjective optimization problem. In order to solve this robust counterpart with the help of quasi Newton method, we construct a sub-problem using Hessian approximation and solve it to determine a descent direction for the robust counterpart. We introduce an Armijo-type inexact line search technique to find an appropriate step length, and develop a modified BFGS formula to ensure positive definiteness of the Hessian matrix at each iteration. By incorporating descent direction, step length size, and modified BFGS formula, we write the quasi Newton's descent algorithm for the robust counterpart. We prove the convergence of the algorithm under standard assumptions and demonstrate that it achieves superlinear convergence rate. Furthermore, we validate the algorithm by comparing it with the weighted sum method through some numerical examples by using a performance profile.

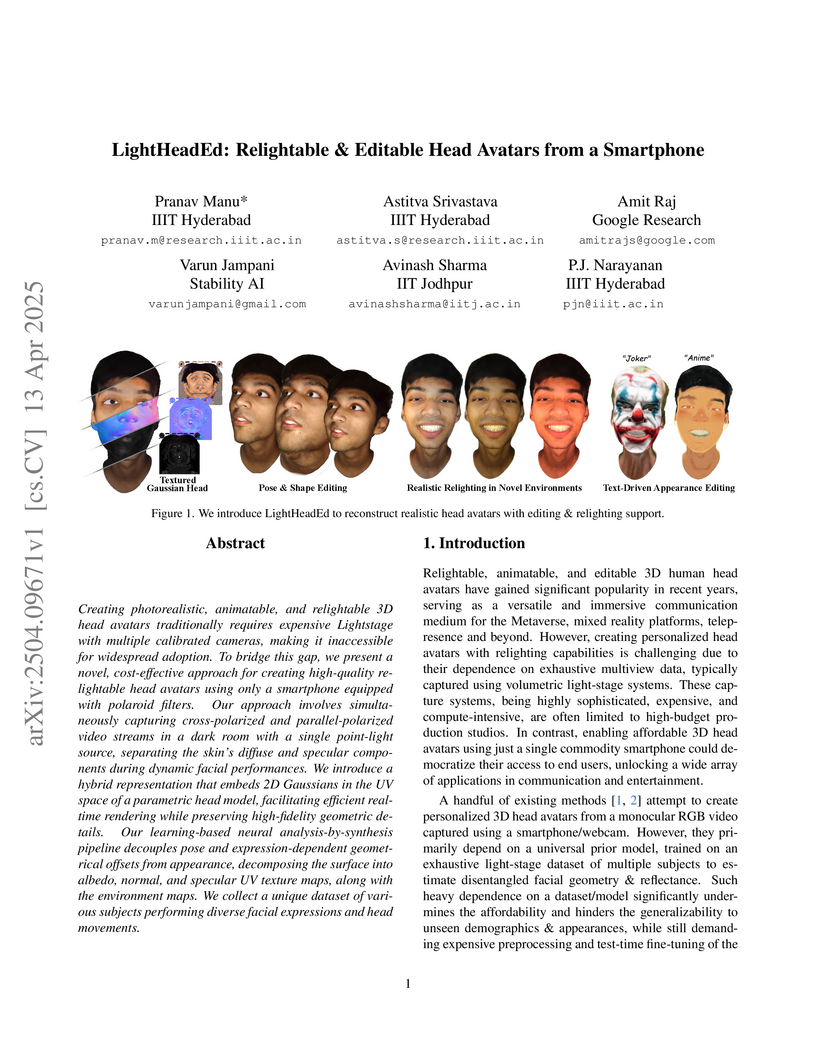

Creating photorealistic, animatable, and relightable 3D head avatars

traditionally requires expensive Lightstage with multiple calibrated cameras,

making it inaccessible for widespread adoption. To bridge this gap, we present

a novel, cost-effective approach for creating high-quality relightable head

avatars using only a smartphone equipped with polaroid filters. Our approach

involves simultaneously capturing cross-polarized and parallel-polarized video

streams in a dark room with a single point-light source, separating the skin's

diffuse and specular components during dynamic facial performances. We

introduce a hybrid representation that embeds 2D Gaussians in the UV space of a

parametric head model, facilitating efficient real-time rendering while

preserving high-fidelity geometric details. Our learning-based neural

analysis-by-synthesis pipeline decouples pose and expression-dependent

geometrical offsets from appearance, decomposing the surface into albedo,

normal, and specular UV texture maps, along with the environment maps. We

collect a unique dataset of various subjects performing diverse facial

expressions and head movements.

11 Jul 2016

This paper discusses the concept and parameter design of a Robust Stair Climbing Compliant Modular Robot, capable of tackling stairs with overhangs. Modifying the geometry of the periphery of the wheels of our robot helps in tackling overhangs. Along with establishing a concept design, robust design parameters are set to minimize performance variation. The Grey-based Taguchi Method is adopted for providing an optimal setting for the design parameters of the robot. The robot prototype is shown to have successfully scaled stairs of varying dimensions, with overhang, thus corroborating the analysis performed.

We present a novel problem of text-based visual question generation or

TextVQG in short. Given the recent growing interest of the document image

analysis community in combining text understanding with conversational

artificial intelligence, e.g., text-based visual question answering, TextVQG

becomes an important task. TextVQG aims to generate a natural language question

for a given input image and an automatically extracted text also known as OCR

token from it such that the OCR token is an answer to the generated question.

TextVQG is an essential ability for a conversational agent. However, it is

challenging as it requires an in-depth understanding of the scene and the

ability to semantically bridge the visual content with the text present in the

image. To address TextVQG, we present an OCR consistent visual question

generation model that Looks into the visual content, Reads the scene text, and

Asks a relevant and meaningful natural language question. We refer to our

proposed model as OLRA. We perform an extensive evaluation of OLRA on two

public benchmarks and compare them against baselines. Our model OLRA

automatically generates questions similar to the public text-based visual

question answering datasets that were curated manually. Moreover, we

significantly outperform baseline approaches on the performance measures

popularly used in text generation literature.

Face recognition algorithms have demonstrated very high recognition performance, suggesting suitability for real world applications. Despite the enhanced accuracies, robustness of these algorithms against attacks and bias has been challenged. This paper summarizes different ways in which the robustness of a face recognition algorithm is challenged, which can severely affect its intended working. Different types of attacks such as physical presentation attacks, disguise/makeup, digital adversarial attacks, and morphing/tampering using GANs have been discussed. We also present a discussion on the effect of bias on face recognition models and showcase that factors such as age and gender variations affect the performance of modern algorithms. The paper also presents the potential reasons for these challenges and some of the future research directions for increasing the robustness of face recognition models.

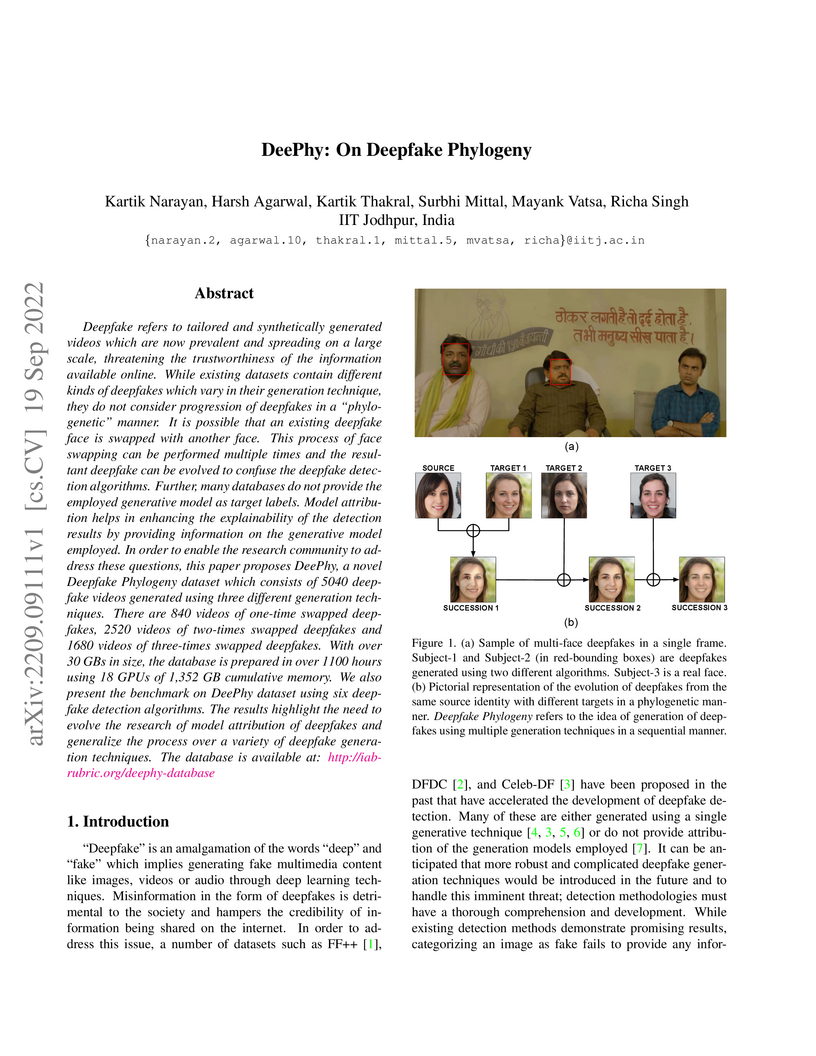

Deepfake refers to tailored and synthetically generated videos which are now

prevalent and spreading on a large scale, threatening the trustworthiness of

the information available online. While existing datasets contain different

kinds of deepfakes which vary in their generation technique, they do not

consider progression of deepfakes in a "phylogenetic" manner. It is possible

that an existing deepfake face is swapped with another face. This process of

face swapping can be performed multiple times and the resultant deepfake can be

evolved to confuse the deepfake detection algorithms. Further, many databases

do not provide the employed generative model as target labels. Model

attribution helps in enhancing the explainability of the detection results by

providing information on the generative model employed. In order to enable the

research community to address these questions, this paper proposes DeePhy, a

novel Deepfake Phylogeny dataset which consists of 5040 deepfake videos

generated using three different generation techniques. There are 840 videos of

one-time swapped deepfakes, 2520 videos of two-times swapped deepfakes and 1680

videos of three-times swapped deepfakes. With over 30 GBs in size, the database

is prepared in over 1100 hours using 18 GPUs of 1,352 GB cumulative memory. We

also present the benchmark on DeePhy dataset using six deepfake detection

algorithms. The results highlight the need to evolve the research of model

attribution of deepfakes and generalize the process over a variety of deepfake

generation techniques. The database is available at:

this http URL

Visual Question Answering (VQA) is an interdisciplinary field that bridges the gap between computer vision (CV) and natural language processing(NLP), enabling Artificial Intelligence(AI) systems to answer questions about images. Since its inception in 2015, VQA has rapidly evolved, driven by advances in deep learning, attention mechanisms, and transformer-based models. This survey traces the journey of VQA from its early days, through major breakthroughs, such as attention mechanisms, compositional reasoning, and the rise of vision-language pre-training methods. We highlight key models, datasets, and techniques that shaped the development of VQA systems, emphasizing the pivotal role of transformer architectures and multimodal pre-training in driving recent progress. Additionally, we explore specialized applications of VQA in domains like healthcare and discuss ongoing challenges, such as dataset bias, model interpretability, and the need for common-sense reasoning. Lastly, we discuss the emerging trends in large multimodal language models and the integration of external knowledge, offering insights into the future directions of VQA. This paper aims to provide a comprehensive overview of the evolution of VQA, highlighting both its current state and potential advancements.

There are no more papers matching your filters at the moment.