Mila Institute

Researchers from Technion, MILA Institute, and NVIDIA Research formally demonstrate that state entropy regularization provides unique robustness guarantees against structured and spatially correlated perturbations in reinforcement learning, contrasting with policy entropy's protection against local changes. Their work provides theoretical characterizations of induced uncertainty sets and shows state entropy's empirical superiority in environments with global disruptions.

05 Aug 2025

Morphology-aware policy learning is a means of enhancing policy sample efficiency by aggregating data from multiple agents. These types of policies have previously been shown to help generalize over dynamic, kinematic, and limb configuration variations between agent morphologies. Unfortunately, these policies still have sub-optimal zero-shot performance compared to end-to-end finetuning on morphologies at deployment. This limitation has ramifications in practical applications such as robotics because further data collection to perform end-to-end finetuning can be computationally expensive. In this work, we investigate combining morphology-aware pretraining with parameter efficient finetuning (PEFT) techniques to help reduce the learnable parameters necessary to specialize a morphology-aware policy to a target embodiment. We compare directly tuning sub-sets of model weights, input learnable adapters, and prefix tuning techniques for online finetuning. Our analysis reveals that PEFT techniques in conjunction with policy pre-training generally help reduce the number of samples to necessary to improve a policy compared to training models end-to-end from scratch. We further find that tuning as few as less than 1% of total parameters will improve policy performance compared the zero-shot performance of the base pretrained a policy.

Generative AI has the potential to transform personalization and

accessibility of education. However, it raises serious concerns about accuracy

and helping students become independent critical thinkers. In this study, we

designed a helpful AI "Peer" to help students correct fundamental physics

misconceptions related to Newtonian mechanic concepts. In contrast to

approaches that seek near-perfect accuracy to create an authoritative AI tutor

or teacher, we directly inform students that this AI can answer up to 40% of

questions incorrectly. In a randomized controlled trial with 165 students,

those who engaged in targeted dialogue with the AI Peer achieved post-test

scores that were, on average, 10.5 percentage points higher - with over 20

percentage points higher normalized gain - than a control group that discussed

physics history. Qualitative feedback indicated that 91% of the treatment

group's AI interactions were rated as helpful. Furthermore, by comparing

student performance on pre- and post-test questions about the same concept,

along with experts' annotations of the AI interactions, we find initial

evidence suggesting the improvement in performance does not depend on the

correctness of the AI. With further research, the AI Peer paradigm described

here could open new possibilities for how we learn, adapt to, and grow with AI.

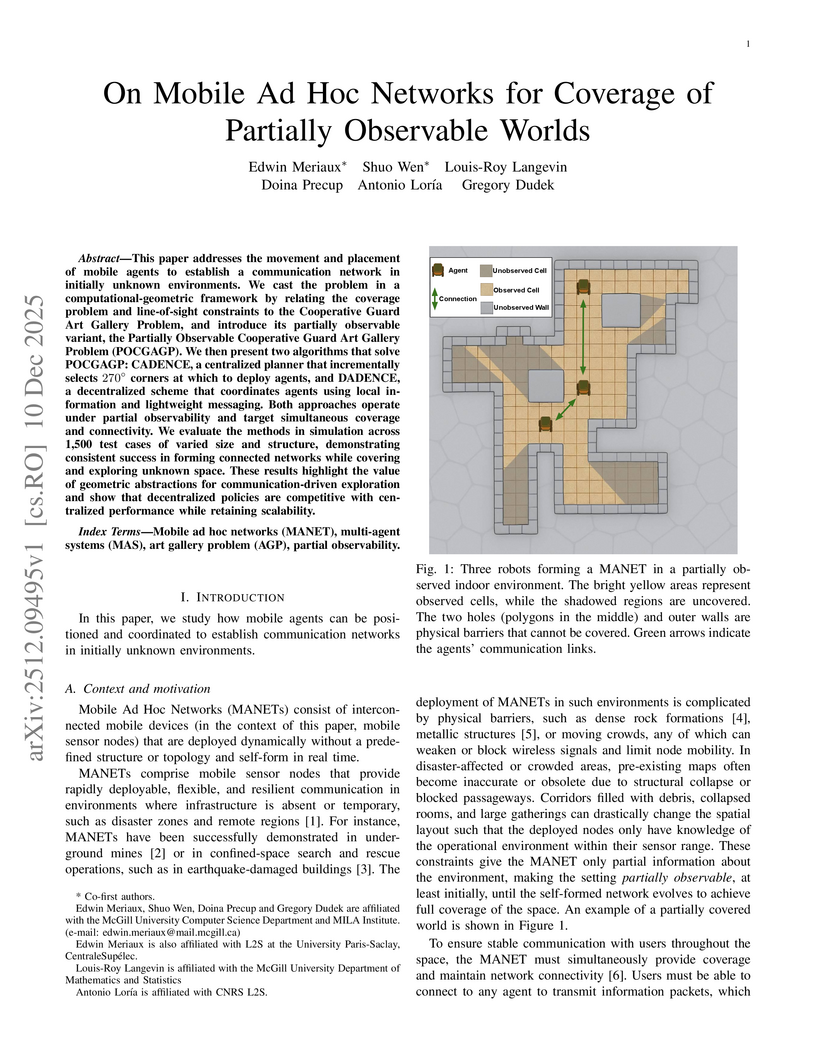

This paper addresses the movement and placement of mobile agents to establish a communication network in initially unknown environments. We cast the problem in a computational-geometric framework by relating the coverage problem and line-of-sight constraints to the Cooperative Guard Art Gallery Problem, and introduce its partially observable variant, the Partially Observable Cooperative Guard Art Gallery Problem (POCGAGP). We then present two algorithms that solve POCGAGP: CADENCE, a centralized planner that incrementally selects 270 degree corners at which to deploy agents, and DADENCE, a decentralized scheme that coordinates agents using local information and lightweight messaging. Both approaches operate under partial observability and target simultaneous coverage and connectivity. We evaluate the methods in simulation across 1,500 test cases of varied size and structure, demonstrating consistent success in forming connected networks while covering and exploring unknown space. These results highlight the value of geometric abstractions for communication-driven exploration and show that decentralized policies are competitive with centralized performance while retaining scalability.

We propose Guided Positive Sampling Self-Supervised Learning (GPS-SSL), a general method to inject a priori knowledge into Self-Supervised Learning (SSL) positive samples selection. Current SSL methods leverage Data-Augmentations (DA) for generating positive samples and incorporate prior knowledge - an incorrect, or too weak DA will drastically reduce the quality of the learned representation. GPS-SSL proposes instead to design a metric space where Euclidean distances become a meaningful proxy for semantic relationship. In that space, it is now possible to generate positive samples from nearest neighbor sampling. Any prior knowledge can now be embedded into that metric space independently from the employed DA. From its simplicity, GPS-SSL is applicable to any SSL method, e.g. SimCLR or BYOL. A key benefit of GPS-SSL is in reducing the pressure in tailoring strong DAs. For example GPS-SSL reaches 85.58% on Cifar10 with weak DA while the baseline only reaches 37.51%. We therefore move a step forward towards the goal of making SSL less reliant on DA. We also show that even when using strong DAs, GPS-SSL outperforms the baselines on under-studied domains. We evaluate GPS-SSL along with multiple baseline SSL methods on numerous downstream datasets from different domains when the models use strong or minimal data augmentations. We hope that GPS-SSL will open new avenues in studying how to inject a priori knowledge into SSL in a principled manner.

A large number of studies on social media compare the behaviour of users from

different political parties. As a basic step, they employ a predictive model

for inferring their political affiliation. The accuracy of this model can

change the conclusions of a downstream analysis significantly, yet the choice

between different models seems to be made arbitrarily. In this paper, we

provide a comprehensive survey and an empirical comparison of the current party

prediction practices and propose several new approaches which are competitive

with or outperform state-of-the-art methods, yet require less computational

resources. Party prediction models rely on the content generated by the users

(e.g., tweet texts), the relations they have (e.g., who they follow), or their

activities and interactions (e.g., which tweets they like). We examine all of

these and compare their signal strength for the party prediction task. This

paper lets the practitioner select from a wide range of data types that all

give strong performance. Finally, we conduct extensive experiments on different

aspects of these methods, such as data collection speed and transfer

capabilities, which can provide further insights for both applied and

methodological research.

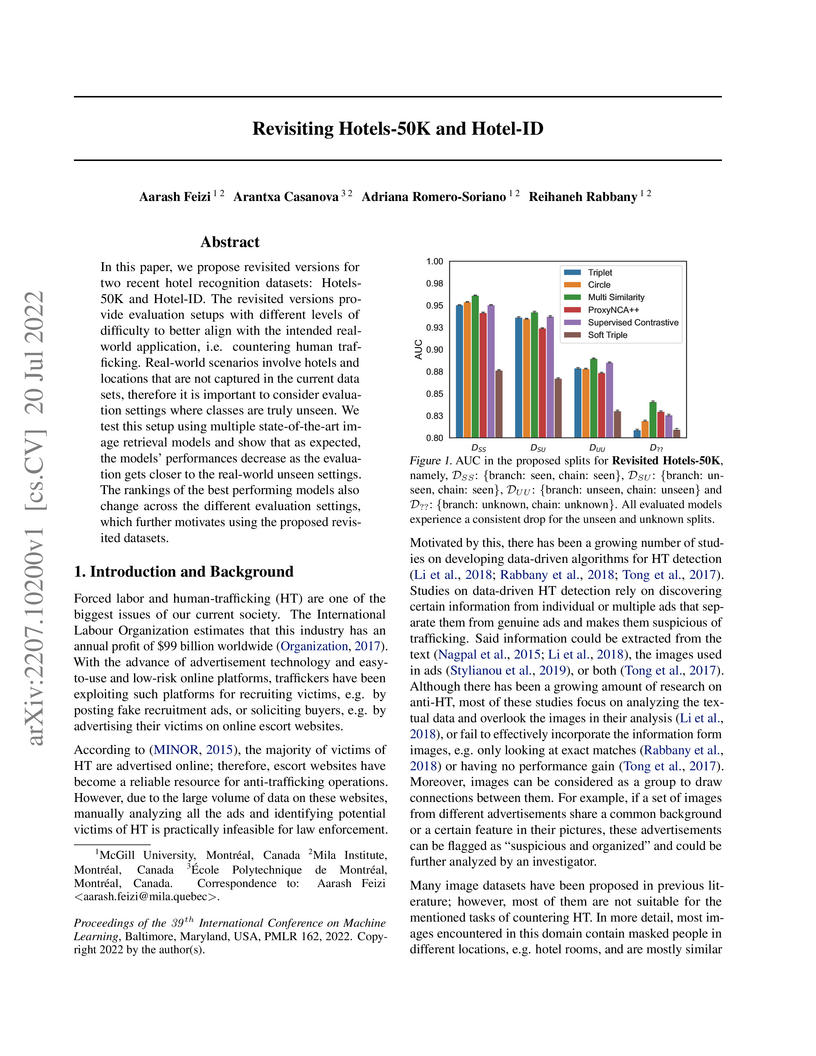

In this paper, we propose revisited versions for two recent hotel recognition

datasets: Hotels50K and Hotel-ID. The revisited versions provide evaluation

setups with different levels of difficulty to better align with the intended

real-world application, i.e. countering human trafficking. Real-world scenarios

involve hotels and locations that are not captured in the current data sets,

therefore it is important to consider evaluation settings where classes are

truly unseen. We test this setup using multiple state-of-the-art image

retrieval models and show that as expected, the models' performances decrease

as the evaluation gets closer to the real-world unseen settings. The rankings

of the best performing models also change across the different evaluation

settings, which further motivates using the proposed revisited datasets.

We present a solver for Mixed Integer Programs (MIP) developed for the MIP

competition 2022. Given the 10 minutes bound on the computational time

established by the rules of the competition, our method focuses on finding a

feasible solution and improves it through a Branch-and-Bound algorithm. Another

rule of the competition allows the use of up to 8 threads. Each thread is given

a different primal heuristic, which has been tuned by hyper-parameters, to find

a feasible solution. In every thread, once a feasible solution is found, we

stop and we use a Branch-and-Bound method, embedded with local search

heuristics, to ameliorate the incumbent solution. The three variants of the

Diving heuristic that we implemented manage to find a feasible solution for 10

instances of the training data set. These heuristics are the best performing

among the heuristics that we implemented. Our Branch-and-Bound algorithm is

effective on a small portion of the training data set, and it manages to find

an incumbent feasible solution for an instance that we could not solve with the

Diving heuristics. Overall, our combined methods, when implemented with

extensive computational power, can solve 11 of the 19 problems of the training

data set within the time limit. Our submission to the MIP competition was

awarded the "Outstanding Student Submission" honorable mention.

There are no more papers matching your filters at the moment.