NAVER LABS Europe

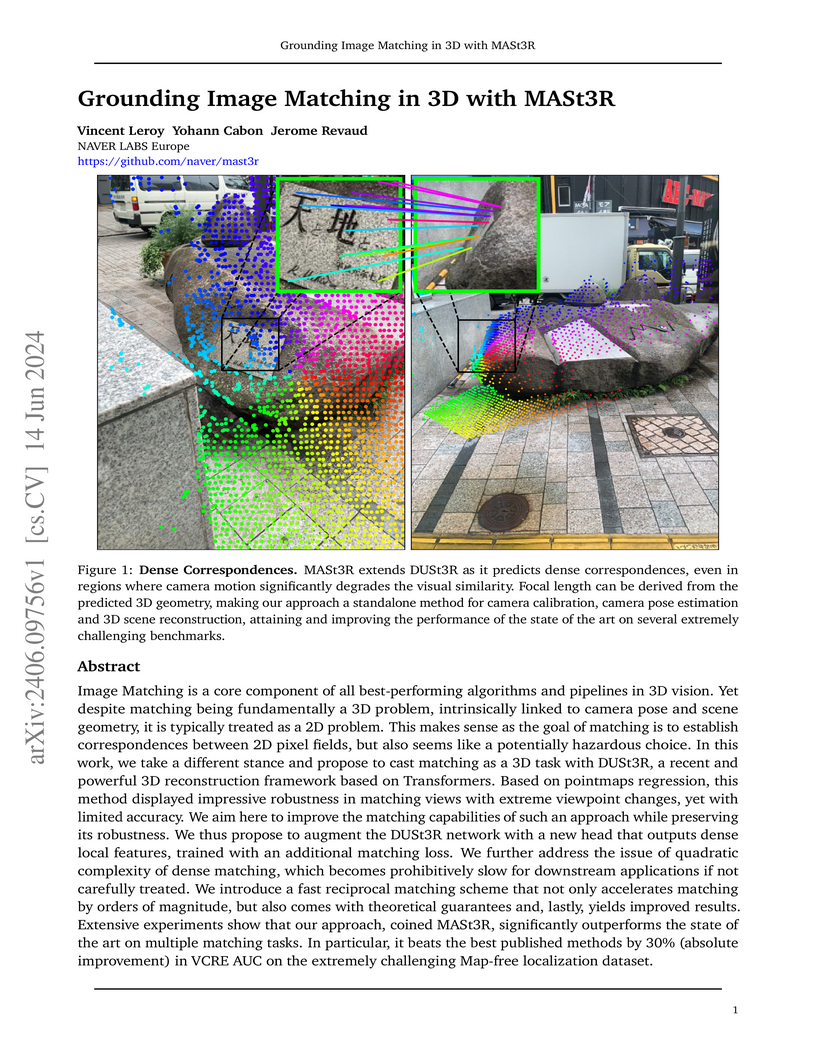

MASt3R, developed by NAVER LABS Europe, presents an image matching framework that explicitly incorporates 3D scene information to establish pixel correspondences. The method achieves a 30% absolute improvement in VCRE AUC on the map-free localization benchmark and sets new state-of-the-art results for relative pose estimation on CO3Dv2 and RealEstate10k datasets.

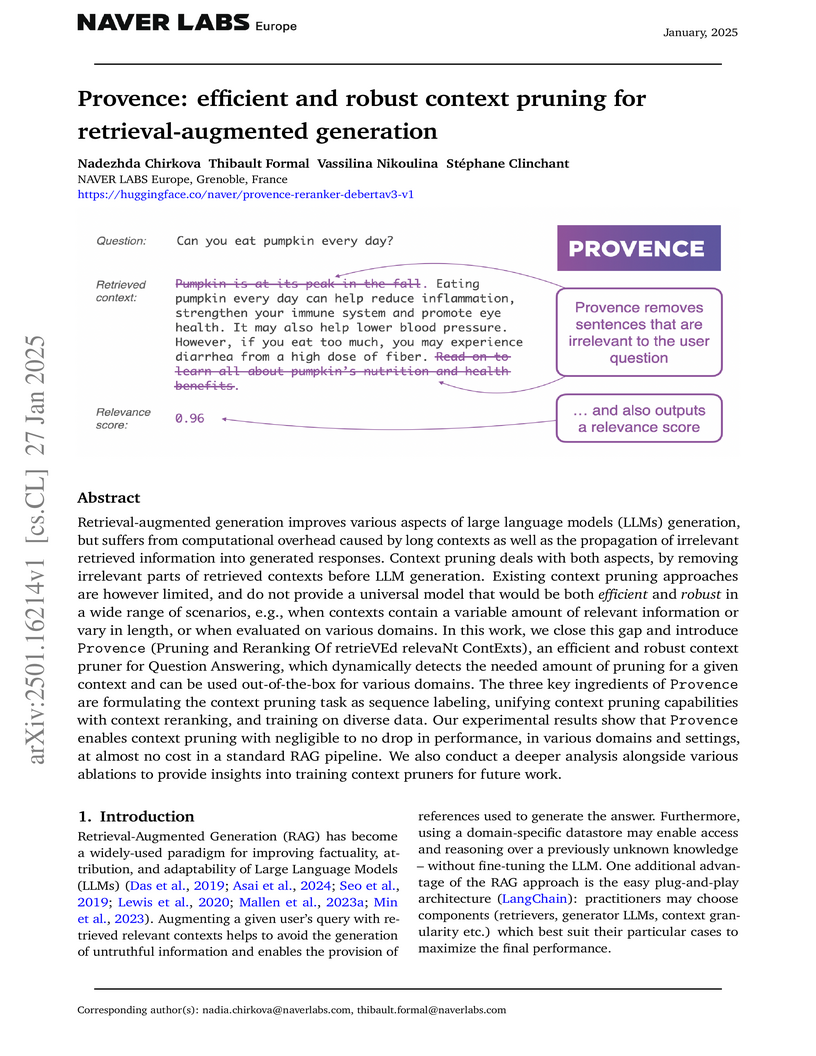

NAVER LABS Europe developed Provence, an efficient and robust context pruner for Retrieval-Augmented Generation (RAG) systems. It dynamically identifies and removes irrelevant sentences from retrieved contexts, achieving up to 80% compression while maintaining or improving LLM answer quality and accelerating inference by up to 2x, notably by unifying pruning with reranking into a single model.

12 Jul 2021

The SPLADE model fine-tunes BERT to generate high-dimensional, sparse lexical representations for queries and documents, enabling efficient first-stage ranking using inverted indexes. It achieves effectiveness comparable to leading dense retrieval models while offering greater efficiency for large-scale systems.

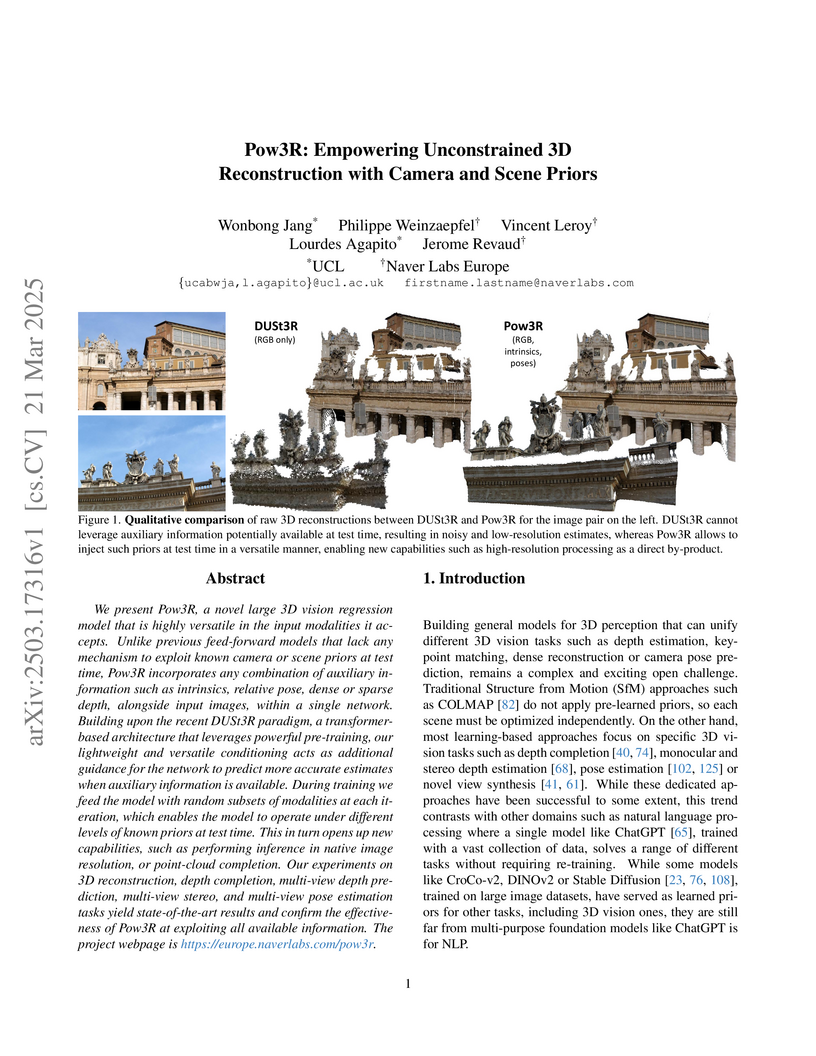

A unified 3D reconstruction framework from UCL and Naver Labs Europe enables flexible incorporation of camera and scene priors like intrinsics, poses, and depth maps into transformer-based architectures, achieving state-of-the-art performance across multiple 3D vision tasks while enabling high-resolution processing and efficient pose estimation through dual coordinate prediction.

We present Multi-HMR, a strong sigle-shot model for multi-person 3D human mesh recovery from a single RGB image. Predictions encompass the whole body, i.e., including hands and facial expressions, using the SMPL-X parametric model and 3D location in the camera coordinate system. Our model detects people by predicting coarse 2D heatmaps of person locations, using features produced by a standard Vision Transformer (ViT) backbone. It then predicts their whole-body pose, shape and 3D location using a new cross-attention module called the Human Prediction Head (HPH), with one query attending to the entire set of features for each detected person. As direct prediction of fine-grained hands and facial poses in a single shot, i.e., without relying on explicit crops around body parts, is hard to learn from existing data, we introduce CUFFS, the Close-Up Frames of Full-Body Subjects dataset, containing humans close to the camera with diverse hand poses. We show that incorporating it into the training data further enhances predictions, particularly for hands. Multi-HMR also optionally accounts for camera intrinsics, if available, by encoding camera ray directions for each image token. This simple design achieves strong performance on whole-body and body-only benchmarks simultaneously: a ViT-S backbone on 448×448 images already yields a fast and competitive model, while larger models and higher resolutions obtain state-of-the-art results.

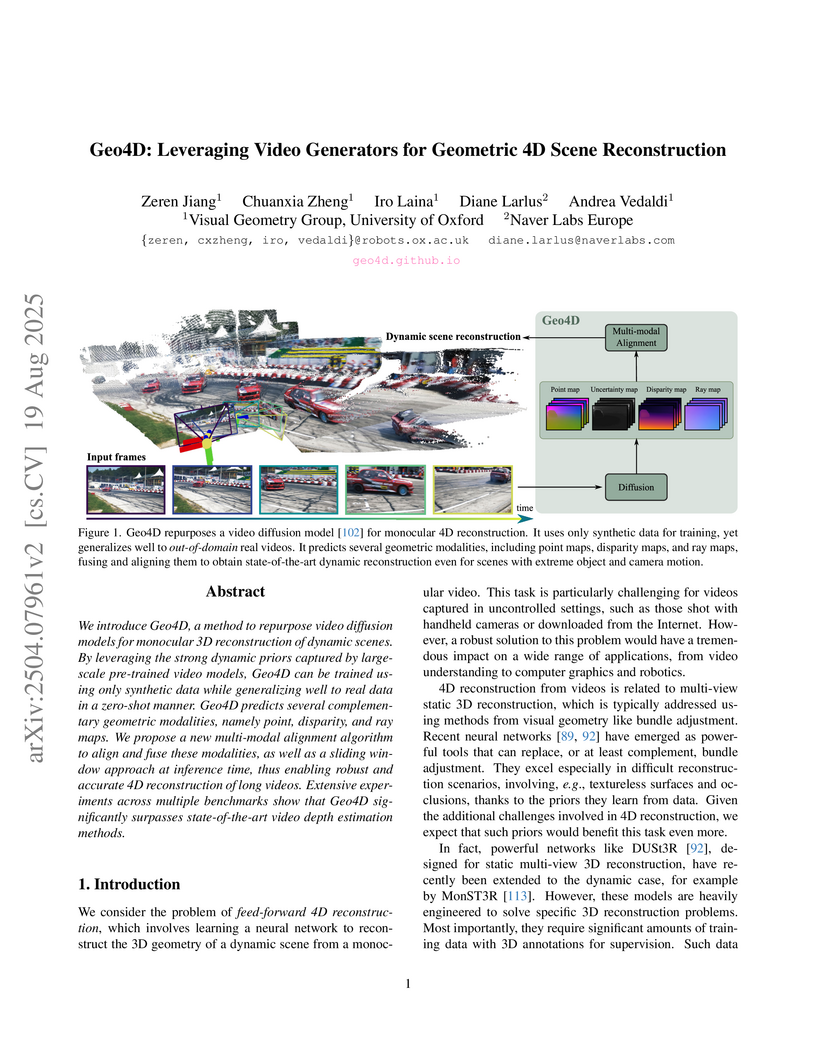

Geo4D adapts a pre-trained video diffusion model, DynamiCrafter, for feed-forward monocular 4D scene reconstruction, effectively extracting explicit 4D geometry from video generators. The method achieves state-of-the-art performance in video depth and camera pose estimation on real-world benchmarks, despite being trained solely on synthetic data.

SPLADE v2, developed by Naver Labs Europe and Sorbonne Université, enhances sparse neural retrieval by incorporating max pooling, knowledge distillation, and document-only expansion. It achieves state-of-the-art performance on MS MARCO (36.8 MRR@10) and strong zero-shot generalization on BEIR, while maintaining efficiency suitable for inverted indexes.

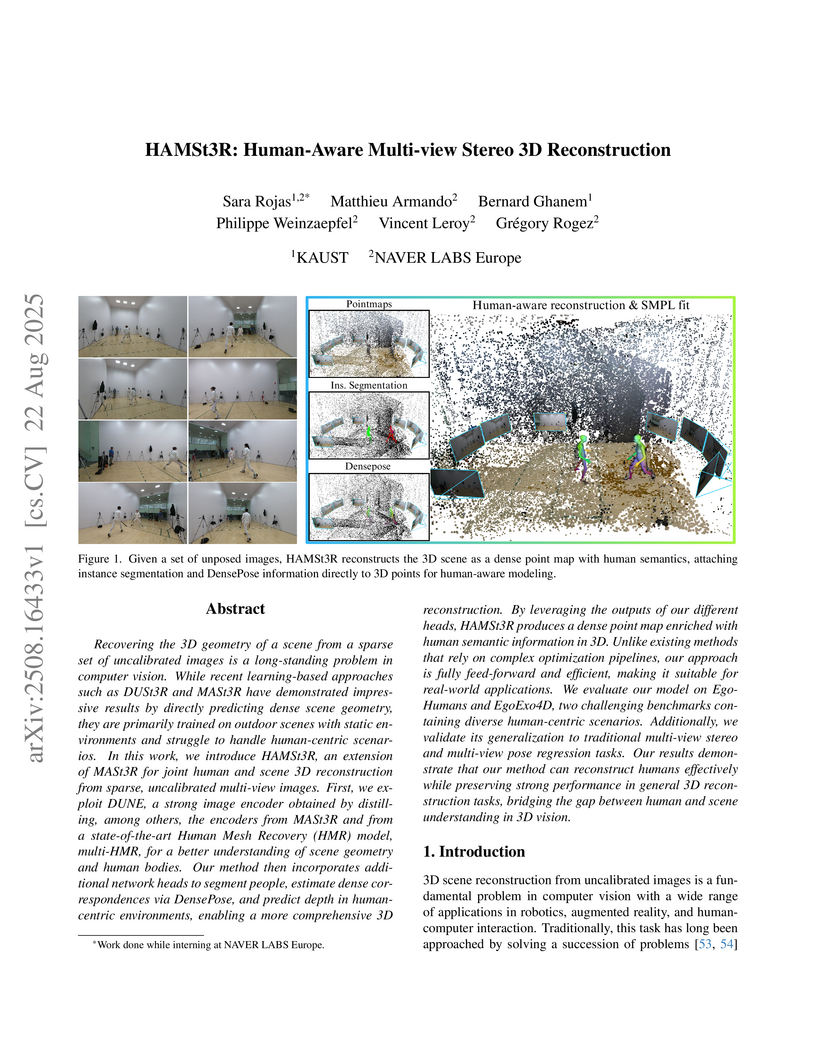

Recovering the 3D geometry of a scene from a sparse set of uncalibrated images is a long-standing problem in computer vision. While recent learning-based approaches such as DUSt3R and MASt3R have demonstrated impressive results by directly predicting dense scene geometry, they are primarily trained on outdoor scenes with static environments and struggle to handle human-centric scenarios. In this work, we introduce HAMSt3R, an extension of MASt3R for joint human and scene 3D reconstruction from sparse, uncalibrated multi-view images. First, we exploit DUNE, a strong image encoder obtained by distilling, among others, the encoders from MASt3R and from a state-of-the-art Human Mesh Recovery (HMR) model, multi-HMR, for a better understanding of scene geometry and human bodies. Our method then incorporates additional network heads to segment people, estimate dense correspondences via DensePose, and predict depth in human-centric environments, enabling a more comprehensive 3D reconstruction. By leveraging the outputs of our different heads, HAMSt3R produces a dense point map enriched with human semantic information in 3D. Unlike existing methods that rely on complex optimization pipelines, our approach is fully feed-forward and efficient, making it suitable for real-world applications. We evaluate our model on EgoHumans and EgoExo4D, two challenging benchmarks con taining diverse human-centric scenarios. Additionally, we validate its generalization to traditional multi-view stereo and multi-view pose regression tasks. Our results demonstrate that our method can reconstruct humans effectively while preserving strong performance in general 3D reconstruction tasks, bridging the gap between human and scene understanding in 3D vision.

Panoptic segmentation of 3D scenes, involving the segmentation and classification of object instances in a dense 3D reconstruction of a scene, is a challenging problem, especially when relying solely on unposed 2D images. Existing approaches typically leverage off-the-shelf models to extract per-frame 2D panoptic segmentations, before optimizing an implicit geometric representation (often based on NeRF) to integrate and fuse the 2D predictions. We argue that relying on 2D panoptic segmentation for a problem inherently 3D and multi-view is likely suboptimal as it fails to leverage the full potential of spatial relationships across views. In addition to requiring camera parameters, these approaches also necessitate computationally expensive test-time optimization for each scene. Instead, in this work, we propose a unified and integrated approach PanSt3R, which eliminates the need for test-time optimization by jointly predicting 3D geometry and multi-view panoptic segmentation in a single forward pass. Our approach builds upon recent advances in 3D reconstruction, specifically upon MUSt3R, a scalable multi-view version of DUSt3R, and enhances it with semantic awareness and multi-view panoptic segmentation capabilities. We additionally revisit the standard post-processing mask merging procedure and introduce a more principled approach for multi-view segmentation. We also introduce a simple method for generating novel-view predictions based on the predictions of PanSt3R and vanilla 3DGS. Overall, the proposed PanSt3R is conceptually simple, yet fast and scalable, and achieves state-of-the-art performance on several benchmarks, while being orders of magnitude faster than existing methods.

NAVER LABS Europe developed CroCo, a self-supervised pre-training method that leverages cross-view image completion to learn robust 3D geometric representations. This approach achieves superior performance on various 3D vision tasks such as depth estimation, optical flow, and relative camera pose, while demonstrating a trade-off in performance for high-level semantic tasks.

NAVER LABS Europe introduces MUSt3R, a groundbreaking multi-view 3D reconstruction framework that achieves state-of-the-art performance while maintaining 8-11 FPS processing speeds, representing a significant advance in both offline and online reconstruction capabilities through its novel symmetric architecture and multi-layer memory mechanism.

SPLADE-v3, developed by Naver Labs Europe, introduces enhanced training methodologies that notably improve the effectiveness of sparse neural retrieval models. The models achieve statistically significant gains over previous SPLADE versions and demonstrate strong competitiveness with cross-encoder re-rankers on diverse benchmarks.

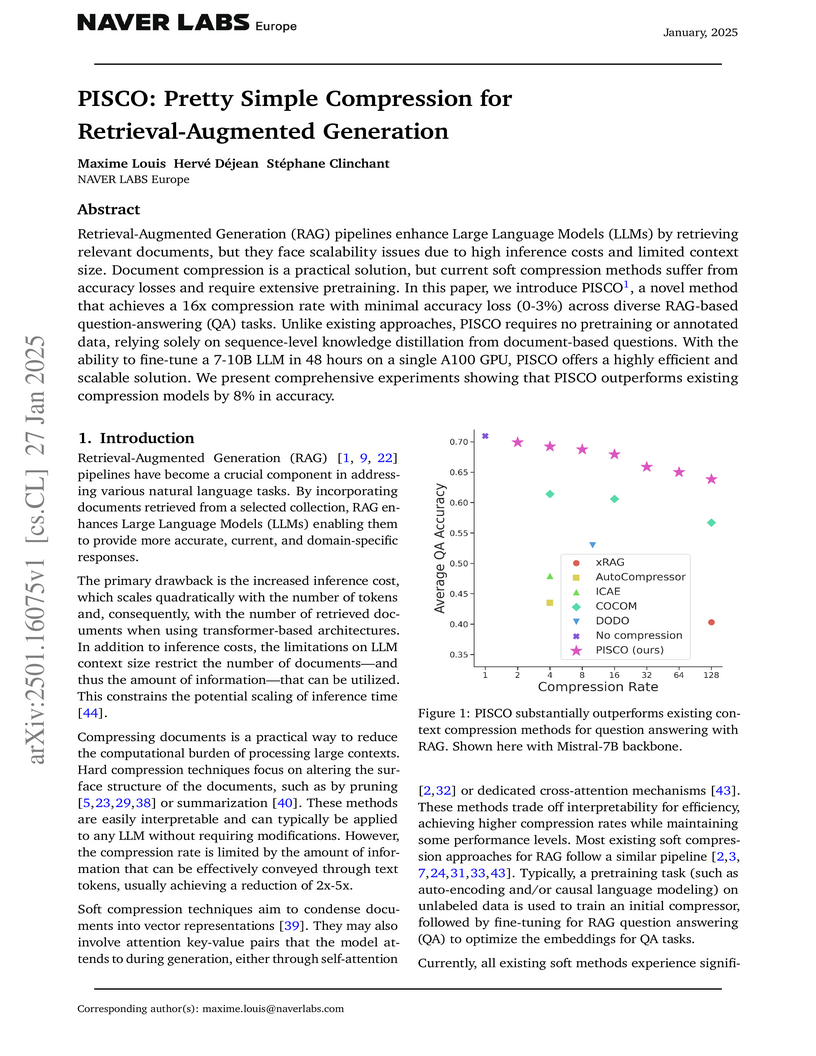

NAVER LABS researchers introduce PISCO, a remarkably efficient document compression framework for retrieval-augmented generation that achieves 16x compression with minimal accuracy loss (0-3%), while requiring only 48 hours of training on a single GPU - challenging conventional wisdom about the necessity of extensive pretraining for effective compression systems.

This work introduces CroCo v2, an enhanced self-supervised pre-training method that enables a generic Vision Transformer architecture to achieve state-of-the-art performance on dense geometric vision tasks like stereo matching and optical flow. It leverages millions of real-world image pairs, employs relative positional embeddings, and scales up the model, demonstrating that specialized architectural components are not strictly necessary for these tasks.

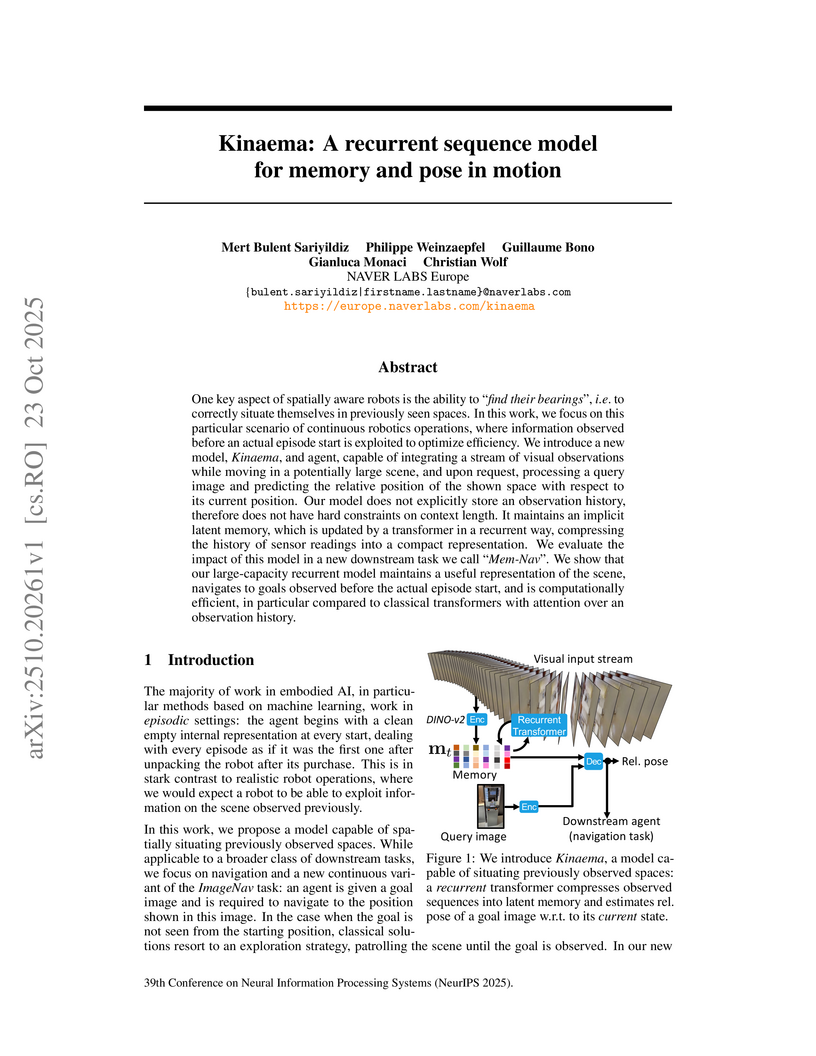

Kinaema, developed by NAVER LABS Europe, introduces a recurrent sequence model that enables embodied AI agents to form scalable, long-term spatial memory from continuous visual and odometry streams. The model outperforms prior memory baselines on memory-based relative pose estimation and improves navigation performance in challenging, continuous scenarios.

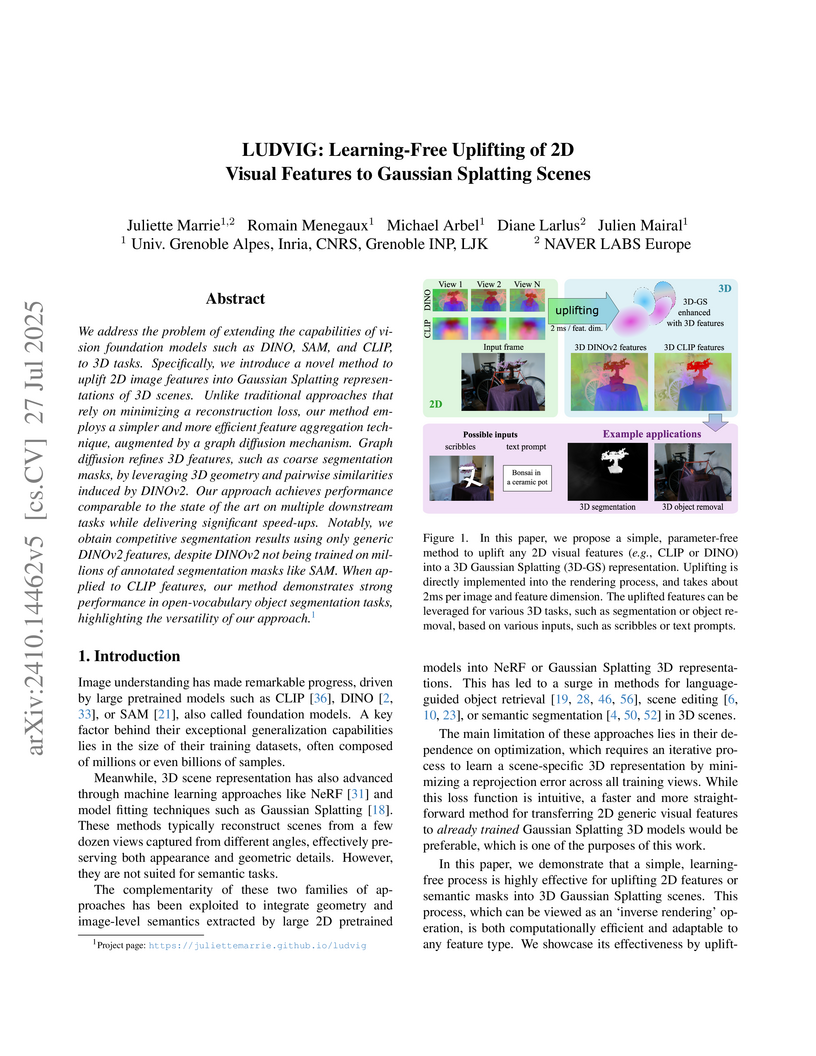

LUDVIG introduces a learning-free "inverse rendering" approach that directly aggregates 2D visual features from models like DINOv2 onto 3D Gaussian Splatting scenes, complemented by a 3D graph diffusion mechanism for refinement. This method from Univ. Grenoble Alpes and NAVER LABS Europe achieves performance comparable to or better than optimization-based techniques across various 3D semantic tasks, while accelerating feature generation and uplifting by 5 to 10 times.

Recent multi-teacher distillation methods have unified the encoders of

multiple foundation models into a single encoder, achieving competitive

performance on core vision tasks like classification, segmentation, and depth

estimation. This led us to ask: Could similar success be achieved when the pool

of teachers also includes vision models specialized in diverse tasks across

both 2D and 3D perception? In this paper, we define and investigate the problem

of heterogeneous teacher distillation, or co-distillation, a challenging

multi-teacher distillation scenario where teacher models vary significantly in

both (a) their design objectives and (b) the data they were trained on. We

explore data-sharing strategies and teacher-specific encoding, and introduce

DUNE, a single encoder excelling in 2D vision, 3D understanding, and 3D human

perception. Our model achieves performance comparable to that of its larger

teachers, sometimes even outperforming them, on their respective tasks.

Notably, DUNE surpasses MASt3R in Map-free Visual Relocalization with a much

smaller encoder.

MASt3R-SfM, from the authors at CentraleSupélec, presents a Structure-from-Motion pipeline that uses foundation models for 3D vision to reconstruct 3D scenes from unconstrained image collections. The system demonstrates improved robustness in challenging scenarios like limited overlap and pure rotation, while maintaining quasi-linear complexity with the number of images.

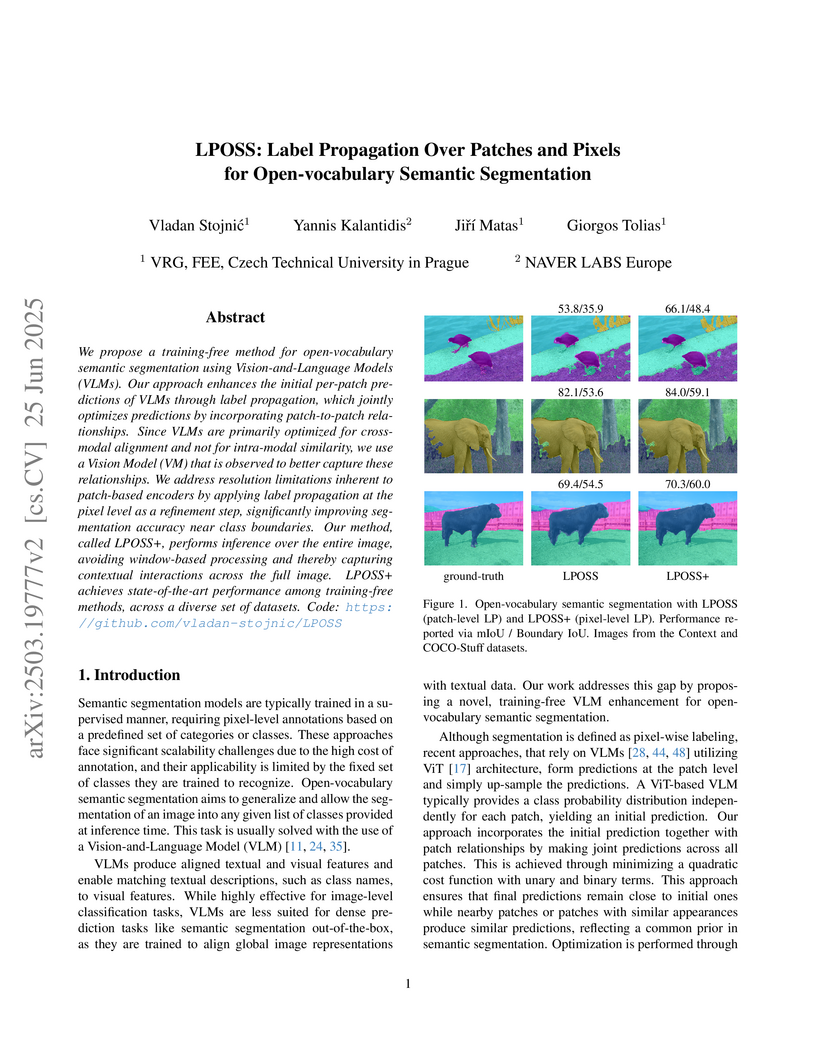

We propose a training-free method for open-vocabulary semantic segmentation using Vision-and-Language Models (VLMs). Our approach enhances the initial per-patch predictions of VLMs through label propagation, which jointly optimizes predictions by incorporating patch-to-patch relationships. Since VLMs are primarily optimized for cross-modal alignment and not for intra-modal similarity, we use a Vision Model (VM) that is observed to better capture these relationships. We address resolution limitations inherent to patch-based encoders by applying label propagation at the pixel level as a refinement step, significantly improving segmentation accuracy near class boundaries. Our method, called LPOSS+, performs inference over the entire image, avoiding window-based processing and thereby capturing contextual interactions across the full image. LPOSS+ achieves state-of-the-art performance among training-free methods, across a diverse set of datasets. Code: this https URL

Neural retrievers based on dense representations combined with Approximate Nearest Neighbors search have recently received a lot of attention, owing their success to distillation and/or better sampling of examples for training -- while still relying on the same backbone architecture. In the meantime, sparse representation learning fueled by traditional inverted indexing techniques has seen a growing interest, inheriting from desirable IR priors such as explicit lexical matching. While some architectural variants have been proposed, a lesser effort has been put in the training of such models. In this work, we build on SPLADE -- a sparse expansion-based retriever -- and show to which extent it is able to benefit from the same training improvements as dense models, by studying the effect of distillation, hard-negative mining as well as the Pre-trained Language Model initialization. We furthermore study the link between effectiveness and efficiency, on in-domain and zero-shot settings, leading to state-of-the-art results in both scenarios for sufficiently expressive models.

There are no more papers matching your filters at the moment.