Masked Image Modeling (MIM) offers a promising approach to self-supervised representation learning, however existing MIM models still lag behind the state-of-the-art. In this paper, we systematically analyze target representations, loss functions, and architectures, to introduce CAPI - a novel pure-MIM framework that relies on the prediction of latent clusterings. Our approach leverages a clustering-based loss, which is stable to train, and exhibits promising scaling properties. Our ViT-L backbone, CAPI, achieves 83.8% accuracy on ImageNet and 32.1% mIoU on ADE20K with simple linear probes, substantially outperforming previous MIM methods and approaching the performance of the current state-of-the-art, DINOv2. We release all our code and models.

16 Jan 2024

We investigate the approximation of high-dimensional target measures as low-dimensional updates of a dominating reference measure. This approximation class replaces the associated density with the composition of: (i) a feature map that identifies the leading principal components or features of the target measure, relative to the reference, and (ii) a low-dimensional profile function. When the reference measure satisfies a subspace ϕ-Sobolev inequality, we construct a computationally tractable approximation that yields certifiable error guarantees with respect to the Amari α-divergences. Our construction proceeds in two stages. First, for any feature map and any α-divergence, we obtain an analytical expression for the optimal profile function. Second, for linear feature maps, the principal features are obtained from eigenvectors of a matrix involving gradients of the log-density. Neither step requires explicit access to normalizing constants. Notably, by leveraging the ϕ-Sobolev inequalities, we demonstrate that these features universally certify approximation errors across the range of α-divergences α∈(0,1]. We then propose an application to Bayesian inverse problems and provide an analogous construction with approximation guarantees that hold in expectation over the data. We conclude with an extension of the proposed dimension reduction strategy to nonlinear feature maps.

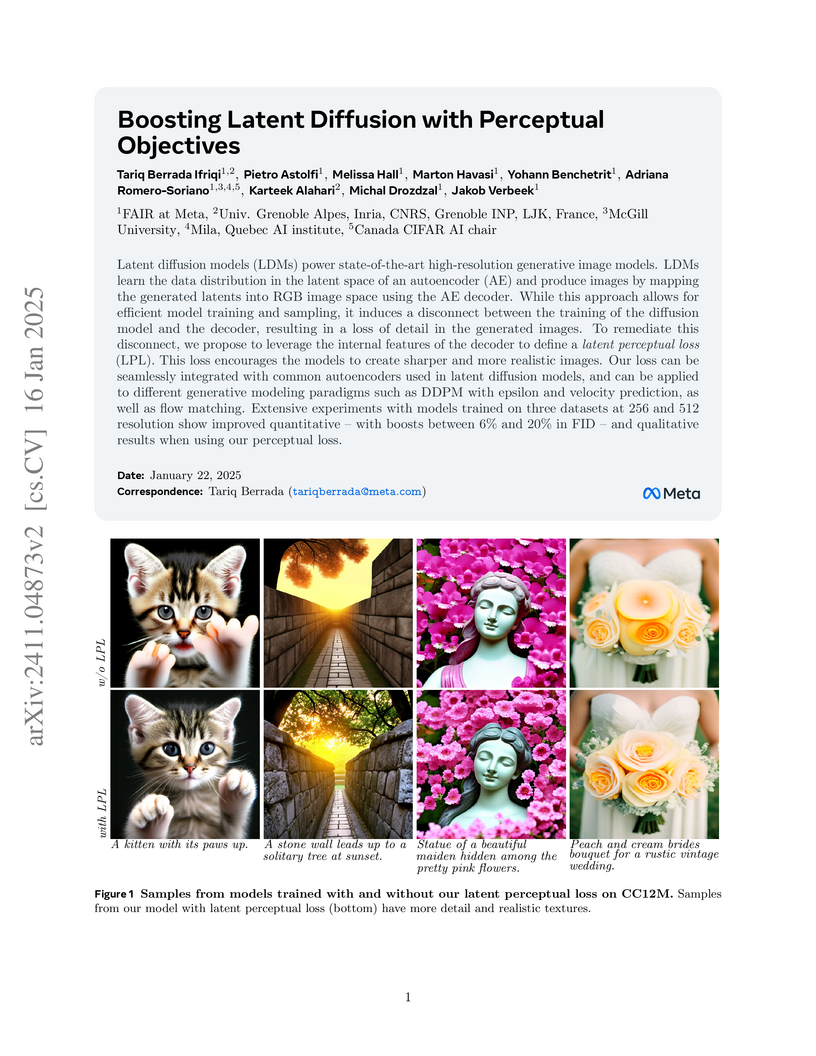

Latent Perceptual Loss (LPL) enhances the perceptual quality of images generated by latent diffusion models by introducing a training objective that leverages intermediate features from the autoencoder's decoder. This method, developed by researchers at FAIR at Meta and academic institutions, consistently improves metrics like FID and CLIPScore while producing sharper, more detailed images.

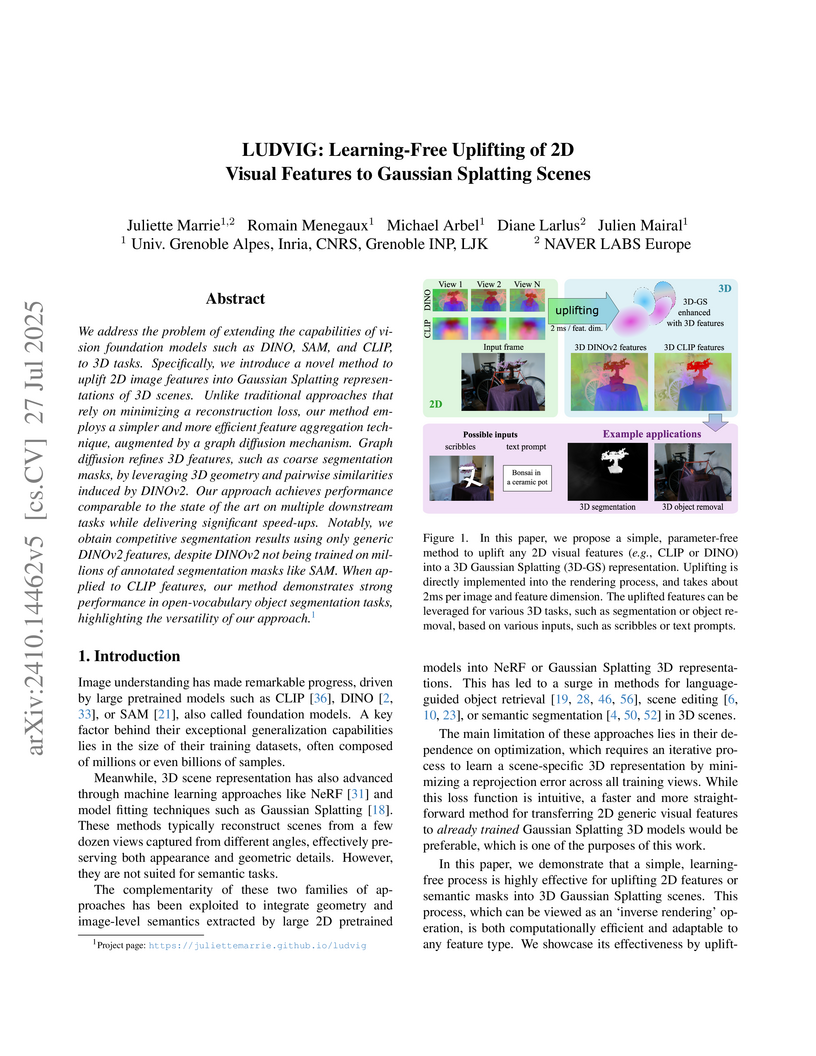

LUDVIG introduces a learning-free "inverse rendering" approach that directly aggregates 2D visual features from models like DINOv2 onto 3D Gaussian Splatting scenes, complemented by a 3D graph diffusion mechanism for refinement. This method from Univ. Grenoble Alpes and NAVER LABS Europe achieves performance comparable to or better than optimization-based techniques across various 3D semantic tasks, while accelerating feature generation and uplifting by 5 to 10 times.

07 Oct 2024

We propose an extreme dimension reduction method extending the Extreme-PLS approach to the case where the covariate lies in a possibly infinite-dimensional Hilbert space. The ideas are partly borrowed from both Partial Least-Squares and Sliced Inverse Regression techniques. As such, the method relies on the projection of the covariate onto a subspace and maximizes the covariance between its projection and the response conditionally to an extreme event driven by a random threshold to capture the tail-information. The covariate and the heavy-tailed response are supposed to be linked through a non-linear inverse single-index model and our goal is to infer the index in this regression framework. We propose a new family of estimators and show its asymptotic consistency with convergence rates under the model. Assuming mild conditions on the noise, most of the assumptions are stated in terms of regular variation unlike the standard literature on SIR and single-index regression. Finally, our results are illustrated on a finite-sample study with synthetic functional data as well as on real data from the financial realm, highlighting the effectiveness of the dimension reduction for estimating extreme risk measures.

Simulation-based inference (SBI) is transforming experimental sciences by enabling parameter estimation in complex non-linear models from simulated data. A persistent challenge, however, is model misspecification: simulators are only approximations of reality, and mismatches between simulated and real data can yield biased or overconfident posteriors. We address this issue by introducing Flow Matching Corrected Posterior Estimation (FMCPE), a framework that leverages the flow matching paradigm to refine simulation-trained posterior estimators using a small set of real calibration samples. Our approach proceeds in two stages: first, a posterior approximator is trained on abundant simulated data; second, flow matching transports its predictions toward the true posterior supported by real observations, without requiring explicit knowledge of the misspecification. This design enables FMCPE to combine the scalability of SBI with robustness to distributional shift. Across synthetic benchmarks and real-world datasets, we show that our proposal consistently mitigates the effects of misspecification, delivering improved inference accuracy and uncertainty calibration compared to standard SBI baselines, while remaining computationally efficient.

Attention operator has been widely used as a basic brick in visual understanding since it provides some flexibility through its adjustable kernels. However, this operator suffers from inherent limitations: (1) the attention kernel is not discriminative enough, resulting in high redundancy, and (2) the complexity in computation and memory is quadratic in the sequence length. In this paper, we propose a novel attention operator, called Lightweight Structure-aware Attention (LiSA), which has a better representation power with log-linear complexity. Our operator transforms the attention kernels to be more discriminative by learning structural patterns. These structural patterns are encoded by exploiting a set of relative position embeddings (RPEs) as multiplicative weights, thereby improving the representation power of the attention kernels. Additionally, the RPEs are approximated to obtain log-linear complexity. Our experiments and analyses demonstrate that the proposed operator outperforms self-attention and other existing operators, achieving state-of-the-art results on ImageNet-1K and other downstream tasks such as video action recognition on Kinetics-400, object detection \& instance segmentation on COCO, and semantic segmentation on ADE-20K.

LCR-Net++ introduces an end-to-end architecture for simultaneously detecting and estimating the 2D and 3D poses of multiple individuals in unconstrained natural images. The system, building on previous work, establishes new state-of-the-art results for multi-person 3D pose estimation on datasets like MuPoTS-3D and improves 3D performance on Human3.6M.

18 Jun 2024

We study a theoretical and algorithmic framework for structured prediction in

the online learning setting. The problem of structured prediction, i.e.

estimating function where the output space lacks a vectorial structure, is well

studied in the literature of supervised statistical learning. We show that our

algorithm is a generalisation of optimal algorithms from the supervised

learning setting, and achieves the same excess risk upper bound also when data

are not i.i.d. Moreover, we consider a second algorithm designed especially for

non-stationary data distributions, including adversarial data. We bound its

stochastic regret in function of the variation of the data distributions.

Guidance techniques are commonly used in diffusion and flow models to improve image quality and input consistency for conditional generative tasks such as class-conditional and text-to-image generation. In particular, classifier-free guidance (CFG) is the most widely adopted guidance technique. It results, however, in trade-offs across quality, diversity and consistency: improving some at the expense of others. While recent work has shown that it is possible to disentangle these factors to some extent, such methods come with an overhead of requiring an additional (weaker) model, or require more forward passes per sampling step. In this paper, we propose Entropy Rectifying Guidance (ERG), a simple and effective guidance method based on inference-time changes in the attention mechanism of state-of-the-art diffusion transformer architectures, which allows for simultaneous improvements over image quality, diversity and prompt consistency. ERG is more general than CFG and similar guidance techniques, as it extends to unconditional sampling. We show that ERG results in significant improvements in various tasks, including text-to-image, class-conditional and unconditional image generation. We also show that ERG can be seamlessly combined with other recent guidance methods such as CADS and APG, further improving generation results.

Ample empirical evidence in deep neural network training suggests that a variety of optimizers tend to find nearly global optima. In this article, we adopt the reversed perspective that convergence to an arbitrary point is assumed rather than proven, focusing on the consequences of this assumption. From this viewpoint, in line with recent advances on the edge-of-stability phenomenon, we argue that different optimizers effectively act as eigenvalue filters determined by their hyperparameters. Specifically, the standard gradient descent method inherently avoids the sharpest minima, whereas Sharpness-Aware Minimization (SAM) algorithms go even further by actively favoring wider basins. Inspired by these insights, we propose two novel algorithms that exhibit enhanced eigenvalue filtering, effectively promoting wider minima. Our theoretical analysis leverages a generalized Hadamard--Perron stable manifold theorem and applies to general semialgebraic C2 functions, without requiring additional non-degeneracy conditions or global Lipschitz bound assumptions. We support our conclusions with numerical experiments on feed-forward neural networks.

Dense ground displacement measurements are crucial for geological studies but

are impractical to collect directly. Traditionally, displacement fields are

estimated using patch matching on optical satellite images from different

acquisition times. While deep learning-based optical flow models are promising,

their adoption in ground deformation analysis is hindered by challenges such as

the absence of real ground truth, the need for sub-pixel precision, and

temporal variations due to geological or anthropogenic changes. In particular,

we identify that deep learning models relying on explicit correlation layers

struggle at estimating small displacements in real-world conditions. Instead,

we propose a model that employs iterative refinements with explicit warping

layers and a correlation-independent backbone, enabling sub-pixel precision.

Additionally, a non-convex variant of Total Variation regularization preserves

fault-line sharpness while maintaining smoothness elsewhere. Our model

significantly outperforms widely used geophysics methods on semi-synthetic

benchmarks and generalizes well to challenging real-world scenarios captured by

both medium- and high-resolution sensors. Project page:

this https URL

Studies on the automatic processing of 3D human pose data have flourished in the recent past. In this paper, we are interested in the generation of plausible and diverse future human poses following an observed 3D pose sequence. Current methods address this problem by injecting random variables from a single latent space into a deterministic motion prediction framework, which precludes the inherent multi-modality in human motion generation. In addition, previous works rarely explore the use of attention to select which frames are to be used to inform the generation process up to our knowledge. To overcome these limitations, we propose Hierarchical Transformer Dynamical Variational Autoencoder, HiT-DVAE, which implements auto-regressive generation with transformer-like attention mechanisms. HiT-DVAE simultaneously learns the evolution of data and latent space distribution with time correlated probabilistic dependencies, thus enabling the generative model to learn a more complex and time-varying latent space as well as diverse and realistic human motions. Furthermore, the auto-regressive generation brings more flexibility on observation and prediction, i.e. one can have any length of observation and predict arbitrary large sequences of poses with a single pre-trained model. We evaluate the proposed method on HumanEva-I and Human3.6M with various evaluation methods, and outperform the state-of-the-art methods on most of the metrics.

Epilepsy represents the most prevalent neurological disease in the world. One-third of people suffering from mesial temporal lobe epilepsy (MTLE) exhibit drug resistance, urging the need to develop new treatments. A key part in anti-seizure medication (ASM) development is the capability of detecting and quantifying epileptic seizures occurring in electroencephalogram (EEG) signals, which is crucial for treatment efficacy evaluation. In this study, we introduced a seizure detection pipeline based on deep learning models applied to raw EEG signals. This pipeline integrates: a new pre-processing technique which segments continuous raw EEG signals without prior distinction between seizure and seizure-free activities; a post-processing algorithm developed to reassemble EEG segments and allow the identification of seizures start/end; and finally, a new evaluation procedure based on a strict seizure events comparison between predicted and real labels. Models training have been performed using a data splitting strategy which addresses the potential for data leakage. We demonstrated the fundamental differences between a seizure classification and a seizure detection task and showed the differences in performance between the two tasks. Finally, we demonstrated the generalization capabilities across species of our best architecture, combining a Convolutional Neural Network and a Transformer encoder. The model was trained on animal EEGs and tested on human EEGs with a F1-score of 93% on a balanced Bonn dataset.

11 Aug 2025

CNRSMax Planck Institute for Intelligent Systems

CNRSMax Planck Institute for Intelligent Systems University of Amsterdam

University of Amsterdam Carnegie Mellon UniversityUniversity of Edinburgh

Carnegie Mellon UniversityUniversity of Edinburgh Google Research

Google Research KU Leuven

KU Leuven Université Paris-SaclayTU Darmstadt

Université Paris-SaclayTU Darmstadt Inria

Inria CEAUniversity of TübingenLJKIMECBMW GroupINSERMHelmholtz-Zentrum Dresden-RossendorfUniversity of the Bundeswehr MunichGrenoble-INPRobert Bosch GmbHHelmholtz AIVIB-Neuroelectronics Research FlandersHertie Institute for AI in Brain HealthappliedAI Institute for EuropeUniversit

Grenoble Alpes

CEAUniversity of TübingenLJKIMECBMW GroupINSERMHelmholtz-Zentrum Dresden-RossendorfUniversity of the Bundeswehr MunichGrenoble-INPRobert Bosch GmbHHelmholtz AIVIB-Neuroelectronics Research FlandersHertie Institute for AI in Brain HealthappliedAI Institute for EuropeUniversit

Grenoble AlpesScientists and engineers use simulators to model empirically observed phenomena. However, tuning the parameters of a simulator to ensure its outputs match observed data presents a significant challenge. Simulation-based inference (SBI) addresses this by enabling Bayesian inference for simulators, identifying parameters that match observed data and align with prior knowledge. Unlike traditional Bayesian inference, SBI only needs access to simulations from the model and does not require evaluations of the likelihood function. In addition, SBI algorithms do not require gradients through the simulator, allow for massive parallelization of simulations, and can perform inference for different observations without further simulations or training, thereby amortizing inference. Over the past years, we have developed, maintained, and extended sbi, a PyTorch-based package that implements Bayesian SBI algorithms based on neural networks. The sbi toolkit implements a wide range of inference methods, neural network architectures, sampling methods, and diagnostic tools. In addition, it provides well-tested default settings, but also offers flexibility to fully customize every step of the simulation-based inference workflow. Taken together, the sbi toolkit enables scientists and engineers to apply state-of-the-art SBI methods to black-box simulators, opening up new possibilities for aligning simulations with empirically observed data.

In this paper, we introduce a new functional point of view on bilevel

optimization problems for machine learning, where the inner objective is

minimized over a function space. These types of problems are most often solved

by using methods developed in the parametric setting, where the inner objective

is strongly convex with respect to the parameters of the prediction function.

The functional point of view does not rely on this assumption and notably

allows using over-parameterized neural networks as the inner prediction

function. We propose scalable and efficient algorithms for the functional

bilevel optimization problem and illustrate the benefits of our approach on

instrumental regression and reinforcement learning tasks.

With the increasing presence of robots in our every-day environments,

improving their social skills is of utmost importance. Nonetheless, social

robotics still faces many challenges. One bottleneck is that robotic behaviors

need to be often adapted as social norms depend strongly on the environment.

For example, a robot should navigate more carefully around patients in a

hospital compared to workers in an office. In this work, we investigate

meta-reinforcement learning (meta-RL) as a potential solution. Here, robot

behaviors are learned via reinforcement learning where a reward function needs

to be chosen so that the robot learns an appropriate behavior for a given

environment. We propose to use a variational meta-RL procedure that quickly

adapts the robots' behavior to new reward functions. As a result, given a new

environment different reward functions can be quickly evaluated and an

appropriate one selected. The procedure learns a vectorized representation for

reward functions and a meta-policy that can be conditioned on such a

representation. Given observations from a new reward function, the procedure

identifies its representation and conditions the meta-policy to it. While

investigating the procedures' capabilities, we realized that it suffers from

posterior collapse where only a subset of the dimensions in the representation

encode useful information resulting in a reduced performance. Our second

contribution, a radial basis function (RBF) layer, partially mitigates this

negative effect. The RBF layer lifts the representation to a higher dimensional

space, which is more easily exploitable for the meta-policy. We demonstrate the

interest of the RBF layer and the usage of meta-RL for social robotics on four

robotic simulation tasks.

Popular approaches for few-shot classification consist of first learning a

generic data representation based on a large annotated dataset, before adapting

the representation to new classes given only a few labeled samples. In this

work, we propose a new strategy based on feature selection, which is both

simpler and more effective than previous feature adaptation approaches. First,

we obtain a multi-domain representation by training a set of semantically

different feature extractors. Then, given a few-shot learning task, we use our

multi-domain feature bank to automatically select the most relevant

representations. We show that a simple non-parametric classifier built on top

of such features produces high accuracy and generalizes to domains never seen

during training, which leads to state-of-the-art results on MetaDataset and

improved accuracy on mini-ImageNet.

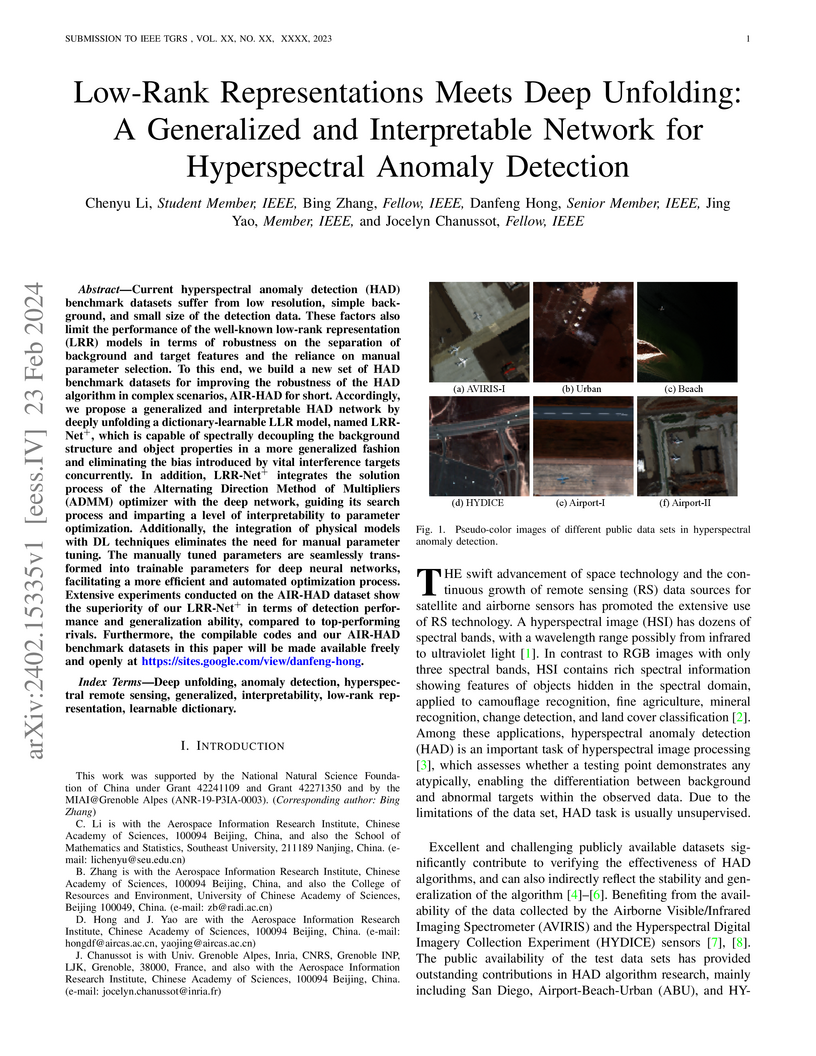

Current hyperspectral anomaly detection (HAD) benchmark datasets suffer from low resolution, simple background, and small size of the detection data. These factors also limit the performance of the well-known low-rank representation (LRR) models in terms of robustness on the separation of background and target features and the reliance on manual parameter selection. To this end, we build a new set of HAD benchmark datasets for improving the robustness of the HAD algorithm in complex scenarios, AIR-HAD for short. Accordingly, we propose a generalized and interpretable HAD network by deeply unfolding a dictionary-learnable LLR model, named LRR-Net+, which is capable of spectrally decoupling the background structure and object properties in a more generalized fashion and eliminating the bias introduced by vital interference targets concurrently. In addition, LRR-Net+ integrates the solution process of the Alternating Direction Method of Multipliers (ADMM) optimizer with the deep network, guiding its search process and imparting a level of interpretability to parameter optimization. Additionally, the integration of physical models with DL techniques eliminates the need for manual parameter tuning. The manually tuned parameters are seamlessly transformed into trainable parameters for deep neural networks, facilitating a more efficient and automated optimization process. Extensive experiments conducted on the AIR-HAD dataset show the superiority of our LRR-Net+ in terms of detection performance and generalization ability, compared to top-performing rivals. Furthermore, the compilable codes and our AIR-HAD benchmark datasets in this paper will be made available freely and openly at \url{this https URL}.

Convolutional neural networks (CNNs) have massively impacted visual recognition in 2D images, and are now ubiquitous in state-of-the-art approaches. CNNs do not easily extend, however, to data that are not represented by regular grids, such as 3D shape meshes or other graph-structured data, to which traditional local convolution operators do not directly apply. To address this problem, we propose a novel graph-convolution operator to establish correspondences between filter weights and graph neighborhoods with arbitrary connectivity. The key novelty of our approach is that these correspondences are dynamically computed from features learned by the network, rather than relying on predefined static coordinates over the graph as in previous work. We obtain excellent experimental results that significantly improve over previous state-of-the-art shape correspondence results. This shows that our approach can learn effective shape representations from raw input coordinates, without relying on shape descriptors.

There are no more papers matching your filters at the moment.