
A multi-speaker text-to-speech system lets users generate custom voices from natural language prompts derived from listener impressions. The system uses Low-Rank Adaptation (LoRA) for efficient language model fine-tuning and a hybrid discriminative-generative approach with Flow Matching to synthesize speaker embeddings, yielding high-fidelity, controllable speech.

Researchers from Charles University and NICT developed 'Whisper-Streaming,' an adaptation of OpenAI's Whisper model, to provide real-time, low-latency automatic speech recognition and translation, achieving average latencies of 3.3 seconds for English and 4.4-4.8 seconds for German/Czech ASR in live settings.

Researchers from AI4Bharat and other institutions explored how large language models (LLMs) process non-Roman script languages, revealing that LLMs implicitly leverage Romanization as an intermediate step. This internal Romanization facilitates consistent semantic encoding across native and Romanized scripts and enables target language representations to emerge earlier in the model's layers.

Shanghai Jiao Tong University

Nagoya University

Meta

Microsoft

Osaka University

Osaka University

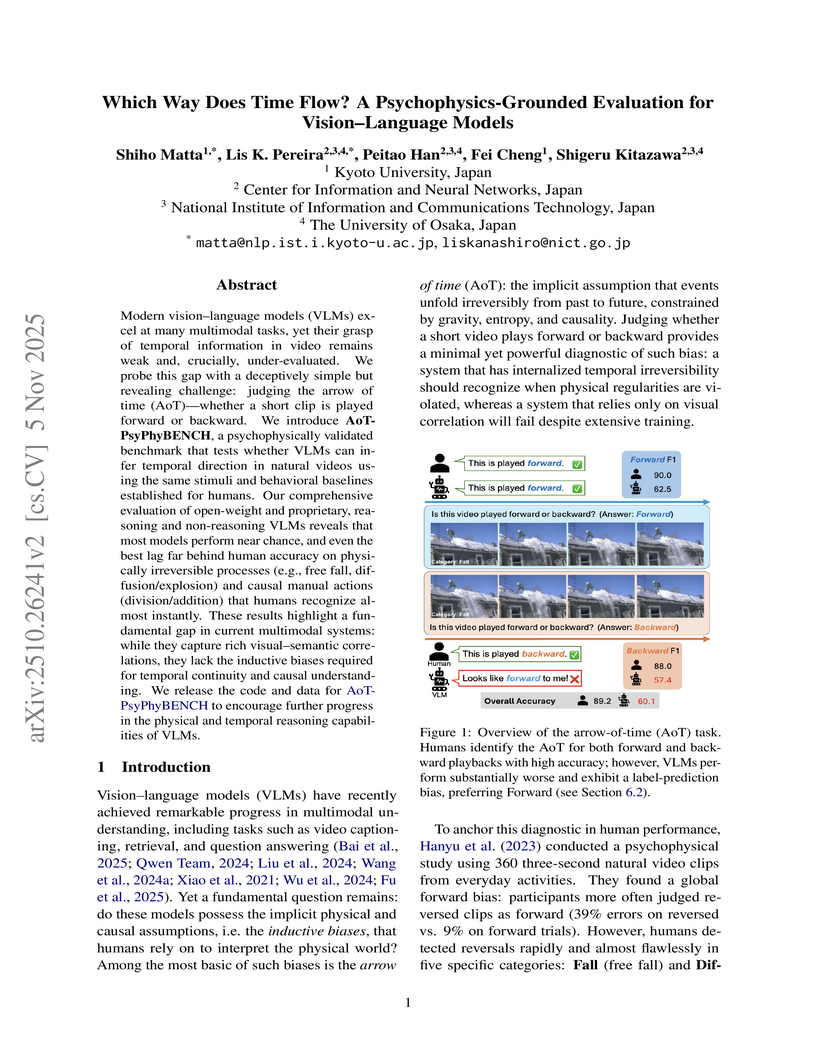

Kyoto University

Sorbonne Université

the University of Tokyo

Mila - Quebec AI Institute

Waseda University