Oulu University

This research from Harokopio University of Athens, National Technical University of Athens, Oulu University, Sejong University, OneSource, and ICTFICIAL OY proposes and evaluates an open-source solution for deploying demanding Extended Reality (XR) services across multiple Kubernetes clusters. The approach combines Cluster API for multi-cluster management and Liqo for cross-cluster networking, demonstrating efficient provisioning and minimal overhead for a video streaming XR use case.

The rapid growth of wireless communications has created a significant demand for high throughput, seamless connectivity, and extremely low latency. To meet these goals, a novel technology -- stacked intelligent metasurfaces (SIMs) -- has been developed to perform signal processing by directly utilizing electromagnetic waves, thus achieving incredibly fast computing speed while reducing hardware requirements. In this article, we provide an overview of SIM technology, including its underlying hardware, benefits, and exciting applications in wireless communications. Specifically, we examine the utilization of SIMs in realizing transmit beamforming and semantic encoding in the wave domain. Additionally, channel estimation in SIM-aided communication systems is discussed. Finally, we highlight potential research opportunities and identify key challenges for deploying SIMs in wireless networks to motivate future research.

Classical medium access control (MAC) protocols are interpretable, yet their task-agnostic control signaling messages (CMs) are ill-suited for emerging mission-critical applications. By contrast, neural network (NN) based protocol models (NPMs) learn to generate task-specific CMs, but their rationale and impact lack interpretability. To fill this void, in this article we propose, for the first time, a semantic protocol model (SPM) constructed by transforming an NPM into an interpretable symbolic graph written in the probabilistic logic programming language (ProbLog). This transformation is viable by extracting and merging common CMs and their connections while treating the NPM as a CM generator. By extensive simulations, we corroborate that the SPM tightly approximates its original NPM while occupying only 0.02% memory. By leveraging its interpretability and memory-efficiency, we demonstrate several SPM-enabled applications such as SPM reconfiguration for collision-avoidance, as well as comparing different SPMs via semantic entropy calculation and storing multiple SPMs to cope with non-stationary environments.

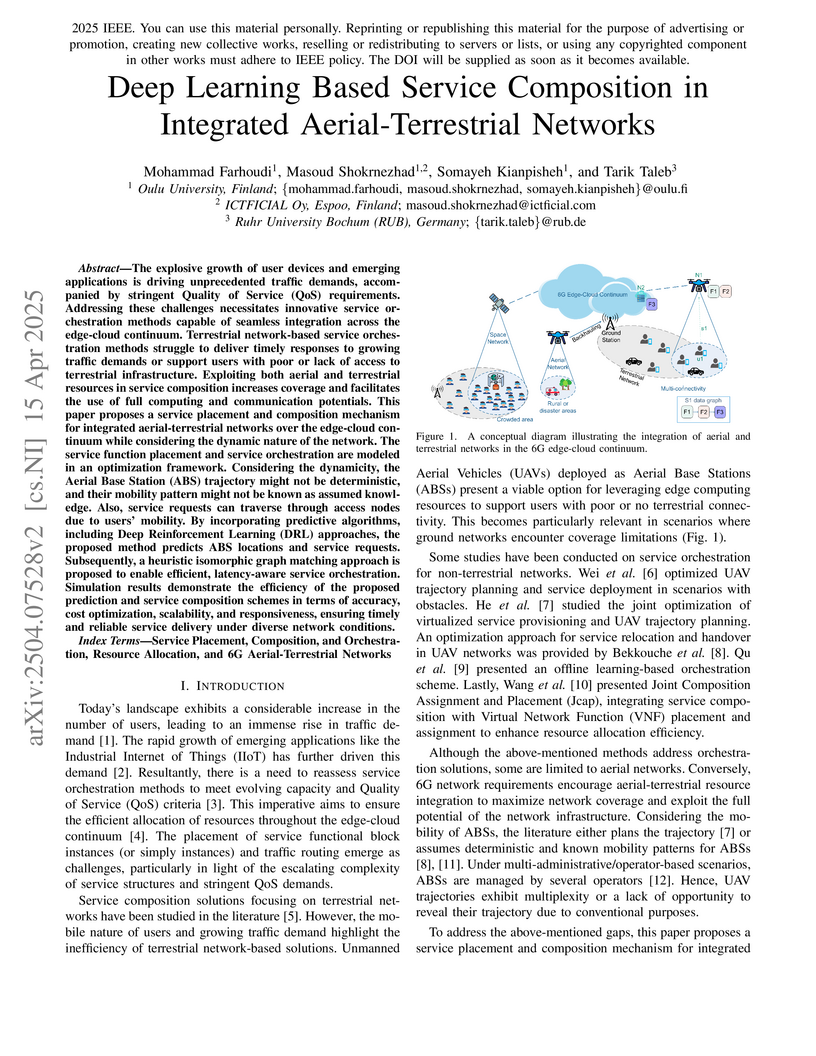

The explosive growth of user devices and emerging applications is driving unprecedented traffic demands, accompanied by stringent Quality of Service (QoS) requirements. Addressing these challenges necessitates innovative service orchestration methods capable of seamless integration across the edge-cloud continuum. Terrestrial network-based service orchestration methods struggle to deliver timely responses to growing traffic demands or to support users with poor or no access to terrestrial infrastructure. Exploiting both aerial and terrestrial resources in service composition increases coverage and facilitates the use of the full computing and communication potential. This paper proposes a service placement and composition mechanism for integrated aerial-terrestrial networks over the edge-cloud continuum while considering the dynamic nature of the network. The service function placement and service orchestration are modeled in an optimization framework. Given this dynamicity, the Aerial Base Station (ABS) trajectories might not be deterministic, and their mobility patterns might not be known in advance. Also, service requests can traverse access nodes due to users' mobility. By incorporating predictive algorithms, including Deep Reinforcement Learning (DRL) approaches, the proposed method predicts ABS locations and service requests. Subsequently, a heuristic isomorphic graph matching approach is proposed to enable efficient, latency-aware service orchestration. Simulation results demonstrate the efficiency of the proposed prediction and service composition schemes in terms of accuracy, cost optimization, scalability, and responsiveness, ensuring timely and reliable service delivery under diverse network conditions.

6G networks aim to achieve global coverage, massive connectivity, and ultra-stringent requirements. Space-Air-Ground Integrated Networks (SAGINs) and Semantic Communication (SemCom) are essential for realizing these goals, yet they introduce considerable complexity in resource orchestration. Drawing inspiration from research in robotics, a viable solution to manage this complexity is the application of Large Language Models (LLMs). Although the use of LLMs in network orchestration has recently gained attention, existing solutions have not sufficiently addressed LLM hallucinations or their adaptation to network dynamics. To address this gap, this paper proposes a framework called Autonomous Reinforcement Coordination (ARC) for a SemCom-enabled SAGIN. In this framework, an LLM-based Retrieval-Augmented Generator (RAG) monitors services, users, and resources and processes the collected data, while a Hierarchical Action Planner (HAP) orchestrates resources. ARC decomposes orchestration into two tiers, utilizing LLMs for high-level planning and Reinforcement Learning (RL) agents for low-level decision-making, in alignment with the Mixture of Experts (MoE) concept. The LLMs utilize Chain-of-Thought (CoT) reasoning for few-shot learning, empowered by contrastive learning, while the RL agents employ replay buffer management for continual learning, thereby achieving efficiency, accuracy, and adaptability. Simulations are provided to demonstrate the effectiveness of ARC, along with a comprehensive discussion on potential future research directions to enhance and upgrade ARC.

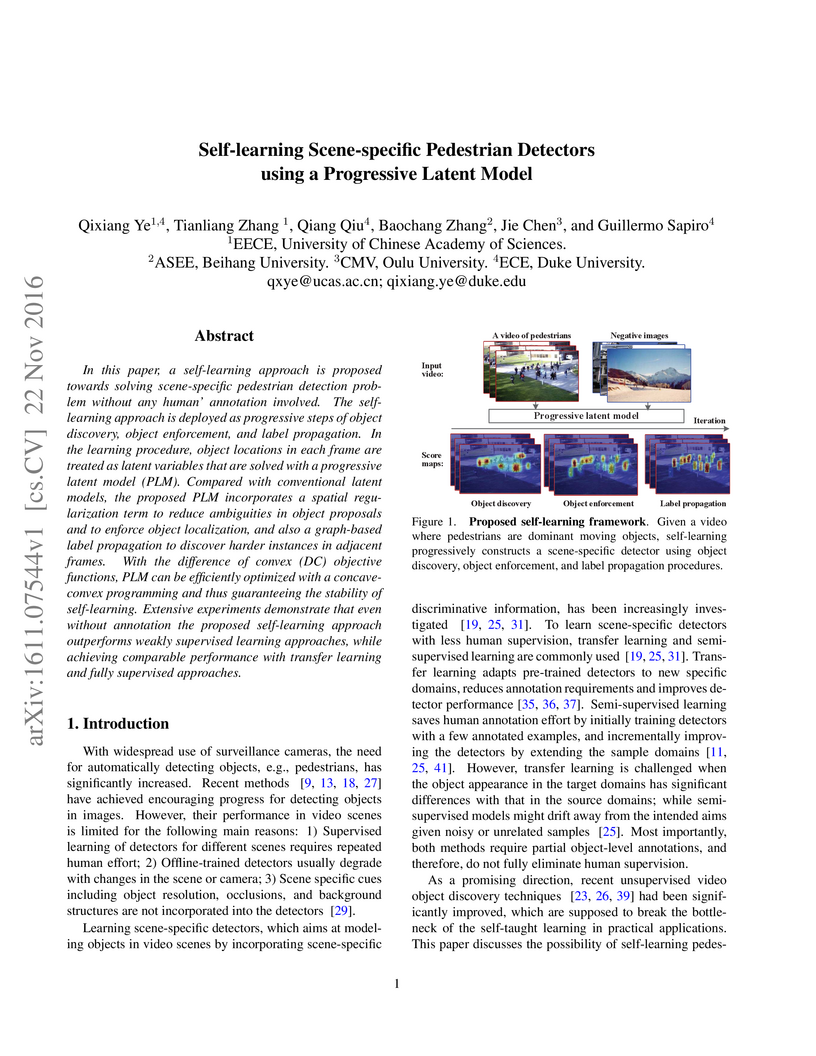

In this paper, a self-learning approach is proposed for solving the scene-specific pedestrian detection problem without any human annotation involved. The self-learning approach is deployed as progressive steps of object discovery, object enforcement, and label propagation. In the learning procedure, object locations in each frame are treated as latent variables that are solved with a progressive latent model (PLM). Compared with conventional latent models, the proposed PLM incorporates a spatial regularization term to reduce ambiguities in object proposals and to enforce object localization, as well as a graph-based label propagation to discover harder instances in adjacent frames. With difference of convex (DC) objective functions, PLM can be efficiently optimized with concave-convex programming, thus guaranteeing the stability of self-learning. Extensive experiments demonstrate that even without annotation the proposed self-learning approach outperforms weakly supervised learning approaches, while achieving performance comparable to transfer learning and fully supervised approaches.

Along with the increasing demand for latency-sensitive services and applications, the Deterministic Network (DetNet) concept has recently been proposed to investigate deterministic latency assurance for services with bounded latency requirements in 5G edge networks. Network Function Virtualization (NFV) technology enables Internet Service Providers (ISPs) to flexibly place Virtual Network Functions (VNFs), achieving performance and cost benefits. Service Function Chains (SFCs) are then formed by steering traffic through a series of VNF instances in a predefined order. Moreover, the required network resources and the placement of VNF instances along an SFC should be optimized to meet the deterministic latency requirements. Therefore, it is important for ISPs to determine an optimal SFC deployment strategy that ensures network performance while improving network revenue. In this paper, we jointly investigate resource allocation and SFC placement in 5G edge networks for deterministic latency assurance. We formulate this problem as a mathematical programming model with the objective of maximizing the overall network profit for the ISP. Furthermore, a novel Deterministic SFC deployment (Det-SFCD) algorithm is proposed to efficiently embed SFC requests with deterministic latency assurance. The performance evaluation results show that the proposed algorithm provides better performance in terms of SFC request acceptance rate, network cost reduction, and network resource efficiency compared with the benchmark strategy.

Novel applications such as the Metaverse have highlighted the potential of beyond 5G (B5G) networks, which necessitate ultra-low latency communications and massive broadband connections. Moreover, the burgeoning demand for such services with ever-fluctuating users has created a need for greater attention to service continuity in B5G. To enable these services, the edge-cloud paradigm is a potential solution to harness cloud capacity and effectively manage users in real time as they move across the network. However, edge-cloud networks face a multitude of limitations, including networking and computing resources that must be collectively managed to unlock their full potential. This paper addresses the joint problem of service placement and resource allocation in a network-cloud integrated environment while considering capacity constraints, dynamic users, and end-to-end delays. We present a non-linear programming model that formulates the optimization problem with the objective of minimizing overall cost while enhancing latency. Next, to address the problem, we introduce a DDQL-based technique using RNNs to predict user behavior, empowered by a water-filling-based algorithm for service placement. The proposed framework accommodates the dynamic nature of users, the placement of services that mandate ultra-low latency in B5G, and service continuity when users migrate from one location to another. Simulation results show that our solution provides timely responses that optimize the network's potential, offering a scalable and efficient placement.

28 May 2024

To enable wireless federated learning (FL) in communication resource-constrained networks, two communication schemes, i.e., digital and analog ones, are effective solutions. In this paper, we quantitatively compare these two techniques, highlighting their essential differences as well as their respective suitable scenarios. We first examine both digital and analog transmission schemes, together with a unified and fair comparison framework under imbalanced device sampling, strict latency targets, and transmit power constraints. A universal convergence analysis under various imperfections is established for evaluating the performance of FL over wireless networks. These analytical results reveal that the fundamental difference between digital and analog communications lies in whether communication and computation are jointly designed or not. The digital scheme decouples the communication design from FL computing tasks, making it difficult to support uplink transmission from massive devices with limited bandwidth, and hence the performance is mainly communication-limited. In contrast, analog communication allows over-the-air computation (AirComp) and achieves better spectrum utilization. However, the computation-oriented analog transmission reduces power efficiency, and its performance is sensitive to computation errors from imperfect channel state information (CSI). Furthermore, device sampling for both schemes is optimized, and differences in sampling optimization are analyzed. Numerical results verify the theoretical analysis and affirm the superior performance of the sampling optimization.

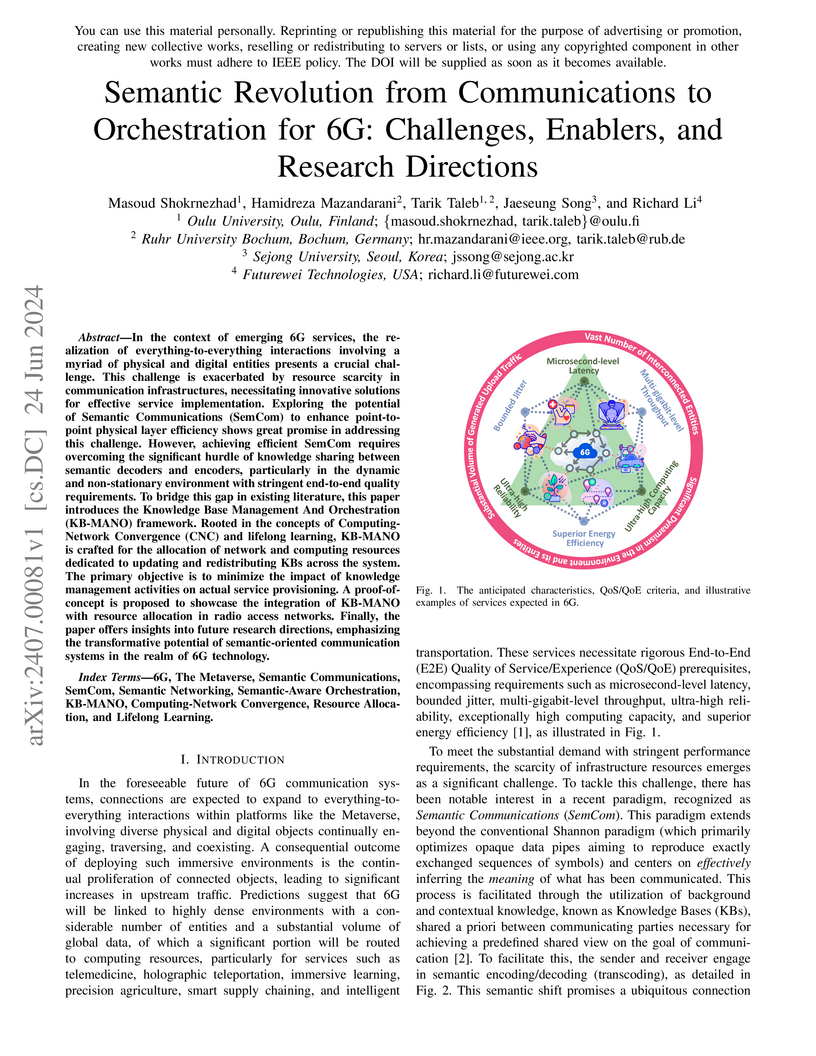

In the context of emerging 6G services, the realization of everything-to-everything interactions involving a myriad of physical and digital entities presents a crucial challenge. This challenge is exacerbated by resource scarcity in communication infrastructures, necessitating innovative solutions for effective service implementation. Exploring the potential of Semantic Communications (SemCom) to enhance point-to-point physical layer efficiency shows great promise in addressing this challenge. However, achieving efficient SemCom requires overcoming the significant hurdle of knowledge sharing between semantic decoders and encoders, particularly in the dynamic and non-stationary environment with stringent end-to-end quality requirements. To bridge this gap in existing literature, this paper introduces the Knowledge Base Management And Orchestration (KB-MANO) framework. Rooted in the concepts of Computing-Network Convergence (CNC) and lifelong learning, KB-MANO is crafted for the allocation of network and computing resources dedicated to updating and redistributing KBs across the system. The primary objective is to minimize the impact of knowledge management activities on actual service provisioning. A proof-of-concept is proposed to showcase the integration of KB-MANO with resource allocation in radio access networks. Finally, the paper offers insights into future research directions, emphasizing the transformative potential of semantic-oriented communication systems in the realm of 6G technology.

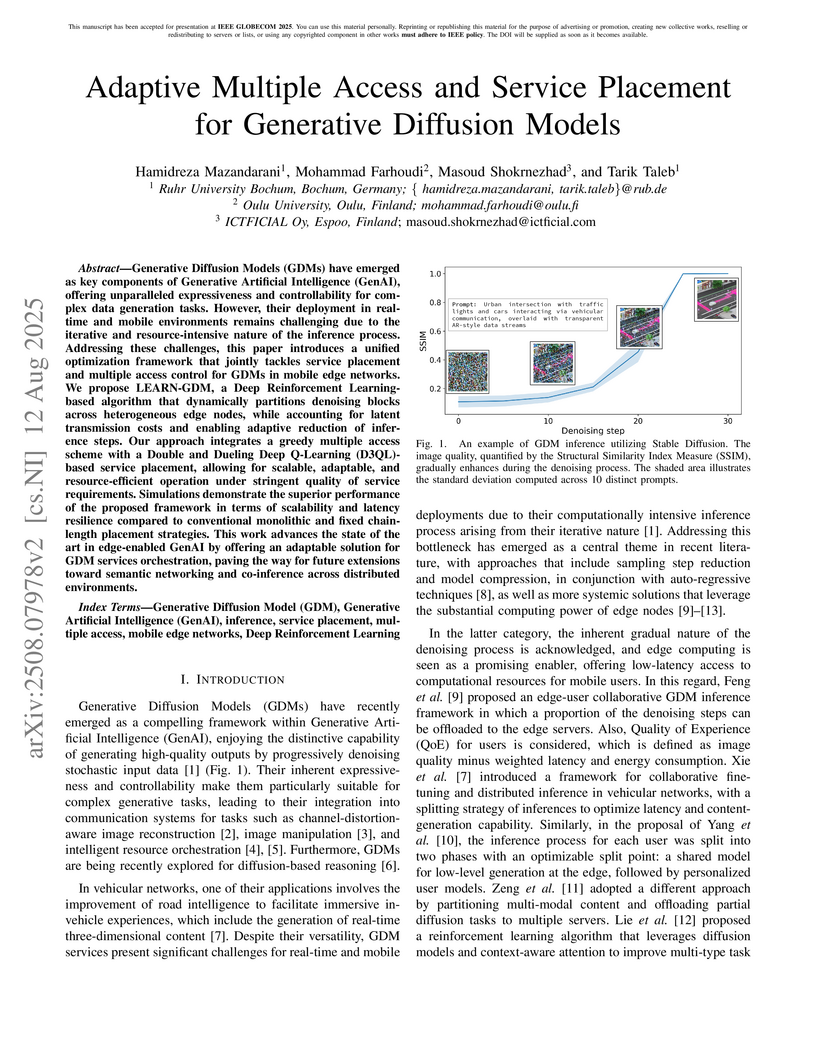

Generative Diffusion Models (GDMs) have emerged as key components of Generative Artificial Intelligence (GenAI), offering unparalleled expressiveness and controllability for complex data generation tasks. However, their deployment in real-time and mobile environments remains challenging due to the iterative and resource-intensive nature of the inference process. Addressing these challenges, this paper introduces a unified optimization framework that jointly tackles service placement and multiple access control for GDMs in mobile edge networks. We propose LEARN-GDM, a Deep Reinforcement Learning-based algorithm that dynamically partitions denoising blocks across heterogeneous edge nodes, while accounting for latent transmission costs and enabling adaptive reduction of inference steps. Our approach integrates a greedy multiple access scheme with a Double and Dueling Deep Q-Learning (D3QL)-based service placement, allowing for scalable, adaptable, and resource-efficient operation under stringent quality of service requirements. Simulations demonstrate the superior performance of the proposed framework in terms of scalability and latency resilience compared to conventional monolithic and fixed chain-length placement strategies. This work advances the state of the art in edge-enabled GenAI by offering an adaptable solution for GDM services orchestration, paving the way for future extensions toward semantic networking and co-inference across distributed environments.

18 Sep 2023

The Metaverse is a new paradigm that aims to create a virtual environment consisting of numerous worlds, each of which will offer a different set of services. To deal with such a dynamic and complex scenario, considering the stringent quality of service requirements aimed at the 6th generation of communication systems (6G), one potential approach is to adopt self-sustaining strategies, which can be realized by employing Adaptive Artificial Intelligence (Adaptive AI) where models are continually re-trained with new data and conditions. One aspect of self-sustainability is the management of multiple access to the frequency spectrum. Although several innovative methods have been proposed to address this challenge, mostly using Deep Reinforcement Learning (DRL), the problem of adapting agents to a non-stationary environment has not yet been precisely addressed. This paper fills in the gap in the current literature by investigating the problem of multiple access in multi-channel environments to maximize the throughput of the intelligent agent when the number of active User Equipments (UEs) may fluctuate over time. To solve the problem, a Double Deep Q-Learning (DDQL) technique empowered by Continual Learning (CL) is proposed to overcome the non-stationary situation, while the environment is unknown. Numerical simulations demonstrate that, compared to other well-known methods, the CL-DDQL algorithm achieves significantly higher throughputs with a considerably shorter convergence time in highly dynamic scenarios.
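The Double Deep Q-Learning idea named in this abstract can be illustrated with the standard double Q-learning target, in which the online network selects the next action and the target network evaluates it. The following is a minimal sketch of that generic target only; it does not reproduce the paper's continual-learning mechanism, and the function name, parameters, and Q-value lists are hypothetical, chosen for illustration.

```python
def double_dqn_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Standard double Q-learning target for a single transition.

    next_q_online / next_q_target are lists of Q-values for the next state,
    one entry per action (hypothetical inputs; in practice they would come
    from the online and target networks).
    """
    if done:
        # Terminal transition: no bootstrapping from the next state.
        return reward
    # The online network picks the greedy action...
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    # ...and the target network supplies the value of that action.
    return reward + gamma * next_q_target[best_action]


# Example: the online network prefers action 1, so the target network's
# value for action 1 (2.0) is used rather than its own maximum (3.0).
target = double_dqn_target(1.0, [0.2, 0.9, 0.5], [1.0, 2.0, 3.0], gamma=0.5)
```

Decoupling action selection from action evaluation in this way is what distinguishes the double variant from plain Q-learning, which would bootstrap from the target network's own maximum.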

The industrial Internet of Things (IIoT) involves the integration of Internet of Things (IoT) technologies into industrial settings. However, given the high sensitivity of the industry to the security of industrial control system networks and IIoT, the use of software-defined networking (SDN) technology can provide improved security and automation of communication processes. Despite this, the architecture of SDN can give rise to various security threats. Therefore, it is of paramount importance to consider the impact of these threats on SDN-based IIoT environments. Unlike previous research, which focused on security in IIoT and SDN architectures separately, we propose an integrated method comprising two components that work together to better detect and prevent security threats associated with SDN-based IIoT architectures. The two components are a convolutional neural network-based Intrusion Detection System (IDS) implemented as an SDN application and a Blockchain-based system (BS), which strengthen application layer and network layer security, respectively. A significant advantage of the proposed method lies in jointly minimizing the impact of attacks such as command injection and rule injection on SDN-based IIoT architecture layers. The proposed IDS exhibits superior classification accuracy in both binary and multiclass categories.

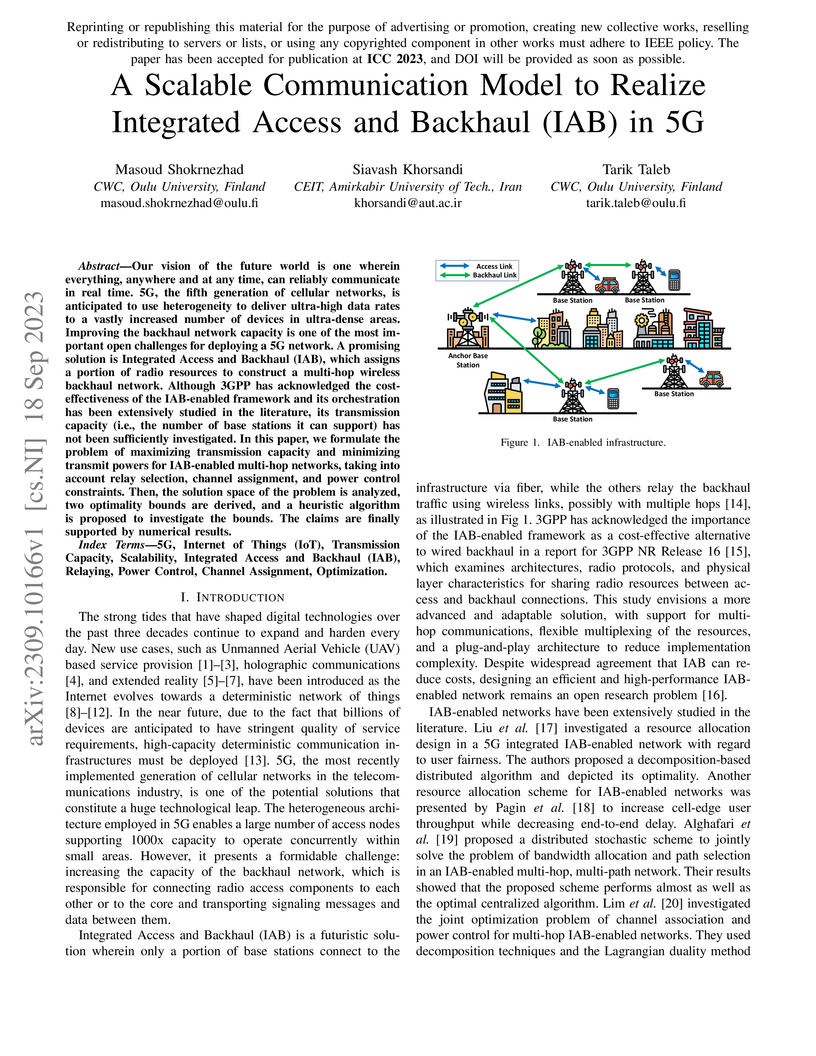

Our vision of the future world is one wherein everything, anywhere and at any time, can reliably communicate in real time. 5G, the fifth generation of cellular networks, is anticipated to use heterogeneity to deliver ultra-high data rates to a vastly increased number of devices in ultra-dense areas. Improving the backhaul network capacity is one of the most important open challenges for deploying a 5G network. A promising solution is Integrated Access and Backhaul (IAB), which assigns a portion of radio resources to construct a multi-hop wireless backhaul network. Although 3GPP has acknowledged the cost-effectiveness of the IAB-enabled framework and its orchestration has been extensively studied in the literature, its transmission capacity (i.e., the number of base stations it can support) has not been sufficiently investigated. In this paper, we formulate the problem of maximizing transmission capacity and minimizing transmit powers for IAB-enabled multi-hop networks, taking into account relay selection, channel assignment, and power control constraints. Then, the solution space of the problem is analyzed, two optimality bounds are derived, and a heuristic algorithm is proposed to investigate the bounds. The claims are finally supported by numerical results.

This research introduces a semantic-aware multiple access scheme for distributed 6G applications, integrating semantic information and multi-agent deep reinforcement learning to optimize wireless resource utilization and fairness. The proposed SAMA-D3QL algorithm achieves superior performance by intelligently leveraging data correlations and assisted throughput, demonstrating significant gains over semantic-oblivious methods.

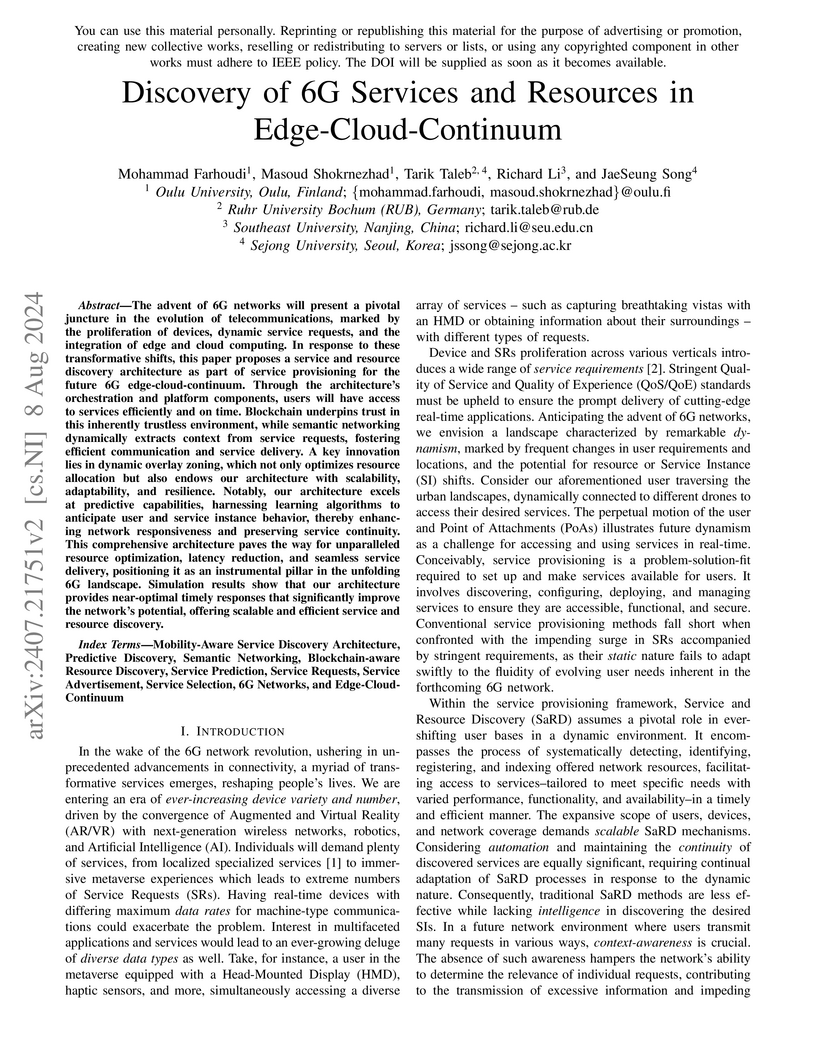

The advent of 6G networks will present a pivotal juncture in the evolution of telecommunications, marked by the proliferation of devices, dynamic service requests, and the integration of edge and cloud computing. In response to these transformative shifts, this paper proposes a service and resource discovery architecture as part of service provisioning for the future 6G edge-cloud-continuum. Through the architecture's orchestration and platform components, users will have access to services efficiently and on time. Blockchain underpins trust in this inherently trustless environment, while semantic networking dynamically extracts context from service requests, fostering efficient communication and service delivery. A key innovation lies in dynamic overlay zoning, which not only optimizes resource allocation but also endows our architecture with scalability, adaptability, and resilience. Notably, our architecture excels at predictive capabilities, harnessing learning algorithms to anticipate user and service instance behavior, thereby enhancing network responsiveness and preserving service continuity. This comprehensive architecture paves the way for unparalleled resource optimization, latency reduction, and seamless service delivery, positioning it as an instrumental pillar in the unfolding 6G landscape. Simulation results show that our architecture provides near-optimal timely responses that significantly improve the network's potential, offering scalable and efficient service and resource discovery.

16 Aug 2024

Industrial Internet of Things (IIoT) is highly sensitive to data privacy and cybersecurity threats. Federated Learning (FL) has emerged as a solution for preserving privacy, enabling private data to remain on local IIoT clients while cooperatively training models to detect network anomalies. However, both synchronous and asynchronous FL architectures exhibit limitations, particularly when dealing with clients with varying speeds due to data heterogeneity and resource constraints. Synchronous architecture suffers from straggler effects, while asynchronous methods encounter communication bottlenecks. Additionally, FL models are prone to adversarial inference attacks aimed at disclosing private training data. To address these challenges, we propose a Buffered FL (BFL) framework empowered by homomorphic encryption for anomaly detection in heterogeneous IIoT environments. BFL utilizes a novel weighted average time approach to mitigate both straggler effects and communication bottlenecks, ensuring fairness between clients with varying processing speeds through collaboration with a buffer-based server. The performance results, derived from two datasets, show the superiority of BFL compared to state-of-the-art FL methods, demonstrating improved accuracy and convergence speed while enhancing privacy preservation.

The effective distribution of user transmit powers is essential for the significant advancements that the emergence of 6G wireless networks brings. In recent studies, Deep Neural Networks (DNNs) have been employed to address this challenge. However, these methods frequently encounter issues regarding fairness and computational inefficiency when making decisions, rendering them unsuitable for future dynamic services that depend heavily on the participation of each individual user. To address this gap, this paper focuses on the challenge of transmit power allocation in wireless networks, aiming to optimize α-fairness to balance network utilization and user equity. We introduce a novel approach utilizing Kolmogorov-Arnold Networks (KANs), a class of machine learning models that offer low inference costs compared to traditional DNNs through superior explainability. The study provides a comprehensive problem formulation, establishing the NP-hardness of the power allocation problem. Then, two algorithms are proposed for dataset generation and decentralized KAN training, offering a flexible framework for achieving various fairness objectives in dynamic 6G environments. Extensive numerical simulations demonstrate the effectiveness of our approach in terms of fairness and inference cost. The results underscore the potential of KANs to overcome the limitations of existing DNN-based methods, particularly in scenarios that demand rapid adaptation and fairness.
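For readers unfamiliar with the α-fairness objective mentioned in this abstract, the sketch below evaluates the standard α-fair utility: the sum over users of x^(1-α)/(1-α) for α ≠ 1, and the sum of log x for α = 1. It assumes strictly positive per-user rates and is not the paper's formulation or training procedure; the function name and the example rate vectors are hypothetical.

```python
import math


def alpha_fair_utility(rates, alpha):
    """Sum of alpha-fair utilities over strictly positive per-user rates."""
    if alpha < 0:
        raise ValueError("alpha must be non-negative")
    if any(r <= 0 for r in rates):
        raise ValueError("rates must be strictly positive")
    if math.isclose(alpha, 1.0):
        # alpha = 1 is the proportional-fairness (sum-log) case.
        return sum(math.log(r) for r in rates)
    return sum(r ** (1.0 - alpha) / (1.0 - alpha) for r in rates)


# Two allocations with the same total rate: one skewed, one equal.
skewed, equal = [1.0, 9.0], [5.0, 5.0]

# alpha = 0 only sees the sum rate, so both allocations score the same.
sum_rate_skewed = alpha_fair_utility(skewed, 0.0)
sum_rate_equal = alpha_fair_utility(equal, 0.0)

# alpha = 1 and alpha = 2 both favor the equal allocation.
prefers_equal_pf = alpha_fair_utility(equal, 1.0) > alpha_fair_utility(skewed, 1.0)
prefers_equal_a2 = alpha_fair_utility(equal, 2.0) > alpha_fair_utility(skewed, 2.0)
```

Raising α shifts the objective from pure sum-rate maximization (α = 0) through proportional fairness (α = 1) toward max-min fairness as α grows, which is the trade-off between network utilization and user equity that the abstract refers to.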

Nowadays, while the demand for capacity continues to expand, the blossoming of the Internet of Everything is bringing a paradigm shift in how communication networks are perceived, ushering in a plethora of entirely new services. To provide these services, Virtual Network Functions (VNFs) must be established and reachable by end-users, which will generate and consume massive volumes of data that must be processed locally for service responsiveness and scalability. For this to be realized, a solid cloud-network integrated infrastructure is a necessity, and since the cloud and network domains are diverse in terms of characteristics but limited in terms of capability, communication and computing resources should be jointly controlled to unleash its full potential. Although several innovative methods have been proposed to allocate the resources, most of them either ignore network resources or relax the network to a simple graph, making them inapplicable to Beyond 5G because of its dynamism and stringent QoS requirements. This paper fills the gap by studying the joint problem of communication and computing resource allocation, dubbed CCRA, including VNF placement and assignment, traffic prioritization, and path selection considering capacity constraints as well as link and queuing delays, with the goal of minimizing overall cost. We formulate the problem as a non-linear programming model and propose two approaches, dubbed B&B-CCRA and WF-CCRA respectively, based on the Branch & Bound and Water-Filling algorithms. Numerical simulations show that B&B-CCRA can solve the problem optimally, whereas WF-CCRA can provide near-optimal solutions in significantly less time.
