Pomona College

No experimental test precludes the possibility that the dark matter experiences forces beyond general relativity -- in fact, a variety of cosmic microwave background observations suggest greater late-time structure than predicted in the standard Λ cold dark matter model. We show that minimal models of scalar-mediated forces between dark matter particles do not enhance the growth of unbiased tracers of structure: weak lensing observables depend on the total density perturbation, for which the enhanced growth of the density contrast in the matter era is cancelled by the more rapid dilution of the background dark matter density. Moreover, the same background-level effects imply that scenarios compatible with CMB temperature and polarization anisotropies in fact suppress structure growth, as fixing the distance to last scattering requires a substantially increased density of dark energy. Though massive mediators undo these effects upon oscillating, they suppress structure even further because their gravitational impact as nonclustering subcomponents of matter outweighs the enhanced clustering strength of dark matter. We support these findings with analytic insight that clarifies the physical impact of dark forces and explains how primary CMB measurements calibrate the model's predictions for low-redshift observables. We discuss implications for neutrino mass limits and other cosmological anomalies, and we also consider how nonminimal extensions of the model might be engineered to enhance structure.

23 Sep 2025

California Institute of Technology; University of Chicago; Pomona College; NASA Goddard Space Flight Center; Howard University; University of Maryland; The Pennsylvania State University; University of Colorado; Jet Propulsion Laboratory, California Institute of Technology; The Graduate Center of the City University of New York; University of Wyoming; U.S. National Science Foundation National Optical-Infrared Astronomy Research Laboratory; Anton Pannekoek Institute for Astronomy, University of Amsterdam; Steward Observatory, The University of Arizona; Center for Exoplanets and Habitable Worlds; Randolph-Macon College; Earth and Planets Laboratory, Carnegie Institution for Science; McDonald Observatory and Center for Planetary Systems Habitability, The University of Texas at Austin; Astrophysics & Space Institute, Schmidt Sciences

We report the confirmation and analysis of TOI-5349b, a transiting, warm, Saturn-like planet orbiting an early M-dwarf with a period of ∼3.3 days, which we confirmed as part of the Searching for GEMS (Giant Exoplanets around M-dwarf Stars) survey. TOI-5349b was initially identified in photometry from NASA's Transiting Exoplanet Survey Satellite (TESS) mission and subsequently confirmed using high-precision radial velocity (RV) measurements from the Habitable-zone Planet Finder (HPF) and MAROON-X spectrographs, together with ground-based transit observations obtained with the 0.6-m telescope at Red Buttes Observatory (RBO) and the 1.0-m telescope at the Table Mountain Facility of Pomona College. From a joint fit of the RV and photometric data, we determine the planet's mass and radius to be $0.40\pm0.02\,M_J$ ($127.4^{+5.9}_{-5.7}\,M_\oplus$) and $0.91\pm0.02\,R_J$ ($10.2\pm0.3\,R_\oplus$), respectively, resulting in a bulk density of $\rho_p = 0.66\pm0.06$ g cm$^{-3}$ (∼0.96 times the density of Saturn). We determine that the host star is a metal-rich M1-type dwarf with a mass and radius of $0.61\pm0.02\,M_\odot$ and $0.58\pm0.01\,R_\odot$, and an effective temperature of $T_{\rm eff} = 3751\pm59$ K. Our analysis highlights an emerging pattern, exemplified by TOI-5349, in which transiting GEMS often have Saturn-like masses and densities and orbit metal-rich stars.
With the growing sample of GEMS planets, comparative studies of short-period gas giants orbiting M-dwarfs and Sun-like stars are needed to investigate how metallicity and disk conditions shape the formation and properties of these planets.
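As a quick consistency check on the quoted bulk density, the sketch below recomputes $\rho_p$ from the central mass and radius. The Jupiter mass, the equatorial Jupiter radius (IAU nominal values), and Saturn's mean density are assumptions supplied here, not numbers from the abstract; which radius convention the authors used is also assumed.

```python
import math

# Assumed constants (IAU 2015 nominal Jupiter values; not given in the abstract).
M_JUP_G = 1.89813e30      # Jupiter mass in grams (GM_J / G)
R_JUP_EQ_CM = 7.1492e9    # Jupiter equatorial radius in cm
RHO_SATURN = 0.687        # Saturn mean density in g cm^-3 (approximate)


def bulk_density(mass_mj: float, radius_rj: float) -> float:
    """Mean density in g cm^-3 for a planet specified in Jupiter units."""
    mass_g = mass_mj * M_JUP_G
    radius_cm = radius_rj * R_JUP_EQ_CM
    volume_cm3 = 4.0 / 3.0 * math.pi * radius_cm ** 3
    return mass_g / volume_cm3


rho = bulk_density(0.40, 0.91)
print(f"rho_p = {rho:.2f} g/cm^3, {rho / RHO_SATURN:.2f} x Saturn")
```

With these constants the result is about 0.66 g cm$^{-3}$, or about 0.96 of Saturn's density, in line with the values quoted above. Propagating the ±0.02 uncertainties would require the full posterior and is not attempted here.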

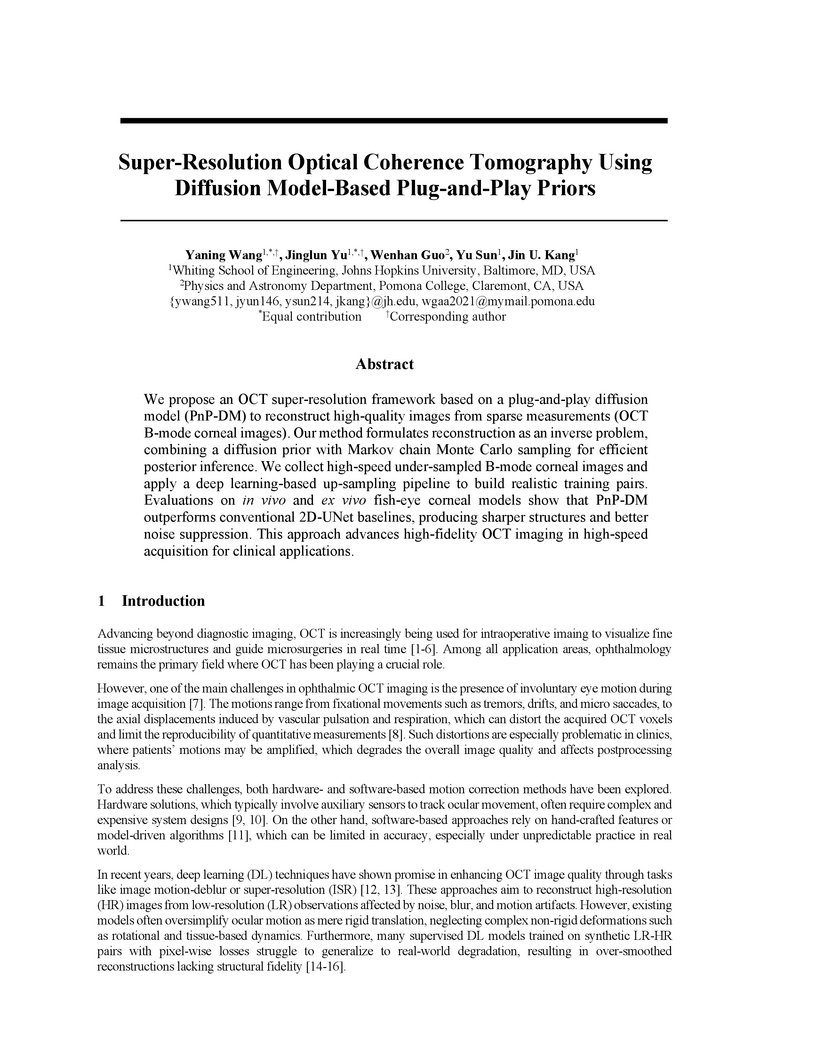

This paper introduces a Plug-and-Play (PnP) framework incorporating diffusion models to reconstruct high-quality optical coherence tomography (OCT) images from sparse, motion-corrupted measurements. The method consistently produces sharper anatomical boundaries and better noise suppression, outperforming traditional and deep learning baselines in quantitative metrics and visual fidelity.
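The abstract does not spell out the reconstruction loop. The following minimal Plug-and-Play sketch assumes a simple masked-sampling forward model ($y = M \odot x$) on a 1-D signal and uses a 3-tap moving average as a hypothetical stand-in for the diffusion-model denoiser. It shows only the basic PnP alternation (a gradient step on data fidelity, then a denoiser call). It is not the paper's method and ignores motion corruption.

```python
import numpy as np


def smooth_denoiser(x: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in denoiser: 3-tap moving average with edge padding.
    A PnP method would call a learned (e.g. diffusion-based) denoiser here."""
    padded = np.pad(x, 1, mode="edge")
    return (padded[:-2] + padded[1:-1] + padded[2:]) / 3.0


def pnp_reconstruct(y, mask, denoiser, n_iter=200, step=1.0):
    """Plug-and-Play proximal-gradient loop for the forward model y = mask * x.
    Each iteration takes a gradient step on 0.5 * ||mask * x - y||^2 and then
    applies the denoiser in place of a proximal operator."""
    x = y.copy()  # zero-filled initialisation
    for _ in range(n_iter):
        grad = mask * (mask * x - y)
        x = denoiser(x - step * grad)
    return x


rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 200)
x_true = np.sin(2 * np.pi * 2 * t)
mask = (rng.random(t.size) < 0.5).astype(float)  # keep roughly half the samples
y = mask * x_true

x_hat = pnp_reconstruct(y, mask, smooth_denoiser)
print("zero-filled error:", np.linalg.norm(y - x_true))
print("PnP error:        ", np.linalg.norm(x_hat - x_true))
```

Swapping the denoiser is the whole point of the PnP design: the data-fidelity step stays fixed, and any image prior that can be expressed as a denoiser can be dropped in.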

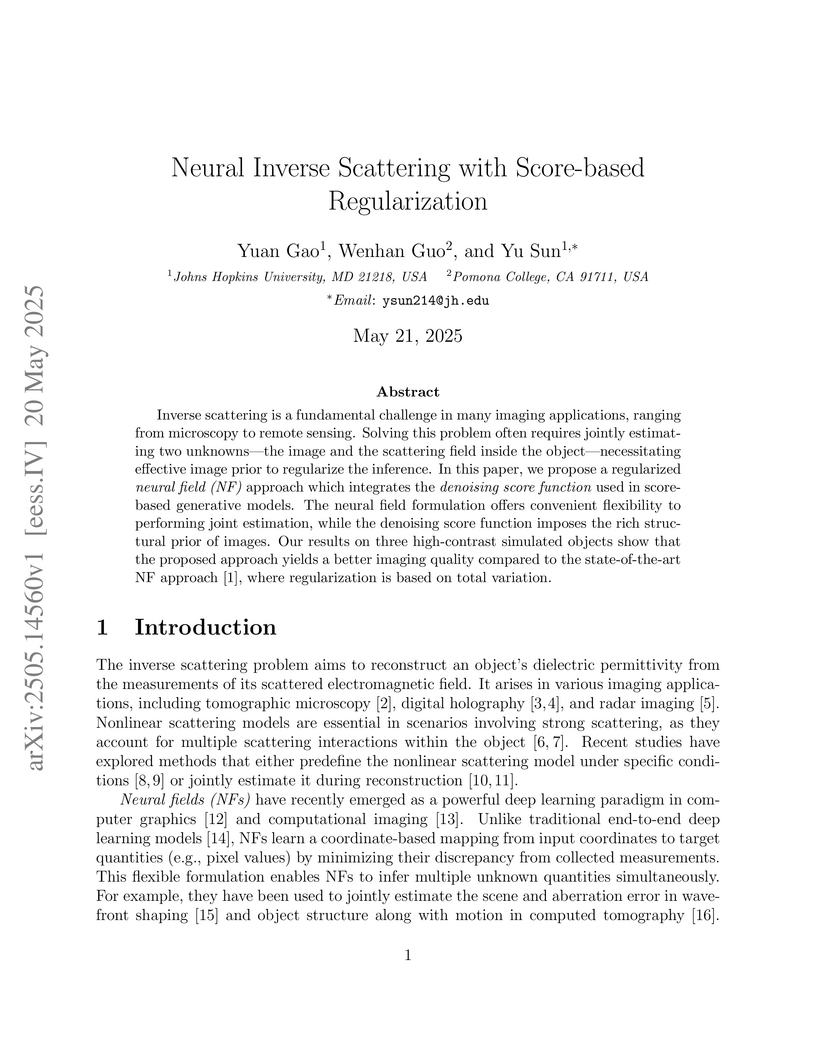

Inverse scattering is a fundamental challenge in many imaging applications, ranging from microscopy to remote sensing. Solving this problem often requires jointly estimating two unknowns (the image and the scattering field inside the object), necessitating an effective image prior to regularize the inference. In this paper, we propose a regularized neural field (NF) approach that integrates the denoising score function used in score-based generative models. The neural field formulation offers convenient flexibility for performing joint estimation, while the denoising score function imposes the rich structural prior of images. Our results on three high-contrast simulated objects show that the proposed approach yields better imaging quality than the state-of-the-art NF approach, whose regularization is based on total variation.
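A common way to obtain a score function from a denoiser is Tweedie's identity. Reading "denoising score function" in this sense is an assumption, since the abstract does not give the exact formula. Let $p_\sigma$ be the image density smoothed by Gaussian noise of standard deviation $\sigma$, and let $D_\sigma$ be the minimum-mean-squared-error denoiser at that noise level. Then:

```latex
\nabla_{\mathbf{x}} \log p_\sigma(\mathbf{x})
  \;=\; \frac{D_\sigma(\mathbf{x}) - \mathbf{x}}{\sigma^{2}}
```

With a learned denoiser in place of $D_\sigma$, the identity holds only approximately. In a regularized reconstruction, this score would serve as the prior term acting on the image estimate, alongside the gradient of the data-fidelity term.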

30 Jul 2024

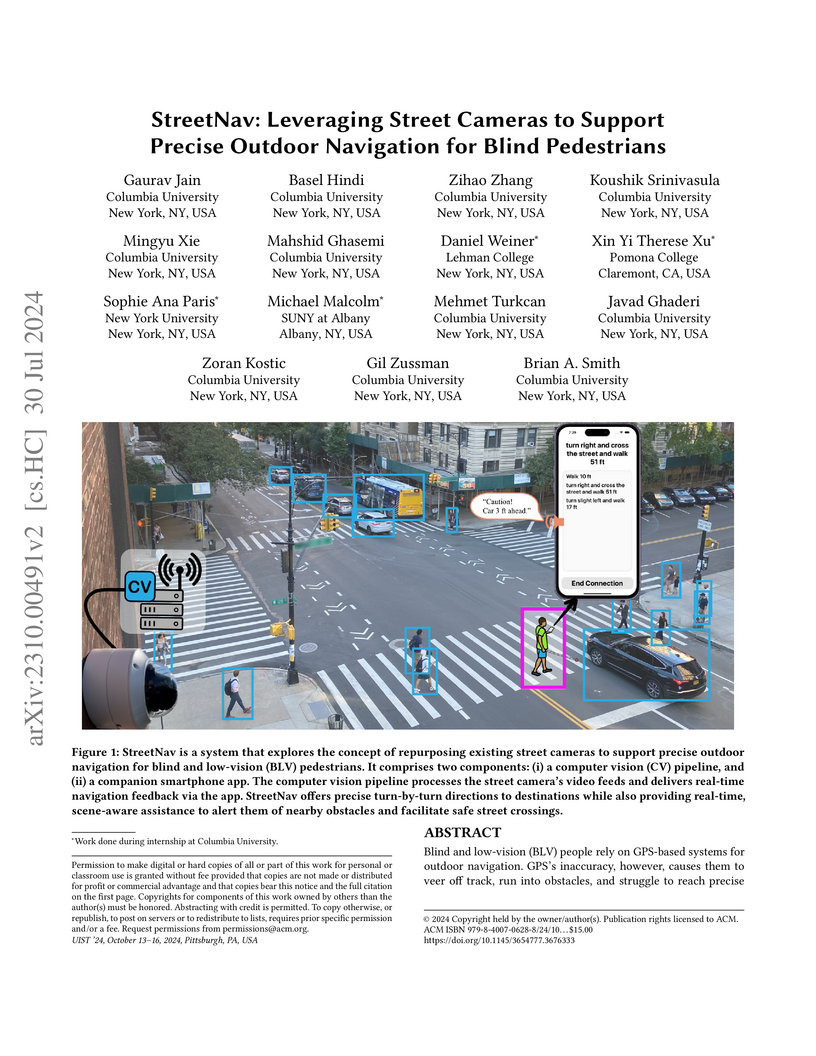

Blind and low-vision (BLV) people rely on GPS-based systems for outdoor navigation. GPS's inaccuracy, however, causes them to veer off track, run into obstacles, and struggle to reach precise destinations. While prior work has made precise navigation possible indoors via hardware installations, enabling this outdoors remains a challenge. Interestingly, many outdoor environments are already instrumented with hardware such as street cameras. In this work, we explore the idea of repurposing existing street cameras for outdoor navigation. Our community-driven approach considers both technical and sociotechnical concerns through engagements with various stakeholders: BLV users, residents, business owners, and Community Board leadership. The resulting system, StreetNav, processes a camera's video feed using computer vision and gives BLV pedestrians real-time navigation assistance. Our evaluations show that StreetNav guides users more precisely than GPS, but its technical performance is sensitive to environmental occlusions and distance from the camera. We discuss future implications for deploying such systems at scale.

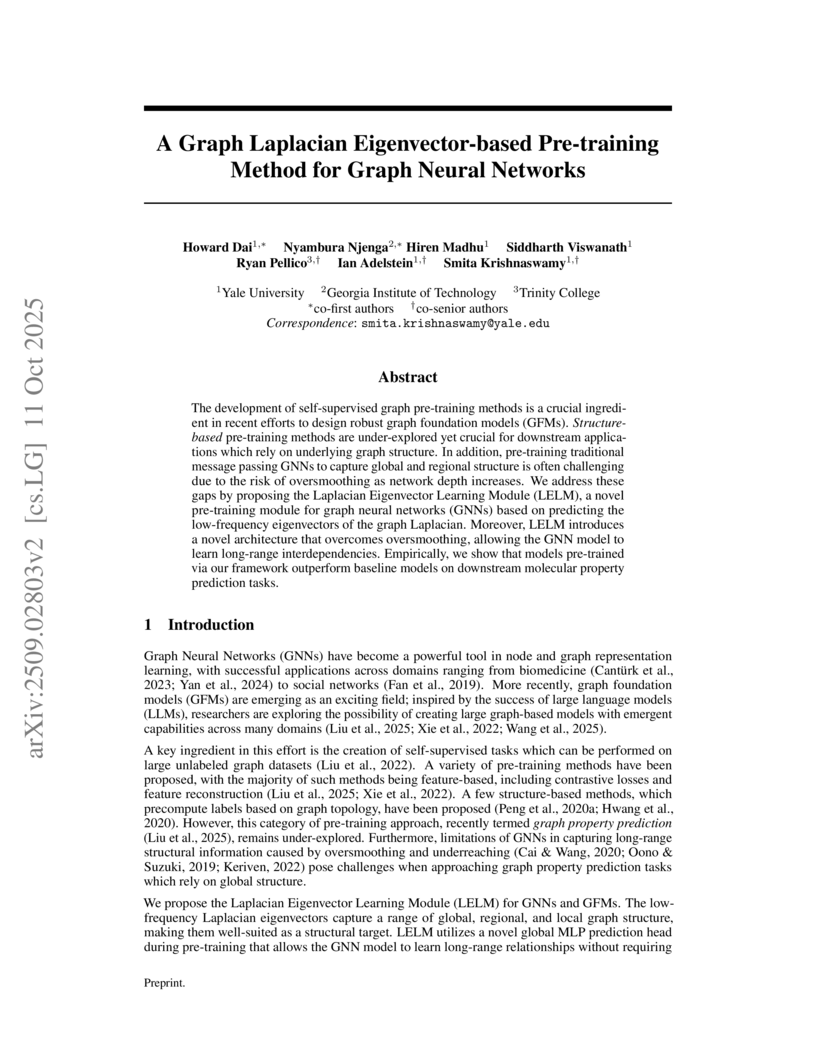

The development of self-supervised graph pre-training methods is a crucial ingredient in recent efforts to design robust graph foundation models (GFMs). Structure-based pre-training methods are under-explored yet crucial for downstream applications which rely on underlying graph structure. In addition, pre-training traditional message passing GNNs to capture global and regional structure is often challenging due to the risk of oversmoothing as network depth increases. We address these gaps by proposing the Laplacian Eigenvector Learning Module (LELM), a novel pre-training module for graph neural networks (GNNs) based on predicting the low-frequency eigenvectors of the graph Laplacian. Moreover, LELM introduces a novel architecture that overcomes oversmoothing, allowing the GNN model to learn long-range interdependencies. Empirically, we show that models pre-trained via our framework outperform baseline models on downstream molecular property prediction tasks.
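To make "low-frequency eigenvectors of the graph Laplacian" concrete, the sketch below computes them for a toy graph with NumPy, using the combinatorial Laplacian $L = D - A$. It shows only the kind of target a pre-training module could predict. LELM's architecture, choice of Laplacian normalization, and loss are not shown and are not assumed here. Note that eigenvectors are defined only up to sign (and up to rotation within repeated eigenvalues), so any loss on them has to handle that ambiguity.

```python
import numpy as np


def low_frequency_eigenvectors(adj: np.ndarray, k: int) -> tuple[np.ndarray, np.ndarray]:
    """Return the k smallest eigenvalues and matching eigenvectors of L = D - A.

    Columns of the returned matrix are the 'low-frequency' modes of the graph.
    """
    degree = np.diag(adj.sum(axis=1))
    laplacian = degree - adj
    eigvals, eigvecs = np.linalg.eigh(laplacian)  # ascending order for symmetric input
    return eigvals[:k], eigvecs[:, :k]


# A 6-node path graph 0-1-2-3-4-5 as a toy "molecule".
n = 6
adj = np.zeros((n, n))
for i in range(n - 1):
    adj[i, i + 1] = adj[i + 1, i] = 1.0

vals, vecs = low_frequency_eigenvectors(adj, k=3)
print(np.round(vals, 3))
```

For a connected graph, the first eigenvalue is zero and its eigenvector is constant. The next few vary slowly across the graph, which is why they encode global and regional structure that a shallow message-passing network struggles to see.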

Drawing motivation from the manifold hypothesis, which posits that most high-dimensional data lies on or near low-dimensional manifolds, we apply manifold learning to the space of neural networks. We learn manifolds where datapoints are neural networks by introducing a distance between the hidden layer representations of the neural networks. These distances are then fed to the non-linear dimensionality reduction algorithm PHATE to create a manifold of neural networks. We characterize this manifold using features of the representation, including class separation, hierarchical cluster structure, spectral entropy, and topological structure. Our analysis reveals that high-performing networks cluster together in the manifold, displaying consistent embedding patterns across all these features. Finally, we demonstrate the utility of this approach for guiding hyperparameter optimization and neural architecture search by sampling from the manifold.
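The abstract does not define its distance between hidden-layer representations. The sketch below uses one hypothetical choice: each network is evaluated on the same probe inputs, and its scale-normalized pairwise-distance matrix is compared. This choice is invariant to rotations, shifts, and rescaling of the hidden space, and it works across layers of different width. The resulting network-by-network matrix is the kind of input an embedding method such as PHATE would take. PHATE itself is not called here.

```python
import numpy as np


def representation_geometry(h: np.ndarray) -> np.ndarray:
    """Pairwise distances between probe inputs in one network's hidden layer,
    divided by their mean so that overall scale does not matter.
    h has shape (n_probe_inputs, hidden_width)."""
    diff = h[:, None, :] - h[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    return dist / dist.mean()


def network_distance(h_a: np.ndarray, h_b: np.ndarray) -> float:
    """Hypothetical network-to-network distance: compare representation
    geometries on the same probe inputs (hidden widths may differ)."""
    return float(np.linalg.norm(representation_geometry(h_a) - representation_geometry(h_b)))


def distance_matrix(reps: list) -> np.ndarray:
    """Symmetric network-by-network distance matrix with a zero diagonal."""
    m = len(reps)
    out = np.zeros((m, m))
    for i in range(m):
        for j in range(i + 1, m):
            out[i, j] = out[j, i] = network_distance(reps[i], reps[j])
    return out


rng = np.random.default_rng(0)
h = rng.normal(size=(20, 8))                    # network A on 20 probe inputs
q, _ = np.linalg.qr(rng.normal(size=(8, 8)))    # random orthogonal matrix
h_rotated = 3.0 * h @ q + 1.0                   # same geometry: rotated, scaled, shifted
h_other = rng.normal(size=(20, 16))             # unrelated network with a wider layer
D = distance_matrix([h, h_rotated, h_other])
print(np.round(D, 3))
```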

18 Jun 2025

California Institute of Technology; University of Southern California; University of California, Irvine; Pomona College; The University of Texas at Austin; Yale University; University of California, Merced; Carnegie Observatories; California State University, San Bernardino; California State Polytechnic University, Pomona

Applying a realistic surface brightness detection limit of μᵥ ≈ 32.5 mag arcsec⁻² to ultra-faint galaxies in FIRE-2 simulations shows that their observed sizes are significantly reduced, bringing them into better agreement with observations, but also leads to a systematic overestimation of their enclosed dark matter mass within the observed half-light radius.

10 Jun 2025

This study uses FIRE-2 cosmological simulations to demonstrate that the elongated, "pickle-shaped" galaxies observed at high redshifts are transient evolutionary stages for Milky Way-mass galaxy progenitors. Stellar populations formed in these early elongated configurations subsequently relax and evolve into more axisymmetric disk-like or spheroidal structures by the present day.
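The abstract does not say how "elongated" is quantified. A common convention, assumed here, uses axis ratios $b/a$ and $c/a$ from the eigenvalues of the mass-weighted second-moment tensor of star particles. In this convention a "pickle" has $c/a \approx b/a \ll 1$, and a disk has $b/a \approx 1$ with small $c/a$. The toy point clouds below are synthetic and hypothetical.

```python
from typing import Optional

import numpy as np


def axis_ratios(positions: np.ndarray, masses: Optional[np.ndarray] = None) -> tuple[float, float]:
    """Return (b/a, c/a) from the mass-weighted second-moment tensor.

    positions: (N, 3) array; masses: (N,) array (equal masses if omitted).
    a >= b >= c are the square roots of the tensor's eigenvalues.
    """
    if masses is None:
        masses = np.ones(len(positions))
    centre = np.average(positions, axis=0, weights=masses)
    r = positions - centre
    # S_ij = sum_k m_k r_ki r_kj / sum_k m_k
    tensor = np.einsum("k,ki,kj->ij", masses, r, r) / masses.sum()
    eigvals = np.sort(np.linalg.eigvalsh(tensor))[::-1]
    a, b, c = np.sqrt(eigvals)
    return float(b / a), float(c / a)


rng = np.random.default_rng(1)
elongated = rng.normal(scale=[5.0, 1.5, 1.0], size=(20000, 3))  # toy "pickle"
sphere = rng.normal(scale=1.0, size=(20000, 3))                 # toy spheroid
print(axis_ratios(elongated))  # b/a near 0.3, c/a near 0.2
print(axis_ratios(sphere))     # both ratios close to 1
```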

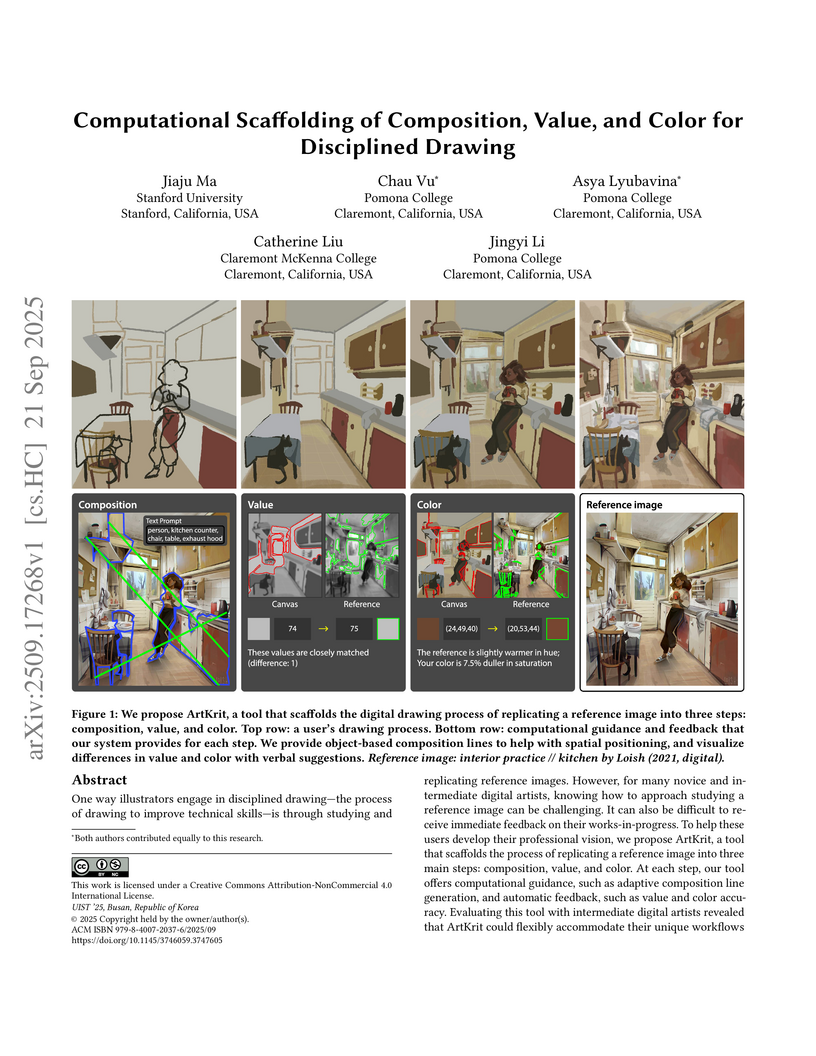

One way illustrators engage in disciplined drawing (the process of drawing to improve technical skills) is by studying and replicating reference images. However, for many novice and intermediate digital artists, knowing how to approach studying a reference image can be challenging, and it can also be difficult to receive immediate feedback on their works-in-progress. To help these users develop their professional vision, we propose ArtKrit, a tool that scaffolds the process of replicating a reference image into three main steps: composition, value, and color. At each step, our tool offers computational guidance, such as adaptive composition line generation, and automatic feedback, such as value and color accuracy. Evaluating this tool with intermediate digital artists revealed that ArtKrit could flexibly accommodate their unique workflows. Our code and supplemental materials are available at this https URL.

28 Feb 2022

California Institute of Technology; University of Zurich; Tel Aviv University; University of California, Irvine; Pomona College; The University of Texas at Austin; Northwestern University; University of Pennsylvania; University of California, Davis; University of California, Merced; Princeton University; Carnegie Observatories; University of Connecticut; Flatiron Institute; University of California Berkeley; California State Polytechnic University, Pomona; University of California at San Diego

Increasingly, uncertainties in predictions from galaxy formation simulations (at sub-Milky Way masses) are dominated by uncertainties in stellar evolution inputs. In this paper, we present the full set of updates from the FIRE-2 version of the Feedback In Realistic Environments (FIRE) project code to the next version, FIRE-3. While the transition from FIRE-1 to FIRE-2 focused on improving numerical methods, here we update the stellar evolution tracks used to determine stellar feedback inputs, e.g. stellar mass-loss (O/B and AGB), spectra (luminosities and ionization rates), and supernova rates (core-collapse and Ia), as well as detailed mass-dependent yields. We also update the low-temperature cooling and chemistry, to enable improved accuracy at T ≲ 10⁴ K and densities n ≫ 1 cm⁻³, and the meta-galactic ionizing background. All of these updates synthesize newer empirical constraints on these quantities and updated stellar evolution and yield models from a number of groups, addressing different aspects of stellar evolution. To make the updated models as accessible as possible, we provide fitting functions for all of the relevant updated tracks, yields, etc., in a form specifically designed so they can be directly 'plugged in' to existing galaxy formation simulations. We also summarize the default FIRE-3 implementations of 'optional' physics, including spectrally-resolved cosmic rays and supermassive black hole growth and feedback.

The basic notions of statistical mechanics (microstates, multiplicities) are quite simple, but understanding how the second law arises from these ideas requires working with cumbersomely large numbers. To avoid getting bogged down in mathematics, one can compute multiplicities numerically for a simple model system such as an Einstein solid, a collection of identical quantum harmonic oscillators. A computer spreadsheet program or comparable software can compute the required combinatoric functions for systems containing a few hundred oscillators and units of energy. When two such systems can exchange energy, one immediately sees that some configurations are overwhelmingly more probable than others. Graphs of entropy vs. energy for the two systems can be used to motivate the theoretical definition of temperature, T = (∂S/∂U)⁻¹, thus bridging the gap between the classical and statistical approaches to entropy. Further spreadsheet exercises can be used to compute the heat capacity of an Einstein solid, study the Boltzmann distribution, and explore the properties of a two-state paramagnetic system.
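The same calculation can be done in a few lines of Python instead of a spreadsheet. This is a sketch, and the system sizes (300 and 200 oscillators sharing 100 energy units) are chosen only for illustration. It uses the standard Einstein-solid multiplicity $\Omega(N, q) = \binom{q+N-1}{q}$, tabulates every way two solids can share $q_{\rm total}$ units, and estimates $kT/\epsilon$ from a centred finite difference of $S/k = \ln\Omega$.

```python
from math import comb, log


def multiplicity(n_osc: int, q: int) -> int:
    """Number of microstates of an Einstein solid: C(q + N - 1, q)."""
    return comb(q + n_osc - 1, q)


def exchange_table(n_a: int, n_b: int, q_total: int) -> list[tuple[int, int]]:
    """(q_A, Omega_total) for every way to split q_total units between solids A and B."""
    return [(q_a, multiplicity(n_a, q_a) * multiplicity(n_b, q_total - q_a))
            for q_a in range(q_total + 1)]


def temperature(n_osc: int, q: int) -> float:
    """kT/epsilon from a centred finite difference of S/k = ln(Omega) in q (needs q >= 1)."""
    ds_dq = (log(multiplicity(n_osc, q + 1)) - log(multiplicity(n_osc, q - 1))) / 2
    return 1.0 / ds_dq


table = exchange_table(300, 200, 100)
q_best, omega_best = max(table, key=lambda row: row[1])
total = sum(omega for _, omega in table)
print(f"most probable q_A = {q_best}, probability {omega_best / total:.3f}")
print(f"kT/eps: A = {temperature(300, q_best):.3f}, B = {temperature(200, 100 - q_best):.3f}")
```

The most probable split puts 60 units in solid A, the same fraction per oscillator as in B, and at that split the two finite-difference temperatures nearly agree. This is the link between maximum multiplicity and equal $\partial S/\partial U$ that the exercise is meant to reveal.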

01 Jul 2010

IC 2118, also known as the Witch Head Nebula, is a wispy, roughly cometary, ~5-degree-long reflection nebula, and is thought to be a site of triggered star formation. In order to search for new young stellar objects (YSOs), we have observed this region in 7 mid- and far-infrared bands using the Spitzer Space Telescope and in 4 bands in the optical using the U.S. Naval Observatory 40-inch telescope. We find infrared excesses in 4 of the 6 previously-known T Tauri stars in our combined infrared maps, and we find 6 entirely new candidate YSOs, one of which may be an edge-on disk. Most of the YSOs seen in the infrared are Class II objects, and they are all in the "head" of the nebula, within the most massive molecular cloud of the region.

06 Jan 2024

Privacy labels, standardized and compact representations of data collection and data use practices, are often presented as a solution to the shortcomings of privacy policies. Apple introduced mandatory privacy labels for apps in its App Store in December 2020; Google introduced mandatory labels for Android apps in July 2022. iOS app privacy labels have been evaluated and critiqued in prior work. In this work, we evaluated Android Data Safety Labels and explored how differences between the two label designs impact user comprehension and label utility. We conducted a between-subjects, semi-structured interview study with 12 Android users and 12 iOS users. While some users found Android Data Safety Labels informative and helpful, other users found them too vague. Compared to iOS App Privacy Labels, Android users found the distinction between data collection groups more intuitive and found explicit inclusion of omitted data collection groups more salient. However, some users expressed skepticism regarding elided information about collected data type categories. Most users missed critical information because they did not expand the accordion interface, and they were surprised by collection practices excluded from Android's definitions. Our findings also revealed that Android users generally appreciated information about security practices included in the labels, and iOS users wanted that information added.


30 Sep 2025

New high-precision microwave spectroscopic measurements and analysis of rotational energy level transitions in the ground vibronic state of the open-shell BaF molecule are reported with the purpose of contributing to studies of physics beyond the Standard Model. BaF is currently among the key candidate molecules being examined in the searches for a measurable electron electric dipole moment, eEDM, as well as the nuclear anapole moment. Employing Fourier-transform microwave spectroscopy, these new pure rotational transition frequencies for the ¹³⁸Ba¹⁹F, ¹³⁷Ba¹⁹F, ¹³⁶Ba¹⁹F, ¹³⁵Ba¹⁹F, and ¹³⁴Ba¹⁹F isotopologues are analyzed here in a combined global fit with previous microwave data sets for ¹³⁸Ba¹⁹F (v = 0–4), ¹³⁷Ba¹⁹F, and ¹³⁶Ba¹⁹F using the program SPFIT. As a result, hyperfine parameters are significantly improved, and we observe a distinctive structure in a Born-Oppenheimer breakdown (BOB) analysis of the primary rotational constant. This can be understood using the nuclear field shifts due to the known isotopic variation in the size of barium nuclei and in combination with the smaller linear mass-dependent BOB terms.
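Within the Born-Oppenheimer approximation, all isotopologues share the same equilibrium bond length. To leading order, the rotational constant therefore scales as $B \propto 1/\mu$, where $\mu$ is the reduced mass. A BOB analysis looks at the small residuals from this baseline, such as the field-shift and mass-dependent terms described above. The sketch computes only the baseline ratios. The atomic masses are approximate tabulated values supplied here for illustration, not taken from the paper, and electron-mass and field-shift corrections are deliberately left out.

```python
# Approximate atomic masses in unified atomic mass units (u); standard tabulated
# values quoted for illustration, not taken from the paper.
ATOMIC_MASS_U = {
    "134Ba": 133.904508,
    "135Ba": 134.905689,
    "136Ba": 135.904576,
    "137Ba": 136.905827,
    "138Ba": 137.905247,
    "19F": 18.998403,
}


def reduced_mass(m1: float, m2: float) -> float:
    """Two-body reduced mass, in the same units as the inputs."""
    return m1 * m2 / (m1 + m2)


def rotational_constant_ratio(ba_isotope: str, reference: str = "138Ba") -> float:
    """Leading-order B(isotopologue) / B(reference) = mu_ref / mu.

    Follows from B ~ 1 / (mu * r_e^2) with a common equilibrium bond length r_e;
    Born-Oppenheimer breakdown terms are not included.
    """
    m_f = ATOMIC_MASS_U["19F"]
    mu = reduced_mass(ATOMIC_MASS_U[ba_isotope], m_f)
    mu_ref = reduced_mass(ATOMIC_MASS_U[reference], m_f)
    return mu_ref / mu


for iso in ("134Ba", "135Ba", "136Ba", "137Ba", "138Ba"):
    print(f"{iso}19F: B / B(138Ba19F) = {rotational_constant_ratio(iso):.6f}")
```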

05 Mar 2025

The detection of over a hundred gravitational-wave signals from double compact objects has confirmed the existence of such binaries with tight orbits. Two main formation channels are generally considered to explain the formation of these merging binary black holes (BBHs): the isolated evolution of stellar binaries, and dynamical assembly in dense environments, namely star clusters. Although their relative contributions remain unclear, several analyses indicate that the detected BBH mergers probably originate from a mixture of these two distinct scenarios. We study the formation of massive star clusters across cosmic time and at a cosmological scale to estimate the contribution of these dense stellar structures to the overall population of BBH mergers. To this end, we propose three different models of massive star cluster formation based on results obtained with zoom-in simulations of individual galaxies. We apply these models to a large sample of realistic galaxies identified in the (22.1 Mpc)³ cosmological volume simulation FIREbox. Each galaxy in this simulation has a unique star formation rate, with its own history of halo mergers and metallicity evolution. By combining these models with predictions from the Cluster Monte Carlo code for stellar dynamics, we estimate the populations of dynamically formed BBHs in a collection of realistic galaxies. Across our three models, we infer a local merger rate of BBHs formed in massive star clusters consistently in the range $1$–$10\ \mathrm{Gpc^{-3}\,yr^{-1}}$. Compared with the local BBH merger rate inferred by the LIGO-Virgo-KAGRA Collaboration (in the range $17.9$–$44\ \mathrm{Gpc^{-3}\,yr^{-1}}$ at $z = 0.2$), this could potentially represent up to half of all BBH mergers in the nearby Universe. This shows the importance of this formation channel in the astrophysical production of merging BBHs.
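The "up to half" statement follows from dividing the two rate ranges. The arithmetic sketch below does that naively. It ignores that the cluster rate is local while the LIGO-Virgo-KAGRA range is quoted at $z = 0.2$, so the result is only a rough bracket.

```python
CLUSTER_RATE = (1.0, 10.0)   # Gpc^-3 yr^-1, dynamically formed BBHs (local, this work)
LVK_RATE = (17.9, 44.0)      # Gpc^-3 yr^-1, LIGO-Virgo-KAGRA inference at z = 0.2

lowest = CLUSTER_RATE[0] / LVK_RATE[1]    # smallest cluster rate over largest total rate
highest = CLUSTER_RATE[1] / LVK_RATE[0]   # largest cluster rate over smallest total rate
print(f"cluster fraction: {lowest:.1%} to {highest:.1%}")  # prints "2.3% to 55.9%"
```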

In this paper, error estimates of classification Random Forests are quantitatively assessed. Building on the initial theoretical framework of Bates et al. (2023), the true error rate and the expected error rate are investigated theoretically and empirically in the context of a variety of error estimation methods common to Random Forests. We show that, in the classification case, Random Forests' estimates of prediction error are on average closer to the true error rate than to the average prediction error. This is the opposite of the findings of Bates et al. (2023), which are given for logistic regression. We further show that our result holds across different error estimation strategies such as cross-validation, bagging, and data splitting.

Nonlinear dimensionality reduction (NLDR) algorithms such as Isomap, LLE and Laplacian Eigenmaps address the problem of representing high-dimensional nonlinear data in terms of low-dimensional coordinates which represent the intrinsic structure of the data. This paradigm incorporates the assumption that real-valued coordinates provide a rich enough class of functions to represent the data faithfully and efficiently. On the other hand, there are simple structures which challenge this assumption: the circle, for example, is one-dimensional but its faithful representation requires two real coordinates. In this work, we present a strategy for constructing circle-valued functions on a statistical data set. We develop a machinery of persistent cohomology to identify candidates for significant circle-structures in the data, and we use harmonic smoothing and integration to obtain the circle-valued coordinate functions themselves. We suggest that this enriched class of coordinate functions permits a precise NLDR analysis of a broader range of realistic data sets.
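The circle example can be made concrete. For noisy points around a circle, a single circle-valued coordinate (an angle in $[0, 2\pi)$) represents the data faithfully, provided distances are measured around the circle rather than along the real line. The sketch below cheats by assuming the centre is known and using `atan2`. The persistent-cohomology and harmonic-smoothing method described above exists precisely to find such coordinates without that knowledge, and it is not implemented here.

```python
import math
import random

TWO_PI = 2.0 * math.pi


def circle_coordinate(x: float, y: float, cx: float = 0.0, cy: float = 0.0) -> float:
    """Angle of (x, y) about an assumed centre (cx, cy), as a value in [0, 2*pi)."""
    return math.atan2(y - cy, x - cx) % TWO_PI


def circular_distance(t1: float, t2: float) -> float:
    """Distance between two circle-valued coordinates, measured the short way round."""
    d = abs(t1 - t2) % TWO_PI
    return min(d, TWO_PI - d)


random.seed(0)
points = []
for _ in range(200):
    theta = random.uniform(0.0, TWO_PI)
    r = 1.0 + random.gauss(0.0, 0.05)  # noisy unit circle in the plane
    points.append((r * math.cos(theta), r * math.sin(theta)))

coords = [circle_coordinate(x, y) for x, y in points]  # one circle-valued number per point
# Points just either side of the 0 / 2*pi seam are close on the circle:
print(circular_distance(0.01, TWO_PI - 0.01))
```

A real-valued coordinate would place the angles 0.01 and $2\pi - 0.01$ about 6.26 apart. The circular distance correctly reports 0.02, which is the sense in which a circle-valued function avoids the tear that any single real coordinate must introduce.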

Machine learning-assisted directed evolution (MLDE) is a powerful tool for efficiently navigating antibody fitness landscapes. Many structure-aware MLDE pipelines rely on a single conformation or a single committee across all conformations, limiting their ability to separate conformational uncertainty from epistemic uncertainty. Here, we introduce a rank-conditioned committee (RCC) framework that leverages ranked conformations to assign a deep neural network committee per rank. This design enables a principled separation between epistemic uncertainty and conformational uncertainty. We validate our approach on SARS-CoV-2 antibody docking, demonstrating significant improvements over baseline strategies. Our results offer a scalable route for therapeutic antibody discovery while directly addressing the challenge of modeling conformational uncertainty.
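The abstract does not give the RCC formulas. One natural way to separate the two uncertainties, assumed here for illustration, is the law of total variance over a predictions array with one row per conformation rank and one column per committee member. Under this decomposition, within-committee disagreement (averaged over ranks) plays the role of epistemic uncertainty, and the spread of committee means across ranks plays the role of conformational uncertainty. The data below are synthetic.

```python
import numpy as np


def decompose_uncertainty(preds: np.ndarray) -> dict[str, float]:
    """Law-of-total-variance split for one candidate's predictions.

    preds has shape (n_ranks, n_members): row r holds the predictions of the
    committee assigned to conformation rank r (equal committee sizes assumed).
    """
    per_rank_mean = preds.mean(axis=1)
    epistemic = float(preds.var(axis=1).mean())   # disagreement within each committee
    conformational = float(per_rank_mean.var())   # spread of committee means across ranks
    total = float(preds.var())                    # variance over all rank/member pairs
    return {"epistemic": epistemic, "conformational": conformational, "total": total}


rng = np.random.default_rng(0)
rank_offsets = np.array([0.0, 0.5, 1.5])[:, None]              # synthetic rank-dependent shifts
preds = rank_offsets + rng.normal(scale=0.2, size=(3, 5))      # 3 ranks x 5 committee members
parts = decompose_uncertainty(preds)
print(parts)
```

With population variances (`ddof=0`) and equal committee sizes, the two parts sum exactly to the total variance, so neither source of uncertainty is double-counted.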
