ensemble-methods

Inspired by the success of language models (LMs), scaling up deep learning recommendation systems (DLRS) has become a recent trend in the community. Previous methods tend to scale up the model parameters at training time. However, how to efficiently utilize and scale up computational resources at test time remains underexplored, even though test-time scaling has proven to be a scaling-efficient approach that brings orthogonal improvements in the LM domain. The key point in applying test-time scaling to DLRS lies in effectively generating diverse yet meaningful outputs for the same instance. We propose two ways: one is to exploit the heterogeneity of different model architectures; the other is to utilize the randomness of model initialization under a homogeneous architecture. The evaluation is conducted across eight models, including both classic and SOTA models, on three benchmarks. The results support the effectiveness of both solutions. We further show that, under the same inference budget, test-time scaling can outperform parameter scaling. Our test-time scaling can also be seamlessly accelerated by adding parallel servers when deployed online, without affecting the inference time on the user side. Code is available.
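
The second strategy (same architecture, different random initializations) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `make_model` is a hypothetical stand-in for a trained recommender, the weights and noise level are invented, and simple probability averaging is used as the aggregation rule, which the abstract does not specify.

```python
import math
import random
from statistics import mean


def make_model(seed, base_weights=(0.8, -0.5, 0.3, 0.1), noise=0.2):
    """Hypothetical stand-in for one trained recommender.

    All runs share the same architecture (a logistic scorer); the seed only
    perturbs the weights, mimicking different random initializations.
    """
    rng = random.Random(seed)
    weights = [w + rng.gauss(0.0, noise) for w in base_weights]

    def predict(features):
        z = sum(w * x for w, x in zip(weights, features))
        return 1.0 / (1.0 + math.exp(-z))  # predicted click probability

    return predict


def ensemble_predict(models, features):
    """Average the click probabilities of all models for one instance."""
    return mean(model(features) for model in models)


# Eight seeds of the same architecture, scored on one instance.
models = [make_model(seed) for seed in range(8)]
instance = [1.0, 0.5, -1.0, 2.0]
print("single model  :", round(models[0](instance), 4))
print("8-seed average:", round(ensemble_predict(models, instance), 4))
```

Because each model scores the instance independently, the eight calls could run on separate servers in parallel, which is the deployment property the abstract points to.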

09 Dec 2025

The MVP framework introduces a training-free, two-stage approach that significantly improves the reliability and accuracy of GUI grounding models by addressing coordinate prediction instability. It achieves this by aggregating predictions from multiple attention-guided, enlarged views, leading to new state-of-the-art performance on challenging benchmarks like ScreenSpot-Pro and UI-Vision.

Stellar and AGN-driven feedback processes affect the distribution of gas on a wide range of scales, from within galaxies well into the intergalactic medium. Yet, it remains unclear how feedback, through its connection to key galaxy properties, shapes the radial gas density profile in the host halo. We tackle this question using suites of the EAGLE, IllustrisTNG, and Simba cosmological hydrodynamical simulations, which span a variety of feedback models. We develop a random forest algorithm that predicts the radial gas density profile within haloes from the total halo mass and five global properties of the central galaxy: gas and stellar mass; star formation rate; mass and accretion rate of the central black hole (BH). The algorithm reproduces the simulated gas density profiles with an average accuracy of ∼80-90% over the halo mass range 10^{9.5} \, \mathrm{M}_{\odot} < M_{\rm 200c} < 10^{15} \, \mathrm{M}_{\odot} and redshift interval $0

The increasing use of synthetic media, particularly deepfakes, is an emerging challenge for digital content verification. Although recent studies use both audio and visual information, most integrate these cues within a single model, which remains vulnerable to modality mismatches, noise, and manipulation. To address this gap, we propose DeepAgent, an advanced multi-agent collaboration framework that simultaneously incorporates both visual and audio modalities for the effective detection of deepfakes. DeepAgent consists of two complementary agents. Agent-1 examines each video with a streamlined AlexNet-based CNN to identify the symbols of deepfake manipulation, while Agent-2 detects audio-visual inconsistencies by combining acoustic features, audio transcriptions from Whisper, and frame-reading sequences of images through EasyOCR. Their decisions are fused through a Random Forest meta-classifier that improves final performance by taking advantage of the different decision boundaries learned by each agent. This study evaluates the proposed framework using three benchmark datasets to demonstrate both component-level and fused performance. Agent-1 achieves a test accuracy of 94.35% on the combined Celeb-DF and FakeAVCeleb datasets. On the FakeAVCeleb dataset, Agent-2 and the final meta-classifier attain accuracies of 93.69% and 81.56%, respectively. In addition, cross-dataset validation on DeepFakeTIMIT confirms the robustness of the meta-classifier, which achieves a final accuracy of 97.49%, and indicates a strong capability across diverse datasets. These findings confirm that hierarchy-based fusion enhances robustness by mitigating the weaknesses of individual modalities and demonstrate the effectiveness of a multi-agent approach in addressing diverse types of manipulations in deepfakes.

Axis-aligned decision trees are fast and stable but struggle on datasets with rotated or interaction-dependent decision boundaries, where informative splits require linear combinations of features rather than single-feature thresholds. Oblique forests address this with per-node hyperplane splits, but at added computational cost and implementation complexity. We propose a simple alternative: JARF, Jacobian-Aligned Random Forests. Concretely, we first fit an axis-aligned forest to estimate class probabilities or regression outputs, compute finite-difference gradients of these predictions with respect to each feature, aggregate them into an expected Jacobian outer product that generalizes the expected gradient outer product (EGOP), and use it as a single global linear preconditioner for all inputs. This supervised preconditioner applies a single global rotation of the feature space, then hands the transformed data back to a standard axis-aligned forest, preserving off-the-shelf training pipelines while capturing oblique boundaries and feature interactions that would otherwise require many axis-aligned splits to approximate. The same construction applies to any model that provides gradients, though we focus on random forests and gradient-boosted trees in this work. On tabular classification and regression benchmarks, this preconditioning consistently improves axis-aligned forests and often matches or surpasses oblique baselines while improving training time. Our experimental results and theoretical analysis together indicate that supervised preconditioning can recover much of the accuracy of oblique forests while retaining the simplicity and robustness of axis-aligned trees.
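
The preconditioning pipeline described above can be sketched for the single-output case, where the expected Jacobian outer product reduces to the EGOP. This is a sketch under stated assumptions, not the authors' implementation: `toy_predict` is a hypothetical smooth stand-in for the fitted forest's prediction function, the step size `eps` is arbitrary, and the preconditioner is applied directly as `H x` (the paper may normalize or transform it differently). A real forest is piecewise constant, so the finite-difference step would need to be chosen with more care.

```python
def finite_difference_gradient(predict, x, eps=1e-3):
    """Central-difference gradient of predict at point x."""
    grad = []
    for j in range(len(x)):
        hi, lo = list(x), list(x)
        hi[j] += eps
        lo[j] -= eps
        grad.append((predict(hi) - predict(lo)) / (2.0 * eps))
    return grad


def expected_gradient_outer_product(predict, X, eps=1e-3):
    """Average of g(x) g(x)^T over the rows of X (a d x d matrix)."""
    d = len(X[0])
    H = [[0.0] * d for _ in range(d)]
    for x in X:
        g = finite_difference_gradient(predict, x, eps)
        for a in range(d):
            for b in range(d):
                H[a][b] += g[a] * g[b] / len(X)
    return H


def precondition(X, H):
    """Apply the single global linear map x -> H x to every row."""
    return [[sum(H[a][b] * x[b] for b in range(len(x))) for a in range(len(H))]
            for x in X]


def toy_predict(x):
    # Hypothetical stand-in for a fitted forest: depends only on x0 + x1,
    # so the informative direction is oblique to both axes.
    return (x[0] + x[1]) ** 2


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
H = expected_gradient_outer_product(toy_predict, X)
X_new = precondition(X, H)  # would be handed to a second axis-aligned forest
print(H)
print(X_new)
```

In this toy case every entry of `H` is close to 8, so each transformed feature is proportional to `x0 + x1`, and a single axis-aligned threshold on it captures the oblique boundary.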

Decision making often occurs in the presence of incomplete information, leading to the under- or overestimation of risk. Leveraging the observable information to learn the complete information is called nowcasting. In practice, incomplete information is often a consequence of reporting or observation delays. In this paper, we propose an expectation-maximisation (EM) framework for nowcasting that uses machine learning techniques to model both the occurrence as well as the reporting process of events. We allow for the inclusion of covariate information specific to the occurrence and reporting periods as well as characteristics related to the entity for which events occurred. We demonstrate how the maximisation step and the information flow between EM iterations can be tailored to leverage the predictive power of neural networks and (extreme) gradient boosting machines (XGBoost). With simulation experiments, we show that we can effectively model both the occurrence and reporting of events when dealing with high-dimensional covariate information. In the presence of non-linear effects, we show that our methodology outperforms existing EM-based nowcasting frameworks that use generalised linear models in the maximisation step. Finally, we apply the framework to the reporting of Argentinian Covid-19 cases, where the XGBoost-based approach again is most performant.

Access to multiple predictive models trained for the same task, whether in regression or classification, is increasingly common in many applications. Aggregating their predictive uncertainties to produce reliable and efficient uncertainty quantification is therefore a critical but still underexplored challenge, especially within the framework of conformal prediction (CP). While CP methods can generate individual prediction sets from each model, combining them into a single, more informative set remains a challenging problem. To address this, we propose SACP (Symmetric Aggregated Conformal Prediction), a novel method that aggregates nonconformity scores from multiple predictors. SACP transforms these scores into e-values and combines them using any symmetric aggregation function. This flexible design enables a robust, data-driven framework for selecting aggregation strategies that yield sharper prediction sets. We also provide theoretical insights that help justify the validity and performance of the SACP approach. Extensive experiments on diverse datasets show that SACP consistently improves efficiency and often outperforms state-of-the-art model aggregation baselines.
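
The idea of turning nonconformity scores into e-values and aggregating them symmetrically can be sketched as follows. The specific score-to-e-value transform is an assumption: the sketch uses the standard conformal e-value `(n + 1) * s_test / (sum of calibration scores + s_test)`, which requires nonnegative scores, and the abstract does not say which transform SACP uses. The arithmetic mean is used as the aggregator because an average of e-values is again an e-value; other symmetric functions may need separate justification. All data values are invented.

```python
from statistics import mean


def conformal_e_value(cal_scores, test_score):
    """Conformal e-value from nonnegative nonconformity scores."""
    n = len(cal_scores)
    total = sum(cal_scores) + test_score
    return (n + 1) * test_score / total if total > 0 else 0.0


def aggregated_prediction_set(candidates, score_fns, cal_scores_per_model,
                              alpha, aggregate=mean):
    """Keep every candidate label whose aggregated e-value is below 1/alpha.

    By Markov's inequality, an e-value exceeds 1/alpha with probability
    at most alpha, so rejecting at that threshold keeps coverage >= 1 - alpha.
    """
    kept = []
    for y in candidates:
        e_values = [conformal_e_value(cal, score(y))
                    for score, cal in zip(score_fns, cal_scores_per_model)]
        if aggregate(e_values) < 1.0 / alpha:
            kept.append(y)
    return kept


# Two hypothetical regressors predicting 2.0 and 2.4 for the same input,
# scored by absolute residual, with made-up calibration residuals.
score_fns = [lambda y: abs(y - 2.0), lambda y: abs(y - 2.4)]
calibration = [[0.5, 0.4, 0.6, 0.5], [0.5, 0.4, 0.6, 0.5]]
print(aggregated_prediction_set([2.0, 2.2, 10.0], score_fns, calibration, alpha=0.5))
```

E-value thresholds are conservative with few calibration points (the e-value here can never exceed `n + 1`), which is why the toy example uses a large `alpha`.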

Accurate segmentation of cancerous lesions from 3D computed tomography (CT) scans is essential for automated treatment planning and response assessment. However, even state-of-the-art models combining self-supervised learning (SSL) pretrained transformers with convolutional decoders are susceptible to out-of-distribution (OOD) inputs, generating confidently incorrect tumor segmentations, posing risks for safe clinical deployment. Existing logit-based methods suffer from task-specific model biases, while architectural enhancements to explicitly detect OOD increase parameters and computational costs. Hence, we introduce a plug-and-play and lightweight post-hoc random forests-based OOD detection framework called RF-Deep that leverages deep features with limited outlier exposure. RF-Deep enhances generalization to imaging variations by repurposing the hierarchical features from the pretrained-then-finetuned backbone encoder, providing task-relevant OOD detection by extracting the features from multiple regions of interest anchored to the predicted tumor segmentations. Hence, it scales to images of varying fields-of-view. We compared RF-Deep against existing OOD detection methods using 1,916 CT scans across near-OOD (pulmonary embolism, negative COVID-19) and far-OOD (kidney cancer, healthy pancreas) datasets. RF-Deep achieved AUROC > 93.50 for the challenging near-OOD datasets and near-perfect detection (AUROC > 99.00) for the far-OOD datasets, substantially outperforming logit-based and radiomics approaches. RF-Deep maintained similar performance consistency across networks of different depths and pretraining strategies, demonstrating its effectiveness as a lightweight, architecture-agnostic approach to enhance the reliability of tumor segmentation from CT volumes.

Knowledge Distillation (KD) has emerged as a promising technique for model compression but faces critical limitations: (1) sensitivity to hyperparameters requiring extensive manual tuning, (2) capacity gap when distilling from very large teachers to small students, (3) suboptimal coordination in multi-teacher scenarios, and (4) inefficient use of computational resources. We present HPM-KD, a framework that integrates six synergistic components: (i) Adaptive Configuration Manager via meta-learning that eliminates manual hyperparameter tuning, (ii) Progressive Distillation Chain with automatically determined intermediate models, (iii) Attention-Weighted Multi-Teacher Ensemble that learns dynamic per-sample weights, (iv) Meta-Learned Temperature Scheduler that adapts temperature throughout training, (v) Parallel Processing Pipeline with intelligent load balancing, and (vi) Shared Optimization Memory for cross-experiment reuse. Experiments on CIFAR-10, CIFAR-100, and tabular datasets demonstrate that HPM-KD achieves 10x-15x compression while maintaining 85% accuracy retention, eliminates the need for manual tuning, and reduces training time by 30-40% via parallelization. Ablation studies confirm the independent contribution of each component (0.10-0.98 pp). HPM-KD is available as part of the open-source DeepBridge library.

Machine learning (ML) offers a powerful path toward discovering sustainable polymer materials, but progress has been limited by the lack of large, high-quality, and openly accessible polymer datasets. The Open Polymer Challenge (OPC) addresses this gap by releasing the first community-developed benchmark for polymer informatics, featuring a dataset with 10K polymers and 5 properties: thermal conductivity, radius of gyration, density, fractional free volume, and glass transition temperature. The challenge centers on multi-task polymer property prediction, a core step in virtual screening pipelines for materials discovery. Participants developed models under realistic constraints that include small data, label imbalance, and heterogeneous simulation sources, using techniques such as feature-based augmentation, transfer learning, self-supervised pretraining, and targeted ensemble strategies. The competition also revealed important lessons about data preparation, distribution shifts, and cross-group simulation consistency, informing best practices for future large-scale polymer datasets. The resulting models, analysis, and released data create a new foundation for molecular AI in polymer science and are expected to accelerate the development of sustainable and energy-efficient materials. Along with the competition, we release the test dataset at this https URL. We also release the data generation pipeline at this https URL, which simulates more than 25 properties, including thermal conductivity, radius of gyration, and density.

Time delays in communication channels present significant challenges for bilateral teleoperation systems, affecting both transparency and stability. Although traditional wave variable-based methods for a four-channel architecture ensure stability via passivity, they remain vulnerable to wave reflections and disturbances like variable delays and environmental noise. This article presents a data-driven hybrid framework that replaces the conventional wave-variable transform with an ensemble of three advanced sequence models, each optimized separately via the state-of-the-art Optuna optimizer, and combined through a stacking meta-learner. The base predictors include an LSTM augmented with Prophet for trend correction, an LSTM-based feature extractor paired with clustering and a random forest for improved regression, and a CNN-LSTM model for localized and long-term dynamics. Experimental validation was performed in Python using data generated from the baseline system implemented in MATLAB/Simulink. The results show that our optimized ensemble achieves a transparency comparable to the baseline wave-variable system under varying delays and noise, while ensuring stability through passivity constraints.

The rise of 5G/6G network technologies promises to enable applications like autonomous vehicles and virtual reality, resulting in a significant increase in connected devices and necessarily complicating network management. Even worse, these applications often have strict, yet heterogeneous, performance requirements across metrics like latency and reliability. Much recent work has thus focused on developing the ability to predict network performance. However, traditional methods for network modeling, like discrete event simulators and emulation, often fail to balance accuracy and scalability. Network Digital Twins (NDTs), augmented by machine learning, present a viable solution by creating virtual replicas of physical networks for real-time simulation and analysis. State-of-the-art models, however, fall short of full-fledged NDTs, as they often focus only on a single performance metric or simulated network data. We introduce M3Net, a Multi-Metric Mixture-of-experts (MoE) NDT that uses a graph neural network architecture to estimate multiple performance metrics from an expanded set of network state data in a range of scenarios. We show that M3Net significantly enhances the accuracy of flow delay predictions by reducing the MAPE (Mean Absolute Percentage Error) from 20.06% to 17.39%, while also achieving 66.47% and 78.7% accuracy on jitter and packets dropped for each flow

State-of-the-art person re-identification methods achieve impressive accuracy but remain largely opaque, leaving open the question: which high-level semantic attributes do these models actually rely on? We propose MoSAIC-ReID, a Mixture-of-Experts framework that systematically quantifies the importance of pedestrian attributes for re-identification. Our approach uses LoRA-based experts, each linked to a single attribute, and an oracle router that enables controlled attribution analysis. While MoSAIC-ReID achieves competitive performance on Market-1501 and DukeMTMC under the assumption that attribute annotations are available at test time, its primary value lies in providing a large-scale, quantitative study of attribute importance across intrinsic and extrinsic cues. Using generalized linear models, statistical tests, and feature-importance analyses, we reveal which attributes, such as clothing colors and intrinsic characteristics, contribute most strongly, while infrequent cues (e.g. accessories) have limited effect. This work offers a principled framework for interpretable ReID and highlights the requirements for integrating explicit semantic knowledge in practice. Code is available at this https URL

02 Dec 2025

Researchers at Google, Google DeepMind, and Google Research developed a distribution-calibrated aggregation method for "Thinking-LLM-as-a-Judge" evaluations, leveraging a Davidson-style probabilistic model to process multiple independent LLM judgments. This method consistently outperforms existing aggregation baselines across various benchmarks and sometimes matches or exceeds individual human rater performance, while reducing positional bias.

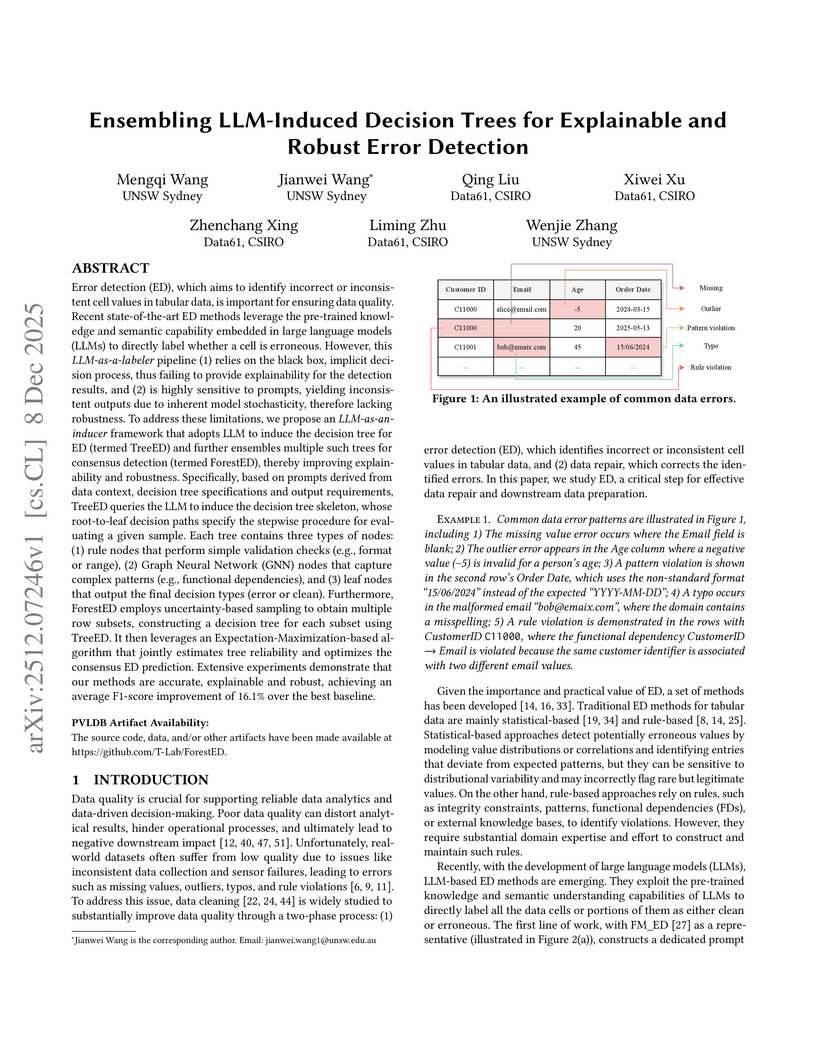

Error detection (ED), which aims to identify incorrect or inconsistent cell values in tabular data, is important for ensuring data quality. Recent state-of-the-art ED methods leverage the pre-trained knowledge and semantic capability embedded in large language models (LLMs) to directly label whether a cell is erroneous. However, this LLM-as-a-labeler pipeline (1) relies on a black-box, implicit decision process, thus failing to provide explainability for the detection results, and (2) is highly sensitive to prompts, yielding inconsistent outputs due to inherent model stochasticity, and therefore lacks robustness. To address these limitations, we propose an LLM-as-an-inducer framework that uses an LLM to induce a decision tree for ED (termed TreeED) and further ensembles multiple such trees for consensus detection (termed ForestED), thereby improving explainability and robustness. Specifically, based on prompts derived from data context, decision tree specifications and output requirements, TreeED queries the LLM to induce the decision tree skeleton, whose root-to-leaf decision paths specify the stepwise procedure for evaluating a given sample. Each tree contains three types of nodes: (1) rule nodes that perform simple validation checks (e.g., format or range), (2) Graph Neural Network (GNN) nodes that capture complex patterns (e.g., functional dependencies), and (3) leaf nodes that output the final decision types (error or clean). Furthermore, ForestED employs uncertainty-based sampling to obtain multiple row subsets, constructing a decision tree for each subset using TreeED. It then leverages an Expectation-Maximization-based algorithm that jointly estimates tree reliability and optimizes the consensus ED prediction. Extensive experiments demonstrate that our methods are accurate, explainable and robust, achieving an average F1-score improvement of 16.1% over the best baseline.
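
The joint estimation of tree reliability and consensus labels can be sketched with a simplified EM loop. This is an assumption-laden illustration, not ForestED's algorithm: it uses a one-coin Dawid-Skene-style model in which each tree has a single reliability (the probability that its label matches the true one), and the vote matrix is invented.

```python
import math


def _clip(p, eps=1e-6):
    """Keep probabilities away from 0 and 1 so the logs stay finite."""
    return min(max(p, eps), 1.0 - eps)


def em_consensus(votes, n_iter=20):
    """votes[t][i] is tree t's label for cell i (1 = error, 0 = clean).

    Returns (consensus labels, per-tree reliability estimates).
    """
    n_trees, n_cells = len(votes), len(votes[0])
    # Initialise P(cell i is erroneous) with the plain vote share.
    post = [sum(votes[t][i] for t in range(n_trees)) / n_trees
            for i in range(n_cells)]
    reliability = [0.5] * n_trees
    for _ in range(n_iter):
        # M-step: error prior and each tree's expected agreement rate.
        prior = _clip(sum(post) / n_cells)
        reliability = [
            _clip(sum(post[i] if votes[t][i] == 1 else 1.0 - post[i]
                      for i in range(n_cells)) / n_cells)
            for t in range(n_trees)
        ]
        # E-step: reliability-weighted log-odds vote for every cell.
        weights = [math.log(r / (1.0 - r)) for r in reliability]
        new_post = []
        for i in range(n_cells):
            z = math.log(prior / (1.0 - prior))
            for t in range(n_trees):
                z += weights[t] if votes[t][i] == 1 else -weights[t]
            new_post.append(1.0 / (1.0 + math.exp(-z)))
        post = new_post
    labels = [1 if p >= 0.5 else 0 for p in post]
    return labels, reliability


# Two consistent trees and one that disagrees on three of eight cells.
votes = [
    [1, 1, 0, 0, 0, 0, 1, 0],
    [1, 1, 0, 0, 0, 0, 1, 0],
    [1, 0, 0, 1, 0, 1, 1, 0],
]
labels, reliability = em_consensus(votes)
print(labels)
print([round(r, 3) for r in reliability])
```

Unlike plain majority voting, the E-step weights each tree by its estimated reliability, so a tree that often disagrees with the emerging consensus contributes less to later rounds.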

The TrajMoE framework, developed by researchers from the Institute of Automation, Chinese Academy of Sciences, and Xiaomi, achieved third place in the navsim ICCV benchmark by introducing scenario-adaptive trajectory priors via Mixture of Experts and policy-driven trajectory scoring refinement using reinforcement learning. This approach enhances end-to-end autonomous driving systems' adaptability and robustness in complex environments, yielding a competitive score of 51.08.

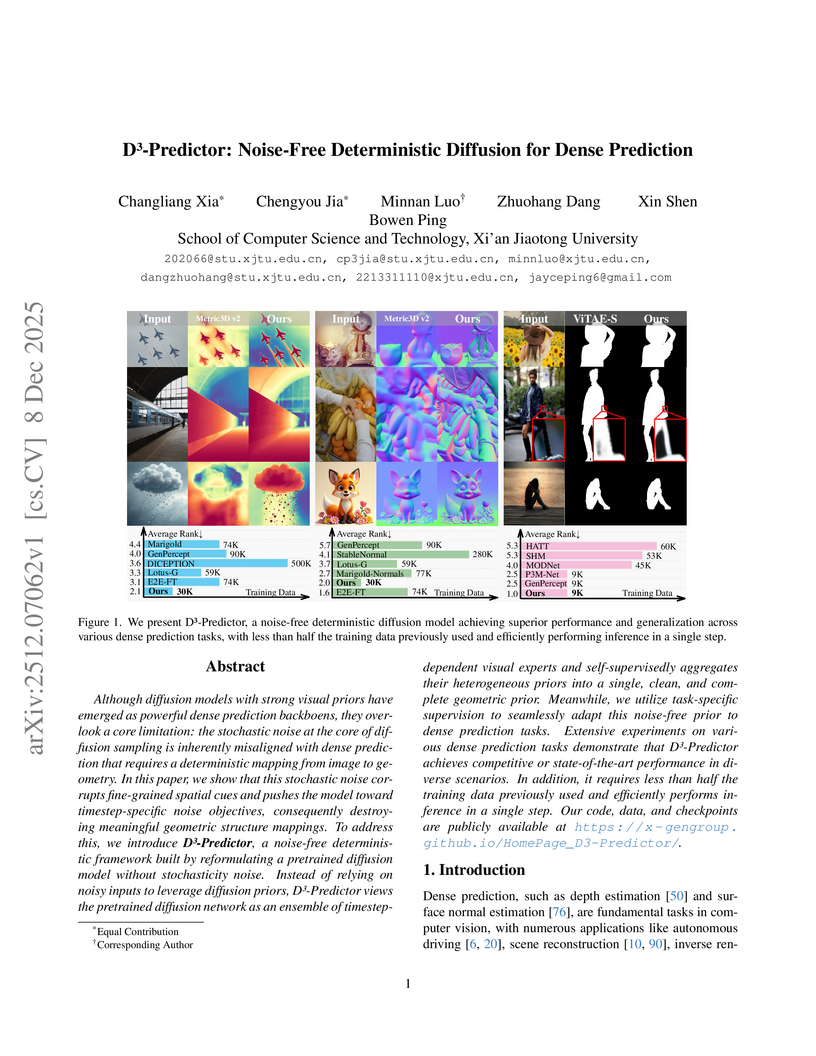

Although diffusion models with strong visual priors have emerged as powerful dense prediction backbones, they overlook a core limitation: the stochastic noise at the core of diffusion sampling is inherently misaligned with dense prediction, which requires a deterministic mapping from image to geometry. In this paper, we show that this stochastic noise corrupts fine-grained spatial cues and pushes the model toward timestep-specific noise objectives, consequently destroying meaningful geometric structure mappings. To address this, we introduce D3-Predictor, a noise-free deterministic framework built by reformulating a pretrained diffusion model without stochastic noise. Instead of relying on noisy inputs to leverage diffusion priors, D3-Predictor views the pretrained diffusion network as an ensemble of timestep-dependent visual experts and self-supervisedly aggregates their heterogeneous priors into a single, clean, and complete geometric prior. Meanwhile, we utilize task-specific supervision to seamlessly adapt this noise-free prior to dense prediction tasks. Extensive experiments on various dense prediction tasks demonstrate that D3-Predictor achieves competitive or state-of-the-art performance in diverse scenarios. In addition, it requires less than half the training data previously used and efficiently performs inference in a single step. Our code, data, and checkpoints are publicly available at this https URL.

Researchers from Tencent Youtu Lab and partner universities developed AlignGemini, a dual-perspective detector based on a Task-Model Alignment principle, achieving superior generalization and robustness in AI-generated image detection. The system demonstrated an average accuracy gain of +9.5 on diverse 'in-the-wild' benchmarks and a +11.9 pixel accuracy improvement on the new AIGI-Now benchmark.

Uncertainty Quantification (UQ) presents a pivotal challenge in the field of Artificial Intelligence (AI), profoundly impacting decision-making, risk assessment and model reliability. In this paper, we introduce Credal and Interval Deep Evidential Classifications (CDEC and IDEC, respectively) as novel approaches to address UQ in classification tasks. CDEC and IDEC leverage a credal set (closed and convex set of probabilities) and an interval of evidential predictive distributions, respectively, allowing us to avoid overfitting to the training data and to systematically assess both epistemic (reducible) and aleatoric (irreducible) uncertainties. When those surpass acceptable thresholds, CDEC and IDEC have the capability to abstain from classification and flag an excess of epistemic or aleatoric uncertainty, as relevant. Conversely, within acceptable uncertainty bounds, CDEC and IDEC provide a collection of labels with robust probabilistic guarantees. CDEC and IDEC are trained using standard backpropagation and a loss function that draws from the theory of evidence. They overcome the shortcomings of previous efforts, and extend the current evidential deep learning literature. Through extensive experiments on MNIST, CIFAR-10 and CIFAR-100, together with their natural OoD shifts (F-MNIST/K-MNIST, SVHN/Intel, TinyImageNet), we show that CDEC and IDEC achieve competitive predictive accuracy, state-of-the-art OoD detection under epistemic and total uncertainty, and tight, well-calibrated prediction regions that expand reliably under distribution shift. An ablation over ensemble size further demonstrates that CDEC attains stable uncertainty estimates with only a small ensemble.
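
The abstain-on-excess-uncertainty behaviour can be illustrated with a swapped-in technique: the standard entropy-based decomposition for an ensemble of classifiers, not the credal-set or interval constructions that CDEC and IDEC use. Total uncertainty is the entropy of the averaged prediction, aleatoric uncertainty is the average member entropy, and epistemic uncertainty is their difference. The thresholds and probability vectors below are hypothetical.

```python
import math
from statistics import mean


def entropy(probs):
    """Shannon entropy in nats; zero-probability classes contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def decompose_uncertainty(member_probs):
    """Return (total, aleatoric, epistemic) for one input."""
    k = len(member_probs)
    mean_probs = [sum(col) / k for col in zip(*member_probs)]
    total = entropy(mean_probs)
    aleatoric = mean(entropy(p) for p in member_probs)
    return total, aleatoric, total - aleatoric


def predict_or_abstain(member_probs, max_epistemic=0.2, max_aleatoric=0.5):
    """Predict the top class, or abstain and name the excessive uncertainty."""
    _, aleatoric, epistemic = decompose_uncertainty(member_probs)
    if epistemic > max_epistemic:
        return ("abstain", "epistemic")
    if aleatoric > max_aleatoric:
        return ("abstain", "aleatoric")
    k = len(member_probs)
    mean_probs = [sum(col) / k for col in zip(*member_probs)]
    return ("predict", mean_probs.index(max(mean_probs)))


print(predict_or_abstain([[0.9, 0.1], [0.9, 0.1]]))  # members agree, confident
print(predict_or_abstain([[1.0, 0.0], [0.0, 1.0]]))  # members disagree
print(predict_or_abstain([[0.5, 0.5], [0.5, 0.5]]))  # members agree, noisy input
```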

Classification of functional data where observations are curves or trajectories poses unique challenges, particularly under severe class imbalance. Traditional Random Forest algorithms, while robust for tabular data, often fail to capture the intrinsic structure of functional observations and struggle with minority class detection. This paper introduces Functional Random Forest with Adaptive Cost-Sensitive Splitting (FRF-ACS), a novel ensemble framework designed for imbalanced functional data classification. The proposed method leverages basis expansions and Functional Principal Component Analysis (FPCA) to represent curves efficiently, enabling trees to operate on low dimensional functional features. To address imbalance, we incorporate a dynamic cost sensitive splitting criterion that adjusts class weights locally at each node, combined with a hybrid sampling strategy integrating functional SMOTE and weighted bootstrapping. Additionally, curve specific similarity metrics replace traditional Euclidean measures to preserve functional characteristics during leaf assignment. Extensive experiments on synthetic and real world datasets including biomedical signals and sensor trajectories demonstrate that FRF-ACS significantly improves minority class recall and overall predictive performance compared to existing functional classifiers and imbalance handling techniques. This work provides a scalable, interpretable solution for high dimensional functional data analysis in domains where minority class detection is critical.
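
A node-local cost-sensitive splitting criterion can be sketched as a class-weighted Gini gain. The exact criterion in FRF-ACS is not given in the abstract, so the weighting rule here is an assumption: class weights are recomputed at each node as inverse class frequencies (the "balanced" heuristic), and the labels would in practice come from samples represented by FPCA scores or basis coefficients.

```python
from collections import Counter


def local_class_weights(labels):
    """Inverse-frequency class weights computed from this node's labels."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}


def weighted_gini(labels, weights):
    """Gini impurity where each sample counts with its class weight."""
    mass = Counter()
    for y in labels:
        mass[y] += weights[y]
    total = sum(mass.values())
    if total == 0:
        return 0.0
    return 1.0 - sum((m / total) ** 2 for m in mass.values())


def cost_sensitive_gain(labels, left_mask):
    """Weighted impurity decrease of a candidate split at one node."""
    w = local_class_weights(labels)
    left = [y for y, go_left in zip(labels, left_mask) if go_left]
    right = [y for y, go_left in zip(labels, left_mask) if not go_left]
    w_left = sum(w[y] for y in left)
    w_right = sum(w[y] for y in right)
    children = (w_left * weighted_gini(left, w)
                + w_right * weighted_gini(right, w)) / (w_left + w_right)
    return weighted_gini(labels, w) - children


# Node with 8 majority and 2 minority samples.
labels = [0] * 8 + [1] * 2
isolates_minority = [False] * 8 + [True] * 2
splits_majority = [True] * 4 + [False] * 6
print(cost_sensitive_gain(labels, isolates_minority))
print(cost_sensitive_gain(labels, splits_majority))
```

With the local weights, the two minority samples carry as much total mass as the eight majority samples, so a split that isolates them scores markedly higher than one that only partitions the majority class.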
