QinetiQ

Probabilities or confidence values produced by artificial intelligence (AI) and machine learning (ML) models often do not reflect their true accuracy, with some models being under- or over-confident in their predictions. For example, if a model is 80% sure of an outcome, is it correct 80% of the time? Probability calibration metrics measure the discrepancy between confidence and accuracy, providing an independent assessment of model calibration performance that complements traditional accuracy metrics. Understanding calibration is important when the outputs of multiple systems are combined, for assurance in safety or business-critical contexts, and for building user trust in models. This paper provides a comprehensive review of probability calibration metrics for classifier and object detection models, organising them according to a number of different categorisations to highlight their relationships. We identify 82 major metrics, which can be grouped into four classifier families (point-based, bin-based, kernel or curve-based, and cumulative) and an object detection family. For each metric, we provide equations where available, facilitating implementation and comparison by future researchers.
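
To make the "80% sure, correct 80% of the time?" question concrete, the following is a minimal sketch of one widely used bin-based metric, the Expected Calibration Error (ECE). It is not taken from the paper. The function name, the equal-width binning, and the default of 10 bins are assumptions chosen for illustration.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Bin-based ECE sketch: weighted mean of |accuracy - mean confidence|.

    confidences: confidence in the predicted class, each in [0, 1].
    correct:     booleans, True where the prediction was right.
    n_bins:      number of equal-width bins (an illustrative default).
    """
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Clamp so that a confidence of exactly 1.0 falls in the last bin.
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, ok))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / n) * abs(accuracy - mean_conf)
    return ece
```

A model that reports 0.8 and is right four times out of five scores close to zero, while one that reports 0.9 and is right one time in four scores about 0.65.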

We present a framework for characterizing neurosis in embodied AI: behaviors that are internally coherent yet misaligned with reality, arising from interactions among planning, uncertainty handling, and aversive memory. In a grid navigation stack we catalogue recurrent modalities including flip-flop, plan churn, perseveration loops, paralysis and hypervigilance, futile search, belief incoherence, tie break thrashing, corridor thrashing, optimality compulsion, metric mismatch, policy oscillation, and limited-visibility variants. For each we give lightweight online detectors and reusable escape policies (short commitments, a margin to switch, smoothing, principled arbitration). We then show that durable phobic avoidance can persist even under full visibility when learned aversive costs dominate local choice, producing long detours despite globally safe routes. Using First/Second/Third Law as engineering shorthand for safety latency, command compliance, and resource efficiency, we argue that local fixes are insufficient; global failures can remain. To surface them, we propose genetic-programming based destructive testing that evolves worlds and perturbations to maximize law pressure and neurosis scores, yielding adversarial curricula and counterfactual traces that expose where architectural revision, not merely symptom-level patches, is required.
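
As a rough illustration of two of the escape policies named above (short commitments and a margin to switch), the sketch below keeps the current option unless an alternative is cheaper by a clear margin and a minimum commitment period has elapsed. It is not the authors' implementation. The function names, the cost dictionaries, and the parameters `margin` and `min_commit` are hypothetical.

```python
def choose_with_hysteresis(costs_over_time, margin=0.5, min_commit=3):
    """Pick one option per step, resisting small cost fluctuations.

    costs_over_time: list of dicts mapping option name -> cost at that step.
    margin:          required cost advantage before switching (assumed value).
    min_commit:      steps to hold a choice before a switch is allowed.
    """
    current, held, choices = None, 0, []
    for costs in costs_over_time:
        best = min(costs, key=costs.get)
        if current is None or current not in costs:
            current, held = best, 0
        elif (best != current and held >= min_commit
              and costs[current] - costs[best] > margin):
            current, held = best, 0
        else:
            held += 1
        choices.append(current)
    return choices


def count_switches(choices):
    """Simple flip-flop indicator: number of changes between consecutive steps."""
    return sum(1 for a, b in zip(choices, choices[1:]) if a != b)
```

With two options whose costs alternate by a small amount, a purely greedy choice switches at every step, whereas the policy above holds its first choice and only switches once the advantage exceeds the margin.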

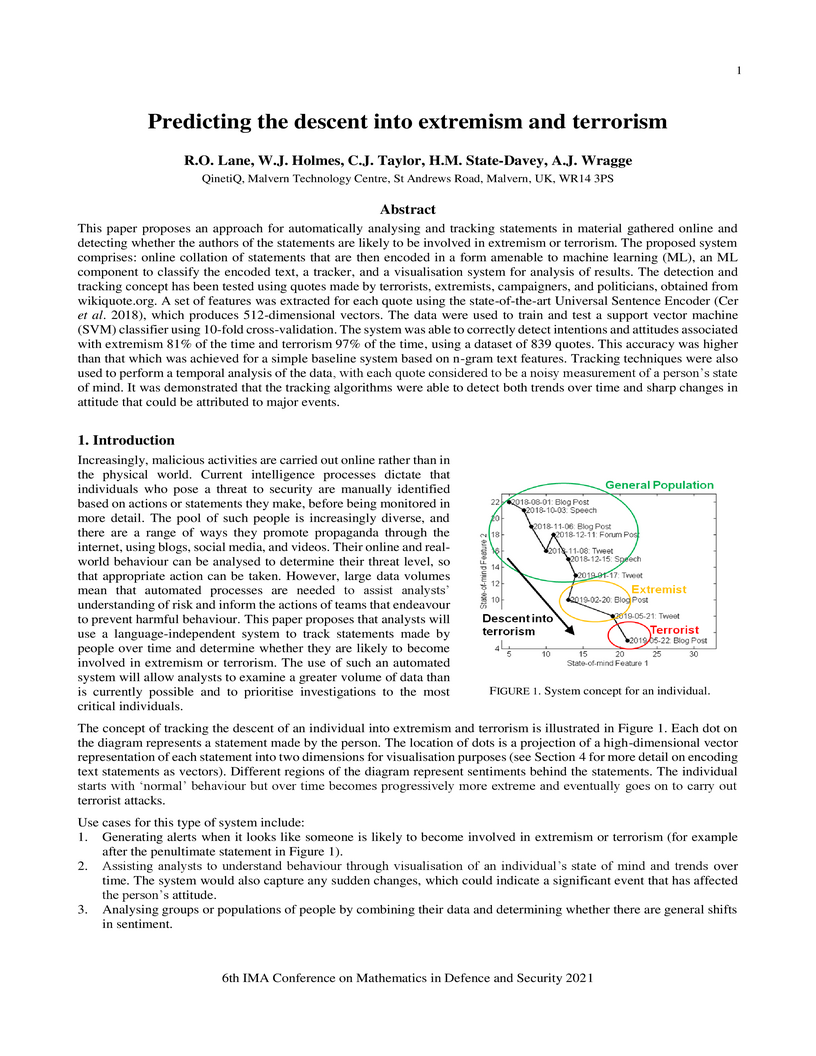

This paper proposes an approach for automatically analysing and tracking statements in material gathered online and detecting whether the authors of the statements are likely to be involved in extremism or terrorism. The proposed system comprises: online collation of statements that are then encoded in a form amenable to machine learning (ML), an ML component to classify the encoded text, a tracker, and a visualisation system for analysis of results. The detection and tracking concept has been tested using quotes made by terrorists, extremists, campaigners, and politicians, obtained from this http URL. A set of features was extracted for each quote using the state-of-the-art Universal Sentence Encoder (Cer et al. 2018), which produces 512-dimensional vectors. The data were used to train and test a support vector machine (SVM) classifier using 10-fold cross-validation. The system was able to correctly detect intentions and attitudes associated with extremism 81% of the time and terrorism 97% of the time, using a dataset of 839 quotes. This accuracy was higher than that which was achieved for a simple baseline system based on n-gram text features. Tracking techniques were also used to perform a temporal analysis of the data, with each quote considered to be a noisy measurement of a person's state of mind. It was demonstrated that the tracking algorithms were able to detect both trends over time and sharp changes in attitude that could be attributed to major events.
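
The idea of treating each quote as a noisy measurement of an underlying state can be sketched with a scalar Kalman filter under a random-walk model. The abstract does not say which tracking algorithm was used, so this is an illustrative stand-in. The function name and the variances `process_var` and `meas_var` are assumed values.

```python
def kalman_1d(measurements, process_var=0.01, meas_var=0.25, x0=0.0, p0=1.0):
    """Scalar Kalman filter with a random-walk state model (illustrative only).

    measurements: noisy scores, for example one classifier score per quote.
    process_var:  how quickly the hidden state is assumed to drift.
    meas_var:     assumed noise variance of each measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p = p + process_var        # predict: uncertainty grows between quotes
        gain = p / (p + meas_var)  # weight given to the new measurement
        x = x + gain * (z - x)     # update the estimate towards the measurement
        p = (1.0 - gain) * p       # uncertainty shrinks after the update
        estimates.append(x)
    return estimates
```

A larger `process_var` lets the estimate follow sharp changes more quickly, while a smaller one favours smooth long-term trends.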

Understanding the confidence with which a machine learning model classifies an input datum is an important, and perhaps under-investigated, concept. In this paper, we propose a new calibration metric, the Entropic Calibration Difference (ECD). Based on existing research in the field of state estimation, specifically target tracking (TT), we show how ECD may be applied to binary classification machine learning models. We describe the relative importance of under- and over-confidence and how they are not conflated in the TT literature. Indeed, our metric distinguishes under- from over-confidence. We consider this important given that algorithms that are under-confident are likely to be 'safer' than algorithms that are over-confident, albeit at the expense of also being over-cautious and so statistically inefficient. We demonstrate how this new metric performs on real and simulated data and compare with other metrics for machine learning model probability calibration, including the Expected Calibration Error (ECE) and its signed counterpart, the Expected Signed Calibration Error (ESCE).
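
To show why a signed metric carries information that an unsigned one does not, the sketch below computes a signed and an unsigned binned calibration gap side by side. It does not implement ECD, and it is not the paper's definition of ESCE. The sign convention (mean confidence minus accuracy, so positive means over-confident), the function name, and the equal-width binning are all assumptions.

```python
def signed_and_unsigned_gap(confidences, correct, n_bins=10):
    """Return (signed, unsigned) weighted calibration gaps over equal-width bins.

    Assumed convention: gap = mean confidence - accuracy, so a positive signed
    value indicates over-confidence and a negative one under-confidence.
    """
    n = len(confidences)
    if n == 0 or n != len(correct):
        raise ValueError("inputs must be non-empty and of equal length")
    stats = [[0.0, 0, 0] for _ in range(n_bins)]  # confidence sum, hits, count
    for conf, ok in zip(confidences, correct):
        entry = stats[min(int(conf * n_bins), n_bins - 1)]
        entry[0] += conf
        entry[1] += 1 if ok else 0
        entry[2] += 1
    signed = unsigned = 0.0
    for conf_sum, hits, count in stats:
        if count:
            gap = (conf_sum - hits) / count
            signed += (count / n) * gap
            unsigned += (count / n) * abs(gap)
    return signed, unsigned
```

When one bin is over-confident and another is under-confident by the same amount, the signed value cancels towards zero while the unsigned value does not, so the two figures are best read together.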

24 Sep 2025

In this work we consider a routing problem and compare quadratic and higher-order representations using the Quantum Approximate Optimisation Algorithm (QAOA). The majority of works investigating QAOA use quadratic Hamiltonians to represent the considered problems, which can lead to poor scaling in qubit requirements. We address the gap of direct comparisons between quadratic and higher-order forms through an investigation into two distinct formulations of the same use case. We find that the higher-order form yields better solution quality and scales better in terms of numbers of qubits, but requires more two-qubit gates. We additionally consider a factoring method to reduce the gate depth of the higher-order version, which achieves a significant reduction in the number of two-qubit gates when run on real IBM hardware.

This study investigates the performance of the two most relevant computer vision deep learning architectures, Convolutional Neural Network and Vision Transformer, for event-based cameras. These cameras capture scene changes, unlike traditional frame-based cameras, which capture static images, and are particularly suited for dynamic environments such as UAVs and autonomous vehicles. The deep learning models studied in this work are ResNet34 and ViT B16, fine-tuned on the GEN1 event-based dataset. The research evaluates and compares these models under both standard conditions and in the presence of simulated noise. Initial evaluations on the clean GEN1 dataset reveal that ResNet34 and ViT B16 achieve accuracies of 88% and 86%, respectively, with ResNet34 showing a slight advantage in classification accuracy. However, the ViT B16 model demonstrates notable robustness, particularly given its pre-training on a smaller dataset. Although this study focuses on ground-based vehicle classification, the methodologies and findings hold significant promise for adaptation to UAV contexts, including aerial object classification and event-based vision systems for aviation-related tasks.

Behavioural analytics provides insights into individual and crowd behaviour, enabling analysis of what previously happened and predictions for how people may be likely to act in the future. In defence and security, this analysis allows organisations to achieve tactical and strategic advantage through influence campaigns, a key counterpart to physical activities. Before action can be taken, online and real-world behaviour must be analysed to determine the level of threat. Huge data volumes mean that automated processes are required to attain an accurate understanding of risk. We describe the mathematical basis of technologies to analyse quotes in multiple languages. These include a Bayesian network to understand behavioural factors, state estimation algorithms for time series analysis, and machine learning algorithms for classification. We present results from studies of quotes in English, French, and Arabic, from anti-violence campaigners, politicians, extremists, and terrorists. The algorithms correctly identify extreme statements, and analysis at individual, group, and population levels detects both trends over time and sharp changes attributed to major geopolitical events. Group analysis shows that additional population characteristics can be determined, such as polarisation over particular issues and large-scale shifts in attitude. Finally, MP voting behaviour and statements from publicly available records are analysed to determine the level of correlation between what people say and what they do.

17 Mar 2005

This paper reports the current status of the DARPA Quantum Network, which became fully operational in BBN's laboratory in October 2003, and has been continuously running in 6 nodes operating through telecommunications fiber between Harvard University, Boston University, and BBN since June 2004. The DARPA Quantum Network is the world's first quantum cryptography network, and perhaps also the first QKD system providing continuous operation across a metropolitan area. Four more nodes are now being added to bring the total to 10 QKD nodes. This network supports a variety of QKD technologies, including phase-modulated lasers through fiber, entanglement through fiber, and free-space QKD. We provide a basic introduction and rationale for this network, discuss the February 2005 status of the various QKD hardware suites and software systems in the network, and describe our operational experience with the DARPA Quantum Network to date. We conclude with a discussion of our ongoing work.

A concept for an Impact Mitigation Preparation Mission, called Don Quijote, is to send two spacecraft to a Near-Earth Asteroid (NEA): an Orbiter and an Impactor. The Impactor collides with the asteroid while the Orbiter measures the resulting change in the asteroid's orbit, by means of a Radio Science Experiment (RSE) carried out before and after impact. Three parallel Phase A studies on Don Quijote were carried out for the European Space Agency: the research presented here reflects outcomes of the study by QinetiQ. We discuss the mission objectives with regard to the prioritisation of payload instruments, with emphasis on the interpretation of the impact. The Radio Science Experiment is described, and we examine how solar radiation pressure may increase the uncertainty in measuring the orbit of the target asteroid. It is determined that, to measure the change in orbit accurately, a thermal IR spectrometer is mandatory in order to measure the Yarkovsky effect. The advantages of having a laser altimeter are discussed. The advantages of a dedicated wide-angle impact camera are also discussed, and the field of view is initially sized through a simple model of the impact.
