Queen Mary University London

To be considered reliable, a model must be calibrated so that its confidence in each decision closely reflects its true outcome. In this blogpost we'll take a look at the most commonly used definition for calibration and then dive into a frequently used evaluation measure for model calibration. We'll then cover some of the drawbacks of this measure and how these surfaced the need for additional notions of calibration, which require their own new evaluation measures. This post is not intended to be an in-depth dissection of all works on calibration, nor does it focus on how to calibrate models. Instead, it is meant to provide a gentle introduction to the different notions and their evaluation measures as well as to re-highlight some issues with a measure that is still widely used to evaluate calibration.

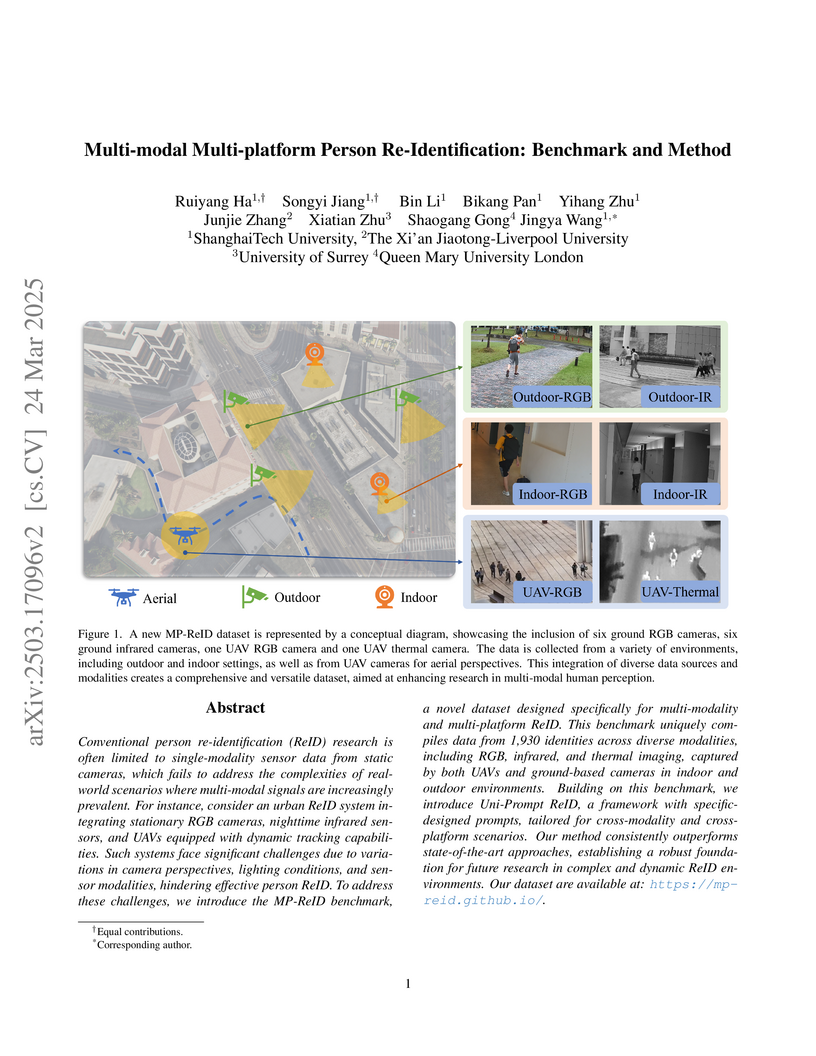

Conventional person re-identification (ReID) research is often limited to

single-modality sensor data from static cameras, which fails to address the

complexities of real-world scenarios where multi-modal signals are increasingly

prevalent. For instance, consider an urban ReID system integrating stationary

RGB cameras, nighttime infrared sensors, and UAVs equipped with dynamic

tracking capabilities. Such systems face significant challenges due to

variations in camera perspectives, lighting conditions, and sensor modalities,

hindering effective person ReID. To address these challenges, we introduce the

MP-ReID benchmark, a novel dataset designed specifically for multi-modality and

multi-platform ReID. This benchmark uniquely compiles data from 1,930

identities across diverse modalities, including RGB, infrared, and thermal

imaging, captured by both UAVs and ground-based cameras in indoor and outdoor

environments. Building on this benchmark, we introduce Uni-Prompt ReID, a

framework with specific-designed prompts, tailored for cross-modality and

cross-platform scenarios. Our method consistently outperforms state-of-the-art

approaches, establishing a robust foundation for future research in complex and

dynamic ReID environments. Our dataset are available

at:this https URL

Foundational image-language models have generated considerable interest due to their efficient adaptation to downstream tasks by prompt learning. Prompt learning treats part of the language model input as trainable while freezing the rest, and optimizes an Empirical Risk Minimization objective. However, Empirical Risk Minimization is known to suffer from distributional shifts which hurt generalizability to prompts unseen during training. By leveraging the regularization ability of Bayesian methods, we frame prompt learning from the Bayesian perspective and formulate it as a variational inference problem. Our approach regularizes the prompt space, reduces overfitting to the seen prompts and improves the prompt generalization on unseen prompts. Our framework is implemented by modeling the input prompt space in a probabilistic manner, as an a priori distribution which makes our proposal compatible with prompt learning approaches that are unconditional or conditional on the image. We demonstrate empirically on 15 benchmarks that Bayesian prompt learning provides an appropriate coverage of the prompt space, prevents learning spurious features, and exploits transferable invariant features. This results in better generalization of unseen prompts, even across different datasets and domains. Code available at: this https URL

Research on generative systems in music has seen considerable attention and growth in recent years. A variety of attempts have been made to systematically evaluate such systems.

We present an interdisciplinary review of the common evaluation targets, methodologies, and metrics for the evaluation of both system output and model use, covering subjective and objective approaches, qualitative and quantitative approaches, as well as empirical and computational methods. We examine the benefits and limitations of these approaches from a musicological, an engineering, and an HCI perspective.

A new class of tools, colloquially called generative AI, can produce high-quality artistic media for visual arts, concept art, music, fiction, literature, video, and animation. The generative capabilities of these tools are likely to fundamentally alter the creative processes by which creators formulate ideas and put them into production. As creativity is reimagined, so too may be many sectors of society. Understanding the impact of generative AI - and making policy decisions around it - requires new interdisciplinary scientific inquiry into culture, economics, law, algorithms, and the interaction of technology and creativity. We argue that generative AI is not the harbinger of art's demise, but rather is a new medium with its own distinct affordances. In this vein, we consider the impacts of this new medium on creators across four themes: aesthetics and culture, legal questions of ownership and credit, the future of creative work, and impacts on the contemporary media ecosystem. Across these themes, we highlight key research questions and directions to inform policy and beneficial uses of the technology.

In applied game theory the motivation of players is a key element. It is

encoded in the payoffs of the game form and often based on utility functions.

But there are cases were formal descriptions in the form of a utility function

do not exist. In this paper we introduce a representation of games where

players' goals are modeled based on so-called higher-order functions. Our

representation provides a general and powerful way to mathematically summarize

players' intentions. In our framework utility functions as well as preference

relations are special cases to describe players' goals. We show that in

higher-order functions formal descriptions of players may still exist where

utility functions do not using a classical example, a variant of Keynes' beauty

contest. We also show that equilibrium conditions based on Nash can be easily

adapted to our framework. Lastly, this framework serves as a stepping stone to

powerful tools from computer science that can be usefully applied to economic

game theory in the future such as computational and computability aspects.

The subject of "fairness" in artificial intelligence (AI) refers to assessing AI algorithms for potential bias based on demographic characteristics such as race and gender, and the development of algorithms to address this bias. Most applications to date have been in computer vision, although some work in healthcare has started to emerge. The use of deep learning (DL) in cardiac MR segmentation has led to impressive results in recent years, and such techniques are starting to be translated into clinical practice. However, no work has yet investigated the fairness of such models. In this work, we perform such an analysis for racial/gender groups, focusing on the problem of training data imbalance, using a nnU-Net model trained and evaluated on cine short axis cardiac MR data from the UK Biobank dataset, consisting of 5,903 subjects from 6 different racial groups. We find statistically significant differences in Dice performance between different racial groups. To reduce the racial bias, we investigated three strategies: (1) stratified batch sampling, in which batch sampling is stratified to ensure balance between racial groups; (2) fair meta-learning for segmentation, in which a DL classifier is trained to classify race and jointly optimized with the segmentation model; and (3) protected group models, in which a different segmentation model is trained for each racial group. We also compared the results to the scenario where we have a perfectly balanced database. To assess fairness we used the standard deviation (SD) and skewed error ratio (SER) of the average Dice values. Our results demonstrate that the racial bias results from the use of imbalanced training data, and that all proposed bias mitigation strategies improved fairness, with the best SD and SER resulting from the use of protected group models.

This paper introduces and develops Möbius homology, a homology theory for representations of finite posets into abelian categories. Although the connection between poset topology and Möbius functions is classical, we go further by establishing a direct connection between poset topology and Möbius inversions. In particular, we show that Möbius homology categorifies the Möbius inversion, as its Euler characteristic coincides with the Möbius inversion applied to the dimension function of the representation. We also present a homological version of Rota's Galois Connection Theorem, relating the Möbius homologies of two posets connected by a Galois connection.

Our main application concerns persistent homology over general posets. We prove that, under a suitable definition, the persistence diagram arises as an Euler characteristic over a poset of intervals, and thus Möbius homology provides a categorification of the persistence diagram. This furnishes a new invariant for persistent homology over arbitrary finite posets. Finally, leveraging our homological variant of Rota's Galois Connection Theorem, we establish several results about the persistence diagram.

University of Toronto

University of Toronto Michigan State University

Michigan State University University College London

University College London University of British ColumbiaUniversity of Wisconsin

University of British ColumbiaUniversity of Wisconsin Space Telescope Science InstituteUtah State UniversityQueen Mary University LondonUniversity of Ontario Institute of TechnologyMonterey Bay Aquarium Research InstituteSoftware CarpentrySoftware Sustainability Institute

Space Telescope Science InstituteUtah State UniversityQueen Mary University LondonUniversity of Ontario Institute of TechnologyMonterey Bay Aquarium Research InstituteSoftware CarpentrySoftware Sustainability InstituteScientists spend an increasing amount of time building and using software. However, most scientists are never taught how to do this efficiently. As a result, many are unaware of tools and practices that would allow them to write more reliable and maintainable code with less effort. We describe a set of best practices for scientific software development that have solid foundations in research and experience, and that improve scientists' productivity and the reliability of their software.

This paper is on long-term video understanding where the goal is to recognise

human actions over long temporal windows (up to minutes long). In prior work,

long temporal context is captured by constructing a long-term memory bank

consisting of past and future video features which are then integrated into

standard (short-term) video recognition backbones through the use of attention

mechanisms. Two well-known problems related to this approach are the quadratic

complexity of the attention operation and the fact that the whole feature bank

must be stored in memory for inference. To address both issues, we propose an

alternative to attention-based schemes which is based on a low-rank

approximation of the memory obtained using Singular Value Decomposition. Our

scheme has two advantages: (a) it reduces complexity by more than an order of

magnitude, and (b) it is amenable to an efficient implementation for the

calculation of the memory bases in an incremental fashion which does not

require the storage of the whole feature bank in memory. The proposed scheme

matches or surpasses the accuracy achieved by attention-based mechanisms while

being memory-efficient. Through extensive experiments, we demonstrate that our

framework generalises to different architectures and tasks, outperforming the

state-of-the-art in three datasets.

Phase field predictions of microscopic fracture and R-curve behaviour of fibre-reinforced composites

Phase field predictions of microscopic fracture and R-curve behaviour of fibre-reinforced composites

We present a computational framework to explore the effect of microstructure

and constituent properties upon the fracture toughness of fibre-reinforced

polymer composites. To capture microscopic matrix cracking and fibre-matrix

debonding, the framework couples the phase field fracture method and a cohesive

zone model in the context of the finite element method. Virtual single-notched

three point bending tests are conducted. The actual microstructure of the

composite is simulated by an embedded cell in the fracture process zone, while

the remaining area is homogenised to be an anisotropic elastic solid. A

detailed comparison of the predicted results with experimental observations

reveals that it is possible to accurately capture the crack path, interface

debonding and load versus displacement response. The sensitivity of the crack

growth resistance curve (R-curve) to the matrix fracture toughness and the

fibre-matrix interface properties is determined. The influence of porosity upon

the R-curve of fibre-reinforced composites is also explored, revealing a

stabler response with increasing void volume fraction. These results shed light

into microscopic fracture mechanisms and set the basis for efficient design of

high fracture toughness composites.

Existing knowledge distillation methods mostly focus on distillation of teacher's prediction and intermediate activation. However, the structured representation, which arguably is one of the most critical ingredients of deep models, is largely overlooked. In this work, we propose a novel {\em \modelname{}} ({\bf\em \shortname{})} method dedicated for distilling representational knowledge semantically from a pretrained teacher to a target student. The key idea is that we leverage the teacher's classifier as a semantic critic for evaluating the representations of both teacher and student and distilling the semantic knowledge with high-order structured information over all feature dimensions. This is accomplished by introducing a notion of cross-network logit computed through passing student's representation into teacher's classifier. Further, considering the set of seen classes as a basis for the semantic space in a combinatorial perspective, we scale \shortname{} to unseen classes for enabling effective exploitation of largely available, arbitrary unlabeled training data. At the problem level, this establishes an interesting connection between knowledge distillation with open-set semi-supervised learning (SSL). Extensive experiments show that our \shortname{} outperforms significantly previous state-of-the-art knowledge distillation methods on both coarse object classification and fine face recognition tasks, as well as less studied yet practically crucial binary network distillation. Under more realistic open-set SSL settings we introduce, we reveal that knowledge distillation is generally more effective than existing Out-Of-Distribution (OOD) sample detection, and our proposed \shortname{} is superior over both previous distillation and SSL competitors. The source code is available at \url{this https URL\_ossl}.

This paper tackles the problem of efficient video recognition. In this area,

video transformers have recently dominated the efficiency (top-1 accuracy vs

FLOPs) spectrum. At the same time, there have been some attempts in the image

domain which challenge the necessity of the self-attention operation within the

transformer architecture, advocating the use of simpler approaches for token

mixing. However, there are no results yet for the case of video recognition,

where the self-attention operator has a significantly higher impact (compared

to the case of images) on efficiency. To address this gap, in this paper, we

make the following contributions: (a) we construct a highly efficient \&

accurate attention-free block based on the shift operator, coined Affine-Shift

block, specifically designed to approximate as closely as possible the

operations in the MHSA block of a Transformer layer. Based on our Affine-Shift

block, we construct our Affine-Shift Transformer and show that it already

outperforms all existing shift/MLP--based architectures for ImageNet

classification. (b) We extend our formulation in the video domain to construct

Video Affine-Shift Transformer (VAST), the very first purely attention-free

shift-based video transformer. (c) We show that VAST significantly outperforms

recent state-of-the-art transformers on the most popular action recognition

benchmarks for the case of models with low computational and memory footprint.

Code will be made available.

We introduce string diagrams as a formal mathematical, graphical language to

represent, compose, program and reason about games. The language is well

established in quantum physics, quantum computing and quantum linguistic with

the semantics given by category theory. We apply this language to the game

theoretical setting and show examples how to use it for some economic games

where we highlight the compositional nature of our higher-order game theory.

University of Canterbury University of Waterloo

University of Waterloo Monash University

Monash University Imperial College London

Imperial College London University of Oxford

University of Oxford University of BristolUniversity of Edinburgh

University of BristolUniversity of Edinburgh University of British Columbia

University of British Columbia Australian National UniversityUniversity of Western Australia

Australian National UniversityUniversity of Western Australia Leiden UniversityMacquarie University

Leiden UniversityMacquarie University University of SydneyCardiff UniversityLiverpool John Moores UniversityUniversity of QueenslandUniversity of Portsmouth

University of SydneyCardiff UniversityLiverpool John Moores UniversityUniversity of QueenslandUniversity of Portsmouth University of St AndrewsUniversity of SussexUniversity of Birmingham

University of St AndrewsUniversity of SussexUniversity of Birmingham Durham UniversityUniversity of Nottingham

Durham UniversityUniversity of Nottingham European Southern ObservatoryUniversity of Central LancashireUniversity of the Western CapeCarnegie Institution for ScienceInternational Centre for Radio Astronomy ResearchSwinburne UniversityCerro Tololo Inter-American ObservatoryQueen Mary University LondonMax Planck Institute for Nuclear PhysicsAustralian Astronomical ObservatoryMax-Planck-Institut fuer AstrophysikUniversidade de S

ao Paulo

European Southern ObservatoryUniversity of Central LancashireUniversity of the Western CapeCarnegie Institution for ScienceInternational Centre for Radio Astronomy ResearchSwinburne UniversityCerro Tololo Inter-American ObservatoryQueen Mary University LondonMax Planck Institute for Nuclear PhysicsAustralian Astronomical ObservatoryMax-Planck-Institut fuer AstrophysikUniversidade de S

ao Paulo

University of Waterloo

University of Waterloo Monash University

Monash University Imperial College London

Imperial College London University of Oxford

University of Oxford University of BristolUniversity of Edinburgh

University of BristolUniversity of Edinburgh University of British Columbia

University of British Columbia Australian National UniversityUniversity of Western Australia

Australian National UniversityUniversity of Western Australia Leiden UniversityMacquarie University

Leiden UniversityMacquarie University University of SydneyCardiff UniversityLiverpool John Moores UniversityUniversity of QueenslandUniversity of Portsmouth

University of SydneyCardiff UniversityLiverpool John Moores UniversityUniversity of QueenslandUniversity of Portsmouth University of St AndrewsUniversity of SussexUniversity of Birmingham

University of St AndrewsUniversity of SussexUniversity of Birmingham Durham UniversityUniversity of Nottingham

Durham UniversityUniversity of Nottingham European Southern ObservatoryUniversity of Central LancashireUniversity of the Western CapeCarnegie Institution for ScienceInternational Centre for Radio Astronomy ResearchSwinburne UniversityCerro Tololo Inter-American ObservatoryQueen Mary University LondonMax Planck Institute for Nuclear PhysicsAustralian Astronomical ObservatoryMax-Planck-Institut fuer AstrophysikUniversidade de S

ao Paulo

European Southern ObservatoryUniversity of Central LancashireUniversity of the Western CapeCarnegie Institution for ScienceInternational Centre for Radio Astronomy ResearchSwinburne UniversityCerro Tololo Inter-American ObservatoryQueen Mary University LondonMax Planck Institute for Nuclear PhysicsAustralian Astronomical ObservatoryMax-Planck-Institut fuer AstrophysikUniversidade de S

ao PauloThe Galaxy And Mass Assembly (GAMA) survey is a multiwavelength photometric

and spectroscopic survey, using the AAOmega spectrograph on the

Anglo-Australian Telescope to obtain spectra for up to ~300000 galaxies over

280 square degrees, to a limiting magnitude of r_pet < 19.8 mag. The target

galaxies are distributed over 0

21 Jun 2021

Forecasting the number of Olympic medals for each nation is highly relevant for different stakeholders: Ex ante, sports betting companies can determine the odds while sponsors and media companies can allocate their resources to promising teams. Ex post, sports politicians and managers can benchmark the performance of their teams and evaluate the drivers of success. To significantly increase the Olympic medal forecasting accuracy, we apply machine learning, more specifically a two-staged Random Forest, thus outperforming more traditional naïve forecast for three previous Olympics held between 2008 and 2016 for the first time. Regarding the Tokyo 2020 Games in 2021, our model suggests that the United States will lead the Olympic medal table, winning 120 medals, followed by China (87) and Great Britain (74). Intriguingly, we predict that the current COVID-19 pandemic will not significantly alter the medal count as all countries suffer from the pandemic to some extent (data inherent) and limited historical data points on comparable diseases (model inherent).

Classical decision theory models behaviour in terms of utility maximisation

where utilities represent rational preference relations over outcomes. However,

empirical evidence and theoretical considerations suggest that we need to go

beyond this framework. We propose to represent goals by higher-order functions

or operators that take other functions as arguments where the max and argmax

operators are special cases. Our higher-order functions take a context function

as their argument where a context represents a process from actions to

outcomes. By that we can define goals being dependent on the actions and the

process in addition to outcomes only. This formulation generalises outcome

based preferences to context-dependent goals. We show how to uniformly

represent within our higher-order framework classical utility maximisation but

also various other extensions that have been debated in economics.

11 Jan 2021

As part of the search for planets around evolved stars, we can understand

planet populations around significantly higher-mass stars than the Sun on the

main sequence. This population is difficult to study any other way,

particularly with radial-velocities since these stars are too hot and rotate

too fast to measure precise velocities. Here we estimate stellar parameters for

all of the giant stars from the EXPRESS project, which aims to detect planets

orbiting evolved stars, and study their occurrence rate as a function of

stellar mass. We analyse high resolution echelle spectra of these stars, and

compute the atmospheric parameters by measuring the equivalent widths for a set

of iron lines, using an updated method implemented during this work. Physical

parameters are computed by interpolating through a grid of stellar evolutionary

models, following a procedure that carefully takes into account the post-MS

evolutionary phases. Probabilities of the star being in the red giant branch

(RBG) or the horizontal branch (HB) are estimated from the derived

distributions. Results: We find that, out of 166 evolved stars, 101 of them are

most likely in the RGB phase, while 65 of them are in the HB phase. The mean

derived mass is 1.41 and 1.87 Msun for RGB and HB stars, respectively. To

validate our method, we compared our results with interferometry and

asteroseismology studies. We find a difference in the radius with

interferometry of 1.7%. With asteroseismology, we find 2.4% difference in logg,

1.5% in radius, 6.2% in mass, and 11.9% in age. Compared with previous

spectroscopic studies, and find a 0.5% difference in Teff, 1% in logg, and 2%

in [Fe/H]. We also find a mean mass difference with respect to the EXPRESS

original catalogue of 16%. We show that the method presented here can greatly

improve the estimates of the stellar parameters for giant stars compared to

what was presented previously.

While deep learning holds great promise for disease diagnosis and prognosis in cardiac magnetic resonance imaging, its progress is often constrained by highly imbalanced and biased training datasets. To address this issue, we propose a method to alleviate imbalances inherent in datasets through the generation of synthetic data based on sensitive attributes such as sex, age, body mass index (BMI), and health condition. We adopt ControlNet based on a denoising diffusion probabilistic model to condition on text assembled from patient metadata and cardiac geometry derived from segmentation masks. We assess our method using a large-cohort study from the UK Biobank by evaluating the realism of the generated images using established quantitative metrics. Furthermore, we conduct a downstream classification task aimed at debiasing a classifier by rectifying imbalances within underrepresented groups through synthetically generated samples. Our experiments demonstrate the effectiveness of the proposed approach in mitigating dataset imbalances, such as the scarcity of diagnosed female patients or individuals with normal BMI level suffering from heart failure. This work represents a major step towards the adoption of synthetic data for the development of fair and generalizable models for medical classification tasks. Notably, we conduct all our experiments using a single, consumer-level GPU to highlight the feasibility of our approach within resource-constrained environments. Our code is available at this https URL.

In economic theory, an agent chooses from available alternatives -- modeled as a set. In decisions in the field or in the lab, however, agents do not have access to the set of alternatives at once. Instead, alternatives are represented by the outside world in a structured way. Online search results are lists of items, wine menus are often lists of lists (grouped by type or country), and online shopping often involves filtering items which can be viewed as navigating a tree. Representations constrain how an agent can choose. At the same time, an agent can also leverage representations when choosing, simplifying his/her choice process. For instance, in the case of a list he or she can use the order in which alternatives are represented to make his/her choice.

In this paper, we model representations and decision procedures operating on them. We show that choice procedures are related to classical choice functions by a canonical mapping. Using this mapping, we can ask whether properties of choice functions can be lifted onto the choice procedures which induce them. We focus on the obvious benchmark: rational choice. We fully characterize choice procedures which can be rationalized by a strict preference relation for general representations including lists, list of lists, trees and others. Our framework can thereby be used as the basis for new tests of rational behavior.

Classical choice theory operates on very limited information, typically budgets or menus and final choices. This is in stark contrast to the vast amount of data that specifically web companies collect about their users' choice process. Our framework offers a way to integrate such data into economic choice models.

There are no more papers matching your filters at the moment.