Robert Bosch Centre for Cyber-Physical Systems

Deep neural networks (DNNs) have shown exceptional performance when trained on well-illuminated images captured by Electro-Optical (EO) cameras, which provide rich texture details. However, in critical applications like aerial perception, it is essential for DNNs to maintain consistent reliability across all conditions, including low-light scenarios where EO cameras often struggle to capture sufficient detail. Additionally, UAV-based aerial object detection faces significant challenges due to scale variability from varying altitudes and slant angles, adding another layer of complexity. Existing methods typically address only illumination changes or style variations as domain shifts, but in aerial perception, correlation shifts also impact DNN performance. In this paper, we introduce the IndraEye dataset, a multi-sensor (EO-IR) dataset designed for various tasks. It includes 5,612 images with 145,666 instances, encompassing multiple viewing angles, altitudes, seven backgrounds, and different times of the day across the Indian subcontinent. The dataset opens up several research opportunities, such as multimodal learning, domain adaptation for object detection and segmentation, and exploration of sensor-specific strengths and weaknesses. IndraEye aims to advance the field by supporting the development of more robust and accurate aerial perception systems, particularly in challenging conditions. IndraEye dataset is benchmarked with object detection and semantic segmentation tasks. Dataset and source codes are available at this https URL.

Domain-adaptive thermal object detection plays a key role in facilitating

visible (RGB)-to-thermal (IR) adaptation by reducing the need for co-registered

image pairs and minimizing reliance on large annotated IR datasets. However,

inherent limitations of IR images, such as the lack of color and texture cues,

pose challenges for RGB-trained models, leading to increased false positives

and poor-quality pseudo-labels. To address this, we propose Semantic-Aware Gray

color Augmentation (SAGA), a novel strategy for mitigating color bias and

bridging the domain gap by extracting object-level features relevant to IR

images. Additionally, to validate the proposed SAGA for drone imagery, we

introduce the IndraEye, a multi-sensor (RGB-IR) dataset designed for diverse

applications. The dataset contains 5,612 images with 145,666 instances,

captured from diverse angles, altitudes, backgrounds, and times of day,

offering valuable opportunities for multimodal learning, domain adaptation for

object detection and segmentation, and exploration of sensor-specific strengths

and weaknesses. IndraEye aims to enhance the development of more robust and

accurate aerial perception systems, especially in challenging environments.

Experimental results show that SAGA significantly improves RGB-to-IR adaptation

for autonomous driving and IndraEye dataset, achieving consistent performance

gains of +0.4% to +7.6% (mAP) when integrated with state-of-the-art domain

adaptation techniques. The dataset and codes are available at

this https URL

22 Feb 2024

In this paper, we employ a 1D deep convolutional generative adversarial network (DCGAN) for sequential anomaly detection in energy time series data. Anomaly detection involves gradient descent to reconstruct energy sub-sequences, identifying the noise vector that closely generates them through the generator network. Soft-DTW is used as a differentiable alternative for the reconstruction loss and is found to be superior to Euclidean distance. Combining reconstruction loss and the latent space's prior probability distribution serves as the anomaly score. Our novel method accelerates detection by parallel computation of reconstruction of multiple points and shows promise in identifying anomalous energy consumption in buildings, as evidenced by performing experiments on hourly energy time series from 15 buildings.

The average reward criterion is relatively less studied as most existing

works in the Reinforcement Learning literature consider the discounted reward

criterion. There are few recent works that present on-policy average reward

actor-critic algorithms, but average reward off-policy actor-critic is

relatively less explored. In this work, we present both on-policy and

off-policy deterministic policy gradient theorems for the average reward

performance criterion. Using these theorems, we also present an Average Reward

Off-Policy Deep Deterministic Policy Gradient (ARO-DDPG) Algorithm. We first

show asymptotic convergence analysis using the ODE-based method. Subsequently,

we provide a finite time analysis of the resulting stochastic approximation

scheme with linear function approximator and obtain an ϵ-optimal

stationary policy with a sample complexity of Ω(ϵ−2.5). We

compare the average reward performance of our proposed ARO-DDPG algorithm and

observe better empirical performance compared to state-of-the-art on-policy

average reward actor-critic algorithms over MuJoCo-based environments.

22 Oct 2025

The paper focuses on designing a controller for unknown dynamical multi-agent systems to achieve temporal reach-avoid-stay tasks for each agent while preventing inter-agent collisions. The main objective is to generate a spatiotemporal tube (STT) for each agent and thereby devise a closed-form, approximation-free, and decentralized control strategy that ensures the system trajectory reaches the target within a specific time while avoiding time-varying unsafe sets and collisions with other agents. In order to achieve this, the requirements of STTs are formulated as a robust optimization problem (ROP) and solved using a sampling-based scenario optimization problem (SOP) to address the issue of infeasibility caused by the infinite number of constraints in ROP. The STTs are generated by solving the SOP, and the corresponding closed-form control is designed to fulfill the specified task. Finally, the effectiveness of our approach is demonstrated through two case studies, one involving omnidirectional robots and the other involving multiple drones modelled as Euler-Lagrange systems.

15 Oct 2025

This paper presents the first sufficient conditions that guarantee the stability and almost sure convergence of multi-timescale stochastic approximation (SA) iterates. It extends the existing results on one-timescale and two-timescale SA iterates to general N-timescale stochastic recursions, for any N≥1, using the ordinary differential equation (ODE) method. As an application, we study SA algorithms augmented with heavy-ball momentum in the context of Gradient Temporal Difference (GTD) learning. The added momentum introduces an auxiliary state evolving on an intermediate timescale, yielding a three-timescale recursion. We show that with appropriate momentum parameters, the scheme fits within our framework and converges almost surely to the same fixed point as baseline GTD. The stability and convergence of all iterates including the momentum state follow from our main results without ad hoc bounds. We then study off-policy actor-critic algorithms with a baseline learner, actor, and critic updated on separate timescales. In contrast to prior work, we eliminate projection steps from the actor update and instead use our framework to guarantee stability and almost sure convergence of all components. Finally, we extend the analysis to constrained policy optimization in the average reward setting, where the actor, critic, and dual variables evolve on three distinct timescales, and we verify that the resulting dynamics satisfy the conditions of our general theorem. These examples show how diverse reinforcement learning algorithms covering momentum acceleration, off-policy learning, and primal-dual methods-fit naturally into the proposed multi-timescale framework.

22 Oct 2025

This paper introduces a new framework for synthesizing time-varying control barrier functions (TV-CBFs) for general Signal Temporal Logic (STL) specifications using spatiotemporal tubes (STT). We first formulate the STT synthesis as a robust optimization problem (ROP) and solve it through a scenario optimization problem (SOP), providing formal guarantees that the resulting tubes capture the given STL specifications. These STTs are then used to construct TV-CBFs, ensuring that under any control law rendering them invariant, the system satisfies the STL tasks. We demonstrate the framework through case studies on a differential-drive mobile robot and a quadrotor, and provide a comparative analysis showing improved efficiency over existing approaches.

The paper considers the controller synthesis problem for general MIMO systems with unknown dynamics, aiming to fulfill the temporal reach-avoid-stay task, where the unsafe regions are time-dependent, and the target must be reached within a specified time frame. The primary aim of the paper is to construct the spatiotemporal tube (STT) using a sampling-based approach and thereby devise a closed-form approximation-free control strategy to ensure that system trajectory reaches the target set while avoiding time-dependent unsafe sets. The proposed scheme utilizes a novel method involving STTs to provide controllers that guarantee both system safety and reachability. In our sampling-based framework, we translate the requirements of STTs into a Robust optimization program (ROP). To address the infeasibility of ROP caused by infinite constraints, we utilize the sampling-based Scenario optimization program (SOP). Subsequently, we solve the SOP to generate the tube and closed-form controller for an unknown system, ensuring the temporal reach-avoid-stay specification. Finally, the effectiveness of the proposed approach is demonstrated through three case studies: an omnidirectional robot, a SCARA manipulator, and a magnetic levitation system.

Object detection and classification using aerial images is a challenging task

as the information regarding targets are not abundant. Synthetic Aperture

Radar(SAR) images can be used for Automatic Target Recognition(ATR) systems as

it can operate in all-weather conditions and in low light settings. But, SAR

images contain salt and pepper noise(speckle noise) that cause hindrance for

the deep learning models to extract meaningful features. Using just aerial view

Electro-optical(EO) images for ATR systems may also not result in high accuracy

as these images are of low resolution and also do not provide ample information

in extreme weather conditions. Therefore, information from multiple sensors can

be used to enhance the performance of Automatic Target Recognition(ATR)

systems. In this paper, we explore a methodology to use both EO and SAR sensor

information to effectively improve the performance of the ATR systems by

handling the shortcomings of each of the sensors. A novel Multi-Modal Domain

Fusion(MDF) network is proposed to learn the domain invariant features from

multi-modal data and use it to accurately classify the aerial view objects. The

proposed MDF network achieves top-10 performance in the Track-1 with an

accuracy of 25.3 % and top-5 performance in Track-2 with an accuracy of 34.26 %

in the test phase on the PBVS MAVOC Challenge dataset [18].

Adapting robot trajectories based on human instructions as per new situations

is essential for achieving more intuitive and scalable human-robot

interactions. This work proposes a flexible language-based framework to adapt

generic robotic trajectories produced by off-the-shelf motion planners like

RRT, A-star, etc, or learned from human demonstrations. We utilize pre-trained

LLMs to adapt trajectory waypoints by generating code as a policy for dense

robot manipulation, enabling more complex and flexible instructions than

current methods. This approach allows us to incorporate a broader range of

commands, including numerical inputs. Compared to state-of-the-art

feature-based sequence-to-sequence models which require training, our method

does not require task-specific training and offers greater interpretability and

more effective feedback mechanisms. We validate our approach through simulation

experiments on the robotic manipulator, aerial vehicle, and ground robot in the

Pybullet and Gazebo simulation environments, demonstrating that LLMs can

successfully adapt trajectories to complex human instructions.

03 Apr 2024

In neural network training, RMSProp and Adam remain widely favoured

optimisation algorithms. One of the keys to their performance lies in selecting

the correct step size, which can significantly influence their effectiveness.

Additionally, questions about their theoretical convergence properties continue

to be a subject of interest. In this paper, we theoretically analyse a constant

step size version of Adam in the non-convex setting and discuss why it is

important for the convergence of Adam to use a fixed step size. This work

demonstrates the derivation and effective implementation of a constant step

size for Adam, offering insights into its performance and efficiency in non

convex optimisation scenarios. (i) First, we provide proof that these adaptive

gradient algorithms are guaranteed to reach criticality for smooth non-convex

objectives with constant step size, and we give bounds on the running time.

Both deterministic and stochastic versions of Adam are analysed in this paper.

We show sufficient conditions for the derived constant step size to achieve

asymptotic convergence of the gradients to zero with minimal assumptions. Next,

(ii) we design experiments to empirically study Adam's convergence with our

proposed constant step size against stateof the art step size schedulers on

classification tasks. Lastly, (iii) we also demonstrate that our derived

constant step size has better abilities in reducing the gradient norms, and

empirically, we show that despite the accumulation of a few past gradients, the

key driver for convergence in Adam is the non-increasing step sizes.

Underwater mine detection with deep learning suffers from limitations due to the scarcity of real-world data.

This scarcity leads to overfitting, where models perform well on training data but poorly on unseen data. This paper proposes a Syn2Real (Synthetic to Real) domain generalization approach using diffusion models to address this challenge. We demonstrate that synthetic data generated with noise by DDPM and DDIM models, even if not perfectly realistic, can effectively augment real-world samples for training. The residual noise in the final sampled images improves the model's ability to generalize to real-world data with inherent noise and high variation. The baseline Mask-RCNN model when trained on a combination of synthetic and original training datasets, exhibited approximately a 60% increase in Average Precision (AP) compared to being trained solely on the original training data. This significant improvement highlights the potential of Syn2Real domain generalization for underwater mine detection tasks.

28 Jul 2020

We consider a multi-hypothesis testing problem involving a K-armed bandit.

Each arm's signal follows a distribution from a vector exponential family. The

actual parameters of the arms are unknown to the decision maker. The decision

maker incurs a delay cost for delay until a decision and a switching cost

whenever he switches from one arm to another. His goal is to minimise the

overall cost until a decision is reached on the true hypothesis. Of interest

are policies that satisfy a given constraint on the probability of false

detection. This is a sequential decision making problem where the decision

maker gets only a limited view of the true state of nature at each stage, but

can control his view by choosing the arm to observe at each stage. An

information-theoretic lower bound on the total cost (expected time for a

reliable decision plus total switching cost) is first identified, and a

variation on a sequential policy based on the generalised likelihood ratio

statistic is then studied. Due to the vector exponential family assumption, the

signal processing at each stage is simple; the associated conjugate prior

distribution on the unknown model parameters enables easy updates of the

posterior distribution. The proposed policy, with a suitable threshold for

stopping, is shown to satisfy the given constraint on the probability of false

detection. Under a continuous selection assumption, the policy is also shown to

be asymptotically optimal in terms of the total cost among all policies that

satisfy the constraint on the probability of false detection.

In this work, we provide a simulation framework to perform systematic studies on the effects of spinal joint compliance and actuation on bounding performance of a 16-DOF quadruped spined robot Stoch 2. Fast quadrupedal locomotion with active spine is an extremely hard problem, and involves a complex coordination between the various degrees of freedom. Therefore, past attempts at addressing this problem have not seen much success. Deep-Reinforcement Learning seems to be a promising approach, after its recent success in a variety of robot platforms, and the goal of this paper is to use this approach to realize the aforementioned behaviors. With this learning framework, the robot reached a bounding speed of 2.1 m/s with a maximum Froude number of 2. Simulation results also show that use of active spine, indeed, increased the stride length, improved the cost of transport, and also reduced the natural frequency to more realistic values.

12 Oct 2025

Incremental stability is a property of dynamical systems that ensures the convergence of trajectories with respect to each other rather than a fixed equilibrium point or a fixed trajectory. In this paper, we introduce a related stability notion called incremental input-to-state practical stability ({\delta}-ISpS), ensuring safety guarantees. We also present a feedback linearization based control design scheme that renders a partially unknown system incrementally input-to-state practically stable and safe with formal guarantees. To deal with the unknown dynamics, we utilize Gaussian process regression to approximate the model. Finally, we implement the controller synthesized by the proposed scheme on a manipulator example

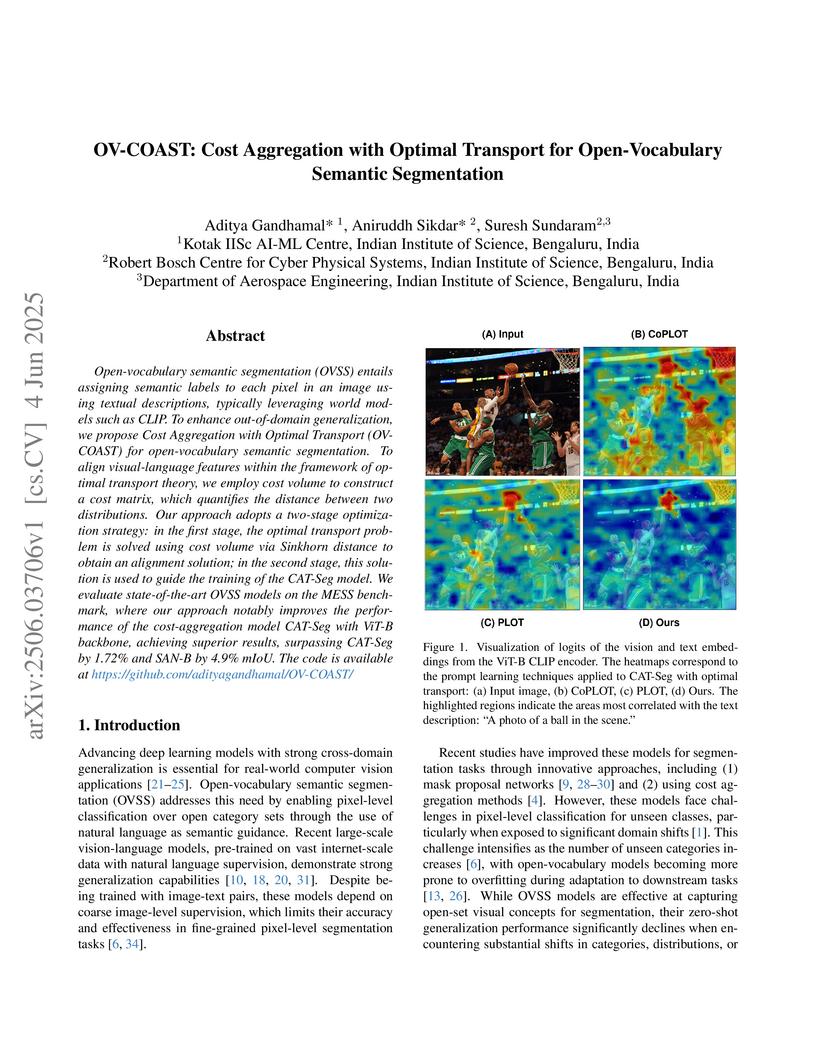

Open-vocabulary semantic segmentation (OVSS) entails assigning semantic labels to each pixel in an image using textual descriptions, typically leveraging world models such as CLIP. To enhance out-of-domain generalization, we propose Cost Aggregation with Optimal Transport (OV-COAST) for open-vocabulary semantic segmentation. To align visual-language features within the framework of optimal transport theory, we employ cost volume to construct a cost matrix, which quantifies the distance between two distributions. Our approach adopts a two-stage optimization strategy: in the first stage, the optimal transport problem is solved using cost volume via Sinkhorn distance to obtain an alignment solution; in the second stage, this solution is used to guide the training of the CAT-Seg model. We evaluate state-of-the-art OVSS models on the MESS benchmark, where our approach notably improves the performance of the cost-aggregation model CAT-Seg with ViT-B backbone, achieving superior results, surpassing CAT-Seg by 1.72 % and SAN-B by 4.9 % mIoU. The code is available at this https URL}{this https URL .

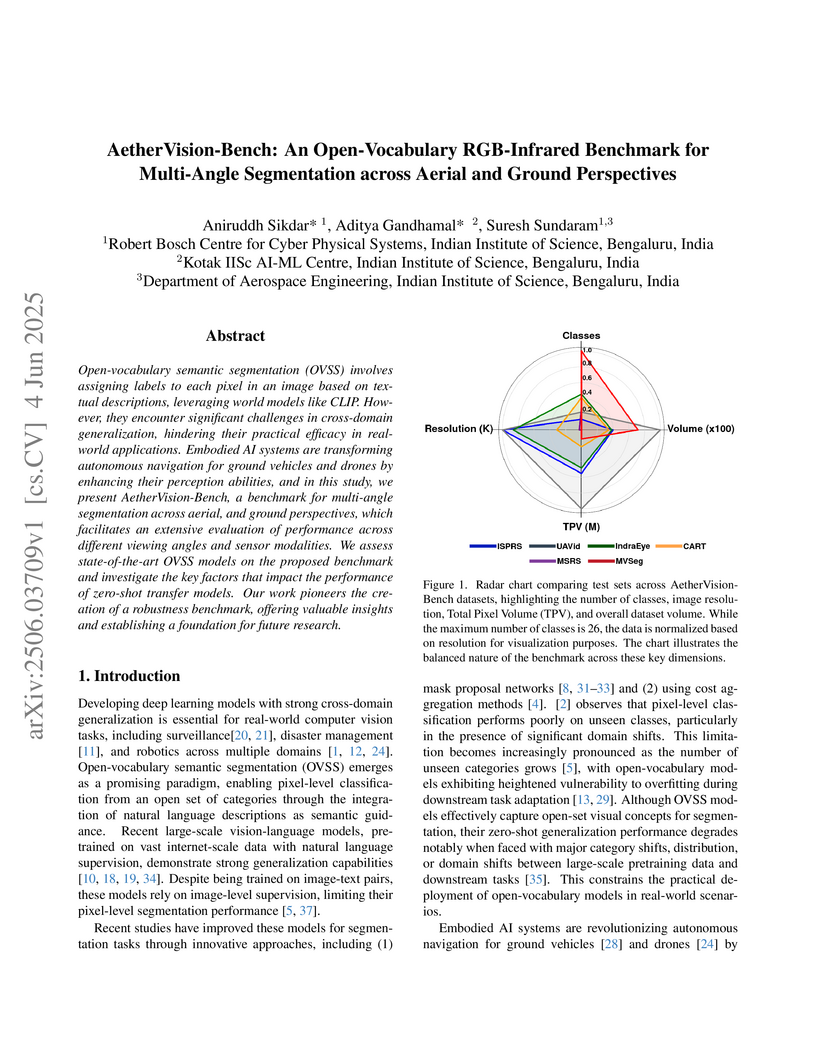

AetherVision-Bench is introduced as a comprehensive benchmark for open-vocabulary semantic segmentation, designed to evaluate model robustness across multi-angle (ground, slant, bird's-eye) and multi-modal (RGB-Infrared) perspectives. Evaluations revealed that current state-of-the-art models experience substantial performance degradation, with mIoU often decreasing by half, when facing sensor modality shifts or significant changes in viewing angles.

The paper addresses the problem of controller synthesis for control-affine

nonlinear systems to meet reach-avoid-stay specifications. Specifically, the

goal of the research is to obtain a closed-form control law ensuring that the

trajectories of the nonlinear system, reach a target set while avoiding all

unsafe regions and adhering to the state-space constraints. To tackle this

problem, we leverage the concept of the funnel-based control approach. Given an

arbitrary unsafe region, we introduce a circumvent function that guarantees the

system trajectory to steer clear of that region. Subsequently, an adaptive

funnel framework is proposed based on the target, followed by the construction

of a closed-form controller using the established funnel function, enforcing

the reach-avoid-stay specifications. To demonstrate the efficacy of the

proposed funnel-based control approach, a series of simulation experiments have

been carried out.

Estimating the number of clusters and cluster structures in unlabeled, complex, and high-dimensional datasets (like images) is challenging for traditional clustering algorithms. In recent years, a matrix reordering-based algorithm called Visual Assessment of Tendency (VAT), and its variants have attracted many researchers from various domains to estimate the number of clusters and inherent cluster structure present in the data. However, these algorithms face significant challenges when dealing with image data as they fail to effectively capture the crucial features inherent in images. To overcome these limitations, we propose a deep-learning-based framework that enables the assessment of cluster structure in complex image datasets. Our approach utilizes a self-supervised deep neural network to generate representative embeddings for the data. These embeddings are then reduced to 2-dimension using t-distributed Stochastic Neighbour Embedding (t-SNE) and inputted into VAT based algorithms to estimate the underlying cluster structure. Importantly, our framework does not rely on any prior knowledge of the number of clusters. Our proposed approach demonstrates superior performance compared to state-of-the-art VAT family algorithms and two other deep clustering algorithms on four benchmark image datasets, namely MNIST, FMNIST, CIFAR-10, and INTEL.

Dispatching policies such as the join shortest queue (JSQ), join smallest work (JSW) and their power of two variants are used in load balancing systems where the instantaneous queue length or workload information at all queues or a subset of them can be queried. In situations where the dispatcher has an associated memory, one can minimize this query overhead by maintaining a list of idle servers to which jobs can be dispatched. Recent alternative approaches that do not require querying such information include the cancel on start and cancel on complete based replication policies. The downside of such policies however is that the servers must communicate the start or completion of each service to the dispatcher and must allow cancellation of redundant copies. In practice, the requirements of query messaging, memory, and replica cancellation pose challenges in their implementation and their advantages are not clear. In this work, we consider load balancing policies that do not query load information, do not have a memory, and do not cancel replicas. Surprisingly, we were able to identify operating regimes where such policies have better performance when compared to some of the popular policies that utilize server feedback information. Our policies allow the dispatcher to append a timer to each job or its replica. A job or a replica is discarded if its timer expires before it starts receiving service. We analyze several variants of this policy which are novel, simple to implement, and also have remarkably good performance in some operating regimes, despite no feedback from servers to the dispatcher.

There are no more papers matching your filters at the moment.