Simon Fraser University

LYRA generates high-quality, geometrically consistent 3D and 4D scenes by distilling implicit 3D knowledge from a video diffusion model into explicit 3D Gaussian Splatting representations, eliminating the reliance on real-world multi-view training data. The framework achieves state-of-the-art results on several 3D reconstruction benchmarks and offers real-time rendering capabilities.

A teleoperation system enables real-time whole-body control of humanoid robots through human motion imitation, combining reinforcement learning with behavior cloning to achieve coordinated movements across diverse tasks while maintaining a 0.9-second latency on the Unitree G1 platform.

Physics-Based Motion Imitation with Adversarial Differential Discriminators introduces an Adversarial Differential Discriminator (ADD), an adversarial multi-objective optimization technique for physics-based character animation and multi-objective reinforcement learning. This method enables simulated characters to precisely replicate agile motions comparable to state-of-the-art tracking methods, but without requiring manual reward engineering, and also demonstrates broader applicability in robotics control.

Carnegie Mellon University; UC Berkeley; National University of Singapore; Indiana University; University of Bristol; Meta; University of Texas at Austin; University of Pennsylvania; Johns Hopkins University; University of Minnesota; University of Tokyo; Georgia Tech; King Abdullah University of Science and Technology; University of Catania; Simon Fraser University; University of North Carolina, Chapel Hill; International Institute of Information Technology, Hyderabad; Universidad de Los Andes; FAIR, Meta; University of Illinois Urbana-Champaign; Project Aria, Meta

Ego-Exo4D introduces the largest public dataset of time-synchronized, multimodal, multiview ego-exocentric video, capturing 740 participants performing skilled activities across 8 diverse domains in 123 natural environments. The dataset, a collaboration of 15 institutions, includes Project Aria data and extensive language annotations, supporting four benchmark families for understanding human skill.

19 Apr 2025

Google DeepMind; Carnegie Mellon University; Georgia Institute of Technology; Stanford University; Harbin Institute of Technology; The University of Texas at Austin; NVIDIA; Duke University; Simon Fraser University; Technische Universität München; CNRS-AIST Joint Robotics Laboratory; The Institute for Human and Machine Cognition; CNRS-University of Montpellier LIRMM; The University of Southern California; The AI Institute

Researchers from Georgia Institute of Technology, Harbin Institute of Technology, Google DeepMind, and others compiled a comprehensive survey of humanoid locomotion and manipulation. It integrates traditional model-based methods with learning-based techniques and explores the emerging role of foundation models, highlighting the critical importance of whole-body tactile feedback.

04 Sep 2025

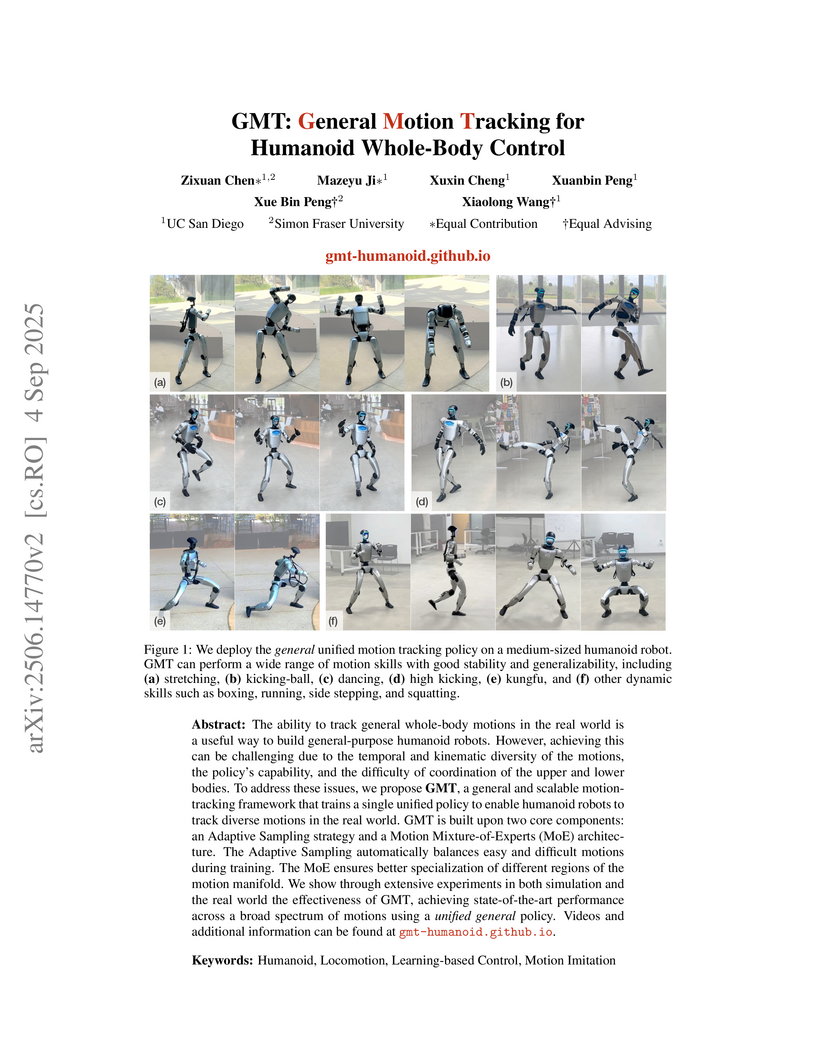

The GMT framework introduces a method for training a single, unified policy that enables humanoid robots to track a wide range of human motions with high fidelity in the real world. This approach, developed by researchers from UC San Diego and Simon Fraser University, achieves state-of-the-art tracking performance in simulation and demonstrates robust reproduction of diverse skills on a Unitree G1 robot.

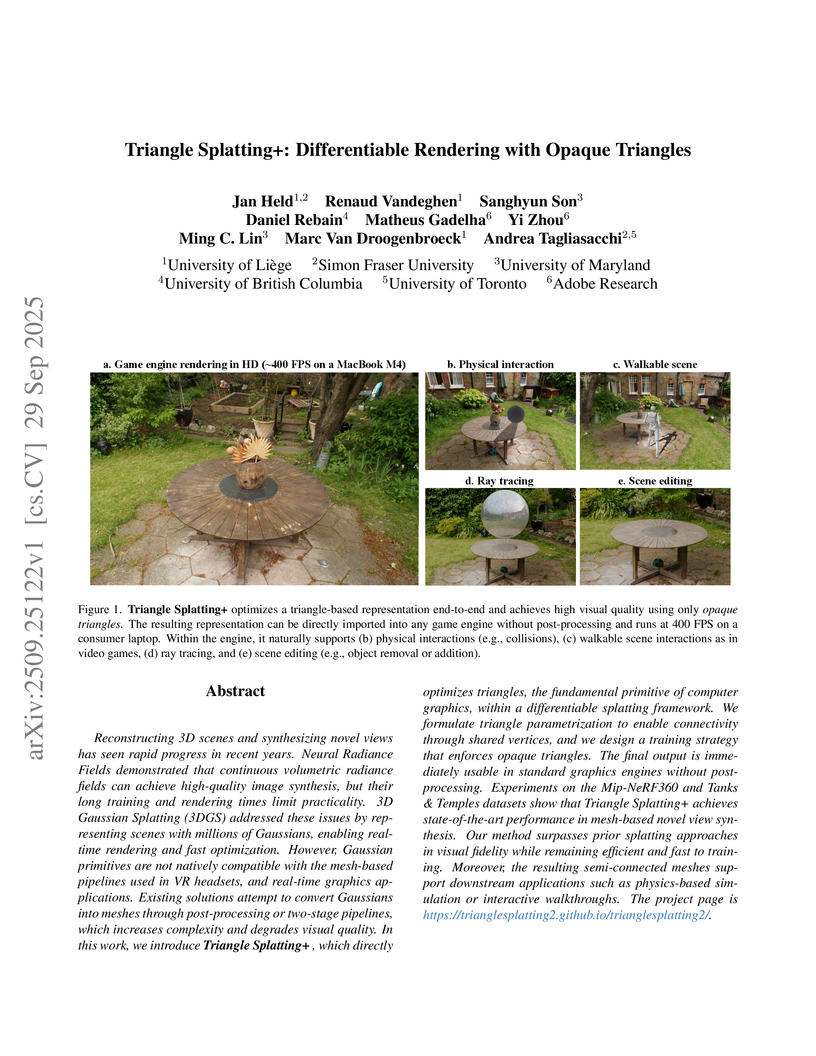

Triangle Splatting+, from a collaboration including University of Liège, Simon Fraser University, University of Maryland, and Adobe Research, optimizes explicit, opaque, and semi-connected triangle meshes for novel view synthesis. This method achieves state-of-the-art visual quality among mesh-based techniques, yielding outputs directly compatible with traditional game engines without post-processing for mesh extraction or coloring, and trains in approximately 39 minutes for Mip-NeRF360 scenes.

LeVERB is a framework designed for humanoid robots to execute agile whole-body actions through latent vision-language instructions, enabling zero-shot sim-to-real transfer. The framework achieved an average success rate of 58.5% across diverse tasks in simulation, a 7.8-fold improvement over a naive hierarchical VLA, and successfully generalized to unseen commands on a Unitree G1 robot.
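As a quick sanity check on the LeVERB figures, the baseline success rate implied by the two reported numbers can be worked out directly. This is only arithmetic on the summary's own values, assuming the 7.8 factor is a plain ratio of success rates; the baseline figure itself is inferred here, not quoted from the paper.

```python
# Values taken from the summary above.
reported_success = 58.5      # average success rate in simulation, in percent
improvement_factor = 7.8     # reported improvement over the naive hierarchical VLA

# Assumption: "7.8 times" means reported_success / baseline_success.
implied_baseline = reported_success / improvement_factor

print(f"Implied baseline success rate: {implied_baseline:.1f}%")
```

Under that reading, the naive hierarchical baseline would sit at roughly 7.5%.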

16 Oct 2025

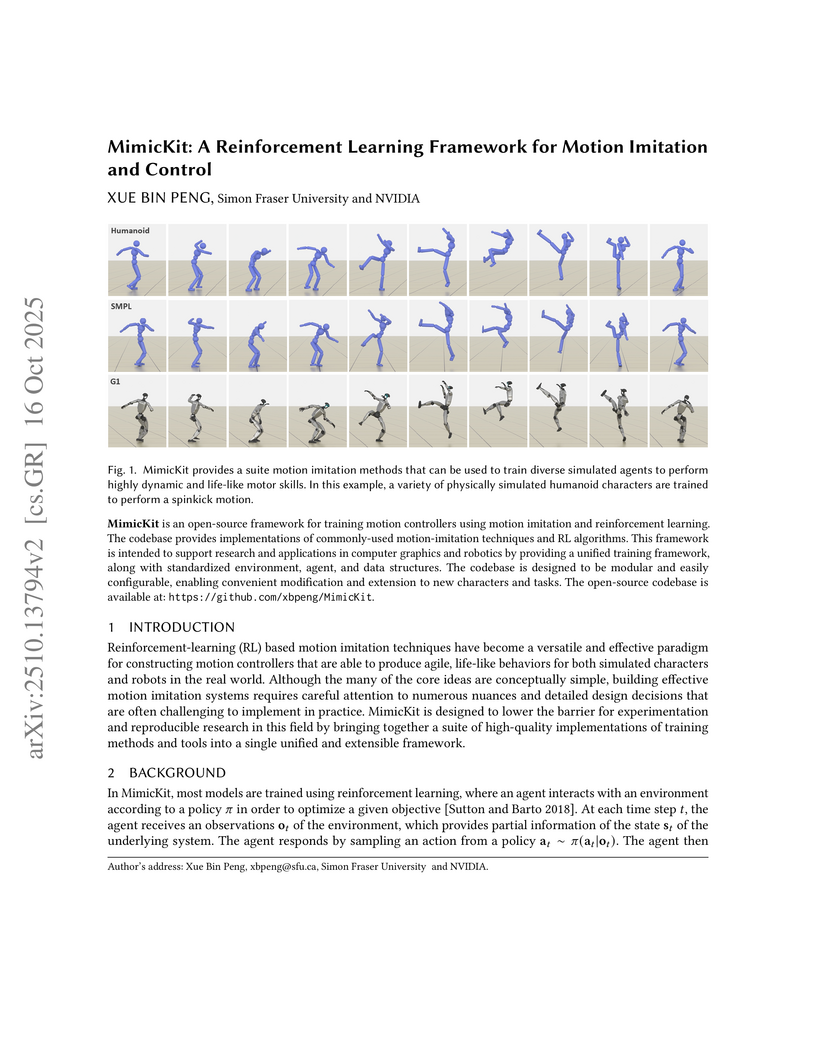

MimicKit is an open-source framework for training motion controllers using motion imitation and reinforcement learning. The codebase provides implementations of commonly used motion-imitation techniques and RL algorithms. It is intended to support research and applications in computer graphics and robotics by providing a unified training framework, along with standardized environment, agent, and data structures. It is designed to be modular and easily configurable, so that it can be conveniently modified and extended to new characters and tasks. The open-source codebase is available at: this https URL.

16 Jun 2025

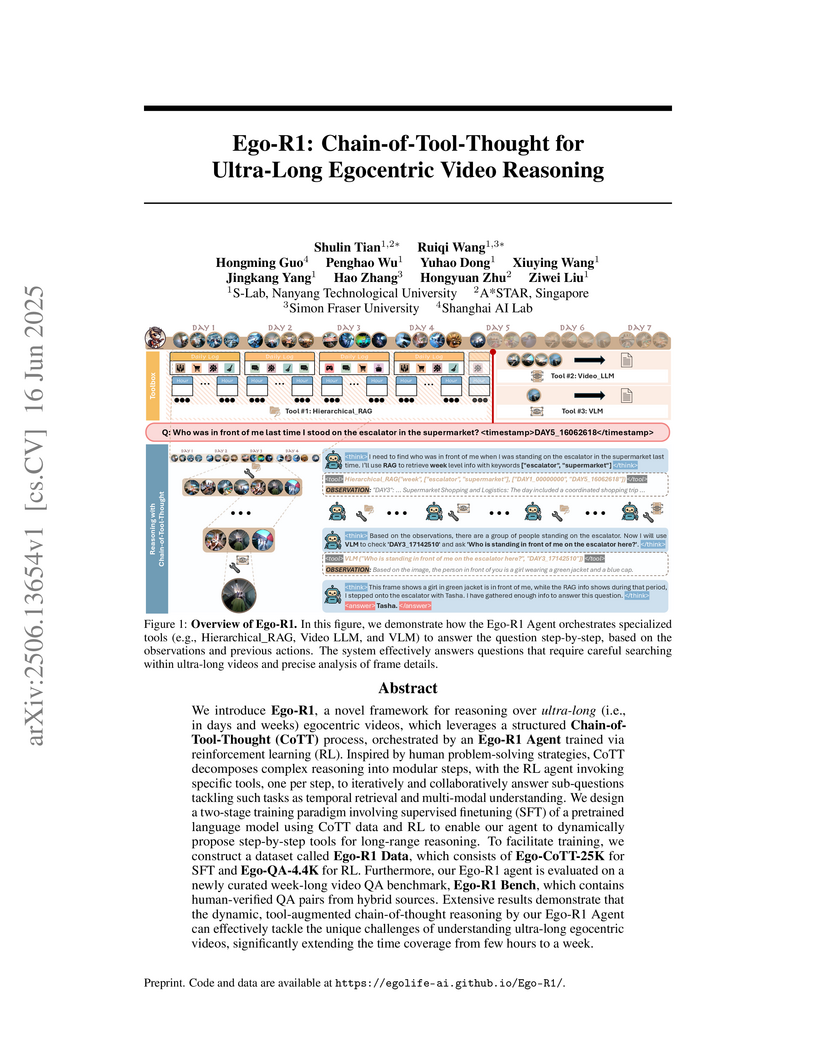

Ego-R1 introduces a Chain-of-Tool-Thought framework that dynamically orchestrates specialized perception tools for reasoning over ultra-long egocentric videos spanning days and weeks. The approach achieves 46.0% accuracy on a new week-long benchmark, surpassing state-of-the-art models like Gemini-1.5-Pro.

Researchers from UBC, Google Research, and Google DeepMind introduce an approach that reinterprets 3D Gaussian Splatting (3DGS) optimization as a Markov Chain Monte Carlo (MCMC) process, leveraging Stochastic Gradient Langevin Dynamics (SGLD) for principled exploration. This method achieves higher rendering quality and robustness to initialization, consistently outperforming conventional 3DGS across datasets like NeRF Synthetic and MipNeRF 360, while maintaining real-time inference speeds.
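To make the Langevin-style update concrete, here is a minimal sketch on a toy one-dimensional Gaussian target. It is not the paper's 3DGS update: the target density, step size, and function names are all assumptions chosen for illustration, and the gradient is exact rather than a minibatch estimate as it would be in SGLD proper.

```python
import math
import random


def grad_neg_log_density(theta, mean=2.0, std=1.0):
    """Gradient of U(theta) = (theta - mean)^2 / (2 * std^2) for a toy Gaussian target."""
    return (theta - mean) / (std ** 2)


def langevin_samples(n_steps=20000, step_size=0.1, burn_in=1000, seed=0):
    """Draw samples with the update
    theta <- theta - (step_size / 2) * grad U(theta) + sqrt(step_size) * N(0, 1).
    """
    rng = random.Random(seed)
    theta = 0.0  # deliberately poor initialization
    samples = []
    for t in range(n_steps):
        noise = rng.gauss(0.0, 1.0)
        theta = (theta
                 - 0.5 * step_size * grad_neg_log_density(theta)
                 + math.sqrt(step_size) * noise)
        if t >= burn_in:
            samples.append(theta)
    return samples


samples = langevin_samples()
sample_mean = sum(samples) / len(samples)
sample_var = sum((s - sample_mean) ** 2 for s in samples) / len(samples)
print(f"mean ~ {sample_mean:.2f}, variance ~ {sample_var:.2f}")
```

The injected noise is what distinguishes this from plain gradient descent: instead of collapsing to a single optimum, the iterates keep exploring and settle into samples from the target distribution, which is the property the summary describes as principled exploration and robustness to initialization.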

Researchers developed StableMotion, a framework for training motion cleanup models directly on unpaired, corrupted motion data using a diffusion-based approach with quality indicator variables. This method achieved a 68% reduction in motion pops and an 81% reduction in frozen frames on a proprietary soccer mocap dataset, while also outperforming state-of-the-art cleanup models on controlled benchmarks.

The CLOSD system introduces a method for controlling virtual characters by closing the loop between motion planning and physical execution. It combines an auto-regressive diffusion model for real-time motion planning with a physics-based reinforcement learning controller, enabling characters to perform diverse, physically plausible actions in response to text commands and interact realistically with environments.
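The closed-loop idea can be illustrated with a deliberately simple one-dimensional toy. Everything here is hypothetical: `plan` stands in for the diffusion planner, `track` for the physics-based controller, and the scalar state for a full character pose. It is a sketch of the replanning pattern only, not of the CLOSD implementation.

```python
def plan(state, goal, horizon=4, step=1.0):
    """Stand-in planner: propose `horizon` waypoints from the current state toward the goal."""
    waypoints = []
    pos = state
    for _ in range(horizon):
        delta = max(-step, min(step, goal - pos))
        pos += delta
        waypoints.append(pos)
    return waypoints


def track(state, target, max_move=0.6):
    """Stand-in controller: move toward the target, limited by what the 'physics' allows."""
    delta = max(-max_move, min(max_move, target - state))
    return state + delta


def closed_loop(start, goal, iterations=20):
    """Alternate planning and execution, replanning from the state actually reached."""
    state = start
    trajectory = [state]
    for _ in range(iterations):
        waypoints = plan(state, goal)        # plan from the achieved state, not the planned one
        state = track(state, waypoints[0])   # execute only the first waypoint, then replan
        trajectory.append(state)
    return trajectory


trajectory = closed_loop(start=0.0, goal=5.0)
print(trajectory[-1])
```

Because the controller cannot keep up with the planner's step size, an open-loop plan would drift away from what the character actually does. Feeding the achieved state back into the planner at every iteration keeps the two consistent.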

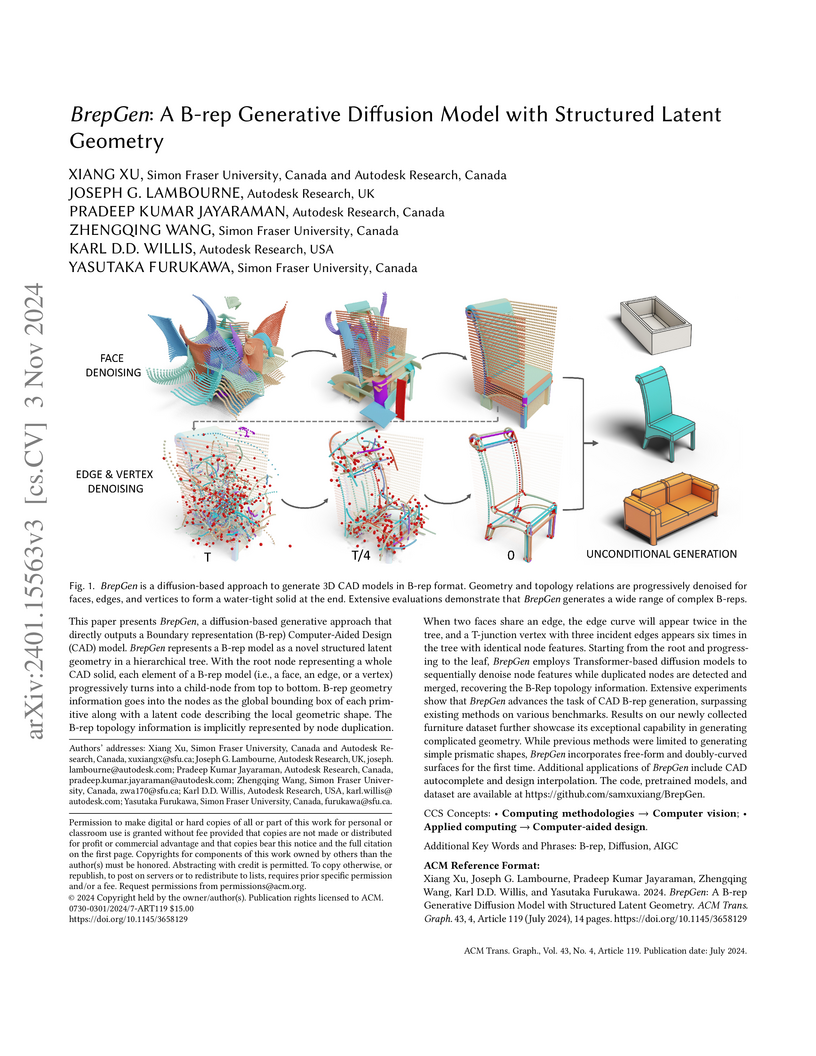

BrepGen introduces a generative diffusion model capable of directly synthesizing industry-standard Boundary representation (B-rep) 3D models. It achieves this by employing a novel structured latent geometry representation that implicitly encodes topology, enabling the generation of complex, watertight models including free-form surfaces, and demonstrates capabilities like design autocompletion and interpolation.

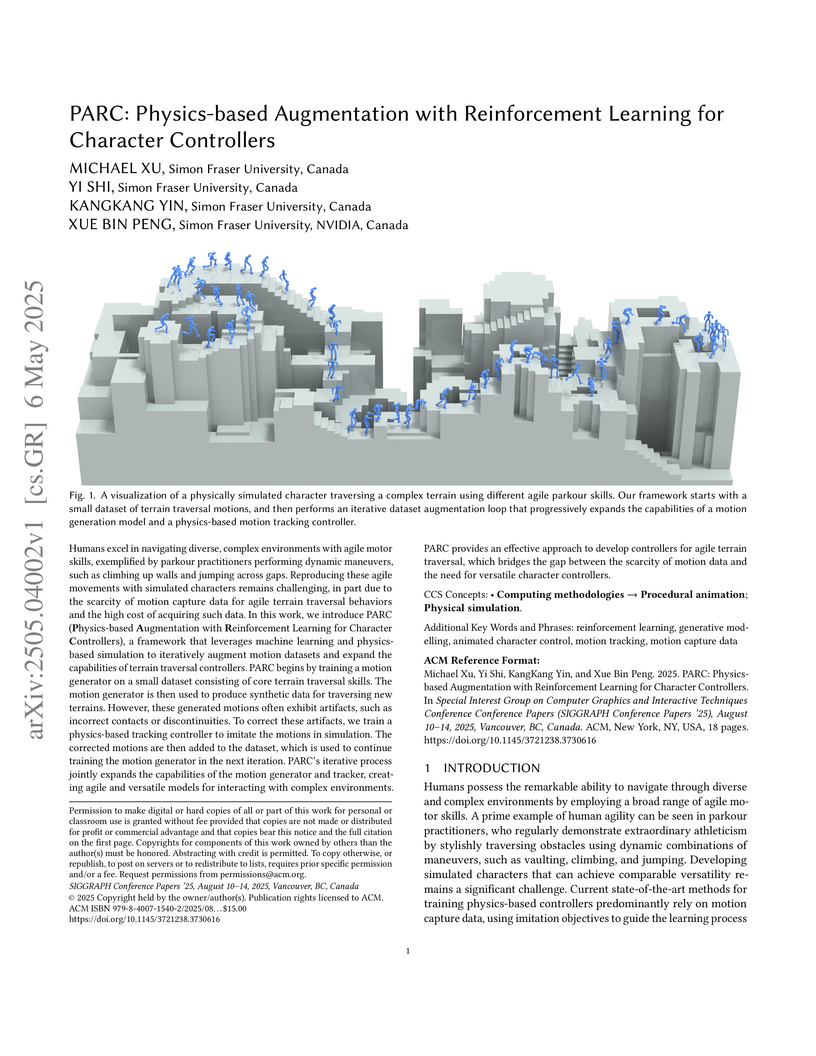

PARC is a framework for training agile terrain traversal controllers from small motion datasets. It iteratively co-trains a diffusion-based motion generator and a physics-based tracking controller, progressively expanding the motion repertoire while maintaining physical plausibility through simulation-based correction.

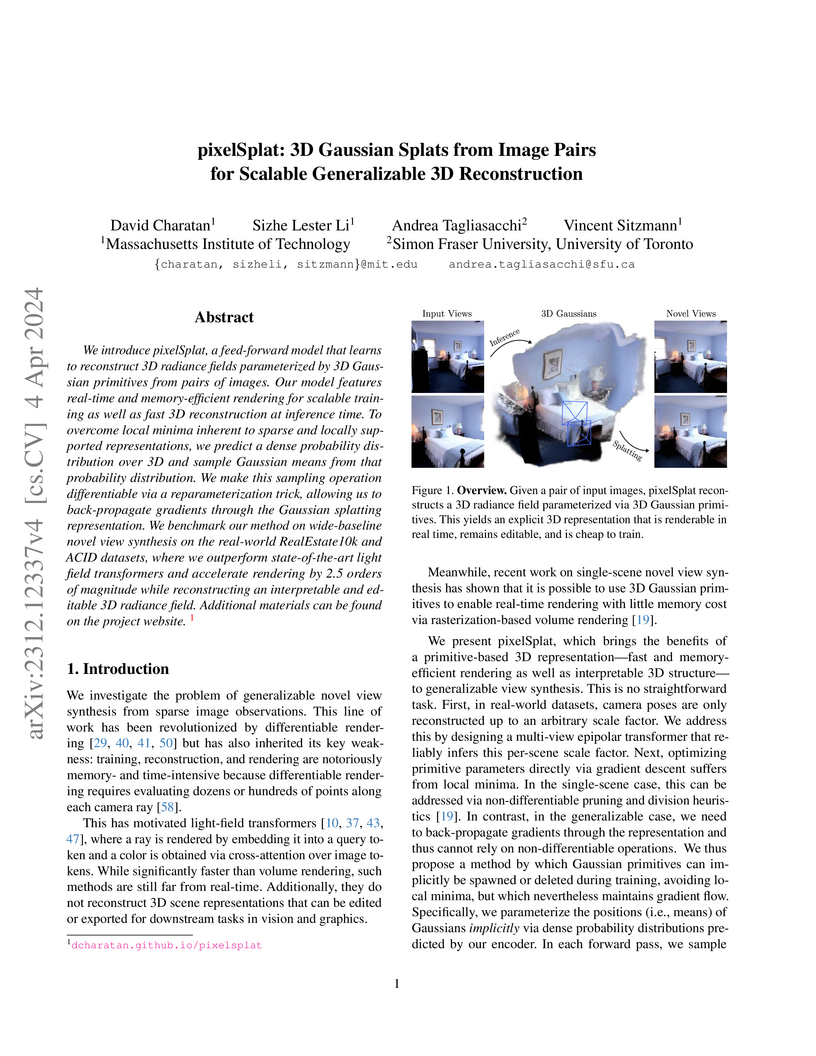

pixelSplat, developed by researchers at MIT and Simon Fraser University, reconstructs 3D Gaussian Splatting representations from just two input images in a single feed-forward pass. It achieves real-time novel view synthesis with explicit 3D scene geometry, rendering images approximately 650 times faster than prior state-of-the-art generalizable methods while improving perceptual quality.

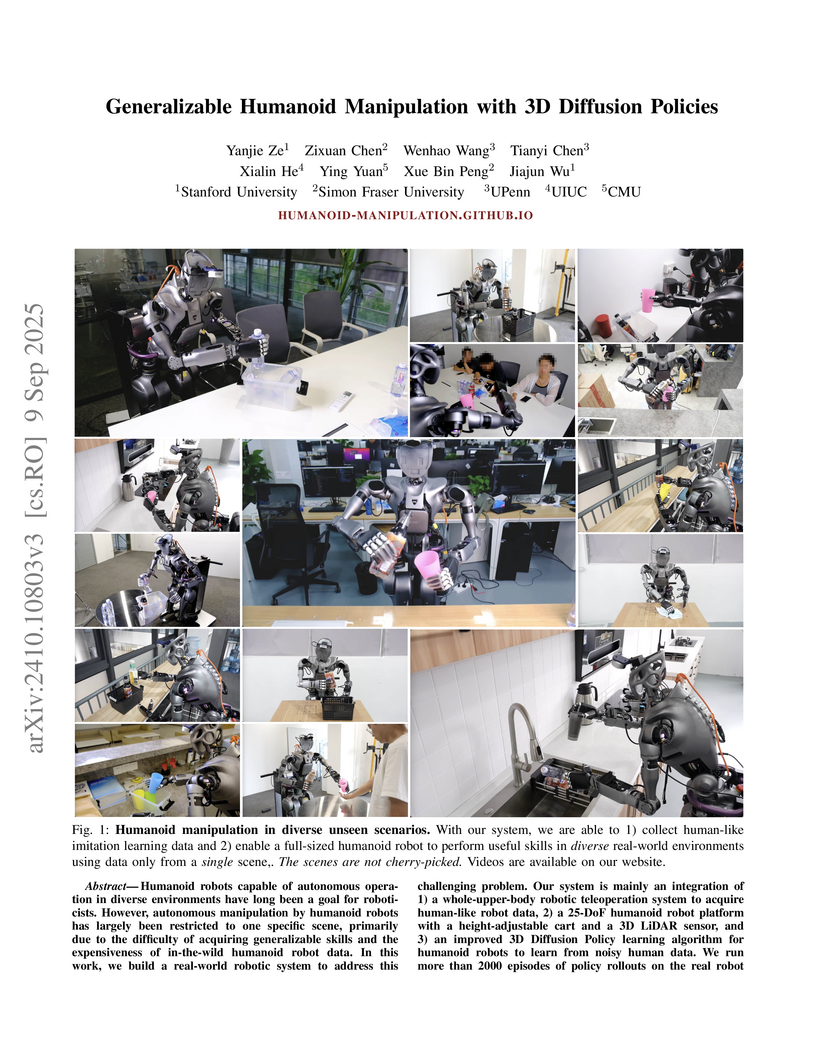

Humanoid robots capable of autonomous operation in diverse environments have long been a goal for roboticists. However, autonomous manipulation by humanoid robots has largely been restricted to one specific scene, primarily due to the difficulty of acquiring generalizable skills and the high cost of in-the-wild humanoid robot data. In this work, we build a real-world robotic system to address this challenging problem. Our system integrates 1) a whole-upper-body robotic teleoperation system to acquire human-like robot data, 2) a 25-DoF humanoid robot platform with a height-adjustable cart and a 3D LiDAR sensor, and 3) an improved 3D Diffusion Policy learning algorithm for humanoid robots to learn from noisy human data. We run more than 2000 episodes of policy rollouts on the real robot for rigorous policy evaluation. Empowered by this system, we show that using only data collected in a single scene and with only onboard computing, a full-sized humanoid robot can autonomously perform skills in diverse real-world scenarios. Videos are available at this https URL.

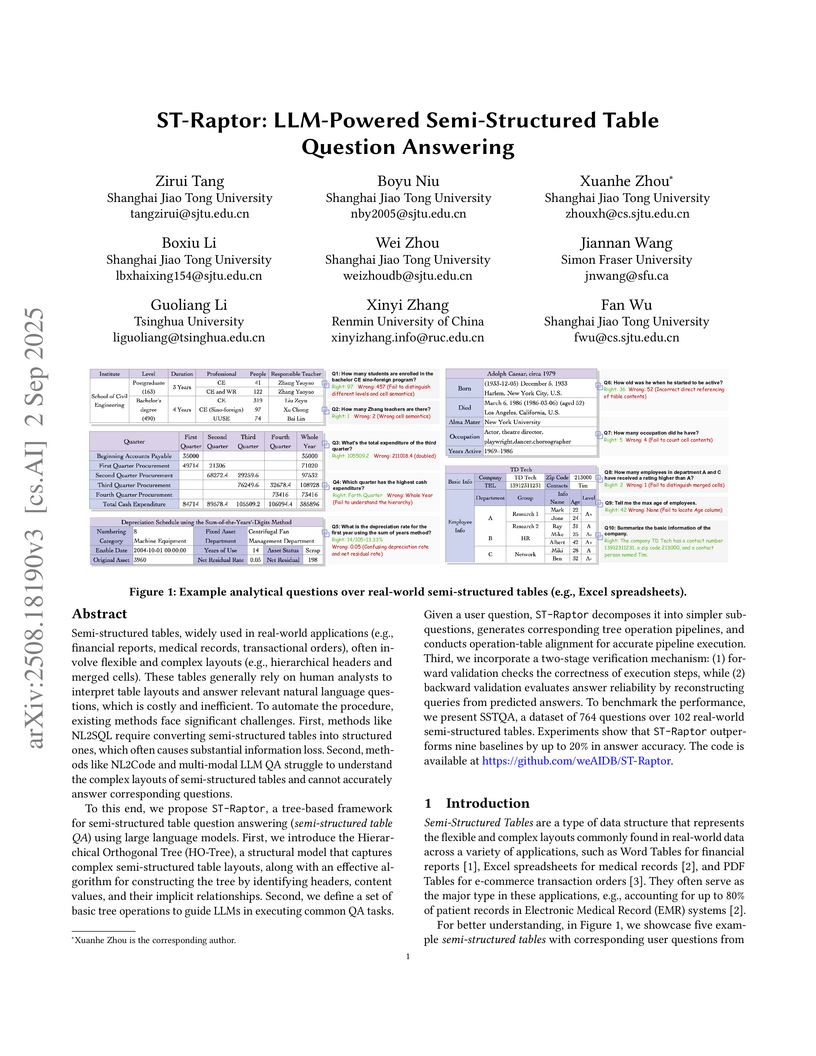

ST-Raptor introduces a Hierarchical Orthogonal Tree (HO-Tree) model and a pipeline-based question answering framework to process semi-structured tables. It achieved 72.39% accuracy on the new SSTQA benchmark, outperforming existing baselines by over 10.23%.

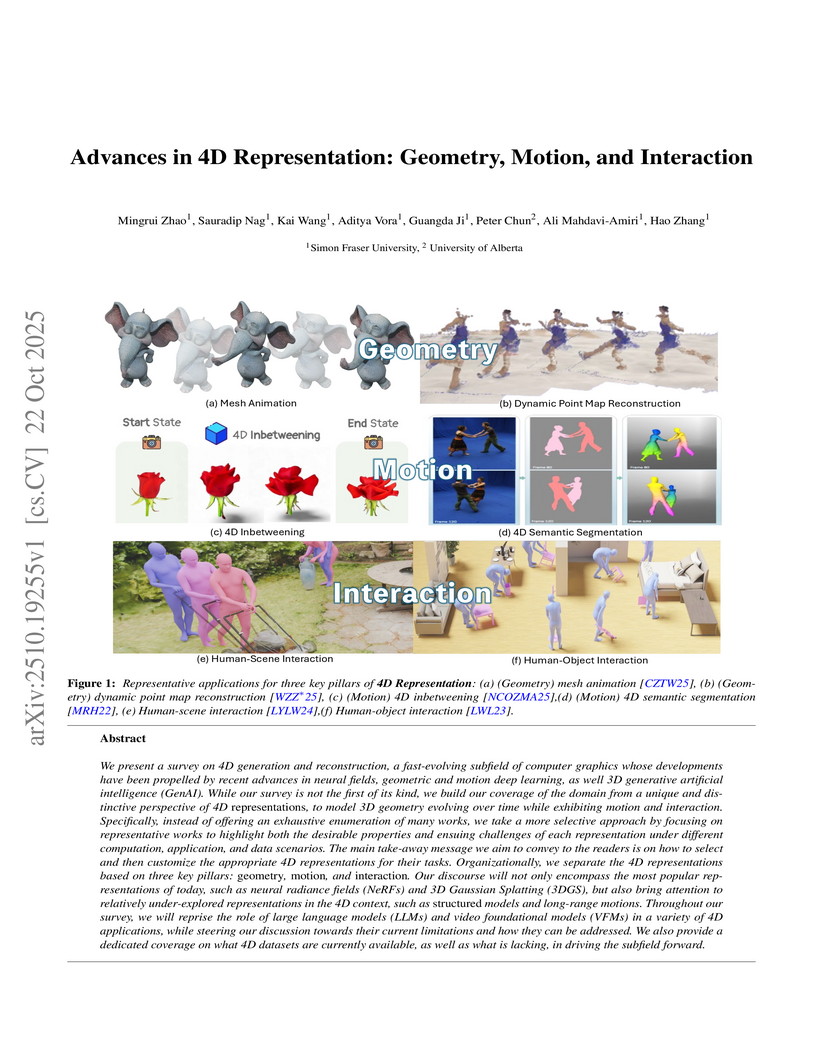

A survey provides a representation-centric framework for understanding recent advancements in 4D generation and reconstruction, critically analyzing various representations for geometry, motion, and interaction. It offers a detailed comparison of their properties, challenges, and trade-offs across dimensions such as visual fidelity, scalability, and temporal consistency to guide researchers in selecting and customizing appropriate 4D representations.

This work introduces Triangle Splatting, a differentiable rendering approach that optimizes unstructured 3D triangles to reconstruct photorealistic scenes from images. The method achieves state-of-the-art visual fidelity, notably improving perceptual quality over prior splatting techniques, and renders at thousands of frames per second, outperforming implicit methods by orders of magnitude.
