Ask or search anything...

Sorbonne Université

Sorbonne UniversitéThis study comprehensively characterized the cool circumgalactic medium (CGM) around galaxies at redshifts below 0.4 using data from the Dark Energy Spectroscopic Instrument (DESI) Year 1 survey. It reveals persistent correlations between cool gas absorption and galaxy properties like stellar mass and star formation rate, along with an unexpected absence of azimuthal anisotropy, indicating a possible evolution in CGM dynamics at lower redshifts.

View blogThe SPLADE model fine-tunes BERT to generate high-dimensional, sparse lexical representations for queries and documents, enabling efficient first-stage ranking using inverted indexes. It achieves effectiveness comparable to leading dense retrieval models while offering greater efficiency for large-scale systems.

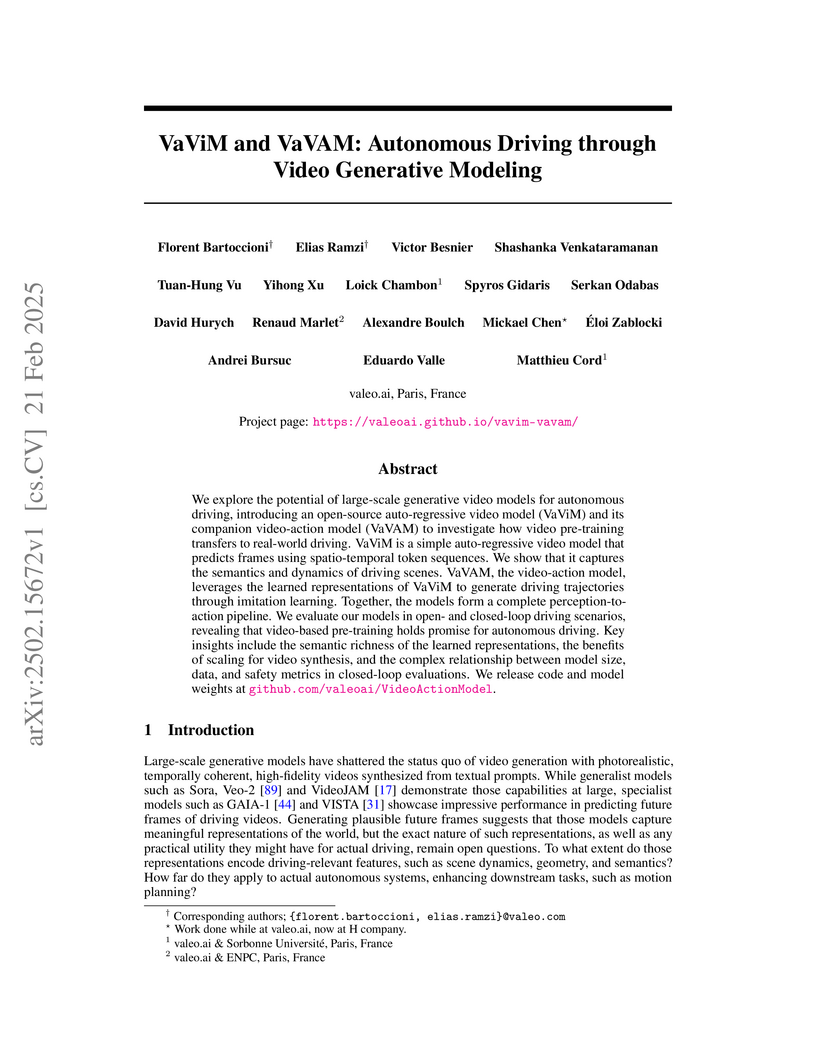

View blogThis research introduces VaViM and VaVAM, an end-to-end perception-to-action system for autonomous driving that leverages large-scale self-supervised video generative pre-training. It demonstrates that learning rich visual representations from raw video data can enable state-of-the-art performance in frontal driving scenarios within a closed-loop simulator, while also revealing complexities in scaling generative models for real-world safety.

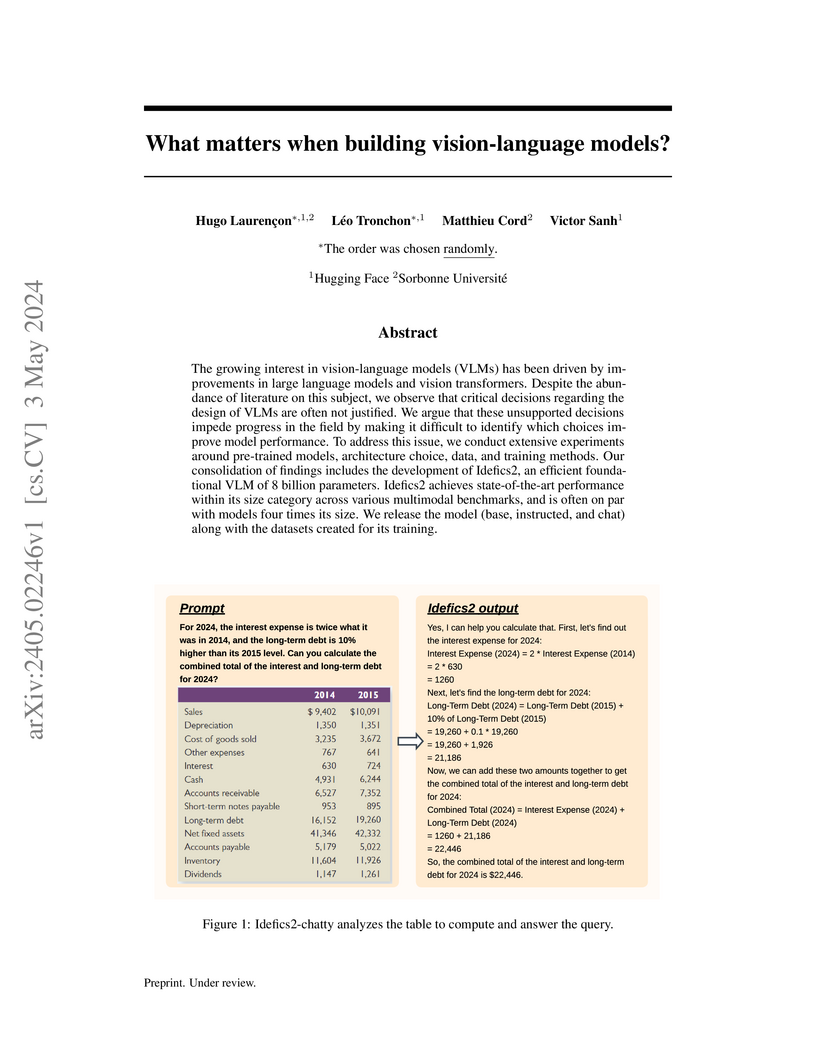

View blogThis research provides experimental clarity to core design choices in Vision-Language Models (VLMs) by systematically ablating architectural components and training strategies. The paper's insights culminate in the development and open-source release of Idefics2, an 8 billion parameter foundational VLM that achieves state-of-the-art performance within its size category while demonstrating improved efficiency.

View blogResearchers at Meta AI and Sorbonne Université developed DIFFEDIT, a method for semantic image editing that automatically infers editing masks from text prompts and uses DDIM encoding to preserve unedited content. The approach enables localized, high-fidelity modifications with pre-trained diffusion models, demonstrating superior trade-offs between text adherence and original image preservation compared to prior techniques.

View blogSPLADE v2, developed by Naver Labs Europe and Sorbonne Université, enhances sparse neural retrieval by incorporating max pooling, knowledge distillation, and document-only expansion. It achieves state-of-the-art performance on MS MARCO (36.8 MRR@10) and strong zero-shot generalization on BEIR, while maintaining efficiency suitable for inverted indexes.

View blogRewarded Soups proposes an efficient multi-policy strategy that achieves Pareto-optimal alignment for large foundation models by interpolating the weights of independently fine-tuned expert models. This approach allows for a posteriori customization to diverse user preferences with significantly fewer training runs compared to traditional multi-objective reinforcement learning.

View blogThis research provides the exact analytical expression for the most general tree-level boost-breaking cosmological correlator, essential for capturing realistic inflationary physics. It also develops a systematic framework for constructing accurate and efficient approximate templates for these signals, revealing new observable features such as a transient cosmological collider signal.

View blogResearchers performed the first direct, dynamical measurement of a black hole mass in a 'Little Red Dot' (Abell2744-QSO1) at z=7.04 using JWST NIRSpec IFS data, confirming the validity of single-epoch virial mass estimates for these early universe objects and revealing a black hole significantly overmassive relative to its host galaxy's stellar mass.

View blogResearchers from Inria and the University of Bordeaux, with collaborators from Hugging Face, developed GLAM, a method for functionally grounding Large Language Models in interactive textual environments using online Reinforcement Learning. GLAM-trained Flan-T5 models achieved superior sample efficiency and better generalization to novel objects and task compositions compared to traditional RL agents and behavioral cloning baselines.

View blogA collaborative study from Google DeepMind and several French universities established a theoretical explanation for why Latent Diffusion Models (LDMs) can exhibit sample quality degradation during their final denoising steps, unlike standard diffusion models. The research demonstrates this is an intrinsic outcome of latent dimensionality reduction, offering a principled framework to identify optimal stopping times and latent dimensions to prevent this degradation, which was empirically observed as increased FID scores on CelebA-HQ.

View blog CNRS

CNRS University of Oxford

University of Oxford

California Institute of Technology

California Institute of Technology

Hugging Face

Hugging Face

Meta

Meta

Flatiron Institute

Flatiron Institute

Tsinghua University

Tsinghua University

Google DeepMind

Google DeepMind Université Paris-Saclay

Université Paris-Saclay

University of Waterloo

University of Waterloo