Swedish University of Agricultural Sciences

Wuhan University, Technische Universität Wien, University of Helsinki, Aalto University, IDEAS NCBR, University of Eastern Finland, Universität Innsbruck, Universitat Politécnica de Valéncia, Natural Resources Institute Finland, Polish Academy of Science, Finnish Geospatial Research Institute FGI, Swedish University of Agricultural Sciences, Norwegian Institute for Bioeconomy Research (NIBIO), Bruno Kessler Foundation (FBK), Forest Research Institute IBL, Norwegian University of Science and Technology

Researchers from the Finnish Geospatial Research Institute led a benchmark evaluating machine learning and deep learning algorithms for individual tree species classification using high-density multispectral airborne laser scanning data. Point-based deep learning methods, particularly Point Transformer architectures, achieved the highest accuracies (87.9% overall) on dense datasets, demonstrating that multi-channel spectral information significantly improves classification, especially for challenging minority species.

14 Feb 2025

To turn environmentally derived metabarcoding data into community matrices for ecological analysis, sequences must first be clustered into operational taxonomic units (OTUs). This task is particularly complex for data including large numbers of taxa with incomplete reference libraries. OptimOTU offers a taxonomically aware approach to OTU clustering. It uses a set of taxonomically identified reference sequences to choose optimal genetic distance thresholds for grouping each ancestor taxon into clusters which most closely match its descendant taxa. Then, query sequences are clustered according to preliminary taxonomic identifications and the optimized thresholds for their ancestor taxon. The process follows the taxonomic hierarchy, resulting in a full taxonomic classification of all the query sequences into named taxonomic groups as well as placeholder "pseudotaxa" which accommodate the sequences that could not be classified to a named taxon at the corresponding rank. The OptimOTU clustering algorithm is implemented as an R package, with computationally intensive steps implemented in C++ for speed, and incorporating open-source libraries for pairwise sequence alignment. Distances may also be calculated externally, and may be read from a UNIX pipe, allowing clustering of large datasets where the full distance matrix would be inconveniently large to store in memory. The OptimOTU bioinformatics pipeline includes a full workflow for paired-end Illumina sequencing data that incorporates quality filtering, denoising, artifact removal, taxonomic classification, and OTU clustering with OptimOTU. The OptimOTU pipeline is developed for use on high performance computing clusters, and scales to datasets with millions of reads per sample, and tens of thousands of samples.
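The threshold-selection idea in this abstract can be illustrated with a minimal Python sketch. It is not the OptimOTU implementation (which is an R package with C++ internals): the single-linkage clustering, the Rand index used as the agreement score, and the names `single_linkage`, `rand_index`, and `best_threshold` are all assumptions made for illustration.

```python
from itertools import combinations

def single_linkage(n, dist, threshold):
    """Cluster items 0..n-1 by joining every pair with distance <= threshold.

    `dist` maps (i, j) index pairs to a genetic distance (hypothetical input format).
    Returns one cluster representative per item.
    """
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for (i, j), d in dist.items():
        if d <= threshold:
            parent[find(i)] = find(j)
    return [find(i) for i in range(n)]

def rand_index(labels_a, labels_b):
    """Fraction of item pairs on which two partitions agree (same vs. different group)."""
    pairs = list(combinations(range(len(labels_a)), 2))
    agree = sum(
        (labels_a[i] == labels_a[j]) == (labels_b[i] == labels_b[j])
        for i, j in pairs
    )
    return agree / len(pairs)

def best_threshold(taxa, dist, candidates):
    """Pick the candidate threshold whose clusters best match the known taxa.

    Ties are broken in favour of the smallest threshold (an arbitrary choice).
    """
    n = len(taxa)
    scored = [(rand_index(taxa, single_linkage(n, dist, t)), t) for t in candidates]
    top = max(score for score, _ in scored)
    return min(t for score, t in scored if score == top)

# Toy reference set: four sequences from two descendant taxa of one ancestor.
taxa = ["A", "A", "B", "B"]
dist = {(0, 1): 0.01, (2, 3): 0.02, (0, 2): 0.10,
        (0, 3): 0.11, (1, 2): 0.09, (1, 3): 0.12}
chosen = best_threshold(taxa, dist, [0.005, 0.03, 0.15])
```

In this toy case the middle candidate separates the two taxa exactly, while the smallest splits everything into singletons and the largest merges everything into one cluster.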

28 Feb 2025

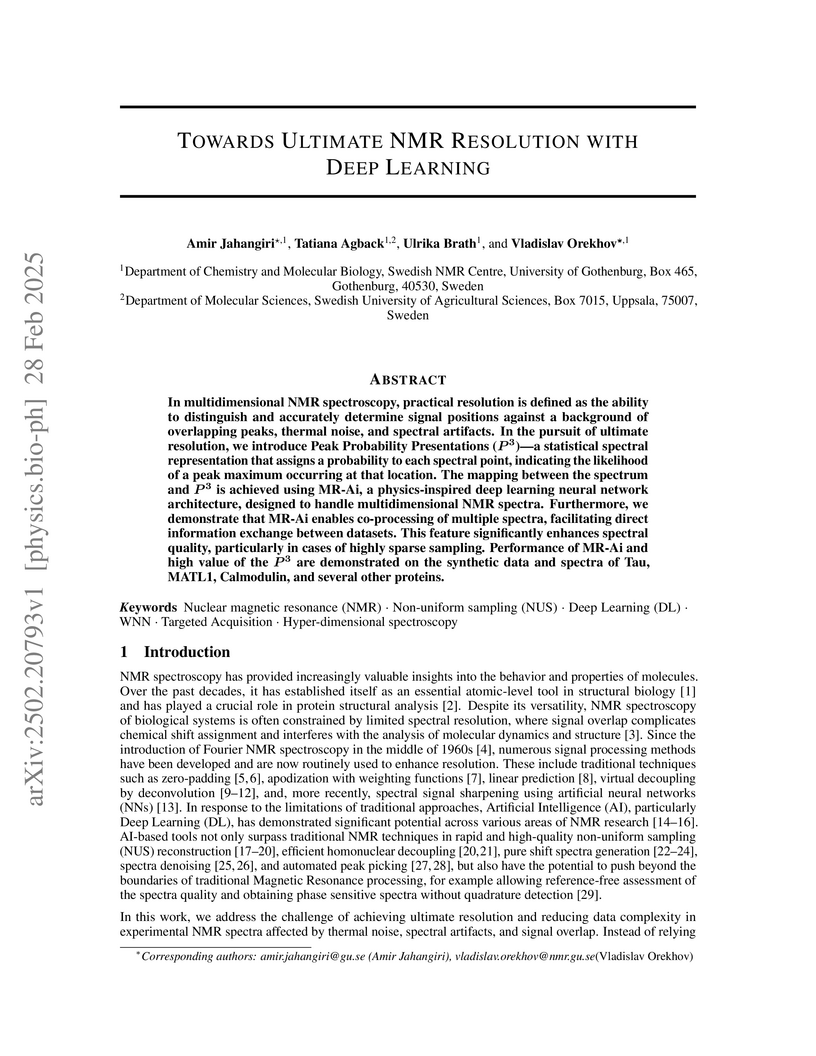

In multidimensional NMR spectroscopy, practical resolution is defined as the ability to distinguish and accurately determine signal positions against a background of overlapping peaks, thermal noise, and spectral artifacts. In the pursuit of ultimate resolution, we introduce Peak Probability Presentations (P3), a statistical spectral representation that assigns a probability to each spectral point, indicating the likelihood of a peak maximum occurring at that location. The mapping between the spectrum and P3 is achieved using MR-Ai, a physics-inspired deep learning neural network architecture designed to handle multidimensional NMR spectra. Furthermore, we demonstrate that MR-Ai enables coprocessing of multiple spectra, facilitating direct information exchange between datasets. This feature significantly enhances spectral quality, particularly in cases of highly sparse sampling. The performance of MR-Ai and the high value of P3 are demonstrated on synthetic data and on spectra of Tau, MALT1, Calmodulin, and several other proteins.

04 Aug 2022

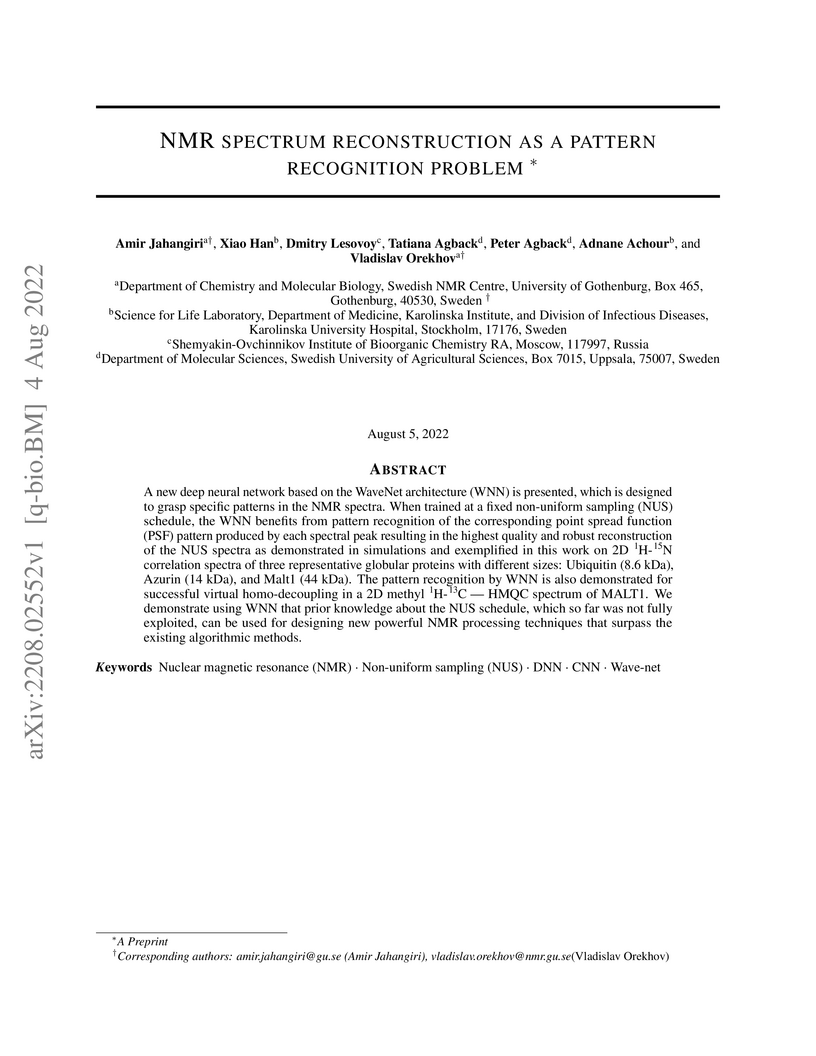

A new deep neural network based on the WaveNet architecture (WNN) is presented, which is designed to grasp specific patterns in NMR spectra. When trained at a fixed non-uniform sampling (NUS) schedule, the WNN benefits from pattern recognition of the corresponding point spread function (PSF) pattern produced by each spectral peak, resulting in high-quality and robust reconstruction of the NUS spectra, as demonstrated in simulations and exemplified in this work on 2D 1H-15N correlation spectra of three representative globular proteins of different sizes: Ubiquitin (8.6 kDa), Azurin (14 kDa), and MALT1 (44 kDa). The pattern recognition by WNN is also demonstrated for successful virtual homo-decoupling in a 2D methyl 1H-13C HMQC spectrum of MALT1. We demonstrate using WNN that prior knowledge about the NUS schedule, which so far has not been fully exploited, can be used for designing new powerful NMR processing techniques that surpass the existing algorithmic methods.

Effective early detection of potato late blight (PLB) is an essential aspect of potato cultivation. However, detecting late blight at an early stage in fields with conventional imaging approaches is challenging because of the lack of visual cues at the canopy level. Hyperspectral imaging can capture spectral signals from a wide range of wavelengths, including those outside the visible range. In this context, we propose a deep learning classification architecture for hyperspectral images that combines a 2D convolutional neural network (2D-CNN) and a 3D-CNN with deep cooperative attention networks (PLB-2D-3D-A). First, the 2D-CNN and 3D-CNN are used to extract rich spectral space features, and then the attention mechanism AttentionBlock and SE-ResNet are used to emphasize the salient features in the feature maps and increase the generalization ability of the model. The dataset is built with 15,360 images (64 × 64 × 204), cropped from 240 raw images captured in an experimental field with over 20 potato genotypes. The accuracy on the test dataset of 2000 images reached 0.739 with the full band and 0.790 with the specific bands (492 nm, 519 nm, 560 nm, 592 nm, 717 nm, and 765 nm). This study shows an encouraging result for early detection of PLB with deep learning and proximal hyperspectral imaging.

06 Dec 2023

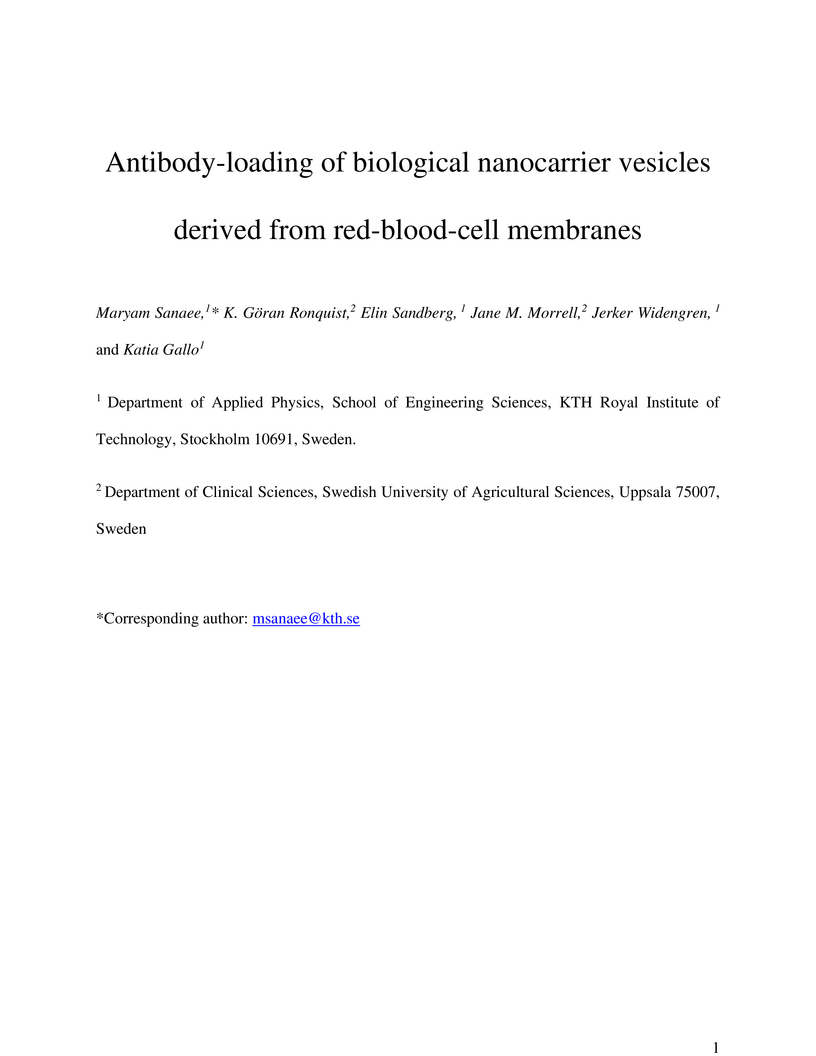

Antibodies, disruptive and potent therapeutic agents against pharmacological targets, face barriers in crossing the immune system and cellular membranes. To overcome these, various strategies have been explored, including shuttling via liposomes or bio-camouflaged nanoparticles. Here, we demonstrate the feasibility of loading antibodies into exosome-mimetic nanovesicles derived from human red blood cell membranes. Goat anti-chicken antibodies are loaded into erythrocyte-membrane-derived nanovesicles, and their loading yields are characterized and compared with those of smaller dUTP cargo. Applying a dual-color coincident fluorescence burst methodology, the loading yield of the nanocarriers is profiled at the single-vesicle level, overcoming their size heterogeneity and achieving a maximum antibody-loading yield of 38-41% at a peak radius of 52 nm. An average yield of 14% and more than two antibodies per vesicle are estimated, comparable to those of dUTP-loaded nanovesicles after additional purification through an exosome spin column. These results suggest a promising route for enhancing the biodistribution and intracellular accessibility of therapeutic antibodies using novel, biocompatible, and low-immunogenicity nanocarriers suitable for large-scale pharmacological applications.

06 Dec 2023

Extracellular vesicles (EVs) and plasma membrane-derived exosome-mimetic nanovesicles demonstrate significant potential for drug delivery. The latter, being synthetic, offer higher throughput than physiological EVs. However, they face size-stability and self-agglomeration challenges in physiological solutions that remain to be properly characterized and addressed. Here we demonstrate a fast and high-throughput nanovesicle screening methodology relying on dynamic light scattering (DLS) complemented by atomic force microscopy (AFM) measurements, suitable for the evaluation of hydrodynamic size instabilities and aggregation effects in nanovesicle solutions under varying experimental conditions. We apply it to the analysis of bio-engineered nanovesicles derived from erythrocytes as well as physiological extracellular vesicles isolated from animal seminal plasma. The synthetic vesicles exhibit a significantly higher degree of agglomeration, with only 8% of them falling within the typical extracellular vesicle size range (30-200 nm) in their original preparation conditions. Concurrent zeta potential measurements performed on both physiological and synthetic nanovesicles yielded values in the range of -17 to -22 mV, with no apparent correlation to their agglomeration tendencies. However, mild sonication and dilution were found to be effective means to restore the portion of EV-like nanovesicles in synthetic preparations to values of 54% and 63%, respectively. The results illustrate the capability of this DLS-AFM-based analytical method for real-time, high-throughput, and quantitative assessment of agglomeration effects and size instabilities in bioengineered nanovesicle solutions, providing a powerful and easy-to-use tool to gain insights into overcoming such deleterious effects and to leverage the full potential of these promising biocompatible drug-delivery carriers for a broad range of pharmaceutical applications.

04 Nov 2016

Although animal locations obtained via GPS and similar technologies are typically observed on a discrete time scale, movement models formulated in continuous time are preferable in order to avoid the difficulties that arise in discrete time when faced with irregular observations or the prospect of comparing analyses on different time scales. A class of models able to emulate a range of movement ideas is defined by representing movement as a combination of stochastic processes describing both speed and bearing. A method for Bayesian inference for such models is described through the use of a Markov chain Monte Carlo approach. Such inference relies on augmenting the animal's locations, which are observed in discrete time and with error, with a more detailed movement path obtained via simulation techniques. Analysis of real data from an individual reindeer (Rangifer tarandus) illustrates the presented methods.
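To make the "speed and bearing" formulation concrete, here is a minimal simulation sketch. The specific processes are assumptions chosen for illustration (Brownian motion for bearing, a mean-reverting process for speed, Euler time stepping); the abstract does not specify them, and `simulate_path` and all its parameters are hypothetical names.

```python
import math
import random

def simulate_path(n_steps, dt, seed=0, sigma_bearing=0.5,
                  mean_speed=1.0, reversion=1.0, sigma_speed=0.3):
    """Simulate a 2D path from separate stochastic processes for bearing and speed.

    Assumed dynamics (illustrative only):
      bearing: Brownian motion with volatility sigma_bearing
      speed:   mean-reverting (Ornstein-Uhlenbeck-like) around mean_speed
    Both are advanced with simple Euler steps of length dt.
    """
    rng = random.Random(seed)
    x = y = 0.0
    bearing, speed = 0.0, mean_speed
    path = [(x, y)]
    for _ in range(n_steps):
        bearing += sigma_bearing * math.sqrt(dt) * rng.gauss(0, 1)
        speed += (reversion * (mean_speed - speed) * dt
                  + sigma_speed * math.sqrt(dt) * rng.gauss(0, 1))
        # Move along the current bearing at the current speed.
        x += speed * math.cos(bearing) * dt
        y += speed * math.sin(bearing) * dt
        path.append((x, y))
    return path

# A fine-grained simulated path; observations could be taken at irregular times along it.
path = simulate_path(n_steps=100, dt=0.1, seed=42)
```

Because the path is defined on a fine time grid, irregularly spaced observations can be read off at whatever times they occurred, which is the practical appeal of the continuous-time view.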

12 Dec 2024

Dynamic latent space models are widely used for characterizing changes in networks and relational data over time. These models assign to each node latent attributes that characterize connectivity with other nodes, with these latent attributes dynamically changing over time. Node attributes can be organized as a three-way tensor with modes corresponding to nodes, latent space dimension, and time. Unfortunately, as the number of nodes and time points increases, the number of elements of this tensor becomes enormous, leading to computational and statistical challenges, particularly when data are sparse. We propose a new approach for massively reducing dimensionality by expressing the latent node attribute tensor as low rank. This leads to an interesting new nested exemplar latent space model, which characterizes the node attribute tensor as dependent on low-dimensional exemplar traits for each node, weights for each latent space dimension, and exemplar curves characterizing time variation. We study properties of this framework, including expressivity, and develop efficient Bayesian inference algorithms. The approach leads to substantial advantages in simulations and applications to ecological networks.
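The dimensionality reduction described here can be illustrated with a small sketch. It assumes a CP-style (sum of rank-one terms) factorization, which is one common way to express a three-way tensor as low rank; the paper's nested exemplar model may be structured differently, and the names `cp_entry` and `parameter_counts` are hypothetical.

```python
def cp_entry(node_traits, dim_weights, time_curves, i, d, t):
    """Entry (node i, latent dimension d, time t) of a rank-R tensor.

    node_traits[i][r], dim_weights[d][r], time_curves[t][r] are the three
    factor matrices; the entry is the sum over r of their product.
    """
    rank = len(node_traits[0])
    return sum(
        node_traits[i][r] * dim_weights[d][r] * time_curves[t][r]
        for r in range(rank)
    )

def parameter_counts(n_nodes, n_dims, n_times, rank):
    """Number of free values in the full tensor vs. the rank-R factorization."""
    full = n_nodes * n_dims * n_times
    low_rank = rank * (n_nodes + n_dims + n_times)
    return full, low_rank

# Example sizes: 1000 nodes, 5 latent dimensions, 100 time points, rank 3.
full, low_rank = parameter_counts(1000, 5, 100, 3)
```

With these example sizes the full tensor has 500,000 entries while the factorization stores 3,315 values, which shows why the low-rank form helps when data are sparse.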

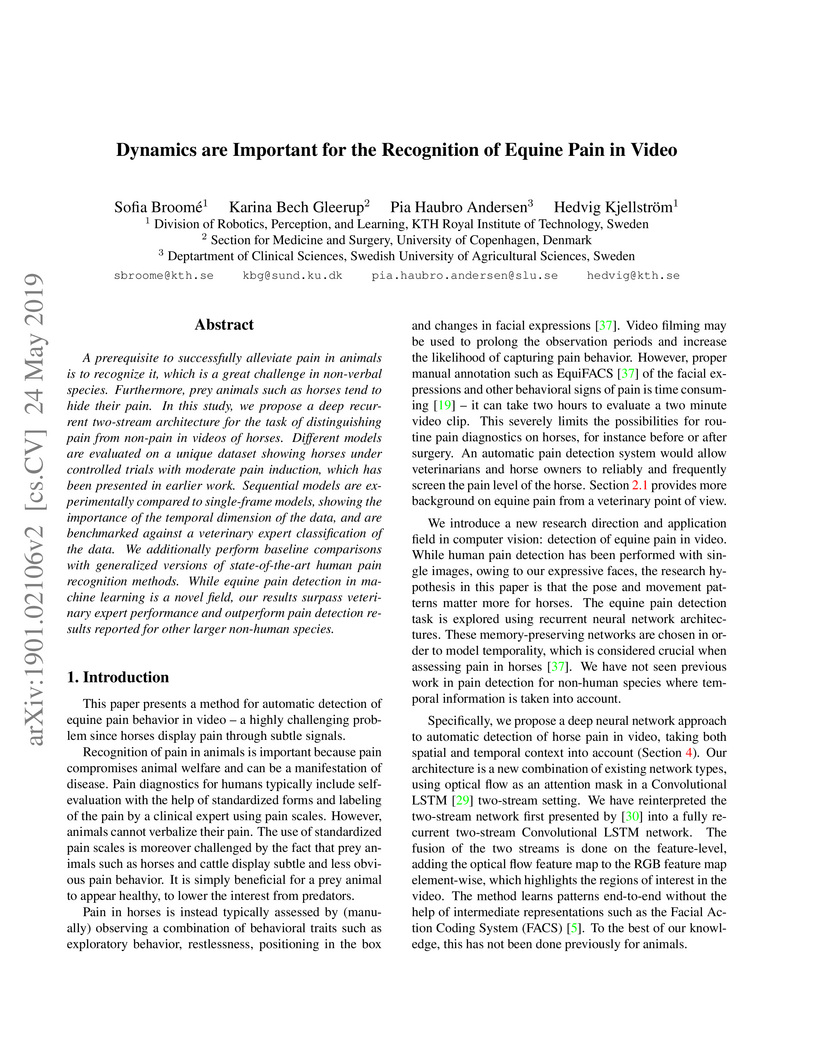

A prerequisite to successfully alleviate pain in animals is to recognize it, which is a great challenge in non-verbal species. Furthermore, prey animals such as horses tend to hide their pain. In this study, we propose a deep recurrent two-stream architecture for the task of distinguishing pain from non-pain in videos of horses. Different models are evaluated on a unique dataset showing horses under controlled trials with moderate pain induction, which has been presented in earlier work. Sequential models are experimentally compared to single-frame models, showing the importance of the temporal dimension of the data, and are benchmarked against a veterinary expert classification of the data. We additionally perform baseline comparisons with generalized versions of state-of-the-art human pain recognition methods. While equine pain detection in machine learning is a novel field, our results surpass veterinary expert performance and outperform pain detection results reported for other larger non-human species.

Noisy labels pose significant challenges for AI model training in veterinary medicine. This study examines expert assessment ambiguity in canine auscultation data, highlights the negative impact of label noise on classification performance, and introduces methods for label noise reduction. To evaluate whether label noise can be minimized by incorporating multiple expert opinions, a dataset of 140 heart sound recordings (HSR) was annotated regarding the intensity of holosystolic heart murmurs caused by Myxomatous Mitral Valve Disease (MMVD). The expert opinions facilitated the selection of 70 high-quality HSR, resulting in a noise-reduced dataset. By leveraging individual heart cycles, the training data was expanded and classification robustness was enhanced. The investigation encompassed training and evaluating three classification algorithms: AdaBoost, XGBoost, and Random Forest. While AdaBoost and Random Forest exhibited reasonable performances, XGBoost demonstrated notable improvements in classification accuracy. All algorithms showed significant improvements in classification accuracy due to the applied label noise reduction, most notably XGBoost. Specifically, for the detection of mild heart murmurs, sensitivity increased from 37.71% to 90.98% and specificity from 76.70% to 93.69%. For the moderate category, sensitivity rose from 30.23% to 55.81% and specificity from 64.56% to 97.19%. In the loud/thrilling category, sensitivity and specificity increased from 58.28% to 95.09% and from 84.84% to 89.69%, respectively. These results highlight the importance of minimizing label noise to improve classification algorithms for the detection of canine heart murmurs. Index Terms: AI diagnosis, canine heart disease, heart sound classification, label noise reduction, machine learning, XGBoost, veterinary cardiology, MMVD.
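The per-category sensitivity and specificity figures quoted above are one-vs-rest metrics. The following minimal sketch shows how such values can be computed from true and predicted labels; the toy labels are invented for illustration and are not data from the study, and `sensitivity_specificity` is a hypothetical helper name.

```python
def sensitivity_specificity(y_true, y_pred, positive):
    """One-vs-rest sensitivity and specificity for the class `positive`."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    tn = sum(t != positive and p != positive for t, p in zip(y_true, y_pred))
    sensitivity = tp / (tp + fn)  # share of true positives that were detected
    specificity = tn / (tn + fp)  # share of true negatives correctly rejected
    return sensitivity, specificity

# Invented toy labels for three murmur intensity categories.
y_true = ["mild", "mild", "moderate", "loud", "loud", "moderate"]
y_pred = ["mild", "moderate", "moderate", "loud", "mild", "moderate"]
mild_sens, mild_spec = sensitivity_specificity(y_true, y_pred, "mild")
```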

Insects comprise millions of species, many experiencing severe population declines under environmental and habitat changes. High-throughput approaches are crucial for accelerating our understanding of insect diversity, with DNA barcoding and high-resolution imaging showing strong potential for automatic taxonomic classification. However, most image-based approaches rely on individual specimen data, unlike the unsorted bulk samples collected in large-scale ecological surveys. We present the Mixed Arthropod Sample Segmentation and Identification (MassID45) dataset for training automatic classifiers of bulk insect samples. It uniquely combines molecular and imaging data at both the unsorted sample level and the full set of individual specimens. Human annotators, supported by an AI-assisted tool, performed two tasks on bulk images: creating segmentation masks around each individual arthropod and assigning taxonomic labels to over 17 000 specimens. Combining the taxonomic resolution of DNA barcodes with precise abundance estimates of bulk images holds great potential for rapid, large-scale characterization of insect communities. This dataset pushes the boundaries of tiny object detection and instance segmentation, fostering innovation in both ecological and machine learning research.

Monitoring respiration parameters such as respiratory rate could be beneficial to understand the impact of training on equine health and performance and ultimately improve equine welfare. In this work, we compare deep learning-based methods to an adapted signal processing method to automatically detect cyclic respiratory events and extract the dynamic respiratory rate from microphone recordings during high intensity exercise in Standardbred trotters. Our deep learning models are able to detect exhalation sounds (median F1 score of 0.94) in noisy microphone signals and show promising results on unlabelled signals at lower exercising intensity, where the exhalation sounds are less recognisable. Temporal convolutional networks were better at detecting exhalation events and estimating dynamic respiratory rates (median F1: 0.94, Mean Absolute Error (MAE) ± Confidence Intervals (CI): 1.44±1.04 bpm, Limits Of Agreements (LOA): 0.63±7.06 bpm) than long short-term memory networks (median F1: 0.90, MAE±CI: 3.11±1.58 bpm) and signal processing methods (MAE±CI: 2.36±1.11 bpm). This work is the first to automatically detect equine respiratory sounds and automatically compute dynamic respiratory rates in exercising horses. In the future, our models will be validated on lower exercising intensity sounds and different microphone placements will be evaluated in order to find the best combination for regular monitoring.

Recent years have witnessed the detection of an increasing number of complex organic molecules in interstellar space, some of them being of prebiotic interest. Disentangling the origin of interstellar prebiotic chemistry and its connection to biochemistry and ultimately to biology is an enormously challenging scientific goal where the application of complexity theory and network science has not been fully exploited. Encouraged by this idea, we present a theoretical and computational framework to model the evolution of simple networked structures toward complexity. In our environment, complex networks represent simplified chemical compounds, and interact optimizing the dynamical importance of their nodes. We describe the emergence of a transition from simple networks toward complexity when the parameter representing the environment reaches a critical value. Notably, although our system does not attempt to model the rules of real chemistry, nor is dependent on external input data, the results describe the emergence of complexity in the evolution of chemical diversity in the interstellar medium. Furthermore, they reveal an as yet unknown relationship between the abundances of molecules in dark clouds and the potential number of chemical reactions that yield them as products, supporting the ability of the conceptual framework presented here to shed light on real scenarios. Our work reinforces the notion that some of the properties that condition the extremely complex journey from the chemistry in space to prebiotic chemistry and finally to life could show relatively simple and universal patterns.

05 Nov 2015

Shifting from traditional hazard-based food safety management toward risk-based management requires statistical methods for evaluating intermediate targets in food production, such as microbiological criteria (MC), in terms of their effects on human risk of illness. A fully risk-based evaluation of MC involves several uncertainties that are related to both the underlying Quantitative Microbiological Risk Assessment (QMRA) model and the production-specific sample data on the prevalence and concentrations of microbes in production batches. We used Bayesian modeling for statistical inference and evidence synthesis of two sample data sets. Thus, parameter uncertainty was represented by a joint posterior distribution, which we then used to predict the risk and to evaluate the criteria for acceptance of production batches. We also applied the Bayesian model to compare alternative criteria, accounting for the statistical uncertainty of parameters, conditional on the data sets. Comparison of the posterior mean relative risk, $E(\mathrm{RR} \mid \text{data}) = E\big(P(\text{illness} \mid \text{criterion is met}) / P(\text{illness}) \mid \text{data}\big)$, and relative posterior risk, $\mathrm{RPR} = P(\text{illness} \mid \text{data}, \text{criterion is met}) / P(\text{illness} \mid \text{data})$, showed very similar results, but computing is more efficient for RPR. Based on the sample data, together with the QMRA model, one could achieve a relative risk of 0.4 by insisting that the default criterion be fulfilled for acceptance of each batch.
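The difference between the two summaries can be sketched from posterior draws. This is an illustrative reading, not the authors' code: it assumes each draw supplies three model-derived probabilities, P(illness and criterion met), P(criterion met), and P(illness), and the numbers and the name `relative_risk_summaries` are invented.

```python
def relative_risk_summaries(draws):
    """Compute E(RR | data) and RPR from posterior draws.

    Each draw is a tuple (p_ill_and_met, p_met, p_ill) evaluated at one
    posterior sample of the model parameters (assumed input format).
    """
    n = len(draws)
    # Posterior mean relative risk: average the ratio over draws.
    mean_rr = sum((joint / met) / ill for joint, met, ill in draws) / n
    # Relative posterior risk: ratio of posterior predictive probabilities.
    mean_joint = sum(joint for joint, _, _ in draws) / n
    mean_met = sum(met for _, met, _ in draws) / n
    mean_ill = sum(ill for _, _, ill in draws) / n
    rpr = (mean_joint / mean_met) / mean_ill
    return mean_rr, rpr

# Two invented posterior draws, for illustration only.
draws = [(0.002, 0.8, 0.005), (0.004, 0.9, 0.010)]
mean_rr, rpr = relative_risk_summaries(draws)
```

In this sketch RPR needs only three running averages over the draws, whereas the posterior mean relative risk requires the full ratio at every draw, which is consistent with the abstract's remark that RPR is cheaper to compute.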

The recently developed Equine Facial Action Coding System (EquiFACS) provides a precise and exhaustive, but laborious, manual labelling method of facial action units of the horse. To automate parts of this process, we propose a Deep Learning-based method to detect EquiFACS units automatically from images. We use a cascade framework; we firstly train several object detectors to detect the predefined Region-of-Interest (ROI), and secondly apply binary classifiers for each action unit in related regions. We experiment with both regular CNNs and a more tailored model transferred from human facial action unit recognition. Promising initial results are presented for nine action units in the eye and lower face regions. Code for the project is publicly available.

We explore the potential to control terrain vehicles using deep reinforcement learning in scenarios where human operators and traditional control methods are inadequate. This letter presents a controller that perceives, plans, and successfully controls a 16-tonne forestry vehicle with two frame articulation joints, six wheels, and their actively articulated suspensions to traverse rough terrain. The carefully shaped reward signal promotes safe, environmental, and efficient driving, which leads to the emergence of unprecedented driving skills. We test learned skills in a virtual environment, including terrains reconstructed from high-density laser scans of forest sites. The controller displays the ability to handle obstacles, slopes up to 27°, and a variety of natural terrains, all with limited wheel slip and smooth, upright traversal with intelligent use of the active suspensions. The results confirm that deep reinforcement learning has the potential to enhance control of vehicles with complex dynamics and high-dimensional observation data compared to human operators or traditional control methods, especially in rough terrain.

We present a method that uses high-resolution topography data of rough terrain, and ground vehicle simulation, to predict traversability. Traversability is expressed as three independent measures: the ability to traverse the terrain at a target speed, energy consumption, and acceleration. The measures are continuous and reflect different objectives for planning that go beyond binary classification. A deep neural network is trained to predict the traversability measures from the local heightmap and target speed. To produce training data, we use an articulated vehicle with wheeled bogie suspensions and procedurally generated terrains. We evaluate the model on laser-scanned forest terrains, previously unseen by the model. The model predicts traversability with an accuracy of 90%. Predictions rely on features from the high-dimensional terrain data that surpass local roughness and slope relative to the heading. Correlations show that the three traversability measures are complementary to each other. With an inference speed 3000 times faster than the ground truth simulation and trivially parallelizable, the model is well suited for traversability analysis and optimal path planning over large areas.

Weed and crop segmentation is becoming an increasingly integral part of precision farming that leverages current computer vision and deep learning technologies. Research has been extensively carried out based on images captured with a camera from various platforms. Unmanned aerial vehicles (UAVs) and ground-based vehicles, including agricultural robots, are the two popular platforms for data collection in fields. They both contribute to site-specific weed management (SSWM) to maintain crop yield. Currently, the data from these two platforms are processed separately, even though they share the same semantic objects (weed and crop). In our paper, we have developed a deep convolutional network that can segment both field images and aerial images from UAVs for weed segmentation and mapping, with only field images provided in the training phase. The network learning process is visualized by feature maps at shallow and deep layers. The results show that the mean intersection over union (IoU) values for the crop (maize), weeds, and soil background are 0.744, 0.577, and 0.979, respectively, on the field dataset, and 0.596, 0.407, and 0.875, respectively, on aerial images from a UAV with the same model. To estimate the effect on the use of plant protection agents, we quantify the relationship between herbicide spraying saving rate and grid size (spraying resolution) based on the predicted weed map. The spraying saving rate is up to 90% when the spraying resolution is 1.78 × 1.78 cm². The study shows that the developed deep convolutional neural network can be used to classify weeds from both field and aerial images and delivers satisfactory results.
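Two quantities in this abstract lend themselves to a short sketch: per-class IoU and the spraying saving rate as a function of grid size. The saving-rate definition below (a grid cell is sprayed if it contains any predicted weed pixel, and the saving is the fraction of cells left unsprayed) is an assumption for illustration, since the abstract does not define it; `iou` and `spraying_saving_rate` are hypothetical names.

```python
def iou(pred, truth, cls):
    """Intersection over union for one class on two equally sized label grids."""
    inter = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            inter += (p == cls and t == cls)
            union += (p == cls or t == cls)
    return inter / union if union else float("nan")

def spraying_saving_rate(weed_mask, cell):
    """Fraction of grid cells that need no spraying (assumed definition).

    weed_mask is a 2D grid of 0/1 values; `cell` is the grid cell size in pixels.
    A cell is sprayed if any pixel inside it is predicted as weed.
    """
    rows, cols = len(weed_mask), len(weed_mask[0])
    total = sprayed = 0
    for r0 in range(0, rows, cell):
        for c0 in range(0, cols, cell):
            total += 1
            if any(
                weed_mask[r][c]
                for r in range(r0, min(r0 + cell, rows))
                for c in range(c0, min(c0 + cell, cols))
            ):
                sprayed += 1
    return 1 - sprayed / total

# Toy 4 x 4 weed map with a single weed pixel in the top-left corner.
weed_mask = [
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
savings = {cell: spraying_saving_rate(weed_mask, cell) for cell in (1, 2, 4)}
```

On the toy map, finer grids save more herbicide (0.9375 at one-pixel cells, 0.75 at two-pixel cells, 0.0 when the whole map is one cell), which mirrors the relationship between spraying resolution and saving rate that the abstract quantifies.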

16 Feb 2024

Model-assisted, two-stage forest survey sampling designs provide a means to combine airborne remote sensing data, collected in a sampling mode, with field plot data to increase the precision of national forest inventory estimates, while maintaining important properties of design-based inventories, such as unbiased estimation and quantification of uncertainty. In this study, we present a comprehensive set of model-assisted estimators for domain-level attributes in a two-stage sampling design, including new estimators for densities, and compare the performance of these estimators with standard poststratified estimators. Simulation was used to assess the statistical properties (bias, variability) of these estimators, with both simple random and systematic sampling configurations, and indicated that 1) all estimators were generally unbiased, and 2) the use of lidar in a sampling mode increased the precision of the estimators at all assessed field sampling intensities, with particularly marked increases in precision at lower field sampling intensities. Variance estimators were generally unbiased for model-assisted estimators without poststratification, while those for model-assisted estimators with poststratification were increasingly biased as field sampling intensity decreased. In general, these results indicate that airborne remote sensing, collected in a sampling mode, can be used to increase the efficiency of national forest inventories.
