The University of Pittsburgh

30 Sep 2025

Quantum random access memories (QRAMs) are pivotal for data-intensive quantum algorithms, but existing general-purpose and domain-specific architectures are hampered by a critical bottleneck: a heavy reliance on non-Clifford gates (e.g., T-gates), which are prohibitively expensive to implement fault-tolerantly. To address this challenge, we introduce the Stabilizer-QRAM (Stab-QRAM), a domain-specific architecture tailored for data with an affine Boolean structure (f(x)=Ax+b over F2), a class of functions vital for optimization, time-series analysis, and quantum linear systems algorithms. We demonstrate that the gate interactions required to implement the matrix A form a bipartite graph. By applying König's edge-coloring theorem to this graph, we prove that Stab-QRAM achieves an optimal logical circuit depth of O(logN) for N data items, matching its O(logN) space complexity. Critically, the Stab-QRAM is constructed exclusively from Clifford gates (CNOT and X), resulting in a zero T-count. This design completely circumvents the non-Clifford bottleneck, eliminating the need for costly magic state distillation and making it exceptionally suited for early fault-tolerant quantum computing platforms. We highlight Stab-QRAM's utility as a resource-efficient oracle for applications in discrete dynamical systems, and as a core component in Quantum Linear Systems Algorithms, providing a practical pathway for executing data-intensive tasks on emerging quantum hardware.

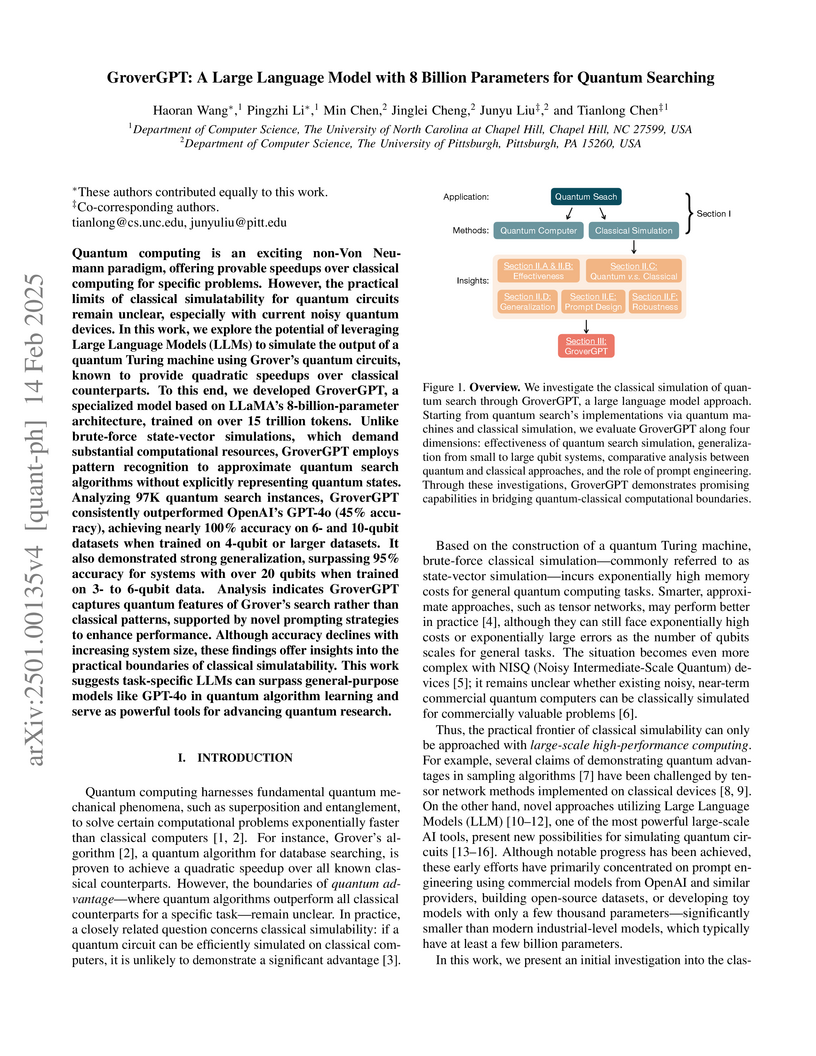

Quantum computing is an exciting non-Von Neumann paradigm, offering provable

speedups over classical computing for specific problems. However, the practical

limits of classical simulatability for quantum circuits remain unclear,

especially with current noisy quantum devices. In this work, we explore the

potential of leveraging Large Language Models (LLMs) to simulate the output of

a quantum Turing machine using Grover's quantum circuits, known to provide

quadratic speedups over classical counterparts. To this end, we developed

GroverGPT, a specialized model based on LLaMA's 8-billion-parameter

architecture, trained on over 15 trillion tokens. Unlike brute-force

state-vector simulations, which demand substantial computational resources,

GroverGPT employs pattern recognition to approximate quantum search algorithms

without explicitly representing quantum states. Analyzing 97K quantum search

instances, GroverGPT consistently outperformed OpenAI's GPT-4o (45\% accuracy),

achieving nearly 100\% accuracy on 6- and 10-qubit datasets when trained on

4-qubit or larger datasets. It also demonstrated strong generalization,

surpassing 95\% accuracy for systems with over 20 qubits when trained on 3- to

6-qubit data. Analysis indicates GroverGPT captures quantum features of

Grover's search rather than classical patterns, supported by novel prompting

strategies to enhance performance. Although accuracy declines with increasing

system size, these findings offer insights into the practical boundaries of

classical simulatability. This work suggests task-specific LLMs can surpass

general-purpose models like GPT-4o in quantum algorithm learning and serve as

powerful tools for advancing quantum research.

A diffusion probabilistic model (DPM) is a generative model renowned for its ability to produce high-quality outputs in tasks such as image and audio generation. However, training DPMs on large, high-dimensional datasets such as high-resolution images or audio incurs significant computational, energy, and hardware costs. In this work, we introduce efficient quantum algorithms for implementing DPMs through various quantum ODE solvers. These algorithms highlight the potential of quantum Carleman linearization for diverse mathematical structures, leveraging state-of-the-art quantum linear system solvers (QLSS) or linear combination of Hamiltonian simulations (LCHS). Specifically, we focus on two approaches: DPM-solver-k which employs exact k-th order derivatives to compute a polynomial approximation of ϵθ(xλ,λ); and UniPC which uses finite difference of ϵθ(xλ,λ) at different points (xsm,λsm) to approximate higher-order derivatives. As such, this work represents one of the most direct and pragmatic applications of quantum algorithms to large-scale machine learning models, presumably taking substantial steps towards demonstrating the practical utility of quantum computing.

GRAPHMOE integrates a self-rethinking mechanism into Mixture-of-Experts (MoE) networks by reconfiguring them into a pseudo-graph architecture. This design enables iterative reasoning, leading to improved performance on commonsense reasoning benchmarks, more balanced expert utilization, and state-of-the-art results even when trained with reduced precision.

Quantum resistance is vital for emerging cryptographic systems as quantum

technologies continue to advance towards large-scale, fault-tolerant quantum

computers. Resistance may be offered by quantum key distribution (QKD), which

provides information-theoretic security using quantum states of photons, but

may be limited by transmission loss at long distances. An alternative approach

uses classical means and is conjectured to be resistant to quantum attacks,

so-called post-quantum cryptography (PQC), but it is yet to be rigorously

proven, and its current implementations are computationally expensive. To

overcome the security and performance challenges present in each, here we

develop hybrid protocols by which QKD and PQC inter-operate within a joint

quantum-classical network. In particular, we consider different hybrid designs

that may offer enhanced speed and/or security over the individual performance

of either approach. Furthermore, we present a method for analyzing the security

of hybrid protocols in key distribution networks. Our hybrid approach paves the

way for joint quantum-classical communication networks, which leverage the

advantages of both QKD and PQC and can be tailored to the requirements of

various practical networks.

Quantum computing, a prominent non-Von Neumann paradigm beyond Moore's law, can offer superpolynomial speedups for certain problems. Yet its advantages in efficiency for tasks like machine learning remain under investigation, and quantum noise complicates resource estimations and classical comparisons. We provide a detailed estimation of space, time, and energy resources for fault-tolerant superconducting devices running the Harrow-Hassidim-Lloyd (HHL) algorithm, a quantum linear system solver relevant to linear algebra and machine learning. Excluding memory and data transfer, possible quantum advantages over the classical conjugate gradient method could emerge at N≈233∼248 or even lower, requiring O(105) physical qubits, O(1012∼1013) Joules, and O(106) seconds under surface code fault-tolerance with three types of magic state distillation (15-1, 116-12, 225-1). Key parameters include condition number, sparsity, and precision κ,s≈O(10∼100), ϵ∼0.01, and physical error 10−5. Our resource estimator adjusts N,κ,s,ϵ, providing a map of quantum-classical boundaries and revealing where a practical quantum advantage may arise. Our work quantitatively determine how advanced a fault-tolerant quantum computer should be to achieve possible, significant benefits on problems related to real-world.

Quantum computing offers theoretical advantages over classical computing for

specific tasks, yet the boundary of practical quantum advantage remains an open

question. To investigate this boundary, it is crucial to understand whether,

and how, classical machines can learn and simulate quantum algorithms. Recent

progress in large language models (LLMs) has demonstrated strong reasoning

abilities, prompting exploration into their potential for this challenge. In

this work, we introduce GroverGPT-2, an LLM-based method for simulating

Grover's algorithm using Chain-of-Thought (CoT) reasoning and quantum-native

tokenization. Building on its predecessor, GroverGPT-2 performs simulation

directly from quantum circuit representations while producing logically

structured and interpretable outputs. Our results show that GroverGPT-2 can

learn and internalize quantum circuit logic through efficient processing of

quantum-native tokens, providing direct evidence that classical models like

LLMs can capture the structure of quantum algorithms. Furthermore, GroverGPT-2

outputs interleave circuit data with natural language, embedding explicit

reasoning into the simulation. This dual capability positions GroverGPT-2 as a

prototype for advancing machine understanding of quantum algorithms and

modeling quantum circuit logic. We also identify an empirical scaling law for

GroverGPT-2 with increasing qubit numbers, suggesting a path toward scalable

classical simulation. These findings open new directions for exploring the

limits of classical simulatability, enhancing quantum education and research,

and laying groundwork for future foundation models in quantum computing.

The Learning with Errors (LWE) problem underlies modern lattice-based cryptography and is assumed to be quantum hard. Recent results show that estimating entanglement entropy is as hard as LWE, creating tension with quantum gravity and AdS/CFT, where entropies are computed by extremal surface areas. This suggests a paradoxical route to solving LWE by building holographic duals and measuring extremal surfaces, seemingly an easy task. Three possible resolutions arise: that AdS/CFT duality is intractable, that the quantum-extended Church-Turing thesis (QECTT) fails, or that LWE is easier than believed. We develop and analyze a fourth resolution: that estimating surface areas with the precision required for entropy is itself computationally hard. We construct two holographic quantum algorithms to formalize this. For entropy differences of order N, we show that measuring Ryu-Takayanagi geodesic lengths via heavy-field two-point functions needs exponentially many measurements in N, even when the boundary state is efficiently preparable. For order one corrections, we show that reconstructing the bulk covariance matrix and extracting entropy requires exponential time in N. Although these tasks are computationally intractable, we compare their efficiency with the Block Korkine-Zolotarev lattice reduction algorithm for LWE. Our results reconcile the tension with QECTT, showing that holographic entropy remains consistent with quantum computational limits without requiring an intractable holographic dictionary, and provide new insights into the quantum cryptanalysis of lattice-based cryptography.

02 Oct 2025

Chalcogenide-based optical phase change materials (OPCMs) exhibit a large contrast in refractive index when reversibly switched between their stable amorphous and crystalline states. OPCMs have rapidly gained attention due to their versatility as nonvolatile amplitude or phase modulators in various photonic devices. However, open challenges remain, such as achieving reliable response and transparency spanning into the visible spectrum, a combination of properties in which current broadband OPCMs (e.g., Ge2Sb2Se4Te1, Sb2Se3, or Sb2S3) fall short. Discovering novel materials or engineering existing ones is, therefore, crucial in extending the application scope of OPCMs. Here, we use magnetron co-sputtering to study the effects of Si doping into Sb2Se3. We employ ellipsometry, X-ray diffraction, Raman spectroscopy, and scanning and transmission electron microscopy to investigate the effects of Si doping on the optical properties and crystal structure and compare these results with those from first principles calculations. Moreover, we study the crystallization and melt-quenching of thin films via nano-differential scanning calorimetry (NanoDSC). Our experiments demonstrate that 20% Si doping increases the transparency window in both states, specifically to 800 nm (1.55 eV) in the amorphous phase, while reducing power consumption by lowering the melting temperature. However, this reduction comes at the cost of reducing the refractive index contrast between states and slowing the kinetics of the phase transition.

As science transitions from the age of lone geniuses to an era of

collaborative teams, the question of whether large teams can sustain the

creativity of individuals and continue driving innovation has become

increasingly important. Our previous research first revealed a negative

relationship between team size and the Disruption Index-a network-based metric

of innovation-by analyzing 65 million projects across papers, patents, and

software over half a century. This work has sparked lively debates within the

scientific community about the robustness of the Disruption Index in capturing

the impact of team size on innovation. Here, we present additional evidence

that the negative link between team size and disruption holds, even when

accounting for factors such as reference length, citation impact, and

historical time. We further show how a narrow 5-year window for measuring

disruption can misrepresent this relationship as positive, underestimating the

long-term disruptive potential of small teams. Like "sleeping beauties," small

teams need a decade or more to see their transformative contributions to

science.

Scholars are often categorized into two types: hedgehogs (specialists), who focus on working within a specific research field, and foxes (generalists), who actively contribute to a variety of fields. Despite the familiar anecdotes and popularity of this distinction, its empirical foundation has remained largely unexamined. We examine whether the research style of being a fox or a hedgehog is a stable personal trait or an evolving strategy over a scientist's career. Analyzing 2.3 million scholars' publication records over a century, we find that research styles exhibit remarkable stability. Notably, the proportion of fox-like scientists has dramatically declined in the past century, a phenomenon we term "the death of Renaissance scientists." This decline is particularly significant as science shifts toward team collaboration. Teams of foxes consistently outperform teams of hedgehogs in generating new ideas and directions, as confirmed by two emerging innovation metrics for papers: atypicality and disruption. Our research is the first to quantify the process and consequences of the decline of Renaissance scientists. By doing so, we establish a universal link between research styles, demographic shifts, and innovative output.

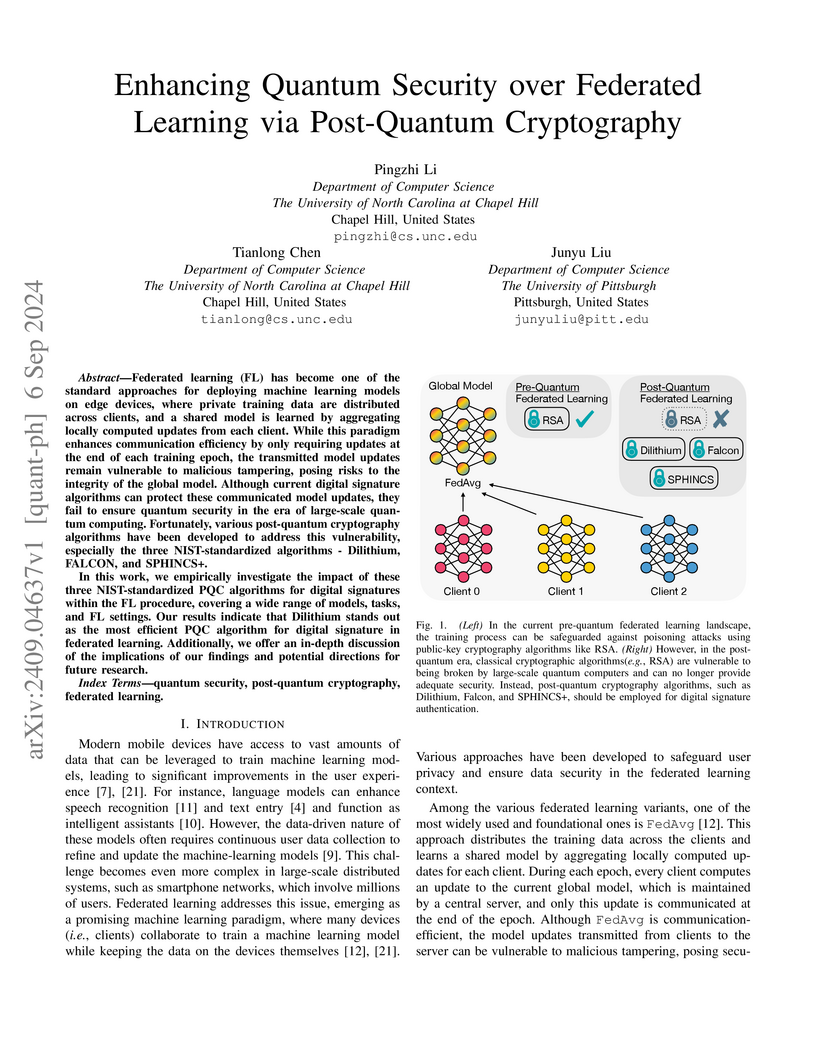

Federated learning (FL) has become one of the standard approaches for deploying machine learning models on edge devices, where private training data are distributed across clients, and a shared model is learned by aggregating locally computed updates from each client. While this paradigm enhances communication efficiency by only requiring updates at the end of each training epoch, the transmitted model updates remain vulnerable to malicious tampering, posing risks to the integrity of the global model. Although current digital signature algorithms can protect these communicated model updates, they fail to ensure quantum security in the era of large-scale quantum computing. Fortunately, various post-quantum cryptography algorithms have been developed to address this vulnerability, especially the three NIST-standardized algorithms - Dilithium, FALCON, and SPHINCS+. In this work, we empirically investigate the impact of these three NIST-standardized PQC algorithms for digital signatures within the FL procedure, covering a wide range of models, tasks, and FL settings. Our results indicate that Dilithium stands out as the most efficient PQC algorithm for digital signature in federated learning. Additionally, we offer an in-depth discussion of the implications of our findings and potential directions for future research.

06 Oct 2023

The past half-century has seen a dramatic increase in the scale and

complexity of scientific research, to which researchers have responded by

dedicating more time to education and training, narrowing their areas of

specialization, and collaborating in larger teams. A widely held view is that

such collaborations, by fostering specialization and encouraging novel

combinations of ideas, accelerate scientific innovation. However, recent

research challenges this notion, suggesting that small teams and solo

researchers consistently disrupt science and technology with fresh ideas and

opportunities, while larger teams tend to refine existing ones (Wu et al.

2019). This study, along with other relevant research, has garnered attention

for challenging the zeitgeist of our time that views collaboration as the

inevitable path forward in scientific and technological advancement. Yet, few

studies have re-evaluated its central finding: the innovative advantage of

small teams over large ones, using alternative measures. We explore innovation

by identifying papers proposing new scientific concepts and patents introducing

new technology codes. We analyzed 88 million research articles spanning from

1800 to 2020 and 7 million patent applications from 1976 to 2020 worldwide. Our

findings confirm that while large teams contribute to development, small teams

play a critical role in innovation by propelling fresh, original ideas in

science and technology.

Quantum machine learning, which involves running machine learning algorithms on quantum devices, may be one of the most significant flagship applications for these devices. Unlike its classical counterparts, the role of data in quantum machine learning has not been fully understood. In this work, we quantify the performances of quantum machine learning in the landscape of quantum data. Provided that the encoding of quantum data is sufficiently random, the performance, we find that the training efficiency and generalization capabilities in quantum machine learning will be exponentially suppressed with the increase in the number of qubits, which we call "the curse of random quantum data". Our findings apply to both the quantum kernel method and the large-width limit of quantum neural networks. Conversely, we highlight that through meticulous design of quantum datasets, it is possible to avoid these curses, thereby achieving efficient convergence and robust generalization. Our conclusions are corroborated by extensive numerical simulations.

13 Feb 2024

Researchers, government bodies, and organizations have been repeatedly calling for a shift in the responsible AI community from general principles to tangible and operationalizable practices in mitigating the potential sociotechnical harms of AI. Frameworks like the NIST AI RMF embody an emerging consensus on recommended practices in operationalizing sociotechnical harm mitigation. However, private sector organizations currently lag far behind this emerging consensus. Implementation is sporadic and selective at best. At worst, it is ineffective and can risk serving as a misleading veneer of trustworthy processes, providing an appearance of legitimacy to substantively harmful practices. In this paper, we provide a foundation for a framework for evaluating where organizations sit relative to the emerging consensus on sociotechnical harm mitigation best practices: a flexible maturity model based on the NIST AI RMF.

The recognition of individual contributions is central to the scientific

reward system, yet coauthored papers often obscure who did what. Traditional

proxies like author order assume a simplistic decline in contribution, while

emerging practices such as self-reported roles are biased and limited in scope.

We introduce a large-scale, behavior-based approach to identifying individual

contributions in scientific papers. Using author-specific LaTeX macros as

writing signatures, we analyze over 730,000 arXiv papers (1991-2023), covering

over half a million scientists. Validated against self-reports, author order,

disciplinary norms, and Overleaf records, our method reliably infers

author-level writing activity. Section-level traces reveal a hidden division of

labor: first authors focus on technical sections (e.g., Methods, Results),

while last authors primarily contribute to conceptual sections (e.g.,

Introduction, Discussion). Our findings offer empirical evidence of labor

specialization at scale and new tools to improve credit allocation in

collaborative research.

Recognition of individual contributions is fundamental to the scientific

reward system, yet coauthored papers obscure who did what. Traditional

proxies-author order and career stage-reinforce biases, while contribution

statements remain self-reported and limited to select journals. We construct

the first large-scale dataset on writing contributions by analyzing

author-specific macros in LaTeX files from 1.6 million papers (1991-2023) by 2

million scientists. Validation against self-reported statements (precision =

0.87), author order patterns, field-specific norms, and Overleaf records

(Spearman's rho = 0.6, p < 0.05) confirms the reliability of the created data.

Using explicit section information, we reveal a hidden division of labor within

scientific teams: some authors primarily contribute to conceptual sections

(e.g., Introduction and Discussion), while others focus on technical sections

(e.g., Methods and Experiments). These findings provide the first large-scale

evidence of implicit labor division in scientific teams, challenging

conventional authorship practices and informing institutional policies on

credit allocation.

Optimization techniques in deep learning are predominantly led by first-order gradient methodologies, such as SGD. However, neural network training can greatly benefit from the rapid convergence characteristics of second-order optimization. Newton's GD stands out in this category, by rescaling the gradient using the inverse Hessian. Nevertheless, one of its major bottlenecks is matrix inversion, which is notably time-consuming in O(N3) time with weak scalability.

Matrix inversion can be translated into solving a series of linear equations. Given that quantum linear solver algorithms (QLSAs), leveraging the principles of quantum superposition and entanglement, can operate within a polylog(N) time frame, they present a promising approach with exponential acceleration. Specifically, one of the most recent QLSAs demonstrates a complexity scaling of O(d⋅κlog(N⋅κ/ϵ)), depending on: {size~N, condition number~κ, error tolerance~ϵ, quantum oracle sparsity~d} of the matrix. However, this also implies that their potential exponential advantage may be hindered by certain properties (i.e. κ and d).

We propose Q-Newton, a hybrid quantum-classical scheduler for accelerating neural network training with Newton's GD. Q-Newton utilizes a streamlined scheduling module that coordinates between quantum and classical linear solvers, by estimating & reducing κ and constructing d for the quantum solver.

Our evaluation showcases the potential for Q-Newton to significantly reduce the total training time compared to commonly used optimizers like SGD. We hypothesize a future scenario where the gate time of quantum machines is reduced, possibly realized by attoseconds physics. Our evaluation establishes an ambitious and promising target for the evolution of quantum computing.

Theories of innovation emphasize the role of social networks and teams as

facilitators of breakthrough discoveries. Around the world, scientists and

inventors today are more plentiful and interconnected than ever before. But

while there are more people making discoveries, and more ideas that can be

reconfigured in novel ways, research suggests that new ideas are getting harder

to find-contradicting recombinant growth theory. In this paper, we shed new

light on this apparent puzzle. Analyzing 20 million research articles and 4

million patent applications across the globe over the past half-century, we

begin by documenting the rise of remote collaboration across cities,

underlining the growing interconnectedness of scientists and inventors

globally. We further show that across all fields, periods, and team sizes,

researchers in these remote teams are consistently less likely to make

breakthrough discoveries relative to their onsite counterparts. Creating a

dataset that allows us to explore the division of labor in knowledge production

within teams and across space, we find that among distributed team members,

collaboration centers on late-stage, technical tasks involving more codified

knowledge. Yet they are less likely to join forces in conceptual tasks-such as

conceiving new ideas and designing research-when knowledge is tacit. We

conclude that despite striking improvements in digital technology in recent

years, remote teams are less likely to integrate the knowledge of their members

to produce new, disruptive ideas.

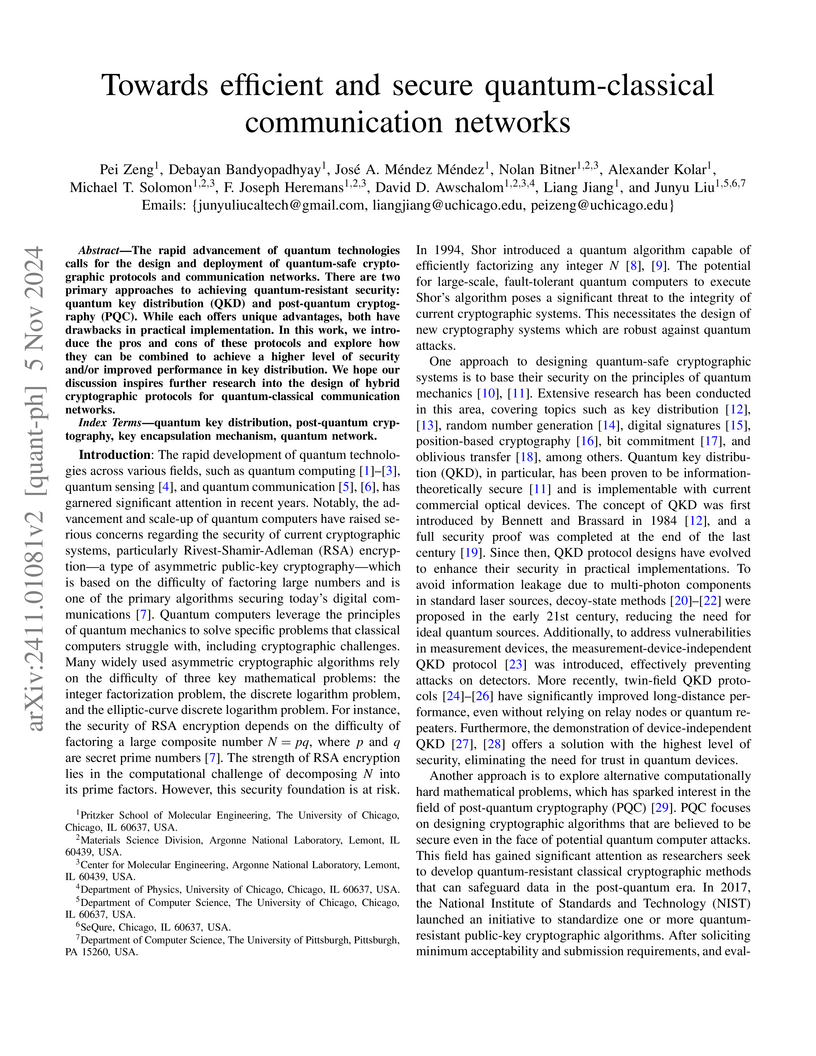

The rapid advancement of quantum technologies calls for the design and deployment of quantum-safe cryptographic protocols and communication networks. There are two primary approaches to achieving quantum-resistant security: quantum key distribution (QKD) and post-quantum cryptography (PQC). While each offers unique advantages, both have drawbacks in practical implementation. In this work, we introduce the pros and cons of these protocols and explore how they can be combined to achieve a higher level of security and/or improved performance in key distribution. We hope our discussion inspires further research into the design of hybrid cryptographic protocols for quantum-classical communication networks.

There are no more papers matching your filters at the moment.