University Hospital of Strasbourg

fine-CLIP enhances zero-shot surgical action recognition by integrating hierarchical prompt modeling with object-centric visual features, outperforming existing approaches in recognizing complex instrument-verb-target triplets. The method achieves higher F1 scores and mean Average Precision (mAP) in challenging unseen target and unseen instrument-verb scenarios.

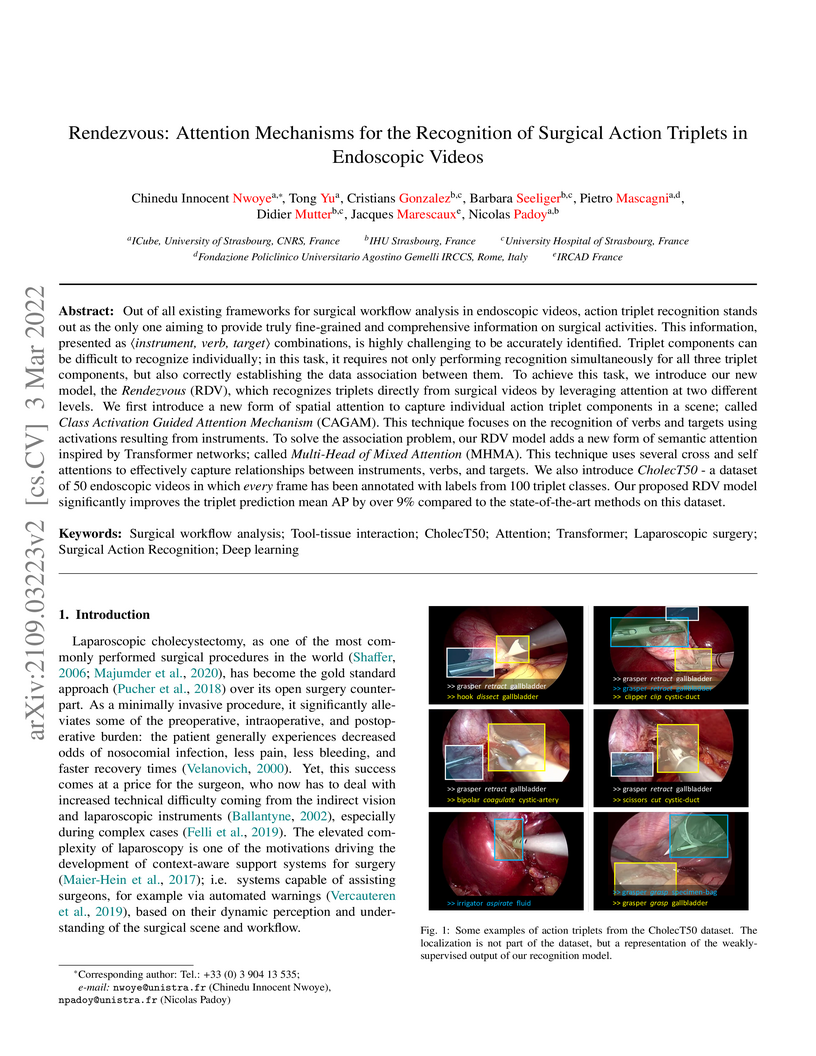

The paper presents Rendezvous (RDV), a novel deep learning model, and the CholecT50 dataset for fine-grained surgical action triplet recognition (〈instrument, verb, target〉) in endoscopic videos. RDV achieves a 29.9% AP_IVT, a 9.9% improvement over the previous state-of-the-art, by employing specialized attention mechanisms like the Class Activation Guided Attention Mechanism (CAGAM) and Multi-Head of Mixed Attention (MHMA) to better understand instrument-centric actions and component associations.

Surgical action triplets describe instrument-tissue interactions as (instrument, verb, target) combinations, thereby supporting a detailed analysis of surgical scene activities and workflow. This work focuses on surgical action triplet detection, which is challenging but more precise than the traditional triplet recognition task as it consists of joint (1) localization of surgical instruments and (2) recognition of the surgical action triplet associated with every localized instrument. Triplet detection is highly complex due to the lack of spatial triplet annotation. We analyze how the amount of instrument spatial annotations affects triplet detection and observe that accurate instrument localization does not guarantee better triplet detection due to the risk of erroneous associations with the verbs and targets. To solve the two tasks, we propose MCIT-IG, a two-stage network, that stands for Multi-Class Instrument-aware Transformer-Interaction Graph. The MCIT stage of our network models per class embedding of the targets as additional features to reduce the risk of misassociating triplets. Furthermore, the IG stage constructs a bipartite dynamic graph to model the interaction between the instruments and targets, cast as the verbs. We utilize a mixed-supervised learning strategy that combines weak target presence labels for MCIT and pseudo triplet labels for IG to train our network. We observed that complementing minimal instrument spatial annotations with target embeddings results in better triplet detection. We evaluate our model on the CholecT50 dataset and show improved performance on both instrument localization and triplet detection, topping the leaderboard of the CholecTriplet challenge in MICCAI 2022.

CNRS

CNRS University College London

University College London Shanghai Jiao Tong University

Shanghai Jiao Tong University Southern University of Science and TechnologyUniversity of AberdeenGerman Cancer Research Center (DKFZ)University of MinhoTechnical University MunichIndian Institute of Technology KharagpurUniversity of StrasbourgNational Center for Tumor Diseases (NCT)IHU StrasbourgMuroran Institute of TechnologyUniversity Hospital of StrasbourgIntuitive SurgicalNepal Applied Mathematics and Informatics Institute for research (NAAMII)Niigata University of Health and WelfareFondazione Policlinico Universitario Agostino Gemelli IRCCSIPCARIWOlink GmbHRedev Technology Ltd

Southern University of Science and TechnologyUniversity of AberdeenGerman Cancer Research Center (DKFZ)University of MinhoTechnical University MunichIndian Institute of Technology KharagpurUniversity of StrasbourgNational Center for Tumor Diseases (NCT)IHU StrasbourgMuroran Institute of TechnologyUniversity Hospital of StrasbourgIntuitive SurgicalNepal Applied Mathematics and Informatics Institute for research (NAAMII)Niigata University of Health and WelfareFondazione Policlinico Universitario Agostino Gemelli IRCCSIPCARIWOlink GmbHRedev Technology LtdFormalizing surgical activities as triplets of the used instruments, actions performed, and target anatomies is becoming a gold standard approach for surgical activity modeling. The benefit is that this formalization helps to obtain a more detailed understanding of tool-tissue interaction which can be used to develop better Artificial Intelligence assistance for image-guided surgery. Earlier efforts and the CholecTriplet challenge introduced in 2021 have put together techniques aimed at recognizing these triplets from surgical footage. Estimating also the spatial locations of the triplets would offer a more precise intraoperative context-aware decision support for computer-assisted intervention. This paper presents the CholecTriplet2022 challenge, which extends surgical action triplet modeling from recognition to detection. It includes weakly-supervised bounding box localization of every visible surgical instrument (or tool), as the key actors, and the modeling of each tool-activity in the form of triplet. The paper describes a baseline method and 10 new deep learning algorithms presented at the challenge to solve the task. It also provides thorough methodological comparisons of the methods, an in-depth analysis of the obtained results across multiple metrics, visual and procedural challenges; their significance, and useful insights for future research directions and applications in surgery.

Vision algorithms capable of interpreting scenes from a real-time video

stream are necessary for computer-assisted surgery systems to achieve

context-aware behavior. In laparoscopic procedures one particular algorithm

needed for such systems is the identification of surgical phases, for which the

current state of the art is a model based on a CNN-LSTM. A number of previous

works using models of this kind have trained them in a fully supervised manner,

requiring a fully annotated dataset. Instead, our work confronts the problem of

learning surgical phase recognition in scenarios presenting scarce amounts of

annotated data (under 25% of all available video recordings). We propose a

teacher/student type of approach, where a strong predictor called the teacher,

trained beforehand on a small dataset of ground truth-annotated videos,

generates synthetic annotations for a larger dataset, which another model - the

student - learns from. In our case, the teacher features a novel CNN-biLSTM-CRF

architecture, designed for offline inference only. The student, on the other

hand, is a CNN-LSTM capable of making real-time predictions. Results for

various amounts of manually annotated videos demonstrate the superiority of the

new CNN-biLSTM-CRF predictor as well as improved performance from the CNN-LSTM

trained using synthetic labels generated for unannotated videos. For both

offline and online surgical phase recognition with very few annotated

recordings available, this new teacher/student strategy provides a valuable

performance improvement by efficiently leveraging the unannotated data.

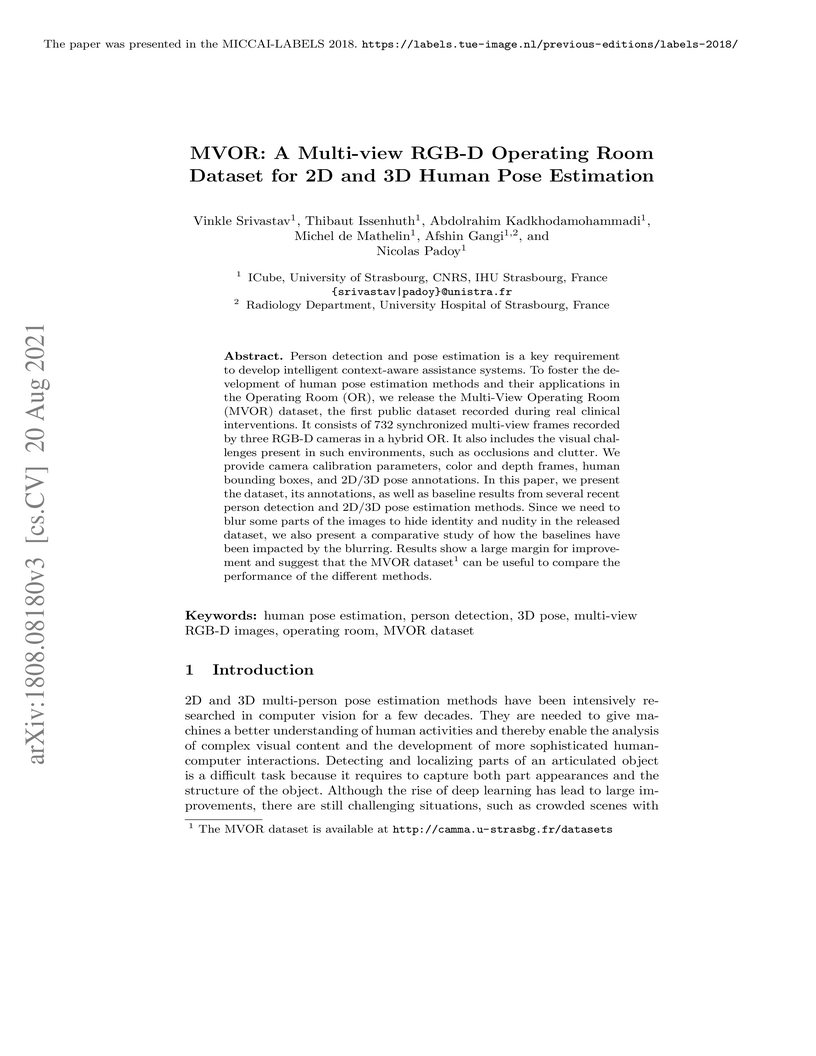

Person detection and pose estimation is a key requirement to develop intelligent context-aware assistance systems. To foster the development of human pose estimation methods and their applications in the Operating Room (OR), we release the Multi-View Operating Room (MVOR) dataset, the first public dataset recorded during real clinical interventions. It consists of 732 synchronized multi-view frames recorded by three RGB-D cameras in a hybrid OR. It also includes the visual challenges present in such environments, such as occlusions and clutter. We provide camera calibration parameters, color and depth frames, human bounding boxes, and 2D/3D pose annotations. In this paper, we present the dataset, its annotations, as well as baseline results from several recent person detection and 2D/3D pose estimation methods. Since we need to blur some parts of the images to hide identity and nudity in the released dataset, we also present a comparative study of how the baselines have been impacted by the blurring. Results show a large margin for improvement and suggest that the MVOR dataset can be useful to compare the performance of the different methods.

Self-MVA is a self-supervised approach for multi-view person association that operates without requiring camera calibration parameters or manually annotated identity labels. It achieves state-of-the-art performance across diverse uncalibrated environments, including those with similar-looking individuals like operating rooms.

The Segment Anything Model (SAM) has revolutionized open-set interactive

image segmentation, inspiring numerous adapters for the medical domain.

However, SAM primarily relies on sparse prompts such as point or bounding box,

which may be suboptimal for fine-grained instance segmentation, particularly in

endoscopic imagery, where precise localization is critical and existing prompts

struggle to capture object boundaries effectively. To address this, we

introduce S4M (Segment Anything with 4 Extreme Points), which augments SAM by

leveraging extreme points -- the top-, bottom-, left-, and right-most points of

an instance -- prompts. These points are intuitive to identify and provide a

faster, structured alternative to box prompts. However, a na\"ive use of

extreme points degrades performance, due to SAM's inability to interpret their

semantic roles. To resolve this, we introduce dedicated learnable embeddings,

enabling the model to distinguish extreme points from generic free-form points

and better reason about their spatial relationships. We further propose an

auxiliary training task through the Canvas module, which operates solely on

prompts -- without vision input -- to predict a coarse instance mask. This

encourages the model to internalize the relationship between extreme points and

mask distributions, leading to more robust segmentation. S4M outperforms other

SAM-based approaches on three endoscopic surgical datasets, demonstrating its

effectiveness in complex scenarios. Finally, we validate our approach through a

human annotation study on surgical endoscopic videos, confirming that extreme

points are faster to acquire than bounding boxes.

The tool presence detection challenge at M2CAI 2016 consists of identifying the presence/absence of seven surgical tools in the images of cholecystectomy videos. Here, we propose to use deep architectures that are based on our previous work where we presented several architectures to perform multiple recognition tasks on laparoscopic videos. In this technical report, we present the tool presence detection results using two architectures: (1) a single-task architecture designed to perform solely the tool presence detection task and (2) a multi-task architecture designed to perform jointly phase recognition and tool presence detection. The results show that the multi-task network only slightly improves the tool presence detection results. In constrast, a significant improvement is obtained when there are more data available to train the networks. This significant improvement can be regarded as a call for action for other institutions to start working toward publishing more datasets into the community, so that better models could be generated to perform the task.

Surgical tool localization is an essential task for the automatic analysis of endoscopic videos. In the literature, existing methods for tool localization, tracking and segmentation require training data that is fully annotated, thereby limiting the size of the datasets that can be used and the generalization of the approaches. In this work, we propose to circumvent the lack of annotated data with weak supervision. We propose a deep architecture, trained solely on image level annotations, that can be used for both tool presence detection and localization in surgical videos. Our architecture relies on a fully convolutional neural network, trained end-to-end, enabling us to localize surgical tools without explicit spatial annotations. We demonstrate the benefits of our approach on a large public dataset, Cholec80, which is fully annotated with binary tool presence information and of which 5 videos have been fully annotated with bounding boxes and tool centers for the evaluation.

Understanding the workflow of surgical procedures in complex operating rooms

requires a deep understanding of the interactions between clinicians and their

environment. Surgical activity recognition (SAR) is a key computer vision task

that detects activities or phases from multi-view camera recordings. Existing

SAR models often fail to account for fine-grained clinician movements and

multi-view knowledge, or they require calibrated multi-view camera setups and

advanced point-cloud processing to obtain better results. In this work, we

propose a novel calibration-free multi-view multi-modal pretraining framework

called Multiview Pretraining for Video-Pose Surgical Activity Recognition

PreViPS, which aligns 2D pose and vision embeddings across camera views. Our

model follows CLIP-style dual-encoder architecture: one encoder processes

visual features, while the other encodes human pose embeddings. To handle the

continuous 2D human pose coordinates, we introduce a tokenized discrete

representation to convert the continuous 2D pose coordinates into discrete pose

embeddings, thereby enabling efficient integration within the dual-encoder

framework. To bridge the gap between these two modalities, we propose several

pretraining objectives using cross- and in-modality geometric constraints

within the embedding space and incorporating masked pose token prediction

strategy to enhance representation learning. Extensive experiments and ablation

studies demonstrate improvements over the strong baselines, while

data-efficiency experiments on two distinct operating room datasets further

highlight the effectiveness of our approach. We highlight the benefits of our

approach for surgical activity recognition in both multi-view and single-view

settings, showcasing its practical applicability in complex surgical

environments. Code will be made available at:

this https URL

Surgical future prediction, driven by real-time AI analysis of surgical video, is critical for operating room safety and efficiency. It provides actionable insights into upcoming events, their timing, and risks-enabling better resource allocation, timely instrument readiness, and early warnings for complications (e.g., bleeding, bile duct injury). Despite this need, current surgical AI research focuses on understanding what is happening rather than predicting future events. Existing methods target specific tasks in isolation, lacking unified approaches that span both short-term (action triplets, events) and long-term horizons (remaining surgery duration, phase transitions). These methods rely on coarse-grained supervision while fine-grained surgical action triplets and steps remain underexplored. Furthermore, methods based only on future feature prediction struggle to generalize across different surgical contexts and procedures. We address these limits by reframing surgical future prediction as state-change learning. Rather than forecasting raw observations, our approach classifies state transitions between current and future timesteps. We introduce SurgFUTR, implementing this through a teacher-student architecture. Video clips are compressed into state representations via Sinkhorn-Knopp clustering; the teacher network learns from both current and future clips, while the student network predicts future states from current videos alone, guided by our Action Dynamics (ActDyn) module. We establish SFPBench with five prediction tasks spanning short-term (triplets, events) and long-term (remaining surgery duration, phase and step transitions) horizons. Experiments across four datasets and three procedures show consistent improvements. Cross-procedure transfer validates generalizability.

Human pose estimation (HPE) is a key building block for developing AI-based

context-aware systems inside the operating room (OR). The 24/7 use of images

coming from cameras mounted on the OR ceiling can however raise concerns for

privacy, even in the case of depth images captured by RGB-D sensors. Being able

to solely use low-resolution privacy-preserving images would address these

concerns and help scale up the computer-assisted approaches that rely on such

data to a larger number of ORs. In this paper, we introduce the problem of HPE

on low-resolution depth images and propose an end-to-end solution that

integrates a multi-scale super-resolution network with a 2D human pose

estimation network. By exploiting intermediate feature-maps generated at

different super-resolution, our approach achieves body pose results on

low-resolution images (of size 64x48) that are on par with those of an approach

trained and tested on full resolution images (of size 640x480).

Searching through large volumes of medical data to retrieve relevant

information is a challenging yet crucial task for clinical care. However the

primitive and most common approach to retrieval, involving text in the form of

keywords, is severely limited when dealing with complex media formats.

Content-based retrieval offers a way to overcome this limitation, by using rich

media as the query itself. Surgical video-to-video retrieval in particular is a

new and largely unexplored research problem with high clinical value,

especially in the real-time case: using real-time video hashing, search can be

achieved directly inside of the operating room. Indeed, the process of hashing

converts large data entries into compact binary arrays or hashes, enabling

large-scale search operations at a very fast rate. However, due to fluctuations

over the course of a video, not all bits in a given hash are equally reliable.

In this work, we propose a method capable of mitigating this uncertainty while

maintaining a light computational footprint. We present superior retrieval

results (3-4 % top 10 mean average precision) on a multi-task evaluation

protocol for surgery, using cholecystectomy phases, bypass phases, and coming

from an entirely new dataset introduced here, critical events across six

different surgery types. Success on this multi-task benchmark shows the

generalizability of our approach for surgical video retrieval.

Accurate surgery duration estimation is necessary for optimal OR planning,

which plays an important role in patient comfort and safety as well as resource

optimization. It is, however, challenging to preoperatively predict surgery

duration since it varies significantly depending on the patient condition,

surgeon skills, and intraoperative situation. In this paper, we propose a deep

learning pipeline, referred to as RSDNet, which automatically estimates the

remaining surgery duration (RSD) intraoperatively by using only visual

information from laparoscopic videos. Previous state-of-the-art approaches for

RSD prediction are dependent on manual annotation, whose generation requires

expensive expert knowledge and is time-consuming, especially considering the

numerous types of surgeries performed in a hospital and the large number of

laparoscopic videos available. A crucial feature of RSDNet is that it does not

depend on any manual annotation during training, making it easily scalable to

many kinds of surgeries. The generalizability of our approach is demonstrated

by testing the pipeline on two large datasets containing different types of

surgeries: 120 cholecystectomy and 170 gastric bypass videos. The experimental

results also show that the proposed network significantly outperforms a

traditional method of estimating RSD without utilizing manual annotation.

Further, this work provides a deeper insight into the deep learning network

through visualization and interpretation of the features that are automatically

learned.

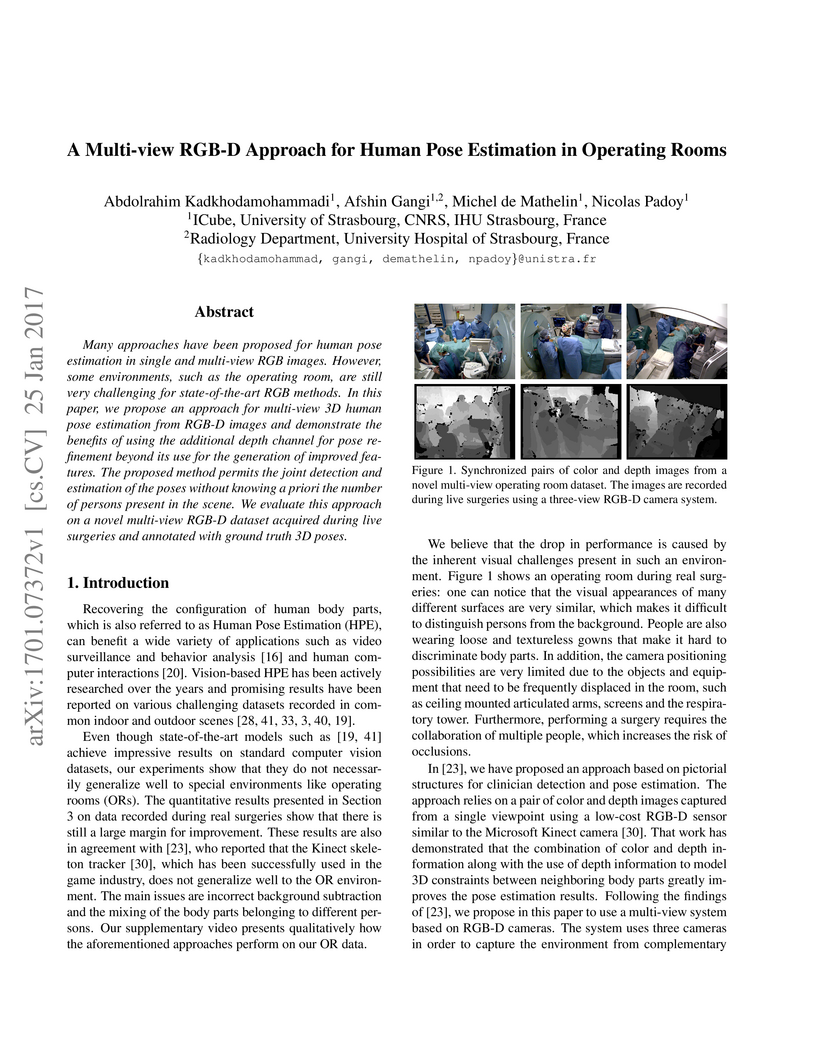

Many approaches have been proposed for human pose estimation in single and multi-view RGB images. However, some environments, such as the operating room, are still very challenging for state-of-the-art RGB methods. In this paper, we propose an approach for multi-view 3D human pose estimation from RGB-D images and demonstrate the benefits of using the additional depth channel for pose refinement beyond its use for the generation of improved features. The proposed method permits the joint detection and estimation of the poses without knowing a priori the number of persons present in the scene. We evaluate this approach on a novel multi-view RGB-D dataset acquired during live surgeries and annotated with ground truth 3D poses.

Real-time algorithms for automatically recognizing surgical phases are needed

to develop systems that can provide assistance to surgeons, enable better

management of operating room (OR) resources and consequently improve safety

within the OR. State-of-the-art surgical phase recognition algorithms using

laparoscopic videos are based on fully supervised training. This limits their

potential for widespread application, since creation of manual annotations is

an expensive process considering the numerous types of existing surgeries and

the vast amount of laparoscopic videos available. In this work, we propose a

new self-supervised pre-training approach based on the prediction of remaining

surgery duration (RSD) from laparoscopic videos. The RSD prediction task is

used to pre-train a convolutional neural network (CNN) and long short-term

memory (LSTM) network in an end-to-end manner. Our proposed approach utilizes

all available data and reduces the reliance on annotated data, thereby

facilitating the scaling up of surgical phase recognition algorithms to

different kinds of surgeries. Additionally, we present EndoN2N, an end-to-end

trained CNN-LSTM model for surgical phase recognition and evaluate the

performance of our approach on a dataset of 120 Cholecystectomy laparoscopic

videos (Cholec120). This work also presents the first systematic study of

self-supervised pre-training approaches to understand the amount of annotations

required for surgical phase recognition. Interestingly, the proposed RSD

pre-training approach leads to performance improvement even when all the

training data is manually annotated and outperforms the single pre-training

approach for surgical phase recognition presently published in the literature.

It is also observed that end-to-end training of CNN-LSTM networks boosts

surgical phase recognition performance.

Automatic recognition of fine-grained surgical activities, called steps, is a

challenging but crucial task for intelligent intra-operative computer

assistance. The development of current vision-based activity recognition

methods relies heavily on a high volume of manually annotated data. This data

is difficult and time-consuming to generate and requires domain-specific

knowledge. In this work, we propose to use coarser and easier-to-annotate

activity labels, namely phases, as weak supervision to learn step recognition

with fewer step annotated videos. We introduce a step-phase dependency loss to

exploit the weak supervision signal. We then employ a Single-Stage Temporal

Convolutional Network (SS-TCN) with a ResNet-50 backbone, trained in an

end-to-end fashion from weakly annotated videos, for temporal activity

segmentation and recognition. We extensively evaluate and show the

effectiveness of the proposed method on a large video dataset consisting of 40

laparoscopic gastric bypass procedures and the public benchmark CATARACTS

containing 50 cataract surgeries.

Purpose: Automatic segmentation and classification of surgical activity is

crucial for providing advanced support in computer-assisted interventions and

autonomous functionalities in robot-assisted surgeries. Prior works have

focused on recognizing either coarse activities, such as phases, or

fine-grained activities, such as gestures. This work aims at jointly

recognizing two complementary levels of granularity directly from videos,

namely phases and steps. Method: We introduce two correlated surgical

activities, phases and steps, for the laparoscopic gastric bypass procedure. We

propose a Multi-task Multi-Stage Temporal Convolutional Network (MTMS-TCN)

along with a multi-task Convolutional Neural Network (CNN) training setup to

jointly predict the phases and steps and benefit from their complementarity to

better evaluate the execution of the procedure. We evaluate the proposed method

on a large video dataset consisting of 40 surgical procedures (Bypass40).

Results: We present experimental results from several baseline models for both

phase and step recognition on the Bypass40 dataset. The proposed MTMS-TCN

method outperforms in both phase and step recognition by 1-2% in accuracy,

precision and recall, compared to single-task methods. Furthermore, for step

recognition, MTMS-TCN achieves a superior performance of 3-6% compared to LSTM

based models in accuracy, precision, and recall. Conclusion: In this work, we

present a multi-task multi-stage temporal convolutional network for surgical

activity recognition, which shows improved results compared to single-task

models on the Bypass40 gastric bypass dataset with multi-level annotations. The

proposed method shows that the joint modeling of phases and steps is beneficial

to improve the overall recognition of each type of activity.

Objective: To evaluate the accuracy, computational cost and portability of a

new Natural Language Processing (NLP) method for extracting medication

information from clinical narratives. Materials and Methods: We propose an

original transformer-based architecture for the extraction of entities and

their relations pertaining to patients' medication regimen. First, we used this

approach to train and evaluate a model on French clinical notes, using a newly

annotated corpus from H\^opitaux Universitaires de Strasbourg. Second, the

portability of the approach was assessed by conducting an evaluation on

clinical documents in English from the 2018 n2c2 shared task. Information

extraction accuracy and computational cost were assessed by comparison with an

available method using transformers. Results: The proposed architecture

achieves on the task of relation extraction itself performance that are

competitive with the state-of-the-art on both French and English (F-measures

0.82 and 0.96 vs 0.81 and 0.95), but reduce the computational cost by 10.

End-to-end (Named Entity recognition and Relation Extraction) F1 performance is

0.69 and 0.82 for French and English corpus. Discussion: While an existing

system developed for English notes was deployed in a French hospital setting

with reasonable effort, we found that an alternative architecture offered

end-to-end drug information extraction with comparable extraction performance

and lower computational impact for both French and English clinical text

processing, respectively. Conclusion: The proposed architecture can be used to

extract medication information from clinical text with high performance and low

computational cost and consequently suits with usually limited hospital IT

resources

There are no more papers matching your filters at the moment.