University of Bath

Reversible Deep Equilibrium Models (RevDEQs) enable exact gradient computation and constant memory for implicit models by employing an algebraically reversible fixed-point solver, resolving the training instability and high computational cost of prior DEQ formulations. This approach achieved a 23.2 test perplexity on Wikitext-103 with 12 function evaluations and 94 million parameters, and 94.4% accuracy on CIFAR-10, outperforming comparable explicit and implicit models with fewer parameters and significantly reduced iterations.

Wav2Lip introduces a method for speech-driven lip synchronization, generating accurate lip movements for arbitrary identities from any speech input in unconstrained "in-the-wild" videos. The system achieves superior lip-sync accuracy with lower LSE-D and higher LSE-C scores, and was preferred in human evaluations over existing methods more than 90% of the time.

This work identifies anisotropy in the singular value spectra of parameters, activations, and gradients as the fundamental barrier to low-bit training of large language models (LLMs). These spectra are dominated by a small fraction of large singular values, inducing wide numerical ranges that cause quantization bias and severe spectral distortion, ultimately degrading training performance. This work presents Metis, a spectral-domain quantization framework that partitions anisotropic spectra into narrower sub-distributions for independent quantization, thereby reducing errors and preserving spectral structure. To minimize overhead, Metis leverages two key properties of the dominant spectral subspace: preservation via sparsely random sampling and preservation via random projection, reducing decomposition cost to a negligible level. On LLaMA-3 8B trained with 100B tokens, Metis enables robust W4A4G4 training with FP4 quantization of weights, activations, and gradients, yielding only a 0.4% training loss gap and a 0.1% degradation in downstream accuracy relative to BF16. Beyond matching BF16 fidelity, Metis also surpasses our implementation of Nvidia's recently announced (yet to be publicly released) FP4 recipe, consistently achieving lower loss and higher downstream accuracy while incurring significantly lower computational overhead. The code implementation for Metis is available at: this https URL.

An iterative multi-agent Large Language Model system, SG², facilitates grounded spatial reasoning and planning on scene graphs through schema-guided, programmatic information retrieval. The framework demonstrates superior success rates, such as 98% in BabyAI Numerical Q&A, across various tasks and environments, while also boosting the performance of smaller language models.

California Institute of Technology

California Institute of Technology Carnegie Mellon University

Carnegie Mellon University Google

Google New York University

New York University University of Chicago

University of Chicago National University of Singapore

National University of Singapore University of Oxford

University of Oxford Stanford University

Stanford University Meta

Meta CohereIllinois Institute of Technology

CohereIllinois Institute of Technology Google Research

Google Research NVIDIASony AI

NVIDIASony AI MicrosoftUCSBIntel LabsDFKIUMass Amherst

MicrosoftUCSBIntel LabsDFKIUMass Amherst Virginia Tech

Virginia Tech MIT

MIT The Ohio State UniversityIowa State UniversityUniversity of TrentoUniversity of BathTU EindhovenBocconi UniversityUniversity of YorkIntel CorporationIIT Delhi

The Ohio State UniversityIowa State UniversityUniversity of TrentoUniversity of BathTU EindhovenBocconi UniversityUniversity of YorkIntel CorporationIIT Delhi AdobePolytechnique MontrealQualcomm Technologies, Inc.Hessian.AINIKENebius AIAI Risk and Vulnerability AllianceSaferAIHaize LabsJuniper NetworksCerebras SystemsMeedanRANDFAIRNutanixEthrivaDotphotonPatronus AINational University, PhilippinesMLCommonsGraphcoreCenter for Security and Emerging TechnologyCredo AIReins AIBestech SystemsContext FundActiveFenceDigital Safety Research InstituteLF AI & DataPublic Authority for Applied Education and Training of KuwaitCoactive.AI

AdobePolytechnique MontrealQualcomm Technologies, Inc.Hessian.AINIKENebius AIAI Risk and Vulnerability AllianceSaferAIHaize LabsJuniper NetworksCerebras SystemsMeedanRANDFAIRNutanixEthrivaDotphotonPatronus AINational University, PhilippinesMLCommonsGraphcoreCenter for Security and Emerging TechnologyCredo AIReins AIBestech SystemsContext FundActiveFenceDigital Safety Research InstituteLF AI & DataPublic Authority for Applied Education and Training of KuwaitCoactive.AIThis paper introduces v0.5 of the AI Safety Benchmark, which has been created by the MLCommons AI Safety Working Group. The AI Safety Benchmark has been designed to assess the safety risks of AI systems that use chat-tuned language models. We introduce a principled approach to specifying and constructing the benchmark, which for v0.5 covers only a single use case (an adult chatting to a general-purpose assistant in English), and a limited set of personas (i.e., typical users, malicious users, and vulnerable users). We created a new taxonomy of 13 hazard categories, of which 7 have tests in the v0.5 benchmark. We plan to release version 1.0 of the AI Safety Benchmark by the end of 2024. The v1.0 benchmark will provide meaningful insights into the safety of AI systems. However, the v0.5 benchmark should not be used to assess the safety of AI systems. We have sought to fully document the limitations, flaws, and challenges of v0.5. This release of v0.5 of the AI Safety Benchmark includes (1) a principled approach to specifying and constructing the benchmark, which comprises use cases, types of systems under test (SUTs), language and context, personas, tests, and test items; (2) a taxonomy of 13 hazard categories with definitions and subcategories; (3) tests for seven of the hazard categories, each comprising a unique set of test items, i.e., prompts. There are 43,090 test items in total, which we created with templates; (4) a grading system for AI systems against the benchmark; (5) an openly available platform, and downloadable tool, called ModelBench that can be used to evaluate the safety of AI systems on the benchmark; (6) an example evaluation report which benchmarks the performance of over a dozen openly available chat-tuned language models; (7) a test specification for the benchmark.

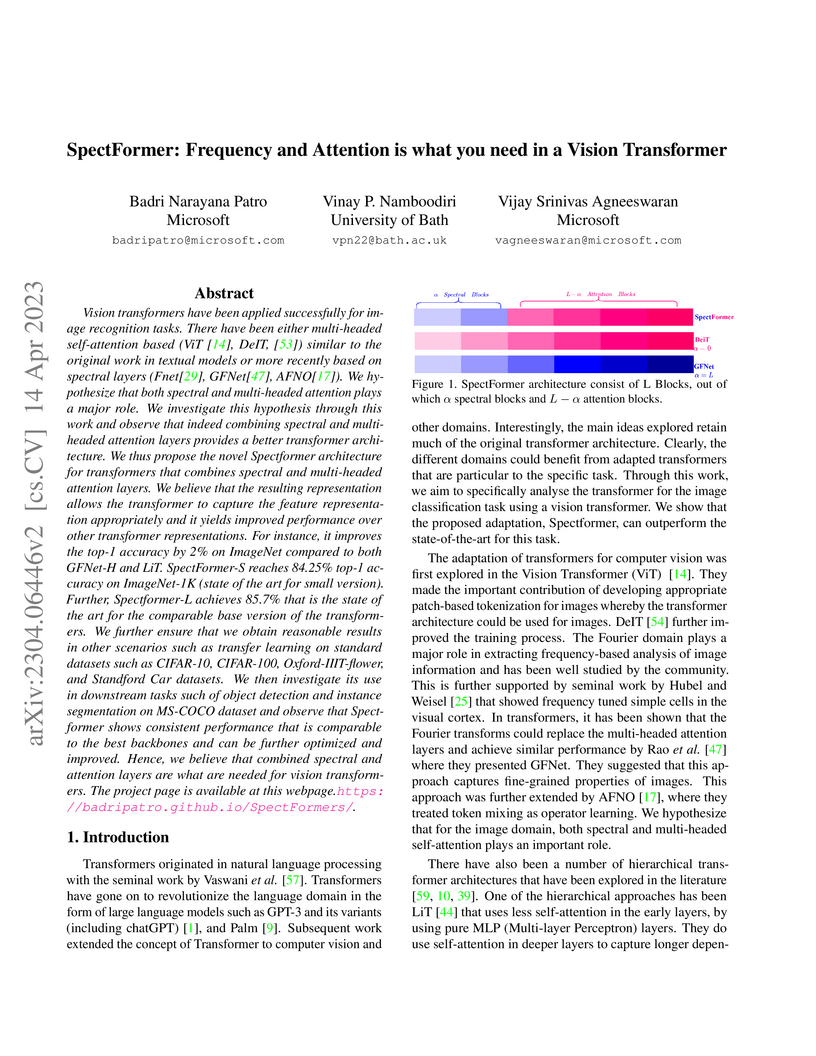

The SpectFormer architecture proposes a hybrid Vision Transformer that integrates spectral layers for local feature extraction and attention layers for global context. The model achieves state-of-the-art Top-1 accuracy on ImageNet-1K, reaching 84.3% for small models and 85.7% for large models, demonstrating strong performance across various computer vision tasks.

University of Cambridge

University of Cambridge UCLA

UCLA University College LondonUniversity of Innsbruck

University College LondonUniversity of Innsbruck Johns Hopkins UniversityINRIA ParisUniversity of BathChemnitz University of TechnologyJohannes Kepler University LinzUniversite Paris Dauphine-PSLUniversity of Applied Sciences Kufstein["École Polytechnique Fédérale de Lausanne"]

Johns Hopkins UniversityINRIA ParisUniversity of BathChemnitz University of TechnologyJohannes Kepler University LinzUniversite Paris Dauphine-PSLUniversity of Applied Sciences Kufstein["École Polytechnique Fédérale de Lausanne"]In recent years, a variety of learned regularization frameworks for solving inverse problems in imaging have emerged. These offer flexible modeling together with mathematical insights. The proposed methods differ in their architectural design and training strategies, making direct comparison challenging due to non-modular implementations. We address this gap by collecting and unifying the available code into a common framework. This unified view allows us to systematically compare the approaches and highlight their strengths and limitations, providing valuable insights into their future potential. We also provide concise descriptions of each method, complemented by practical guidelines.

University of California, Santa Barbara

University of California, Santa Barbara University of Copenhagen

University of Copenhagen Cohere

Cohere ETH ZürichCONICET

ETH ZürichCONICET Aalborg University

Aalborg University Emory UniversityTU DresdenUppsala UniversityUniversidad de Buenos Aires

Emory UniversityTU DresdenUppsala UniversityUniversidad de Buenos Aires Karlsruhe Institute of TechnologyFederal University of São CarlosMoscow Institute of Physics and TechnologyUniversity of BathUniversity of MontrealUniversity of São PauloComenius University in BratislavaPioneer Center for AICiscoNational University, PhilippinesHSE University (Higher School of Economics)

Karlsruhe Institute of TechnologyFederal University of São CarlosMoscow Institute of Physics and TechnologyUniversity of BathUniversity of MontrealUniversity of São PauloComenius University in BratislavaPioneer Center for AICiscoNational University, PhilippinesHSE University (Higher School of Economics)The evaluation of vision-language models (VLMs) has mainly relied on

English-language benchmarks, leaving significant gaps in both multilingual and

multicultural coverage. While multilingual benchmarks have expanded, both in

size and languages, many rely on translations of English datasets, failing to

capture cultural nuances. In this work, we propose Kaleidoscope, as the most

comprehensive exam benchmark to date for the multilingual evaluation of

vision-language models. Kaleidoscope is a large-scale, in-language multimodal

benchmark designed to evaluate VLMs across diverse languages and visual inputs.

Kaleidoscope covers 18 languages and 14 different subjects, amounting to a

total of 20,911 multiple-choice questions. Built through an open science

collaboration with a diverse group of researchers worldwide, Kaleidoscope

ensures linguistic and cultural authenticity. We evaluate top-performing

multilingual vision-language models and find that they perform poorly on

low-resource languages and in complex multimodal scenarios. Our results

highlight the need for progress on culturally inclusive multimodal evaluation

frameworks.

03 Oct 2025

University of Cambridge

University of Cambridge University of Copenhagen

University of Copenhagen University of Texas at AustinUniversität Heidelberg

University of Texas at AustinUniversität Heidelberg MIT

MIT Princeton UniversityUniversity of GenevaMax Planck Institute for AstrophysicsUniversity of Colorado Boulder

Princeton UniversityUniversity of GenevaMax Planck Institute for AstrophysicsUniversity of Colorado Boulder University of GroningenUniversity of BathInstitute of Science and Technology Austria (ISTA)Max Planck Institut fr Astronomie

University of GroningenUniversity of BathInstitute of Science and Technology Austria (ISTA)Max Planck Institut fr AstronomieThe population of the Little Red Dots (LRDs) may represent a key phase of supermassive black hole (SMBH) growth. A cocoon of dense excited gas is emerging as key component to explain the most striking properties of LRDs, such as strong Balmer breaks and Balmer absorption, as well as the weak IR emission. To dissect the structure of LRDs, we analyze new deep JWST/NIRSpec PRISM and G395H spectra of FRESCO-GN-9771, one of the most luminous known LRDs at z=5.5. These reveal a strong Balmer break, broad Balmer lines and very narrow [O III] emission. We unveil a forest of optical [Fe II] lines, which we argue is emerging from a dense (nH=109−10 cm−3) warm layer with electron temperature Te≈7000 K. The broad wings of Hα and Hβ have an exponential profile due to electron scattering in this same layer. The high Hα:Hβ:Hγ flux ratio of ≈10.4:1:0.14 is an indicator of collisional excitation and resonant scattering dominating the Balmer line emission. A narrow Hγ component, unseen in the other two Balmer lines due to outshining by the broad components, could trace the ISM of a normal host galaxy with a star formation rate ∼5 M⊙ yr−1. The warm layer is mostly opaque to Balmer transitions, producing a characteristic P-Cygni profile in the line centers suggesting outflowing motions. This same layer is responsible for shaping the Balmer break. The broad-band spectrum can be reasonably matched by a simple photoionized slab model that dominates the λ>1500 Å continuum and a low mass (∼108 M⊙) galaxy that could explain the narrow [O III], with only subdominant contribution to the UV continuum. Our findings indicate that Balmer lines are not directly tracing gas kinematics near the SMBH and that the BH mass scale is likely much lower than virial indicators suggest.

CNRS

CNRS University of Cambridge

University of Cambridge University of Chicago

University of Chicago University of Oxford

University of Oxford Stanford UniversityYonsei University

Stanford UniversityYonsei University University of Wisconsin-MadisonUniversiteit GentENS de LyonUniversity of BathLund UniversityKavli Institute for Particle Astrophysics & CosmologyCavendish LaboratoryKavli Institute for Cosmological PhysicsInstitut d'Astrophysique de ParisKavli Institute for CosmologyCentre de Recherche Astrophysique de LyonUniv. Lyon 1Sorbonne Université

University of Wisconsin-MadisonUniversiteit GentENS de LyonUniversity of BathLund UniversityKavli Institute for Particle Astrophysics & CosmologyCavendish LaboratoryKavli Institute for Cosmological PhysicsInstitut d'Astrophysique de ParisKavli Institute for CosmologyCentre de Recherche Astrophysique de LyonUniv. Lyon 1Sorbonne UniversitéWe present three cosmological radiation-hydrodynamic zoom simulations of the progenitor of a Milky Way-mass galaxy from the MEGATRON suite. The simulations combine on-the-fly radiative transfer with a detailed non-equilibrium thermochemical network (81 ions and molecules), resolving the cold and warm gas in the circumgalactic medium (CGM) on spatial scales down to 20 pc and on average 200 pc at cosmic noon. Comparing our full non-equilibrium calculation with local radiation to traditional post-processed photoionization equilibrium (PIE) models assuming a uniform UV background (UVB), we find that non-equilibrium physics and local radiation fields fundamentally impact the thermochemistry of the CGM. Recombination lags and local radiation anisotropy shift ions away from their PIE+UVB values and modify covering fractions (for example, HI damped Lyα absorbers differ by up to 40%). In addition, a resolution study with cooling-length refinement allows us to double the resolution in the cold and warm CGM gas, reaching 120 pc on average. When refining on cooling length, the mass of the lightest cold clumps decreases tenfold to ≈104M⊙, their boundary layers develop sharper ion stratification, and the warm gas is better resolved, boosting the abundance of warm gas tracers such as CIV and OIII. Together, these results demonstrate that non-equilibrium thermochemistry coupled to radiative transfer, combined with physically motivated resolution criteria, is essential to predict circumgalactic absorption and emission signatures and to guide the design of targeted observations with existing and upcoming facilities.

University of Cambridge

University of Cambridge University of Oxford

University of Oxford Stanford University

Stanford University OpenAI

OpenAI Yale UniversityElectronic Frontier Foundation

Yale UniversityElectronic Frontier Foundation Arizona State UniversityUniversity of LouisvilleUniversity of BathAmerican UniversityCenter for a New American SecurityCentre for the Study of Existential RiskFuture of Humanity InstituteOpen Philanthropy ProjectNew America FoundationCentre for the Future of IntelligenceEndgame

Arizona State UniversityUniversity of LouisvilleUniversity of BathAmerican UniversityCenter for a New American SecurityCentre for the Study of Existential RiskFuture of Humanity InstituteOpen Philanthropy ProjectNew America FoundationCentre for the Future of IntelligenceEndgameThis report surveys the landscape of potential security threats from

malicious uses of AI, and proposes ways to better forecast, prevent, and

mitigate these threats. After analyzing the ways in which AI may influence the

threat landscape in the digital, physical, and political domains, we make four

high-level recommendations for AI researchers and other stakeholders. We also

suggest several promising areas for further research that could expand the

portfolio of defenses, or make attacks less effective or harder to execute.

Finally, we discuss, but do not conclusively resolve, the long-term equilibrium

of attackers and defenders.

20 Mar 2025

The physical processes that led to the formation of billion solar mass black

holes within the first 700 million years of cosmic time remain a puzzle.

Several theoretical scenarios have been proposed to seed and rapidly grow black

holes, but direct observations of these mechanisms remain elusive. Here we

present a source 660 million years after the Big Bang that displays singular

properties: among the largest Hydrogen Balmer breaks reported at any redshift,

broad multi-peaked Hβ emission, and Balmer line absorption in multiple

transitions. We model this source as a "black hole star" (BH*) where the Balmer

break and absorption features are a result of extremely dense, turbulent gas

forming a dust-free "atmosphere" around a supermassive black hole. This source

may provide evidence of an early black hole embedded in dense gas -- a

theoretical configuration proposed to rapidly grow black holes via

super-Eddington accretion. Radiation from the BH* appears to dominate almost

all observed light, leaving limited room for contribution from its host galaxy.

We demonstrate that the recently discovered "Little Red Dots" (LRDs) with

perplexing spectral energy distributions can be explained as BH*s embedded in

relatively brighter host galaxies. This source provides evidence that black

hole masses in the LRDs may be over-estimated by orders of magnitude -- the BH*

is effectively dust-free contrary to the steep dust corrections applied while

modeling LRDs, and the physics that gives rise to the complex line shapes and

luminosities may deviate from assumptions underlying standard scaling

relations.

University of Notre Dame

University of Notre Dame University of Oxford

University of Oxford University of Texas at AustinLouisiana State UniversityUniversity of LuxembourgLuxembourg Institute of Science and Technology

University of Texas at AustinLouisiana State UniversityUniversity of LuxembourgLuxembourg Institute of Science and Technology University of VirginiaUniversity of BathKenyon CollegeSt. John’s UniversityNational University, PhilippinesMLCommonsInstitute for Technology & Society (ITS)Berklee College of MusicITESM

University of VirginiaUniversity of BathKenyon CollegeSt. John’s UniversityNational University, PhilippinesMLCommonsInstitute for Technology & Society (ITS)Berklee College of MusicITESMApplications of Generative AI (Gen AI) are expected to revolutionize a number

of different areas, ranging from science & medicine to education. The potential

for these seismic changes has triggered a lively debate about the potential

risks of the technology, and resulted in calls for tighter regulation, in

particular from some of the major tech companies who are leading in AI

development. This regulation is likely to put at risk the budding field of

open-source generative AI. Using a three-stage framework for Gen AI development

(near, mid and long-term), we analyze the risks and opportunities of

open-source generative AI models with similar capabilities to the ones

currently available (near to mid-term) and with greater capabilities

(long-term). We argue that, overall, the benefits of open-source Gen AI

outweigh its risks. As such, we encourage the open sourcing of models, training

and evaluation data, and provide a set of recommendations and best practices

for managing risks associated with open-source generative AI.

23 Mar 2018

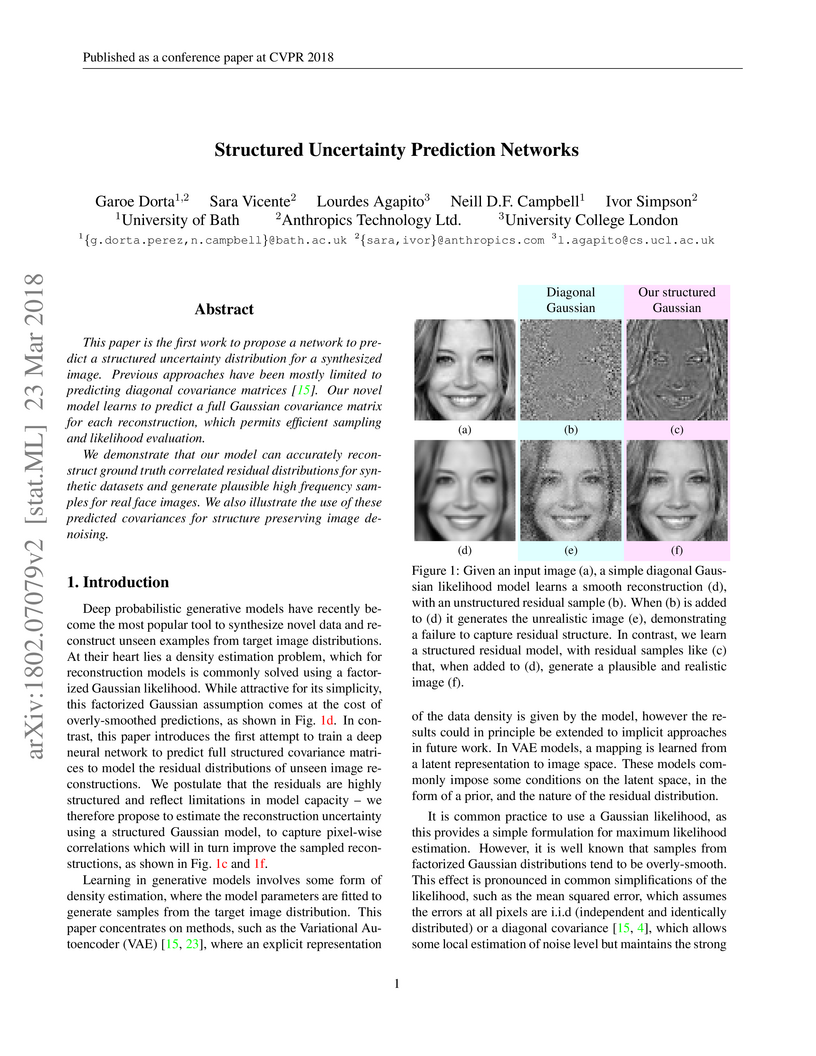

This paper is the first work to propose a network to predict a structured

uncertainty distribution for a synthesized image. Previous approaches have been

mostly limited to predicting diagonal covariance matrices. Our novel model

learns to predict a full Gaussian covariance matrix for each reconstruction,

which permits efficient sampling and likelihood evaluation.

We demonstrate that our model can accurately reconstruct ground truth

correlated residual distributions for synthetic datasets and generate plausible

high frequency samples for real face images. We also illustrate the use of

these predicted covariances for structure preserving image denoising.

11 Aug 2025

A training-free framework called Zoom-Refine enhances Multimodal Large Language Models (MLLMs) in interpreting high-resolution images by integrating localized region zooming with a self-refinement stage. This method improved accuracy on MME-Realworld reasoning tasks by 3.60% to 5.07% and on HR-Bench by 2.1% to 5.0% for leading MLLMs, offering greater efficiency than comparable training-free approaches.

Researchers at the University of Bath introduced SVERL-Performance (SVERL-P), a theoretically principled framework that quantifies the contribution of state features to an agent's expected return in reinforcement learning. This method addresses limitations of prior approaches by directly linking feature attribution to the agent's actual performance, providing more accurate and insightful explanations across various RL environments.

A tight correlation between the baryonic and observed acceleration of galaxies has been reported over a wide range of mass (10^8 < M_{\rm bar}/{\rm M}_\odot < 10^{11}) - the Radial Acceleration Relation (RAR). This has been interpreted as evidence that dark matter is actually a manifestation of some modified weak-field gravity theory. In this paper, we study the radially resolved RAR of 12 nearby dwarf galaxies, with baryonic masses in the range 10^4 < M_{\rm bar}/{\rm M}_\odot < 10^{7.5}, using a combination of literature data and data from the MUSE-Faint survey. We use stellar line-of-sight velocities and the Jeans modelling code GravSphere to infer the mass distributions of these galaxies, allowing us to compute the RAR. We compare the results with the EDGE simulations of isolated dwarf galaxies with similar stellar masses in a ΛCDM cosmology. We find that most of the observed dwarf galaxies lie systematically above the low-mass extrapolation of the RAR. Each galaxy traces a locus in the RAR space that can have a multi-valued observed acceleration for a given baryonic acceleration, while there is significant scatter from galaxy to galaxy. Our results indicate that the RAR does not apply to low-mass dwarf galaxies and that the inferred baryonic acceleration of these dwarfs does not contain enough information, on its own, to derive the observed acceleration. The simulated EDGE dwarfs behave similarly to the real data, lying systematically above the extrapolated RAR. We show that, in the context of modified weak-field gravity theories, these results cannot be explained by differential tidal forces from the Milky Way, nor by the galaxies being far from dynamical equilibrium, since none of the galaxies in our sample seems to experience strong tides. As such, our results provide further evidence for the need for invisible dark matter in the smallest dwarf galaxies.

University of Cambridge

University of Cambridge Imperial College London

Imperial College London University of Manchester

University of Manchester University College London

University College London University of Oxford

University of Oxford University of BristolUniversity of EdinburghThe Alan Turing Institute

University of BristolUniversity of EdinburghThe Alan Turing Institute King’s College LondonUniversity of SheffieldUniversity of Glasgow

King’s College LondonUniversity of SheffieldUniversity of Glasgow Queen Mary University of LondonUniversity of AberdeenHong Kong Baptist UniversityUniversity of BathCity St George’s, University of LondonUniversity of the West of EnglandSimula Research LaboratoryNHS EnglandBritish Antarctic SurveyCancer Research UK Scotland InstituteLloyds Banking Group

Queen Mary University of LondonUniversity of AberdeenHong Kong Baptist UniversityUniversity of BathCity St George’s, University of LondonUniversity of the West of EnglandSimula Research LaboratoryNHS EnglandBritish Antarctic SurveyCancer Research UK Scotland InstituteLloyds Banking GroupResearchers from the University of Sheffield and The Alan Turing Institute, along with a broad international collaboration, propose a deployment-centric workflow for multimodal AI development. This framework aims to broaden the field beyond its current vision and language focus by integrating real-world constraints and diverse data modalities to create more practical and impactful AI solutions.

MRI synthesis promises to mitigate the challenge of missing MRI modality in clinical practice. Diffusion model has emerged as an effective technique for image synthesis by modelling complex and variable data distributions. However, most diffusion-based MRI synthesis models are using a single modality. As they operate in the original image domain, they are memory-intensive and less feasible for multi-modal synthesis. Moreover, they often fail to preserve the anatomical structure in MRI. Further, balancing the multiple conditions from multi-modal MRI inputs is crucial for multi-modal synthesis. Here, we propose the first diffusion-based multi-modality MRI synthesis model, namely Conditioned Latent Diffusion Model (CoLa-Diff). To reduce memory consumption, we design CoLa-Diff to operate in the latent space. We propose a novel network architecture, e.g., similar cooperative filtering, to solve the possible compression and noise in latent space. To better maintain the anatomical structure, brain region masks are introduced as the priors of density distributions to guide diffusion process. We further present auto-weight adaptation to employ multi-modal information effectively. Our experiments demonstrate that CoLa-Diff outperforms other state-of-the-art MRI synthesis methods, promising to serve as an effective tool for multi-modal MRI synthesis.

Remarkable advances in recent 2D image and 3D shape generation have induced a significant focus on dynamic 4D content generation. However, previous 4D generation methods commonly struggle to maintain spatial-temporal consistency and adapt poorly to rapid temporal variations, due to the lack of effective spatial-temporal modeling. To address these problems, we propose a novel 4D generation network called 4DSTR, which modulates generative 4D Gaussian Splatting with spatial-temporal rectification. Specifically, temporal correlation across generated 4D sequences is designed to rectify deformable scales and rotations and guarantee temporal consistency. Furthermore, an adaptive spatial densification and pruning strategy is proposed to address significant temporal variations by dynamically adding or deleting Gaussian points with the awareness of their pre-frame movements. Extensive experiments demonstrate that our 4DSTR achieves state-of-the-art performance in video-to-4D generation, excelling in reconstruction quality, spatial-temporal consistency, and adaptation to rapid temporal movements.

There are no more papers matching your filters at the moment.