University of Camerino

In recent years, spatio-temporal graph neural networks (GNNs) have attracted considerable interest in the field of time series analysis, owing to their ability to capture dependencies among variables and across time points simultaneously. The objective of this systematic literature review is therefore to provide a comprehensive overview of the modeling approaches and application domains of GNNs for time series classification and forecasting. A database search was conducted, and 366 papers were selected for a detailed examination of the current state of the art in the field. This examination is intended to offer the reader a comprehensive review of proposed models, links to related source code, available datasets, benchmark models, and fitting results, with the aim of assisting researchers in their studies. To the best of our knowledge, this is the first and broadest systematic literature review presenting a detailed comparison of results from current spatio-temporal GNN models applied to different domains. In its final part, this review discusses current limitations and challenges in the application of spatio-temporal GNNs, such as comparability, reproducibility, explainability, poor information capacity, and scalability. This paper is complemented by a GitHub repository at this https URL providing additional interactive tools to further explore the presented findings.

19 Sep 2025

We investigate a theoretical protocol for the dissipative stabilization of mechanical quantum states in a multimode optomechanical system composed of multiple optical and mechanical modes. The scheme employs a single squeezed reservoir that drives one of the optical modes, while the remaining optical modes mediate an effective phonon-phonon interaction Hamiltonian. The interplay between these coherent interactions and the dissipation provided by the squeezed bath enables the steady-state preparation of targeted quantum states of the mechanical modes. In the absence of significant uncontrolled noise sources, the resulting dynamics closely approximate the model introduced in [Phys. Rev. Lett. 126, 020402 (2021)]. We analyze the performance of this protocol in generating mechanical cluster states defined on rectangular graphs.

We investigate the gravitational-wave background predicted by a two-scalar-field cosmological model that aims to unify primordial inflation with the dark sector, namely late-time dark energy and dark matter, in a single and self-consistent theoretical framework. The model is constructed from an action inspired by several extensions of general relativity and string-inspired scenarios and features a non-minimal interaction between the two scalar fields, while both remain minimally coupled to gravity. In this context, we derive the gravitational-wave energy spectrum over wavelengths ranging from today's Hubble horizon to those at the end of inflation. We employ the continuous Bogoliubov coefficient formalism, originally introduced to describe particle creation in an expanding Universe, in analogy to the well-established mechanism of gravitational particle production and, in particular, generalized to gravitons. Using this method, which enables an accurate description of graviton creation across all cosmological epochs, we find that inflation provides the dominant gravitational-wave contribution, while subdominant features arise at the inflation-radiation, radiation-matter, and matter-dark energy transitions, i.e., epochs naturally encoded inside our scalar field picture. The resulting energy density spectrum is thus compared with the sensitivity curves of the planned next-generation ground- and space-based gravitational-wave observatories. The comparison identifies frequency bands where the predicted signal could be probed, providing those windows associated with potentially detectable signals, bounded by our analyses. Consequences of our recipe are thus compared with numerical outcomes and the corresponding physical properties discussed in detail.

Bayesian Optimization (BO) is a class of black-box, surrogate-based heuristics that can efficiently optimize problems that are expensive to evaluate, and hence admit only small evaluation budgets. BO is particularly popular for solving numerical optimization problems in industry, where the evaluation of objective functions often relies on time-consuming simulations or physical experiments. However, many industrial problems depend on a large number of parameters. This poses a challenge for BO algorithms, whose performance is often reported to suffer when the dimension grows beyond 15 variables. Although many new algorithms have been proposed to address this problem, it is not well understood which one is the best for which optimization scenario.

In this work, we compare five state-of-the-art high-dimensional BO algorithms with vanilla BO and CMA-ES on the 24 BBOB functions of the COCO environment at increasing dimensionality, ranging from 10 to 60 variables. Our results confirm the superiority of BO over CMA-ES for limited evaluation budgets and suggest that the most promising approach to improve BO is the use of trust regions. However, we also observe significant performance differences for different function landscapes and budget exploitation phases, indicating improvement potential, e.g., through hybridization of algorithmic components.

In the ever-evolving landscape of scientific computing, properly supporting the modularity and complexity of modern scientific applications requires new approaches to workflow execution, like seamless interoperability between different workflow systems, distributed-by-design workflow models, and automatic optimisation of data movements. In order to address this need, this article introduces SWIRL, an intermediate representation language for scientific workflows. In contrast with other product-agnostic workflow languages, SWIRL is not designed for human interaction but to serve as a low-level compilation target for distributed workflow execution plans. The main advantages of SWIRL semantics are low-level primitives based on the send/receive programming model and a formal framework ensuring the consistency of the semantics and the specification of translating workflow models represented by Directed Acyclic Graphs (DAGs) into SWIRL workflow descriptions. Additionally, SWIRL offers rewriting rules designed to optimise execution traces, accompanied by corresponding equivalence. An open-source SWIRL compiler toolchain has been developed using the ANTLR Python3 bindings.

11 Oct 2025

The use of coarse graining to connect physical and information theoretic entropies has recently been given a precise formulation in terms of "observational entropy", describing entropy for observers with respect to a measurement. Here we consider observers with various locality restrictions, including local measurements (LO), measurements based on local operations with classical communication (LOCC), and separable measurements (SEP), with the idea that the "entropy gap" between the minimum locally measured observational entropy and the von Neumann entropy quantifies quantum correlations in a given state. After introducing entropy gaps for general classes of measurements and deriving their general properties, we specialize to LO, LOCC, SEP and other measurement classes related to the locality of subsystems. For those, we show that the entropy gap can be related to well-known measures of entanglement or non-classicality of the state (even though we point out that they are not entanglement monotones themselves). In particular, for bipartite pure states, all of the "local" entropy gaps reproduce the entanglement entropy, and for general multipartite states they are lower-bounded by the relative entropy of entanglement. The entropy gaps of the different measurement classes are ordered, and we show that in general (mixed and multipartite states) they are all different.
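As a small numerical illustration of the bipartite pure-state statement above, the following sketch evaluates the observational entropy S_M = -Σ p_i log2(p_i / V_i), with p_i the outcome probabilities and V_i the traces of the measurement projectors, for a two-qubit state written in Schmidt form and measured locally in its Schmidt basis. The state, the Schmidt weights, and the function names are illustrative assumptions rather than anything taken from the paper. The code only checks that this one local measurement yields a gap equal to the entanglement entropy; that no local measurement does better is the abstract's claim and is not verified here.

```python
import math

def observational_entropy(probs, volumes):
    """S_M = -sum_i p_i * log2(p_i / V_i); zero-probability outcomes contribute 0."""
    return -sum(p * math.log2(p / v) for p, v in zip(probs, volumes) if p > 0)

def shannon_entropy(probs):
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical pure state sqrt(w0)|00> + sqrt(w1)|11>, given by its Schmidt weights.
schmidt_weights = [0.25, 0.75]

# Local projective measurement in the Schmidt (computational) basis on both qubits.
# Outcomes 00, 01, 10, 11 are rank-1 projectors, so each has volume V_i = 1.
probs = [schmidt_weights[0], 0.0, 0.0, schmidt_weights[1]]
volumes = [1, 1, 1, 1]

s_observational = observational_entropy(probs, volumes)
s_von_neumann = 0.0  # the global state is pure
entropy_gap = s_observational - s_von_neumann

# Entanglement entropy = Shannon entropy of the Schmidt weights.
entanglement_entropy = shannon_entropy(schmidt_weights)

print(f"gap for this local measurement: {entropy_gap:.6f} bits")
print(f"entanglement entropy:           {entanglement_entropy:.6f} bits")
```

Setting both Schmidt weights to 0.5 gives the Bell-state case, where the same calculation yields one bit.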

16 Jul 2023

This thesis treats networks providing quantum computation based on distributed paradigms. Compared to architectures relying on one processor, a network promises to be more scalable and less fault-prone. Developing a distributed system able to provide practical quantum computation comes with many challenges, each of which needs to be faced with careful analysis in order to achieve a massive integration of several properly engineered components. In accordance with the hardware technologies currently under construction around the globe, telegates represent the fundamental inter-processor operations. Each telegate consists of several tasks: i) entanglement generation and distribution, ii) local operations, and iii) classical communications. Entanglement generation and distribution is an expensive resource, as it is time-consuming. The main contribution of this thesis is the definition of compilers that minimize the impact of telegates on the overall fidelity. Specifically, we give rigorous formulations of the subject problem, allowing us to identify the inter-dependence between computation and communication. With the support of some of the best tools for reasoning, i.e., network optimization, circuit manipulation, group theory and ZX-calculus, we found new perspectives on the way a distributed quantum computing system should evolve.

08 Oct 2025

In the framework of quantum mechanics over a quadratic extension of the ultrametric field of p-adic numbers, we introduce a notion of tensor product of p-adic Hilbert spaces. To this end, following a standard approach, we first consider the algebraic tensor product of p-adic Hilbert spaces. We next define a suitable norm on this linear space. It turns out that, in the p-adic framework, this norm is the analogue of the projective norm associated with the tensor product of real or complex normed spaces. Eventually, by metrically completing the resulting p-adic normed space, and equipping it with a suitable inner product, we obtain the tensor product of p-adic Hilbert spaces. That this is indeed the correct p-adic counterpart of the tensor product of complex Hilbert spaces is also certified by establishing a natural isomorphism between this p-adic Hilbert space and the corresponding Hilbert-Schmidt class. Since the notion of subspace of a p-adic Hilbert space is highly nontrivial, we finally study the tensor product of subspaces, stressing both the analogies and the significant differences with respect to the standard complex case. These findings should provide us with the mathematical foundations necessary to explore quantum entanglement in the p-adic setting, with potential applications in the emerging field of p-adic quantum information theory.

Researchers from the University of Salerno, University of Camerino, and Institut Ruder Bošković compared the Lindblad master equation with a first-principles microscopic model to describe entanglement and purity dynamics in open quantum systems. The study revealed fundamental discrepancies in short-time predictions when system openness stems from internal correlations, finding that the Lindblad approach could suppress entanglement and predicted linear purity decay, contrasting with the microscopic model's consistent entanglement generation and quadratic purity decay.

04 Jun 2025

This review synthesizes recent advances in Relativistic Quantum Information, demonstrating how quantum entanglement provides crucial insights into gravitational phenomena. It explores the role of entanglement in the quantum-to-classical transition in cosmology, explains how vacuum entanglement can be harvested, and presents the 'island formula' as a resolution to the black hole information paradox by preserving unitarity.

25 Sep 2024

TU Dortmund University; KU Leuven; German Research Center for Artificial Intelligence (DFKI); Gran Sasso Science Institute; University of Camerino; University of Bayreuth; Universitat Politécnica de Valéncia; University of St.Gallen; Trier University; TUM School of Computation, Information and Technology; Mercadona Tech; Fraunhofer Institute for Applied Information Technology; OST - Eastern Switzerland University of Applied Sciences; RWTH Aachen University; Sapienza Università di Roma

A collaborative research manifesto clarifies the concept of Business Process Digital Twins (BPDTs) as virtual replicas of business processes enabling real-time monitoring, simulation, prediction, and optimization. It outlines a conceptual model for BPDTs and identifies twelve key challenges along with proposed research directions for the field.

25 May 2012

We analyze the region of fidelities for a qubit obtained after the application of a 1 -> N universal quantum cloner. We express the allowed region of fidelities in terms of overlaps of pure states with irreps of S(n) (n = N+1), showing that the pure states can be taken with real coefficients only. Subsequently, the case n = 4, corresponding to a 1 -> 3 cloner, is studied in more detail as an illustrative example. To obtain the main result, we construct the convex hull of the possible ranges of fidelities related to a given irrep. The formalism allows one to construct the state giving rise to a given N-tuple of fidelities.
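For orientation, one point of such a fidelity region is the symmetric case, in which all N clones have the same fidelity. The closed form below is the standard textbook result for the optimal symmetric universal 1 -> N qubit cloner; it is added here as context and is not quoted from the abstract, and the symbol F_{1 -> N} is a notation chosen for this note.

```latex
F_{1\to N} \;=\; \frac{2N+1}{3N},
\qquad
F_{1\to 2} = \frac{5}{6},
\qquad
F_{1\to 3} = \frac{7}{9},
\qquad
\lim_{N\to\infty} F_{1\to N} = \frac{2}{3}.
```

The asymmetric tuples of fidelities analyzed in the paper trade a higher fidelity for some clones against a lower fidelity for others.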

20 Nov 2025

We reveal the key role of the d-wave symmetry of the superconducting gap in strongly coupled two-dimensional superconductors in determining the properties of the Berezinskii-Kosterlitz-Thouless (BKT) transition, associated with a sizable enhancement of the phase stiffness compared to nodeless-gap superconductors. The enhanced stiffness originates from extended regions of vanishing gap around the nodal lines of the Brillouin zone (BZ). Our study, based on mean-field and BKT theory, presents a comparative analysis of s-wave and d-wave scenarios, highlighting the features of the latter that boost the stiffness and the BKT transition temperature (TBKT). The comparison focuses on two quantities: the mean-field critical temperature and the maximum superconducting gap related to the pairing strengths. We present a phase diagram showing the scaling of TBKT with respect to the mean-field critical temperature across the BCS-BEC crossover and the evolution of the pseudogap. We also present a zero-temperature phase-stiffness intensity map over the Brillouin zone, displaying a two-component structure consisting of low- and high-stiffness regions whose extent depends on microscopic parameters. These results identify the nodal gap structure of strongly coupled two-dimensional superconductors as a key mechanism enabling enhanced stiffness and elevated TBKT compared to their s-wave counterparts.

22 Apr 2025

In a two-membrane cavity optomechanical setup, two semi-transparent membranes placed within an optical Fabry-Pérot cavity yield a nontrivial dependence of the frequency of a mode of the optical cavity on the membranes' positions, which is due to interference. However, the system dynamics is typically described by a radiation pressure force treatment in which the frequency shift is expanded stopping at first order in the membrane displacements. In this paper, we study the full dynamics of the system obtained by considering the exact nonlinear dependence of the optomechanical interaction between two membranes' vibrational modes and the driven cavity mode. We then compare this dynamics with the standard treatment based on the Hamiltonian linear interaction, and we find the conditions under which the two dynamics may significantly depart from each other. In particular, we see that a parameter regime exists in which the customary first-order treatment provides distinct and incorrect predictions for the synchronization of two self-sustained mechanical limit-cycles, and for Gaussian entanglement of the two membranes in the case of two-tone driving.

12 Nov 2025

We describe the generation of correlated photon pairs by means of spontaneous parametric down-conversion of an optical pump in the form of a finite-energy Airy beam. The optical system function, which contributes to the propagation of the down-converted beam before it is registered by the detectors, is computed. The spectral function is utilized to calculate the biphoton amplitude for finding the coincidence count of the inbound Airy photons in both far-field and near-field configurations. We report the reconstruction of the finite-energy Airy beam in the spatial correlation of the down-converted beams in the near-field scenario. In the far field, the coincidence counts resemble the probability density of the biphoton in momentum space, revealing a direct mapping of the anti-correlation of the biphoton momentum. By examining the spatial Schmidt modes, we also demonstrate that longer crystals have tighter real-space correlations, but higher-dimensional angular correlations, whereas shorter crystals have fewer modes in momentum space and broader multimode correlations in position space.

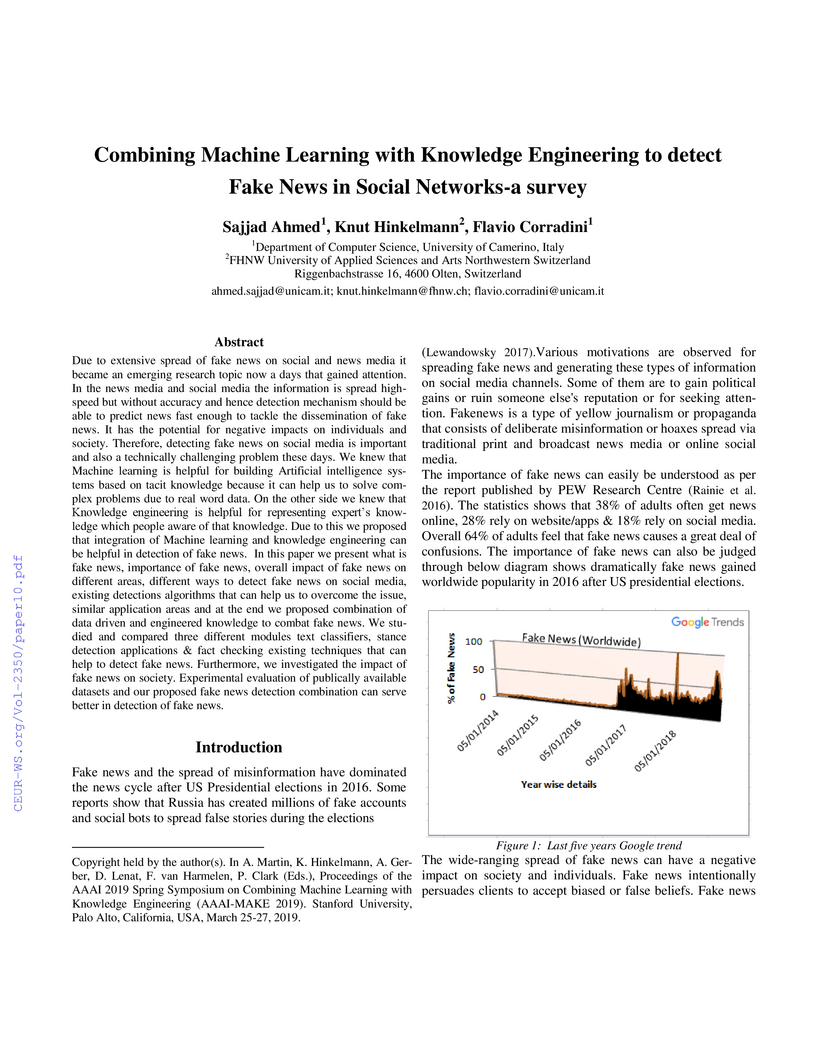

Owing to the extensive spread of fake news on social and news media, fake news detection has become an emerging research topic that has gained attention. In news media and social media, information spreads at high speed but without accuracy; a detection mechanism should therefore be able to assess news fast enough to tackle the dissemination of fake news, which has the potential for negative impacts on individuals and society. Detecting fake news on social media is thus important and also a technically challenging problem. Machine learning is helpful for building artificial intelligence systems based on tacit knowledge, because it can help solve complex problems using real-world data. Knowledge engineering, on the other hand, is helpful for representing experts' knowledge, that is, knowledge that people are aware of. We therefore propose that the integration of machine learning and knowledge engineering can be helpful in the detection of fake news. In this paper we present what fake news is, the importance of fake news, the overall impact of fake news on different areas, different ways to detect fake news on social media, existing detection algorithms that can help overcome the issue, and similar application areas; finally, we propose a combination of data-driven and engineered knowledge to combat fake news. We studied and compared three different modules, namely text classifiers, stance detection applications, and existing fact-checking techniques, that can help to detect fake news. Furthermore, we investigated the impact of fake news on society. Experimental evaluation on publicly available datasets, together with our proposed fake news detection combination, can serve better in the detection of fake news.

The Berezinskii-Kosterlitz-Thouless (BKT) transition in ultra-thin NbN films is investigated in the presence of weak perpendicular magnetic fields. A jump in the phase stiffness at the BKT transition is detected up to 5 G, while the BKT features are smeared between 5 G and 50 G, disappearing altogether at 100 G, where conventional current-voltage behaviour is observed. Our findings demonstrate that weak magnetic fields, insignificant in bulk systems, deeply affect our ultra-thin system, promoting a crossover from Halperin-Nelson fluctuations to a BCS-like state with Ginzburg-Landau fluctuations, as the field increases. This behavior is related to field-induced free vortices that screen the vortex-antivortex interaction and smear the BKT transition.
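The stiffness jump mentioned above refers to the universal Nelson-Kosterlitz relation of BKT theory, stated here as standard background rather than as a result of this work. The symbol J_s denotes the superfluid phase stiffness expressed in energy units, a notational assumption made for this note.

```latex
J_s\!\left(T_{\mathrm{BKT}}^{-}\right) \;=\; \frac{2}{\pi}\, k_B T_{\mathrm{BKT}},
\qquad
J_s\!\left(T_{\mathrm{BKT}}^{+}\right) \;=\; 0 .
```

In zero field the stiffness therefore drops discontinuously to zero at the transition, and it is this discontinuity that the abstract reports as surviving up to 5 G and being smeared at higher fields.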

A minimal observable length is a common feature of theories that aim to merge quantum physics and gravity. Quantum mechanically, this concept is associated with a nonzero minimal uncertainty in position measurements, which is encoded in deformed commutation relations. In spite of increasing theoretical interest, the subject suffers from a complete lack of dedicated experiments, and bounds on the deformation parameters are roughly extrapolated from indirect measurements. As recently proposed, low-energy mechanical oscillators could allow one to reveal the effect of a modified commutator. Here we analyze the free evolution of high quality factor micro- and nano-oscillators, spanning a wide range of masses around the Planck mass m_P (≈ 22 μg), and compare it with a model of deformed dynamics. Previous limits on the parameters quantifying the commutator deformation are substantially lowered.
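To make the link between a deformed commutator and a minimal position uncertainty concrete, the short derivation below uses one commonly studied quadratic deformation. The abstract does not state which form the paper adopts, so the commutator, the parameter β, and the assumption ⟨p⟩ = 0 are illustrative choices.

```latex
[\hat{x},\hat{p}] = i\hbar\left(1+\beta\,\hat{p}^{2}\right)
\;\;\Longrightarrow\;\;
\Delta x\,\Delta p \;\ge\; \frac{\hbar}{2}\left(1+\beta\,(\Delta p)^{2}\right)
\quad (\langle \hat{p}\rangle = 0),
\\[6pt]
\Delta x \;\ge\; \frac{\hbar}{2}\left(\frac{1}{\Delta p}+\beta\,\Delta p\right),
\qquad
\frac{d}{d(\Delta p)}\left(\frac{1}{\Delta p}+\beta\,\Delta p\right)=0
\;\Rightarrow\;
\Delta p = \frac{1}{\sqrt{\beta}},
\qquad
\Delta x_{\min} = \hbar\sqrt{\beta}.
```

For β = 0 the bound reduces to the usual Heisenberg relation, which admits arbitrarily small Δx; any β > 0 sets a floor on the position uncertainty.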

23 May 2017

The search for a potential function S allowing one to reconstruct a given metric tensor g and a given symmetric covariant tensor T on a manifold M is formulated as the Hamilton-Jacobi problem associated with a canonically defined Lagrangian on TM. The connection between this problem, the geometric structure of the space of pure states of quantum mechanics, and the theory of contrast functions of classical information geometry is outlined.
