University of Cassino and Southern Lazio

15 Feb 2025

The Integrated Sensing and Communications (ISAC) paradigm is anticipated to be a cornerstone of the upcoming 6G networks. In order to optimize the use of wireless resources, 6G ISAC systems need to harness the communication data payload signals, which are inherently random, for both sensing and communication (S&C) purposes. This tutorial paper provides a comprehensive technical overview of the fundamental theory and signal processing methodologies for ISAC transmission with random communication signals. We begin by introducing the deterministic-random tradeoff (DRT) between S&C from an information-theoretic perspective, emphasizing the need for specialized signal processing techniques tailored to random ISAC signals. Building on this foundation, we review the core signal models and processing pipelines for communication-centric ISAC systems, and analyze the average squared auto-correlation function (ACF) of random ISAC signals, which serves as a fundamental performance metric for multi-target ranging tasks. Drawing insights from these theoretical results, we outline the design principles for the three key components of communication-centric ISAC systems: modulation schemes, constellation design, and pulse shaping filters. The goal is either to enhance sensing performance without compromising communication efficiency or to establish a scalable tradeoff between the two. We then extend our analysis from a single-antenna ISAC system to its multi-antenna counterpart, discussing recent advancements in multi-input multi-output (MIMO) precoding techniques specifically designed for random ISAC signals. We conclude by highlighting several open challenges and future research directions in the field of sensing with communication signals.
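To make the "average squared ACF" concrete, here is a minimal Monte Carlo sketch. It assumes a simplified single-carrier setting: a frame of N i.i.d. unit-power symbols, drawn from QPSK or 16-QAM, and its periodic (cyclic) ACF. The function names are hypothetical, and this toy setting does not reproduce the paper's general analysis of modulation schemes and pulse shaping.

```python
import numpy as np


def qpsk(n, rng):
    """Draw n i.i.d. unit-power QPSK symbols (constant modulus)."""
    re = 2 * rng.integers(0, 2, n) - 1
    im = 2 * rng.integers(0, 2, n) - 1
    return (re + 1j * im) / np.sqrt(2)


def qam16(n, rng):
    """Draw n i.i.d. unit-power 16-QAM symbols (non-constant modulus)."""
    levels = np.array([-3.0, -1.0, 1.0, 3.0])
    s = rng.choice(levels, n) + 1j * rng.choice(levels, n)
    return s / np.sqrt(10.0)  # the 16-QAM grid has average energy 10


def avg_squared_acf(sampler, n=64, trials=2000, seed=0):
    """Monte Carlo estimate of E|r[k]|^2 for the periodic ACF r of a random frame."""
    rng = np.random.default_rng(seed)
    acc = np.zeros(n)
    for _ in range(trials):
        s = sampler(n, rng)
        # Periodic ACF via the FFT: r[k] = sum_m s[m + k] * conj(s[m]), indices mod n
        r = np.fft.ifft(np.abs(np.fft.fft(s)) ** 2)
        acc += np.abs(r) ** 2
    return acc / trials


if __name__ == "__main__":
    n = 64
    for name, sampler in [("QPSK", qpsk), ("16-QAM", qam16)]:
        acf2 = avg_squared_acf(sampler, n=n)
        print(f"{name}: zero lag {acf2[0]:.1f}, mean sidelobe {acf2[1:].mean():.1f}")
```

In this i.i.d. setting the QPSK zero-lag term is deterministic (N² = 4096 for N = 64), whereas for 16-QAM it fluctuates from frame to frame and its expectation is N² + N(κ − 1), with constellation kurtosis κ = 1.32. Both constellations have an average sidelobe level of N here.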

A latent diffusion autoencoder framework enables efficient unsupervised learning from 3D medical images by combining perceptual compression, latent diffusion modeling, and semantic encoding, achieving 20x faster inference while maintaining high reconstruction quality (SSIM: 0.962) and strong diagnostic performance for Alzheimer's disease (ROC-AUC: 90%).

10 Jun 2025

Cell-free (CF) massive multiple-input multiple-output (MIMO) is a promising approach for next-generation wireless networks, enabling scalable deployments of multiple small access points (APs) to enhance coverage and service for multiple user equipments (UEs). While most existing research focuses on low-frequency bands with Rayleigh fading models, emerging 5G trends are shifting toward higher frequencies, where geometric channel models and line-of-sight (LoS) propagation become more relevant. In this work, we explore how distributed massive MIMO in the LoS regime can achieve near-field-like conditions by forming artificially large arrays through coordinated AP deployments. We investigate centralized and decentralized CF architectures, leveraging structured channel estimation (SCE) techniques that exploit the LoS properties of geometric channels. Our results demonstrate that dense distributed AP deployments significantly improve system performance compared with a co-located array, even in highly populated UE scenarios, while SCE approaches the performance obtained with perfect channel state information (CSI).

Human pose estimation, a vital task in computer vision, involves detecting and localising human joints in images and videos. While single-frame pose estimation has seen significant progress, it often fails to capture the temporal dynamics needed to understand complex, continuous movements. To address these limitations, we propose Poseidon, a novel multi-frame pose estimation architecture that extends the ViTPose model by integrating temporal information for enhanced accuracy and robustness. Poseidon introduces three key innovations: (1) an Adaptive Frame Weighting (AFW) mechanism that dynamically prioritises frames based on their relevance, ensuring that the model focuses on the most informative data; (2) a Multi-Scale Feature Fusion (MSFF) module that aggregates features from different backbone layers to capture both fine-grained details and high-level semantics; and (3) a Cross-Attention module for effective information exchange between central and contextual frames, enhancing the model's temporal coherence. The proposed architecture improves performance in complex video scenarios and offers scalability and computational efficiency suitable for real-world applications. Our approach achieves state-of-the-art performance on the PoseTrack21 and PoseTrack18 datasets, with mAP scores of 88.3 and 87.8, respectively, outperforming existing methods.
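Poseidon's AFW module is learned end to end; the sketch below only illustrates the general idea of relevance-based frame weighting. It assumes that relevance is a scaled dot product between each frame's feature vector and the central frame's, turned into weights by a softmax. The function name `adaptive_frame_weighting` is hypothetical and not taken from the Poseidon code.

```python
import numpy as np


def adaptive_frame_weighting(features, center_idx):
    """Fuse per-frame features with softmax weights based on similarity to the central frame.

    features: array of shape (T, D), one feature vector per frame.
    Returns the fused (D,) feature vector and the (T,) frame weights.
    """
    center = features[center_idx]
    # Relevance score per frame: scaled dot product with the central frame (an assumption)
    scores = features @ center / np.sqrt(features.shape[1])
    scores = scores - scores.max()           # subtract the max for a numerically stable softmax
    weights = np.exp(scores) / np.exp(scores).sum()
    fused = weights @ features                # weighted sum over the time axis
    return fused, weights


rng = np.random.default_rng(0)
feats = rng.normal(size=(5, 16))              # 5 frames, 16-dimensional features
fused, w = adaptive_frame_weighting(feats, center_idx=2)
```

Frames whose features resemble the central frame receive larger weights; identical frames would receive uniform weights.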

This study presents an innovative method for Alzheimer's disease (AD) diagnosis using 3D MRI, designed to enhance the explainability of model decisions. Our approach adopts a soft attention mechanism that enables 2D CNNs to extract volumetric representations. At the same time, the importance of each slice in decision-making is learned, allowing the generation of a voxel-level attention map to produce an explainable MRI. To test our method and ensure the reproducibility of our results, we chose a standardized collection of MRI data from the Alzheimer's Disease Neuroimaging Initiative (ADNI). On this dataset, our method significantly outperforms state-of-the-art methods (i) in distinguishing AD from cognitively normal (CN) subjects, with an accuracy of 0.856 and a Matthews correlation coefficient (MCC) of 0.712, improvements of 2.4% and 5.3%, respectively, over the second-best method, and (ii) in the prognostic task of discerning stable from progressive mild cognitive impairment (MCI), with an accuracy of 0.725 and an MCC of 0.443, improvements of 10.2% and 20.5%, respectively, over the second-best method. We achieved this prognostic result by adopting a double transfer learning strategy, which enhanced sensitivity to morphological changes and facilitated early-stage AD detection. With voxel-level precision, our method identified the specific areas the model attends to, the predominant ones being the hippocampus, the amygdala, the parahippocampal gyrus, and the inferior lateral ventricles. All these areas are clinically associated with AD development. Furthermore, our approach consistently found the same AD-related areas across different cross-validation folds, demonstrating its robustness and its precision in highlighting areas that align closely with known pathological markers of the disease.
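The abstract reports both accuracy and the Matthews correlation coefficient. For a binary confusion matrix, MCC = (TP·TN − FP·FN) / √((TP+FP)(TP+FN)(TN+FP)(TN+FN)). The short sketch below computes both metrics; returning 0 when the denominator vanishes is a common convention, and the counts in the example are illustrative, not the ADNI results.

```python
import math


def mcc(tp, tn, fp, fn):
    """Matthews correlation coefficient for a binary confusion matrix."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0  # common convention when a row or column of the matrix is empty
    return (tp * tn - fp * fn) / denom


def accuracy(tp, tn, fp, fn):
    """Fraction of correctly classified samples."""
    return (tp + tn) / (tp + tn + fp + fn)


# Illustrative counts: 40 true positives, 45 true negatives, 5 false positives, 10 false negatives
print(accuracy(40, 45, 5, 10), mcc(40, 45, 5, 10))
```

With these counts the accuracy is 0.85 while the MCC is about 0.70, since the MCC penalizes errors in both classes through all four cells of the matrix.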

This work considers the problem of energy efficiency maximization in a RIS-based communication link, subject not only to the conventional maximum power constraints but also to additional constraints on the end-users' maximum exposure to electromagnetic radiation. The RIS phase shifts, the transmit beamforming, the linear receive filter, and the transmit power are jointly optimized, and two provably convergent, low-complexity algorithms are developed. The first algorithm applies to general system setups but does not guarantee global optimality; the second is provably optimal in a notable special case. Numerical results show that RIS-based communications can ensure high energy efficiency while fulfilling users' constraints on exposure to radio-frequency emissions.

This study addresses the challenge of access point (AP) and user equipment (UE) association in cell-free massive MIMO networks. It introduces a deep learning algorithm leveraging Bidirectional Long Short-Term Memory cells and a hybrid probabilistic methodology for weight updating. This approach enhances scalability by adapting to variations in the number of UEs without requiring retraining. Additionally, the study presents a training methodology that improves scalability not only with respect to the number of UEs but also to the number of APs. Furthermore, a variant of the proposed AP-UE algorithm ensures robustness against pilot contamination effects, a critical issue arising from pilot reuse in channel estimation. Extensive numerical results validate the effectiveness and adaptability of the proposed methods, demonstrating their superiority over widely used heuristic alternatives.
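The abstract does not name the heuristic baselines. As an assumed illustration of what such a baseline can look like, the sketch below lets each UE be served by the N APs with the largest large-scale fading coefficients. The function `top_n_association` and its inputs are hypothetical, and this is not the proposed BiLSTM method.

```python
import numpy as np


def top_n_association(beta, n_aps_per_ue):
    """Binary AP-UE association: each UE (column) picks its n strongest APs (rows).

    beta: (M, K) array of large-scale fading coefficients for M APs and K UEs.
    Returns an (M, K) 0/1 array with exactly n ones per column.
    """
    m, k = beta.shape
    assoc = np.zeros((m, k), dtype=int)
    # argsort on -beta ranks APs from strongest to weakest for every UE
    strongest = np.argsort(-beta, axis=0)[:n_aps_per_ue, :]
    assoc[strongest, np.arange(k)] = 1
    return assoc


beta = np.array([[0.9, 0.1],
                 [0.5, 0.8],
                 [0.2, 0.7]])  # 3 APs, 2 UEs (illustrative values)
print(top_n_association(beta, 2))
```

An exhaustive search would instead have to examine all 2^(MK) binary association matrices (already 2^6 = 64 for this 3 × 2 toy case), which is why learned or heuristic association rules are attractive at scale.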

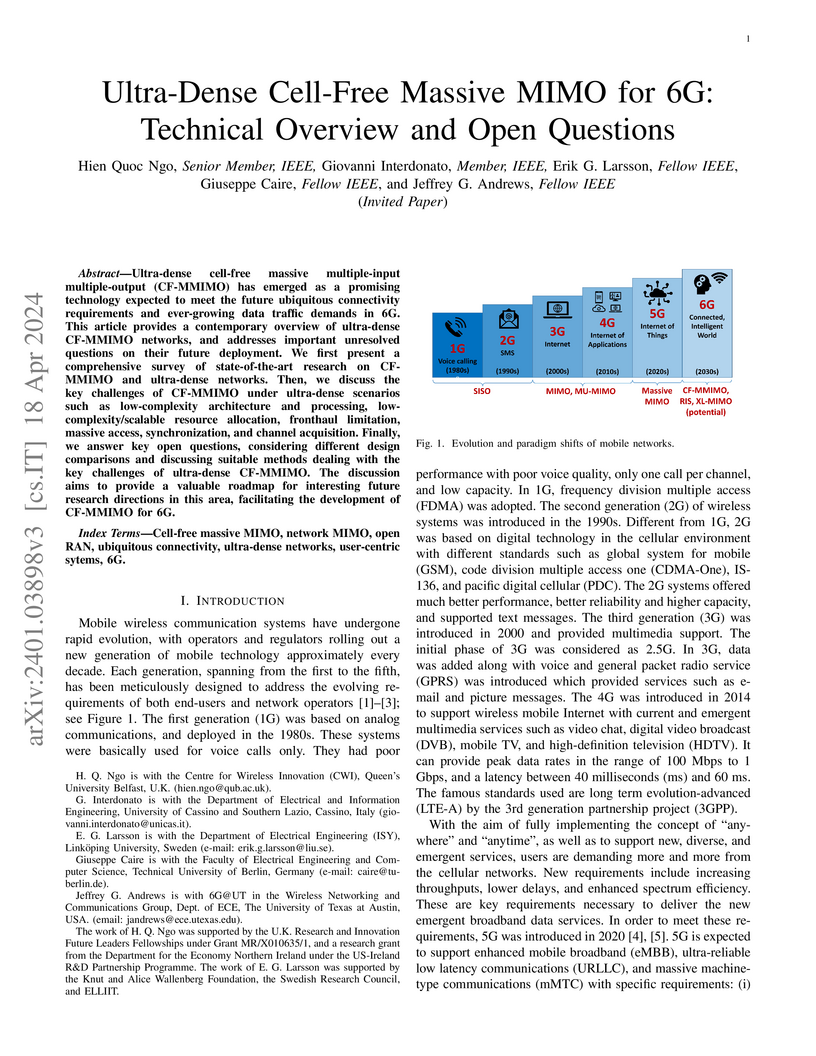

What will 5G be? What it will not be is an incremental advance on 4G. The previous four generations of cellular technology have each been a major paradigm shift that has broken backwards compatibility. And indeed, 5G will need to be a paradigm shift that includes very high carrier frequencies with massive bandwidths, extreme base station and device densities and unprecedented numbers of antennas. But unlike the previous four generations, it will also be highly integrative: tying any new 5G air interface and spectrum together with LTE and WiFi to provide universal high-rate coverage and a seamless user experience. To support this, the core network will also have to reach unprecedented levels of flexibility and intelligence, spectrum regulation will need to be rethought and improved, and energy and cost efficiencies will become even more critical considerations. This paper discusses all of these topics, identifying key challenges for future research and preliminary 5G standardization activities, while providing a comprehensive overview of the current literature, and in particular of the papers appearing in this special issue.

19 Mar 2020

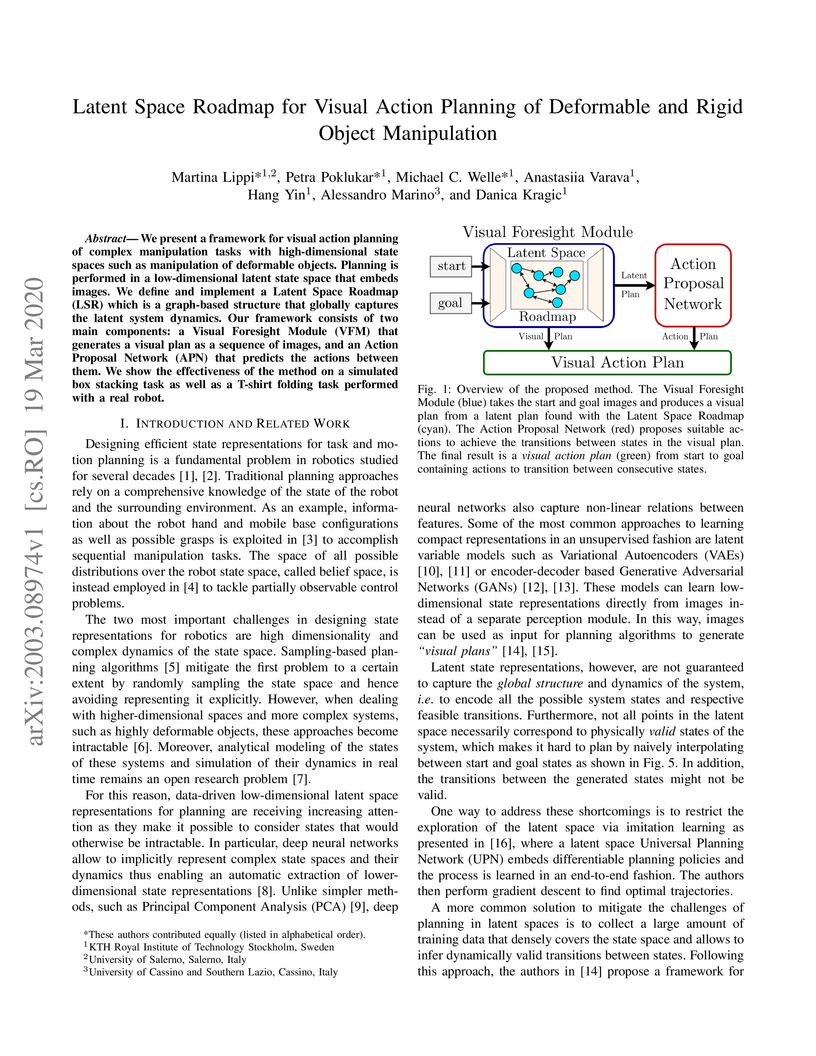

We present a framework for visual action planning of complex manipulation tasks with high-dimensional state spaces such as manipulation of deformable objects. Planning is performed in a low-dimensional latent state space that embeds images. We define and implement a Latent Space Roadmap (LSR) which is a graph-based structure that globally captures the latent system dynamics. Our framework consists of two main components: a Visual Foresight Module (VFM) that generates a visual plan as a sequence of images, and an Action Proposal Network (APN) that predicts the actions between them. We show the effectiveness of the method on a simulated box stacking task as well as a T-shirt folding task performed with a real robot.
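The roadmap idea can be sketched as a graph search. This minimal version assumes that latent states have already been clustered into integer node ids, that every observed training transition adds an undirected edge between two nodes, and that planning is a breadth-first shortest path. The helper names are hypothetical; the actual LSR uses its own clustering and edge construction.

```python
from collections import deque


def build_roadmap(transitions):
    """Build an undirected graph from (node_a, node_b) pairs observed in training data."""
    graph = {}
    for a, b in transitions:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def plan(graph, start, goal):
    """Breadth-first search for a shortest node sequence from start to goal (None if unreachable)."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:  # walk back through the parents to recover the path
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in sorted(graph.get(node, ())):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None


roadmap = build_roadmap([(0, 1), (1, 2), (2, 3), (0, 4), (4, 3)])
print(plan(roadmap, 0, 3))
```

In the framework described above, each node on the returned path would be decoded into an image by the VFM, and the APN would predict the action for each consecutive pair.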

16 Aug 2021

University College London; The Chinese University of Hong Kong, Shenzhen; Weizmann Institute of Science; Beijing University of Posts and Telecommunications; Southern University of Science and Technology; Consorzio Nazionale Interuniversitario per le Telecomunicazioni; Huawei Technologies Co Ltd; University of Cassino and Southern Lazio; GBB Wireless Research

As the standardization of 5G is being solidified, researchers are speculating what 6G will be. Integrating sensing functionality is emerging as a key feature of the 6G Radio Access Network (RAN), making it possible to exploit the dense cell infrastructure of 5G to construct a perceptive network. In this paper, we provide a comprehensive overview of the background, key applications, and state-of-the-art approaches of Integrated Sensing and Communications (ISAC). We begin by discussing the interplay between sensing and communications (S&C) from a historical point of view, and then consider multiple facets of ISAC and its performance gains. By introducing both ongoing and potential use cases, we shed light on industrial progress and standardization activities related to ISAC. We analyze a number of performance tradeoffs between S&C, ranging from information-theoretic limits and physical-layer performance tradeoffs to tradeoffs in cross-layer design. Next, we discuss signal processing aspects of ISAC, namely ISAC waveform design and receive signal processing. As a step further, we provide our vision of a deeper integration between S&C within the framework of perceptive networks, where the two functionalities are expected to mutually assist each other, i.e., communication-assisted sensing and sensing-assisted communications. Finally, we conclude the paper by identifying the potential integration between ISAC and other emerging communication technologies, and its positive impact on the future of wireless networks.

03 Jul 2025

This study investigates a communication-centric integrated sensing and communication (ISAC) system that utilizes orthogonal time frequency space (OTFS) modulated signals emitted by low Earth orbit (LEO) satellites to estimate the parameters of space targets experiencing range migration, henceforth referred to as high-speed targets. Leveraging the specific signal processing performed by OTFS transceivers, we derive a novel input-output model for the echo generated by a high-speed target in scenarios where ideal and rectangular shaping filters are employed. Our findings reveal that the target response exhibits a sparse structure in the delay-Doppler domain, dependent solely upon the initial range and range-rate; notably, range migration causes a spread in the target response, marking a significant departure from previous studies. Utilizing this signal structure, we propose an approximate implementation of the maximum likelihood estimator for the target's initial range, range-rate, and amplitude. The estimation process involves obtaining coarse information on the target response using a block orthogonal matching pursuit algorithm, followed by a refinement step using a bank of matched filters focused on a smaller range and range-rate region. Finally, numerical examples are provided to evaluate the estimation performance.
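The coarse estimation stage relies on block orthogonal matching pursuit. As a simpler stand-in, the sketch below shows plain (non-block) OMP on a generic noiseless sparse recovery problem y = A x with a random Gaussian dictionary and known sparsity. It does not build the OTFS delay-Doppler dictionary or the matched-filter refinement described in the abstract.

```python
import numpy as np


def omp(A, y, sparsity):
    """Orthogonal matching pursuit: greedily pick the column most correlated with the
    residual, then re-fit all selected columns by least squares."""
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(sparsity):
        correlations = np.abs(A.conj().T @ residual)
        correlations[support] = 0.0  # never re-select an already chosen column
        support.append(int(np.argmax(correlations)))
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x_hat = np.zeros(A.shape[1], dtype=np.result_type(A, y))
    x_hat[support] = coef
    return x_hat, sorted(support)


rng = np.random.default_rng(1)
A = rng.normal(size=(128, 256)) / np.sqrt(128)  # columns with roughly unit norm
x = np.zeros(256)
x[[10, 100, 200]] = [1.0, -2.0, 3.0]            # 3-sparse ground truth (illustrative)
x_hat, supp = omp(A, A @ x, sparsity=3)
print(supp)
```

The block variant used in the paper selects groups of columns at each step instead of single columns, which suits target responses that spread over neighboring delay-Doppler bins.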


01 Aug 2022

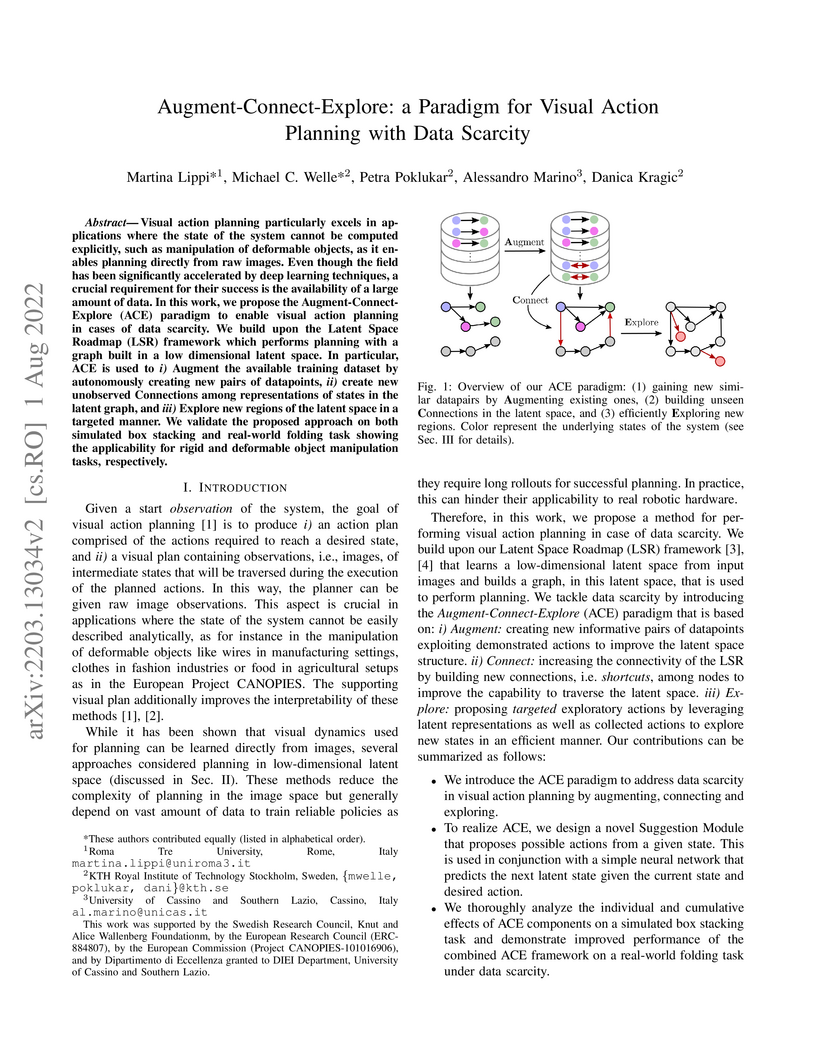

Visual action planning particularly excels in applications where the state of the system cannot be computed explicitly, such as manipulation of deformable objects, as it enables planning directly from raw images. Even though the field has been significantly accelerated by deep learning techniques, a crucial requirement for their success is the availability of a large amount of data. In this work, we propose the Augment-Connect-Explore (ACE) paradigm to enable visual action planning in cases of data scarcity.

We build upon the Latent Space Roadmap (LSR) framework, which performs planning with a graph built in a low-dimensional latent space. In particular, ACE is used to i) Augment the available training dataset by autonomously creating new pairs of datapoints, ii) create new unobserved Connections among representations of states in the latent graph, and iii) Explore new regions of the latent space in a targeted manner. We validate the proposed approach on both a simulated box stacking task and a real-world folding task, showing its applicability to rigid and deformable object manipulation tasks, respectively.

30 May 2019

In this paper we present an architecture for the operation of an assistive robot, ultimately aimed at allowing users with severe motion disabilities to perform manipulation tasks that may help in daily-life operations. The robotic system, based on a lightweight robot manipulator, receives high-level commands from the user through a Brain-Computer Interface (BCI) based on the P300 paradigm. The motion of the manipulator is controlled by a closed-loop inverse kinematics algorithm that simultaneously manages multiple set-based and equality-based tasks. The software architecture is built on widely used frameworks for operating BCIs and robots (namely, BCI2000 for the operation of the BCI and ROS for the control of the manipulator), integrating control, perception, and communication modules developed for the application at hand. Preliminary experiments have been conducted to demonstrate the potential of the developed architecture.
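In its basic form, closed-loop inverse kinematics (CLIK) drives the joints with q̇ = J†(q)(ẋ_d + K e), where e = x_d − x(q) is the task-space error. The sketch below applies it to a planar two-link arm with a fixed target (so ẋ_d = 0) and Euler integration. It handles a single equality task only; the multiple set-based and equality-based tasks managed in the paper are omitted, and the link lengths and gain are hypothetical values.

```python
import numpy as np

L1, L2 = 0.5, 0.4  # link lengths in metres (hypothetical)


def forward_kinematics(q):
    """End-effector (x, y) position of a planar two-link arm."""
    return np.array([
        L1 * np.cos(q[0]) + L2 * np.cos(q[0] + q[1]),
        L1 * np.sin(q[0]) + L2 * np.sin(q[0] + q[1]),
    ])


def jacobian(q):
    """Analytic 2x2 Jacobian of forward_kinematics with respect to the joint angles."""
    s1, c1 = np.sin(q[0]), np.cos(q[0])
    s12, c12 = np.sin(q[0] + q[1]), np.cos(q[0] + q[1])
    return np.array([
        [-L1 * s1 - L2 * s12, -L2 * s12],
        [L1 * c1 + L2 * c12, L2 * c12],
    ])


def clik(q0, x_des, gain=5.0, dt=0.01, steps=2000):
    """CLIK toward a fixed target: dq/dt = pinv(J(q)) @ (gain * (x_des - x(q)))."""
    q = np.array(q0, dtype=float)
    for _ in range(steps):
        error = x_des - forward_kinematics(q)
        q = q + dt * np.linalg.pinv(jacobian(q)) @ (gain * error)
    return q


q_final = clik([0.3, 0.8], np.array([0.6, 0.3]))
print(forward_kinematics(q_final))
```

The feedback term K e is what makes the scheme closed-loop: the task-space error obeys ė = −K e, so numerical drift from integration is corrected instead of accumulating.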

Unlike conventional massive MIMO cellular configurations, which are plagued by inter-cell interference, cell-free massive MIMO systems distribute network resources across the coverage area, enabling users to connect with multiple access points (APs) and boosting both system capacity and fairness across users. In such systems, one critical functionality is the association between APs and users: determining the optimal association is a combinatorial problem of prohibitive complexity. In this paper, a deep-learning-based solution is therefore proposed for the user clustering problem, aimed at maximizing the sum spectral efficiency while controlling the number of active connections. The proposed solution scales effectively with the number of users, leveraging long short-term memory cells to operate without the need for retraining. Numerical results show the effectiveness of the proposed solution, even in the presence of imperfect channel state information due to pilot contamination.

17 Jun 2024

In this study, we consider a pulse-Doppler radar relying on a simultaneously transmitting and reflecting reconfigurable intelligent surface (STAR-RIS) for scanning a given volume; the radar receiver is collocated with the STAR-RIS and aims to detect moving targets and estimate their radial velocity in the presence of clutter. To separate the echoes received from the transmissive and reflective half-spaces, the STAR-RIS superimposes a different slow-time modulation on the pulses redirected in each half-space, while the radar detector employs a decision rule based on a generalized information criterion (GIC). Two scanning policies are introduced, namely, simultaneous and sequential scanning, with different tradeoffs in terms of radial velocity estimation accuracy and complexity of the radar detector.
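A toy illustration of how distinct slow-time codes can separate the two half-spaces: assume, hypothetically, that pulses sent to the transmissive half-space are left unmodulated while those sent to the reflective half-space get an alternating ±1 code. The alternating code shifts the reflected echo's Doppler by half the pulse repetition frequency, so a slow-time DFT places the two echoes in different bins. The sketch ignores noise, clutter, and the GIC detector, and the codes are not necessarily those used in the paper.

```python
import numpy as np


def slow_time_spectrum(f_trans, f_refl, n_pulses=32, amp_refl=0.5):
    """Slow-time DFT magnitude of the superimposed echoes from the two half-spaces.

    f_trans, f_refl: normalized Doppler (cycles per pulse) of one target per half-space.
    """
    n = np.arange(n_pulses)
    code_trans = np.ones(n_pulses)  # assumed code: no modulation on the transmissive side
    code_refl = (-1.0) ** n         # assumed code: alternating sign on the reflective side
    echo = (code_trans * np.exp(2j * np.pi * f_trans * n)
            + amp_refl * code_refl * np.exp(2j * np.pi * f_refl * n))
    return np.abs(np.fft.fft(echo))


# Two targets with the same Doppler (bin 3 of 32), one in each half-space
spec = slow_time_spectrum(3 / 32, 3 / 32)
print(sorted(np.argsort(spec)[-2:].tolist()))
```

Although both targets share Doppler bin 3, the reflective echo appears in bin 3 + 32/2 = 19. With this particular code, targets whose Doppler frequencies differ by exactly half the PRF would land in the same bin, so the choice of codes matters.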

This paper proposes computationally efficient algorithms to maximize the energy efficiency in multi-carrier wireless interference networks through a suitable allocation of the system radio resources, namely the transmit powers and the subcarrier assignment. The problem is formulated as the maximization of the system global energy efficiency (GEE) subject to both maximum power and minimum rate constraints. This leads to a challenging non-convex fractional problem, which is tackled through an interplay of fractional programming, learning, and game theory. The proposed algorithmic framework is provably convergent and has a complexity linear in both the number of users and the number of subcarriers, whereas other available solutions can only guarantee a polynomial complexity in the number of users and subcarriers. Numerical results show that the proposed method performs similarly to other, more complex, algorithms.
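Dinkelbach's method is a standard fractional-programming tool for ratios such as energy efficiency: it replaces max N(p)/D(p) with a sequence of problems max N(p) − λD(p) and updates λ to the current ratio. The sketch applies it to a hypothetical single-link, single-carrier toy problem, EE(p) = log2(1 + g p)/(P_c + p) with 0 ≤ p ≤ P_max, without the minimum-rate constraint and without the learning and game-theoretic components; it is not the paper's algorithm. The parameters `g`, `pc`, and `pmax` are illustrative.

```python
import math


def energy_efficiency(p, g, pc):
    """Bits/s/Hz per watt: log2(1 + g p) / (pc + p)."""
    return math.log2(1.0 + g * p) / (pc + p)


def dinkelbach_ee(g, pc, pmax, tol=1e-10, max_iter=100):
    """Maximize log2(1 + g p) / (pc + p) over 0 <= p <= pmax with Dinkelbach's method."""
    lam = energy_efficiency(pmax, g, pc)  # initial ratio evaluated at full power
    p = pmax
    for _ in range(max_iter):
        # Inner problem max_p log2(1 + g p) - lam * (pc + p) is concave in p;
        # the stationary point is p = 1 / (lam ln 2) - 1 / g, clipped to [0, pmax].
        p = min(max(1.0 / (lam * math.log(2)) - 1.0 / g, 0.0), pmax)
        f = math.log2(1.0 + g * p) - lam * (pc + p)
        if f < tol:  # F(lam) reaches zero at the optimal ratio
            break
        lam = energy_efficiency(p, g, pc)
    return p, energy_efficiency(p, g, pc)


p_opt, ee_opt = dinkelbach_ee(g=10.0, pc=1.0, pmax=5.0)
print(p_opt, ee_opt)
```

Each inner problem has a closed-form solution here, which is what keeps Dinkelbach-type schemes cheap; in the multi-user, multi-carrier case the inner problem is itself non-convex and needs further machinery.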

This work studies the problem of secrecy energy efficiency maximization in multi-user wireless networks aided by reconfigurable intelligent surfaces, in which an eavesdropper overhears the uplink communication. A provably convergent optimization algorithm is proposed that optimizes the users' transmit powers, the metasurface reflection coefficients, and the base station receive filters. The complexity of the proposed method is analyzed, and numerical results are provided to show the performance of the proposed optimization method.
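For reference, one common way to write the secrecy energy efficiency of K uplink users is shown below. This is an assumed formulation for illustration; the paper's exact power-consumption model may differ. Here γ_{B,k} and γ_{E,k} denote the SINR of user k at the base station and at the eavesdropper, p_k its transmit power, μ ≥ 1 a hypothetical amplifier-inefficiency factor, P_c the static circuit power, and [x]^+ = max(x, 0).

```latex
\mathrm{SEE} = \frac{\sum_{k=1}^{K}\left[\log_2\left(1+\gamma_{B,k}\right)-\log_2\left(1+\gamma_{E,k}\right)\right]^{+}}{P_c+\mu\sum_{k=1}^{K} p_k}
```

The numerator counts only the rate advantage of the legitimate receiver over the eavesdropper, so increasing a user's power can raise the denominator without improving the numerator if it also boosts γ_{E,k}.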

Ultra-dense cell-free massive multiple-input multiple-output (CF-MMIMO) has emerged as a promising technology expected to meet the future ubiquitous connectivity requirements and ever-growing data traffic demands in 6G. This article provides a contemporary overview of ultra-dense CF-MMIMO networks and addresses important unresolved questions on their future deployment. We first present a comprehensive survey of state-of-the-art research on CF-MMIMO and ultra-dense networks. Then, we discuss the key challenges of CF-MMIMO in ultra-dense scenarios, such as low-complexity architecture and processing, low-complexity/scalable resource allocation, fronthaul limitation, massive access, synchronization, and channel acquisition. Finally, we answer key open questions, considering different design comparisons and discussing suitable methods for dealing with the key challenges of ultra-dense CF-MMIMO. The discussion aims to provide a valuable roadmap for future research directions in this area, facilitating the development of CF-MMIMO for 6G.

This work proposes a provably convergent and low-complexity optimization algorithm for the maximization of the secrecy energy efficiency in the uplink of a wireless network aided by a Reconfigurable Intelligent Surface (RIS), in the presence of an eavesdropper. The mobile users' transmit powers and the RIS reflection coefficients are optimized. Numerical results show the performance of the proposed methods and compare the use of active and nearly-passive RISs from an energy-efficiency perspective.
