CNIT

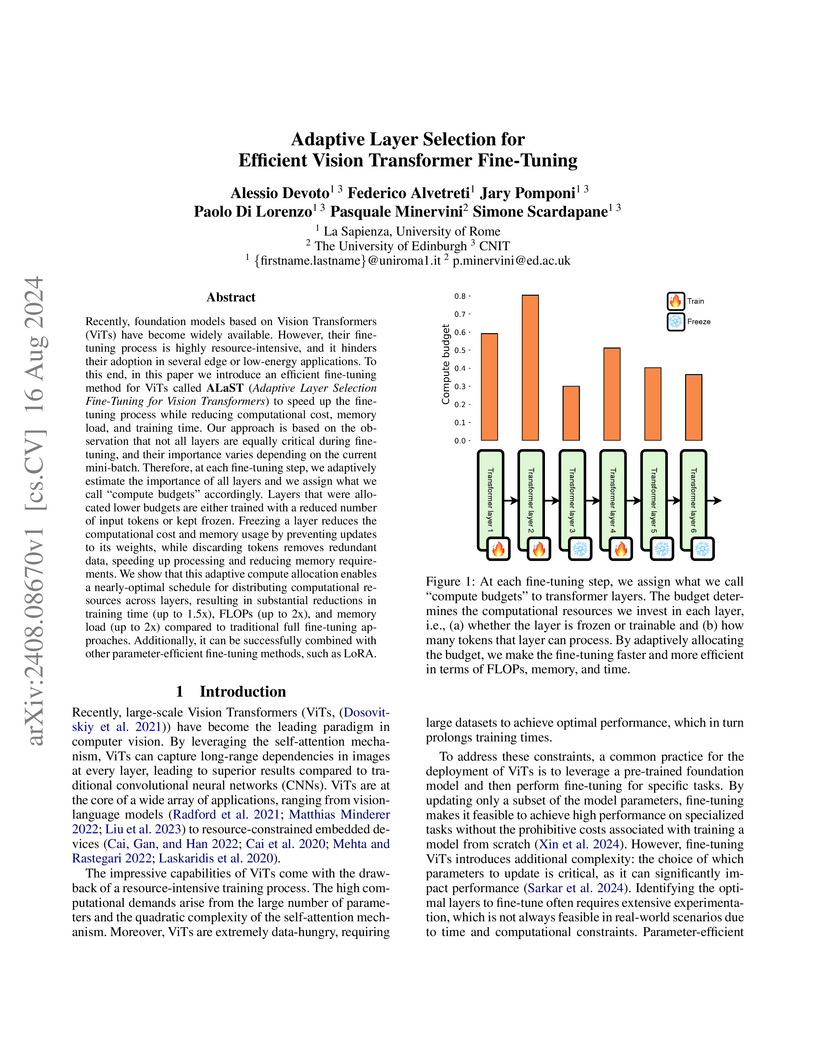

ALaST, an adaptive layer selection fine-tuning framework for Vision Transformers, dynamically learns layer and token importance to optimize resource allocation during training. The approach reduces computational operations by 40%, memory usage by 50%, and training time by 20% compared to full fine-tuning, all while preserving competitive classification accuracy.

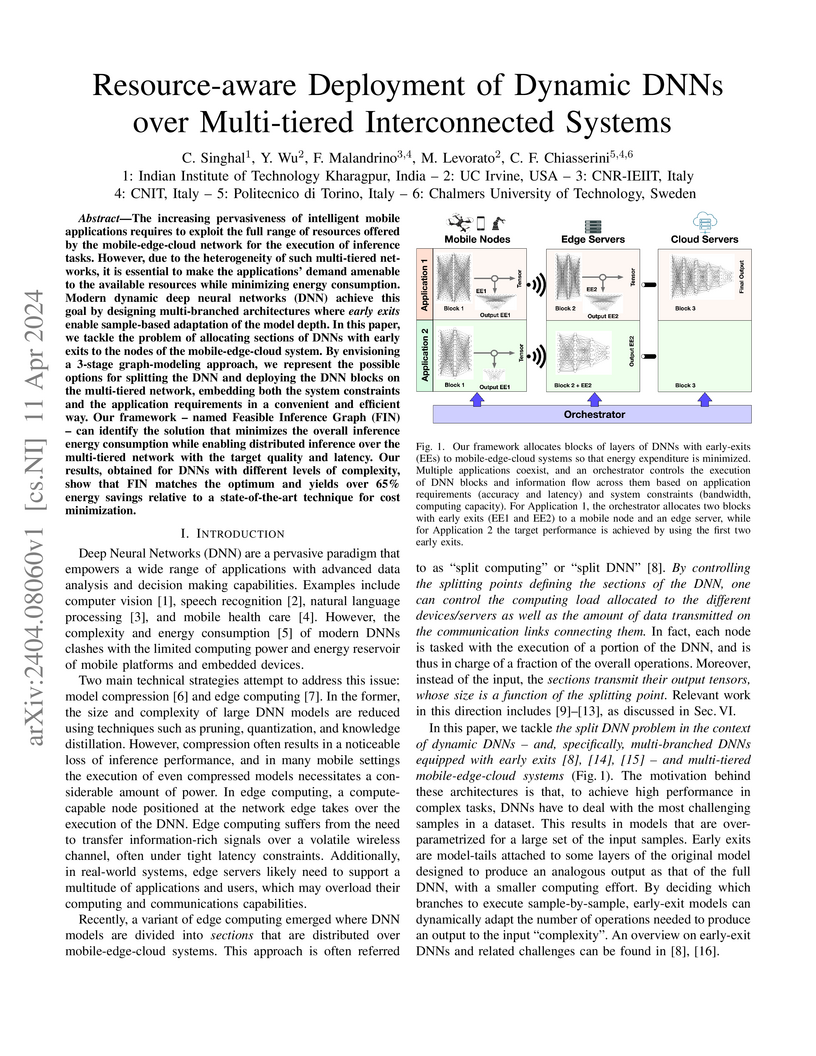

The increasing pervasiveness of intelligent mobile applications requires exploiting the full range of resources offered by the mobile-edge-cloud network for the execution of inference tasks. However, due to the heterogeneity of such multi-tiered networks, it is essential to make the applications' demand amenable to the available resources while minimizing energy consumption. Modern dynamic deep neural networks (DNNs) achieve this goal through multi-branched architectures where early exits enable sample-based adaptation of the model depth. In this paper, we tackle the problem of allocating sections of DNNs with early exits to the nodes of the mobile-edge-cloud system. By envisioning a 3-stage graph-modeling approach, we represent the possible options for splitting the DNN and deploying the DNN blocks on the multi-tiered network, embedding both the system constraints and the application requirements in a convenient and efficient way. Our framework, named Feasible Inference Graph (FIN), can identify the solution that minimizes the overall inference energy consumption while enabling distributed inference over the multi-tiered network with the target quality and latency. Our results, obtained for DNNs with different levels of complexity, show that FIN matches the optimum and yields over 65% energy savings relative to a state-of-the-art technique for cost minimization.

12 Feb 2024

The 6G-GOALS project proposes a paradigm shift for 6G networks from traditional bit-level communication to semantic and goal-oriented communication, aiming for efficient and sustainable AI-Native interactions. It outlines a novel O-RAN-aligned architecture with a 'semantic plane' and details foundational research pillars, alongside two planned proof-of-concepts for real-time semantic communication and cooperative robotics.

This paper proposes a Multi-Agent Deep Reinforcement Learning (MADRL) framework integrated with Graph Neural Networks (GNNs) for distributed power control in wireless networks. It demonstrates that learning-based graph construction, which optimizes communication links among base stations, outperforms fixed graph structures, leading to improved network throughput and enhanced generalization to varied network sizes and user distributions.

Holographic communication is intended as a holistic way to manipulate, with unprecedented flexibility, the electromagnetic field generated or sensed by an antenna. This is of particular interest when using large antennas at high frequency (e.g., millimeter wave or terahertz), whose operating condition may easily fall in the Fresnel propagation region (radiating near-field), where the classical plane wave propagation assumption is no longer valid. This paper analyzes the optimal communication involving large intelligent surfaces, realized for example with metamaterials as a possible enabling technology for holographic communication. It is shown that traditional propagation models must be revised and that, when exploiting spherical wave propagation in the Fresnel region with large surfaces, new opportunities are opened, for example, in terms of the number of orthogonal communication channels.
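To give a feel for when a large antenna operates in the Fresnel region, the sketch below evaluates the textbook boundaries of the radiating near-field, 0.62·sqrt(D³/λ) and 2D²/λ, for an aperture of largest dimension D. The function name and the example values (a 1 m surface at 28 GHz) are illustrative assumptions, not figures from the paper.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def fresnel_region_bounds(aperture_m, frequency_hz):
    """Return (inner, outer) distances in meters of the radiating near-field.

    inner = 0.62 * sqrt(D^3 / lambda)  (end of the reactive near-field)
    outer = 2 * D^2 / lambda           (Fraunhofer distance, start of far-field)
    """
    wavelength = SPEED_OF_LIGHT / frequency_hz
    inner = 0.62 * math.sqrt(aperture_m ** 3 / wavelength)
    outer = 2.0 * aperture_m ** 2 / wavelength
    return inner, outer

# Illustrative example: a 1 m surface at 28 GHz (assumed values).
inner, outer = fresnel_region_bounds(1.0, 28e9)
print(f"Fresnel region spans about {inner:.1f} m to {outer:.1f} m")
```

With these assumed values the far-field only starts beyond roughly 187 m, so users at typical cell distances would sit in the Fresnel region, where the plane-wave model no longer applies.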

In recent years, several machine learning-based techniques have been proposed to monitor human movements from Wi-Fi channel readings. However, the development of domain-adaptive algorithms that work robustly across different environments is still an open problem, whose solution requires large datasets characterized by strong domain diversity in terms of environments, persons, and Wi-Fi hardware. To date, the few public datasets available are mostly obsolete, having been obtained via Wi-Fi devices operating on 20 or 40 MHz bands, and contain little or no domain diversity, thus dramatically limiting advances in the design of sensing algorithms. The present contribution aims to fill this gap by providing a dataset of IEEE 802.11ac channel measurements over an 80 MHz bandwidth channel featuring notable domain diversity, through measurement campaigns that involved thirteen subjects across different environments, days, and hardware. Novel experimental data is provided by blocking the direct path between the transmitter and the monitor, and by collecting measurements in a semi-anechoic chamber (no multi-path fading). Overall, the dataset, available on IEEE DataPort [1], contains more than thirteen hours of channel state information readings (23.6 GB), allowing researchers to test activity/identity recognition and people counting algorithms.

This work addresses the comparison between active and passive RISs in wireless networks, with reference to the system energy efficiency (EE). Two provably convergent and computationally friendly EE maximization algorithms are developed, which optimize the reflection coefficients of the RIS, the transmit powers, and the linear receive filters. Numerical results show the performance of the proposed methods and discuss the operating points in which active or passive RISs should be preferred from an energy-efficiency perspective.

AI-generated synthetic media, also called Deepfakes, have significantly influenced many domains, from entertainment to cybersecurity. Generative Adversarial Networks (GANs) and Diffusion Models (DMs) are the main frameworks used to create Deepfakes, producing highly realistic yet fabricated content. While these technologies open up new creative possibilities, they also bring substantial ethical and security risks due to their potential misuse. The rise of such advanced media has led to the development of a cognitive bias known as Impostor Bias, where individuals doubt the authenticity of multimedia due to their awareness of AI's capabilities. As a result, Deepfake detection has become a vital area of research, focusing on identifying subtle inconsistencies and artifacts with machine learning techniques, especially Convolutional Neural Networks (CNNs). Research in forensic Deepfake technology encompasses five main areas: detection, attribution and recognition, passive authentication, detection in realistic scenarios, and active authentication. This paper reviews the primary algorithms that address these challenges, examining their advantages, limitations, and future prospects.

19 Aug 2022

We propose a technique to authenticate received packets in underwater acoustic networks based on the physical layer features of the underwater acoustic channel (UWAC). Several sensors a) locally estimate features (e.g., the number of taps or the delay spread) of the UWAC over which the packet is received, b) obtain a compressed feature representation through a neural network (NN), and c) transmit their representations to a central sink node that, using an NN, decides whether the packet has been transmitted by the legitimate node or by an impersonating attacker. Although the purpose of the system is to make a binary decision as to whether a packet is authentic or not, we show the importance of having a rich set of compressed features, while still taking into account transmission rate limits among the nodes. We consider both global training, where all NNs are trained together, and local training, where each NN is trained individually. For the latter scenario, several alternatives for the NN structure and loss function were used for training.

Over the years, significant contributions have been made by the research and industrial sectors to improve wearable devices towards the Internet of Wearable Things (IoWT) paradigm. However, wearables are still facing several challenges. Many stem from the limited battery power and insufficient computation resources available on wearable devices. On the other hand, with the popularity of smart wearables, there is a consistent increase in the development of new computationally intensive and latency-critical applications. In such a context, task offloading allows wearables to leverage the resources available on nearby edge devices to enhance the overall user experience. This paper proposes a framework for Reinforcement Learning (RL)-based task offloading in the IoWT. We formulate the task offloading process considering the tradeoff between energy consumption and task accomplishment time. Moreover, we model the task offloading problem as a Markov Decision Process (MDP) and utilize the Q-learning technique to enable the wearable device to make optimal task offloading decisions without prior knowledge. We evaluate the performance of the proposed framework through extensive simulations for various applications and system configurations conducted in the ns-3 network simulator. We also show how varying the main system parameters of the Q-learning algorithm affects the overall performance in terms of average task accomplishment time, average energy consumption, and percentage of tasks offloaded.
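The MDP-plus-Q-learning formulation described above can be illustrated with a small tabular sketch. Everything in it is a hypothetical toy model rather than the paper's setup: the two-level channel and task states, the energy and time figures in `cost`, the weighting between energy and time, and the assumption that the next state is drawn uniformly at random regardless of the action.

```python
import random

# Hypothetical toy model: state = (channel, task); action 0 = run locally, 1 = offload.
CHANNELS = (0, 1)  # 0 = bad link to the edge device, 1 = good link
TASKS = (0, 1)     # 0 = small task, 1 = large task
ACTIONS = (0, 1)

def cost(state, action, weight=0.5):
    """Weighted sum of energy and completion time (made-up numbers)."""
    channel, task = state
    size = task + 1
    if action == 0:        # local execution: costly in both energy and time
        energy, time = 3.0 * size, 2.0 * size
    elif channel == 1:     # offloading over a good link: cheap and fast
        energy, time = 0.5 * size, 1.0 * size
    else:                  # offloading over a bad link: retransmissions, long delay
        energy, time = 4.0 * size, 6.0 * size
    return weight * energy + (1.0 - weight) * time

def train_offloading_policy(steps=20000, alpha=0.05, gamma=0.8, epsilon=0.1, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration; returns the greedy policy."""
    rng = random.Random(seed)
    states = [(c, t) for c in CHANNELS for t in TASKS]
    q = {(s, a): 0.0 for s in states for a in ACTIONS}
    state = rng.choice(states)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])
        reward = -cost(state, action)       # minimizing cost = maximizing reward
        next_state = rng.choice(states)     # assumed action-independent dynamics
        best_next = max(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
        state = next_state
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in states}

policy = train_offloading_policy()
print(policy)
```

In this toy model the learned policy offloads whenever the link is good and computes locally otherwise, without the agent ever being told the cost function. Changing `weight` shifts the balance between energy and time in the same spirit as the tradeoff the abstract describes.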

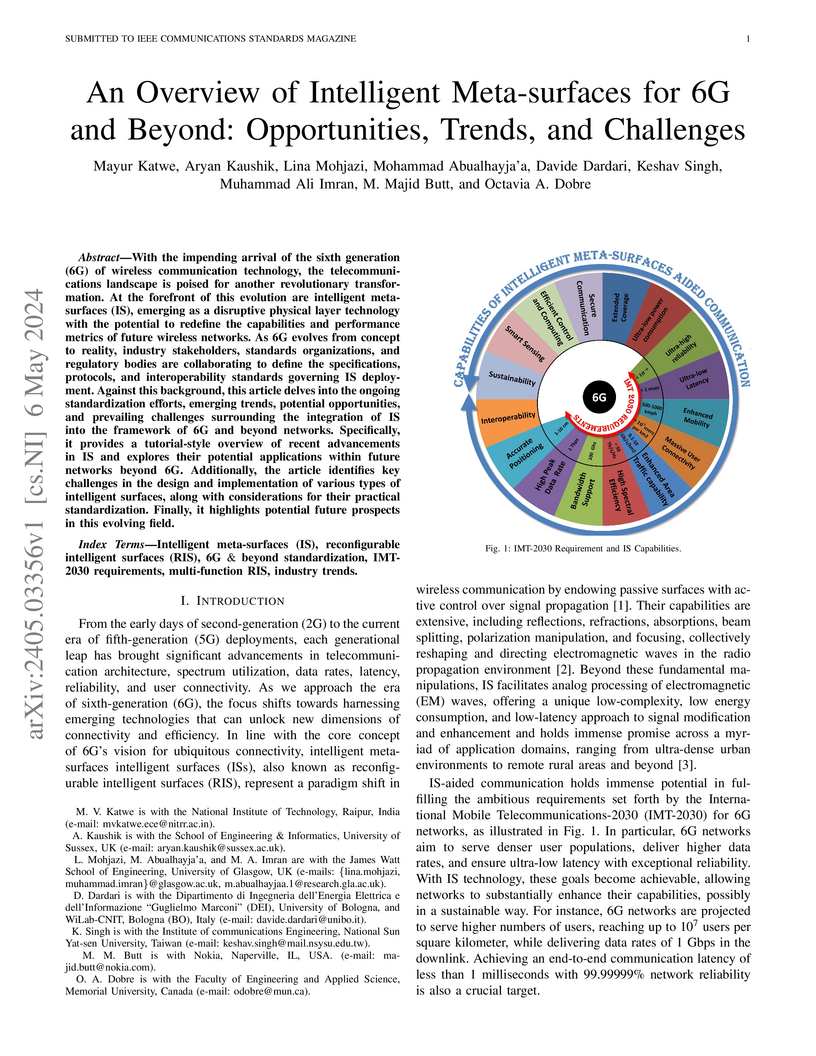

With the impending arrival of the sixth generation (6G) of wireless communication technology, the telecommunications landscape is poised for another revolutionary transformation. At the forefront of this evolution are intelligent meta-surfaces (IS), emerging as a disruptive physical layer technology with the potential to redefine the capabilities and performance metrics of future wireless networks. As 6G evolves from concept to reality, industry stakeholders, standards organizations, and regulatory bodies are collaborating to define the specifications, protocols, and interoperability standards governing IS deployment. Against this background, this article delves into the ongoing standardization efforts, emerging trends, potential opportunities, and prevailing challenges surrounding the integration of IS into the framework of 6G and beyond networks. Specifically, it provides a tutorial-style overview of recent advancements in IS and explores their potential applications within future networks beyond 6G. Additionally, the article identifies key challenges in the design and implementation of various types of intelligent surfaces, along with considerations for their practical standardization. Finally, it highlights potential future prospects in this evolving field.

What will 5G be? What it will not be is an incremental advance on 4G. The previous four generations of cellular technology have each been a major paradigm shift that has broken backwards compatibility. And indeed, 5G will need to be a paradigm shift that includes very high carrier frequencies with massive bandwidths, extreme base station and device densities and unprecedented numbers of antennas. But unlike the previous four generations, it will also be highly integrative: tying any new 5G air interface and spectrum together with LTE and WiFi to provide universal high-rate coverage and a seamless user experience. To support this, the core network will also have to reach unprecedented levels of flexibility and intelligence, spectrum regulation will need to be rethought and improved, and energy and cost efficiencies will become even more critical considerations. This paper discusses all of these topics, identifying key challenges for future research and preliminary 5G standardization activities, while providing a comprehensive overview of the current literature, and in particular of the papers appearing in this special issue.

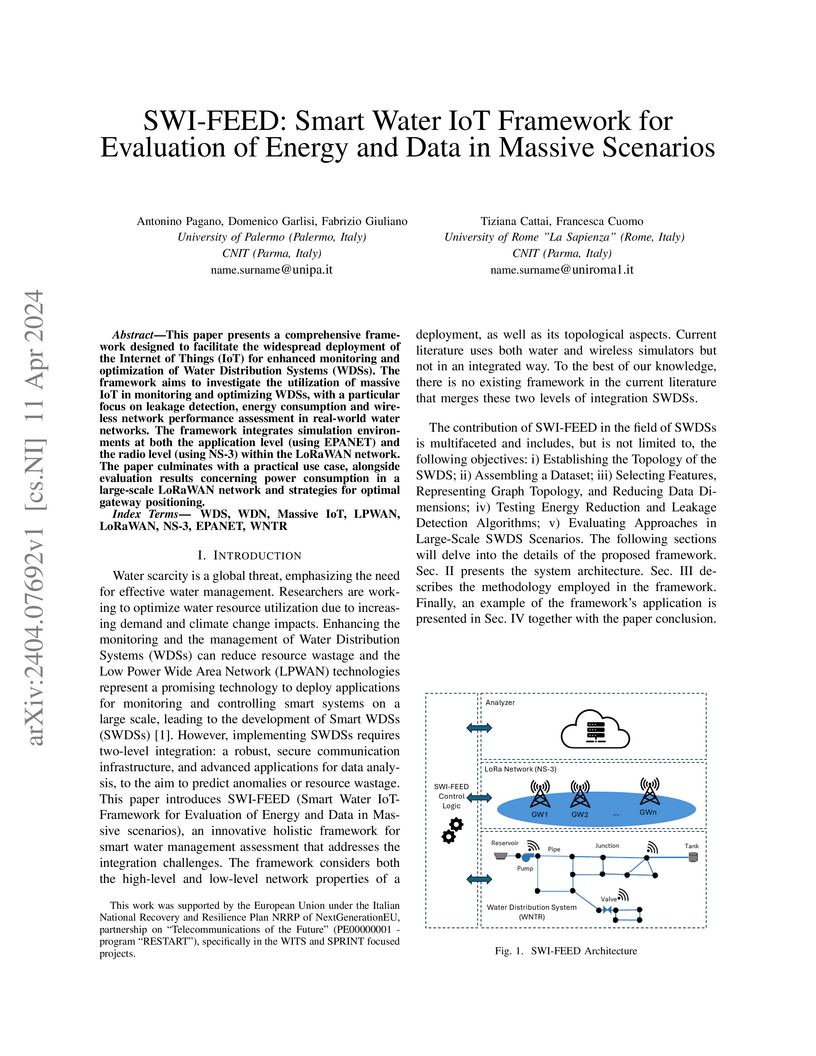

This paper presents a comprehensive framework designed to facilitate the widespread deployment of the Internet of Things (IoT) for enhanced monitoring and optimization of Water Distribution Systems (WDSs). The framework aims to investigate the utilization of massive IoT in monitoring and optimizing WDSs, with a particular focus on leakage detection, energy consumption and wireless network performance assessment in real-world water networks. The framework integrates simulation environments at both the application level (using EPANET) and the radio level (using NS-3) within the LoRaWAN network. The paper culminates with a practical use case, alongside evaluation results concerning power consumption in a large-scale LoRaWAN network and strategies for optimal gateway positioning.

30 Jun 2023

Effective integration of terrestrial and non-terrestrial segments is one of the key research avenues in the design of current and future wireless communication networks. To this aim, modern communication-satellite constellations intend to attain sufficiently high throughput, in terms of bit rate per unit area on the ground, through rather aggressive patterns of spatial frequency reuse. This goal calls for on-board narrow-beam antennas, whose size turns out in many cases to be incompatible with the size/mass and accommodation constraints of the hosting satellite. This paper investigates the attainable performance of large distributed arrays of antennas implemented as the ensemble of a few to many simpler sub-antennas of smaller sizes, carried by one (small) satellite each. The sub-antennas can in turn be implemented as (regular) 2D arrays of simple radiating elements, realizing an overall (distributed) antenna architecture that we call "Formation of Arrays" (FoA). The satellites that implement this radiating architecture need to be relatively close to each other and constitute a formation of flying objects, to be coordinated and controlled as a whole. In this paper, we develop a theoretical analysis of an FoA antenna, and we show how to take advantage of this new technology to improve network throughput in a multi-beam S-band mobile communication network with low-earth or geostationary orbiting satellites directly providing 5G-like communication services to hand-held user terminals.

The Industrial Internet of Things (IIoT) paradigm has emerged as a transformative force, revolutionizing industrial processes by integrating advanced wireless technologies into traditional procedures to enhance their efficiency. The importance of this paradigm shift has produced a massive, yet heterogeneous, proliferation of scientific contributions. However, these works lack a standardized and cohesive characterization of the IIoT framework coming from different entities, like the 3rd Generation Partnership Project (3GPP) or the 5G Alliance for Connected Industries and Automation (5G-ACIA), resulting in divergent perspectives and potentially hindering interoperability. To bridge this gap, this article offers a unified characterization of (i) the main IIoT application domains, (ii) their respective requirements, (iii) the principal technological gaps existing in the current literature, and, most importantly, (iv) a systematic approach for assessing and addressing the identified research challenges. Therefore, this article serves as a roadmap for future research endeavors, promoting a unified vision of the IIoT paradigm and fostering collaborative efforts to advance the field.

The Internet of Things (IoT) revolution demands scalable, energy-efficient communication protocols supporting widespread device deployments. The LoRa technology, coupled with the LoRaWAN protocol, has emerged as a leading Low Power Wide Area Network (LPWAN) solution, traditionally leveraging sub-GHz frequency bands for reliable long-range communication. However, these bands face constraints such as limited data rates and strict duty cycle regulations. Recent advancements have introduced the 2.4 GHz spectrum, offering superior data rates and unrestricted transmission opportunities at the cost of reduced coverage and severe interference. To resolve this trade-off, this paper proposes a novel hybrid approach integrating multi-band (i.e., sub-GHz and 2.4 GHz) and multi-hop communication into LoRaWAN, while preserving compatibility with the existing standard. The proposed network architecture retains Gateways (GWs) and End Devices (EDs) operating within the sub-GHz frequency while introducing multi-band Relays (RLs) that act as forwarding nodes for 2.4 GHz EDs. Utilizing our previously developed open-source and standards-compliant simulation framework, we evaluate the network performance of our solution under realistic deployment scenarios. The results demonstrate substantial improvements compared to standard single-band and single-hop LoRaWAN networks, highlighting the potential of this approach to redefine LPWAN capabilities and bridge the gap between current solutions and next-generation IoT applications.

In future 6G wireless networks, semantic and effectiveness aspects of communications will play a fundamental role, incorporating meaning and relevance into transmissions. However, obstacles arise when devices employ diverse languages, logic, or internal representations, leading to semantic mismatches that might jeopardize understanding. In latent space communication, this challenge manifests as misalignment within high-dimensional representations where deep neural networks encode data. This paper presents a novel framework for goal-oriented semantic communication, leveraging relative representations to mitigate semantic mismatches via latent space alignment. We propose a dynamic optimization strategy that adapts relative representations, communication parameters, and computation resources for energy-efficient, low-latency, goal-oriented semantic communications. Numerical results demonstrate our methodology's effectiveness in mitigating mismatches among devices, while optimizing energy consumption, delay, and effectiveness.
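As a rough illustration of why relative representations help with latent space misalignment, the sketch below encodes a vector by its cosine similarity to a set of shared anchor points, which is one common way the idea is formulated; the abstract does not state the exact formulation used in the paper. The helper names, the anchors, and the rotate-and-scale mismatch between transmitter and receiver are all assumptions made for the example.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)

def relative_representation(embedding, anchors):
    """Describe an embedding by its similarity to each anchor."""
    return [cosine(embedding, a) for a in anchors]

def rotate_and_scale(vec, theta, scale):
    """Assumed mismatch: rotate the first two coordinates by theta, then rescale."""
    x, y, *rest = vec
    c, s = math.cos(theta), math.sin(theta)
    return [scale * (c * x - s * y), scale * (s * x + c * y)] + [scale * r for r in rest]

# Transmitter-side latent vector and anchors (made-up numbers).
latent_tx = [0.3, -1.2, 0.8]
anchors_tx = [[1.0, 0.0, 0.5], [-0.4, 0.9, 0.1], [0.2, 0.2, -1.0]]

# Receiver-side latent space: same geometry, but rotated and rescaled.
latent_rx = rotate_and_scale(latent_tx, theta=0.7, scale=2.5)
anchors_rx = [rotate_and_scale(a, theta=0.7, scale=2.5) for a in anchors_tx]

rel_tx = relative_representation(latent_tx, anchors_tx)
rel_rx = relative_representation(latent_rx, anchors_rx)
print(rel_tx)
print(rel_rx)
```

The absolute coordinates on the two sides differ, yet the two relative representations coincide up to floating-point error, because cosine similarity is unchanged by rotations and uniform scaling applied to both the vector and the anchors.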

Deepfake technology is rapidly advancing, posing significant challenges to the detection of manipulated media content. In parallel, adversarial attack techniques have been developed to fool deepfake detectors and make deepfakes even more difficult to detect. This paper explores the application of super resolution techniques as a possible adversarial attack in deepfake detection. Through our experiments, we demonstrate that minimal changes made by these methods in the visual appearance of images can have a profound impact on the performance of deepfake detection systems. We propose a novel attack using super resolution as a quick, black-box and effective method to camouflage fake images and/or generate false alarms on pristine images. Our results indicate that the usage of super resolution can significantly impair the accuracy of deepfake detectors, thereby highlighting the vulnerability of such systems to adversarial attacks. The code to reproduce our experiments is available at: this https URL

Quantum Teleportation is the key communication functionality of the Quantum Internet, allowing the "transmission" of qubits without either the physical transfer of the particle storing the qubit or the violation of the quantum mechanical principles. Quantum teleportation is facilitated by the action of quantum entanglement, a somewhat counter-intuitive physical phenomenon with no direct counterpart in the classical world. As a consequence, the very concept of the classical communication system model has to be redesigned to account for the peculiarities of quantum teleportation. This re-design is a crucial prerequisite for constructing any effective quantum communication protocol. The aim of this manuscript is to shed light on this key concept, with the objective of allowing the reader: i) to appreciate the fundamental differences between the transmission of classical information versus the teleportation of quantum information; ii) to understand the communications functionalities underlying quantum teleportation, and to grasp the challenges in the design and practical employment of these functionalities; iii) to acknowledge that quantum information is subject to the deleterious effects of a noise process termed quantum decoherence, an impairment that has no direct counterpart in the classical world; iv) to recognize how to contribute to the design and employment of the Quantum Internet.

Motivated by the proliferation of Internet-of-Things (IoT) devices and the rapid advances in the field of deep learning, there is a growing interest in pushing deep learning computations, conventionally handled by the cloud, to the edge of the network to deliver faster responses to end users, reduce bandwidth consumption to the cloud, and address privacy concerns. However, to fully realize deep learning at the edge, two main challenges still need to be addressed: (i) how to meet the high resource requirements of deep learning on resource-constrained devices, and (ii) how to leverage the availability of multiple streams of spatially correlated data, to increase the effectiveness of deep learning and improve application-level performance. To address the above challenges, we explore collaborative inference at the edge, in which edge nodes and end devices share correlated data and the inference computational burden by leveraging different ways to split computation and fuse data. Besides traditional centralized and distributed schemes for edge-end device collaborative inference, we introduce selective schemes that decrease bandwidth resource consumption by effectively reducing data redundancy. As a reference scenario, we focus on multi-view classification in a networked system in which sensing nodes can capture overlapping fields of view. The proposed schemes are compared in terms of accuracy, computational expenditure at the nodes, communication overhead, inference latency, robustness, and noise sensitivity. Experimental results highlight that selective collaborative schemes can achieve different trade-offs between the above performance metrics, with some of them bringing substantial communication savings (from 18% to 74% of the transmitted data with respect to centralized inference) while still keeping the inference accuracy well above 90%.
